General-Purpose Logging Class

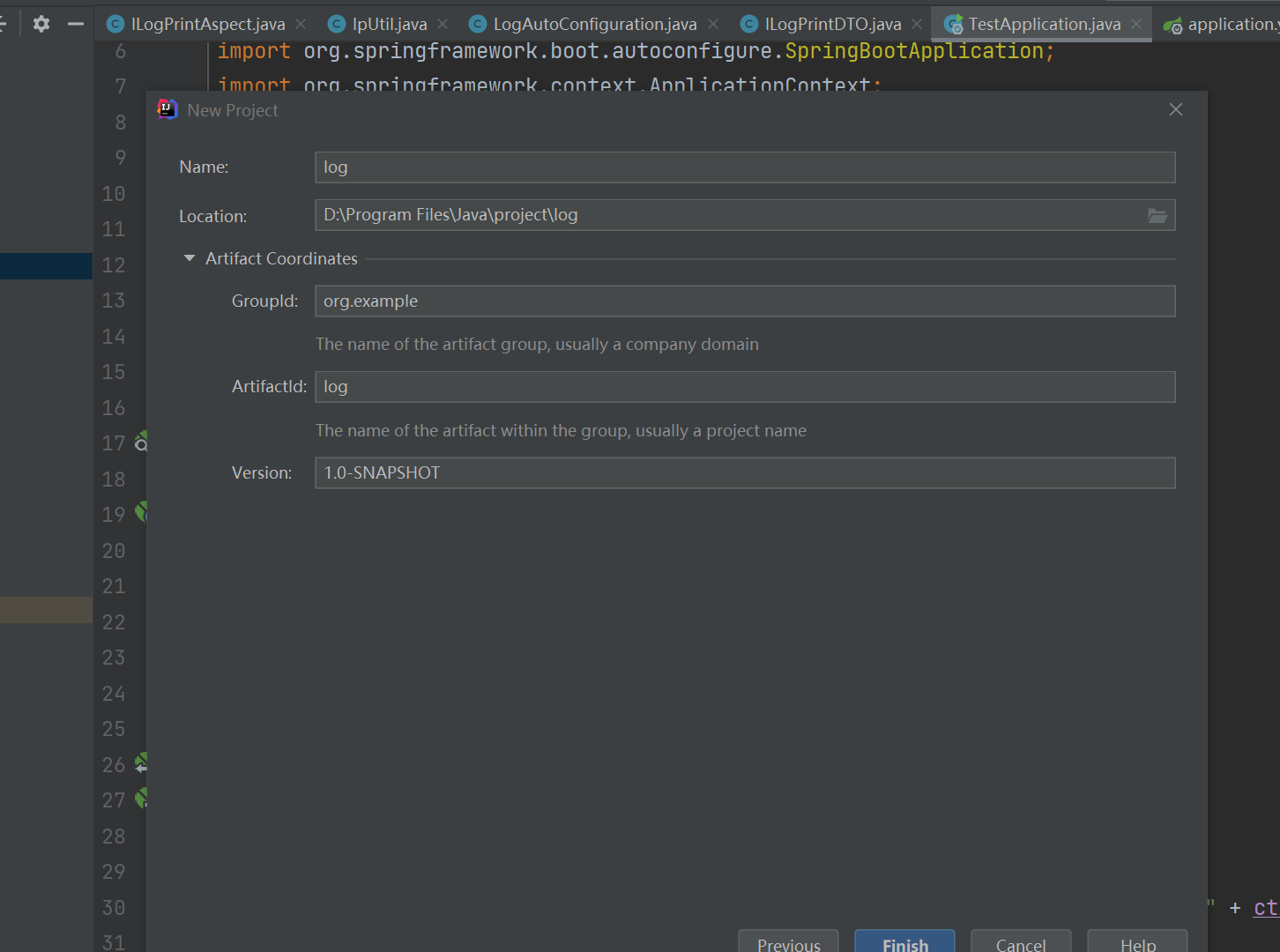

Creating the Logging Module

1. Import the required dependencies

<dependencies>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter</artifactId>

</dependency>

<dependency>

<groupId>com.alibaba.fastjson2</groupId>

<artifactId>fastjson2</artifactId>

</dependency>

<dependency>

<groupId>org.aspectj</groupId>

<artifactId>aspectjweaver</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

<optional>true</optional>

</dependency>

<dependency>

<groupId>cn.hutool</groupId>

<artifactId>hutool-all</artifactId>

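</dependency>

<!-- Required by the @Data annotation on ILogPrintDTO -->

<dependency>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

<optional>true</optional>

</dependency>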

</dependencies>

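2. Information recorded by the logging class

1. Request method: GET, POST, DELETE, PUT

2. Request path (URL)

3. Request input parameters

4. Return value

5. IP address

6. Elapsed time

Writing the Code

1. Logging annotation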

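package com.ayi.annotation;

import java.lang.annotation.ElementType;

import java.lang.annotation.Retention;

import java.lang.annotation.RetentionPolicy;

import java.lang.annotation.Target;

@Target({ElementType.TYPE, ElementType.METHOD})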

@Retention(RetentionPolicy.RUNTIME)

public @interface ILog {

/**

* Input parameter printing

*

* @return whether the printed log includes the input parameters: {@link Boolean#TRUE} to print, {@link Boolean#FALSE} to skip

*/

boolean input() default true;

/**

* Output parameter printing

*

* @return whether the printed log includes the return value: {@link Boolean#TRUE} to print, {@link Boolean#FALSE} to skip

*/

boolean output() default true;

}
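Because the annotation can sit on a class or on a single method, whoever reads it has to decide which one wins. The following is a minimal, plain-Java sketch of that lookup (method-level first, class-level as fallback). `ILogLookupDemo`, `OrderService`, and `resolve` are hypothetical names introduced only for illustration, and the annotation is redeclared locally so the sketch compiles on its own.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Method;
import java.util.Optional;

public class ILogLookupDemo {

    // Local copy of the annotation so the sketch is self-contained
    @Target({ElementType.TYPE, ElementType.METHOD})
    @Retention(RetentionPolicy.RUNTIME)
    @interface ILog {
        boolean input() default true;
        boolean output() default true;
    }

    // Hypothetical service: a class-level default plus one method-level override
    @ILog
    static class OrderService {
        public String query(String id) {
            return "order-" + id;
        }

        @ILog(input = false)
        public String pay(String cardNumber) {
            return "paid";
        }
    }

    // The method-level annotation wins; otherwise fall back to the class-level one
    static ILog resolve(Class<?> type, String methodName, Class<?>... paramTypes) {
        try {
            Method method = type.getDeclaredMethod(methodName, paramTypes);
            return Optional.ofNullable(method.getAnnotation(ILog.class))
                    .orElse(type.getAnnotation(ILog.class));
        } catch (NoSuchMethodException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        // query() inherits the class-level settings, pay() hides its input
        System.out.println(resolve(OrderService.class, "query", String.class).input());
        System.out.println(resolve(OrderService.class, "pay", String.class).input());
    }
}
```

Setting `input = false` on a method that receives sensitive data (such as the hypothetical `pay` above) keeps its arguments out of the log while other methods of the class keep the default.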

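2. Logging aspect

// Also import ILogPrintDTO and IpUtil from wherever they live in your project.

// javax.servlet is assumed here (Spring Boot 2.x); on Spring Boot 3 use jakarta.servlet instead.

import cn.hutool.core.date.DateUtil;

import cn.hutool.core.date.SystemClock;

import com.alibaba.fastjson2.JSON;

import com.ayi.annotation.ILog;

import org.aspectj.lang.ProceedingJoinPoint;

import org.aspectj.lang.annotation.Around;

import org.aspectj.lang.annotation.Aspect;

import org.aspectj.lang.reflect.MethodSignature;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.web.context.request.RequestContextHolder;

import org.springframework.web.context.request.ServletRequestAttributes;

import org.springframework.web.multipart.MultipartFile;

import javax.servlet.http.HttpServletRequest;

import javax.servlet.http.HttpServletResponse;

import java.lang.reflect.Method;

import java.util.Optional;

@Aspect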

public class ILogPrintAspect {

/**

* Logs calls to classes or methods annotated with {@link ILog}

*/

@Around("@within(com.ayi.annotation.ILog) || @annotation(com.ayi.annotation.ILog)")

public Object printMLog(ProceedingJoinPoint joinPoint) throws Throwable {

long startTime = SystemClock.now();

MethodSignature methodSignature = (MethodSignature) joinPoint.getSignature();

// Get the current request; the attributes are null when the method is not called within an HTTP request

ServletRequestAttributes attributes = (ServletRequestAttributes) RequestContextHolder.getRequestAttributes();

HttpServletRequest request = attributes != null ? attributes.getRequest() : null;

Logger log = LoggerFactory.getLogger(methodSignature.getDeclaringType());

String beginTime = DateUtil.now();

Object result = null;

try {

result = joinPoint.proceed();

} finally {

Method targetMethod = joinPoint.getTarget().getClass().getDeclaredMethod(methodSignature.getName(), methodSignature.getMethod().getParameterTypes());

// The method-level annotation takes precedence over the class-level one

ILog logAnnotation = Optional.ofNullable(targetMethod.getAnnotation(ILog.class)).orElse(joinPoint.getTarget().getClass().getAnnotation(ILog.class));

if (logAnnotation != null) {

ILogPrintDTO logPrint = new ILogPrintDTO();

logPrint.setBeginTime(beginTime);

if (logAnnotation.input()) {

logPrint.setInputParams(buildInput(joinPoint));

}

if (logAnnotation.output()) {

logPrint.setOutputParams(result);

}

// Fall back to empty strings when there is no current HTTP request

String ip = "", methodType = "", requestURI = "";

if (request != null) {

ip = IpUtil.getRequestIp(request);

methodType = request.getMethod();

requestURI = request.getRequestURI();

}

log.info("ip : [{}], [{}] {}, executeTime: {}ms, info: {}", ip, methodType, requestURI, SystemClock.now() - startTime, JSON.toJSONString(logPrint));

}

}

return result;

}

private Object[] buildInput(ProceedingJoinPoint joinPoint) {

Object[] args = joinPoint.getArgs();

Object[] printArgs = new Object[args.length];

for (int i = 0; i < args.length; i++) {

if ((args[i] instanceof HttpServletRequest) || args[i] instanceof HttpServletResponse) {

continue;

}

if (args[i] instanceof byte[]) {

printArgs[i] = "byte array";

} else if (args[i] instanceof MultipartFile) {

printArgs[i] = "file";

} else {

printArgs[i] = args[i];

}

}

return printArgs;

}

}
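The around-advice pattern used by the aspect (record the start time, run the target, then log in `finally` so that failed calls are logged too) can be tried without Spring or AspectJ. The sketch below uses a JDK dynamic proxy as a stand-in for the aspect; it is only an illustration of the pattern, not the mechanism Spring AOP uses for this module. `AroundLoggingSketch`, `Greeter`, `withLogging`, and `LINES` are hypothetical names.

```java
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Proxy;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class AroundLoggingSketch {

    // Hypothetical business interface used only for this illustration
    public interface Greeter {
        String greet(String name);
    }

    // Collected log lines; a real aspect would write to an SLF4J logger instead
    static final List<String> LINES = new ArrayList<>();

    // Wraps the target so every call is timed and logged, even when it throws
    static Greeter withLogging(Greeter target) {
        return (Greeter) Proxy.newProxyInstance(
                Greeter.class.getClassLoader(),
                new Class<?>[]{Greeter.class},
                (proxy, method, args) -> {
                    long start = System.nanoTime();
                    Object result = null;
                    try {
                        result = method.invoke(target, args);
                        return result;
                    } catch (InvocationTargetException e) {
                        // Rethrow the exception raised by the target itself
                        throw e.getCause();
                    } finally {
                        long elapsedMs = (System.nanoTime() - start) / 1_000_000;
                        LINES.add(method.getName() + " input=" + Arrays.toString(args)
                                + " output=" + result + " executeTime=" + elapsedMs + "ms");
                    }
                });
    }

    public static void main(String[] args) {
        Greeter greeter = withLogging(name -> "hello " + name);
        System.out.println(greeter.greet("ayi"));
        System.out.println(LINES);
    }
}
```

As in the aspect, the output stays `null` in the log line when the target throws, because the `finally` block runs before any result has been assigned.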

3. Log print entity class

import lombok.Data;

@Data

public class ILogPrintDTO {

/**

* Begin time

*/

private String beginTime;

/**

* Request input parameters

*/

private Object[] inputParams;

/**

* Return value

*/

private Object outputParams;

}

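4. Define the IP resolution utility class

// javax.servlet is assumed here (Spring Boot 2.x); on Spring Boot 3 use jakarta.servlet instead.

import javax.servlet.http.HttpServletRequest;

import java.net.InetAddress;

import java.net.UnknownHostException;

public final class IpUtil {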

/**

* Gets the real client IP address of the request

*/

public static String getRequestIp(HttpServletRequest request) {

// Header added when the request is forwarded through an HTTP proxy server

String ipAddress = request.getHeader("x-forwarded-for");

if (ipAddress == null || ipAddress.length() == 0 || "unknown".equalsIgnoreCase(ipAddress)) {

ipAddress = request.getHeader("Proxy-Client-IP");

}

if (ipAddress == null || ipAddress.length() == 0 || "unknown".equalsIgnoreCase(ipAddress)) {

ipAddress = request.getHeader("WL-Proxy-Client-IP");

}

if (ipAddress == null || ipAddress.length() == 0 || "unknown".equalsIgnoreCase(ipAddress)) {

ipAddress = request.getRemoteAddr();

// When accessed locally, use the IP configured on the local network interface

if ("127.0.0.1".equals(ipAddress) || "0:0:0:0:0:0:0:1".equals(ipAddress)) {

try {

ipAddress = InetAddress.getLocalHost().getHostAddress();

} catch (UnknownHostException e) {

// Keep the loopback address if the local host name cannot be resolved

}

}

}

// When the request passes through multiple proxies, the header holds several IPs separated by ','; the first one is the client's real IP

if (ipAddress != null && ipAddress.indexOf(",") > 0) {

ipAddress = ipAddress.substring(0, ipAddress.indexOf(",")).trim();

}

return ipAddress;

}

}
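The comma-splitting step is easy to get subtly wrong, so here is a small stand-alone sketch of it that needs no servlet API. `ForwardedIpSketch` and `firstForwardedIp` are hypothetical names, and unlike the utility above this variant also skips empty and `unknown` entries, which is an assumption of the sketch rather than the behavior of `IpUtil`. Keep in mind that forwarding headers are set by the client or by proxies, so the value is only as trustworthy as the proxy chain in front of the application.

```java
public class ForwardedIpSketch {

    // Returns the first usable entry of an X-Forwarded-For style value, or the fallback
    static String firstForwardedIp(String headerValue, String fallback) {
        if (headerValue == null) {
            return fallback;
        }
        for (String part : headerValue.split(",")) {
            String candidate = part.trim();
            if (!candidate.isEmpty() && !"unknown".equalsIgnoreCase(candidate)) {
                return candidate;
            }
        }
        return fallback;
    }

    public static void main(String[] args) {
        // Two short addresses: the whole value is only 15 characters long
        System.out.println(firstForwardedIp("1.1.1.1,2.2.2.2", "127.0.0.1"));
        // No header at all: the fallback (for example getRemoteAddr()) is used
        System.out.println(firstForwardedIp(null, "127.0.0.1"));
    }
}
```

The sketch splits on the comma regardless of how long the header value is, so short address pairs are handled the same way as long ones.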

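5. Register the aspect class as a bean in the Spring container

package com.ayi.config;

// Also import ILogPrintAspect from wherever it lives in your project.

import org.springframework.context.annotation.Bean;

public class LogAutoConfiguration {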

/**

* AOP aspect that prints {@link ILog} logs

*/

@Bean

public ILogPrintAspect iLogPrintAspect() {

return new ILogPrintAspect();

}

}

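6. Register the auto-configuration in resources/META-INF/spring.factories

Note: the EnableAutoConfiguration key in spring.factories is read by Spring Boot 2.x. Spring Boot 3 instead reads auto-configuration class names from META-INF/spring/org.springframework.boot.autoconfigure.AutoConfiguration.imports.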

#

# Licensed to the Apache Software Foundation (ASF) under one or more

# contributor license agreements. See the NOTICE file distributed with

# this work for additional information regarding copyright ownership.

# The ASF licenses this file to You under the Apache License, Version 2.0

# (the "License"); you may not use this file except in compliance with

# the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

#

org.springframework.boot.autoconfigure.EnableAutoConfiguration=com.ayi.config.LogAutoConfiguration

7. Configure logging in resources/logback-spring.xml

<?xml version="1.0" encoding="UTF-8"?>

<configuration>

<property name="FILE_ERROR_PATTERN"

value="${FILE_LOG_PATTERN:-%d{${LOG_DATEFORMAT_PATTERN:-yyyy-MM-dd HH:mm:ss.SSS}} ${LOG_LEVEL_PATTERN:-%5p} ${PID:- } --- [%t] %-40.40logger{39} %file:%line: %m%n${LOG_EXCEPTION_CONVERSION_WORD:-%wEx}}"/>

<include resource="org/springframework/boot/logging/logback/defaults.xml"/>

<appender name="CONSOLE" class="ch.qos.logback.core.ConsoleAppender">

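<!-- ThresholdFilter drops events below INFO; a LevelFilter without onMatch/onMismatch would filter nothing -->

<filter class="ch.qos.logback.classic.filter.ThresholdFilter">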

<level>INFO</level>

</filter>

<encoder>

<pattern>${CONSOLE_LOG_PATTERN}</pattern>

<charset>UTF-8</charset>

</encoder>

</appender>

<appender name="FILE_INFO" class="ch.qos.logback.core.rolling.RollingFileAppender">

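<!-- Filtering by the INFO threshold alone would still let ERROR logs through, because ERROR is a higher level. The LevelFilter below denies ERROR events and accepts everything else, which keeps ERROR logs out of this file. -->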

<filter class="ch.qos.logback.classic.filter.LevelFilter">

<!-- Match ERROR events -->

<level>ERROR</level>

<!-- Deny events that match -->

<onMatch>DENY</onMatch>

<!-- Accept events that do not match -->

<onMismatch>ACCEPT</onMismatch>

</filter>

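<!-- Log file name. Without a <File> element, only the FileNamePattern path rule is used. If both <File> and <FileNamePattern> are present, the current day's log is written to <File>, and on the next day that file is automatically renamed with the previous day's date. In other words, <File> always holds the current day's log. -->

<!--<File>logs/info.demo-logback.log</File>-->

<!-- Rolling policy: roll over by time with TimeBasedRollingPolicy -->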

<rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">

<!-- File path pattern. It defines how the log is split: each day's log is archived into its own file, which helps keep logs from filling the disk. -->

<FileNamePattern>logs/demo-logback/info.created_on_%d{yyyy-MM-dd}.part_%i.log</FileNamePattern>

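<!-- Keep only the most recent 90 days of logs -->

<maxHistory>90</maxHistory>

<!-- Caps the total size of the archived log files; once the cap is reached, the oldest archives are deleted -->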

<!--<totalSizeCap>1GB</totalSizeCap>-->

<timeBasedFileNamingAndTriggeringPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedFNATP">

<!-- maxFileSize: size limit of the active log file; set to 2MB here -->

<maxFileSize>2MB</maxFileSize>

</timeBasedFileNamingAndTriggeringPolicy>

</rollingPolicy>

<!--<triggeringPolicy class="ch.qos.logback.core.rolling.SizeBasedTriggeringPolicy">-->

<!--<maxFileSize>1KB</maxFileSize>-->

<!--</triggeringPolicy>-->

<encoder>

<pattern>${FILE_LOG_PATTERN}</pattern>

<charset>UTF-8</charset> <!-- Set the character set here -->

</encoder>

</appender>

<appender name="FILE_ERROR" class="ch.qos.logback.core.rolling.RollingFileAppender">

<!-- To keep only ERROR-level logs in this file, filter with a ThresholdFilter; without it, everything from the root level (info) upward would be written -->

<filter class="ch.qos.logback.classic.filter.ThresholdFilter">

<level>ERROR</level>

</filter>

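<!-- Log file name. Without a <File> element, only the FileNamePattern path rule is used. If both <File> and <FileNamePattern> are present, the current day's log is written to <File>, and on the next day that file is automatically renamed with the previous day's date. In other words, <File> always holds the current day's log. -->

<!--<File>logs/error.demo-logback.log</File>-->

<!-- Rolling policy: roll over by time with TimeBasedRollingPolicy -->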

<rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">

<!-- File path pattern. It defines how the log is split: each day's log is archived into its own file, which helps keep logs from filling the disk. -->

<FileNamePattern>logs/demo-logback/error.created_on_%d{yyyy-MM-dd}.part_%i.log</FileNamePattern>

<!-- Keep only the most recent 90 days of logs -->

<maxHistory>90</maxHistory>

<timeBasedFileNamingAndTriggeringPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedFNATP">

<!-- maxFileSize: size limit of the active log file; set to 2MB here -->

<maxFileSize>2MB</maxFileSize>

</timeBasedFileNamingAndTriggeringPolicy>

</rollingPolicy>

<encoder>

<pattern>${FILE_ERROR_PATTERN}</pattern>

<charset>UTF-8</charset> <!-- Set the character set here -->

</encoder>

</appender>

<root level="info">

<appender-ref ref="CONSOLE"/>

<appender-ref ref="FILE_INFO"/>

<appender-ref ref="FILE_ERROR"/>

</root>

</configuration>
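The two filter types used in this configuration decide differently, which is why FILE_INFO and FILE_ERROR end up with disjoint content. The sketch below is a plain-Java model of those decision rules as described in the Logback manual; it does not call Logback itself, and `LogbackFilterSketch`, `Level`, `Reply`, `levelFilter`, and `thresholdFilter` are hypothetical names for illustration only.

```java
public class LogbackFilterSketch {

    // Levels in ascending severity, mirroring the ordering Logback uses
    enum Level { DEBUG, INFO, WARN, ERROR }

    // Possible filter decisions; NEUTRAL means "let the next filter decide"
    enum Reply { DENY, NEUTRAL, ACCEPT }

    // Model of a LevelFilter: an exact match on one level picks onMatch, anything else onMismatch
    static Reply levelFilter(Level event, Level configured, Reply onMatch, Reply onMismatch) {
        return event == configured ? onMatch : onMismatch;
    }

    // Model of a ThresholdFilter: deny events below the threshold, stay neutral otherwise
    static Reply thresholdFilter(Level event, Level threshold) {
        return event.compareTo(threshold) >= 0 ? Reply.NEUTRAL : Reply.DENY;
    }

    public static void main(String[] args) {
        for (Level level : Level.values()) {
            // FILE_INFO: LevelFilter(ERROR, onMatch=DENY, onMismatch=ACCEPT)
            Reply info = levelFilter(level, Level.ERROR, Reply.DENY, Reply.ACCEPT);
            // FILE_ERROR: ThresholdFilter(ERROR)
            Reply error = thresholdFilter(level, Level.ERROR);
            System.out.println(level + " -> FILE_INFO: " + info + ", FILE_ERROR: " + error);
        }
    }
}
```

In this model an ERROR event is denied by the FILE_INFO filter and passes the FILE_ERROR filter, while INFO and WARN events do the opposite. DEBUG events never reach either appender in the configuration above because the root logger level is info.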