Scraping Tophub Today (今日热榜) rankings into .txt files with Python

Tophub Today (今日热榜): https://tophub.today/

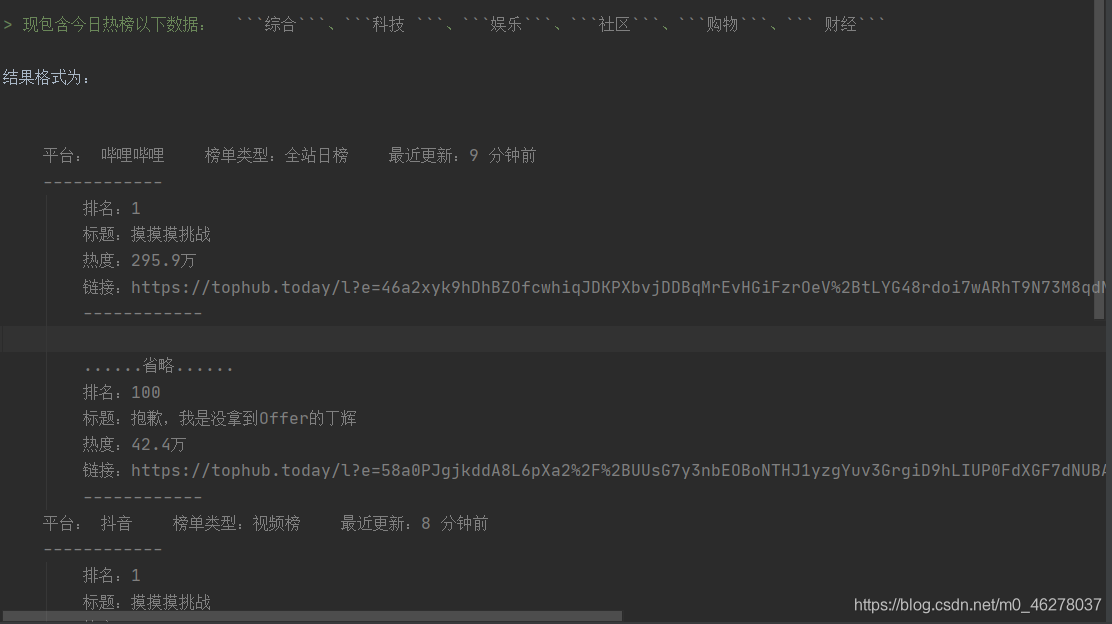

The data that is scraped and the format it is saved in:

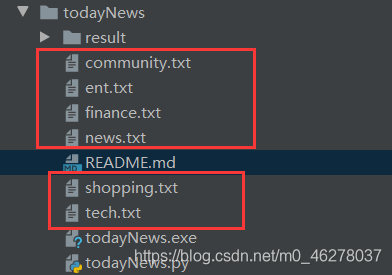

After scraping, the data is saved as .txt files, one per category (news.txt, tech.txt, and so on):

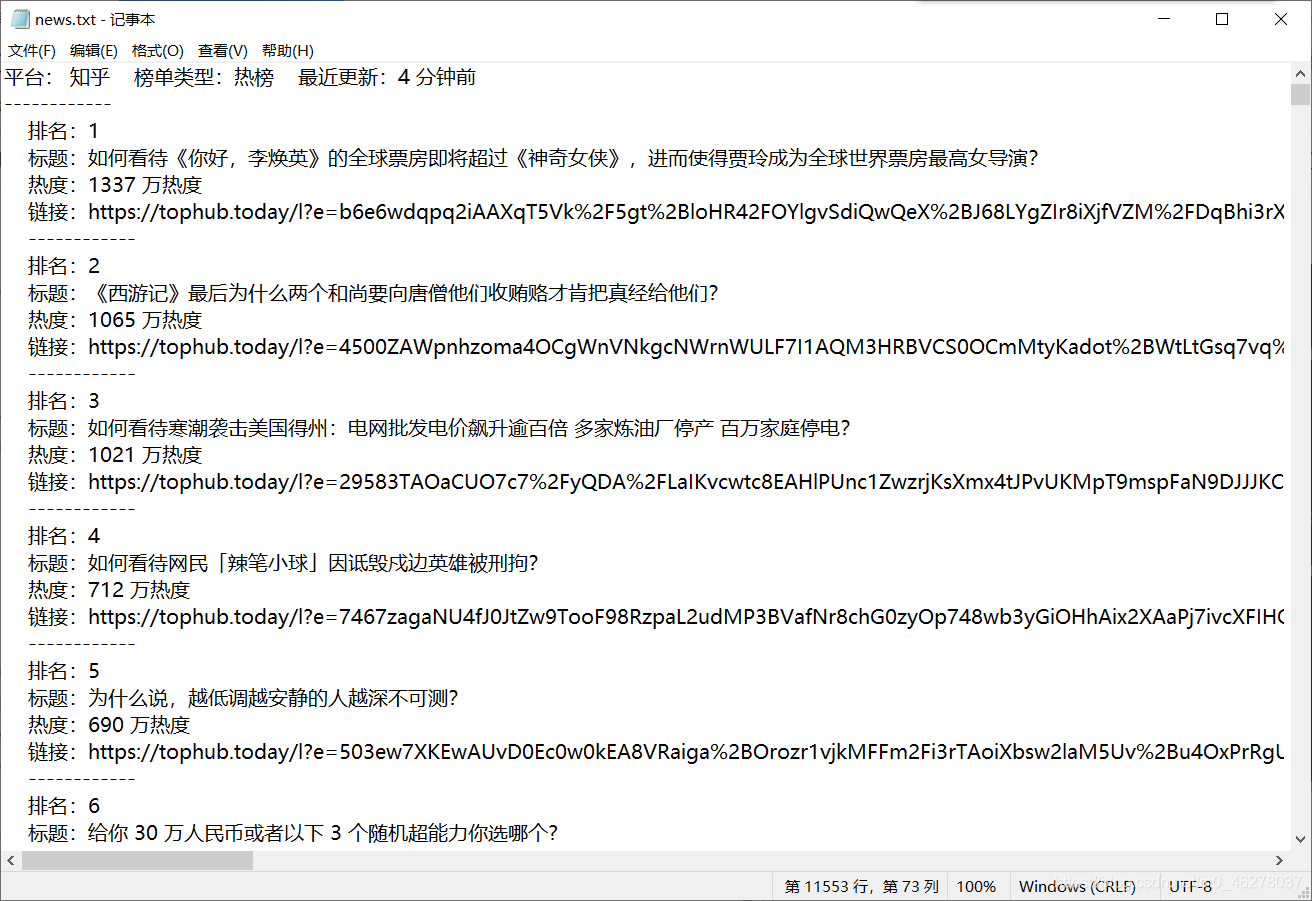
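The save step can be sketched on its own. The snippet below is a minimal illustration, not the script's actual code: `ENTRY_FMT`, `append_entries`, and `demo` are hypothetical names, the English field labels are illustrative, and the entries are placeholders rather than real scraped data. It opens the file once in append mode and writes one formatted record per entry.

```python
import os
import tempfile

# Hypothetical record layout: (rank, title, heat, link). The values used
# below are placeholders, not real scraped data.
ENTRY_FMT = "Rank: {}\nTitle: {}\nHeat: {}\nLink: {}\n------------\n"


def append_entries(path, entries):
    """Append formatted entries to a UTF-8 text file, opening it only once."""
    with open(path, "a", encoding="utf-8") as f:
        for rank, title, heat, link in entries:
            f.write(ENTRY_FMT.format(rank, title, heat, link))


def demo():
    """Write two placeholder entries to a temporary news.txt and return its text."""
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "news.txt")
        append_entries(path, [(1, "Example title A", "100", "https://example.com/a")])
        append_entries(path, [(2, "Example title B", "50", "https://example.com/b")])
        with open(path, encoding="utf-8") as f:
            return f.read()


if __name__ == "__main__":
    print(demo())
```

Because the file is opened in append mode, running the scraper twice adds a second copy of the data to the same file. Delete the old .txt files first if a fresh snapshot is wanted.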

Excerpt of the contents:

Source code with comments:

```python
import requests
from bs4 import BeautifulSoup


def download_page(url):
    """Fetch a page and return its HTML, or None if the request fails."""
    headers = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.130 Safari/537.36"}
    try:
        r = requests.get(url, timeout=30, headers=headers)
        r.raise_for_status()  # treat HTTP error codes as failures too
        return r.text
    except requests.RequestException as e:
        print("Request failed, please inspect your url or setup:", e)
        return None


def get_content(html, tag):
    """Parse one category page and append every board on it to <tag>.txt."""
    output = "Rank: {}\nTitle: {}\nHeat: {}\nLink: {}\n------------\n"
    output2 = "Platform: {}  List type: {}  Last updated: {}\n------------\n"
    soup = BeautifulSoup(html, 'html.parser')
    con = soup.find('div', attrs={'class': 'bc-cc'})
    if con is None:  # layout changed or the request was blocked
        print("No board container found for", tag)
        return
    for board in con.find_all('div', class_='cc-cd'):
        platform = board.find('div', class_='cc-cd-lb').get_text()    # platform name
        updated = board.find('div', class_='i-h').get_text()          # last update time
        kind = board.find('span', class_='cc-cd-sb-st').get_text()    # list type
        links = board.find('div', class_='cc-cd-cb-l').find_all('a')  # all entry links
        save_txt(tag, output2.format(platform, kind, updated))
        # Write each entry right below the header of the board it belongs to
        for k in links:
            num = k.find('span', class_='s').get_text()    # rank
            title = k.find('span', class_='t').get_text()  # title
            hot = k.find('span', class_='e').get_text()    # heat
            save_txt(tag, output.format(num, title, hot, k['href']))


def save_txt(tag, *args):
    """Append the given strings to <tag>.txt."""
    with open(tag + '.txt', 'a', encoding='utf-8') as f:
        for text in args:
            f.write(text)


def main():
    # General news, tech, entertainment, community, shopping, finance
    page = ['news', 'tech', 'ent', 'community', 'shopping', 'finance']
    for tag in page:
        url = 'https://tophub.today/c/{}'.format(tag)
        html = download_page(url)
        if html is None:  # skip categories that could not be downloaded
            continue
        get_content(html, tag)


if __name__ == '__main__':
    main()
```
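The parsing logic can be tried offline, without hitting the site. The sketch below is an illustration under stated assumptions: `SAMPLE_HTML` is a hand-written fragment that only imitates the class names used in the script (it is not the real tophub.today markup), and `text_of` and `parse_boards` are hypothetical helpers. Rather than writing to a file straight away, it returns one dict per board, so each entry stays attached to its own platform.

```python
from bs4 import BeautifulSoup

# Hand-written sample that only imitates the class names used in the script;
# it is not a copy of the real tophub.today markup.
SAMPLE_HTML = """
<div class="bc-cc">
  <div class="cc-cd">
    <div class="cc-cd-lb">Example Platform</div>
    <span class="cc-cd-sb-st">Example List</span>
    <div class="cc-cd-cb-l">
      <a href="https://example.com/1"><span class="s">1</span><span class="t">First title</span><span class="e">100</span></a>
      <a href="https://example.com/2"><span class="s">2</span><span class="t">Second title</span><span class="e">50</span></a>
    </div>
    <div class="i-h">1 minute ago</div>
  </div>
</div>
"""


def text_of(node, name, cls):
    """Return the stripped text of the first matching descendant, or '' if absent."""
    found = node.find(name, class_=cls)
    return found.get_text(strip=True) if found is not None else ""


def parse_boards(html):
    """Return one dict per board, each carrying its own list of entries."""
    soup = BeautifulSoup(html, "html.parser")
    boards = []
    for card in soup.find_all("div", class_="cc-cd"):
        body = card.find("div", class_="cc-cd-cb-l")
        links = body.find_all("a") if body is not None else []
        entries = [
            {
                "rank": text_of(a, "span", "s"),
                "title": text_of(a, "span", "t"),
                "heat": text_of(a, "span", "e"),
                "href": a.get("href", ""),
            }
            for a in links
        ]
        boards.append({
            "platform": text_of(card, "div", "cc-cd-lb"),
            "kind": text_of(card, "span", "cc-cd-sb-st"),
            "updated": text_of(card, "div", "i-h"),
            "entries": entries,
        })
    return boards


if __name__ == "__main__":
    for board in parse_boards(SAMPLE_HTML):
        print(board["platform"], len(board["entries"]))
```

Returning empty strings for missing elements keeps one malformed board from aborting the whole run. Whether that is preferable to failing loudly depends on how the output is used.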

