Python Crawler: The Coolapk (酷安) App Store

A crawler for the Coolapk app store that extracts the download link of each app.
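To illustrate the extraction step on its own, here is a minimal sketch that pulls an app name and download link out of a detail page with regular expressions. The `SAMPLE_HTML` snippet, the `example.com` URL and the `extract_app` helper are hypothetical and only stand in for a real detail page; the link pattern is a slightly stricter variation (`[^"]*?` instead of `.*?`) of the one used in the script.

```python
import re

# Hypothetical stand-in for a detail page; the real markup may differ.
SAMPLE_HTML = '''
<p class="detail_app_title">Example App<span class="list_app_info">1.0</span></p>
<script>
function onDownloadApk() { window.location.href = "https://example.com/dl?pn=com.example.app&from=click"; }
</script>
'''


def extract_app(html):
    """Return {'name', 'url'} for a detail page, or None if either is missing."""
    # App name: text between the title tag and the next '<'.
    name = re.search(r'<p class="detail_app_title">(.*?)<', html)
    # Download link: a quoted string that ends with '&from=click'.
    link = re.search(r'"([^"]*?)&from=click', html)
    if not (name and link):
        return None
    return {'name': name.group(1).strip(), 'url': link.group(1)}


if __name__ == '__main__':
    print(extract_app(SAMPLE_HTML))
```

Returning `None` instead of indexing `[0]` on an empty result lets the caller skip pages whose layout does not match, rather than failing with an `IndexError`.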

Source code:

    # -*- coding: UTF-8 -*-
    import csv
    import queue
    import re
    import threading

    import requests
    from lxml import etree


    class KuAn(object):

        def __init__(self, type, page):
            # Validate the category first so a bad value never creates a CSV file.
            if type not in ['apk', 'game']:
                raise ValueError('type must be "apk" or "game"')
            self.type = type
            self.page = page
            self.header = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.88 Safari/537.36'}
            self.csv_header = ['App name', 'Download link']
            with open('{}.csv'.format(self.type), 'a+', newline='', encoding='utf-8-sig') as f:
                csv_file = csv.writer(f)
                csv_file.writerow(self.csv_header)
            self.url = 'https://www.coolapk.com'
            self.base_url = 'https://www.coolapk.com/{}'.format(type)
            self.page_url_queue = queue.Queue()
            self.detail_url_queue = queue.Queue()
            self.save_queue = queue.Queue()

        def get_detail_url_fun(self):
            # Worker: fetch a listing page and queue every app detail URL on it.
            while True:
                page_url = self.page_url_queue.get()
                try:
                    req = requests.get(url=page_url, headers=self.header, timeout=10)
                    if req.status_code == 200:
                        req.encoding = req.apparent_encoding
                        html = etree.HTML(req.text)
                        path = html.xpath('//*[@class="app_left_list"]/a/@href')
                        for href in path:
                            detail_url = self.url + href
                            print('Fetching detail link:', detail_url)
                            self.detail_url_queue.put(detail_url)
                except Exception as e:
                    print('Failed to fetch listing page:', page_url, e)
                finally:
                    # Always mark the task done, otherwise q.join() never returns.
                    self.page_url_queue.task_done()

        def get_download_url_fun(self):
            # Worker: fetch a detail page and extract the app name and download link.
            url_reg = '"(.*?)&from=click'
            name_reg = '<p class="detail_app_title">(.*?)<'
            while True:
                detail_url = self.detail_url_queue.get()
                try:
                    req = requests.get(url=detail_url, headers=self.header, timeout=10)
                    if req.status_code == 200:
                        req.encoding = 'utf-8'
                        urls = re.findall(url_reg, req.text)
                        names = re.findall(name_reg, req.text)
                        # Skip pages where either pattern finds nothing.
                        if urls and names:
                            data = {'name': names[0], 'url': urls[0]}
                            print('Got data:', data)
                            self.save_queue.put(data)
                except Exception as e:
                    print('Failed to fetch detail page:', detail_url, e)
                finally:
                    self.detail_url_queue.task_done()

        def save_data_fun(self):
            # Worker: append each result to the CSV file.
            while True:
                data = self.save_queue.get()
                try:
                    name = data.get('name')
                    url = data.get('url')
                    with open('{}.csv'.format(self.type), 'a+', newline='', encoding='utf-8-sig') as f:
                        csv_file = csv.writer(f)
                        csv_file.writerow([name, url])
                finally:
                    self.save_queue.task_done()

        def run(self):
            for page in range(1, self.page + 1):
                page_url = self.base_url + '?p={}'.format(page)
                print('Dispatching page url', page_url)
                self.page_url_queue.put(page_url)

            thread_list = []
            for _ in range(2):
                get_detail_url = threading.Thread(target=self.get_detail_url_fun)
                thread_list.append(get_detail_url)

            for _ in range(5):
                get_download_url = threading.Thread(target=self.get_download_url_fun)
                thread_list.append(get_download_url)

            for _ in range(2):
                save_data = threading.Thread(target=self.save_data_fun)
                thread_list.append(save_data)

            for t in thread_list:
                # Daemon threads exit with the main thread (setDaemon() is deprecated).
                t.daemon = True
                t.start()

            # Wait for each stage in order: listing pages, detail pages, CSV writes.
            for q in [self.page_url_queue, self.detail_url_queue, self.save_queue]:
                q.join()

            print('Crawl finished, exiting')


    if __name__ == '__main__':
        KuAn(type='apk', page=302).run()
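The script above relies on a producer-consumer pattern: work items go into a `queue.Queue`, daemon worker threads pull from it in an endless loop, and the main thread blocks on `join()` until every item has been marked done. The following is a minimal, network-free sketch of that pattern. The `run_pipeline` helper and its parameters are hypothetical names introduced for illustration and are not part of the original script.

```python
import queue
import threading


def run_pipeline(items, transform, workers=3):
    """Apply transform to every item using a pool of daemon worker threads."""
    todo = queue.Queue()
    results = []
    lock = threading.Lock()  # protects the shared results list

    def worker():
        while True:
            item = todo.get()
            try:
                value = transform(item)
                with lock:
                    results.append(value)
            except Exception as exc:
                # A failing item is reported and skipped, not fatal.
                print('skipped', item, exc)
            finally:
                # Must run for every get(), or todo.join() blocks forever.
                todo.task_done()

    for item in items:
        todo.put(item)
    for _ in range(workers):
        threading.Thread(target=worker, daemon=True).start()

    todo.join()  # returns once every queued item has been marked done
    return results


if __name__ == '__main__':
    print(sorted(run_pipeline(range(5), lambda x: x * 2)))
```

Calling `task_done()` in a `finally` block is the important design choice here: if a worker raised before reaching it, the matching `join()` would never return and the program would hang.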

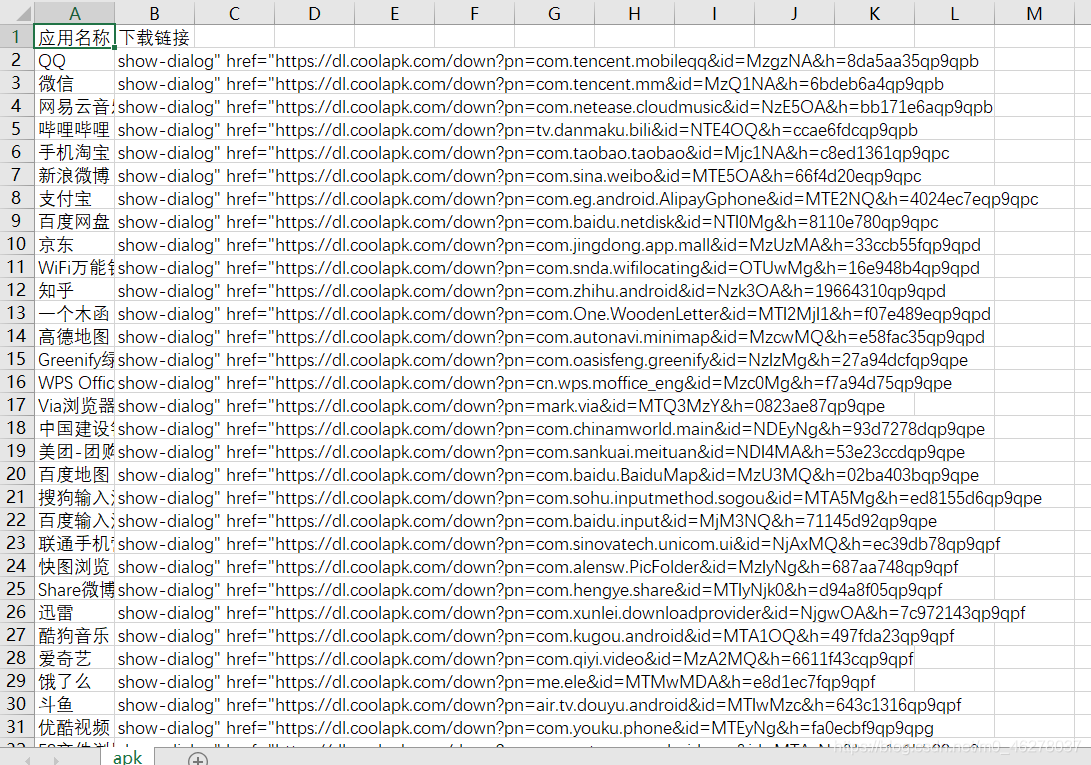

Run results (partial):
