SLAM:理论与实践

第一讲,第二讲:概述与预备知识

1,课程内容与预备知识

1.1,SLAM是什么怎么学:

1,SLAM:Simultaneous Localization and Mapping

2,参考书:MVG多视图几何,机器人学状态估计State Estimation For Robotics(如何估计相机的运动)

3,视觉SLAM十四讲

1.2,基础知识:

数学:高等数学,线代,矩阵论,概率论

编程:等知识

2,SLAM是什么

2.1,作用:

1,定位:提取点,来确定相机的位置,运动轨迹。

2,建图:根据单目相机提取的点,来构建稀疏-半稠密的重建,用rgb-d相机可进行三维稠密的重建

2.2,应用:

1,手持设备定位

2,自动驾驶定位

3,AR增强现实

2.3,传感器:

内质:感受机器人本体的信息,IMU,激光,相机,编码器

外质:安装在环境中,二维码,GPS,导轨(受环境的限制)

2.4,相机:

分类:

单目相机Monocular:

双目相机Stereo:

深度相机RGB-D:

其他,全景,鱼眼相机

相机的特点:二维投影形式记录了三维世界的信息,丢失了距离的纬度

3,视觉SLAM数学表述与框架

3.1,框架

视觉里程计:前端,连续的相邻图像间进行移动轨迹的估计

后端:更长时间内,由于误差的累计,会出现偏移,是估计,优化问题,符合长时间领域的观测。

回环检测loop closing:由于误差的累积,可能到同一位置时,路径有差别,要检测出轨迹回到某个地方。

建图:

方法:特征点法,直接法

后端:

3.2,SLAM问题的数学描述

4,实践:Linux下的C++编程基础

5,作业

1,熟悉linux

1.1 如何在Ubuntu 中安装软件(命令⾏界⾯)?它们通常被安装在什么地⽅?

1)apt-get 方式的安装;

普通安装:sudo apt-get install XXX (包名)

修复安装:sudo apt-get -f install XXX

重新安装:sudo apt-get -f reinstall XXX

(例如安装g++编译环境,可在终端中输入命令:sudo apt-get install g++,附加说明可

通过g++ -v命令查看g++版本)

2)dpkg方式安装

sudo dpkg -i package_name.deb

安装后软件默认位置 /usr/share

1.2 linux 的环境变量是什么?我如何定义新的环境变量?

环境变量:环境变量是在操作系统中一个具有特定名字的对象,它包含了一个或多个应用程序将使用到的信息;它分为永久生效和shell临时生效两种。

1)对所有用户生效的永久性变量(系统级):

这类变量对系统内的所有用户都生效,所有用户都可以使用这类变量。作用范围是整个系统。

设置方式: 用vim 或者gedit 打开/etc/profile 文件,然后添加我们想要的环境变量,用export指令添加环境变量。这个文件只有在root(超级用户)下才能修改。我们可以在etc目录下使用ls -l查看这个文件的用户及权限。以gedit为例:

$ gedit /etc/profile

$ export 新增环境变量

注:添加完成后新的环境变量不会立即生效,需要source /etc/profile 该文件才会生效。否则只能在下次重进此用户时才能生效。记住要在终端source一下。。。

2)对当前用户生效的永久性变量(用户级):

只针对当前用户,和上面的一样,只不过不需要在etc 下面进行添加,直接在.bash_profile文件最下面用export添加就好了。

这里 .bashrc和.bash_profile原则上来说设置此类环境变量时在这两个文件任意一个里面添加都是可以的。

~/.bash_profile是交互式login方式进入bash shell运行。

~/ .bashrc是交互式non-login方式进入bash shell运行。

二者设置大致相同。

.bash_profile文件只会在用户登录的时候读取一次;而.bashrc在每次打开终端进行一次新的会话时都会读取。

3)临时有效的环境变量(只对当前shell有效):

此类环境变量只对当前的shell有效。当我们退出登录或者关闭终端再重新打开时,这个环境变量就会消失,是临时的,可以直接使用export指令添加。

$ export 新增环境变量

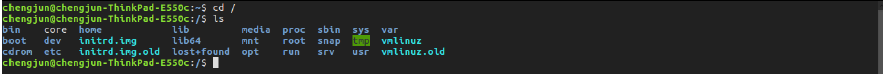

`1.3 linux 根⽬录下⾯的⽬录结构是什么样的?⾄少说出3 个⽬录的⽤途。

根目录一般结构如下:(以本人笔记本目前ubuntu16.04系统为例,截图如下) 1)/bin 用户二进制文件

1)/bin 用户二进制文件

包含二进制可执行文件,系统所有用户可执行文件都在这个文件夹里,例如:ls,cp,ping等。

2)/sbin 系统二进制文件

包含二进制可执行文件,但只能由系统管理员运行,对系统进行维护。

3)/etc 配置文件

包含所有程序配置文件,也包含了用于启动/停止单个程序的启动和关闭shell脚本。

4)/dev 设备文件

包含终端所有设备,USB或连接到系统的任何设备。例如:/dev/tty1、/dev/usbmon0

5)/proc 进程信息

包含系统进程的相关信息。

这是一个虚拟的文件系统,包含有关正在运行的进程的信息。例如:/proc/{pid}目录中包含的与特定pid相关的信息。

6)/var 变量文件

可以找到内容可能增长的文件。这包括 - 系统日志文件(/var/log);包和数据库文件(/var/lib);电子邮件(/var/mail);打印队列(/var/spool);锁文件(/var/lock);多次重新启动需要的临时文件(/var/tmp);

7)/tmp 临时文件

包含系统和用户创建的临时文件。当系统重新启动时,这个目录下的文件都将被删除。

8)/usr 用户程序

包含二进制文件、库文件、文档和二级程序的源代码。

/usr/bin 中包含用户程序的二进制文件。如果你在/bin 中找不到用户二进制文件,到/usr/bin目录看看。例如:at、awk、cc、less、scp。

/usr/sbin 中包含系统管理员的二进制文件。如果你在/sbin 中找不到系统二进制文件,

到/usr/sbin目录看看。例如:atd、cron、sshd、useradd、userdel。

/usr/lib中包含了/usr/bin和/usr/sbin用到的库。

/usr/local 中包含了从源安装的用户程序。例如,当你从源安装Apache,它会在/usr/local/apache2中。

9)/home HOME目录

所有用户用来存档他们的个人档案。

10)/boot 引导加载程序文件

包含引导加载程序相关的文件。内核的initrd、vmlinux、grub文件位于/boot下。

11)/lib 系统库

包含支持位于/bin和/sbin下的二进制文件的库文件,库文件名为 ld或lib.so.*

12)/opt 可选的附加应用程序

opt代表opitional;包含从个别厂商的附加应用程序。附加应用程序应该安装在/opt/或者/opt/的子目录下。

13)/mnt 挂载目录

临时安装目录,系统管理员可以挂载文件系统。

14)/media 可移动媒体设备

用于挂载可移动设备的临时目录。

举例来说,挂载CD-ROM的/media/cdrom,挂载软盘驱动器的/media/floppy;

15)/srv 服务数据

srv代表服务。包含服务器特定服务相关的数据。例如,/srv/cvs包含cvs相关的数据。

1.4 假设我要给a.sh 加上可执⾏权限,该输⼊什么命令?

chmod 777 +文件名: 将文件设置成对拥有者、组成员、其他人可读、可写、可执行。

chmod a+x +文件名 :将文件在原来的配置上增加可执行权限。

1.5假设我要将a.sh ⽂件的所有者改成xiang:xiang,该输⼊什么命令?

chown xiang:xiang a.sh

第三讲:三维空间的刚体运动

第四讲:李群与李代数

第五讲:第六讲:相机模型与非线性优化

第七讲:特征点法视觉里程计

章节总括:代数的方式去求解,优化的方式去求解,有了2D的信息能够推出3D的信息,深度的信息,三角化

1,特征点提取与匹配

1,由于是RGBD图像,2d-2d 2d-3d 3d-3d的代码都可以用。

2,ORB是一个类

ORB特征点提取,匹配代码:feature_extraction.cpp代码

1 #include <iostream> 2 #include <opencv2/core/core.hpp> 3 #include <opencv2/features2d/features2d.hpp> //features2d模块支持很多很多的特征,包括orb。 4 #include <opencv2/highgui/highgui.hpp> 5 6 using namespace std; 7 using namespace cv; 8 9 int main ( int argc, char** argv ) 10 { 11 if ( argc != 3 ) 12 { 13 cout<<"usage: feature_extraction img1 img2"<<endl; 14 return 1; 15 } 16 //-- 读取图像 17 Mat img_1 = imread ( argv[1], CV_LOAD_IMAGE_COLOR ); 18 Mat img_2 = imread ( argv[2], CV_LOAD_IMAGE_COLOR ); 19 20 //-- 初始化 21 std::vector<KeyPoint> keypoints_1, keypoints_2; 22 Mat descriptors_1, descriptors_2; 23 Ptr<FeatureDetector> detector = ORB::create(); 24 Ptr<DescriptorExtractor> descriptor = ORB::create(); 25 // Ptr<FeatureDetector> detector = FeatureDetector::create(detector_name); 26 // Ptr<DescriptorExtractor> descriptor = DescriptorExtractor::create(descriptor_name); 27 Ptr<DescriptorMatcher> matcher = DescriptorMatcher::create ( "BruteForce-Hamming" ); 28 29 //-- 第一步:检测 Oriented FAST 角点位置 30 detector->detect ( img_1,keypoints_1 ); 31 detector->detect ( img_2,keypoints_2 ); 32 33 //-- 第二步:根据角点位置计算 BRIEF 描述子 34 descriptor->compute ( img_1, keypoints_1, descriptors_1 ); 35 descriptor->compute ( img_2, keypoints_2, descriptors_2 ); 36 37 Mat outimg1; 38 drawKeypoints( img_1, keypoints_1, outimg1, Scalar::all(-1), DrawMatchesFlags::DEFAULT ); 39 imshow("ORB特征点",outimg1); 40 41 //-- 第三步:对两幅图像中的BRIEF描述子进行匹配,使用 Hamming 距离 42 vector<DMatch> matches; 43 //BFMatcher matcher ( NORM_HAMMING ); 44 matcher->match ( descriptors_1, descriptors_2, matches ); 45 46 //-- 第四步:匹配点对筛选 47 double min_dist=10000, max_dist=0; 48 49 //找出所有匹配之间的最小距离和最大距离, 即是最相似的和最不相似的两组点之间的距离 50 for ( int i = 0; i < descriptors_1.rows; i++ ) 51 { 52 double dist = matches[i].distance; 53 if ( dist < min_dist ) min_dist = dist; 54 if ( dist > max_dist ) max_dist = dist; 55 } 56 57 // 仅供娱乐的写法 58 min_dist = min_element( matches.begin(), matches.end(), [](const DMatch& m1, const DMatch& m2) {return m1.distance<m2.distance;} )->distance; 59 max_dist = max_element( matches.begin(), matches.end(), [](const DMatch& m1, const DMatch& m2) {return m1.distance<m2.distance;} )->distance; 60 61 printf ( "-- Max dist : %f \n", max_dist ); 62 printf ( "-- Min dist : %f \n", min_dist ); 63 64 //当描述子之间的距离大于两倍的最小距离时,即认为匹配有误.但有时候最小距离会非常小,设置一个经验值30作为下限. 65 std::vector< DMatch > good_matches; 66 for ( int i = 0; i < descriptors_1.rows; i++ ) 67 { 68 if ( matches[i].distance <= max ( 2*min_dist, 30.0 ) ) 69 { 70 good_matches.push_back ( matches[i] ); 71 } 72 } 73 74 //-- 第五步:绘制匹配结果 75 Mat img_match; 76 Mat img_goodmatch; 77 drawMatches ( img_1, keypoints_1, img_2, keypoints_2, matches, img_match ); 78 drawMatches ( img_1, keypoints_1, img_2, keypoints_2, good_matches, img_goodmatch ); 79 imshow ( "所有匹配点对", img_match ); 80 imshow ( "优化后匹配点对", img_goodmatch ); 81 waitKey(0); 82 83 return 0; 84 }

2,2D-2D 对极几何

从单应矩阵恢复R,t;

求得R,t利用三角化计算特征点的3D位置,即深度

给E算R 和 t

2D-2D对极几何代码:pose_estimation_2d2d.cpp

1 #include <iostream> 2 #include <opencv2/core/core.hpp> 3 #include <opencv2/features2d/features2d.hpp> 4 #include <opencv2/highgui/highgui.hpp> 5 #include <opencv2/calib3d/calib3d.hpp> 6 // #include "extra.h" // use this if in OpenCV2 7 using namespace std; 8 using namespace cv; 9 10 /**************************************************** 11 * 本程序演示了如何使用2D-2D的特征匹配估计相机运动 12 * **************************************************/ 13 14 void find_feature_matches ( 15 const Mat& img_1, const Mat& img_2, 16 std::vector<KeyPoint>& keypoints_1, 17 std::vector<KeyPoint>& keypoints_2, 18 std::vector< DMatch >& matches ); 19 20 void pose_estimation_2d2d ( 21 std::vector<KeyPoint> keypoints_1, 22 std::vector<KeyPoint> keypoints_2, 23 std::vector< DMatch > matches, 24 Mat& R, Mat& t ); 25 26 // 像素坐标转相机归一化坐标 27 Point2d pixel2cam ( const Point2d& p, const Mat& K ); 28 29 int main ( int argc, char** argv ) 30 { 31 if ( argc != 3 ) 32 { 33 cout<<"usage: pose_estimation_2d2d img1 img2"<<endl; 34 return 1; 35 } 36 //-- 读取图像 37 Mat img_1 = imread ( argv[1], CV_LOAD_IMAGE_COLOR ); 38 Mat img_2 = imread ( argv[2], CV_LOAD_IMAGE_COLOR ); 39 40 vector<KeyPoint> keypoints_1, keypoints_2; 41 vector<DMatch> matches; 42 find_feature_matches ( img_1, img_2, keypoints_1, keypoints_2, matches ); 43 cout<<"一共找到了"<<matches.size() <<"组匹配点"<<endl; 44 45 //-- 估计两张图像间运动 46 Mat R,t; 47 pose_estimation_2d2d ( keypoints_1, keypoints_2, matches, R, t ); 48 49 //-- 验证E=t^R*scale 50 Mat t_x = ( Mat_<double> ( 3,3 ) << 51 0, -t.at<double> ( 2,0 ), t.at<double> ( 1,0 ), 52 t.at<double> ( 2,0 ), 0, -t.at<double> ( 0,0 ), 53 -t.at<double> ( 1.0 ), t.at<double> ( 0,0 ), 0 ); 54 55 cout<<"t^R="<<endl<<t_x*R<<endl; 56 57 //-- 验证对极约束 58 Mat K = ( Mat_<double> ( 3,3 ) << 520.9, 0, 325.1, 0, 521.0, 249.7, 0, 0, 1 ); 59 for ( DMatch m: matches ) 60 { 61 Point2d pt1 = pixel2cam ( keypoints_1[ m.queryIdx ].pt, K ); 62 Mat y1 = ( Mat_<double> ( 3,1 ) << pt1.x, pt1.y, 1 ); 63 Point2d pt2 = pixel2cam ( keypoints_2[ m.trainIdx ].pt, K ); 64 Mat y2 = ( Mat_<double> ( 3,1 ) << pt2.x, pt2.y, 1 ); 65 Mat d = y2.t() * t_x * R * y1; 66 cout << "epipolar constraint = " << d << endl; 67 } 68 return 0; 69 } 70 71 void find_feature_matches ( const Mat& img_1, const Mat& img_2, 72 std::vector<KeyPoint>& keypoints_1, 73 std::vector<KeyPoint>& keypoints_2, 74 std::vector< DMatch >& matches ) 75 { 76 //-- 初始化 77 Mat descriptors_1, descriptors_2; 78 // used in OpenCV3 79 Ptr<FeatureDetector> detector = ORB::create(); 80 Ptr<DescriptorExtractor> descriptor = ORB::create(); 81 // use this if you are in OpenCV2 82 // Ptr<FeatureDetector> detector = FeatureDetector::create ( "ORB" ); 83 // Ptr<DescriptorExtractor> descriptor = DescriptorExtractor::create ( "ORB" ); 84 Ptr<DescriptorMatcher> matcher = DescriptorMatcher::create ( "BruteForce-Hamming" ); 85 //-- 第一步:检测 Oriented FAST 角点位置 86 detector->detect ( img_1,keypoints_1 ); 87 detector->detect ( img_2,keypoints_2 ); 88 89 //-- 第二步:根据角点位置计算 BRIEF 描述子 90 descriptor->compute ( img_1, keypoints_1, descriptors_1 ); 91 descriptor->compute ( img_2, keypoints_2, descriptors_2 ); 92 93 //-- 第三步:对两幅图像中的BRIEF描述子进行匹配,使用 Hamming 距离 94 vector<DMatch> match; 95 //BFMatcher matcher ( NORM_HAMMING ); 96 matcher->match ( descriptors_1, descriptors_2, match ); 97 98 //-- 第四步:匹配点对筛选 99 double min_dist=10000, max_dist=0; 100 101 //找出所有匹配之间的最小距离和最大距离, 即是最相似的和最不相似的两组点之间的距离 102 for ( int i = 0; i < descriptors_1.rows; i++ ) 103 { 104 double dist = match[i].distance; 105 if ( dist < min_dist ) min_dist = dist; 106 if ( dist > max_dist ) max_dist = dist; 107 } 108 109 printf ( "-- Max dist : %f \n", max_dist ); 110 printf ( "-- Min dist : %f \n", min_dist ); 111 112 //当描述子之间的距离大于两倍的最小距离时,即认为匹配有误.但有时候最小距离会非常小,设置一个经验值30作为下限. 113 for ( int i = 0; i < descriptors_1.rows; i++ ) 114 { 115 if ( match[i].distance <= max ( 2*min_dist, 30.0 ) ) 116 { 117 matches.push_back ( match[i] ); 118 } 119 } 120 } 121 122 123 Point2d pixel2cam ( const Point2d& p, const Mat& K ) 124 { 125 return Point2d 126 ( 127 ( p.x - K.at<double> ( 0,2 ) ) / K.at<double> ( 0,0 ), 128 ( p.y - K.at<double> ( 1,2 ) ) / K.at<double> ( 1,1 ) 129 ); 130 } 131 132 133 void pose_estimation_2d2d ( std::vector<KeyPoint> keypoints_1, 134 std::vector<KeyPoint> keypoints_2, 135 std::vector< DMatch > matches, 136 Mat& R, Mat& t ) 137 { 138 // 相机内参,TUM Freiburg2 139 Mat K = ( Mat_<double> ( 3,3 ) << 520.9, 0, 325.1, 0, 521.0, 249.7, 0, 0, 1 ); 140 141 //-- 把匹配点转换为vector<Point2f>的形式 142 vector<Point2f> points1; 143 vector<Point2f> points2; 144 145 for ( int i = 0; i < ( int ) matches.size(); i++ ) 146 { 147 points1.push_back ( keypoints_1[matches[i].queryIdx].pt ); 148 points2.push_back ( keypoints_2[matches[i].trainIdx].pt ); 149 } 150 151 //-- 计算基础矩阵 152 Mat fundamental_matrix; 153 fundamental_matrix = findFundamentalMat ( points1, points2, CV_FM_8POINT ); 154 cout<<"fundamental_matrix is "<<endl<< fundamental_matrix<<endl; 155 156 //-- 计算本质矩阵 157 Point2d principal_point ( 325.1, 249.7 ); //相机光心, TUM dataset标定值 158 double focal_length = 521; //相机焦距, TUM dataset标定值 159 Mat essential_matrix; 160 essential_matrix = findEssentialMat ( points1, points2, focal_length, principal_point ); 161 cout<<"essential_matrix is "<<endl<< essential_matrix<<endl; 162 163 //-- 计算单应矩阵 164 Mat homography_matrix; 165 homography_matrix = findHomography ( points1, points2, RANSAC, 3 ); 166 cout<<"homography_matrix is "<<endl<<homography_matrix<<endl; 167 168 //-- 从本质矩阵中恢复旋转和平移信息. 169 recoverPose ( essential_matrix, points1, points2, R, t, focal_length, principal_point ); 170 cout<<"R is "<<endl<<R<<endl; 171 cout<<"t is "<<endl<<t<<endl; 172 173 }

3,3D-2D PnP

在双目或者Rgbd,从3D到2D位姿的估计,已知3D点的空间位置,和相机上的投影点,求相机的旋转和平移(外参)

代数的解法:

1,DLT:直接线性变换

非代数的解法:非线性优化的方式

1 #include <iostream> 2 #include <opencv2/core/core.hpp> 3 #include <opencv2/features2d/features2d.hpp> 4 #include <opencv2/highgui/highgui.hpp> 5 #include <opencv2/calib3d/calib3d.hpp> 6 #include <Eigen/Core> 7 #include <Eigen/Geometry> 8 #include <g2o/core/base_vertex.h> 9 #include <g2o/core/base_unary_edge.h> 10 #include <g2o/core/block_solver.h> 11 #include <g2o/core/optimization_algorithm_levenberg.h> 12 #include <g2o/solvers/csparse/linear_solver_csparse.h> 13 #include <g2o/types/sba/types_six_dof_expmap.h> 14 #include <chrono> 15 16 using namespace std; 17 using namespace cv; 18 19 void find_feature_matches ( 20 const Mat& img_1, const Mat& img_2, 21 std::vector<KeyPoint>& keypoints_1, 22 std::vector<KeyPoint>& keypoints_2, 23 std::vector< DMatch >& matches ); 24 25 // 像素坐标转相机归一化坐标 26 Point2d pixel2cam ( const Point2d& p, const Mat& K ); 27 28 void bundleAdjustment ( 29 const vector<Point3f> points_3d, 30 const vector<Point2f> points_2d, 31 const Mat& K, 32 Mat& R, Mat& t 33 ); 34 35 int main ( int argc, char** argv ) 36 { 37 if ( argc != 5 ) 38 { 39 cout<<"usage: pose_estimation_3d2d img1 img2 depth1 depth2"<<endl; 40 return 1; 41 } 42 //-- 读取图像 43 Mat img_1 = imread ( argv[1], CV_LOAD_IMAGE_COLOR ); 44 Mat img_2 = imread ( argv[2], CV_LOAD_IMAGE_COLOR ); 45 46 vector<KeyPoint> keypoints_1, keypoints_2; 47 vector<DMatch> matches; 48 find_feature_matches ( img_1, img_2, keypoints_1, keypoints_2, matches ); 49 cout<<"一共找到了"<<matches.size() <<"组匹配点"<<endl; 50 51 // 建立3D点 52 Mat d1 = imread ( argv[3], CV_LOAD_IMAGE_UNCHANGED ); // 深度图为16位无符号数,单通道图像 53 Mat K = ( Mat_<double> ( 3,3 ) << 520.9, 0, 325.1, 0, 521.0, 249.7, 0, 0, 1 ); 54 vector<Point3f> pts_3d; 55 vector<Point2f> pts_2d; 56 for ( DMatch m:matches ) 57 { 58 ushort d = d1.ptr<unsigned short> (int ( keypoints_1[m.queryIdx].pt.y )) [ int ( keypoints_1[m.queryIdx].pt.x ) ]; 59 if ( d == 0 ) // bad depth 60 continue; 61 float dd = d/5000.0; 62 Point2d p1 = pixel2cam ( keypoints_1[m.queryIdx].pt, K ); 63 pts_3d.push_back ( Point3f ( p1.x*dd, p1.y*dd, dd ) ); 64 pts_2d.push_back ( keypoints_2[m.trainIdx].pt ); 65 } 66 67 cout<<"3d-2d pairs: "<<pts_3d.size() <<endl; 68 69 Mat r, t; 70 solvePnP ( pts_3d, pts_2d, K, Mat(), r, t, false ); // 调用OpenCV 的 PnP 求解,可选择EPNP,DLS等方法 71 Mat R; 72 cv::Rodrigues ( r, R ); // r为旋转向量形式,用Rodrigues公式转换为矩阵 73 74 cout<<"R="<<endl<<R<<endl; 75 cout<<"t="<<endl<<t<<endl; 76 77 cout<<"calling bundle adjustment"<<endl; 78 79 bundleAdjustment ( pts_3d, pts_2d, K, R, t ); 80 } 81 82 void find_feature_matches ( const Mat& img_1, const Mat& img_2, 83 std::vector<KeyPoint>& keypoints_1, 84 std::vector<KeyPoint>& keypoints_2, 85 std::vector< DMatch >& matches ) 86 { 87 //-- 初始化 88 Mat descriptors_1, descriptors_2; 89 // used in OpenCV3 90 Ptr<FeatureDetector> detector = ORB::create(); 91 Ptr<DescriptorExtractor> descriptor = ORB::create(); 92 // use this if you are in OpenCV2 93 // Ptr<FeatureDetector> detector = FeatureDetector::create ( "ORB" ); 94 // Ptr<DescriptorExtractor> descriptor = DescriptorExtractor::create ( "ORB" ); 95 Ptr<DescriptorMatcher> matcher = DescriptorMatcher::create ( "BruteForce-Hamming" ); 96 //-- 第一步:检测 Oriented FAST 角点位置 97 detector->detect ( img_1,keypoints_1 ); 98 detector->detect ( img_2,keypoints_2 ); 99 100 //-- 第二步:根据角点位置计算 BRIEF 描述子 101 descriptor->compute ( img_1, keypoints_1, descriptors_1 ); 102 descriptor->compute ( img_2, keypoints_2, descriptors_2 ); 103 104 //-- 第三步:对两幅图像中的BRIEF描述子进行匹配,使用 Hamming 距离 105 vector<DMatch> match; 106 // BFMatcher matcher ( NORM_HAMMING ); 107 matcher->match ( descriptors_1, descriptors_2, match ); 108 109 //-- 第四步:匹配点对筛选 110 double min_dist=10000, max_dist=0; 111 112 //找出所有匹配之间的最小距离和最大距离, 即是最相似的和最不相似的两组点之间的距离 113 for ( int i = 0; i < descriptors_1.rows; i++ ) 114 { 115 double dist = match[i].distance; 116 if ( dist < min_dist ) min_dist = dist; 117 if ( dist > max_dist ) max_dist = dist; 118 } 119 120 printf ( "-- Max dist : %f \n", max_dist ); 121 printf ( "-- Min dist : %f \n", min_dist ); 122 123 //当描述子之间的距离大于两倍的最小距离时,即认为匹配有误.但有时候最小距离会非常小,设置一个经验值30作为下限. 124 for ( int i = 0; i < descriptors_1.rows; i++ ) 125 { 126 if ( match[i].distance <= max ( 2*min_dist, 30.0 ) ) 127 { 128 matches.push_back ( match[i] ); 129 } 130 } 131 } 132 133 Point2d pixel2cam ( const Point2d& p, const Mat& K ) 134 { 135 return Point2d 136 ( 137 ( p.x - K.at<double> ( 0,2 ) ) / K.at<double> ( 0,0 ), 138 ( p.y - K.at<double> ( 1,2 ) ) / K.at<double> ( 1,1 ) 139 ); 140 } 141 142 void bundleAdjustment ( 143 const vector< Point3f > points_3d, 144 const vector< Point2f > points_2d, 145 const Mat& K, 146 Mat& R, Mat& t ) 147 { 148 // 初始化g2o 149 typedef g2o::BlockSolver< g2o::BlockSolverTraits<6,3> > Block; // pose 维度为 6, landmark 维度为 3 150 Block::LinearSolverType* linearSolver = new g2o::LinearSolverCSparse<Block::PoseMatrixType>(); // 线性方程求解器 151 Block* solver_ptr = new Block ( linearSolver ); // 矩阵块求解器 152 g2o::OptimizationAlgorithmLevenberg* solver = new g2o::OptimizationAlgorithmLevenberg ( solver_ptr ); 153 g2o::SparseOptimizer optimizer; 154 optimizer.setAlgorithm ( solver ); 155 156 // vertex 157 g2o::VertexSE3Expmap* pose = new g2o::VertexSE3Expmap(); // camera pose 158 Eigen::Matrix3d R_mat; 159 R_mat << 160 R.at<double> ( 0,0 ), R.at<double> ( 0,1 ), R.at<double> ( 0,2 ), 161 R.at<double> ( 1,0 ), R.at<double> ( 1,1 ), R.at<double> ( 1,2 ), 162 R.at<double> ( 2,0 ), R.at<double> ( 2,1 ), R.at<double> ( 2,2 ); 163 pose->setId ( 0 ); 164 pose->setEstimate ( g2o::SE3Quat ( 165 R_mat, 166 Eigen::Vector3d ( t.at<double> ( 0,0 ), t.at<double> ( 1,0 ), t.at<double> ( 2,0 ) ) 167 ) ); 168 optimizer.addVertex ( pose ); 169 170 int index = 1; 171 for ( const Point3f p:points_3d ) // landmarks 172 { 173 g2o::VertexSBAPointXYZ* point = new g2o::VertexSBAPointXYZ(); 174 point->setId ( index++ ); 175 point->setEstimate ( Eigen::Vector3d ( p.x, p.y, p.z ) ); 176 point->setMarginalized ( true ); // g2o 中必须设置 marg 参见第十讲内容 177 optimizer.addVertex ( point ); 178 } 179 180 // parameter: camera intrinsics 181 g2o::CameraParameters* camera = new g2o::CameraParameters ( 182 K.at<double> ( 0,0 ), Eigen::Vector2d ( K.at<double> ( 0,2 ), K.at<double> ( 1,2 ) ), 0 183 ); 184 camera->setId ( 0 ); 185 optimizer.addParameter ( camera ); 186 187 // edges 188 index = 1; 189 for ( const Point2f p:points_2d ) 190 { 191 g2o::EdgeProjectXYZ2UV* edge = new g2o::EdgeProjectXYZ2UV(); 192 edge->setId ( index ); 193 edge->setVertex ( 0, dynamic_cast<g2o::VertexSBAPointXYZ*> ( optimizer.vertex ( index ) ) ); 194 edge->setVertex ( 1, pose ); 195 edge->setMeasurement ( Eigen::Vector2d ( p.x, p.y ) ); 196 edge->setParameterId ( 0,0 ); 197 edge->setInformation ( Eigen::Matrix2d::Identity() ); 198 optimizer.addEdge ( edge ); 199 index++; 200 } 201 202 chrono::steady_clock::time_point t1 = chrono::steady_clock::now(); 203 optimizer.setVerbose ( true ); 204 optimizer.initializeOptimization(); 205 optimizer.optimize ( 100 ); 206 chrono::steady_clock::time_point t2 = chrono::steady_clock::now(); 207 chrono::duration<double> time_used = chrono::duration_cast<chrono::duration<double>> ( t2-t1 ); 208 cout<<"optimization costs time: "<<time_used.count() <<" seconds."<<endl; 209 210 cout<<endl<<"after optimization:"<<endl; 211 cout<<"T="<<endl<<Eigen::Isometry3d ( pose->estimate() ).matrix() <<endl; 212 }

4,3D-3D ICP

1 #include <iostream> 2 #include <opencv2/core/core.hpp> 3 #include <opencv2/features2d/features2d.hpp> 4 #include <opencv2/highgui/highgui.hpp> 5 #include <opencv2/calib3d/calib3d.hpp> 6 #include <Eigen/Core> 7 #include <Eigen/Geometry> 8 #include <Eigen/SVD> 9 #include <g2o/core/base_vertex.h> 10 #include <g2o/core/base_unary_edge.h> 11 #include <g2o/core/block_solver.h> 12 #include <g2o/core/optimization_algorithm_gauss_newton.h> 13 #include <g2o/solvers/eigen/linear_solver_eigen.h> 14 #include <g2o/types/sba/types_six_dof_expmap.h> 15 #include <chrono> 16 17 using namespace std; 18 using namespace cv; 19 20 void find_feature_matches ( 21 const Mat& img_1, const Mat& img_2, 22 std::vector<KeyPoint>& keypoints_1, 23 std::vector<KeyPoint>& keypoints_2, 24 std::vector< DMatch >& matches ); 25 26 // 像素坐标转相机归一化坐标 27 Point2d pixel2cam ( const Point2d& p, const Mat& K ); 28 29 void pose_estimation_3d3d ( 30 const vector<Point3f>& pts1, 31 const vector<Point3f>& pts2, 32 Mat& R, Mat& t 33 ); 34 35 void bundleAdjustment( 36 const vector<Point3f>& points_3d, 37 const vector<Point3f>& points_2d, 38 Mat& R, Mat& t 39 ); 40 41 // g2o edge 42 class EdgeProjectXYZRGBDPoseOnly : public g2o::BaseUnaryEdge<3, Eigen::Vector3d, g2o::VertexSE3Expmap> 43 { 44 public: 45 EIGEN_MAKE_ALIGNED_OPERATOR_NEW; 46 EdgeProjectXYZRGBDPoseOnly( const Eigen::Vector3d& point ) : _point(point) {} 47 48 virtual void computeError() 49 { 50 const g2o::VertexSE3Expmap* pose = static_cast<const g2o::VertexSE3Expmap*> ( _vertices[0] ); 51 // measurement is p, point is p' 52 _error = _measurement - pose->estimate().map( _point ); 53 } 54 55 virtual void linearizeOplus() 56 { 57 g2o::VertexSE3Expmap* pose = static_cast<g2o::VertexSE3Expmap *>(_vertices[0]); 58 g2o::SE3Quat T(pose->estimate()); 59 Eigen::Vector3d xyz_trans = T.map(_point); 60 double x = xyz_trans[0]; 61 double y = xyz_trans[1]; 62 double z = xyz_trans[2]; 63 64 _jacobianOplusXi(0,0) = 0; 65 _jacobianOplusXi(0,1) = -z; 66 _jacobianOplusXi(0,2) = y; 67 _jacobianOplusXi(0,3) = -1; 68 _jacobianOplusXi(0,4) = 0; 69 _jacobianOplusXi(0,5) = 0; 70 71 _jacobianOplusXi(1,0) = z; 72 _jacobianOplusXi(1,1) = 0; 73 _jacobianOplusXi(1,2) = -x; 74 _jacobianOplusXi(1,3) = 0; 75 _jacobianOplusXi(1,4) = -1; 76 _jacobianOplusXi(1,5) = 0; 77 78 _jacobianOplusXi(2,0) = -y; 79 _jacobianOplusXi(2,1) = x; 80 _jacobianOplusXi(2,2) = 0; 81 _jacobianOplusXi(2,3) = 0; 82 _jacobianOplusXi(2,4) = 0; 83 _jacobianOplusXi(2,5) = -1; 84 } 85 86 bool read ( istream& in ) {} 87 bool write ( ostream& out ) const {} 88 protected: 89 Eigen::Vector3d _point; 90 }; 91 92 int main ( int argc, char** argv ) 93 { 94 if ( argc != 5 ) 95 { 96 cout<<"usage: pose_estimation_3d3d img1 img2 depth1 depth2"<<endl; 97 return 1; 98 } 99 //-- 读取图像 100 Mat img_1 = imread ( argv[1], CV_LOAD_IMAGE_COLOR ); 101 Mat img_2 = imread ( argv[2], CV_LOAD_IMAGE_COLOR ); 102 103 vector<KeyPoint> keypoints_1, keypoints_2; 104 vector<DMatch> matches; 105 find_feature_matches ( img_1, img_2, keypoints_1, keypoints_2, matches ); 106 cout<<"一共找到了"<<matches.size() <<"组匹配点"<<endl; 107 108 // 建立3D点 109 Mat depth1 = imread ( argv[3], CV_LOAD_IMAGE_UNCHANGED ); // 深度图为16位无符号数,单通道图像 110 Mat depth2 = imread ( argv[4], CV_LOAD_IMAGE_UNCHANGED ); // 深度图为16位无符号数,单通道图像 111 Mat K = ( Mat_<double> ( 3,3 ) << 520.9, 0, 325.1, 0, 521.0, 249.7, 0, 0, 1 ); 112 vector<Point3f> pts1, pts2; 113 114 for ( DMatch m:matches ) 115 { 116 ushort d1 = depth1.ptr<unsigned short> ( int ( keypoints_1[m.queryIdx].pt.y ) ) [ int ( keypoints_1[m.queryIdx].pt.x ) ]; 117 ushort d2 = depth2.ptr<unsigned short> ( int ( keypoints_2[m.trainIdx].pt.y ) ) [ int ( keypoints_2[m.trainIdx].pt.x ) ]; 118 if ( d1==0 || d2==0 ) // bad depth 119 continue; 120 Point2d p1 = pixel2cam ( keypoints_1[m.queryIdx].pt, K ); 121 Point2d p2 = pixel2cam ( keypoints_2[m.trainIdx].pt, K ); 122 float dd1 = float ( d1 ) /5000.0; 123 float dd2 = float ( d2 ) /5000.0; 124 pts1.push_back ( Point3f ( p1.x*dd1, p1.y*dd1, dd1 ) ); 125 pts2.push_back ( Point3f ( p2.x*dd2, p2.y*dd2, dd2 ) ); 126 } 127 128 cout<<"3d-3d pairs: "<<pts1.size() <<endl; 129 Mat R, t; 130 pose_estimation_3d3d ( pts1, pts2, R, t ); 131 cout<<"ICP via SVD results: "<<endl; 132 cout<<"R = "<<R<<endl; 133 cout<<"t = "<<t<<endl; 134 cout<<"R_inv = "<<R.t() <<endl; 135 cout<<"t_inv = "<<-R.t() *t<<endl; 136 137 cout<<"calling bundle adjustment"<<endl; 138 139 bundleAdjustment( pts1, pts2, R, t ); 140 141 // verify p1 = R*p2 + t 142 for ( int i=0; i<5; i++ ) 143 { 144 cout<<"p1 = "<<pts1[i]<<endl; 145 cout<<"p2 = "<<pts2[i]<<endl; 146 cout<<"(R*p2+t) = "<< 147 R * (Mat_<double>(3,1)<<pts2[i].x, pts2[i].y, pts2[i].z) + t 148 <<endl; 149 cout<<endl; 150 } 151 } 152 153 void find_feature_matches ( const Mat& img_1, const Mat& img_2, 154 std::vector<KeyPoint>& keypoints_1, 155 std::vector<KeyPoint>& keypoints_2, 156 std::vector< DMatch >& matches ) 157 { 158 //-- 初始化 159 Mat descriptors_1, descriptors_2; 160 // used in OpenCV3 161 Ptr<FeatureDetector> detector = ORB::create(); 162 Ptr<DescriptorExtractor> descriptor = ORB::create(); 163 // use this if you are in OpenCV2 164 // Ptr<FeatureDetector> detector = FeatureDetector::create ( "ORB" ); 165 // Ptr<DescriptorExtractor> descriptor = DescriptorExtractor::create ( "ORB" ); 166 Ptr<DescriptorMatcher> matcher = DescriptorMatcher::create("BruteForce-Hamming"); 167 //-- 第一步:检测 Oriented FAST 角点位置 168 detector->detect ( img_1,keypoints_1 ); 169 detector->detect ( img_2,keypoints_2 ); 170 171 //-- 第二步:根据角点位置计算 BRIEF 描述子 172 descriptor->compute ( img_1, keypoints_1, descriptors_1 ); 173 descriptor->compute ( img_2, keypoints_2, descriptors_2 ); 174 175 //-- 第三步:对两幅图像中的BRIEF描述子进行匹配,使用 Hamming 距离 176 vector<DMatch> match; 177 // BFMatcher matcher ( NORM_HAMMING ); 178 matcher->match ( descriptors_1, descriptors_2, match ); 179 180 //-- 第四步:匹配点对筛选 181 double min_dist=10000, max_dist=0; 182 183 //找出所有匹配之间的最小距离和最大距离, 即是最相似的和最不相似的两组点之间的距离 184 for ( int i = 0; i < descriptors_1.rows; i++ ) 185 { 186 double dist = match[i].distance; 187 if ( dist < min_dist ) min_dist = dist; 188 if ( dist > max_dist ) max_dist = dist; 189 } 190 191 printf ( "-- Max dist : %f \n", max_dist ); 192 printf ( "-- Min dist : %f \n", min_dist ); 193 194 //当描述子之间的距离大于两倍的最小距离时,即认为匹配有误.但有时候最小距离会非常小,设置一个经验值30作为下限. 195 for ( int i = 0; i < descriptors_1.rows; i++ ) 196 { 197 if ( match[i].distance <= max ( 2*min_dist, 30.0 ) ) 198 { 199 matches.push_back ( match[i] ); 200 } 201 } 202 } 203 204 Point2d pixel2cam ( const Point2d& p, const Mat& K ) 205 { 206 return Point2d 207 ( 208 ( p.x - K.at<double> ( 0,2 ) ) / K.at<double> ( 0,0 ), 209 ( p.y - K.at<double> ( 1,2 ) ) / K.at<double> ( 1,1 ) 210 ); 211 } 212 213 void pose_estimation_3d3d ( 214 const vector<Point3f>& pts1, 215 const vector<Point3f>& pts2, 216 Mat& R, Mat& t 217 ) 218 { 219 Point3f p1, p2; // center of mass 220 int N = pts1.size(); 221 for ( int i=0; i<N; i++ ) 222 { 223 p1 += pts1[i]; 224 p2 += pts2[i]; 225 } 226 p1 = Point3f( Vec3f(p1) / N); 227 p2 = Point3f( Vec3f(p2) / N); 228 vector<Point3f> q1 ( N ), q2 ( N ); // remove the center 229 for ( int i=0; i<N; i++ ) 230 { 231 q1[i] = pts1[i] - p1; 232 q2[i] = pts2[i] - p2; 233 } 234 235 // compute q1*q2^T 236 Eigen::Matrix3d W = Eigen::Matrix3d::Zero(); 237 for ( int i=0; i<N; i++ ) 238 { 239 W += Eigen::Vector3d ( q1[i].x, q1[i].y, q1[i].z ) * Eigen::Vector3d ( q2[i].x, q2[i].y, q2[i].z ).transpose(); 240 } 241 cout<<"W="<<W<<endl; 242 243 // SVD on W 244 Eigen::JacobiSVD<Eigen::Matrix3d> svd ( W, Eigen::ComputeFullU|Eigen::ComputeFullV ); 245 Eigen::Matrix3d U = svd.matrixU(); 246 Eigen::Matrix3d V = svd.matrixV(); 247 cout<<"U="<<U<<endl; 248 cout<<"V="<<V<<endl; 249 250 Eigen::Matrix3d R_ = U* ( V.transpose() ); 251 Eigen::Vector3d t_ = Eigen::Vector3d ( p1.x, p1.y, p1.z ) - R_ * Eigen::Vector3d ( p2.x, p2.y, p2.z ); 252 253 // convert to cv::Mat 254 R = ( Mat_<double> ( 3,3 ) << 255 R_ ( 0,0 ), R_ ( 0,1 ), R_ ( 0,2 ), 256 R_ ( 1,0 ), R_ ( 1,1 ), R_ ( 1,2 ), 257 R_ ( 2,0 ), R_ ( 2,1 ), R_ ( 2,2 ) 258 ); 259 t = ( Mat_<double> ( 3,1 ) << t_ ( 0,0 ), t_ ( 1,0 ), t_ ( 2,0 ) ); 260 } 261 262 void bundleAdjustment ( 263 const vector< Point3f >& pts1, 264 const vector< Point3f >& pts2, 265 Mat& R, Mat& t ) 266 { 267 // 初始化g2o 268 typedef g2o::BlockSolver< g2o::BlockSolverTraits<6,3> > Block; // pose维度为 6, landmark 维度为 3 269 Block::LinearSolverType* linearSolver = new g2o::LinearSolverEigen<Block::PoseMatrixType>(); // 线性方程求解器 270 271 Block* solver_ptr = new Block( linearSolver ); // 矩阵块求解器 272 273 g2o::OptimizationAlgorithmGaussNewton* solver = new g2o::OptimizationAlgorithmGaussNewton( solver_ptr ); 274 275 g2o::SparseOptimizer optimizer; 276 optimizer.setAlgorithm( solver ); 277 278 // vertex 279 g2o::VertexSE3Expmap* pose = new g2o::VertexSE3Expmap(); // camera pose 280 pose->setId(0); 281 pose->setEstimate( g2o::SE3Quat( 282 Eigen::Matrix3d::Identity(), 283 Eigen::Vector3d( 0,0,0 ) 284 ) ); 285 optimizer.addVertex( pose ); 286 287 // edges 288 int index = 1; 289 vector<EdgeProjectXYZRGBDPoseOnly*> edges; 290 for ( size_t i=0; i<pts1.size(); i++ ) 291 { 292 EdgeProjectXYZRGBDPoseOnly* edge = new EdgeProjectXYZRGBDPoseOnly( 293 Eigen::Vector3d(pts2[i].x, pts2[i].y, pts2[i].z) ); 294 edge->setId( index ); 295 edge->setVertex( 0, dynamic_cast<g2o::VertexSE3Expmap*> (pose) ); 296 edge->setMeasurement( Eigen::Vector3d( 297 pts1[i].x, pts1[i].y, pts1[i].z) ); 298 edge->setInformation( Eigen::Matrix3d::Identity()*1e4 ); 299 optimizer.addEdge(edge); 300 index++; 301 edges.push_back(edge); 302 } 303 304 chrono::steady_clock::time_point t1 = chrono::steady_clock::now(); 305 optimizer.setVerbose( true ); 306 optimizer.initializeOptimization(); 307 optimizer.optimize(10); 308 chrono::steady_clock::time_point t2 = chrono::steady_clock::now(); 309 chrono::duration<double> time_used = chrono::duration_cast<chrono::duration<double>>(t2-t1); 310 cout<<"optimization costs time: "<<time_used.count()<<" seconds."<<endl; 311 312 cout<<endl<<"after optimization:"<<endl; 313 cout<<"T="<<endl<<Eigen::Isometry3d( pose->estimate() ).matrix()<<endl; 314 315 }

5,三角化与深度估计

第八讲:直接法视觉里程计

1,光流法(不需要特征点)

估计的是像素点

2,直接法 ,光流法稍加修改。

估计的是相机是怎么运动的

第九讲:实践

第十讲,第十一讲:后端优化

第十三讲:回环检测

第十四讲:展望