A simple network: Sequential model

import numpy as np

import tensorflow as tf

import matplotlib.pyplot as plt

# X-Data

N = 200

X = np.random.random(N)

print(X)

[0.87043833 0.34352561 0.81362902 0.43800459 0.69327997 0.6323433

0.45008738 0.11416858 0.97250895 0.79673929 0.11541241 0.86617814

0.27171221 0.9816876 0.16753845 0.98913371 0.8581325 0.0757954

0.86031813 0.59289839 0.29749813 0.20563715 0.9997178 0.59003228

0.35757194 0.80381234 0.242631 0.11467421 0.34143111 0.27654834

0.20942507 0.23010397 0.33766935 0.95545649 0.65183404 0.99802424

0.92849039 0.57854204 0.83632395 0.7312833 0.53311664 0.92955895

0.4696443 0.30995492 0.15573472 0.30820987 0.74374888 0.20746479

0.63707138 0.25891428 0.89856103 0.77827489 0.62232385 0.66847401

0.16673846 0.85111978 0.54047361 0.06522549 0.85827134 0.89141919

0.58324072 0.25189043 0.54356471 0.98293161 0.86076638 0.2782616

0.40062945 0.73617715 0.27848053 0.19417744 0.26946711 0.56730615

0.21616535 0.50243466 0.28316786 0.31296928 0.89592931 0.92521469

0.00288017 0.39206302 0.93884799 0.4215962 0.22473894 0.54789537

0.69687102 0.33094136 0.22288206 0.73659617 0.38045493 0.9333922

0.38773277 0.55031938 0.01651959 0.26053489 0.90197014 0.94971847

0.23267036 0.46570646 0.43948164 0.41231935 0.95609519 0.08347694

0.60496824 0.10225825 0.01716315 0.78068431 0.87508406 0.58938599

0.28905644 0.61419796 0.62117595 0.10484637 0.38090871 0.38990318

0.53892778 0.92143317 0.26954451 0.24797848 0.18022549 0.03566011

0.63383763 0.23730579 0.51140095 0.40433532 0.83947813 0.01262781

0.98735757 0.23460468 0.27307607 0.48511314 0.6239909 0.59096001

0.63845825 0.77437862 0.36975273 0.37521626 0.25038518 0.55399587

0.69018968 0.11738883 0.11489286 0.56623511 0.13860632 0.03696333

0.70701076 0.31451895 0.05047509 0.4000243 0.18951662 0.54795957

0.32405597 0.58581542 0.19052749 0.97184877 0.08121297 0.5277435

0.95102183 0.70232852 0.92241819 0.5559153 0.78083361 0.40355639

0.98407045 0.72578303 0.87454461 0.05761464 0.30351022 0.7906215

0.83869084 0.09198238 0.17471775 0.40895357 0.82516278 0.79565455

0.01031771 0.80233015 0.62315751 0.8618836 0.92953252 0.25230357

0.70523593 0.18452694 0.8373015 0.13110077 0.86873592 0.59722102

0.26999434 0.3517313 0.18223504 0.09235062 0.58780589 0.42629025

0.31286228 0.64693046 0.47166465 0.49182858 0.45446215 0.91291826

0.06898951 0.53389829]

# Generate Y-data: y = ±sqrt(x), with the sign chosen at random per sample

sign = (- np.ones((N,)))**np.random.randint(2,size=N)

Y = np.sqrt(X) * sign

print(sign)

print(Y)

[-1. 1. -1. -1. 1. 1. 1. 1. -1. 1. -1. -1. -1. -1. -1. 1. -1. 1.

-1. 1. 1. 1. -1. 1. 1. 1. -1. -1. -1. 1. -1. 1. -1. -1. -1. 1.

1. 1. 1. 1. -1. -1. 1. -1. 1. -1. -1. -1. -1. 1. -1. -1. 1. -1.

1. 1. 1. -1. -1. -1. -1. 1. -1. 1. -1. -1. -1. 1. 1. 1. -1. -1.

1. -1. -1. -1. 1. 1. 1. 1. 1. -1. 1. 1. 1. 1. -1. 1. -1. 1.

1. 1. -1. -1. 1. 1. -1. 1. 1. -1. -1. -1. -1. 1. 1. -1. -1. 1.

1. -1. 1. 1. -1. 1. 1. 1. -1. -1. -1. 1. 1. 1. -1. 1. -1. 1.

-1. -1. -1. -1. -1. 1. 1. -1. -1. 1. -1. 1. -1. 1. -1. 1. -1. 1.

-1. 1. 1. -1. -1. 1. 1. 1. -1. -1. -1. 1. -1. 1. -1. 1. -1. 1.

1. 1. 1. -1. -1. 1. 1. -1. -1. 1. 1. 1. 1. -1. -1. 1. 1. -1.

-1. 1. 1. -1. 1. 1. 1. 1. -1. 1. -1. -1. 1. 1. -1. -1. -1. -1.

1. 1.]

[-0.93297284 0.58611058 -0.90201387 -0.66181915 0.83263436 0.79520016

0.67088552 0.33788841 -0.98615868 0.89260254 -0.33972403 -0.93068692

-0.52126021 -0.99080149 -0.4093146 0.99455202 -0.92635441 0.27530964

-0.92753336 0.76999895 0.54543389 0.45347233 -0.99985889 0.76813558

0.59797319 0.89655582 -0.49257589 -0.33863581 -0.58432107 0.52587864

-0.45762984 0.47969154 -0.58109324 -0.97747455 -0.8073624 0.99901163

0.96358206 0.76061951 0.91450749 0.85515104 -0.73014837 -0.96413638

0.68530599 -0.55673595 0.39463239 -0.55516653 -0.86240876 -0.45548303

-0.79816751 0.5088362 -0.94792459 -0.88219889 0.78887505 -0.8176026

0.40833621 0.92256153 0.7351691 -0.25539282 -0.92642935 -0.94414998

-0.76370198 0.50188687 -0.73726841 0.99142908 -0.92777496 -0.52750507

-0.63295296 0.85800766 0.52771255 0.4406557 -0.51910222 -0.75319729

0.46493585 -0.70882626 -0.53213519 -0.55943657 0.94653542 0.96188081

0.05366721 0.62614936 0.96894169 -0.6493044 0.47406639 0.74019955

0.83478801 0.57527503 -0.47210387 0.85825181 -0.61681029 0.96612225

0.62268192 0.74183515 -0.12852857 -0.51042619 0.94972109 0.974535

-0.48235917 0.68242689 0.66293411 -0.64212097 -0.9778012 -0.28892376

-0.77779704 0.31977844 0.13100821 -0.88356342 -0.93545928 0.76771478

0.53763969 -0.78370783 0.78814716 0.32379989 -0.61717802 0.62442227

0.73411701 0.95991311 -0.51917676 -0.49797438 -0.42452973 0.18883884

0.7961392 0.48714042 -0.71512303 0.63587367 -0.91623039 0.11237352

-0.99365868 -0.48436007 -0.52256681 -0.69650064 -0.78993095 0.76873923

0.79903583 -0.87998785 -0.60807297 0.61254899 -0.50038504 0.74430899

-0.83077655 0.34262053 -0.3389585 0.75248595 -0.3722987 0.1922585

-0.84083932 0.56081989 0.22466663 -0.63247474 -0.43533507 0.74024291

0.56925914 0.76538579 -0.43649455 -0.98582391 -0.28497889 0.72645956

-0.97520348 0.83805043 -0.96042605 0.74559728 -0.8836479 0.63526089

0.99200325 0.851929 0.9351709 -0.24003049 -0.55091762 0.889169

0.91580065 -0.30328597 -0.41799252 0.63949478 0.90838471 0.8919947

0.10157615 -0.89572883 -0.78940326 0.92837686 0.96412267 -0.50229829

-0.83978326 0.42956599 0.9150418 -0.3620784 0.93206004 0.77280076

0.5196098 0.59306939 -0.42688997 0.30389244 -0.766685 -0.65290906

0.55934093 0.80431987 -0.68677846 -0.70130491 -0.67413808 -0.95546756

0.26265854 0.73068344]
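The sign trick above raises −1 to a random power of 0 or 1, so each entry of `sign` is +1 or −1 with equal probability, and every generated point satisfies y² = x. The original code sets no random seed, so the printed arrays change from run to run. Below is a minimal NumPy check of that property. It assumes a fixed seed and NumPy's `default_rng` generator, neither of which appears in the original:

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed for reproducibility (not in the original)
N = 200

X = rng.random(N)
# (-1)**0 = 1 and (-1)**1 = -1, so each sign is +1 or -1
sign = (-np.ones((N,))) ** rng.integers(2, size=N)
Y = np.sqrt(X) * sign

# Every sample lies on one of the two branches y = +sqrt(x) or y = -sqrt(x)
assert set(np.unique(sign)) <= {-1.0, 1.0}
assert np.allclose(Y ** 2, X)
print("fraction on the negative branch:", np.mean(sign < 0))
```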

# Neural network

act = tf.keras.layers.ReLU()

nn_sv = tf.keras.models.Sequential([
    tf.keras.layers.Dense(10, activation=act, input_shape=(1,)),
    tf.keras.layers.Dense(10, activation=act),
    tf.keras.layers.Dense(1, activation='linear')])
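The following is a minimal NumPy sketch of the forward pass this `Sequential` stack computes: two hidden layers of 10 ReLU units, then a linear output. The weights are hypothetical random placeholders, not Keras's actual initializer, so the outputs carry no meaning. The point is the shapes and the parameter count, (1·10 + 10) + (10·10 + 10) + (10·1 + 1) = 141. That count should match the total reported by `nn_sv.summary()`.

```python
import numpy as np

rng = np.random.default_rng(0)

def relu(z):
    return np.maximum(z, 0.0)

# Hypothetical random weights with the same shapes as the Keras layers:
# Dense(10, input_shape=(1,)) -> Dense(10) -> Dense(1)
layer_shapes = [(1, 10), (10, 10), (10, 1)]
params = [(rng.normal(scale=0.5, size=s), np.zeros(s[1])) for s in layer_shapes]

def forward(x):
    """x has shape (batch,); returns predictions of shape (batch, 1)."""
    h = x.reshape(-1, 1)
    for i, (W, b) in enumerate(params):
        h = h @ W + b
        if i < len(params) - 1:  # ReLU on hidden layers, linear output layer
            h = relu(h)
    return h

n_params = sum(W.size + b.size for W, b in params)
print("trainable parameters:", n_params)  # 20 + 110 + 11 = 141

out = forward(rng.random(200))
print(out.shape)  # (200, 1)
```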

# Loss function

loss_sv = tf.keras.losses.MeanSquaredError()

optimizer_sv = tf.keras.optimizers.Adam(learning_rate=0.001)

nn_sv.compile(optimizer=optimizer_sv, loss=loss_sv)

# Training

results_sv = nn_sv.fit(X, Y, epochs=5, batch_size=5, verbose=1)  # 200 samples / 5 per batch = 40 steps per epoch

Epoch 1/5

40/40 [==============================] - 0s 773us/step - loss: 0.5016

Epoch 2/5

40/40 [==============================] - 0s 2ms/step - loss: 0.4989

Epoch 3/5

40/40 [==============================] - 0s 2ms/step - loss: 0.4988

Epoch 4/5

40/40 [==============================] - 0s 2ms/step - loss: 0.4983

Epoch 5/5

40/40 [==============================] - 0s 2ms/step - loss: 0.4987
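The supervised loss stalls at about 0.5. One plausible explanation is that for each x the data contain +√x and −√x with equal probability. Under MSE, the best single prediction for such a pair is their mean, 0. Predicting 0 everywhere costs mean(y²) = mean(x), which is about 0.5 for x uniform on [0, 1). That is consistent with the logged value of roughly 0.499. Always committing to one branch (e.g. +√x) costs about twice as much. The sketch below compares the two constant strategies on freshly generated data. The fixed seed is an assumption, and no network is involved:

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed (not in the original)
N = 200
X = rng.random(N)
sign = (-np.ones(N)) ** rng.integers(2, size=N)
Y = np.sqrt(X) * sign

def mse(y_true, y_pred):
    return np.mean((y_true - y_pred) ** 2)

loss_zero = mse(Y, np.zeros(N))   # average of the two branches
loss_branch = mse(Y, np.sqrt(X))  # always pick the +sqrt(x) branch

print(f"predict 0:        {loss_zero:.4f}")    # equals mean(X), expected near 0.5
print(f"predict +sqrt(x): {loss_branch:.4f}")  # expected near 1.0
```

MSE rewards hedging between the two branches. As a result, a network trained this way drifts toward the flat, physically wrong answer y ≈ 0 instead of either valid solution.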

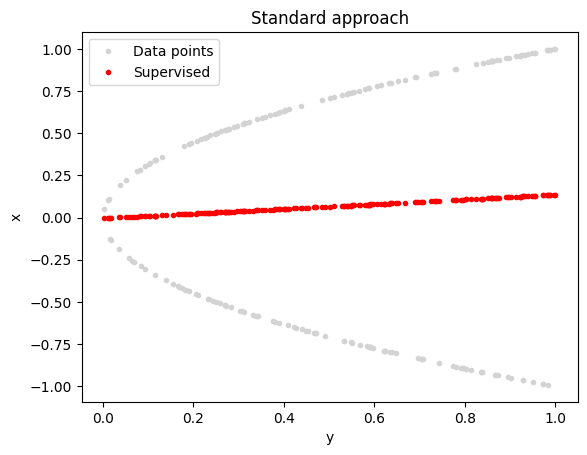

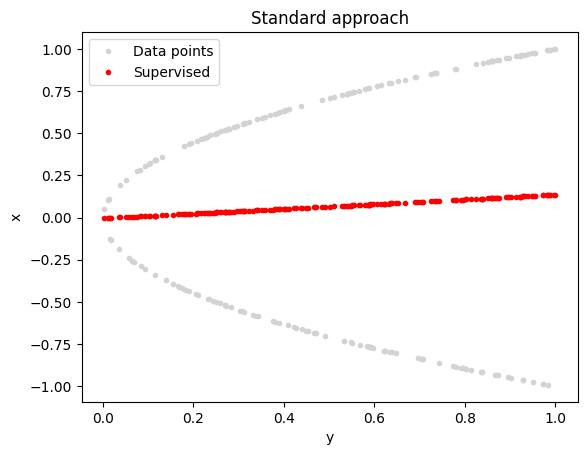

# Results

plt.plot(X,Y,'.',label='Data points', color="lightgray")

plt.plot(X,nn_sv.predict(X),'.',label='Supervised', color="red")

plt.xlabel('x')

plt.ylabel('y')

plt.title('Standard approach')

plt.legend()

plt.show()

# Improved approach (differentiable physics)

# X-Data

# X = X: the X from above can be reused directly, nothing has changed

# Y is not precomputed: the loss checks y_pred**2 against x on the fly

# Model

nn_dp = tf.keras.models.Sequential([
    tf.keras.layers.Dense(10, activation=act, input_shape=(1,)),
    tf.keras.layers.Dense(10, activation=act),
    tf.keras.layers.Dense(1, activation='linear')])

# Loss

mse = tf.keras.losses.MeanSquaredError()

def loss_dp(y_true, y_pred):
    # y_true holds x: penalize violations of the relation y**2 = x
    return mse(y_true, y_pred**2)

optimizer_dp = tf.keras.optimizers.Adam(learning_rate=0.001)

nn_dp.compile(optimizer=optimizer_dp, loss=loss_dp)
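`loss_dp` never looks at a target y. It squares the network output and compares the result with x, so it measures how well the prediction satisfies y² = x. The NumPy function below is a hypothetical re-implementation of that expression, not the Keras code itself. It shows that both branches, and any per-point mix of them, score zero. The "average" answer 0 that MSE favors in the supervised setup does not:

```python
import numpy as np

def loss_dp_np(x, y_pred):
    # mean((x - y_pred**2)**2), the quantity loss_dp computes via Keras MSE
    return np.mean((x - y_pred ** 2) ** 2)

x = np.linspace(0.0, 1.0, 11)
plus = np.sqrt(x)
minus = -np.sqrt(x)
mixed = np.where(np.arange(x.size) % 2 == 0, plus, minus)  # branch chosen per point

print(loss_dp_np(x, plus))               # zero up to rounding
print(loss_dp_np(x, minus))              # zero up to rounding
print(loss_dp_np(x, mixed))              # zero up to rounding
print(loss_dp_np(x, np.zeros_like(x)))   # mean(x**2), about 0.35
```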

# Training

# The target is X itself: loss_dp compares y_pred**2 with x
results_dp = nn_dp.fit(X, X, epochs=5, batch_size=5, verbose=1)

Epoch 1/5

40/40 [==============================] - 0s 4ms/step - loss: 0.2881

Epoch 2/5

40/40 [==============================] - 0s 4ms/step - loss: 0.0988

Epoch 3/5

40/40 [==============================] - 0s 4ms/step - loss: 0.0027

Epoch 4/5

40/40 [==============================] - 0s 3ms/step - loss: 0.0012

Epoch 5/5

40/40 [==============================] - 0s 2ms/step - loss: 8.4034e-04

# Results

plt.plot(X,Y,'.',label='Data points', color="lightgray")

#plt.plot(X,nn_sv.predict(X),'.',label='Supervised', color="red") # optional for comparison

plt.plot(X,nn_dp.predict(X),'.',label='Diff. Phys.', color="green")

plt.xlabel('x')

plt.ylabel('y')

plt.title('Differentiable physics approach')

plt.legend()

plt.show()
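Why does the network settle on a valid branch instead of averaging? The gradient of the per-sample loss (y² − x)² with respect to the prediction is 4y(y² − x). It vanishes at both roots y = ±√x. It also vanishes at y = 0, but for x > 0 that point is a local maximum and therefore unstable. The sketch below is a simplified stand-in for the network: it runs plain gradient descent directly on free per-point values y, with no network weights. The learning rate, step count and starting guesses are arbitrary assumptions. Each value converges to the root on its own side of zero:

```python
import numpy as np

rng = np.random.default_rng(1)
x = np.linspace(0.1, 1.0, 10)
# Hypothetical starting guesses: random signs, magnitudes in [0.2, 1.0]
y = rng.uniform(0.2, 1.0, size=x.size) * rng.choice([-1.0, 1.0], size=x.size)
start_sign = np.sign(y)

lr = 0.05
for _ in range(2000):
    grad = 4.0 * y * (y ** 2 - x)  # d/dy of (y**2 - x)**2
    y = y - lr * grad

# Each value ends on the root y = sign(y0) * sqrt(x)
print(np.round(y, 4))
print(np.allclose(y, start_sign * np.sqrt(x)))
```

With this small step size the update factor 1 − 4·lr·(y² − x) stays positive, so no value crosses zero. Each point keeps its starting sign, and both roots are equally acceptable to the loss.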

References: https://colab.research.google.com/github/tum-pbs/pbdl-book/blob/main/intro-teaser.ipynb#scrollTo=star-radio

https://www.topcfd.cn/Ebook/PBDL/基于物理的深度学习/0_Welcome.html
