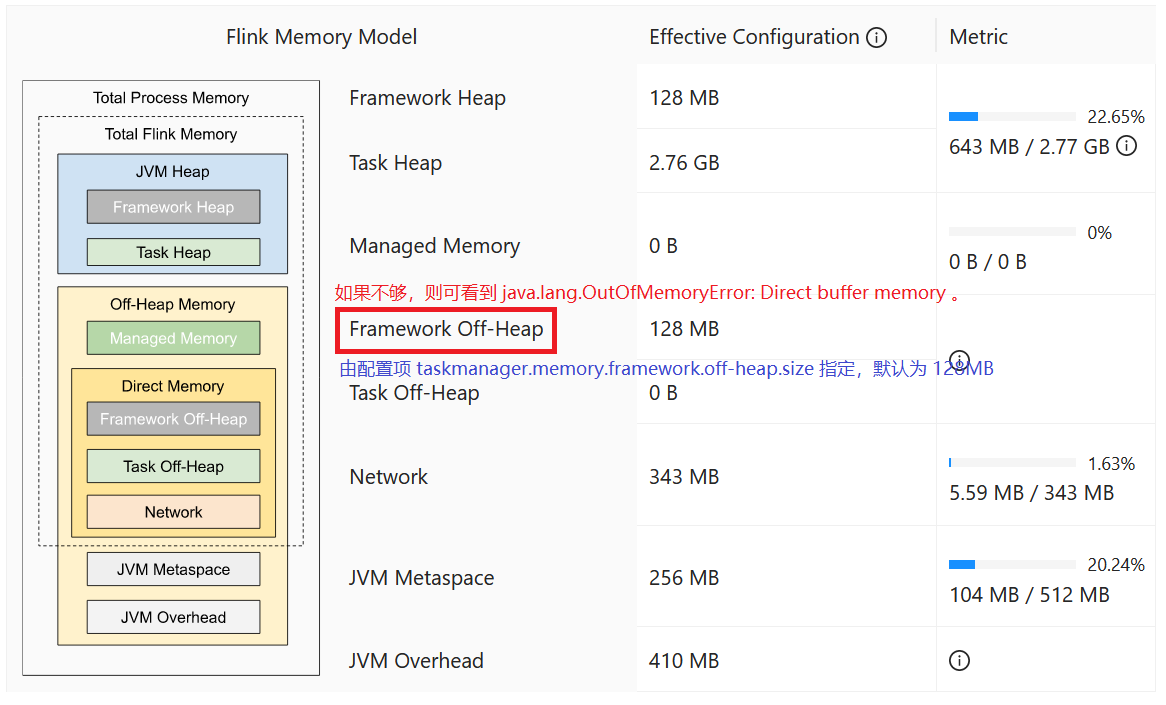

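If you run into the error below, increase the value of taskmanager.memory.framework.off-heap.size. Its default is 128MB, and the error shows that this is not enough. The explanation in the log's "Caused by" line also names taskmanager.memory.task.off-heap.size, which is the option to raise when the direct memory is allocated by user code or its dependencies rather than by the Flink framework.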
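Raising this option helps because Flink starts the TaskManager JVM with -XX:MaxDirectMemorySize set to the sum of framework off-heap memory, task off-heap memory and network memory. java.nio.Bits.reserveMemory throws "Direct buffer memory" when that ceiling is hit, so a larger framework off-heap size lifts it. The Java sketch below only does that arithmetic. The class and method names are hypothetical, 512 MB is an arbitrary example target, and the 256 MB network figure is a placeholder (the real value depends on the total TaskManager memory and taskmanager.memory.network.fraction). The actual change would be a line such as taskmanager.memory.framework.off-heap.size: 512m in flink-conf.yaml.

```java
// Sketch (hypothetical helper): the JVM direct memory limit Flink gives a TaskManager,
// computed as framework off-heap + task off-heap + network memory.
class DirectMemoryLimit {

    static final long MB = 1024L * 1024L;

    /** Sum of the three components Flink uses for -XX:MaxDirectMemorySize. */
    static long maxDirectMemoryBytes(long frameworkOffHeap, long taskOffHeap, long network) {
        return frameworkOffHeap + taskOffHeap + network;
    }

    public static void main(String[] args) {
        long taskOffHeap = 0L;     // default of taskmanager.memory.task.off-heap.size
        long network = 256L * MB;  // hypothetical; really derived from total memory and the network fraction

        // 128 MB is the default of taskmanager.memory.framework.off-heap.size.
        long before = maxDirectMemoryBytes(128L * MB, taskOffHeap, network);
        // 512 MB is an arbitrary example of a raised value.
        long after = maxDirectMemoryBytes(512L * MB, taskOffHeap, network);

        System.out.println("MaxDirectMemorySize with default:     " + before / MB + " MB"); // 384 MB
        System.out.println("MaxDirectMemorySize after raising it: " + after / MB + " MB");  // 768 MB
    }
}
```

Because most of the network share is consumed by Flink's own network buffers, the headroom left for other direct allocations, such as the Kafka client's NIO read buffers in this log, comes mainly from the two off-heap settings.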

```
2022-12-16 09:09:21,633 INFO [464321] [org.apache.hadoop.security.UserGroupInformation.initialize(UserGroupInformation.java:366)] - Hadoop UGI authentication : TAUTH
2022-12-16 09:09:21,735 ERROR [464355] [org.apache.flink.connector.base.source.reader.fetcher.SplitFetcherManager$1.accept(SplitFetcherManager.java:119)] - Received uncaught exception.
java.lang.OutOfMemoryError: Direct buffer memory
	at java.nio.Bits.reserveMemory(Bits.java:695)
	at java.nio.DirectByteBuffer.<init>(DirectByteBuffer.java:123)
	at java.nio.ByteBuffer.allocateDirect(ByteBuffer.java:311)
	at sun.nio.ch.Util.getTemporaryDirectBuffer(Util.java:241)
	at sun.nio.ch.IOUtil.read(IOUtil.java:195)
	at sun.nio.ch.SocketChannelImpl.read(SocketChannelImpl.java:379)
	at org.apache.kafka.common.network.PlaintextTransportLayer.read(PlaintextTransportLayer.java:103)
	at org.apache.kafka.common.network.NetworkReceive.readFrom(NetworkReceive.java:118)
	at org.apache.kafka.common.network.KafkaChannel.receive(KafkaChannel.java:452)
	at org.apache.kafka.common.network.KafkaChannel.read(KafkaChannel.java:402)
	at org.apache.kafka.common.network.Selector.attemptRead(Selector.java:674)
	at org.apache.kafka.common.network.Selector.pollSelectionKeys(Selector.java:576)
	at org.apache.kafka.common.network.Selector.poll(Selector.java:481)
	at org.apache.kafka.clients.NetworkClient.poll(NetworkClient.java:561)
	at org.apache.kafka.clients.consumer.internals.ConsumerNetworkClient.transmitSends(ConsumerNetworkClient.java:324)
	at org.apache.kafka.clients.consumer.KafkaConsumer.poll(KafkaConsumer.java:1246)
	at org.apache.kafka.clients.consumer.KafkaConsumer.poll(KafkaConsumer.java:1210)
	at org.apache.flink.connector.kafka.source.reader.KafkaPartitionSplitReader.fetch(KafkaPartitionSplitReader.java:100)
	at org.apache.flink.connector.base.source.reader.fetcher.FetchTask.run(FetchTask.java:58)
	at org.apache.flink.connector.base.source.reader.fetcher.SplitFetcher.runOnce(SplitFetcher.java:142)
	at org.apache.flink.connector.base.source.reader.fetcher.SplitFetcher.run(SplitFetcher.java:105)
	at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)
	at java.util.concurrent.FutureTask.run(FutureTask.java:266)
	at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
	at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
	at java.lang.Thread.run(Thread.java:748)
2022-12-16 09:09:21,735 INFO [464355] [org.apache.kafka.common.metrics.Metrics.close(Metrics.java:659)] - Metrics scheduler closed
2022-12-16 09:09:21,735 INFO [464355] [org.apache.kafka.common.metrics.Metrics.close(Metrics.java:663)] - Closing reporter org.apache.kafka.common.metrics.JmxReporter
2022-12-16 09:09:21,735 INFO [464262] [org.apache.flink.connector.base.source.reader.SourceReaderBase.close(SourceReaderBase.java:259)] - Closing Source Reader.
2022-12-16 09:09:21,735 INFO [464355] [org.apache.kafka.common.metrics.Metrics.close(Metrics.java:669)] - Metrics reporters closed
2022-12-16 09:09:21,736 INFO [464262] [org.apache.flink.connector.base.source.reader.fetcher.SplitFetcher.shutdown(SplitFetcher.java:196)] - Shutting down split fetcher 0
2022-12-16 09:09:21,736 INFO [464355] [org.apache.kafka.common.utils.AppInfoParser.unregisterAppInfo(AppInfoParser.java:83)] - App info kafka.consumer for Group-Ods99Log-xuexi-0008-0 unregistered
2022-12-16 09:09:21,736 INFO [464355] [org.apache.flink.connector.base.source.reader.fetcher.SplitFetcher.run(SplitFetcher.java:115)] - Split fetcher 0 exited.
2022-12-16 09:09:21,737 WARN [464262] [org.apache.flink.runtime.taskmanager.Task.transitionState(Task.java:1111)] - Source: t_abc_log_kafka[1] -> Calc[2] -> ConstraintEnforcer[3] -> row_data_to_hoodie_record (1/2)#1832 (16a69b5652ee73524a3f889acbe13ad5) switched from RUNNING to FAILED with failure cause: java.lang.RuntimeException: One or more fetchers have encountered exception
	at org.apache.flink.connector.base.source.reader.fetcher.SplitFetcherManager.checkErrors(SplitFetcherManager.java:225)
	at org.apache.flink.connector.base.source.reader.SourceReaderBase.getNextFetch(SourceReaderBase.java:169)
	at org.apache.flink.connector.base.source.reader.SourceReaderBase.pollNext(SourceReaderBase.java:130)
	at org.apache.flink.streaming.api.operators.SourceOperator.emitNext(SourceOperator.java:385)
	at org.apache.flink.streaming.runtime.io.StreamTaskSourceInput.emitNext(StreamTaskSourceInput.java:68)
	at org.apache.flink.streaming.runtime.io.StreamOneInputProcessor.processInput(StreamOneInputProcessor.java:65)
	at org.apache.flink.streaming.runtime.tasks.StreamTask.processInput(StreamTask.java:527)
	at org.apache.flink.streaming.runtime.tasks.mailbox.MailboxProcessor.runMailboxLoop(MailboxProcessor.java:203)
	at org.apache.flink.streaming.runtime.tasks.StreamTask.runMailboxLoop(StreamTask.java:812)
	at org.apache.flink.streaming.runtime.tasks.StreamTask.invoke(StreamTask.java:761)
	at org.apache.flink.runtime.taskmanager.Task.runWithSystemExitMonitoring(Task.java:955)
	at org.apache.flink.runtime.taskmanager.Task.restoreAndInvoke(Task.java:934)
	at org.apache.flink.runtime.taskmanager.Task.doRun(Task.java:748)
	at org.apache.flink.runtime.taskmanager.Task.run(Task.java:569)
	at java.lang.Thread.run(Thread.java:748)
Caused by: java.lang.OutOfMemoryError: Direct buffer memory. The direct out-of-memory error has occurred. This can mean two things: either job(s) require(s) a larger size of JVM direct memory or there is a direct memory leak. The direct memory can be allocated by user code or some of its dependencies. In this case 'taskmanager.memory.task.off-heap.size' configuration option should be increased. Flink framework and its dependencies also consume the direct memory, mostly for network communication. The most of network memory is managed by Flink and should not result in out-of-memory error. In certain special cases, in particular for jobs with high parallelism, the framework may require more direct memory which is not managed by Flink. In this case 'taskmanager.memory.framework.off-heap.size' configuration option should be increased. If the error persists then there is probably a direct memory leak in user code or some of its dependencies which has to be investigated and fixed. The task executor has to be shutdown...
	at java.nio.Bits.reserveMemory(Bits.java:695)
	at java.nio.DirectByteBuffer.<init>(DirectByteBuffer.java:123)
	at java.nio.ByteBuffer.allocateDirect(ByteBuffer.java:311)
	at sun.nio.ch.Util.getTemporaryDirectBuffer(Util.java:241)
	at sun.nio.ch.IOUtil.read(IOUtil.java:195)
	at sun.nio.ch.SocketChannelImpl.read(SocketChannelImpl.java:379)
	at org.apache.kafka.common.network.PlaintextTransportLayer.read(PlaintextTransportLayer.java:103)
	at org.apache.kafka.common.network.NetworkReceive.readFrom(NetworkReceive.java:118)
	at org.apache.kafka.common.network.KafkaChannel.receive(KafkaChannel.java:452)
	at org.apache.kafka.common.network.KafkaChannel.read(KafkaChannel.java:402)
	at org.apache.kafka.common.network.Selector.attemptRead(Selector.java:674)
	at org.apache.kafka.common.network.Selector.pollSelectionKeys(Selector.java:576)
	at org.apache.kafka.common.network.Selector.poll(Selector.java:481)
	at org.apache.kafka.clients.NetworkClient.poll(NetworkClient.java:561)
	at org.apache.kafka.clients.consumer.internals.ConsumerNetworkClient.transmitSends(ConsumerNetworkClient.java:324)
	at org.apache.kafka.clients.consumer.KafkaConsumer.poll(KafkaConsumer.java:1246)
	at org.apache.kafka.clients.consumer.KafkaConsumer.poll(KafkaConsumer.java:1210)
	at org.apache.flink.connector.kafka.source.reader.KafkaPartitionSplitReader.fetch(KafkaPartitionSplitReader.java:100)
	at org.apache.flink.connector.base.source.reader.fetcher.FetchTask.run(FetchTask.java:58)
	at org.apache.flink.connector.base.source.reader.fetcher.SplitFetcher.runOnce(SplitFetcher.java:142)
	at org.apache.flink.connector.base.source.reader.fetcher.SplitFetcher.run(SplitFetcher.java:105)
	at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)
	at java.util.concurrent.FutureTask.run(FutureTask.java:266)
	at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
	at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
	... 1 more
2022-12-16 09:09:21,737 INFO [464262] [org.apache.flink.runtime.taskmanager.Task.doRun(Task.java:832)] - Freeing task resources for Source: t_abc_log_kafka[1] -> Calc[2] -> ConstraintEnforcer[3] -> row_data_to_hoodie_record (1/2)#1832 (16a69b5652ee73524a3f889acbe13ad5).
```
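The message in the log also allows for a direct memory leak. One way to tell the two cases apart is to watch the JVM's direct buffer pool over time: usage that keeps climbing suggests a leak, while usage that levels off just under the limit suggests the setting is simply too small. The Java sketch below reads the pool through the standard BufferPoolMXBean and looks for the -XX:MaxDirectMemorySize flag among the JVM's input arguments. The class and helper names are hypothetical, and the 1 MiB allocation exists only so the pool has something to report.

```java
import java.lang.management.BufferPoolMXBean;
import java.lang.management.ManagementFactory;
import java.lang.management.RuntimeMXBean;
import java.nio.ByteBuffer;

// Sketch (hypothetical helper): report JVM direct buffer usage and the configured limit.
class DirectMemoryReport {

    private static final String FLAG = "-XX:MaxDirectMemorySize=";

    /** Returns the "direct" buffer pool, or null if this JVM does not expose one. */
    static BufferPoolMXBean directPool() {
        for (BufferPoolMXBean pool : ManagementFactory.getPlatformMXBeans(BufferPoolMXBean.class)) {
            if ("direct".equals(pool.getName())) {
                return pool;
            }
        }
        return null;
    }

    /** Returns the value passed as -XX:MaxDirectMemorySize, or null if it is not on the command line. */
    static String maxDirectMemoryFlag() {
        RuntimeMXBean runtime = ManagementFactory.getRuntimeMXBean();
        for (String arg : runtime.getInputArguments()) {
            if (arg.startsWith(FLAG)) {
                return arg.substring(FLAG.length());
            }
        }
        return null;
    }

    public static void main(String[] args) {
        BufferPoolMXBean pool = directPool();
        if (pool == null) {
            System.out.println("This JVM exposes no 'direct' buffer pool");
            return;
        }
        // Hold a 1 MiB direct buffer so the pool reports at least this much capacity.
        ByteBuffer buffer = ByteBuffer.allocateDirect(1024 * 1024);

        System.out.println("direct buffers:        " + pool.getCount());
        System.out.println("direct memory used:    " + pool.getMemoryUsed() + " bytes");
        System.out.println("direct total capacity: " + pool.getTotalCapacity() + " bytes");
        String limit = maxDirectMemoryFlag();
        System.out.println("-XX:MaxDirectMemorySize: " + (limit != null ? limit : "not set on the command line"));
        System.out.println("held buffer capacity:  " + buffer.capacity() + " bytes");
    }
}
```

Flink also reports the same pool as TaskManager metrics (Status.JVM.Memory.Direct.Count, Status.JVM.Memory.Direct.MemoryUsed and Status.JVM.Memory.Direct.TotalCapacity), which may be easier to track than custom code.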

Zhejiang Public Security Network Filing No. 33010602011771
