Prometheus Operator Installation and Deployment

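Prometheus Operator Features

Prometheus Operator is not an official Prometheus component; it was developed by CoreOS.

Prometheus Operator provides Kubernetes-native deployment and management of Prometheus and related monitoring components. Its main purpose is to simplify and automate the configuration of Prometheus in a Kubernetes cluster.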

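- Uses Kubernetes Custom Resources to deploy and manage Prometheus, Alertmanager, and related components and policies.

- Uses Kubernetes resources to configure Prometheus: version, persistence, retention policy, and replicas.

- Generates monitoring target configuration automatically from Kubernetes label queries, so no Prometheus-specific configuration syntax is needed.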
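As an illustration of label-based target configuration, the sketch below writes a minimal ServiceMonitor manifest. The resource name, the app label, the target namespace, and the port name are all hypothetical; only the ServiceMonitor kind and its selector/endpoints fields come from the Operator's CRDs.

```shell
# Write a minimal ServiceMonitor manifest (every name below is hypothetical).
cat > example-servicemonitor.yaml <<'EOF'
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: example-app            # hypothetical name
  namespace: monitoring
spec:
  selector:
    matchLabels:
      app: example-app         # Services carrying this label become scrape targets
  namespaceSelector:
    matchNames:
    - default                  # hypothetical namespace of the target Service
  endpoints:
  - port: web                  # name of the Service port that exposes /metrics
    interval: 30s
EOF

# Apply once the Operator CRDs are installed (needs cluster access):
# kubectl apply -f example-servicemonitor.yaml
```

The Operator turns the label selector into the corresponding Prometheus scrape configuration, so no scrape_configs section has to be written by hand.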

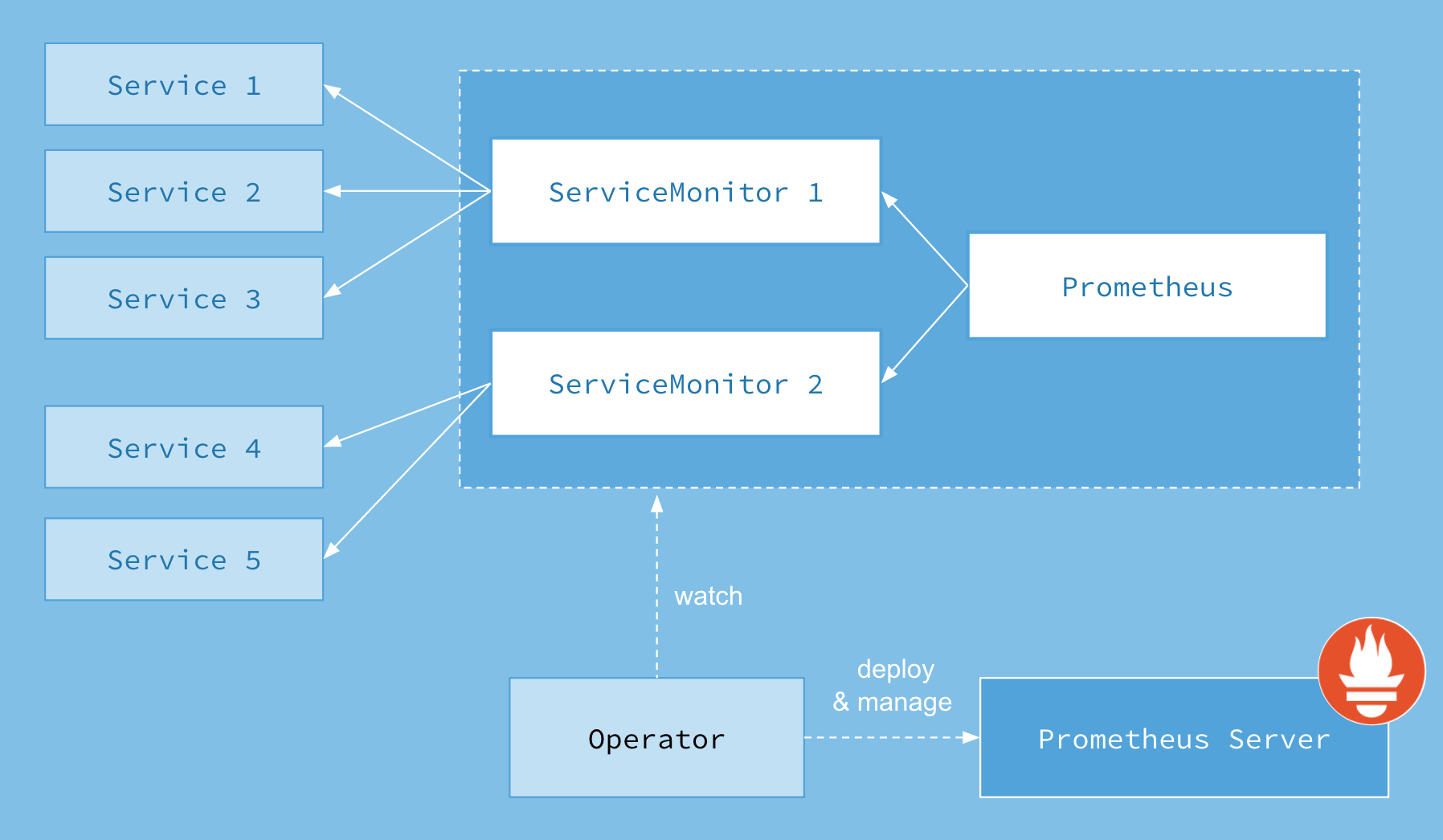

Prometheus Operator Architecture

- Architecture diagram

Differences between Prometheus Operator, kube-prometheus, and the Helm chart

- Prometheus Operator

  Prometheus Operator uses Kubernetes Custom Resources to simplify the deployment and configuration of Prometheus, Alertmanager, and related monitoring components.

  Official installation guide: https://prometheus-operator.dev/docs/user-guides/getting-started/

  Requirement: Kubernetes v1.16.x or later ("Prometheus Operator requires use of Kubernetes v1.16.x and up.").

  GitHub repository: https://github.com/prometheus-operator/prometheus-operator

-

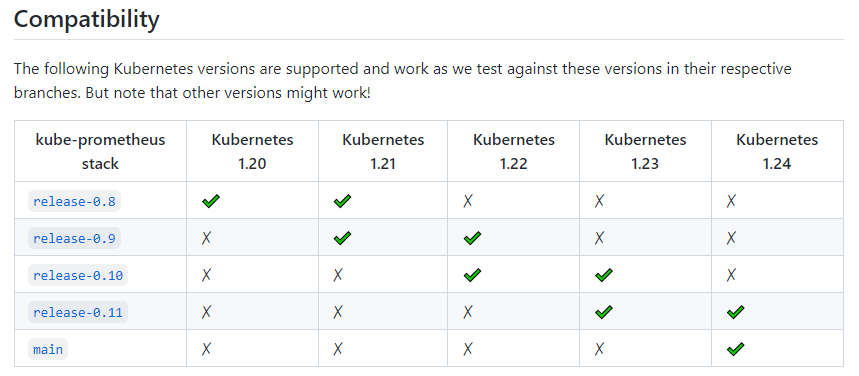

kube-prometheus kube-prometheus提供基于Prometheus & Prometheus Operator完整的集群监控配置示例,包括多实例Prometheus & Alertmanager部署与配置及node exporter的metrics采集,以及scrape Prometheus target各种不同的metrics endpoints,并提供Alerting rules一些示例,触发告警集群潜在的问题 官方安装文档:https://prometheus-operator.dev/docs/prologue/quick-start/ 安装要求:https://github.com/prometheus-operator/kube-prometheus#compatibility 官方Github地址:https://github.com/prometheus-operator/kube-prometheus

- Helm chart

  The prometheus-community/kube-prometheus-stack chart provides functionality similar to kube-prometheus, but the project is maintained by the Prometheus community. For details see https://github.com/prometheus-community/helm-charts/tree/main/charts/kube-prometheus-stack#kube-prometheus-stack
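For comparison with the kube-prometheus route used in the rest of these notes, a Helm-based install might look like the sketch below. It assumes Helm 3 and a reachable cluster; the release name and namespace are arbitrary choices, not values from these notes.

```shell
# Sketch: install the kube-prometheus-stack chart with Helm 3.
# RELEASE and NAMESPACE are illustrative values.
RELEASE="kube-prometheus-stack"
NAMESPACE="monitoring"

install_stack() {
  helm repo add prometheus-community https://prometheus-community.github.io/helm-charts
  helm repo update
  helm install "$RELEASE" prometheus-community/kube-prometheus-stack \
    --namespace "$NAMESPACE" --create-namespace
}

# Run only where helm is installed and a cluster is reachable:
# install_stack
```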

Installation with kube-prometheus

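- These notes deploy with kube-prometheus, as follows.

  Deploy according to the installation requirements; the version compatibility table is at:

  https://github.com/prometheus-operator/kube-prometheus#compatibility

- Installation requirements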
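Before picking a release, it can help to compare the cluster version against the minimum from the compatibility table. The helper below is a small sketch; version_ge is a hypothetical function name, it relies on sort -V (GNU coreutils), and the version strings are example values.

```shell
# version_ge A B: succeeds when version A >= version B (relies on `sort -V`).
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Example with a hypothetical server version:
if version_ge "1.20.6" "1.16.0"; then
  echo "cluster meets the Prometheus Operator minimum"
fi

# The real server version can be read with (needs cluster access):
# kubectl version
```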

- Fetch the kube-prometheus project:

      15:44 root@qcloud-shanghai-4-saltmaster ~ # git clone https://github.com/prometheus-operator/kube-prometheus.git
      Initialized empty Git repository in /root/kube-prometheus/.git/
      remote: Enumerating objects: 17126, done.
      remote: Counting objects: 100% (1/1), done.
      remote: Total 17126 (delta 0), reused 0 (delta 0), pack-reused 17125
      Receiving objects: 100% (17126/17126), 8.76 MiB | 1.72 MiB/s, done.
      Resolving deltas: 100% (11219/11219), done.

- Switch to the release branch:

      15:55 root@qcloud-shanghai-4-saltmaster kube-prometheus # git branch -a
        main
      * origin/release-0.8
        remotes/origin/HEAD -> origin/main
        remotes/origin/automated-updates-main
        remotes/origin/automated-updates-release-0.10
        remotes/origin/dependabot/github_actions/azure/setup-helm-3.4
        remotes/origin/dependabot/go_modules/github.com/prometheus/client_golang-1.13.1
        remotes/origin/dependabot/go_modules/k8s.io/apimachinery-0.25.3
        remotes/origin/dependabot/go_modules/k8s.io/client-go-0.25.3
        remotes/origin/dependabot/go_modules/scripts/github.com/google/go-jsonnet-0.19.1
        remotes/origin/dependabot/go_modules/scripts/github.com/yannh/kubeconform-0.5.0
        remotes/origin/main
        remotes/origin/release-0.1
        remotes/origin/release-0.10
        remotes/origin/release-0.11
        remotes/origin/release-0.2
        remotes/origin/release-0.3
        remotes/origin/release-0.4
        remotes/origin/release-0.5
        remotes/origin/release-0.6
        remotes/origin/release-0.7
        remotes/origin/release-0.8
        remotes/origin/release-0.9
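The listing shows the branch state but not the command that produced it. One way to switch is sketched below; switch_release is a hypothetical helper, and BRANCH should be whichever release the compatibility table lists for the cluster (release-0.8 is simply the branch marked as current in the listing).

```shell
# BRANCH is a placeholder; choose the release that matches your cluster version.
BRANCH="release-0.8"

switch_release() {
  git fetch origin
  # Creates a local tracking branch from origin/$BRANCH if none exists yet.
  git checkout "$BRANCH"
  # Print the branch that is now checked out.
  git rev-parse --abbrev-ref HEAD
}

# Run inside the cloned repository:
# cd kube-prometheus && switch_release
```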

- Create the namespace and CRD resources:

      18:23 root@qcloud-shanghai-4-saltmaster kube-prometheus # kubectl create -f manifests/setup
      customresourcedefinition.apiextensions.k8s.io/alertmanagerconfigs.monitoring.coreos.com created
      customresourcedefinition.apiextensions.k8s.io/alertmanagers.monitoring.coreos.com created
      customresourcedefinition.apiextensions.k8s.io/podmonitors.monitoring.coreos.com created
      customresourcedefinition.apiextensions.k8s.io/probes.monitoring.coreos.com created
      customresourcedefinition.apiextensions.k8s.io/prometheuses.monitoring.coreos.com created
      customresourcedefinition.apiextensions.k8s.io/prometheusrules.monitoring.coreos.com created
      customresourcedefinition.apiextensions.k8s.io/servicemonitors.monitoring.coreos.com created
      customresourcedefinition.apiextensions.k8s.io/thanosrulers.monitoring.coreos.com created
      namespace/monitoring created
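The custom resources created in the later steps can only be accepted once the CRDs are registered. A small wait step between the two kubectl create calls, sketched here, avoids racing the API server; wait_for_crds is a hypothetical helper name and the timeout is an arbitrary choice.

```shell
wait_for_crds() {
  # Block until every CRD reports the Established condition.
  kubectl wait --for=condition=Established --all CustomResourceDefinition --timeout=120s
  # List the monitoring.coreos.com CRDs as a quick sanity check.
  kubectl get crd | grep monitoring.coreos.com
}

# Needs cluster access:
# wait_for_crds
```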

- Modify prometheusAdapter-deployment.yaml:

      apiVersion: apps/v1
      kind: Deployment
      metadata:
        labels:
          app.kubernetes.io/component: metrics-adapter
          app.kubernetes.io/name: prometheus-adapter
          app.kubernetes.io/part-of: kube-prometheus
          app.kubernetes.io/version: 0.10.0
        name: prometheus-adapter
        namespace: monitoring
      spec:
        #replicas: 2
        replicas: 1
        selector:
          matchLabels:
            app.kubernetes.io/component: metrics-adapter
            app.kubernetes.io/name: prometheus-adapter
            app.kubernetes.io/part-of: kube-prometheus
        strategy:
          rollingUpdate:
            maxSurge: 1
            maxUnavailable: 1
        template:
          metadata:
            labels:
              app.kubernetes.io/component: metrics-adapter
              app.kubernetes.io/name: prometheus-adapter
              app.kubernetes.io/part-of: kube-prometheus
              app.kubernetes.io/version: 0.10.0
          spec:
            automountServiceAccountToken: true
            containers:
            - args:
              - --cert-dir=/var/run/serving-cert
              - --config=/etc/adapter/config.yaml
              - --logtostderr=true
              - --metrics-relist-interval=1m
              - --prometheus-url=http://prometheus-k8s.monitoring.svc:9090/
              - --secure-port=6443
              - --tls-cipher-suites=TLS_ECDHE_RSA_WITH_CHACHA20_POLY1305,TLS_ECDHE_ECDSA_WITH_CHACHA20_POLY1305,TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384,TLS_ECDHE_ECDSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_ECDSA_WITH_AES_256_GCM_SHA384,TLS_ECDHE_RSA_WITH_AES_128_CBC_SHA,TLS_ECDHE_ECDSA_WITH_AES_128_CBC_SHA256,TLS_ECDHE_ECDSA_WITH_AES_128_CBC_SHA,TLS_ECDHE_RSA_WITH_AES_256_CBC_SHA,TLS_ECDHE_ECDSA_WITH_AES_256_CBC_SHA,TLS_RSA_WITH_AES_128_GCM_SHA256,TLS_RSA_WITH_AES_256_GCM_SHA384,TLS_RSA_WITH_AES_128_CBC_SHA,TLS_RSA_WITH_AES_256_CBC_SHA
              image: registry.k8s.io/prometheus-adapter/prometheus-adapter:v0.10.0
              livenessProbe:
                failureThreshold: 5
                httpGet:
                  path: /livez
                  port: https
                  scheme: HTTPS
                initialDelaySeconds: 30
                periodSeconds: 5
              name: prometheus-adapter
              ports:
              - containerPort: 6443
                name: https
              readinessProbe:
                failureThreshold: 5
                httpGet:
                  path: /readyz
                  port: https
                  scheme: HTTPS
                initialDelaySeconds: 30
                periodSeconds: 5
              resources:
                limits:
                  cpu: 250m
                  memory: 180Mi
                requests:
                  cpu: 102m
                  memory: 180Mi
              securityContext:
                allowPrivilegeEscalation: false
                capabilities:
                  drop:
                  - ALL
                readOnlyRootFilesystem: true
              volumeMounts:
              - mountPath: /tmp
                name: tmpfs
                readOnly: false
              - mountPath: /var/run/serving-cert
                name: volume-serving-cert
                readOnly: false
              - mountPath: /etc/adapter
                name: config
                readOnly: false
            nodeSelector:
              kubernetes.io/os: linux
            tolerations:
            - key: monitor
              operator: Exists
              effect: NoSchedule
            affinity:
              nodeAffinity:
                requiredDuringSchedulingIgnoredDuringExecution:
                  nodeSelectorTerms:
                  - matchExpressions:
                    - key: kubernetes.io/monitor
                      operator: In
                      values:
                      - prometheus
            serviceAccountName: prometheus-adapter
            volumes:
            - emptyDir: {}
              name: tmpfs
            - emptyDir: {}
              name: volume-serving-cert
            - configMap:
                name: adapter-config
              name: config
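The tolerations and nodeAffinity added to this manifest (and to the ones that follow) only take effect if some node carries the matching label; because the affinity rule is a required one, pods stay Pending when no node has it. The notes do not show how the nodes were prepared, so the following is only a sketch: the node name is hypothetical, and the taint value is arbitrary because the toleration uses operator Exists.

```shell
NODE="monitor-node-1"   # hypothetical node name

prepare_monitor_node() {
  # Label matched by the nodeAffinity rule (key kubernetes.io/monitor, value prometheus).
  kubectl label nodes "$NODE" kubernetes.io/monitor=prometheus
  # Optional taint that keeps other workloads off the node; the manifests tolerate
  # any taint with key "monitor" and effect NoSchedule.
  kubectl taint nodes "$NODE" monitor=dedicated:NoSchedule
}

# Needs cluster access:
# prepare_monitor_node
```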

- blackboxExporter-deployment.yaml:

      apiVersion: apps/v1
      kind: Deployment
      metadata:
        labels:
          app.kubernetes.io/component: exporter
          app.kubernetes.io/name: blackbox-exporter
          app.kubernetes.io/part-of: kube-prometheus
          app.kubernetes.io/version: 0.22.0
        name: blackbox-exporter
        namespace: monitoring
      spec:
        replicas: 1
        selector:
          matchLabels:
            app.kubernetes.io/component: exporter
            app.kubernetes.io/name: blackbox-exporter
            app.kubernetes.io/part-of: kube-prometheus
        template:
          metadata:
            annotations:
              kubectl.kubernetes.io/default-container: blackbox-exporter
            labels:
              app.kubernetes.io/component: exporter
              app.kubernetes.io/name: blackbox-exporter
              app.kubernetes.io/part-of: kube-prometheus
              app.kubernetes.io/version: 0.22.0
          spec:
            automountServiceAccountToken: true
            containers:
            - args:
              - --config.file=/etc/blackbox_exporter/config.yml
              - --web.listen-address=:19115
              image: quay.io/prometheus/blackbox-exporter:v0.22.0
              name: blackbox-exporter
              ports:
              - containerPort: 19115
                name: http
              resources:
                limits:
                  cpu: 20m
                  memory: 40Mi
                requests:
                  cpu: 10m
                  memory: 20Mi
              securityContext:
                allowPrivilegeEscalation: false
                capabilities:
                  drop:
                  - ALL
                readOnlyRootFilesystem: true
                runAsNonRoot: true
                runAsUser: 65534
              volumeMounts:
              - mountPath: /etc/blackbox_exporter/
                name: config
                readOnly: true
            - args:
              - --webhook-url=http://localhost:19115/-/reload
              - --volume-dir=/etc/blackbox_exporter/
              image: jimmidyson/configmap-reload:v0.5.0
              name: module-configmap-reloader
              resources:
                limits:
                  cpu: 20m
                  memory: 40Mi
                requests:
                  cpu: 10m
                  memory: 20Mi
              securityContext:
                allowPrivilegeEscalation: false
                capabilities:
                  drop:
                  - ALL
                readOnlyRootFilesystem: true
                runAsNonRoot: true
                runAsUser: 65534
              terminationMessagePath: /dev/termination-log
              terminationMessagePolicy: FallbackToLogsOnError
              volumeMounts:
              - mountPath: /etc/blackbox_exporter/
                name: config
                readOnly: true
            - args:
              - --logtostderr
              - --secure-listen-address=:9115
              - --tls-cipher-suites=TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_ECDSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384,TLS_ECDHE_ECDSA_WITH_AES_256_GCM_SHA384,TLS_ECDHE_RSA_WITH_CHACHA20_POLY1305,TLS_ECDHE_ECDSA_WITH_CHACHA20_POLY1305
              - --upstream=http://127.0.0.1:19115/
              image: quay.io/brancz/kube-rbac-proxy:v0.13.1
              name: kube-rbac-proxy
              ports:
              - containerPort: 9115
                name: https
              resources:
                limits:
                  cpu: 20m
                  memory: 40Mi
                requests:
                  cpu: 10m
                  memory: 20Mi
              securityContext:
                allowPrivilegeEscalation: false
                capabilities:
                  drop:
                  - ALL
                readOnlyRootFilesystem: true
                runAsGroup: 65532
                runAsNonRoot: true
                runAsUser: 65532
            nodeSelector:
              kubernetes.io/os: linux
            tolerations:
            - key: monitor
              operator: Exists
              effect: NoSchedule
            affinity:
              nodeAffinity:
                requiredDuringSchedulingIgnoredDuringExecution:
                  nodeSelectorTerms:
                  - matchExpressions:
                    - key: kubernetes.io/monitor
                      operator: In
                      values:
                      - prometheus
            serviceAccountName: blackbox-exporter
            volumes:
            - configMap:
                name: blackbox-exporter-configuration
              name: config

- kubeStateMetrics-deployment.yaml:

      apiVersion: apps/v1
      kind: Deployment
      metadata:
        labels:
          app.kubernetes.io/component: exporter
          app.kubernetes.io/name: kube-state-metrics
          app.kubernetes.io/part-of: kube-prometheus
          app.kubernetes.io/version: 2.6.0
        name: kube-state-metrics
        namespace: monitoring
      spec:
        replicas: 1
        selector:
          matchLabels:
            app.kubernetes.io/component: exporter
            app.kubernetes.io/name: kube-state-metrics
            app.kubernetes.io/part-of: kube-prometheus
        template:
          metadata:
            annotations:
              kubectl.kubernetes.io/default-container: kube-state-metrics
            labels:
              app.kubernetes.io/component: exporter
              app.kubernetes.io/name: kube-state-metrics
              app.kubernetes.io/part-of: kube-prometheus
              app.kubernetes.io/version: 2.6.0
          spec:
            automountServiceAccountToken: true
            containers:
            - args:
              - --host=$(POD_IP)
              - --port=8081
              - --telemetry-host=$(POD_IP)
              - --telemetry-port=8082
              image: k8s.gcr.io/kube-state-metrics/kube-state-metrics:v2.6.0
              name: kube-state-metrics
              resources:
                limits:
                  cpu: 100m
                  memory: 250Mi
                requests:
                  cpu: 10m
                  memory: 190Mi
              env:
              - name: POD_IP
                valueFrom:
                  fieldRef:
                    apiVersion: v1
                    fieldPath: status.podIP
              securityContext:
                allowPrivilegeEscalation: false
                capabilities:
                  drop:
                  - ALL
                readOnlyRootFilesystem: true
                runAsUser: 65534
            - args:
              - --logtostderr
              - --secure-listen-address=:8443
              - --tls-cipher-suites=TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_ECDSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384,TLS_ECDHE_ECDSA_WITH_AES_256_GCM_SHA384,TLS_ECDHE_RSA_WITH_CHACHA20_POLY1305,TLS_ECDHE_ECDSA_WITH_CHACHA20_POLY1305
              - --upstream=http://$(POD_IP):8081/
              image: quay.io/brancz/kube-rbac-proxy:v0.13.1
              name: kube-rbac-proxy-main
              env:
              - name: POD_IP
                valueFrom:
                  fieldRef:
                    apiVersion: v1
                    fieldPath: status.podIP
              ports:
              - containerPort: 8443
                name: https-main
              resources:
                limits:
                  cpu: 40m
                  memory: 40Mi
                requests:
                  cpu: 20m
                  memory: 20Mi
              securityContext:
                allowPrivilegeEscalation: false
                capabilities:
                  drop:
                  - ALL
                readOnlyRootFilesystem: true
                runAsGroup: 65532
                runAsNonRoot: true
                runAsUser: 65532
            - args:
              - --logtostderr
              - --secure-listen-address=:9443
              - --tls-cipher-suites=TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_ECDSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384,TLS_ECDHE_ECDSA_WITH_AES_256_GCM_SHA384,TLS_ECDHE_RSA_WITH_CHACHA20_POLY1305,TLS_ECDHE_ECDSA_WITH_CHACHA20_POLY1305
              - --upstream=http://$(POD_IP):8082/
              image: quay.io/brancz/kube-rbac-proxy:v0.13.1
              name: kube-rbac-proxy-self
              env:
              - name: POD_IP
                valueFrom:
                  fieldRef:
                    apiVersion: v1
                    fieldPath: status.podIP
              ports:
              - containerPort: 9443
                name: https-self
              resources:
                limits:
                  cpu: 20m
                  memory: 40Mi
                requests:
                  cpu: 10m
                  memory: 20Mi
              securityContext:
                allowPrivilegeEscalation: false
                capabilities:
                  drop:
                  - ALL
                readOnlyRootFilesystem: true
                runAsGroup: 65532
                runAsNonRoot: true
                runAsUser: 65532
            nodeSelector:
              kubernetes.io/os: linux
            tolerations:
            - key: monitor
              operator: Exists
              effect: NoSchedule
            affinity:
              nodeAffinity:
                requiredDuringSchedulingIgnoredDuringExecution:
                  nodeSelectorTerms:
                  - matchExpressions:
                    - key: kubernetes.io/monitor
                      operator: In
                      values:
                      - prometheus
            serviceAccountName: kube-state-metrics

- prometheusOperator-deployment.yaml

  The manifest recorded for this step is a verbatim copy of the prometheus-adapter Deployment listed under prometheusAdapter-deployment.yaml, so the actual prometheus-operator changes are not captured here. Judging by the other manifests, the intended edit is presumably the same tolerations and affinity.nodeAffinity block added under spec.template.spec.

- alertmanager-alertmanager.yaml:

      apiVersion: monitoring.coreos.com/v1
      kind: Alertmanager
      metadata:
        labels:
          app.kubernetes.io/component: alert-router
          app.kubernetes.io/instance: main
          app.kubernetes.io/name: alertmanager
          app.kubernetes.io/part-of: kube-prometheus
          app.kubernetes.io/version: 0.24.0
        name: main
        namespace: monitoring
      spec:
        image: quay.io/prometheus/alertmanager:v0.24.0
        nodeSelector:
          kubernetes.io/os: linux
        tolerations:
        - key: monitor
          operator: Exists
          effect: NoSchedule
        affinity:
          nodeAffinity:
            requiredDuringSchedulingIgnoredDuringExecution:
              nodeSelectorTerms:
              - matchExpressions:
                - key: kubernetes.io/monitor
                  operator: In
                  values:
                  - prometheus
        podMetadata:
          labels:
            app.kubernetes.io/component: alert-router
            app.kubernetes.io/instance: main
            app.kubernetes.io/name: alertmanager
            app.kubernetes.io/part-of: kube-prometheus
            app.kubernetes.io/version: 0.24.0
        replicas: 1
        resources:
          limits:
            cpu: 1
            memory: 1Gi
          requests:
            cpu: 4m
            memory: 100Mi
        securityContext:
          fsGroup: 2000
          runAsNonRoot: true
          runAsUser: 1000
        serviceAccountName: alertmanager-main
        version: 0.24.0

- prometheus-prometheus.yaml:

      apiVersion: monitoring.coreos.com/v1
      kind: Prometheus
      metadata:
        labels:
          app.kubernetes.io/component: prometheus
          app.kubernetes.io/instance: k8s
          app.kubernetes.io/name: prometheus
          app.kubernetes.io/part-of: kube-prometheus
          app.kubernetes.io/version: 2.39.1
        name: k8s
        namespace: monitoring
      spec:
        alerting:
          alertmanagers:
          - apiVersion: v2
            name: alertmanager-main
            namespace: monitoring
            port: web
        enableFeatures: []
        externalLabels: {}
        image: quay.io/prometheus/prometheus:v2.39.1
        nodeSelector:
          kubernetes.io/os: linux
        tolerations:
        - key: monitor
          operator: Exists
          effect: NoSchedule
        affinity:
          nodeAffinity:
            requiredDuringSchedulingIgnoredDuringExecution:
              nodeSelectorTerms:
              - matchExpressions:
                - key: kubernetes.io/monitor
                  operator: In
                  values:
                  - prometheus
        podMetadata:
          labels:
            app.kubernetes.io/component: prometheus
            app.kubernetes.io/instance: k8s
            app.kubernetes.io/name: prometheus
            app.kubernetes.io/part-of: kube-prometheus
            app.kubernetes.io/version: 2.39.1
        podMonitorNamespaceSelector: {}
        podMonitorSelector: {}
        probeNamespaceSelector: {}
        probeSelector: {}
        #replicas: 2
        replicas: 1
        resources:
          requests:
            memory: 400Mi
            cpu: 100m
          limits:
            memory: 16Gi
            cpu: 2
        ruleNamespaceSelector: {}
        ruleSelector: {}
        securityContext:
          fsGroup: 2000
          runAsNonRoot: true
          runAsUser: 1000
        serviceAccountName: prometheus-k8s
        serviceMonitorNamespaceSelector: {}
        serviceMonitorSelector: {}
        version: 2.39.1
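The Prometheus resource above sets neither a retention period nor persistent storage, so data lives in an emptyDir and is lost when the pod is rescheduled. If persistence is wanted, fields along the following lines could be merged into the spec. This is a sketch only: the retention period, StorageClass name, and volume size are hypothetical and must be adapted to the cluster.

```shell
# Write a patch fragment with retention and storage settings (all values hypothetical).
cat > prometheus-storage-patch.yaml <<'EOF'
spec:
  retention: 15d                      # hypothetical retention period
  storage:
    volumeClaimTemplate:
      spec:
        storageClassName: standard    # hypothetical StorageClass
        accessModes: ["ReadWriteOnce"]
        resources:
          requests:
            storage: 50Gi             # hypothetical volume size
EOF

# Either copy these fields into prometheus-prometheus.yaml before creating it,
# or patch the live object (needs cluster access):
# kubectl -n monitoring patch prometheus k8s --type merge \
#   --patch "$(cat prometheus-storage-patch.yaml)"
```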

- Create the monitoring resources:

      18:24 root@qcloud-shanghai-4-saltmaster kube-prometheus # kubectl create -f manifests/
      alertmanager.monitoring.coreos.com/main created
      networkpolicy.networking.k8s.io/alertmanager-main created
      prometheusrule.monitoring.coreos.com/alertmanager-main-rules created
      secret/alertmanager-main created
      service/alertmanager-main created
      serviceaccount/alertmanager-main created
      servicemonitor.monitoring.coreos.com/alertmanager-main created
      clusterrole.rbac.authorization.k8s.io/blackbox-exporter created
      clusterrolebinding.rbac.authorization.k8s.io/blackbox-exporter created
      configmap/blackbox-exporter-configuration created
      deployment.apps/blackbox-exporter created
      networkpolicy.networking.k8s.io/blackbox-exporter created
      service/blackbox-exporter created
      serviceaccount/blackbox-exporter created
      servicemonitor.monitoring.coreos.com/blackbox-exporter created
      prometheusrule.monitoring.coreos.com/kube-prometheus-rules created
      clusterrole.rbac.authorization.k8s.io/kube-state-metrics created
      clusterrolebinding.rbac.authorization.k8s.io/kube-state-metrics created
      deployment.apps/kube-state-metrics created
      networkpolicy.networking.k8s.io/kube-state-metrics created
      prometheusrule.monitoring.coreos.com/kube-state-metrics-rules created
      service/kube-state-metrics created
      serviceaccount/kube-state-metrics created
      servicemonitor.monitoring.coreos.com/kube-state-metrics created
      prometheusrule.monitoring.coreos.com/kubernetes-monitoring-rules created
      servicemonitor.monitoring.coreos.com/kube-apiserver created
      servicemonitor.monitoring.coreos.com/coredns created
      servicemonitor.monitoring.coreos.com/kube-controller-manager created
      servicemonitor.monitoring.coreos.com/kube-scheduler created
      servicemonitor.monitoring.coreos.com/kubelet created
      clusterrole.rbac.authorization.k8s.io/node-exporter created
      clusterrolebinding.rbac.authorization.k8s.io/node-exporter created
      daemonset.apps/node-exporter created
      networkpolicy.networking.k8s.io/node-exporter created
      prometheusrule.monitoring.coreos.com/node-exporter-rules created
      service/node-exporter created
      serviceaccount/node-exporter created
      servicemonitor.monitoring.coreos.com/node-exporter created
      clusterrole.rbac.authorization.k8s.io/prometheus-k8s created
      clusterrolebinding.rbac.authorization.k8s.io/prometheus-k8s created
      networkpolicy.networking.k8s.io/prometheus-k8s created
      prometheus.monitoring.coreos.com/k8s created
      prometheusrule.monitoring.coreos.com/prometheus-k8s-prometheus-rules created
      rolebinding.rbac.authorization.k8s.io/prometheus-k8s-config created
      rolebinding.rbac.authorization.k8s.io/prometheus-k8s created
      rolebinding.rbac.authorization.k8s.io/prometheus-k8s created
      rolebinding.rbac.authorization.k8s.io/prometheus-k8s created
      role.rbac.authorization.k8s.io/prometheus-k8s-config created
      role.rbac.authorization.k8s.io/prometheus-k8s created
      role.rbac.authorization.k8s.io/prometheus-k8s created
      role.rbac.authorization.k8s.io/prometheus-k8s created
      service/prometheus-k8s created
      serviceaccount/prometheus-k8s created
      servicemonitor.monitoring.coreos.com/prometheus-k8s created
      apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created
      clusterrole.rbac.authorization.k8s.io/prometheus-adapter created
      clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created
      clusterrolebinding.rbac.authorization.k8s.io/prometheus-adapter created
      clusterrolebinding.rbac.authorization.k8s.io/resource-metrics:system:auth-delegator created
      clusterrole.rbac.authorization.k8s.io/resource-metrics-server-resources created
      configmap/adapter-config created
      deployment.apps/prometheus-adapter created
      networkpolicy.networking.k8s.io/prometheus-adapter created
      rolebinding.rbac.authorization.k8s.io/resource-metrics-auth-reader created
      service/prometheus-adapter created
      serviceaccount/prometheus-adapter created
      servicemonitor.monitoring.coreos.com/prometheus-adapter created
      clusterrole.rbac.authorization.k8s.io/prometheus-operator created
      clusterrolebinding.rbac.authorization.k8s.io/prometheus-operator created
      deployment.apps/prometheus-operator created
      networkpolicy.networking.k8s.io/prometheus-operator created
      prometheusrule.monitoring.coreos.com/prometheus-operator-rules created
      service/prometheus-operator created
      serviceaccount/prometheus-operator created
      servicemonitor.monitoring.coreos.com/prometheus-operator created
      unable to recognize "manifests/alertmanager-podDisruptionBudget.yaml": no matches for kind "PodDisruptionBudget" in version "policy/v1"
      unable to recognize "manifests/prometheus-podDisruptionBudget.yaml": no matches for kind "PodDisruptionBudget" in version "policy/v1"
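The two "unable to recognize" errors at the end mean the API server does not serve PodDisruptionBudget under policy/v1, which became available in Kubernetes v1.21; in other words, the manifests are newer than the cluster. With a single replica the missing budgets have little practical effect, but the mismatch is worth confirming. The helper below is a sketch (pdb_api_version is a hypothetical name) that reports which PodDisruptionBudget API version a cluster serves, given the output of kubectl api-versions.

```shell
# Report the PodDisruptionBudget API version found in a list of served API versions.
# $1: output of `kubectl api-versions` (one group/version per line)
pdb_api_version() {
  if printf '%s\n' "$1" | grep -qx 'policy/v1'; then
    echo "policy/v1"
  elif printf '%s\n' "$1" | grep -qx 'policy/v1beta1'; then
    echo "policy/v1beta1"
  else
    echo "none"
  fi
}

# Example with the API list of a hypothetical pre-1.21 cluster:
pdb_api_version "apps/v1
policy/v1beta1"
# prints: policy/v1beta1

# On a real cluster:
# pdb_api_version "$(kubectl api-versions)"
```

If the result is policy/v1beta1, either use a kube-prometheus release that the compatibility table lists for that Kubernetes version, or change apiVersion in the two podDisruptionBudget manifests before creating them.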

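- If, after creation, the node-exporter DaemonSet reports that it cannot find the node-exporter ServiceAccount, recreate the DaemonSet (run from the manifests directory):

      kubectl delete -f nodeExporter-daemonset.yaml && kubectl create -f nodeExporter-daemonset.yaml

  The DaemonSet was created before its ServiceAccount, as the order in the output above shows. This is an ordering issue, not a critical error.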

- Create a Service of type NodePort so that the Prometheus web console can be reached from outside the cluster.
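A possible manifest for that Service is sketched below. The selector labels are taken from podMetadata in prometheus-prometheus.yaml and the port is the 9090 used by the adapter's --prometheus-url; the Service name and nodePort value are hypothetical, and the target port name web is an assumption based on the port name used elsewhere in these manifests.

```shell
# Write a NodePort Service for the Prometheus web console.
cat > prometheus-nodeport.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: prometheus-k8s-nodeport   # hypothetical name
  namespace: monitoring
spec:
  type: NodePort
  selector:
    app.kubernetes.io/name: prometheus
    app.kubernetes.io/instance: k8s
  ports:
  - name: web
    port: 9090
    targetPort: web               # assumed name of the Prometheus container port
    nodePort: 30090               # hypothetical; must lie in the cluster's NodePort range
EOF

# Needs cluster access:
# kubectl apply -f prometheus-nodeport.yaml
# Then browse to http://<node-ip>:30090
```

If the cluster's network plugin enforces NetworkPolicy, the prometheus-k8s NetworkPolicy created in the previous step may also block this traffic and would then need an additional ingress rule.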