Hong Kong Kubernetes environment configuration

Added cilium-agent configuration: ipMasqAgent.enabled=true and nonMasqueradeCIDRs

- Deployment command

helm install cilium cilium/cilium --version 1.9.10 \
  --namespace kube-system \
  --set tunnel=disabled \
  --set kubeProxyReplacement=strict \
  --set hostServices.enabled=true \
  --set ipMasqAgent.enabled=true \
  --set prometheus.enabled=true \
  --set operator.prometheus.enabled=true \
  --set nativeRoutingCIDR=172.20.0.0/20 \
  --set ipam.mode=kubernetes \
  --set ipam.operator.clusterPoolIPv4PodCIDR=172.20.0.0/20 \
  --set ipam.operator.clusterPoolIPv4MaskSize=24 \
  --set k8sServiceHost=172.19.1.119 \
  --set k8sServicePort=6443
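The address arithmetic behind these values can be checked with a short Python sketch. It assumes each node receives a /24 out of 172.20.0.0/20, which matches the mask size passed above and the per-node prefix (172.20.0.0/24) that the agent reports in its log. The variable names are illustrative only and are not part of Cilium or Helm.

```python
import ipaddress

# Values taken from the helm command above.
native_routing_cidr = ipaddress.ip_network("172.20.0.0/20")
node_mask_size = 24  # assumption: one /24 per node

# Split the /20 into the per-node /24 subnets it can hold.
node_subnets = list(native_routing_cidr.subnets(new_prefix=node_mask_size))
print("per-node subnets available:", len(node_subnets))
print("first:", node_subnets[0], "last:", node_subnets[-1])

# The prefix reported for node hk-k8s-cp must sit inside the native routing CIDR,
# otherwise pod traffic between nodes would be masqueraded.
node_prefix = ipaddress.ip_network("172.20.0.0/24")
print("node prefix inside native routing CIDR:", node_prefix.subnet_of(native_routing_cidr))
```

With a /20 and /24 node subnets, the pod range supports at most 16 nodes, which is worth keeping in mind before the cluster grows.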

- cilium-agent log output

<root@HK-K8S-CP ~># docker logs -f a57 level=info msg="Skipped reading configuration file" reason="Config File \"ciliumd\" Not Found in \"[/root]\"" subsys=config level=info msg="Started gops server" address="127.0.0.1:9890" subsys=daemon level=info msg="Memory available for map entries (0.003% of 8198389760B): 20495974B" subsys=config level=info msg="option bpf-ct-global-tcp-max set by dynamic sizing to 131072" subsys=config level=info msg="option bpf-ct-global-any-max set by dynamic sizing to 65536" subsys=config level=info msg="option bpf-nat-global-max set by dynamic sizing to 131072" subsys=config level=info msg="option bpf-neigh-global-max set by dynamic sizing to 131072" subsys=config level=info msg="option bpf-sock-rev-map-max set by dynamic sizing to 65536" subsys=config level=info msg=" --agent-health-port='9876'" subsys=daemon level=info msg=" --agent-labels=''" subsys=daemon level=info msg=" --allow-icmp-frag-needed='true'" subsys=daemon level=info msg=" --allow-localhost='auto'" subsys=daemon level=info msg=" --annotate-k8s-node='true'" subsys=daemon level=info msg=" --api-rate-limit='map[]'" subsys=daemon level=info msg=" --arping-refresh-period='5m0s'" subsys=daemon level=info msg=" --auto-create-cilium-node-resource='true'" subsys=daemon level=info msg=" --auto-direct-node-routes='false'" subsys=daemon level=info msg=" --blacklist-conflicting-routes='false'" subsys=daemon level=info msg=" --bpf-compile-debug='false'" subsys=daemon level=info msg=" --bpf-ct-global-any-max='262144'" subsys=daemon level=info msg=" --bpf-ct-global-tcp-max='524288'" subsys=daemon level=info msg=" --bpf-ct-timeout-regular-any='1m0s'" subsys=daemon level=info msg=" --bpf-ct-timeout-regular-tcp='6h0m0s'" subsys=daemon level=info msg=" --bpf-ct-timeout-regular-tcp-fin='10s'" subsys=daemon level=info msg=" --bpf-ct-timeout-regular-tcp-syn='1m0s'" subsys=daemon level=info msg=" --bpf-ct-timeout-service-any='1m0s'" subsys=daemon level=info msg=" 
--bpf-ct-timeout-service-tcp='6h0m0s'" subsys=daemon level=info msg=" --bpf-fragments-map-max='8192'" subsys=daemon level=info msg=" --bpf-lb-acceleration='disabled'" subsys=daemon level=info msg=" --bpf-lb-algorithm='random'" subsys=daemon level=info msg=" --bpf-lb-maglev-hash-seed='JLfvgnHc2kaSUFaI'" subsys=daemon level=info msg=" --bpf-lb-maglev-table-size='16381'" subsys=daemon level=info msg=" --bpf-lb-map-max='65536'" subsys=daemon level=info msg=" --bpf-lb-mode='snat'" subsys=daemon level=info msg=" --bpf-map-dynamic-size-ratio='0.0025'" subsys=daemon level=info msg=" --bpf-nat-global-max='524288'" subsys=daemon level=info msg=" --bpf-neigh-global-max='524288'" subsys=daemon level=info msg=" --bpf-policy-map-max='16384'" subsys=daemon level=info msg=" --bpf-root=''" subsys=daemon level=info msg=" --bpf-sock-rev-map-max='262144'" subsys=daemon level=info msg=" --certificates-directory='/var/run/cilium/certs'" subsys=daemon level=info msg=" --cgroup-root='/run/cilium/cgroupv2'" subsys=daemon level=info msg=" --cluster-id=''" subsys=daemon level=info msg=" --cluster-name='default'" subsys=daemon level=info msg=" --clustermesh-config='/var/lib/cilium/clustermesh/'" subsys=daemon level=info msg=" --cmdref=''" subsys=daemon level=info msg=" --config=''" subsys=daemon level=info msg=" --config-dir='/tmp/cilium/config-map'" subsys=daemon level=info msg=" --conntrack-gc-interval='0s'" subsys=daemon level=info msg=" --crd-wait-timeout='5m0s'" subsys=daemon level=info msg=" --datapath-mode='veth'" subsys=daemon level=info msg=" --debug='false'" subsys=daemon level=info msg=" --debug-verbose=''" subsys=daemon level=info msg=" --device=''" subsys=daemon level=info msg=" --devices=''" subsys=daemon level=info msg=" --direct-routing-device=''" subsys=daemon level=info msg=" --disable-cnp-status-updates='true'" subsys=daemon level=info msg=" --disable-conntrack='false'" subsys=daemon level=info msg=" --disable-endpoint-crd='false'" subsys=daemon level=info msg=" 
--disable-envoy-version-check='false'" subsys=daemon level=info msg=" --disable-iptables-feeder-rules=''" subsys=daemon level=info msg=" --dns-max-ips-per-restored-rule='1000'" subsys=daemon level=info msg=" --egress-masquerade-interfaces=''" subsys=daemon level=info msg=" --egress-multi-home-ip-rule-compat='false'" subsys=daemon level=info msg=" --enable-auto-protect-node-port-range='true'" subsys=daemon level=info msg=" --enable-bandwidth-manager='false'" subsys=daemon level=info msg=" --enable-bpf-clock-probe='true'" subsys=daemon level=info msg=" --enable-bpf-masquerade='true'" subsys=daemon level=info msg=" --enable-bpf-tproxy='false'" subsys=daemon level=info msg=" --enable-endpoint-health-checking='true'" subsys=daemon level=info msg=" --enable-endpoint-routes='false'" subsys=daemon level=info msg=" --enable-external-ips='true'" subsys=daemon level=info msg=" --enable-health-check-nodeport='true'" subsys=daemon level=info msg=" --enable-health-checking='true'" subsys=daemon level=info msg=" --enable-host-firewall='false'" subsys=daemon level=info msg=" --enable-host-legacy-routing='false'" subsys=daemon level=info msg=" --enable-host-port='true'" subsys=daemon level=info msg=" --enable-host-reachable-services='true'" subsys=daemon level=info msg=" --enable-hubble='true'" subsys=daemon level=info msg=" --enable-identity-mark='true'" subsys=daemon level=info msg=" --enable-ip-masq-agent='true'" subsys=daemon level=info msg=" --enable-ipsec='false'" subsys=daemon level=info msg=" --enable-ipv4='true'" subsys=daemon level=info msg=" --enable-ipv4-fragment-tracking='true'" subsys=daemon level=info msg=" --enable-ipv6='false'" subsys=daemon level=info msg=" --enable-ipv6-ndp='false'" subsys=daemon level=info msg=" --enable-k8s-api-discovery='false'" subsys=daemon level=info msg=" --enable-k8s-endpoint-slice='true'" subsys=daemon level=info msg=" --enable-k8s-event-handover='false'" subsys=daemon level=info msg=" --enable-l7-proxy='true'" subsys=daemon level=info 
msg=" --enable-local-node-route='true'" subsys=daemon level=info msg=" --enable-local-redirect-policy='false'" subsys=daemon level=info msg=" --enable-monitor='true'" subsys=daemon level=info msg=" --enable-node-port='false'" subsys=daemon level=info msg=" --enable-policy='default'" subsys=daemon level=info msg=" --enable-remote-node-identity='true'" subsys=daemon level=info msg=" --enable-selective-regeneration='true'" subsys=daemon level=info msg=" --enable-session-affinity='true'" subsys=daemon level=info msg=" --enable-svc-source-range-check='true'" subsys=daemon level=info msg=" --enable-tracing='false'" subsys=daemon level=info msg=" --enable-well-known-identities='false'" subsys=daemon level=info msg=" --enable-xt-socket-fallback='true'" subsys=daemon level=info msg=" --encrypt-interface=''" subsys=daemon level=info msg=" --encrypt-node='false'" subsys=daemon level=info msg=" --endpoint-interface-name-prefix='lxc+'" subsys=daemon level=info msg=" --endpoint-queue-size='25'" subsys=daemon level=info msg=" --endpoint-status=''" subsys=daemon level=info msg=" --envoy-log=''" subsys=daemon level=info msg=" --exclude-local-address=''" subsys=daemon level=info msg=" --fixed-identity-mapping='map[]'" subsys=daemon level=info msg=" --flannel-master-device=''" subsys=daemon level=info msg=" --flannel-uninstall-on-exit='false'" subsys=daemon level=info msg=" --force-local-policy-eval-at-source='true'" subsys=daemon level=info msg=" --gops-port='9890'" subsys=daemon level=info msg=" --host-reachable-services-protos='tcp,udp'" subsys=daemon level=info msg=" --http-403-msg=''" subsys=daemon level=info msg=" --http-idle-timeout='0'" subsys=daemon level=info msg=" --http-max-grpc-timeout='0'" subsys=daemon level=info msg=" --http-normalize-path='true'" subsys=daemon level=info msg=" --http-request-timeout='3600'" subsys=daemon level=info msg=" --http-retry-count='3'" subsys=daemon level=info msg=" --http-retry-timeout='0'" subsys=daemon level=info msg=" 
--hubble-disable-tls='false'" subsys=daemon level=info msg=" --hubble-event-queue-size='0'" subsys=daemon level=info msg=" --hubble-flow-buffer-size='4095'" subsys=daemon level=info msg=" --hubble-listen-address=':4244'" subsys=daemon level=info msg=" --hubble-metrics=''" subsys=daemon level=info msg=" --hubble-metrics-server=''" subsys=daemon level=info msg=" --hubble-socket-path='/var/run/cilium/hubble.sock'" subsys=daemon level=info msg=" --hubble-tls-cert-file='/var/lib/cilium/tls/hubble/server.crt'" subsys=daemon level=info msg=" --hubble-tls-client-ca-files='/var/lib/cilium/tls/hubble/client-ca.crt'" subsys=daemon level=info msg=" --hubble-tls-key-file='/var/lib/cilium/tls/hubble/server.key'" subsys=daemon level=info msg=" --identity-allocation-mode='crd'" subsys=daemon level=info msg=" --identity-change-grace-period='5s'" subsys=daemon level=info msg=" --install-iptables-rules='true'" subsys=daemon level=info msg=" --ip-allocation-timeout='2m0s'" subsys=daemon level=info msg=" --ip-masq-agent-config-path='/etc/config/ip-masq-agent'" subsys=daemon level=info msg=" --ipam='kubernetes'" subsys=daemon level=info msg=" --ipsec-key-file=''" subsys=daemon level=info msg=" --iptables-lock-timeout='5s'" subsys=daemon level=info msg=" --iptables-random-fully='false'" subsys=daemon level=info msg=" --ipv4-node='auto'" subsys=daemon level=info msg=" --ipv4-pod-subnets=''" subsys=daemon level=info msg=" --ipv4-range='auto'" subsys=daemon level=info msg=" --ipv4-service-loopback-address='169.254.42.1'" subsys=daemon level=info msg=" --ipv4-service-range='auto'" subsys=daemon level=info msg=" --ipv6-cluster-alloc-cidr='f00d::/64'" subsys=daemon level=info msg=" --ipv6-mcast-device=''" subsys=daemon level=info msg=" --ipv6-node='auto'" subsys=daemon level=info msg=" --ipv6-pod-subnets=''" subsys=daemon level=info msg=" --ipv6-range='auto'" subsys=daemon level=info msg=" --ipv6-service-range='auto'" subsys=daemon level=info msg=" --ipvlan-master-device='undefined'" 
subsys=daemon level=info msg=" --join-cluster='false'" subsys=daemon level=info msg=" --k8s-api-server=''" subsys=daemon level=info msg=" --k8s-force-json-patch='false'" subsys=daemon level=info msg=" --k8s-heartbeat-timeout='30s'" subsys=daemon level=info msg=" --k8s-kubeconfig-path=''" subsys=daemon level=info msg=" --k8s-namespace='kube-system'" subsys=daemon level=info msg=" --k8s-require-ipv4-pod-cidr='false'" subsys=daemon level=info msg=" --k8s-require-ipv6-pod-cidr='false'" subsys=daemon level=info msg=" --k8s-service-cache-size='128'" subsys=daemon level=info msg=" --k8s-service-proxy-name=''" subsys=daemon level=info msg=" --k8s-sync-timeout='3m0s'" subsys=daemon level=info msg=" --k8s-watcher-endpoint-selector='metadata.name!=kube-scheduler,metadata.name!=kube-controller-manager,metadata.name!=etcd-operator,metadata.name!=gcp-controller-manager'" subsys=daemon level=info msg=" --k8s-watcher-queue-size='1024'" subsys=daemon level=info msg=" --keep-config='false'" subsys=daemon level=info msg=" --kube-proxy-replacement='strict'" subsys=daemon level=info msg=" --kube-proxy-replacement-healthz-bind-address=''" subsys=daemon level=info msg=" --kvstore=''" subsys=daemon level=info msg=" --kvstore-connectivity-timeout='2m0s'" subsys=daemon level=info msg=" --kvstore-lease-ttl='15m0s'" subsys=daemon level=info msg=" --kvstore-opt='map[]'" subsys=daemon level=info msg=" --kvstore-periodic-sync='5m0s'" subsys=daemon level=info msg=" --label-prefix-file=''" subsys=daemon level=info msg=" --labels=''" subsys=daemon level=info msg=" --lib-dir='/var/lib/cilium'" subsys=daemon level=info msg=" --log-driver=''" subsys=daemon level=info msg=" --log-opt='map[]'" subsys=daemon level=info msg=" --log-system-load='false'" subsys=daemon level=info msg=" --masquerade='true'" subsys=daemon level=info msg=" --max-controller-interval='0'" subsys=daemon level=info msg=" --metrics=''" subsys=daemon level=info msg=" --monitor-aggregation='medium'" subsys=daemon level=info msg=" 
--monitor-aggregation-flags='all'" subsys=daemon level=info msg=" --monitor-aggregation-interval='5s'" subsys=daemon level=info msg=" --monitor-queue-size='0'" subsys=daemon level=info msg=" --mtu='0'" subsys=daemon level=info msg=" --nat46-range='0:0:0:0:0:FFFF::/96'" subsys=daemon level=info msg=" --native-routing-cidr='172.20.0.0/20'" subsys=daemon level=info msg=" --node-port-acceleration='disabled'" subsys=daemon level=info msg=" --node-port-algorithm='random'" subsys=daemon level=info msg=" --node-port-bind-protection='true'" subsys=daemon level=info msg=" --node-port-mode='snat'" subsys=daemon level=info msg=" --node-port-range='30000,32767'" subsys=daemon level=info msg=" --policy-audit-mode='false'" subsys=daemon level=info msg=" --policy-queue-size='100'" subsys=daemon level=info msg=" --policy-trigger-interval='1s'" subsys=daemon level=info msg=" --pprof='false'" subsys=daemon level=info msg=" --preallocate-bpf-maps='false'" subsys=daemon level=info msg=" --prefilter-device='undefined'" subsys=daemon level=info msg=" --prefilter-mode='native'" subsys=daemon level=info msg=" --prepend-iptables-chains='true'" subsys=daemon level=info msg=" --prometheus-serve-addr=':9090'" subsys=daemon level=info msg=" --proxy-connect-timeout='1'" subsys=daemon level=info msg=" --proxy-prometheus-port='9095'" subsys=daemon level=info msg=" --read-cni-conf=''" subsys=daemon level=info msg=" --restore='true'" subsys=daemon level=info msg=" --sidecar-istio-proxy-image='cilium/istio_proxy'" subsys=daemon level=info msg=" --single-cluster-route='false'" subsys=daemon level=info msg=" --skip-crd-creation='false'" subsys=daemon level=info msg=" --socket-path='/var/run/cilium/cilium.sock'" subsys=daemon level=info msg=" --sockops-enable='false'" subsys=daemon level=info msg=" --state-dir='/var/run/cilium'" subsys=daemon level=info msg=" --tofqdns-dns-reject-response-code='refused'" subsys=daemon level=info msg=" --tofqdns-enable-dns-compression='true'" subsys=daemon level=info 
msg=" --tofqdns-endpoint-max-ip-per-hostname='50'" subsys=daemon level=info msg=" --tofqdns-idle-connection-grace-period='0s'" subsys=daemon level=info msg=" --tofqdns-max-deferred-connection-deletes='10000'" subsys=daemon level=info msg=" --tofqdns-min-ttl='0'" subsys=daemon level=info msg=" --tofqdns-pre-cache=''" subsys=daemon level=info msg=" --tofqdns-proxy-port='0'" subsys=daemon level=info msg=" --tofqdns-proxy-response-max-delay='100ms'" subsys=daemon level=info msg=" --trace-payloadlen='128'" subsys=daemon level=info msg=" --tunnel='disabled'" subsys=daemon level=info msg=" --version='false'" subsys=daemon level=info msg=" --write-cni-conf-when-ready=''" subsys=daemon level=info msg=" _ _ _" subsys=daemon level=info msg=" ___|_| |_|_ _ _____" subsys=daemon level=info msg="| _| | | | | | |" subsys=daemon level=info msg="|___|_|_|_|___|_|_|_|" subsys=daemon level=info msg="Cilium 1.9.10 4e26039 2021-09-01T12:57:41-07:00 go version go1.15.15 linux/amd64" subsys=daemon level=info msg="cilium-envoy version: 9b1701da9cc035a1696f3e492ee2526101262e56/1.18.4/Distribution/RELEASE/BoringSSL" subsys=daemon level=info msg="clang (10.0.0) and kernel (5.11.1) versions: OK!" subsys=linux-datapath level=info msg="linking environment: OK!" subsys=linux-datapath level=warning msg="================================= WARNING ==========================================" subsys=bpf level=warning msg="BPF filesystem is not mounted. This will lead to network disruption when Cilium pods" subsys=bpf level=warning msg="are restarted. Ensure that the BPF filesystem is mounted in the host." 
subsys=bpf level=warning msg="https://docs.cilium.io/en/stable/operations/system_requirements/#mounted-ebpf-filesystem" subsys=bpf level=warning msg="====================================================================================" subsys=bpf level=info msg="Mounting BPF filesystem at /sys/fs/bpf" subsys=bpf level=info msg="Mounted cgroupv2 filesystem at /run/cilium/cgroupv2" subsys=cgroups level=info msg="Parsing base label prefixes from default label list" subsys=labels-filter level=info msg="Parsing additional label prefixes from user inputs: []" subsys=labels-filter level=info msg="Final label prefixes to be used for identity evaluation:" subsys=labels-filter level=info msg=" - reserved:.*" subsys=labels-filter level=info msg=" - :io.kubernetes.pod.namespace" subsys=labels-filter level=info msg=" - :io.cilium.k8s.namespace.labels" subsys=labels-filter level=info msg=" - :app.kubernetes.io" subsys=labels-filter level=info msg=" - !:io.kubernetes" subsys=labels-filter level=info msg=" - !:kubernetes.io" subsys=labels-filter level=info msg=" - !:.*beta.kubernetes.io" subsys=labels-filter level=info msg=" - !:k8s.io" subsys=labels-filter level=info msg=" - !:pod-template-generation" subsys=labels-filter level=info msg=" - !:pod-template-hash" subsys=labels-filter level=info msg=" - !:controller-revision-hash" subsys=labels-filter level=info msg=" - !:annotation.*" subsys=labels-filter level=info msg=" - !:etcd_node" subsys=labels-filter level=info msg="Auto-disabling \"enable-bpf-clock-probe\" feature since KERNEL_HZ cannot be determined" error="Cannot probe CONFIG_HZ" subsys=daemon level=info msg="Using autogenerated IPv4 allocation range" subsys=node v4Prefix=10.119.0.0/16 level=info msg="Initializing daemon" subsys=daemon level=info msg="Establishing connection to apiserver" host="https://172.19.1.119:6443" subsys=k8s level=info msg="Connected to apiserver" subsys=k8s level=info msg="Trying to auto-enable \"enable-node-port\", \"enable-external-ips\", 
\"enable-host-reachable-services\", \"enable-host-port\", \"enable-session-affinity\" features" subsys=daemon level=info msg="Inheriting MTU from external network interface" device=eth0 ipAddr=172.19.1.119 mtu=1500 subsys=mtu level=info msg="Restored services from maps" failed=0 restored=0 subsys=service level=info msg="Reading old endpoints..." subsys=daemon level=info msg="No old endpoints found." subsys=daemon level=info msg="Envoy: Starting xDS gRPC server listening on /var/run/cilium/xds.sock" subsys=envoy-manager level=info msg="Waiting until all Cilium CRDs are available" subsys=k8s level=info msg="All Cilium CRDs have been found and are available" subsys=k8s level=info msg="Retrieved node information from kubernetes node" nodeName=hk-k8s-cp subsys=k8s level=info msg="Received own node information from API server" ipAddr.ipv4=172.19.1.119 ipAddr.ipv6="<nil>" k8sNodeIP=172.19.1.119 labels="map[beta.kubernetes.io/arch:amd64 beta.kubernetes.io/os:linux kubernetes.io/arch:amd64 kubernetes.io/hostname:hk-k8s-cp kubernetes.io/os:linux node-role.kubernetes.io/master:]" nodeName=hk-k8s-cp subsys=k8s v4Prefix=172.20.0.0/24 v6Prefix="<nil>" level=info msg="Restored router IPs from node information" ipv4=172.20.0.56 ipv6="<nil>" subsys=k8s level=info msg="k8s mode: Allowing localhost to reach local endpoints" subsys=daemon level=info msg="Using auto-derived devices to attach Loadbalancer, Host Firewall or Bandwidth Manager program" devices="[eth0]" directRoutingDevice=eth0 subsys=daemon level=info msg="Enabling k8s event listener" subsys=k8s-watcher level=info msg="Removing stale endpoint interfaces" subsys=daemon level=info msg="Skipping kvstore configuration" subsys=daemon level=info msg="Initializing node addressing" subsys=daemon level=info msg="Initializing kubernetes IPAM" subsys=ipam v4Prefix=172.20.0.0/24 v6Prefix="<nil>" level=info msg="Restoring endpoints..." 
subsys=daemon level=info msg="Endpoints restored" failed=0 restored=0 subsys=daemon level=info msg="Addressing information:" subsys=daemon level=info msg=" Cluster-Name: default" subsys=daemon level=info msg=" Cluster-ID: 0" subsys=daemon level=info msg=" Local node-name: hk-k8s-cp" subsys=daemon level=info msg=" Node-IPv6: <nil>" subsys=daemon level=info msg=" External-Node IPv4: 172.19.1.119" subsys=daemon level=info msg=" Internal-Node IPv4: 172.20.0.56" subsys=daemon level=info msg=" IPv4 allocation prefix: 172.20.0.0/24" subsys=daemon level=info msg=" IPv4 native routing prefix: 172.20.0.0/20" subsys=daemon level=info msg=" Loopback IPv4: 169.254.42.1" subsys=daemon level=info msg=" Local IPv4 addresses:" subsys=daemon level=info msg=" - 172.19.1.119" subsys=daemon level=info msg="Creating or updating CiliumNode resource" node=hk-k8s-cp subsys=nodediscovery level=info msg="Waiting until all pre-existing resources related to policy have been received" subsys=k8s-watcher level=info msg="Adding local node to cluster" node="{hk-k8s-cp default [{InternalIP 172.19.1.119} {CiliumInternalIP 172.20.0.56}] 172.20.0.0/24 <nil> 172.20.0.244 <nil> 0 local 0 map[beta.kubernetes.io/arch:amd64 beta.kubernetes.io/os:linux kubernetes.io/arch:amd64 kubernetes.io/hostname:hk-k8s-cp kubernetes.io/os:linux node-role.kubernetes.io/master:] 6}" subsys=nodediscovery level=info msg="All pre-existing resources related to policy have been received; continuing" subsys=k8s-watcher level=info msg="Successfully created CiliumNode resource" subsys=nodediscovery level=info msg="Annotating k8s node" subsys=daemon v4CiliumHostIP.IPv4=172.20.0.56 v4Prefix=172.20.0.0/24 v4healthIP.IPv4=172.20.0.244 v6CiliumHostIP.IPv6="<nil>" v6Prefix="<nil>" v6healthIP.IPv6="<nil>" level=info msg="Initializing identity allocator" subsys=identity-cache level=info msg="Cluster-ID is not specified, skipping ClusterMesh initialization" subsys=daemon level=info msg="Setting up BPF datapath" bpfClockSource=ktime 
bpfInsnSet=v3 subsys=datapath-loader level=info msg="Setting sysctl" subsys=datapath-loader sysParamName=net.core.bpf_jit_enable sysParamValue=1 level=info msg="Setting sysctl" subsys=datapath-loader sysParamName=net.ipv4.conf.all.rp_filter sysParamValue=0 level=info msg="Setting sysctl" subsys=datapath-loader sysParamName=kernel.unprivileged_bpf_disabled sysParamValue=1 level=info msg="Setting sysctl" subsys=datapath-loader sysParamName=kernel.timer_migration sysParamValue=0 level=info msg="Adding new proxy port rules for cilium-dns-egress:41605" proxy port name=cilium-dns-egress subsys=proxy level=info msg="Serving cilium node monitor v1.2 API at unix:///var/run/cilium/monitor1_2.sock" subsys=monitor-agent level=info msg="Validating configured node address ranges" subsys=daemon level=info msg="Starting connection tracking garbage collector" subsys=daemon level=info msg="Starting IP identity watcher" subsys=ipcache level=info msg="Initial scan of connection tracking completed" subsys=ct-gc level=info msg="Regenerating restored endpoints" numRestored=0 subsys=daemon level=info msg="Datapath signal listener running" subsys=signal level=info msg="Creating host endpoint" subsys=daemon level=info msg="New endpoint" containerID= datapathPolicyRevision=0 desiredPolicyRevision=0 endpointID=189 ipv4= ipv6= k8sPodName=/ subsys=endpoint level=info msg="Resolving identity labels (blocking)" containerID= datapathPolicyRevision=0 desiredPolicyRevision=0 endpointID=189 identityLabels="k8s:node-role.kubernetes.io/master,reserved:host" ipv4= ipv6= k8sPodName=/ subsys=endpoint level=info msg="Identity of endpoint changed" containerID= datapathPolicyRevision=0 desiredPolicyRevision=0 endpointID=189 identity=1 identityLabels="k8s:node-role.kubernetes.io/master,reserved:host" ipv4= ipv6= k8sPodName=/ oldIdentity="no identity" subsys=endpoint level=info msg="Config file not found" file-path=/etc/config/ip-masq-agent subsys=ipmasq level=info msg="Adding CIDR" cidr=192.0.2.0/24 
subsys=ipmasq level=info msg="Adding CIDR" cidr=169.254.0.0/16 subsys=ipmasq level=info msg="Adding CIDR" cidr=192.168.0.0/16 subsys=ipmasq level=info msg="Adding CIDR" cidr=172.16.0.0/12 subsys=ipmasq level=info msg="Adding CIDR" cidr=203.0.113.0/24 subsys=ipmasq level=info msg="Adding CIDR" cidr=198.51.100.0/24 subsys=ipmasq level=info msg="Adding CIDR" cidr=240.0.0.0/4 subsys=ipmasq level=info msg="Adding CIDR" cidr=10.0.0.0/8 subsys=ipmasq level=info msg="Adding CIDR" cidr=100.64.0.0/10 subsys=ipmasq level=info msg="Adding CIDR" cidr=192.0.0.0/24 subsys=ipmasq level=info msg="Adding CIDR" cidr=192.88.99.0/24 subsys=ipmasq level=info msg="Adding CIDR" cidr=198.18.0.0/15 subsys=ipmasq level=info msg="Launching Cilium health daemon" subsys=daemon level=info msg="Finished regenerating restored endpoints" regenerated=0 subsys=daemon total=0 level=info msg="Launching Cilium health endpoint" subsys=daemon level=info msg="Serving prometheus metrics on :9090" subsys=daemon level=info msg="Started healthz status API server" address="127.0.0.1:9876" subsys=daemon level=info msg="Initializing Cilium API" subsys=daemon level=info msg="Daemon initialization completed" bootstrapTime=6.959436933s subsys=daemon level=info msg="Serving cilium API at unix:///var/run/cilium/cilium.sock" subsys=daemon level=info msg="Configuring Hubble server" eventQueueSize=2048 maxFlows=4095 subsys=hubble level=info msg="Starting local Hubble server" address="unix:///var/run/cilium/hubble.sock" subsys=hubble level=info msg="Beginning to read perf buffer" startTime="2021-09-13 11:41:58.562153566 +0000 UTC m=+7.289934686" subsys=monitor-agent level=info msg="Starting Hubble server" address=":4244" subsys=hubble level=info msg="Processing API request with rate limiter" name=endpoint-delete parallelRequests=4 subsys=rate uuid=9aa729a2-1487-11ec-9904-00163e00720e level=info msg="API request released by rate limiter" name=endpoint-delete parallelRequests=4 subsys=rate 
uuid=9aa729a2-1487-11ec-9904-00163e00720e waitDurationTotal="28.371µs" level=info msg="Delete endpoint request" id="container-id:a2df965a66e948eef94b7d25e321ef73cfe3eb49886f467cf6c8c5454014d5c5" subsys=daemon level=info msg="API call has been processed" error="endpoint not found" name=endpoint-delete processingDuration="15.421µs" subsys=rate totalDuration="59.237µs" uuid=9aa729a2-1487-11ec-9904-00163e00720e waitDurationTotal="28.371µs" level=info msg="regenerating all endpoints" reason="one or more identities created or deleted" subsys=endpoint-manager level=info msg="Processing API request with rate limiter" maxWaitDuration=15s name=endpoint-create parallelRequests=4 rateLimiterSkipped=true subsys=rate uuid=9af62004-1487-11ec-9904-00163e00720e level=info msg="API request released by rate limiter" maxWaitDuration=15s name=endpoint-create parallelRequests=4 rateLimiterSkipped=true subsys=rate uuid=9af62004-1487-11ec-9904-00163e00720e waitDurationTotal=0s level=info msg="Create endpoint request" addressing="&{172.20.0.57 9af4e464-1487-11ec-9904-00163e00720e }" containerID=7bf8f586668e5df8c0a23d8a053a8e78704ca0cfa1c5c72416fe6722d91ac84b datapathConfiguration="<nil>" interface=lxc9bcea339fc25 k8sPodName=kube-system/coredns-8677bddd54-88k5q labels="[]" subsys=daemon sync-build=true level=info msg="New endpoint" containerID= datapathPolicyRevision=0 desiredPolicyRevision=0 endpointID=2818 ipv4= ipv6= k8sPodName=/ subsys=endpoint level=info msg="Resolving identity labels (blocking)" containerID= datapathPolicyRevision=0 desiredPolicyRevision=0 endpointID=2818 identityLabels="k8s:io.cilium.k8s.policy.cluster=default,k8s:io.cilium.k8s.policy.serviceaccount=coredns,k8s:io.kubernetes.pod.namespace=kube-system,k8s:k8s-app=kube-dns" ipv4= ipv6= k8sPodName=/ subsys=endpoint level=info msg="Skipped non-kubernetes labels when labelling ciliumidentity. 
All labels will still be used in identity determination" labels="map[]" subsys=crd-allocator level=info msg="Allocated new global key" key="k8s:io.cilium.k8s.policy.cluster=default;k8s:io.cilium.k8s.policy.serviceaccount=coredns;k8s:io.kubernetes.pod.namespace=kube-system;k8s:k8s-app=kube-dns;" subsys=allocator level=info msg="Identity of endpoint changed" containerID= datapathPolicyRevision=0 desiredPolicyRevision=0 endpointID=2818 identity=33064 identityLabels="k8s:io.cilium.k8s.policy.cluster=default,k8s:io.cilium.k8s.policy.serviceaccount=coredns,k8s:io.kubernetes.pod.namespace=kube-system,k8s:k8s-app=kube-dns" ipv4= ipv6= k8sPodName=/ oldIdentity="no identity" subsys=endpoint level=info msg="Waiting for endpoint to be generated" containerID= datapathPolicyRevision=0 desiredPolicyRevision=0 endpointID=2818 identity=33064 ipv4= ipv6= k8sPodName=/ subsys=endpoint level=info msg="New endpoint" containerID= datapathPolicyRevision=0 desiredPolicyRevision=0 endpointID=515 ipv4= ipv6= k8sPodName=/ subsys=endpoint level=info msg="Resolving identity labels (blocking)" containerID= datapathPolicyRevision=0 desiredPolicyRevision=0 endpointID=515 identityLabels="reserved:health" ipv4= ipv6= k8sPodName=/ subsys=endpoint level=info msg="Identity of endpoint changed" containerID= datapathPolicyRevision=0 desiredPolicyRevision=0 endpointID=515 identity=4 identityLabels="reserved:health" ipv4= ipv6= k8sPodName=/ oldIdentity="no identity" subsys=endpoint level=info msg="regenerating all endpoints" reason="one or more identities created or deleted" subsys=endpoint-manager level=info msg="Compiled new BPF template" BPFCompilationTime=2.71807356s file-path=/var/run/cilium/state/templates/8b59ba8fa9a5a85409ec928c85a1818cf2a7da87/bpf_host.o subsys=datapath-loader level=info msg="regenerating all endpoints" reason="one or more identities created or deleted" subsys=endpoint-manager level=info msg="Compiled new BPF template" BPFCompilationTime=2.434021821s 
file-path=/var/run/cilium/state/templates/700e8cafead597c02c98f7d229a013a21fda2b34/bpf_lxc.o subsys=datapath-loader level=info msg="Rewrote endpoint BPF program" containerID= datapathPolicyRevision=0 desiredPolicyRevision=1 endpointID=2818 identity=33064 ipv4= ipv6= k8sPodName=/ subsys=endpoint level=info msg="Successful endpoint creation" containerID= datapathPolicyRevision=1 desiredPolicyRevision=1 endpointID=2818 identity=33064 ipv4= ipv6= k8sPodName=/ subsys=daemon level=info msg="API call has been processed" name=endpoint-create processingDuration=2.764428582s subsys=rate totalDuration=2.764479213s uuid=9af62004-1487-11ec-9904-00163e00720e waitDurationTotal=0s level=info msg="regenerating all endpoints" reason="one or more identities created or deleted" subsys=endpoint-manager level=info msg="Rewrote endpoint BPF program" containerID= datapathPolicyRevision=0 desiredPolicyRevision=1 endpointID=515 identity=4 ipv4= ipv6= k8sPodName=/ subsys=endpoint level=info msg="Rewrote endpoint BPF program" containerID= datapathPolicyRevision=0 desiredPolicyRevision=1 endpointID=189 identity=1 ipv4= ipv6= k8sPodName=/ subsys=endpoint level=warning msg="Unable to update ipcache map entry on pod add" error="ipcache entry for podIP 172.20.0.57 owned by kvstore or agent" k8sNamespace=kube-system k8sPodName=coredns-8677bddd54-88k5q new-hostIP=172.20.0.57 new-podIP=172.20.0.57 new-podIPs="[{172.20.0.57}]" old-hostIP= old-podIP= old-podIPs="[]" subsys=k8s-watcher level=info msg="regenerating all endpoints" reason= subsys=endpoint-manager level=info msg="regenerating all endpoints" reason="one or more identities created or deleted" subsys=endpoint-manager level=info msg="Serving cilium health API at unix:///var/run/cilium/health.sock" subsys=health-server level=warning msg="Unable to update ipcache map entry on pod add" error="ipcache entry for podIP 172.20.0.57 owned by kvstore or agent" k8sNamespace=kube-system k8sPodName=coredns-8677bddd54-88k5q new-hostIP=172.20.0.57 
new-podIP=172.20.0.57 new-podIPs="[{172.20.0.57}]" old-hostIP=172.20.0.57 old-podIP=172.20.0.57 old-podIPs="[{172.20.0.57}]" subsys=k8s-watcher level=info msg="regenerating all endpoints" reason="one or more identities created or deleted" subsys=endpoint-manager level=info msg="regenerating all endpoints" reason="one or more identities created or deleted" subsys=endpoint-manager level=info msg="regenerating all endpoints" reason="one or more identities created or deleted" subsys=endpoint-manager
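The log reports that /etc/config/ip-masq-agent was not found, after which the ipmasq subsystem adds twelve CIDRs. The Python sketch below models, in a simplified way, what that list means for pod egress traffic: a destination inside any listed CIDR keeps the pod source IP, and anything else is masqueraded. The function name `is_masqueraded` is hypothetical, and the model ignores every other datapath rule (for example the native routing CIDR), so treat it as an illustration rather than a description of Cilium's implementation.

```python
import ipaddress

# CIDRs copied from the "Adding CIDR" lines in the agent log above.
NON_MASQUERADE_CIDRS = [
    "192.0.2.0/24", "169.254.0.0/16", "192.168.0.0/16", "172.16.0.0/12",
    "203.0.113.0/24", "198.51.100.0/24", "240.0.0.0/4", "10.0.0.0/8",
    "100.64.0.0/10", "192.0.0.0/24", "192.88.99.0/24", "198.18.0.0/15",
]
NETWORKS = [ipaddress.ip_network(cidr) for cidr in NON_MASQUERADE_CIDRS]


def is_masqueraded(destination: str) -> bool:
    """Return True when the destination is outside every non-masquerade CIDR."""
    addr = ipaddress.ip_address(destination)
    return not any(addr in net for net in NETWORKS)


# 172.19.1.119 is the node IP from the log; 8.8.8.8 stands in for an Internet host.
for dst in ("172.19.1.119", "172.20.0.57", "10.1.2.3", "8.8.8.8"):
    state = "masqueraded" if is_masqueraded(dst) else "not masqueraded"
    print(f"{dst}: {state}")
```

Because 172.16.0.0/12 is in the list, traffic from pods to the node network 172.19.x.x keeps its pod source address under this model, so those hosts need a route back to the pod CIDR.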

- Check the Cilium daemon status

root@HK-K8S-CP:/home/cilium# cilium status --verbose KVStore: Ok Disabled Kubernetes: Ok 1.18 (v1.18.5) [linux/amd64] Kubernetes APIs: ["cilium/v2::CiliumClusterwideNetworkPolicy", "cilium/v2::CiliumEndpoint", "cilium/v2::CiliumNetworkPolicy", "cilium/v2::CiliumNode", "core/v1::Namespace", "core/v1::Node", "core/v1::Pods", "core/v1::Service", "discovery/v1beta1::EndpointSlice", "networking.k8s.io/v1::NetworkPolicy"] KubeProxyReplacement: Strict [eth0 (Direct Routing)] Cilium: Ok 1.9.10 (v1.9.10-4e26039) NodeMonitor: Listening for events on 2 CPUs with 64x4096 of shared memory Cilium health daemon: Ok IPAM: IPv4: 3/255 allocated from 172.20.0.0/24, Allocated addresses: 172.20.0.244 (health) 172.20.0.56 (router) 172.20.0.57 (kube-system/coredns-8677bddd54-88k5q) BandwidthManager: Disabled Host Routing: BPF Masquerading: BPF (ip-masq-agent) [eth0] 172.20.0.0/20 Clock Source for BPF: ktime Controller Status: 22/22 healthy Name Last success Last error Count Message cilium-health-ep 16s ago never 0 no error dns-garbage-collector-job 23s ago never 0 no error endpoint-189-regeneration-recovery never never 0 no error endpoint-2818-regeneration-recovery never never 0 no error endpoint-515-regeneration-recovery never never 0 no error k8s-heartbeat 23s ago never 0 no error mark-k8s-node-as-available 6m17s ago never 0 no error metricsmap-bpf-prom-sync 3s ago never 0 no error neighbor-table-refresh 1m17s ago never 0 no error resolve-identity-189 1m17s ago never 0 no error resolve-identity-2818 1m16s ago never 0 no error resolve-identity-515 1m16s ago never 0 no error sync-endpoints-and-host-ips 17s ago never 0 no error sync-lb-maps-with-k8s-services 6m17s ago never 0 no error sync-policymap-189 28s ago never 0 no error sync-policymap-2818 28s ago never 0 no error sync-policymap-515 28s ago never 0 no error sync-to-k8s-ciliumendpoint (189) 7s ago never 0 no error sync-to-k8s-ciliumendpoint (2818) 6s ago never 0 no error sync-to-k8s-ciliumendpoint (515) 6s ago never 0 no error 
template-dir-watcher never never 0 no error update-k8s-node-annotations 6m22s ago never 0 no error Proxy Status: OK, ip 172.20.0.56, 0 redirects active on ports 10000-20000 Hubble: Ok Current/Max Flows: 1030/4096 (25.15%), Flows/s: 2.75 Metrics: Disabled KubeProxyReplacement Details: Status: Strict Protocols: TCP, UDP Devices: eth0 (Direct Routing) Mode: SNAT Backend Selection: Random Session Affinity: Enabled XDP Acceleration: Disabled Services: - ClusterIP: Enabled - NodePort: Enabled (Range: 30000-32767) - LoadBalancer: Enabled - externalIPs: Enabled - HostPort: Enabled BPF Maps: dynamic sizing: on (ratio: 0.002500) Name Size Non-TCP connection tracking 65536 TCP connection tracking 131072 Endpoint policy 65535 Events 2 IP cache 512000 IP masquerading agent 16384 IPv4 fragmentation 8192 IPv4 service 65536 IPv6 service 65536 IPv4 service backend 65536 IPv6 service backend 65536 IPv4 service reverse NAT 65536 IPv6 service reverse NAT 65536 Metrics 1024 NAT 131072 Neighbor table 131072 Global policy 16384 Per endpoint policy 65536 Session affinity 65536 Signal 2 Sockmap 65535 Sock reverse NAT 65536 Tunnel 65536 Cluster health: 4/4 reachable (2021-09-13T11:48:04Z) Name IP Node Endpoints hk-k8s-cp (localhost) 172.19.1.119 reachable reachable hk-k8s-wn1 172.19.1.120 reachable reachable hk-k8s-wn2 172.19.1.121 reachable reachable hk-k8s-wn3 172.19.1.122 reachable reachable

Advanced configuration

Preserving the source IP

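- externalTrafficPolicy=Local

Cilium preserves the client source address with eBPF. Unlike kube-proxy, for a NodePort service it does not need to open a TCP port on every node. A service can be reached from any node in the cluster, and a node with no local backend can still forward the request, without performing any SNAT.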

- externalTrafficPolicy=Cluster

Cluster is the Kubernetes default policy. Even with this policy there are several ways to preserve the source IP address; both DSR and Hybrid mode support it.
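As a minimal sketch of the Local policy described above, the following script writes a NodePort Service manifest that sets `externalTrafficPolicy: Local`. The Service name `demo-svc`, the `app: demo` selector, the port numbers, and the output path are hypothetical placeholders, not values taken from this cluster.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical output path; override it by exporting MANIFEST.
MANIFEST="${MANIFEST:-/tmp/demo-svc.yaml}"

# Write a NodePort Service that keeps the client source IP.
cat > "$MANIFEST" <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: demo-svc
spec:
  type: NodePort
  externalTrafficPolicy: Local
  selector:
    app: demo
  ports:
    - port: 80
      targetPort: 8080
EOF

echo "wrote $MANIFEST"
# Apply it against a real cluster with:
#   kubectl apply -f "$MANIFEST"
```

Changing the value to `Cluster` (or dropping the field) gives the default behaviour discussed in the second bullet.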

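Maglev consistent hashing

Maglev hashing applies only to external traffic. It does not apply to in-cluster forwarding, because for in-cluster service connections the backend is selected directly at the socket level.

When using Maglev hashing, pay attention to two options: maglev.tableSize and maglev.hashSeed.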

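- maglev.tableSize: sets the size of the per-service lookup table, written M (M here is just a number, not "mega"). As a rule of thumb, M >= 100 * N, where N is the maximum number of backends expected for a service.

The default maglev.tableSize is 16381. The supported values are 251, 509, 1021, 2039, 4093, 8191, 16381, 32749, 65521, and 131071. For example, with a tableSize of 16381 the maximum number of backends per service is roughly 16381 / 100 ≈ 160.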
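The sizing rule above can be sketched as a small helper that picks the smallest supported table size satisfying M >= 100 * N. The function name `pick_maglev_table_size` is made up for this example; only the list of sizes and the factor of 100 come from the text.

```shell
#!/usr/bin/env bash
# Pick the smallest supported maglev.tableSize M such that M >= 100 * N,
# where N is the expected maximum number of backends per service.
pick_maglev_table_size() {
  local backends="$1"
  local needed=$(( backends * 100 ))
  local size
  for size in 251 509 1021 2039 4093 8191 16381 32749 65521 131071; do
    if (( size >= needed )); then
      echo "$size"
      return 0
    fi
  done
  echo "no supported table size is >= ${needed}" >&2
  return 1
}

pick_maglev_table_size 160   # 160 * 100 = 16000, so this prints 16381
```

The result can then be passed to Helm as `--set maglev.tableSize=<value>`.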

- maglev.hashSeed

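The seed is a base64-encoded 12-byte random number. Generate it once with head -c12 /dev/urandom | base64 -w0. Every cilium-agent in the cluster must use the same seed; if the value differs between nodes, cilium-agent may not work correctly.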
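A minimal sketch of generating the seed with the `head -c12` command quoted above and checking that it decodes back to 12 bytes. The 12-byte length is assumed from that command, and the variable names are illustrative.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Generate 12 random bytes and base64-encode them without line wrapping
# (-w0 is a GNU coreutils option).
SEED="$(head -c12 /dev/urandom | base64 -w0)"

# Sanity check: the seed must decode back to exactly 12 bytes.
decoded_len="$(printf '%s' "$SEED" | base64 -d | wc -c | tr -d '[:space:]')"
if [ "$decoded_len" -ne 12 ]; then
  echo "unexpected seed length: ${decoded_len} bytes" >&2
  exit 1
fi

echo "maglev.hashSeed=${SEED}"
```

Generate the value once and reuse it for every node, rather than running the command separately per node.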

Enable Maglev as follows:

SEED=$(head -c12 /dev/urandom | base64 -w0)
helm install cilium cilium/cilium --version 1.9.4 \
  --namespace kube-system \
  --set kubeProxyReplacement=strict \
  --set loadBalancer.algorithm=maglev \
  --set maglev.tableSize=65521 \
  --set maglev.hashSeed=$SEED \
  --set k8sServiceHost=172.19.1.119 \
  --set k8sServicePort=6443

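Direct Server Return (DSR)

By default, Cilium's eBPF NodePort implementation works in SNAT mode. When external traffic arrives, the receiving node picks a backend for the NodePort service (or for a service with an external IP). If that backend is on another node, the receiving node SNATs the source address and forwards the request. The reply has to come back through the same node so the SNAT can be reversed before it reaches the client. This needs no MTU change, at the cost of that extra hop.

To avoid the extra hop, switch the load balancer mode to dsr. Replies then go straight from the backend to the client, using the service IP/port as the source address. DSR relies on a native-routing deployment to get the reply back to the original client.

In DSR mode the client's source address is preserved, but the MTU has to be adjusted, because the service IP/port information is carried inside the packet.

In public clouds, DSR depends heavily on the provider's underlay network. The underlay may drop the Cilium-specific IP information, in which case you need to switch back to SNAT mode.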

- To enable DSR mode:

helm install cilium cilium/cilium --version 1.9.4 \
  --namespace kube-system \
  --set tunnel=disabled \
  --set autoDirectNodeRoutes=true \
  --set kubeProxyReplacement=strict \
  --set loadBalancer.mode=dsr \
  --set k8sServiceHost=172.19.1.119 \
  --set k8sServicePort=6443
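The `cilium status --verbose` output shown earlier reports `Mode: SNAT` under "KubeProxyReplacement Details". As a rough sketch, the helper below extracts that field so the active mode can be checked after a change. The function name `lb_mode` is hypothetical, and it assumes the CLI prints one field per line; the exact value reported after enabling DSR is not shown in this document.

```shell
#!/usr/bin/env bash
# Print the value of the "Mode:" field from `cilium status --verbose` output
# read on stdin. Assumes one field per line, e.g. "  Mode:   SNAT".
lb_mode() {
  awk '$1 == "Mode:" { print $2; exit }'
}

# Example with a fragment modelled on the status output shown earlier.
printf '  Status:   Strict\n  Protocols:   TCP, UDP\n  Mode:   SNAT\n' | lb_mode   # prints SNAT

# Against a running agent (not executed here):
#   cilium status --verbose | lb_mode
```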

IPAM

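Purpose:

IPAM (IP Address Management) allocates and manages the IP addresses used by the network endpoints (containers and others) that Cilium manages. The officially supported IPAM modes are: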

- Cluster Scope (Default)

- Kubernetes Host Scope

- Azure IPAM

- AWS ENI

- Google Kubernetes Engine

- CRD-Backed


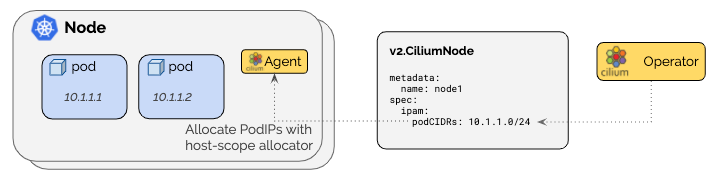

- Cluster scope

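Cluster scope assigns a PodCIDR to each node and uses a host-scope allocator on each node to hand out addresses. It is similar to Kubernetes Host Scope mode. The difference is that the per-node PodCIDRs are not assigned by Kubernetes through the v1.Node resource; instead, the Cilium operator manages them through the v2.CiliumNode resource. The advantage is that this mode does not depend on Kubernetes being configured to distribute per-node PodCIDRs.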

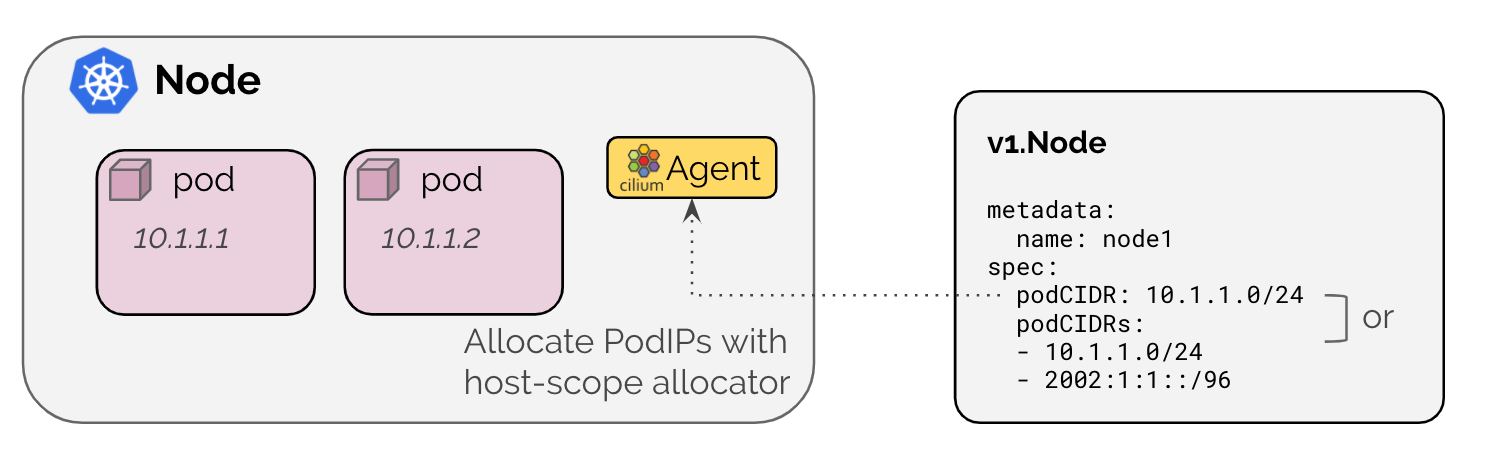
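To make the per-node split concrete, here is a small arithmetic sketch using the values passed as ipam.operator.clusterPoolIPv4PodCIDR (172.20.0.0/20) and ipam.operator.clusterPoolIPv4MaskSize (24) in the install command at the top. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Number of per-node PodCIDRs that fit in a cluster pool:
# 2^(node_mask - pool_prefix).
node_cidr_count() {
  local pool_prefix="$1" node_mask="$2"
  if (( node_mask < pool_prefix || node_mask > 32 )); then
    echo "node mask must be between the pool prefix and 32" >&2
    return 1
  fi
  echo $(( 1 << (node_mask - pool_prefix) ))
}

# Number of addresses in one per-node PodCIDR: 2^(32 - node_mask).
addresses_per_node() {
  local node_mask="$1"
  echo $(( 1 << (32 - node_mask) ))
}

node_cidr_count 20 24     # prints 16
addresses_per_node 24     # prints 256
```

A /20 pool split into /24 blocks therefore covers at most 16 nodes. The raw count of 256 addresses per block is slightly higher than the 255 allocatable addresses reported by `cilium status` above.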

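- Kubernetes host scope

Enable this mode with ipam: kubernetes. It delegates address allocation to each individual node, and the range a node allocates from is determined by its PodCIDR. The architecture of this mode is as follows.

The controller-manager must be started with --allocate-node-cidrs=true, which tells it to allocate per-node ranges from the PodCIDR address range.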
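One way to confirm that the controller-manager has assigned a PodCIDR to every node is to read `.spec.podCIDR` from each v1.Node. The sketch below wraps that `kubectl` query in a function; the name `show_pod_cidrs` is made up for this example, and it assumes `kubectl` is already configured for the cluster.

```shell
#!/usr/bin/env bash
# List each node together with the PodCIDR recorded in its v1.Node spec,
# one "name<TAB>cidr" pair per line.
show_pod_cidrs() {
  kubectl get nodes \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.podCIDR}{"\n"}{end}'
}

# Usage, on a machine with cluster access (not executed here):
#   show_pod_cidrs
```

A node that prints an empty CIDR column has not been assigned a range yet, which usually points at the --allocate-node-cidrs setting.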