Yolov8-源码解析-一-

Yolov8 源码解析(一)

comments: true

description: Learn how to contribute to Ultralytics YOLO open-source repositories. Follow guidelines for pull requests, code of conduct, and bug reporting.

keywords: Ultralytics, YOLO, open-source, contribution, pull request, code of conduct, bug reporting, GitHub, CLA, Google-style docstrings

Contributing to Ultralytics Open-Source YOLO Repositories

Thank you for your interest in contributing to Ultralytics open-source YOLO repositories! Your contributions will enhance the project and benefit the entire community. This document provides guidelines and best practices to help you get started.

Table of Contents

Code of Conduct

All contributors must adhere to the Code of Conduct to ensure a welcoming and inclusive environment for everyone.

Contributing via Pull Requests

We welcome contributions in the form of pull requests. To streamline the review process, please follow these guidelines:

-

Fork the repository: Fork the Ultralytics YOLO repository to your GitHub account.

-

Create a branch: Create a new branch in your forked repository with a descriptive name for your changes.

-

Make your changes: Ensure that your changes follow the project's coding style and do not introduce new errors or warnings.

-

Test your changes: Test your changes locally to ensure they work as expected and do not introduce new issues.

-

Commit your changes: Commit your changes with a descriptive commit message. Include any relevant issue numbers in your commit message.

-

Create a pull request: Create a pull request from your forked repository to the main Ultralytics YOLO repository. Provide a clear explanation of your changes and how they improve the project.

CLA Signing

Before we can accept your pull request, you must sign a Contributor License Agreement (CLA). This legal document ensures that your contributions are properly licensed and that the project can continue to be distributed under the AGPL-3.0 license.

To sign the CLA, follow the instructions provided by the CLA bot after you submit your PR and add a comment in your PR saying:

I have read the CLA Document and I sign the CLA

Google-Style Docstrings

When adding new functions or classes, include a Google-style docstring to provide clear and concise documentation for other developers. This helps ensure your contributions are easy to understand and maintain.

Google-style

This example shows a Google-style docstring. Note that both input and output types must always be enclosed by parentheses, i.e. (bool).

def example_function(arg1, arg2=4):

"""

This example shows a Google-style docstring. Note that both input and output `types` must always be enclosed by

parentheses, i.e., `(bool)`.

Args:

arg1 (int): The first argument.

arg2 (int): The second argument. Default value is 4.

Returns:

(bool): True if successful, False otherwise.

Examples:

>>> result = example_function(1, 2) # returns False

"""

if arg1 == arg2:

return True

return False

Google-style with type hints

This example shows both a Google-style docstring and argument and return type hints, though both are not required, one can be used without the other.

def example_function(arg1: int, arg2: int = 4) -> bool:

"""

This example shows both a Google-style docstring and argument and return type hints, though both are not required;

one can be used without the other.

Args:

arg1: The first argument.

arg2: The second argument. Default value is 4.

Returns:

True if successful, False otherwise.

Examples:

>>> result = example_function(1, 2) # returns False

"""

if arg1 == arg2:

return True

return False

Single-line

Smaller or simpler functions can utilize a single-line docstring. Note the docstring must use 3 double-quotes, and be a complete sentence starting with a capital letter and ending with a period.

def example_small_function(arg1: int, arg2: int = 4) -> bool:

"""Example function that demonstrates a single-line docstring."""

return arg1 == arg2

GitHub Actions CI Tests

Before your pull request can be merged, all GitHub Actions Continuous Integration (CI) tests must pass. These tests include linting, unit tests, and other checks to ensure that your changes meet the quality standards of the project. Make sure to review the output of the GitHub Actions and fix any issues

Reporting Bugs

We appreciate bug reports as they play a crucial role in maintaining the project's quality. When reporting bugs it is important to provide a Minimum Reproducible Example: a clear, concise code example that replicates the issue. This helps in quick identification and resolution of the bug.

License

Ultralytics embraces the GNU Affero General Public License v3.0 (AGPL-3.0) for its repositories, promoting openness, transparency, and collaborative enhancement in software development. This strong copyleft license ensures that all users and developers retain the freedom to use, modify, and share the software. It fosters community collaboration, ensuring that any improvements remain accessible to all.

Users and developers are encouraged to familiarize themselves with the terms of AGPL-3.0 to contribute effectively and ethically to the Ultralytics open-source community.

Conclusion

Thank you for your interest in contributing to Ultralytics open-source YOLO projects. Your participation is crucial in shaping the future of our software and fostering a community of innovation and collaboration. Whether you're improving code, reporting bugs, or suggesting features, your contributions make a significant impact.

We look forward to seeing your ideas in action and appreciate your commitment to advancing object detection technology. Let's continue to grow and innovate together in this exciting open-source journey. Happy coding! 🚀🌟

.\yolov8\docs\build_docs.py

# 设置环境变量以解决 DeprecationWarning:Jupyter 正在迁移到使用标准的 platformdirs

os.environ["JUPYTER_PLATFORM_DIRS"] = "1"

# 定义常量 DOCS 为当前脚本所在目录的父目录的绝对路径

DOCS = Path(__file__).parent.resolve()

# 定义常量 SITE 为 DOCS 的父目录下的 'site' 目录的路径

SITE = DOCS.parent / "site"

def prepare_docs_markdown(clone_repos=True):

"""使用 mkdocs 构建文档。"""

# 如果 SITE 目录存在,则删除已有的 SITE 目录

if SITE.exists():

print(f"Removing existing {SITE}")

shutil.rmtree(SITE)

# 如果 clone_repos 为 True,则获取 hub-sdk 仓库

if clone_repos:

repo = "https://github.com/ultralytics/hub-sdk"

local_dir = DOCS.parent / Path(repo).name

# 如果本地目录不存在,则克隆仓库

if not local_dir.exists():

os.system(f"git clone {repo} {local_dir}")

# 更新仓库

os.system(f"git -C {local_dir} pull")

# 如果存在,则删除现有的 'en/hub/sdk' 目录

shutil.rmtree(DOCS / "en/hub/sdk", ignore_errors=True)

# 拷贝仓库中的 'docs' 目录到 'en/hub/sdk'

shutil.copytree(local_dir / "docs", DOCS / "en/hub/sdk")

# 如果存在,则删除现有的 'hub_sdk' 目录

shutil.rmtree(DOCS.parent / "hub_sdk", ignore_errors=True)

# 拷贝仓库中的 'hub_sdk' 目录到 'hub_sdk'

shutil.copytree(local_dir / "hub_sdk", DOCS.parent / "hub_sdk")

print(f"Cloned/Updated {repo} in {local_dir}")

# 对 'en' 目录下的所有 '.md' 文件添加 frontmatter

for file in tqdm((DOCS / "en").rglob("*.md"), desc="Adding frontmatter"):

update_markdown_files(file)

def update_page_title(file_path: Path, new_title: str):

"""更新 HTML 文件的标题。"""

# 打开文件并读取其内容,使用 UTF-8 编码

with open(file_path, encoding="utf-8") as file:

content = file.read()

# 使用正则表达式替换现有标题为新标题

updated_content = re.sub(r"<title>.*?</title>", f"<title>{new_title}</title>", content)

# 将更新后的内容写回文件

with open(file_path, "w", encoding="utf-8") as file:

file.write(updated_content)

def update_html_head(script=""):

"""Update the HTML head section of each file."""

# 获取指定目录下所有的 HTML 文件

html_files = Path(SITE).rglob("*.html")

# 遍历每个 HTML 文件

for html_file in tqdm(html_files, desc="Processing HTML files"):

# 打开文件并读取 HTML 内容

with html_file.open("r", encoding="utf-8") as file:

html_content = file.read()

# 如果指定的脚本已经在 HTML 文件中,则直接返回

if script in html_content: # script already in HTML file

return

# 查找 HTML 头部结束标签的索引位置

head_end_index = html_content.lower().rfind("</head>")

if head_end_index != -1:

# 在头部结束标签之前插入指定的 JavaScript 脚本

new_html_content = html_content[:head_end_index] + script + html_content[head_end_index:]

# 将更新后的 HTML 内容写回文件

with html_file.open("w", encoding="utf-8") as file:

file.write(new_html_content)

def update_subdir_edit_links(subdir="", docs_url=""):

"""Update the HTML head section of each file."""

# 如果子目录以斜杠开头,则去掉斜杠

if str(subdir[0]) == "/":

subdir = str(subdir[0])[1:]

# 获取子目录下所有的 HTML 文件

html_files = (SITE / subdir).rglob("*.html")

# 遍历每个 HTML 文件

for html_file in tqdm(html_files, desc="Processing subdir files"):

# 打开文件并解析为 BeautifulSoup 对象

with html_file.open("r", encoding="utf-8") as file:

soup = BeautifulSoup(file, "html.parser")

# 查找包含特定类和标题的锚点标签,并更新其 href 属性

a_tag = soup.find("a", {"class": "md-content__button md-icon"})

if a_tag and a_tag["title"] == "Edit this page":

a_tag["href"] = f"{docs_url}{a_tag['href'].split(subdir)[-1]}"

# 将更新后的 HTML 内容写回文件

with open(html_file, "w", encoding="utf-8") as file:

file.write(str(soup))

def update_markdown_files(md_filepath: Path):

"""Creates or updates a Markdown file, ensuring frontmatter is present."""

# 如果 Markdown 文件存在,则读取其内容

if md_filepath.exists():

content = md_filepath.read_text().strip()

# 替换特定的书名号字符为直引号

content = content.replace("‘", "'").replace("’", "'")

# 如果前置内容缺失,则添加默认的前置内容

if not content.strip().startswith("---\n"):

header = "---\ncomments: true\ndescription: TODO ADD DESCRIPTION\nkeywords: TODO ADD KEYWORDS\n---\n\n"

content = header + content

# 确保 MkDocs admonitions "=== " 开始的行前后有空行

lines = content.split("\n")

new_lines = []

for i, line in enumerate(lines):

stripped_line = line.strip()

if stripped_line.startswith("=== "):

if i > 0 and new_lines[-1] != "":

new_lines.append("")

new_lines.append(line)

if i < len(lines) - 1 and lines[i + 1].strip() != "":

new_lines.append("")

else:

new_lines.append(line)

content = "\n".join(new_lines)

# 如果文件末尾缺少换行符,则添加一个

if not content.endswith("\n"):

content += "\n"

# 将更新后的内容写回 Markdown 文件

md_filepath.write_text(content)

return

def update_docs_html():

# 此函数可能尚未实现具体功能,暂无需添加注释

pass

"""Updates titles, edit links, head sections, and converts plaintext links in HTML documentation."""

# 更新页面标题

update_page_title(SITE / "404.html", new_title="Ultralytics Docs - Not Found")

# 更新编辑链接

update_subdir_edit_links(

subdir="hub/sdk/", # 不要使用开头的斜杠

docs_url="https://github.com/ultralytics/hub-sdk/tree/main/docs/",

)

# 将纯文本链接转换为 HTML 超链接

files_modified = 0

for html_file in tqdm(SITE.rglob("*.html"), desc="Converting plaintext links"):

# 打开 HTML 文件并读取内容

with open(html_file, "r", encoding="utf-8") as file:

content = file.read()

# 将纯文本链接转换为 HTML 格式

updated_content = convert_plaintext_links_to_html(content)

# 如果内容有更新,则写入更新后的内容

if updated_content != content:

with open(html_file, "w", encoding="utf-8") as file:

file.write(updated_content)

# 记录已修改的文件数

files_modified += 1

# 打印修改的文件数

print(f"Modified plaintext links in {files_modified} files.")

# 更新 HTML 文件的 head 部分

script = ""

# 如果有脚本内容,则更新 HTML 的 head 部分

if any(script):

update_html_head(script)

def convert_plaintext_links_to_html(content):

"""Converts plaintext links to HTML hyperlinks in the main content area only."""

# 使用BeautifulSoup解析传入的HTML内容

soup = BeautifulSoup(content, "html.parser")

# 查找主要内容区域(根据HTML结构调整选择器)

main_content = soup.find("main") or soup.find("div", class_="md-content")

if not main_content:

return content # 如果找不到主内容区域,则返回原始内容

modified = False

# 遍历主内容区域中的段落和列表项

for paragraph in main_content.find_all(["p", "li"]):

for text_node in paragraph.find_all(string=True, recursive=False):

# 忽略链接和代码块的父节点

if text_node.parent.name not in {"a", "code"}:

# 使用正则表达式将文本节点中的链接转换为HTML超链接

new_text = re.sub(

r'(https?://[^\s()<>]+(?:\.[^\s()<>]+)+)(?<![.,:;\'"])',

r'<a href="\1">\1</a>',

str(text_node),

)

# 如果生成了新的<a>标签,则替换原文本节点

if "<a" in new_text:

new_soup = BeautifulSoup(new_text, "html.parser")

text_node.replace_with(new_soup)

modified = True

# 如果修改了内容,则返回修改后的HTML字符串;否则返回原始内容

return str(soup) if modified else content

def main():

"""Builds docs, updates titles and edit links, and prints local server command."""

prepare_docs_markdown()

# 构建主文档

print(f"Building docs from {DOCS}")

subprocess.run(f"mkdocs build -f {DOCS.parent}/mkdocs.yml --strict", check=True, shell=True)

print(f"Site built at {SITE}")

# 更新文档的HTML页面

update_docs_html()

# 显示用于启动本地服务器的命令

print('Docs built correctly ✅\nServe site at http://localhost:8000 with "python -m http.server --directory site"')

if __name__ == "__main__":

main()

.\yolov8\docs\build_reference.py

# Ultralytics YOLO 🚀, AGPL-3.0 license

"""

Helper file to build Ultralytics Docs reference section. Recursively walks through ultralytics dir and builds an MkDocs

reference section of *.md files composed of classes and functions, and also creates a nav menu for use in mkdocs.yaml.

Note: Must be run from repository root directory. Do not run from docs directory.

"""

import re # 导入正则表达式模块

import subprocess # 导入子进程模块

from collections import defaultdict # 导入 defaultdict 集合

from pathlib import Path # 导入 Path 模块

# Constants

hub_sdk = False # 设置 hub_sdk 常量为 False

if hub_sdk:

PACKAGE_DIR = Path("/Users/glennjocher/PycharmProjects/hub-sdk/hub_sdk") # 设置 PACKAGE_DIR 变量为指定路径

REFERENCE_DIR = PACKAGE_DIR.parent / "docs/reference" # 设置 REFERENCE_DIR 变量为参考文档路径

GITHUB_REPO = "ultralytics/hub-sdk" # 设置 GitHub 仓库路径

else:

FILE = Path(__file__).resolve() # 获取当前脚本文件的路径

PACKAGE_DIR = FILE.parents[1] / "ultralytics" # 设置 PACKAGE_DIR 变量为指定路径

REFERENCE_DIR = PACKAGE_DIR.parent / "docs/en/reference" # 设置 REFERENCE_DIR 变量为参考文档路径

GITHUB_REPO = "ultralytics/ultralytics" # 设置 GitHub 仓库路径

def extract_classes_and_functions(filepath: Path) -> tuple:

"""Extracts class and function names from a given Python file."""

content = filepath.read_text() # 读取文件内容为文本

class_pattern = r"(?:^|\n)class\s(\w+)(?:\(|:)" # 定义匹配类名的正则表达式模式

func_pattern = r"(?:^|\n)def\s(\w+)\(" # 定义匹配函数名的正则表达式模式

classes = re.findall(class_pattern, content) # 使用正则表达式从内容中查找类名列表

functions = re.findall(func_pattern, content) # 使用正则表达式从内容中查找函数名列表

return classes, functions # 返回类名列表和函数名列表的元组

def create_markdown(py_filepath: Path, module_path: str, classes: list, functions: list):

"""Creates a Markdown file containing the API reference for the given Python module."""

md_filepath = py_filepath.with_suffix(".md") # 将 Python 文件路径转换为 Markdown 文件路径

exists = md_filepath.exists() # 检查 Markdown 文件是否已存在

# Read existing content and keep header content between first two ---

header_content = ""

if exists:

existing_content = md_filepath.read_text() # 读取现有 Markdown 文件的内容

header_parts = existing_content.split("---") # 使用 --- 分割内容为头部部分

for part in header_parts:

if "description:" in part or "comments:" in part:

header_content += f"---{part}---\n\n" # 将符合条件的头部部分添加到 header_content 中

if not any(header_content):

header_content = "---\ndescription: TODO ADD DESCRIPTION\nkeywords: TODO ADD KEYWORDS\n---\n\n"

module_name = module_path.replace(".__init__", "") # 替换模块路径中的特定字符串

module_path = module_path.replace(".", "/") # 将模块路径中的点号替换为斜杠

url = f"https://github.com/{GITHUB_REPO}/blob/main/{module_path}.py" # 构建 GitHub 文件链接

edit = f"https://github.com/{GITHUB_REPO}/edit/main/{module_path}.py" # 构建 GitHub 编辑链接

pretty = url.replace("__init__.py", "\\_\\_init\\_\\_.py") # 替换文件名以更好地显示 __init__.py

title_content = (

f"# Reference for `{module_path}.py`\n\n" # 创建 Markdown 文件的标题部分

f"!!! Note\n\n"

f" This file is available at [{pretty}]({url}). If you spot a problem please help fix it by [contributing]"

f"(https://docs.ultralytics.com/help/contributing/) a [Pull Request]({edit}) 🛠️. Thank you 🙏!\n\n"

)

md_content = ["<br>\n"] + [f"## ::: {module_name}.{class_name}\n\n<br><br><hr><br>\n" for class_name in classes]

# 创建 Markdown 文件的内容部分,包含每个类的标题

# 使用列表推导式生成 Markdown 内容的标题部分,每个函数名都以特定格式添加到列表中

md_content.extend(f"## ::: {module_name}.{func_name}\n\n<br><br><hr><br>\n" for func_name in functions)

# 移除最后一个元素中的水平线标记,确保 Markdown 内容格式正确

md_content[-1] = md_content[-1].replace("<hr><br>", "")

# 将标题、内容和生成的 Markdown 内容合并为一个完整的 Markdown 文档

md_content = header_content + title_content + "\n".join(md_content)

# 如果 Markdown 文件内容末尾不是换行符,则添加一个换行符

if not md_content.endswith("\n"):

md_content += "\n"

# 根据指定路径创建 Markdown 文件的父目录,如果父目录不存在则递归创建

md_filepath.parent.mkdir(parents=True, exist_ok=True)

# 将 Markdown 内容写入到指定路径的文件中

md_filepath.write_text(md_content)

# 如果 Markdown 文件是新创建的:

if not exists:

# 将新创建的 Markdown 文件添加到 Git 的暂存区中

print(f"Created new file '{md_filepath}'")

subprocess.run(["git", "add", "-f", str(md_filepath)], check=True, cwd=PACKAGE_DIR)

# 返回 Markdown 文件相对于其父目录的路径

return md_filepath.relative_to(PACKAGE_DIR.parent)

def nested_dict() -> defaultdict:

"""Creates and returns a nested defaultdict."""

# 创建并返回一个嵌套的 defaultdict 对象

return defaultdict(nested_dict)

def sort_nested_dict(d: dict) -> dict:

"""Sorts a nested dictionary recursively."""

# 递归地对嵌套字典进行排序

return {key: sort_nested_dict(value) if isinstance(value, dict) else value for key, value in sorted(d.items())}

def create_nav_menu_yaml(nav_items: list, save: bool = False):

"""Creates a YAML file for the navigation menu based on the provided list of items."""

# 创建一个嵌套的 defaultdict 作为导航树的基础结构

nav_tree = nested_dict()

# 遍历传入的导航项列表

for item_str in nav_items:

# 将每个导航项解析为路径对象

item = Path(item_str)

# 获取路径的各个部分

parts = item.parts

# 初始化当前层级为导航树的 "reference" 键对应的值

current_level = nav_tree["reference"]

# 遍历路径的部分,跳过前两个部分(docs 和 reference)和最后一个部分(文件名)

for part in parts[2:-1]:

# 将当前层级深入到下一级

current_level = current_level[part]

# 提取 Markdown 文件名(去除扩展名)

md_file_name = parts[-1].replace(".md", "")

# 将 Markdown 文件名与路径项关联存储到导航树中

current_level[md_file_name] = item

# 对导航树进行递归排序

nav_tree_sorted = sort_nested_dict(nav_tree)

def _dict_to_yaml(d, level=0):

"""Converts a nested dictionary to a YAML-formatted string with indentation."""

# 初始化空的 YAML 字符串

yaml_str = ""

# 计算当前层级的缩进

indent = " " * level

# 遍历字典的键值对

for k, v in d.items():

# 如果值是字典类型,则递归调用该函数处理

if isinstance(v, dict):

yaml_str += f"{indent}- {k}:\n{_dict_to_yaml(v, level + 1)}"

else:

# 如果值不是字典,则将键值对格式化为 YAML 行并追加到 yaml_str

yaml_str += f"{indent}- {k}: {str(v).replace('docs/en/', '')}\n"

return yaml_str

# 打印更新后的 YAML 参考部分

print("Scan complete, new mkdocs.yaml reference section is:\n\n", _dict_to_yaml(nav_tree_sorted))

# 如果设置了保存标志,则将更新后的 YAML 参考部分写入文件

if save:

(PACKAGE_DIR.parent / "nav_menu_updated.yml").write_text(_dict_to_yaml(nav_tree_sorted))

def main():

"""Main function to extract class and function names, create Markdown files, and generate a YAML navigation menu."""

# 初始化导航项列表

nav_items = []

# 遍历指定目录下的所有 Python 文件

for py_filepath in PACKAGE_DIR.rglob("*.py"):

# 提取文件中的类和函数列表

classes, functions = extract_classes_and_functions(py_filepath)

# 如果文件中存在类或函数,则处理该文件

if classes or functions:

# 计算相对于包目录的路径

py_filepath_rel = py_filepath.relative_to(PACKAGE_DIR)

# 构建 Markdown 文件路径

md_filepath = REFERENCE_DIR / py_filepath_rel

# 构建模块路径字符串

module_path = f"{PACKAGE_DIR.name}.{py_filepath_rel.with_suffix('').as_posix().replace('/', '.')}"

# 创建 Markdown 文件,并返回相对路径

md_rel_filepath = create_markdown(md_filepath, module_path, classes, functions)

# 将 Markdown 文件的相对路径添加到导航项列表中

nav_items.append(str(md_rel_filepath))

# 创建导航菜单的 YAML 文件

create_nav_menu_yaml(nav_items)

if __name__ == "__main__":

main()

description: Discover what's next for Ultralytics with our under-construction page, previewing new, groundbreaking AI and ML features coming soon.

keywords: Ultralytics, coming soon, under construction, new features, AI updates, ML advancements, YOLO, technology preview

Under Construction 🏗️🌟

Welcome to the Ultralytics "Under Construction" page! Here, we're hard at work developing the next generation of AI and ML innovations. This page serves as a teaser for the exciting updates and new features we're eager to share with you!

Exciting New Features on the Way 🎉

- Innovative Breakthroughs: Get ready for advanced features and services that will transform your AI and ML experience.

- New Horizons: Anticipate novel products that redefine AI and ML capabilities.

- Enhanced Services: We're upgrading our services for greater efficiency and user-friendliness.

Stay Updated 🚧

This placeholder page is your first stop for upcoming developments. Keep an eye out for:

- Newsletter: Subscribe here for the latest news.

- Social Media: Follow us here for updates and teasers.

- Blog: Visit our blog for detailed insights.

We Value Your Input 🗣️

Your feedback shapes our future releases. Share your thoughts and suggestions here.

Thank You, Community! 🌍

Your contributions inspire our continuous innovation. Stay tuned for the big reveal of what's next in AI and ML at Ultralytics!

Excited for what's coming? Bookmark this page and get ready for a transformative AI and ML journey with Ultralytics! 🛠️🤖

comments: true

description: Explore the widely-used Caltech-101 dataset with 9,000 images across 101 categories. Ideal for object recognition tasks in machine learning and computer vision.

keywords: Caltech-101, dataset, object recognition, machine learning, computer vision, YOLO, deep learning, research, AI

Caltech-101 Dataset

The Caltech-101 dataset is a widely used dataset for object recognition tasks, containing around 9,000 images from 101 object categories. The categories were chosen to reflect a variety of real-world objects, and the images themselves were carefully selected and annotated to provide a challenging benchmark for object recognition algorithms.

Key Features

- The Caltech-101 dataset comprises around 9,000 color images divided into 101 categories.

- The categories encompass a wide variety of objects, including animals, vehicles, household items, and people.

- The number of images per category varies, with about 40 to 800 images in each category.

- Images are of variable sizes, with most images being medium resolution.

- Caltech-101 is widely used for training and testing in the field of machine learning, particularly for object recognition tasks.

Dataset Structure

Unlike many other datasets, the Caltech-101 dataset is not formally split into training and testing sets. Users typically create their own splits based on their specific needs. However, a common practice is to use a random subset of images for training (e.g., 30 images per category) and the remaining images for testing.

Applications

The Caltech-101 dataset is extensively used for training and evaluating deep learning models in object recognition tasks, such as Convolutional Neural Networks (CNNs), Support Vector Machines (SVMs), and various other machine learning algorithms. Its wide variety of categories and high-quality images make it an excellent dataset for research and development in the field of machine learning and computer vision.

Usage

To train a YOLO model on the Caltech-101 dataset for 100 epochs, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model Training page.

!!! Example "Train Example"

=== "Python"

```py

from ultralytics import YOLO

# Load a model

model = YOLO("yolov8n-cls.pt") # load a pretrained model (recommended for training)

# Train the model

results = model.train(data="caltech101", epochs=100, imgsz=416)

```

=== "CLI"

```py

# Start training from a pretrained *.pt model

yolo detect train data=caltech101 model=yolov8n-cls.pt epochs=100 imgsz=416

```

Sample Images and Annotations

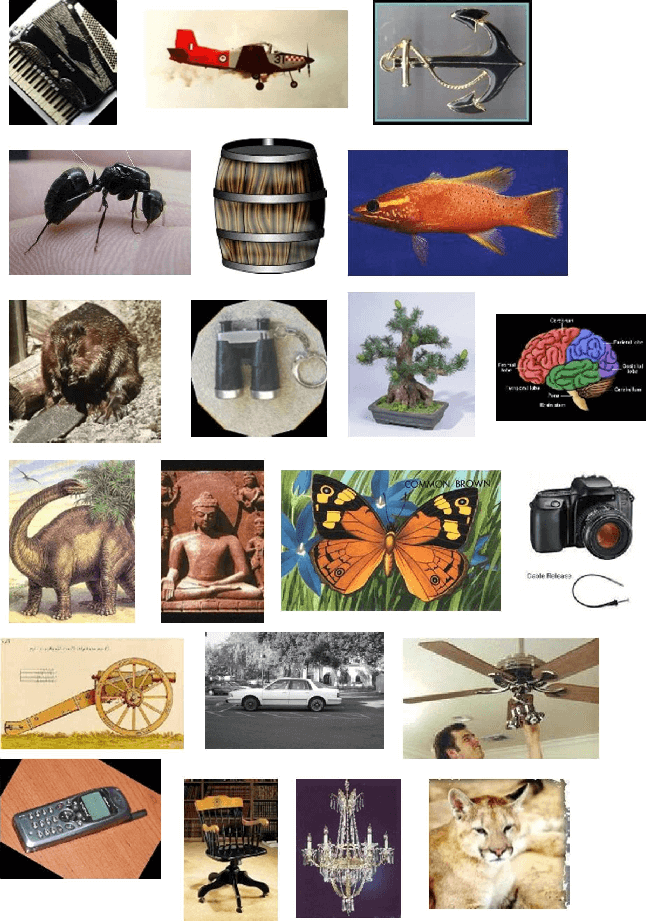

The Caltech-101 dataset contains high-quality color images of various objects, providing a well-structured dataset for object recognition tasks. Here are some examples of images from the dataset:

The example showcases the variety and complexity of the objects in the Caltech-101 dataset, emphasizing the significance of a diverse dataset for training robust object recognition models.

Citations and Acknowledgments

If you use the Caltech-101 dataset in your research or development work, please cite the following paper:

!!! Quote ""

=== "BibTeX"

```py

@article{fei2007learning,

title={Learning generative visual models from few training examples: An incremental Bayesian approach tested on 101 object categories},

author={Fei-Fei, Li and Fergus, Rob and Perona, Pietro},

journal={Computer vision and Image understanding},

volume={106},

number={1},

pages={59--70},

year={2007},

publisher={Elsevier}

}

```

We would like to acknowledge Li Fei-Fei, Rob Fergus, and Pietro Perona for creating and maintaining the Caltech-101 dataset as a valuable resource for the machine learning and computer vision research community. For more information about the Caltech-101 dataset and its creators, visit the Caltech-101 dataset website.

FAQ

What is the Caltech-101 dataset used for in machine learning?

The Caltech-101 dataset is widely used in machine learning for object recognition tasks. It contains around 9,000 images across 101 categories, providing a challenging benchmark for evaluating object recognition algorithms. Researchers leverage it to train and test models, especially Convolutional Neural Networks (CNNs) and Support Vector Machines (SVMs), in computer vision.

How can I train an Ultralytics YOLO model on the Caltech-101 dataset?

To train an Ultralytics YOLO model on the Caltech-101 dataset, you can use the provided code snippets. For example, to train for 100 epochs:

!!! Example "Train Example"

=== "Python"

```py

from ultralytics import YOLO

# Load a model

model = YOLO("yolov8n-cls.pt") # load a pretrained model (recommended for training)

# Train the model

results = model.train(data="caltech101", epochs=100, imgsz=416)

```

=== "CLI"

```py

# Start training from a pretrained *.pt model

yolo detect train data=caltech101 model=yolov8n-cls.pt epochs=100 imgsz=416

```

For more detailed arguments and options, refer to the model Training page.

What are the key features of the Caltech-101 dataset?

The Caltech-101 dataset includes:

- Around 9,000 color images across 101 categories.

- Categories covering a diverse range of objects, including animals, vehicles, and household items.

- Variable number of images per category, typically between 40 and 800.

- Variable image sizes, with most being medium resolution.

These features make it an excellent choice for training and evaluating object recognition models in machine learning and computer vision.

Why should I cite the Caltech-101 dataset in my research?

Citing the Caltech-101 dataset in your research acknowledges the creators' contributions and provides a reference for others who might use the dataset. The recommended citation is:

!!! Quote ""

=== "BibTeX"

```py

@article{fei2007learning,

title={Learning generative visual models from few training examples: An incremental Bayesian approach tested on 101 object categories},

author={Fei-Fei, Li and Fergus, Rob and Perona, Pietro},

journal={Computer vision and Image understanding},

volume={106},

number={1},

pages={59--70},

year={2007},

publisher={Elsevier}

}

```

Citing helps in maintaining the integrity of academic work and assists peers in locating the original resource.

Can I use Ultralytics HUB for training models on the Caltech-101 dataset?

Yes, you can use Ultralytics HUB for training models on the Caltech-101 dataset. Ultralytics HUB provides an intuitive platform for managing datasets, training models, and deploying them without extensive coding. For a detailed guide, refer to the how to train your custom models with Ultralytics HUB blog post.

comments: true

description: Explore the Caltech-256 dataset, featuring 30,000 images across 257 categories, ideal for training and testing object recognition algorithms.

keywords: Caltech-256 dataset, object classification, image dataset, machine learning, computer vision, deep learning, YOLO, training dataset

Caltech-256 Dataset

The Caltech-256 dataset is an extensive collection of images used for object classification tasks. It contains around 30,000 images divided into 257 categories (256 object categories and 1 background category). The images are carefully curated and annotated to provide a challenging and diverse benchmark for object recognition algorithms.

Watch: How to Train Image Classification Model using Caltech-256 Dataset with Ultralytics HUB

Key Features

- The Caltech-256 dataset comprises around 30,000 color images divided into 257 categories.

- Each category contains a minimum of 80 images.

- The categories encompass a wide variety of real-world objects, including animals, vehicles, household items, and people.

- Images are of variable sizes and resolutions.

- Caltech-256 is widely used for training and testing in the field of machine learning, particularly for object recognition tasks.

Dataset Structure

Like Caltech-101, the Caltech-256 dataset does not have a formal split between training and testing sets. Users typically create their own splits according to their specific needs. A common practice is to use a random subset of images for training and the remaining images for testing.

Applications

The Caltech-256 dataset is extensively used for training and evaluating deep learning models in object recognition tasks, such as Convolutional Neural Networks (CNNs), Support Vector Machines (SVMs), and various other machine learning algorithms. Its diverse set of categories and high-quality images make it an invaluable dataset for research and development in the field of machine learning and computer vision.

Usage

To train a YOLO model on the Caltech-256 dataset for 100 epochs, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model Training page.

!!! Example "Train Example"

=== "Python"

```py

from ultralytics import YOLO

# Load a model

model = YOLO("yolov8n-cls.pt") # load a pretrained model (recommended for training)

# Train the model

results = model.train(data="caltech256", epochs=100, imgsz=416)

```

=== "CLI"

```py

# Start training from a pretrained *.pt model

yolo detect train data=caltech256 model=yolov8n-cls.pt epochs=100 imgsz=416

```

Sample Images and Annotations

The Caltech-256 dataset contains high-quality color images of various objects, providing a comprehensive dataset for object recognition tasks. Here are some examples of images from the dataset (credit):

The example showcases the diversity and complexity of the objects in the Caltech-256 dataset, emphasizing the importance of a varied dataset for training robust object recognition models.

Citations and Acknowledgments

If you use the Caltech-256 dataset in your research or development work, please cite the following paper:

!!! Quote ""

=== "BibTeX"

```py

@article{griffin2007caltech,

title={Caltech-256 object category dataset},

author={Griffin, Gregory and Holub, Alex and Perona, Pietro},

year={2007}

}

```

We would like to acknowledge Gregory Griffin, Alex Holub, and Pietro Perona for creating and maintaining the Caltech-256 dataset as a valuable resource for the machine learning and computer vision research community. For more information about the

Caltech-256 dataset and its creators, visit the Caltech-256 dataset website.

FAQ

What is the Caltech-256 dataset and why is it important for machine learning?

The Caltech-256 dataset is a large image dataset used primarily for object classification tasks in machine learning and computer vision. It consists of around 30,000 color images divided into 257 categories, covering a wide range of real-world objects. The dataset's diverse and high-quality images make it an excellent benchmark for evaluating object recognition algorithms, which is crucial for developing robust machine learning models.

How can I train a YOLO model on the Caltech-256 dataset using Python or CLI?

To train a YOLO model on the Caltech-256 dataset for 100 epochs, you can use the following code snippets. Refer to the model Training page for additional options.

!!! Example "Train Example"

=== "Python"

```py

from ultralytics import YOLO

# Load a model

model = YOLO("yolov8n-cls.pt") # load a pretrained model

# Train the model

results = model.train(data="caltech256", epochs=100, imgsz=416)

```

=== "CLI"

```py

# Start training from a pretrained *.pt model

yolo detect train data=caltech256 model=yolov8n-cls.pt epochs=100 imgsz=416

```

What are the most common use cases for the Caltech-256 dataset?

The Caltech-256 dataset is widely used for various object recognition tasks such as:

- Training Convolutional Neural Networks (CNNs)

- Evaluating the performance of Support Vector Machines (SVMs)

- Benchmarking new deep learning algorithms

- Developing object detection models using frameworks like Ultralytics YOLO

Its diversity and comprehensive annotations make it ideal for research and development in machine learning and computer vision.

How is the Caltech-256 dataset structured and split for training and testing?

The Caltech-256 dataset does not come with a predefined split for training and testing. Users typically create their own splits according to their specific needs. A common approach is to randomly select a subset of images for training and use the remaining images for testing. This flexibility allows users to tailor the dataset to their specific project requirements and experimental setups.

Why should I use Ultralytics YOLO for training models on the Caltech-256 dataset?

Ultralytics YOLO models offer several advantages for training on the Caltech-256 dataset:

- High Accuracy: YOLO models are known for their state-of-the-art performance in object detection tasks.

- Speed: They provide real-time inference capabilities, making them suitable for applications requiring quick predictions.

- Ease of Use: With Ultralytics HUB, users can train, validate, and deploy models without extensive coding.

- Pretrained Models: Starting from pretrained models, like

yolov8n-cls.pt, can significantly reduce training time and improve model accuracy.

For more details, explore our comprehensive training guide.

comments: true

description: Explore the CIFAR-10 dataset, featuring 60,000 color images in 10 classes. Learn about its structure, applications, and how to train models using YOLO.

keywords: CIFAR-10, dataset, machine learning, computer vision, image classification, YOLO, deep learning, neural networks

CIFAR-10 Dataset

The CIFAR-10 (Canadian Institute For Advanced Research) dataset is a collection of images used widely for machine learning and computer vision algorithms. It was developed by researchers at the CIFAR institute and consists of 60,000 32x32 color images in 10 different classes.

Watch: How to Train an Image Classification Model with CIFAR-10 Dataset using Ultralytics YOLOv8

Key Features

- The CIFAR-10 dataset consists of 60,000 images, divided into 10 classes.

- Each class contains 6,000 images, split into 5,000 for training and 1,000 for testing.

- The images are colored and of size 32x32 pixels.

- The 10 different classes represent airplanes, cars, birds, cats, deer, dogs, frogs, horses, ships, and trucks.

- CIFAR-10 is commonly used for training and testing in the field of machine learning and computer vision.

Dataset Structure

The CIFAR-10 dataset is split into two subsets:

- Training Set: This subset contains 50,000 images used for training machine learning models.

- Testing Set: This subset consists of 10,000 images used for testing and benchmarking the trained models.

Applications

The CIFAR-10 dataset is widely used for training and evaluating deep learning models in image classification tasks, such as Convolutional Neural Networks (CNNs), Support Vector Machines (SVMs), and various other machine learning algorithms. The diversity of the dataset in terms of classes and the presence of color images make it a well-rounded dataset for research and development in the field of machine learning and computer vision.

Usage

To train a YOLO model on the CIFAR-10 dataset for 100 epochs with an image size of 32x32, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model Training page.

!!! Example "Train Example"

=== "Python"

```py

from ultralytics import YOLO

# Load a model

model = YOLO("yolov8n-cls.pt") # load a pretrained model (recommended for training)

# Train the model

results = model.train(data="cifar10", epochs=100, imgsz=32)

```

=== "CLI"

```py

# Start training from a pretrained *.pt model

yolo detect train data=cifar10 model=yolov8n-cls.pt epochs=100 imgsz=32

```

Sample Images and Annotations

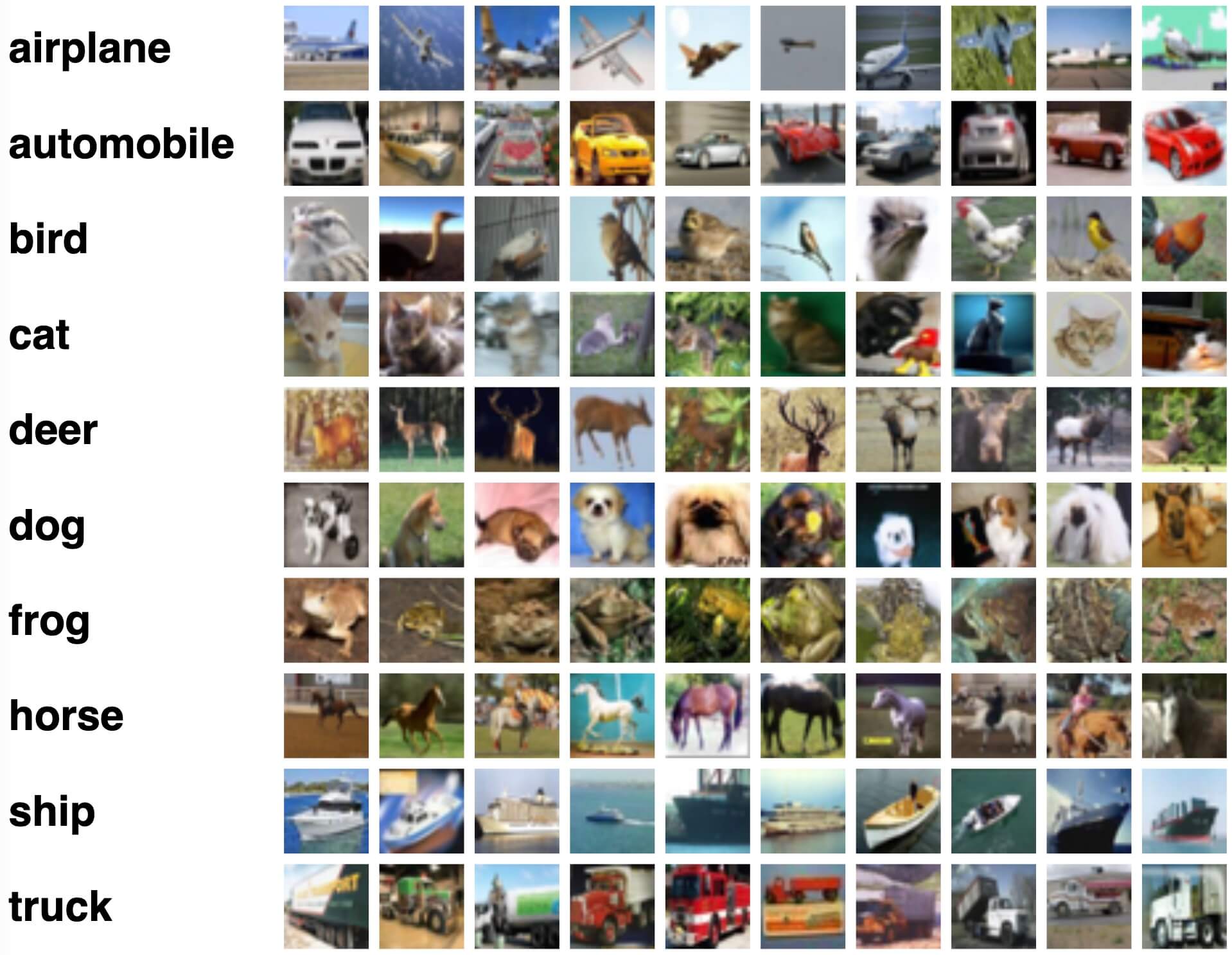

The CIFAR-10 dataset contains color images of various objects, providing a well-structured dataset for image classification tasks. Here are some examples of images from the dataset:

The example showcases the variety and complexity of the objects in the CIFAR-10 dataset, highlighting the importance of a diverse dataset for training robust image classification models.

Citations and Acknowledgments

If you use the CIFAR-10 dataset in your research or development work, please cite the following paper:

!!! Quote ""

=== "BibTeX"

```py

@TECHREPORT{Krizhevsky09learningmultiple,

author={Alex Krizhevsky},

title={Learning multiple layers of features from tiny images},

institution={},

year={2009}

}

```

We would like to acknowledge Alex Krizhevsky for creating and maintaining the CIFAR-10 dataset as a valuable resource for the machine learning and computer vision research community. For more information about the CIFAR-10 dataset and its creator, visit the CIFAR-10 dataset website.

FAQ

How can I train a YOLO model on the CIFAR-10 dataset?

To train a YOLO model on the CIFAR-10 dataset using Ultralytics, you can follow the examples provided for both Python and CLI. Here is a basic example to train your model for 100 epochs with an image size of 32x32 pixels:

!!! Example

=== "Python"

```py

from ultralytics import YOLO

# Load a model

model = YOLO("yolov8n-cls.pt") # load a pretrained model (recommended for training)

# Train the model

results = model.train(data="cifar10", epochs=100, imgsz=32)

```

=== "CLI"

```py

# Start training from a pretrained *.pt model

yolo detect train data=cifar10 model=yolov8n-cls.pt epochs=100 imgsz=32

```

For more details, refer to the model Training page.

What are the key features of the CIFAR-10 dataset?

The CIFAR-10 dataset consists of 60,000 color images divided into 10 classes. Each class contains 6,000 images, with 5,000 for training and 1,000 for testing. The images are 32x32 pixels in size and vary across the following categories:

- Airplanes

- Cars

- Birds

- Cats

- Deer

- Dogs

- Frogs

- Horses

- Ships

- Trucks

This diverse dataset is essential for training image classification models in fields such as machine learning and computer vision. For more information, visit the CIFAR-10 sections on dataset structure and applications.

Why use the CIFAR-10 dataset for image classification tasks?

The CIFAR-10 dataset is an excellent benchmark for image classification due to its diversity and structure. It contains a balanced mix of 60,000 labeled images across 10 different categories, which helps in training robust and generalized models. It is widely used for evaluating deep learning models, including Convolutional Neural Networks (CNNs) and other machine learning algorithms. The dataset is relatively small, making it suitable for quick experimentation and algorithm development. Explore its numerous applications in the applications section.

How is the CIFAR-10 dataset structured?

The CIFAR-10 dataset is structured into two main subsets:

- Training Set: Contains 50,000 images used for training machine learning models.

- Testing Set: Consists of 10,000 images for testing and benchmarking the trained models.

Each subset comprises images categorized into 10 classes, with their annotations readily available for model training and evaluation. For more detailed information, refer to the dataset structure section.

How can I cite the CIFAR-10 dataset in my research?

If you use the CIFAR-10 dataset in your research or development projects, make sure to cite the following paper:

@TECHREPORT{Krizhevsky09learningmultiple,

author={Alex Krizhevsky},

title={Learning multiple layers of features from tiny images},

institution={},

year={2009}

}

Acknowledging the dataset's creators helps support continued research and development in the field. For more details, see the citations and acknowledgments section.

What are some practical examples of using the CIFAR-10 dataset?

The CIFAR-10 dataset is often used for training image classification models, such as Convolutional Neural Networks (CNNs) and Support Vector Machines (SVMs). These models can be employed in various computer vision tasks including object detection, image recognition, and automated tagging. To see some practical examples, check the code snippets in the usage section.

comments: true

description: Explore the CIFAR-100 dataset, consisting of 60,000 32x32 color images across 100 classes. Ideal for machine learning and computer vision tasks.

keywords: CIFAR-100, dataset, machine learning, computer vision, image classification, deep learning, YOLO, training, testing, Alex Krizhevsky

CIFAR-100 Dataset

The CIFAR-100 (Canadian Institute For Advanced Research) dataset is a significant extension of the CIFAR-10 dataset, composed of 60,000 32x32 color images in 100 different classes. It was developed by researchers at the CIFAR institute, offering a more challenging dataset for more complex machine learning and computer vision tasks.

Key Features

- The CIFAR-100 dataset consists of 60,000 images, divided into 100 classes.

- Each class contains 600 images, split into 500 for training and 100 for testing.

- The images are colored and of size 32x32 pixels.

- The 100 different classes are grouped into 20 coarse categories for higher level classification.

- CIFAR-100 is commonly used for training and testing in the field of machine learning and computer vision.

Dataset Structure

The CIFAR-100 dataset is split into two subsets:

- Training Set: This subset contains 50,000 images used for training machine learning models.

- Testing Set: This subset consists of 10,000 images used for testing and benchmarking the trained models.

Applications

The CIFAR-100 dataset is extensively used for training and evaluating deep learning models in image classification tasks, such as Convolutional Neural Networks (CNNs), Support Vector Machines (SVMs), and various other machine learning algorithms. The diversity of the dataset in terms of classes and the presence of color images make it a more challenging and comprehensive dataset for research and development in the field of machine learning and computer vision.

Usage

To train a YOLO model on the CIFAR-100 dataset for 100 epochs with an image size of 32x32, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model Training page.

!!! Example "Train Example"

=== "Python"

```py

from ultralytics import YOLO

# Load a model

model = YOLO("yolov8n-cls.pt") # load a pretrained model (recommended for training)

# Train the model

results = model.train(data="cifar100", epochs=100, imgsz=32)

```

=== "CLI"

```py

# Start training from a pretrained *.pt model

yolo detect train data=cifar100 model=yolov8n-cls.pt epochs=100 imgsz=32

```

Sample Images and Annotations

The CIFAR-100 dataset contains color images of various objects, providing a well-structured dataset for image classification tasks. Here are some examples of images from the dataset:

The example showcases the variety and complexity of the objects in the CIFAR-100 dataset, highlighting the importance of a diverse dataset for training robust image classification models.

Citations and Acknowledgments

If you use the CIFAR-100 dataset in your research or development work, please cite the following paper:

!!! Quote ""

=== "BibTeX"

```py

@TECHREPORT{Krizhevsky09learningmultiple,

author={Alex Krizhevsky},

title={Learning multiple layers of features from tiny images},

institution={},

year={2009}

}

```

We would like to acknowledge Alex Krizhevsky for creating and maintaining the CIFAR-100 dataset as a valuable resource for the machine learning and computer vision research community. For more information about the CIFAR-100 dataset and its creator, visit the CIFAR-100 dataset website.

FAQ

What is the CIFAR-100 dataset and why is it significant?

The CIFAR-100 dataset is a large collection of 60,000 32x32 color images classified into 100 classes. Developed by the Canadian Institute For Advanced Research (CIFAR), it provides a challenging dataset ideal for complex machine learning and computer vision tasks. Its significance lies in the diversity of classes and the small size of the images, making it a valuable resource for training and testing deep learning models, like Convolutional Neural Networks (CNNs), using frameworks such as Ultralytics YOLO.

How do I train a YOLO model on the CIFAR-100 dataset?

You can train a YOLO model on the CIFAR-100 dataset using either Python or CLI commands. Here's how:

!!! Example "Train Example"

=== "Python"

```py

from ultralytics import YOLO

# Load a model

model = YOLO("yolov8n-cls.pt") # load a pretrained model (recommended for training)

# Train the model

results = model.train(data="cifar100", epochs=100, imgsz=32)

```

=== "CLI"

```py

# Start training from a pretrained *.pt model

yolo detect train data=cifar100 model=yolov8n-cls.pt epochs=100 imgsz=32

```

For a comprehensive list of available arguments, please refer to the model Training page.

What are the primary applications of the CIFAR-100 dataset?

The CIFAR-100 dataset is extensively used in training and evaluating deep learning models for image classification. Its diverse set of 100 classes, grouped into 20 coarse categories, provides a challenging environment for testing algorithms such as Convolutional Neural Networks (CNNs), Support Vector Machines (SVMs), and various other machine learning approaches. This dataset is a key resource in research and development within machine learning and computer vision fields.

How is the CIFAR-100 dataset structured?

The CIFAR-100 dataset is split into two main subsets:

- Training Set: Contains 50,000 images used for training machine learning models.

- Testing Set: Consists of 10,000 images used for testing and benchmarking the trained models.

Each of the 100 classes contains 600 images, with 500 images for training and 100 for testing, making it uniquely suited for rigorous academic and industrial research.

Where can I find sample images and annotations from the CIFAR-100 dataset?

The CIFAR-100 dataset includes a variety of color images of various objects, making it a structured dataset for image classification tasks. You can refer to the documentation page to see sample images and annotations. These examples highlight the dataset's diversity and complexity, important for training robust image classification models.

comments: true

description: Explore the Fashion-MNIST dataset, a modern replacement for MNIST with 70,000 Zalando article images. Ideal for benchmarking machine learning models.

keywords: Fashion-MNIST, image classification, Zalando dataset, machine learning, deep learning, CNN, dataset overview

Fashion-MNIST Dataset

The Fashion-MNIST dataset is a database of Zalando's article images—consisting of a training set of 60,000 examples and a test set of 10,000 examples. Each example is a 28x28 grayscale image, associated with a label from 10 classes. Fashion-MNIST is intended to serve as a direct drop-in replacement for the original MNIST dataset for benchmarking machine learning algorithms.

Watch: How to do Image Classification on Fashion MNIST Dataset using Ultralytics YOLOv8

Key Features

- Fashion-MNIST contains 60,000 training images and 10,000 testing images of Zalando's article images.

- The dataset comprises grayscale images of size 28x28 pixels.

- Each pixel has a single pixel-value associated with it, indicating the lightness or darkness of that pixel, with higher numbers meaning darker. This pixel-value is an integer between 0 and 255.

- Fashion-MNIST is widely used for training and testing in the field of machine learning, especially for image classification tasks.

Dataset Structure

The Fashion-MNIST dataset is split into two subsets:

- Training Set: This subset contains 60,000 images used for training machine learning models.

- Testing Set: This subset consists of 10,000 images used for testing and benchmarking the trained models.

Labels

Each training and test example is assigned to one of the following labels:

- T-shirt/top

- Trouser

- Pullover

- Dress

- Coat

- Sandal

- Shirt

- Sneaker

- Bag

- Ankle boot

Applications

The Fashion-MNIST dataset is widely used for training and evaluating deep learning models in image classification tasks, such as Convolutional Neural Networks (CNNs), Support Vector Machines (SVMs), and various other machine learning algorithms. The dataset's simple and well-structured format makes it an essential resource for researchers and practitioners in the field of machine learning and computer vision.

Usage

To train a CNN model on the Fashion-MNIST dataset for 100 epochs with an image size of 28x28, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model Training page.

!!! Example "Train Example"

=== "Python"

```py

from ultralytics import YOLO

# Load a model

model = YOLO("yolov8n-cls.pt") # load a pretrained model (recommended for training)

# Train the model

results = model.train(data="fashion-mnist", epochs=100, imgsz=28)

```

=== "CLI"

```py

# Start training from a pretrained *.pt model

yolo detect train data=fashion-mnist model=yolov8n-cls.pt epochs=100 imgsz=28

```

Sample Images and Annotations

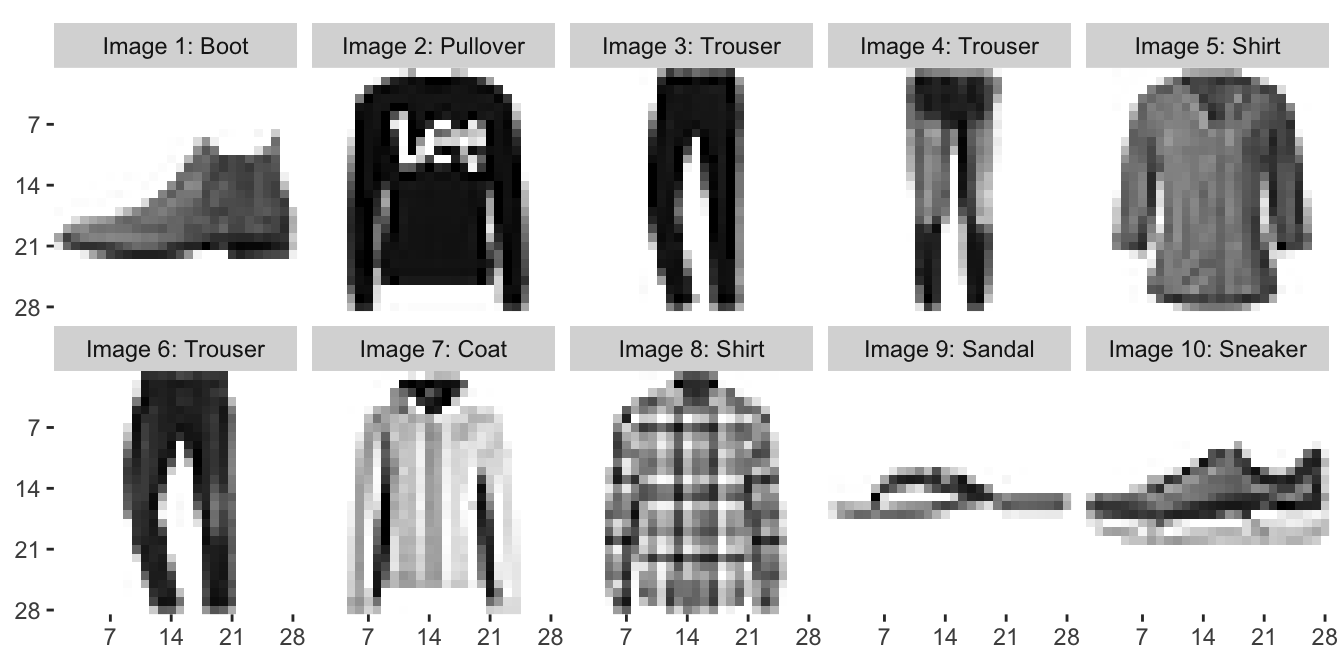

The Fashion-MNIST dataset contains grayscale images of Zalando's article images, providing a well-structured dataset for image classification tasks. Here are some examples of images from the dataset:

The example showcases the variety and complexity of the images in the Fashion-MNIST dataset, highlighting the importance of a diverse dataset for training robust image classification models.

Acknowledgments

If you use the Fashion-MNIST dataset in your research or development work, please acknowledge the dataset by linking to the GitHub repository. This dataset was made available by Zalando Research.

FAQ

What is the Fashion-MNIST dataset and how is it different from MNIST?

The Fashion-MNIST dataset is a collection of 70,000 grayscale images of Zalando's article images, intended as a modern replacement for the original MNIST dataset. It serves as a benchmark for machine learning models in the context of image classification tasks. Unlike MNIST, which contains handwritten digits, Fashion-MNIST consists of 28x28-pixel images categorized into 10 fashion-related classes, such as T-shirt/top, trouser, and ankle boot.

How can I train a YOLO model on the Fashion-MNIST dataset?

To train an Ultralytics YOLO model on the Fashion-MNIST dataset, you can use both Python and CLI commands. Here's a quick example to get you started:

!!! Example "Train Example"

=== "Python"

```py

from ultralytics import YOLO

# Load a pretrained model

model = YOLO("yolov8n-cls.pt")

# Train the model on Fashion-MNIST

results = model.train(data="fashion-mnist", epochs=100, imgsz=28)

```

=== "CLI"

```py

yolo detect train data=fashion-mnist model=yolov8n-cls.pt epochs=100 imgsz=28

```

For more detailed training parameters, refer to the Training page.

Why should I use the Fashion-MNIST dataset for benchmarking my machine learning models?

The Fashion-MNIST dataset is widely recognized in the deep learning community as a robust alternative to MNIST. It offers a more complex and varied set of images, making it an excellent choice for benchmarking image classification models. The dataset's structure, comprising 60,000 training images and 10,000 testing images, each labeled with one of 10 classes, makes it ideal for evaluating the performance of different machine learning algorithms in a more challenging context.

Can I use Ultralytics YOLO for image classification tasks like Fashion-MNIST?

Yes, Ultralytics YOLO models can be used for image classification tasks, including those involving the Fashion-MNIST dataset. YOLOv8, for example, supports various vision tasks such as detection, segmentation, and classification. To get started with image classification tasks, refer to the Classification page.

What are the key features and structure of the Fashion-MNIST dataset?

The Fashion-MNIST dataset is divided into two main subsets: 60,000 training images and 10,000 testing images. Each image is a 28x28-pixel grayscale picture representing one of 10 fashion-related classes. The simplicity and well-structured format make it ideal for training and evaluating models in machine learning and computer vision tasks. For more details on the dataset structure, see the Dataset Structure section.

How can I acknowledge the use of the Fashion-MNIST dataset in my research?

If you utilize the Fashion-MNIST dataset in your research or development projects, it's important to acknowledge it by linking to the GitHub repository. This helps in attributing the data to Zalando Research, who made the dataset available for public use.

comments: true

description: Explore the extensive ImageNet dataset and discover its role in advancing deep learning in computer vision. Access pretrained models and training examples.

keywords: ImageNet, deep learning, visual recognition, computer vision, pretrained models, YOLO, dataset, object detection, image classification

ImageNet Dataset

ImageNet is a large-scale database of annotated images designed for use in visual object recognition research. It contains over 14 million images, with each image annotated using WordNet synsets, making it one of the most extensive resources available for training deep learning models in computer vision tasks.

ImageNet Pretrained Models

| Model | size (pixels) |

acc top1 |

acc top5 |

Speed CPU ONNX (ms) |

Speed A100 TensorRT (ms) |

params (M) |

FLOPs (B) at 640 |

|---|---|---|---|---|---|---|---|

| YOLOv8n-cls | 224 | 69.0 | 88.3 | 12.9 | 0.31 | 2.7 | 4.3 |

| YOLOv8s-cls | 224 | 73.8 | 91.7 | 23.4 | 0.35 | 6.4 | 13.5 |

| YOLOv8m-cls | 224 | 76.8 | 93.5 | 85.4 | 0.62 | 17.0 | 42.7 |

| YOLOv8l-cls | 224 | 76.8 | 93.5 | 163.0 | 0.87 | 37.5 | 99.7 |

| YOLOv8x-cls | 224 | 79.0 | 94.6 | 232.0 | 1.01 | 57.4 | 154.8 |

Key Features

- ImageNet contains over 14 million high-resolution images spanning thousands of object categories.

- The dataset is organized according to the WordNet hierarchy, with each synset representing a category.

- ImageNet is widely used for training and benchmarking in the field of computer vision, particularly for image classification and object detection tasks.

- The annual ImageNet Large Scale Visual Recognition Challenge (ILSVRC) has been instrumental in advancing computer vision research.

Dataset Structure

The ImageNet dataset is organized using the WordNet hierarchy. Each node in the hierarchy represents a category, and each category is described by a synset (a collection of synonymous terms). The images in ImageNet are annotated with one or more synsets, providing a rich resource for training models to recognize various objects and their relationships.

ImageNet Large Scale Visual Recognition Challenge (ILSVRC)

The annual ImageNet Large Scale Visual Recognition Challenge (ILSVRC) has been an important event in the field of computer vision. It has provided a platform for researchers and developers to evaluate their algorithms and models on a large-scale dataset with standardized evaluation metrics. The ILSVRC has led to significant advancements in the development of deep learning models for image classification, object detection, and other computer vision tasks.

Applications

The ImageNet dataset is widely used for training and evaluating deep learning models in various computer vision tasks, such as image classification, object detection, and object localization. Some popular deep learning architectures, such as AlexNet, VGG, and ResNet, were developed and benchmarked using the ImageNet dataset.

Usage

To train a deep learning model on the ImageNet dataset for 100 epochs with an image size of 224x224, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model Training page.

!!! Example "Train Example"

=== "Python"

```py

from ultralytics import YOLO

# Load a model

model = YOLO("yolov8n-cls.pt") # load a pretrained model (recommended for training)

# Train the model

results = model.train(data="imagenet", epochs=100, imgsz=224)

```

=== "CLI"

```py

# Start training from a pretrained *.pt model

yolo train data=imagenet model=yolov8n-cls.pt epochs=100 imgsz=224

```

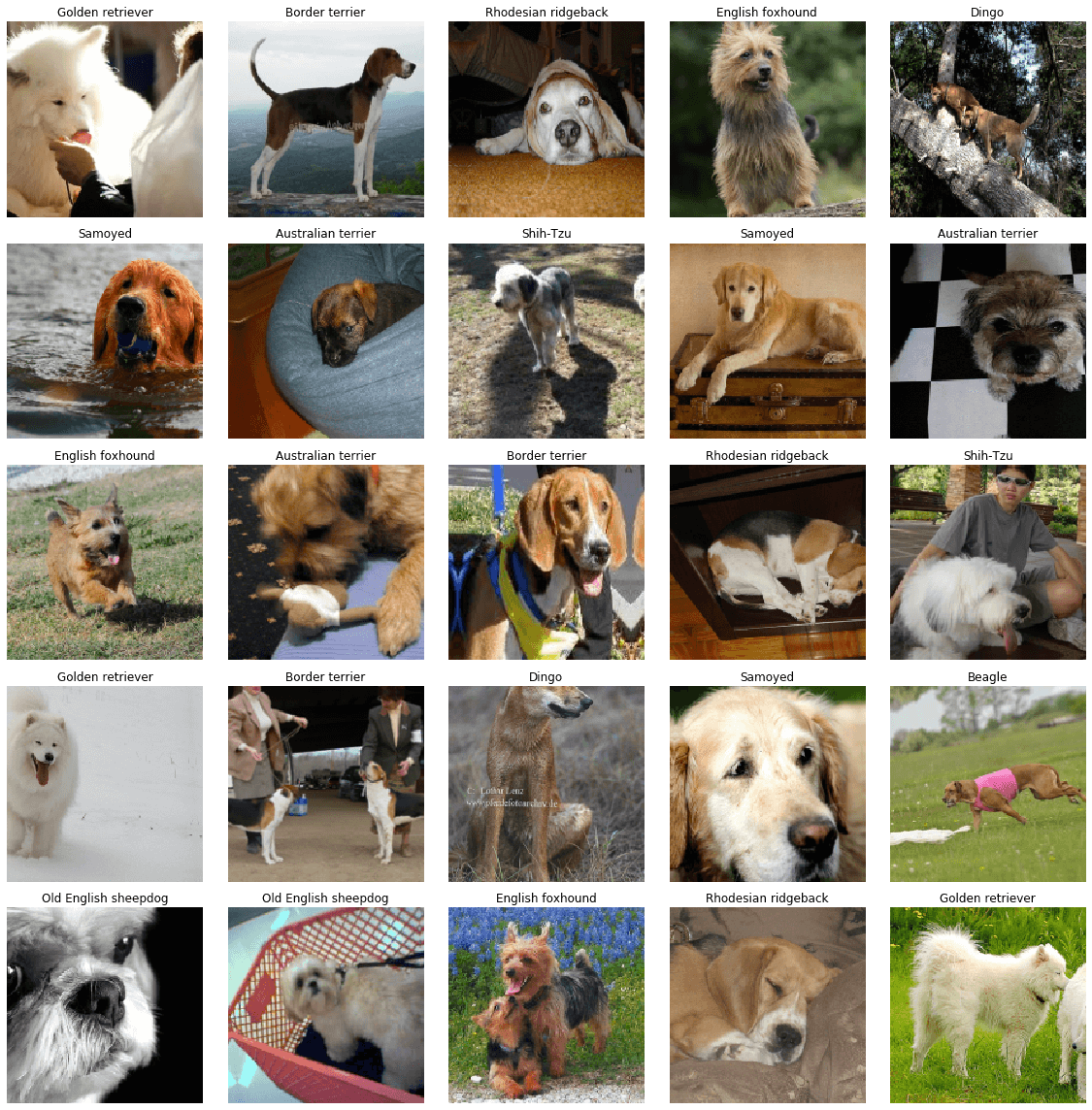

Sample Images and Annotations

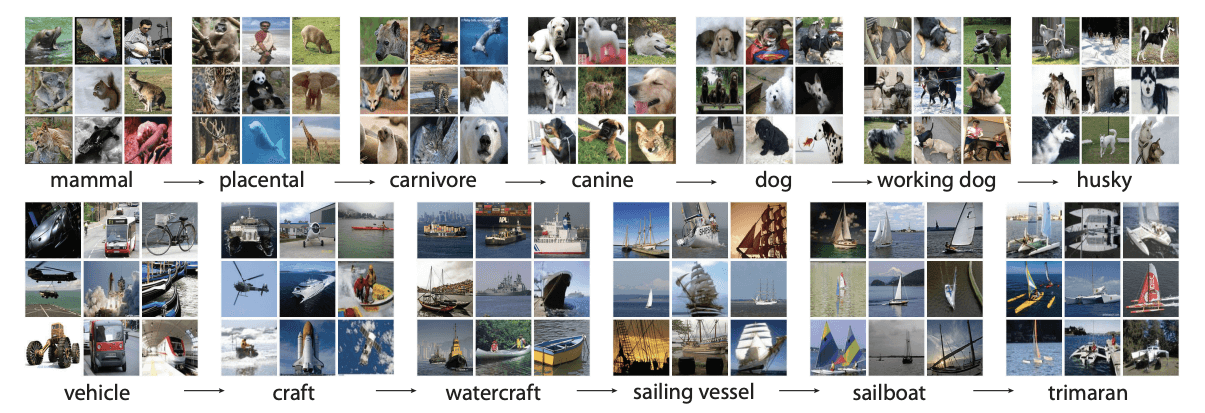

The ImageNet dataset contains high-resolution images spanning thousands of object categories, providing a diverse and extensive dataset for training and evaluating computer vision models. Here are some examples of images from the dataset:

The example showcases the variety and complexity of the images in the ImageNet dataset, highlighting the importance of a diverse dataset for training robust computer vision models.

Citations and Acknowledgments

If you use the ImageNet dataset in your research or development work, please cite the following paper:

!!! Quote ""

=== "BibTeX"

```py

@article{ILSVRC15,

author = {Olga Russakovsky and Jia Deng and Hao Su and Jonathan Krause and Sanjeev Satheesh and Sean Ma and Zhiheng Huang and Andrej Karpathy and Aditya Khosla and Michael Bernstein and Alexander C. Berg and Li Fei-Fei},

title={ImageNet Large Scale Visual Recognition Challenge},

year={2015},

journal={International Journal of Computer Vision (IJCV)},

volume={115},

number={3},

pages={211-252}

}

```

We would like to acknowledge the ImageNet team, led by Olga Russakovsky, Jia Deng, and Li Fei-Fei, for creating and maintaining the ImageNet dataset as a valuable resource for the machine learning and computer vision research community. For more information about the ImageNet dataset and its creators, visit the ImageNet website.

FAQ

What is the ImageNet dataset and how is it used in computer vision?

The ImageNet dataset is a large-scale database consisting of over 14 million high-resolution images categorized using WordNet synsets. It is extensively used in visual object recognition research, including image classification and object detection. The dataset's annotations and sheer volume provide a rich resource for training deep learning models. Notably, models like AlexNet, VGG, and ResNet have been trained and benchmarked using ImageNet, showcasing its role in advancing computer vision.

How can I use a pretrained YOLO model for image classification on the ImageNet dataset?

To use a pretrained Ultralytics YOLO model for image classification on the ImageNet dataset, follow these steps:

!!! Example "Train Example"

=== "Python"

```py

from ultralytics import YOLO

# Load a model

model = YOLO("yolov8n-cls.pt") # load a pretrained model (recommended for training)

# Train the model

results = model.train(data="imagenet", epochs=100, imgsz=224)

```

=== "CLI"

```py

# Start training from a pretrained *.pt model

yolo train data=imagenet model=yolov8n-cls.pt epochs=100 imgsz=224

```

For more in-depth training instruction, refer to our Training page.

Why should I use the Ultralytics YOLOv8 pretrained models for my ImageNet dataset projects?

Ultralytics YOLOv8 pretrained models offer state-of-the-art performance in terms of speed and accuracy for various computer vision tasks. For example, the YOLOv8n-cls model, with a top-1 accuracy of 69.0% and a top-5 accuracy of 88.3%, is optimized for real-time applications. Pretrained models reduce the computational resources required for training from scratch and accelerate development cycles. Learn more about the performance metrics of YOLOv8 models in the ImageNet Pretrained Models section.

How is the ImageNet dataset structured, and why is it important?

The ImageNet dataset is organized using the WordNet hierarchy, where each node in the hierarchy represents a category described by a synset (a collection of synonymous terms). This structure allows for detailed annotations, making it ideal for training models to recognize a wide variety of objects. The diversity and annotation richness of ImageNet make it a valuable dataset for developing robust and generalizable deep learning models. More about this organization can be found in the Dataset Structure section.

What role does the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) play in computer vision?

The annual ImageNet Large Scale Visual Recognition Challenge (ILSVRC) has been pivotal in driving advancements in computer vision by providing a competitive platform for evaluating algorithms on a large-scale, standardized dataset. It offers standardized evaluation metrics, fostering innovation and development in areas such as image classification, object detection, and image segmentation. The challenge has continuously pushed the boundaries of what is possible with deep learning and computer vision technologies.

comments: true

description: Discover ImageNet10 a compact version of ImageNet for rapid model testing and CI checks. Perfect for quick evaluations in computer vision tasks.

keywords: ImageNet10, ImageNet, Ultralytics, CI tests, sanity checks, training pipelines, computer vision, deep learning, dataset

ImageNet10 Dataset

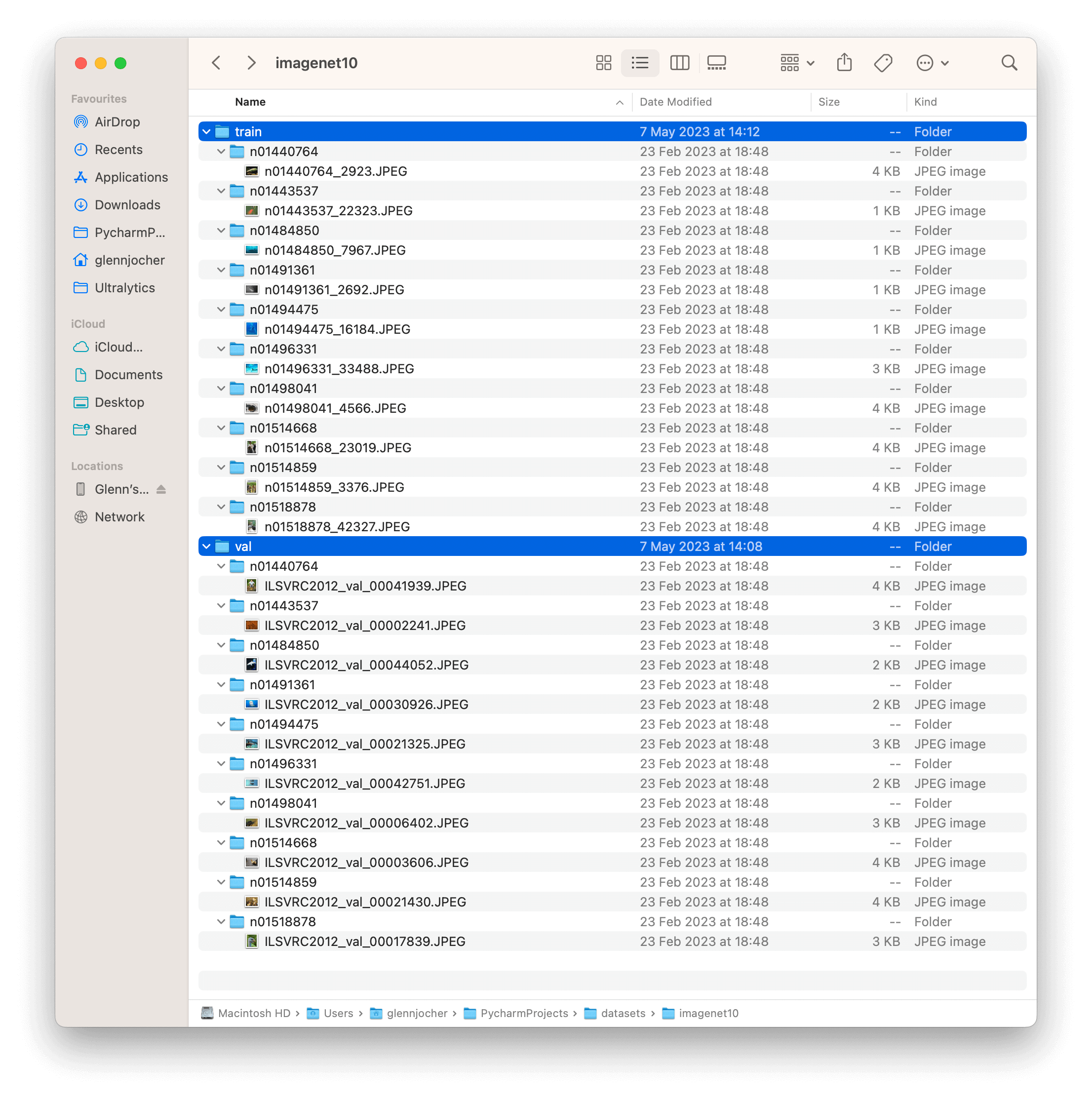

The ImageNet10 dataset is a small-scale subset of the ImageNet database, developed by Ultralytics and designed for CI tests, sanity checks, and fast testing of training pipelines. This dataset is composed of the first image in the training set and the first image from the validation set of the first 10 classes in ImageNet. Although significantly smaller, it retains the structure and diversity of the original ImageNet dataset.

Key Features

- ImageNet10 is a compact version of ImageNet, with 20 images representing the first 10 classes of the original dataset.

- The dataset is organized according to the WordNet hierarchy, mirroring the structure of the full ImageNet dataset.

- It is ideally suited for CI tests, sanity checks, and rapid testing of training pipelines in computer vision tasks.

- Although not designed for model benchmarking, it can provide a quick indication of a model's basic functionality and correctness.

Dataset Structure

The ImageNet10 dataset, like the original ImageNet, is organized using the WordNet hierarchy. Each of the 10 classes in ImageNet10 is described by a synset (a collection of synonymous terms). The images in ImageNet10 are annotated with one or more synsets, providing a compact resource for testing models to recognize various objects and their relationships.

Applications

The ImageNet10 dataset is useful for quickly testing and debugging computer vision models and pipelines. Its small size allows for rapid iteration, making it ideal for continuous integration tests and sanity checks. It can also be used for fast preliminary testing of new models or changes to existing models before moving on to full-scale testing with the complete ImageNet dataset.

Usage

To test a deep learning model on the ImageNet10 dataset with an image size of 224x224, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model Training page.

!!! Example "Test Example"

=== "Python"

```py

from ultralytics import YOLO

# Load a model

model = YOLO("yolov8n-cls.pt") # load a pretrained model (recommended for training)

# Train the model

results = model.train(data="imagenet10", epochs=5, imgsz=224)

```

=== "CLI"

```py

# Start training from a pretrained *.pt model

yolo train data=imagenet10 model=yolov8n-cls.pt epochs=5 imgsz=224

```

Sample Images and Annotations

The ImageNet10 dataset contains a subset of images from the original ImageNet dataset. These images are chosen to represent the first 10 classes in the dataset, providing a diverse yet compact dataset for quick testing and evaluation.

The example showcases the variety and complexity of the images in the ImageNet10 dataset, highlighting its usefulness for sanity checks and quick testing of computer vision models.

The example showcases the variety and complexity of the images in the ImageNet10 dataset, highlighting its usefulness for sanity checks and quick testing of computer vision models.

Citations and Acknowledgments

If you use the ImageNet10 dataset in your research or development work, please cite the original ImageNet paper:

!!! Quote ""

=== "BibTeX"

```py

@article{ILSVRC15,

author = {Olga Russakovsky and Jia Deng and Hao Su and Jonathan Krause and Sanjeev Satheesh and Sean Ma and Zhiheng Huang and Andrej Karpathy and Aditya Khosla and Michael Bernstein and Alexander C. Berg and Li Fei-Fei},

title={ImageNet Large Scale Visual Recognition Challenge},

year={2015},

journal={International Journal of Computer Vision (IJCV)},

volume={115},

number={3},

pages={211-252}

}

```

We would like to acknowledge the ImageNet team, led by Olga Russakovsky, Jia Deng, and Li Fei-Fei, for creating and maintaining the ImageNet dataset. The ImageNet10 dataset, while a compact subset, is a valuable resource for quick testing and debugging in the machine learning and computer vision research community. For more information about the ImageNet dataset and its creators, visit the ImageNet website.

FAQ

What is the ImageNet10 dataset and how is it different from the full ImageNet dataset?

The ImageNet10 dataset is a compact subset of the original ImageNet database, created by Ultralytics for rapid CI tests, sanity checks, and training pipeline evaluations. ImageNet10 comprises only 20 images, representing the first image in the training and validation sets of the first 10 classes in ImageNet. Despite its small size, it maintains the structure and diversity of the full dataset, making it ideal for quick testing but not for benchmarking models.

How can I use the ImageNet10 dataset to test my deep learning model?

To test your deep learning model on the ImageNet10 dataset with an image size of 224x224, use the following code snippets.

!!! Example "Test Example"

=== "Python"

```py

from ultralytics import YOLO

# Load a model

model = YOLO("yolov8n-cls.pt") # load a pretrained model (recommended for training)

# Train the model

results = model.train(data="imagenet10", epochs=5, imgsz=224)

```

=== "CLI"

```py

# Start training from a pretrained *.pt model

yolo train data=imagenet10 model=yolov8n-cls.pt epochs=5 imgsz=224

```

Refer to the Training page for a comprehensive list of available arguments.

Why should I use the ImageNet10 dataset for CI tests and sanity checks?

The ImageNet10 dataset is designed specifically for CI tests, sanity checks, and quick evaluations in deep learning pipelines. Its small size allows for rapid iteration and testing, making it perfect for continuous integration processes where speed is crucial. By maintaining the structural complexity and diversity of the original ImageNet dataset, ImageNet10 provides a reliable indication of a model's basic functionality and correctness without the overhead of processing a large dataset.

What are the main features of the ImageNet10 dataset?

The ImageNet10 dataset has several key features:

- Compact Size: With only 20 images, it allows for rapid testing and debugging.

- Structured Organization: Follows the WordNet hierarchy, similar to the full ImageNet dataset.

- CI and Sanity Checks: Ideally suited for continuous integration tests and sanity checks.

- Not for Benchmarking: While useful for quick model evaluations, it is not designed for extensive benchmarking.

Where can I download the ImageNet10 dataset?

You can download the ImageNet10 dataset from the Ultralytics GitHub releases page. For more detailed information about its structure and applications, refer to the ImageNet10 Dataset page.

comments: true

description: Explore the ImageNette dataset, a subset of ImageNet with 10 classes for efficient training and evaluation of image classification models. Ideal for ML and CV projects.

keywords: ImageNette dataset, ImageNet subset, image classification, machine learning, deep learning, YOLO, Convolutional Neural Networks, ML dataset, education, training

ImageNette Dataset

The ImageNette dataset is a subset of the larger Imagenet dataset, but it only includes 10 easily distinguishable classes. It was created to provide a quicker, easier-to-use version of Imagenet for software development and education.

Key Features

- ImageNette contains images from 10 different classes such as tench, English springer, cassette player, chain saw, church, French horn, garbage truck, gas pump, golf ball, parachute.

- The dataset comprises colored images of varying dimensions.

- ImageNette is widely used for training and testing in the field of machine learning, especially for image classification tasks.

Dataset Structure

The ImageNette dataset is split into two subsets:

- Training Set: This subset contains several thousands of images used for training machine learning models. The exact number varies per class.

- Validation Set: This subset consists of several hundreds of images used for validating and benchmarking the trained models. Again, the exact number varies per class.

Applications

The ImageNette dataset is widely used for training and evaluating deep learning models in image classification tasks, such as Convolutional Neural Networks (CNNs), and various other machine learning algorithms. The dataset's straightforward format and well-chosen classes make it a handy resource for both beginner and experienced practitioners in the field of machine learning and computer vision.

Usage

To train a model on the ImageNette dataset for 100 epochs with a standard image size of 224x224, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model Training page.

!!! Example "Train Example"

=== "Python"

```py

from ultralytics import YOLO

# Load a model

model = YOLO("yolov8n-cls.pt") # load a pretrained model (recommended for training)

# Train the model

results = model.train(data="imagenette", epochs=100, imgsz=224)

```

=== "CLI"

```py

# Start training from a pretrained *.pt model

yolo detect train data=imagenette model=yolov8n-cls.pt epochs=100 imgsz=224

```

Sample Images and Annotations

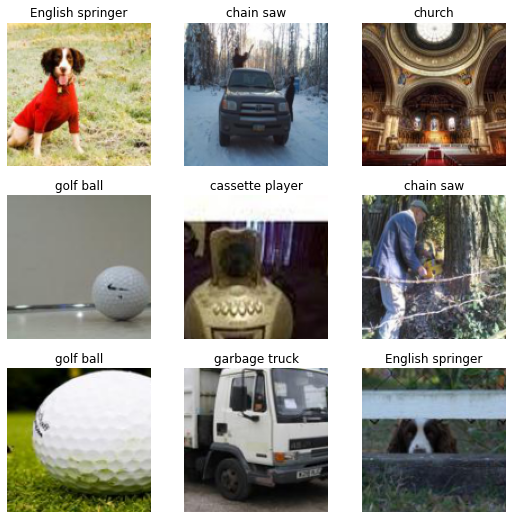

The ImageNette dataset contains colored images of various objects and scenes, providing a diverse dataset for image classification tasks. Here are some examples of images from the dataset:

The example showcases the variety and complexity of the images in the ImageNette dataset, highlighting the importance of a diverse dataset for training robust image classification models.

ImageNette160 and ImageNette320

For faster prototyping and training, the ImageNette dataset is also available in two reduced sizes: ImageNette160 and ImageNette320. These datasets maintain the same classes and structure as the full ImageNette dataset, but the images are resized to a smaller dimension. As such, these versions of the dataset are particularly useful for preliminary model testing, or when computational resources are limited.

To use these datasets, simply replace 'imagenette' with 'imagenette160' or 'imagenette320' in the training command. The following code snippets illustrate this:

!!! Example "Train Example with ImageNette160"

=== "Python"

```py

from ultralytics import YOLO

# Load a model

model = YOLO("yolov8n-cls.pt") # load a pretrained model (recommended for training)

# Train the model with ImageNette160

results = model.train(data="imagenette160", epochs=100, imgsz=160)

```

=== "CLI"

```py

# Start training from a pretrained *.pt model with ImageNette160

yolo detect train data=imagenette160 model=yolov8n-cls.pt epochs=100 imgsz=160

```

!!! Example "Train Example with ImageNette320"

=== "Python"

```py

from ultralytics import YOLO

# Load a model

model = YOLO("yolov8n-cls.pt") # load a pretrained model (recommended for training)

# Train the model with ImageNette320

results = model.train(data="imagenette320", epochs=100, imgsz=320)

```

=== "CLI"

```py

# Start training from a pretrained *.pt model with ImageNette320

yolo detect train data=imagenette320 model=yolov8n-cls.pt epochs=100 imgsz=320

```

These smaller versions of the dataset allow for rapid iterations during the development process while still providing valuable and realistic image classification tasks.

Citations and Acknowledgments

If you use the ImageNette dataset in your research or development work, please acknowledge it appropriately. For more information about the ImageNette dataset, visit the ImageNette dataset GitHub page.

FAQ

What is the ImageNette dataset?

The ImageNette dataset is a simplified subset of the larger ImageNet dataset, featuring only 10 easily distinguishable classes such as tench, English springer, and French horn. It was created to offer a more manageable dataset for efficient training and evaluation of image classification models. This dataset is particularly useful for quick software development and educational purposes in machine learning and computer vision.

How can I use the ImageNette dataset for training a YOLO model?

To train a YOLO model on the ImageNette dataset for 100 epochs, you can use the following commands. Make sure to have the Ultralytics YOLO environment set up.

!!! Example "Train Example"

=== "Python"

```py

from ultralytics import YOLO

# Load a model

model = YOLO("yolov8n-cls.pt") # load a pretrained model (recommended for training)

# Train the model

results = model.train(data="imagenette", epochs=100, imgsz=224)

```

=== "CLI"

```py

# Start training from a pretrained *.pt model