
YOLOv8 Source Code Analysis (Part 15)


description: Discover FastSAM, a real-time CNN-based solution for segmenting any object in an image. Efficient, competitive, and ideal for various vision tasks.

keywords: FastSAM, Fast Segment Anything Model, Ultralytics, real-time segmentation, CNN, YOLOv8-seg, object segmentation, image processing, computer vision

Fast Segment Anything Model (FastSAM)

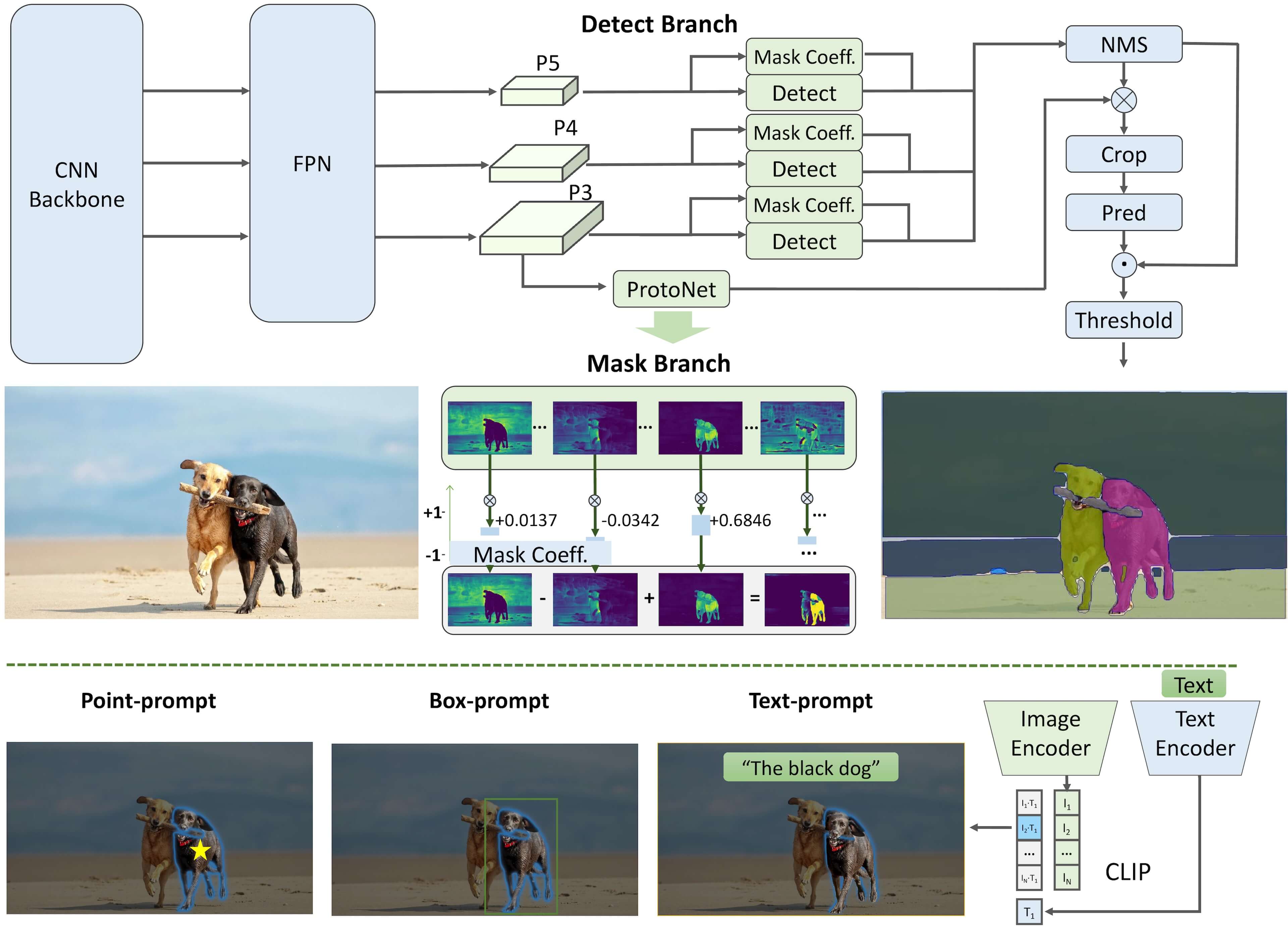

The Fast Segment Anything Model (FastSAM) is a novel, real-time CNN-based solution for the Segment Anything task. This task is designed to segment any object within an image based on various possible user interaction prompts. FastSAM significantly reduces computational demands while maintaining competitive performance, making it a practical choice for a variety of vision tasks.


Model Architecture

Overview

FastSAM is designed to address the limitations of the Segment Anything Model (SAM), a heavy Transformer model with substantial computational resource requirements. FastSAM decouples the segment anything task into two sequential stages: all-instance segmentation and prompt-guided selection. The first stage uses YOLOv8-seg to produce segmentation masks for all instances in the image. The second stage outputs the region of interest corresponding to the prompt.
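The second stage can be illustrated with a minimal NumPy sketch. It assumes the first stage has already produced a boolean mask array of shape (N, H, W), that box prompts are xyxy pixel coordinates, and that point prompts are (x, y) pairs with label 1 for foreground and 0 for background. The selection rules (highest box/mask IoU; union of masks hit by foreground points minus masks hit by background points) are a simplified illustration rather than FastSAM's exact implementation, and `select_by_box` / `select_by_points` are hypothetical helper names.

```py
import numpy as np


def box_mask_iou(masks: np.ndarray, box: tuple) -> np.ndarray:
    """IoU between an xyxy box region and each boolean mask in an (N, H, W) array."""
    x1, y1, x2, y2 = box
    box_area = (x2 - x1) * (y2 - y1)
    inside = masks[:, y1:y2, x1:x2].sum(axis=(1, 2))  # mask pixels inside the box
    mask_area = masks.sum(axis=(1, 2))
    union = box_area + mask_area - inside
    return inside / np.maximum(union, 1)


def select_by_box(masks: np.ndarray, box: tuple) -> int:
    """Index of the mask that best overlaps the box prompt."""
    return int(np.argmax(box_mask_iou(masks, box)))


def select_by_points(masks: np.ndarray, points: list, labels: list) -> np.ndarray:
    """Union of masks containing a foreground point (label 1),
    minus any mask containing a background point (label 0)."""
    selected = np.zeros(masks.shape[1:], dtype=bool)
    removed = np.zeros(masks.shape[1:], dtype=bool)
    for (x, y), label in zip(points, labels):
        hit = masks[:, y, x]  # which masks contain this (x, y) point
        if label == 1:
            selected |= masks[hit].any(axis=0)
        else:
            removed |= masks[hit].any(axis=0)
    return selected & ~removed


# Toy "everything" output: two instance masks on an 8x8 image
masks = np.zeros((2, 8, 8), dtype=bool)
masks[0, 1:4, 1:4] = True  # 3x3 object near the top-left
masks[1, 4:8, 4:8] = True  # 4x4 object in the bottom-right

print(select_by_box(masks, (4, 4, 8, 8)))
print(select_by_points(masks, [(2, 2)], [1]).sum())
```

Because the expensive all-instance pass runs once, each prompt only costs a cheap lookup over the existing masks, which is what makes repeated prompting fast.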

Key Features

- Real-time Solution: By leveraging the computational efficiency of CNNs, FastSAM provides a real-time solution for the segment anything task, making it valuable for industrial applications that require quick results.

- Efficiency and Performance: FastSAM significantly reduces computational and resource demands without compromising performance quality. It achieves performance comparable to SAM with drastically fewer computational resources, enabling real-time applications.

- Prompt-guided Segmentation: FastSAM can segment any object within an image guided by various user interaction prompts, providing flexibility and adaptability in different scenarios.

- Based on YOLOv8-seg: FastSAM is based on YOLOv8-seg, an object detector equipped with an instance segmentation branch. This allows it to effectively produce the segmentation masks of all instances in an image.

- Competitive Results on Benchmarks: On the object proposal task on MS COCO, FastSAM achieves high scores at a significantly faster speed than SAM on a single NVIDIA RTX 3090, demonstrating its efficiency and capability.

- Practical Applications: The proposed approach provides a new, practical solution for a large number of vision tasks at very high speed, tens or hundreds of times faster than current methods.

- Model Compression Feasibility: FastSAM demonstrates a feasible path to significantly reducing computational effort by introducing an artificial prior into the model structure, opening new possibilities for large model architectures for general vision tasks.

Available Models, Supported Tasks, and Operating Modes

This table presents the available models with their specific pre-trained weights, the tasks they support, and their compatibility with different operating modes like Inference, Validation, Training, and Export, indicated by ✅ emojis for supported modes and ❌ emojis for unsupported modes.

| Model Type | Pre-trained Weights | Tasks Supported | Inference | Validation | Training | Export |
|---|---|---|---|---|---|---|
| FastSAM-s | FastSAM-s.pt | Instance Segmentation | ✅ | ❌ | ❌ | ✅ |
| FastSAM-x | FastSAM-x.pt | Instance Segmentation | ✅ | ❌ | ❌ | ✅ |

Usage Examples

The FastSAM models are easy to integrate into your Python applications. Ultralytics provides user-friendly Python API and CLI commands to streamline development.

Predict Usage

To segment objects in an image, use the predict method as shown below:

!!! Example

=== "Python"

```py
from ultralytics import FastSAM

# Define an inference source
source = "path/to/bus.jpg"

# Create a FastSAM model
model = FastSAM("FastSAM-s.pt")  # or FastSAM-x.pt

# Run inference on an image
everything_results = model(source, device="cpu", retina_masks=True, imgsz=1024, conf=0.4, iou=0.9)

# Run inference with bboxes prompt
results = model(source, bboxes=[439, 437, 524, 709])

# Run inference with points prompt
results = model(source, points=[[200, 200]], labels=[1])

# Run inference with texts prompt
results = model(source, texts="a photo of a dog")

# Run inference with bboxes and points and texts prompt at the same time
results = model(source, bboxes=[439, 437, 524, 709], points=[[200, 200]], labels=[1], texts="a photo of a dog")
```

=== "CLI"

```bash
# Load a FastSAM model and segment everything with it
yolo segment predict model=FastSAM-s.pt source=path/to/bus.jpg imgsz=640
```

This snippet demonstrates the simplicity of loading a pre-trained model and running a prediction on an image.

!!! Example "FastSAMPredictor example"

This approach lets you run inference on an image once to obtain all segment `results`, and then run prompt inference multiple times without re-running the model.

=== "Prompt inference"

```py
from ultralytics.models.fastsam import FastSAMPredictor

# Create FastSAMPredictor
overrides = dict(conf=0.25, task="segment", mode="predict", model="FastSAM-s.pt", save=False, imgsz=1024)
predictor = FastSAMPredictor(overrides=overrides)

# Segment everything
everything_results = predictor("ultralytics/assets/bus.jpg")

# Prompt inference
bbox_results = predictor.prompt(everything_results, bboxes=[[200, 200, 300, 300]])
point_results = predictor.prompt(everything_results, points=[200, 200])
text_results = predictor.prompt(everything_results, texts="a photo of a dog")
```

!!! Note

All the `results` returned in the examples above are [Results](../modes/predict.md#working-with-results) objects, which provide easy access to the predicted masks and the source image.

Val Usage

Validation of the model on a dataset can be done as follows:

!!! Example

=== "Python"

```py
from ultralytics import FastSAM

# Create a FastSAM model
model = FastSAM("FastSAM-s.pt")  # or FastSAM-x.pt

# Validate the model
results = model.val(data="coco8-seg.yaml")
```

=== "CLI"

```bash
# Load a FastSAM model and validate it on the COCO8-seg example dataset at image size 640
yolo segment val model=FastSAM-s.pt data=coco8-seg.yaml imgsz=640
```

Please note that FastSAM only supports detection and segmentation of a single class of object. This means it will recognize and segment all objects as the same class. Therefore, when preparing the dataset, you need to convert all object category IDs to 0.
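If your labels are already in YOLO format (one object per line, class ID first, followed by normalized coordinates), a short script can collapse every class ID to 0. This is a minimal sketch under that assumption; `relabel_to_single_class` is a hypothetical helper, and because it overwrites files in place it should be run on a copy of the dataset.

```py
from pathlib import Path


def relabel_to_single_class(label_dir: str) -> int:
    """Set the class ID of every object in YOLO-format label files to 0.

    Each line of a YOLO label file starts with the class ID, followed by the
    normalized coordinates. Files are overwritten in place; returns the number
    of object lines rewritten.
    """
    rewritten = 0
    for label_file in sorted(Path(label_dir).glob("*.txt")):
        new_lines = []
        for line in label_file.read_text().splitlines():
            fields = line.split()
            if not fields:
                continue  # drop blank lines
            fields[0] = "0"  # collapse every category to class 0
            new_lines.append(" ".join(fields))
            rewritten += 1
        label_file.write_text(("\n".join(new_lines) + "\n") if new_lines else "")
    return rewritten


# Example (hypothetical path; run on a copy of your dataset):
# relabel_to_single_class("path/to/dataset/labels/train")
```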

Track Usage

To perform object tracking on a video, use the track method as shown below:

!!! Example

=== "Python"

```py
from ultralytics import FastSAM

# Create a FastSAM model
model = FastSAM("FastSAM-s.pt")  # or FastSAM-x.pt

# Track with a FastSAM model on a video
results = model.track(source="path/to/video.mp4", imgsz=640)
```

=== "CLI"

```bash
yolo segment track model=FastSAM-s.pt source="path/to/video/file.mp4" imgsz=640
```

FastSAM Official Usage

FastSAM is also available directly from the https://github.com/CASIA-IVA-Lab/FastSAM repository. Here is a brief overview of the typical steps you might take to use FastSAM:

Installation

- Clone the FastSAM repository: `git clone https://github.com/CASIA-IVA-Lab/FastSAM.git`

- Create and activate a Conda environment with Python 3.9: `conda create -n FastSAM python=3.9`, then `conda activate FastSAM`

- Navigate to the cloned repository and install the required packages: `cd FastSAM`, then `pip install -r requirements.txt`

- Install the CLIP model: `pip install git+https://github.com/ultralytics/CLIP.git`

Example Usage

- Download a model checkpoint.

- Use FastSAM for inference. Example commands:

    - Segment everything in an image: `python Inference.py --model_path ./weights/FastSAM.pt --img_path ./images/dogs.jpg`

    - Segment specific objects using a text prompt: `python Inference.py --model_path ./weights/FastSAM.pt --img_path ./images/dogs.jpg --text_prompt "the yellow dog"`

    - Segment objects within a bounding box (provide box coordinates in xywh format): `python Inference.py --model_path ./weights/FastSAM.pt --img_path ./images/dogs.jpg --box_prompt "[570,200,230,400]"`

    - Segment objects near specific points: `python Inference.py --model_path ./weights/FastSAM.pt --img_path ./images/dogs.jpg --point_prompt "[[520,360],[620,300]]" --point_label "[1,0]"`
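The official repository takes box prompts as xywh strings, whereas the Ultralytics examples earlier on this page pass `bboxes` as corner coordinates such as `[439, 437, 524, 709]`. The sketch below translates the official command-line prompt strings into keyword arguments for an Ultralytics call. It assumes that xywh means top-left x, top-left y, width, height, and that Ultralytics expects xyxy pixel coordinates; `xywh_to_xyxy` and `official_to_ultralytics` are hypothetical helper names.

```py
import json


def xywh_to_xyxy(box):
    """Convert [x, y, w, h] (top-left corner plus size) to [x1, y1, x2, y2]."""
    x, y, w, h = box
    return [x, y, x + w, y + h]


def official_to_ultralytics(box_prompt=None, point_prompt=None, point_label=None):
    """Translate official-repo prompt strings into Ultralytics-style keyword arguments."""
    kwargs = {}
    if box_prompt is not None:
        kwargs["bboxes"] = xywh_to_xyxy(json.loads(box_prompt))
    if point_prompt is not None:
        kwargs["points"] = json.loads(point_prompt)
        # Default every point to foreground (label 1) when no labels are given
        kwargs["labels"] = json.loads(point_label) if point_label else [1] * len(kwargs["points"])
    return kwargs


print(official_to_ultralytics(box_prompt="[570,200,230,400]"))
print(official_to_ultralytics(point_prompt="[[520,360],[620,300]]", point_label="[1,0]"))
```

The returned dictionary can then be unpacked into a call such as `model(source, **kwargs)`.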

Additionally, you can try FastSAM through a Colab demo or the Hugging Face web demo for a visual experience.

Citations and Acknowledgements

We would like to acknowledge the FastSAM authors for their significant contributions in the field of real-time instance segmentation:

!!! Quote ""

=== "BibTeX"

```bibtex
@misc{zhao2023fast,
      title={Fast Segment Anything},
      author={Xu Zhao and Wenchao Ding and Yongqi An and Yinglong Du and Tao Yu and Min Li and Ming Tang and Jinqiao Wang},
      year={2023},
      eprint={2306.12156},
      archivePrefix={arXiv},
      primaryClass={cs.CV}
}
```

The original FastSAM paper can be found on arXiv. The authors have made their work publicly available, and the codebase can be accessed on GitHub. We appreciate their efforts in advancing the field and making their work accessible to the broader community.

FAQ

What is FastSAM and how does it differ from SAM?

FastSAM, short for Fast Segment Anything Model, is a real-time convolutional neural network (CNN)-based solution designed to reduce computational demands while maintaining high performance in object segmentation tasks. Unlike the Segment Anything Model (SAM), which uses a heavier Transformer-based architecture, FastSAM works in two stages: all-instance segmentation with Ultralytics YOLOv8-seg, followed by prompt-guided selection.

How does FastSAM achieve real-time segmentation performance?

FastSAM achieves real-time segmentation by decoupling the task into two stages: all-instance segmentation with YOLOv8-seg, followed by prompt-guided selection. By utilizing the computational efficiency of CNNs, FastSAM significantly reduces computational and resource demands while maintaining competitive performance, making it suitable for applications that require quick results.

What are the practical applications of FastSAM?

FastSAM is practical for a variety of computer vision tasks that require real-time segmentation performance. Applications include:

- Industrial automation for quality control and assurance

- Real-time video analysis for security and surveillance

- Autonomous vehicles for object detection and segmentation

- Medical imaging for precise and quick segmentation tasks

Its ability to handle various user interaction prompts makes FastSAM adaptable and flexible for diverse scenarios.

How do I use the FastSAM model for inference in Python?

To use FastSAM for inference in Python, you can follow the example below:

```py
from ultralytics import FastSAM

# Define an inference source
source = "path/to/bus.jpg"

# Create a FastSAM model
model = FastSAM("FastSAM-s.pt")  # or FastSAM-x.pt

# Run inference on an image
everything_results = model(source, device="cpu", retina_masks=True, imgsz=1024, conf=0.4, iou=0.9)

# Run inference with bboxes prompt
results = model(source, bboxes=[439, 437, 524, 709])

# Run inference with points prompt
results = model(source, points=[[200, 200]], labels=[1])

# Run inference with texts prompt
results = model(source, texts="a photo of a dog")

# Run inference with bboxes and points and texts prompt at the same time
results = model(source, bboxes=[439, 437, 524, 709], points=[[200, 200]], labels=[1], texts="a photo of a dog")
```

For more details on inference methods, check the Predict Usage section of the documentation.

What types of prompts does FastSAM support for segmentation tasks?

FastSAM supports multiple prompt types for guiding the segmentation tasks:

- Everything Prompt: Generates segmentation for all visible objects.

- Bounding Box (BBox) Prompt: Segments objects within a specified bounding box.

- Text Prompt: Uses a descriptive text to segment objects matching the description.

- Point Prompt: Segments objects near specific user-defined points.

This flexibility allows FastSAM to adapt to a wide range of user interaction scenarios, enhancing its utility across different applications. For more information on using these prompts, refer to the Key Features section.
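The text prompt depends on CLIP, which the official installation steps install separately. Conceptually, each candidate mask's image crop and the text are embedded, and the mask with the highest cosine similarity is selected. The sketch below shows only that final ranking step, assuming the embeddings have already been computed (the toy 2-D vectors stand in for real CLIP features); it is an illustration, not FastSAM's code, and `select_by_text` is a hypothetical name.

```py
import numpy as np


def select_by_text(crop_embeddings: np.ndarray, text_embedding: np.ndarray) -> int:
    """Index of the candidate mask whose crop embedding best matches the text.

    crop_embeddings: (N, D) array, one embedding per mask crop.
    text_embedding:  (D,) array for the text prompt.
    """
    crops = crop_embeddings / np.linalg.norm(crop_embeddings, axis=1, keepdims=True)
    text = text_embedding / np.linalg.norm(text_embedding)
    cosine = crops @ text  # cosine similarity of each crop with the text
    return int(np.argmax(cosine))


# Toy 2-D embeddings standing in for real CLIP features
crop_embeddings = np.array([[1.0, 0.0], [0.6, 0.8]])
print(select_by_text(crop_embeddings, np.array([0.5, 0.9])))
```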


description: Discover a variety of models supported by Ultralytics, including YOLOv3 to YOLOv10, NAS, SAM, and RT-DETR for detection, segmentation, and more.

keywords: Ultralytics, supported models, YOLOv3, YOLOv4, YOLOv5, YOLOv6, YOLOv7, YOLOv8, YOLOv9, YOLOv10, SAM, NAS, RT-DETR, object detection, image segmentation, classification, pose estimation, multi-object tracking

Models Supported by Ultralytics

Welcome to Ultralytics' model documentation! We offer support for a wide range of models, each tailored to specific tasks like object detection, instance segmentation, image classification, pose estimation, and multi-object tracking. If you're interested in contributing your model architecture to Ultralytics, check out our Contributing Guide.

Featured Models

Here are some of the key models supported:

- YOLOv3: The third iteration of the YOLO model family, originally by Joseph Redmon, known for its efficient real-time object detection capabilities.

- YOLOv4: A darknet-native update to YOLOv3, released by Alexey Bochkovskiy in 2020.

- YOLOv5: An improved version of the YOLO architecture by Ultralytics, offering better performance and speed trade-offs compared to previous versions.

- YOLOv6: Released by Meituan in 2022, and in use in many of the company's autonomous delivery robots.

- YOLOv7: Updated YOLO models released in 2022 by the authors of YOLOv4.

- YOLOv8: Ultralytics' version of the YOLO family, featuring enhanced capabilities such as instance segmentation, pose/keypoints estimation, and classification.

- YOLOv9: An experimental model trained on the Ultralytics YOLOv5 codebase implementing Programmable Gradient Information (PGI).

- YOLOv10: By Tsinghua University, featuring NMS-free training and efficiency-accuracy driven architecture, delivering state-of-the-art performance and latency.

- Segment Anything Model (SAM): Meta's original Segment Anything Model (SAM).

- Segment Anything Model 2 (SAM2): The next generation of Meta's Segment Anything Model (SAM) for videos and images.

- Mobile Segment Anything Model (MobileSAM): MobileSAM for mobile applications, by Kyung Hee University.

- Fast Segment Anything Model (FastSAM): FastSAM by Image & Video Analysis Group, Institute of Automation, Chinese Academy of Sciences.

- YOLO-NAS: YOLO Neural Architecture Search (NAS) Models.

- Realtime Detection Transformers (RT-DETR): Baidu's PaddlePaddle Realtime Detection Transformer (RT-DETR) models.

- YOLO-World: Real-time Open Vocabulary Object Detection models from Tencent AI Lab.


Getting Started: Usage Examples

This example provides simple YOLO training and inference examples. For full documentation on these and other modes see the Predict, Train, Val and Export docs pages.

Note that the example below is for YOLOv8 Detect models for object detection. For additional supported tasks, see the Segment, Classify and Pose docs.

!!! Example

=== "Python"

PyTorch pretrained `*.pt` models as well as configuration `*.yaml` files can be passed to the `YOLO()`, `SAM()`, `NAS()` and `RTDETR()` classes to create a model instance in Python:

```py
from ultralytics import YOLO

# Load a COCO-pretrained YOLOv8n model
model = YOLO("yolov8n.pt")

# Display model information (optional)
model.info()

# Train the model on the COCO8 example dataset for 100 epochs
results = model.train(data="coco8.yaml", epochs=100, imgsz=640)

# Run inference with the YOLOv8n model on the 'bus.jpg' image
results = model("path/to/bus.jpg")
```

=== "CLI"

CLI commands are available to directly run the models:

```bash
# Load a COCO-pretrained YOLOv8n model and train it on the COCO8 example dataset for 100 epochs
yolo train model=yolov8n.pt data=coco8.yaml epochs=100 imgsz=640

# Load a COCO-pretrained YOLOv8n model and run inference on the 'bus.jpg' image
yolo predict model=yolov8n.pt source=path/to/bus.jpg
```

Contributing New Models

Interested in contributing your model to Ultralytics? Great! We're always open to expanding our model portfolio.

- Fork the Repository: Start by forking the Ultralytics GitHub repository.

- Clone Your Fork: Clone your fork to your local machine and create a new branch to work on.

- Implement Your Model: Add your model following the coding standards and guidelines provided in our Contributing Guide.

- Test Thoroughly: Make sure to test your model rigorously, both in isolation and as part of the pipeline.

- Create a Pull Request: Once you're satisfied with your model, create a pull request to the main repository for review.

- Code Review & Merging: After review, if your model meets our criteria, it will be merged into the main repository.

For detailed steps, consult our Contributing Guide.

FAQ

What are the key advantages of using Ultralytics YOLOv8 for object detection?

Ultralytics YOLOv8 offers enhanced capabilities such as real-time object detection, instance segmentation, pose estimation, and classification. Its optimized architecture ensures high-speed performance without sacrificing accuracy, making it ideal for a variety of applications. YOLOv8 also includes built-in compatibility with popular datasets and models, as detailed on the YOLOv8 documentation page.

How can I train a YOLOv8 model on custom data?

Training a YOLOv8 model on custom data can be easily accomplished using Ultralytics' libraries. Here's a quick example:

!!! Example

=== "Python"

```py
from ultralytics import YOLO

# Load a YOLOv8n model
model = YOLO("yolov8n.pt")

# Train the model on custom dataset
results = model.train(data="custom_data.yaml", epochs=100, imgsz=640)
```

=== "CLI"

```bash
yolo train model=yolov8n.pt data='custom_data.yaml' epochs=100 imgsz=640
```

For more detailed instructions, visit the Train documentation page.

Which YOLO versions are supported by Ultralytics?

Ultralytics supports a comprehensive range of YOLO (You Only Look Once) versions from YOLOv3 to YOLOv10, along with models like NAS, SAM, and RT-DETR. Each version is optimized for various tasks such as detection, segmentation, and classification. For detailed information on each model, refer to the Models Supported by Ultralytics documentation.

Why should I use Ultralytics HUB for machine learning projects?

Ultralytics HUB provides a no-code, end-to-end platform for training, deploying, and managing YOLO models. It simplifies complex workflows, enabling users to focus on model performance and application. The HUB also offers cloud training capabilities, comprehensive dataset management, and user-friendly interfaces. Learn more about it on the Ultralytics HUB documentation page.

What types of tasks can YOLOv8 perform, and how does it compare to other YOLO versions?

YOLOv8 is a versatile model capable of performing tasks including object detection, instance segmentation, classification, and pose estimation. Compared to earlier versions like YOLOv3 and YOLOv4, YOLOv8 offers significant improvements in speed and accuracy due to its optimized architecture. For a deeper comparison, refer to the YOLOv8 documentation and the Task pages for more details on specific tasks.


description: Discover MobileSAM, a lightweight and fast image segmentation model for mobile applications. Compare its performance with the original SAM and explore its various modes.

keywords: MobileSAM, image segmentation, lightweight model, fast segmentation, mobile applications, SAM, ViT encoder, Tiny-ViT, Ultralytics

Mobile Segment Anything (MobileSAM)

The MobileSAM paper is now available on arXiv.

A demonstration of MobileSAM running on a CPU can be accessed at this demo link. On a Mac i5 CPU, inference takes approximately 3 seconds. On the Hugging Face demo, the interface and lower-performance CPUs lead to slower responses, but the demo still works effectively.

MobileSAM is implemented in various projects including Grounding-SAM, AnyLabeling, and Segment Anything in 3D.

MobileSAM was trained on a single GPU with a 100k-image dataset (1% of the original images) in less than a day. The code for this training will be made available in the future.

Available Models, Supported Tasks, and Operating Modes

This table presents the available models with their specific pre-trained weights, the tasks they support, and their compatibility with different operating modes like Inference, Validation, Training, and Export, indicated by ✅ emojis for supported modes and ❌ emojis for unsupported modes.

| Model Type | Pre-trained Weights | Tasks Supported | Inference | Validation | Training | Export |
|---|---|---|---|---|---|---|
| MobileSAM | mobile_sam.pt | Instance Segmentation | ✅ | ❌ | ❌ | ❌ |

Adapting from SAM to MobileSAM

Since MobileSAM retains the same pipeline as the original SAM, we have incorporated the original's pre-processing, post-processing, and all other interfaces. Consequently, those currently using the original SAM can transition to MobileSAM with minimal effort.

MobileSAM performs comparably to the original SAM and retains the same pipeline except for a change in the image encoder. Specifically, we replace the original heavyweight ViT-H encoder (632M parameters) with a smaller Tiny-ViT (5M parameters). On a single GPU, MobileSAM operates at about 12ms per image: 8ms on the image encoder and 4ms on the mask decoder.

The following table provides a comparison of ViT-based image encoders:

| Image Encoder | Original SAM | MobileSAM |
|---|---|---|
| Parameters | 611M | 5M |
| Speed | 452ms | 8ms |

Both the original SAM and MobileSAM utilize the same prompt-guided mask decoder:

| Mask Decoder | Original SAM | MobileSAM |
|---|---|---|
| Parameters | 3.876M | 3.876M |
| Speed | 4ms | 4ms |

Here is the comparison of the whole pipeline:

| Whole Pipeline (Enc+Dec) | Original SAM | MobileSAM |
|---|---|---|
| Parameters | 615M | 9.66M |
| Speed | 456ms | 12ms |
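The tables above can be turned into direct ratios. This short calculation uses only the listed figures (parameters in millions, latency in milliseconds per image) and shows that the whole MobileSAM pipeline is about 38 times faster and has roughly 64 times fewer parameters than the original SAM, while the unchanged 4ms mask decoder now accounts for about a third of MobileSAM's runtime. `ratios` is a hypothetical helper.

```py
# Figures copied from the comparison tables above
# (parameters in millions, latency in milliseconds per image)
original_sam = {"encoder_params": 611, "pipeline_params": 615, "encoder_ms": 452, "pipeline_ms": 456}
mobile_sam = {"encoder_params": 5, "pipeline_params": 9.66, "encoder_ms": 8, "pipeline_ms": 12}


def ratios(baseline: dict, candidate: dict) -> dict:
    """How many times larger/slower the baseline is for each shared metric."""
    return {key: baseline[key] / candidate[key] for key in baseline}


for metric, ratio in ratios(original_sam, mobile_sam).items():
    print(f"{metric}: {ratio:.1f}x")

# Share of MobileSAM's runtime spent in the (unchanged) 4 ms mask decoder
decoder_share = 4 / mobile_sam["pipeline_ms"]
print(f"decoder share: {decoder_share:.0%}")
```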

The performance of MobileSAM and the original SAM is demonstrated using both a point and a box as prompts.

MobileSAM is approximately 5 times smaller and 7 times faster than the concurrent FastSAM, while delivering superior performance. More details are available at the MobileSAM project page.

Testing MobileSAM in Ultralytics

Just like the original SAM, we offer a straightforward testing method in Ultralytics, including modes for both Point and Box prompts.

Model Download

You can download the model here.

Point Prompt

!!! Example

=== "Python"

```py
from ultralytics import SAM

# Load the model
model = SAM("mobile_sam.pt")

# Predict a segment based on a point prompt
model.predict("ultralytics/assets/zidane.jpg", points=[900, 370], labels=[1])
```

Box Prompt

!!! Example

=== "Python"

```py
from ultralytics import SAM

# Load the model
model = SAM("mobile_sam.pt")

# Predict a segment based on a box prompt
model.predict("ultralytics/assets/zidane.jpg", bboxes=[439, 437, 524, 709])
```

We have implemented MobileSAM and SAM using the same API. For more usage information, please see the SAM page.

Citations and Acknowledgements

If you find MobileSAM useful in your research or development work, please consider citing our paper:

!!! Quote ""

=== "BibTeX"

```bibtex
@article{mobile_sam,
  title={Faster Segment Anything: Towards Lightweight SAM for Mobile Applications},
  author={Zhang, Chaoning and Han, Dongshen and Qiao, Yu and Kim, Jung Uk and Bae, Sung Ho and Lee, Seungkyu and Hong, Choong Seon},
  journal={arXiv preprint arXiv:2306.14289},
  year={2023}
}
```

FAQ

What is MobileSAM and how does it differ from the original SAM model?

MobileSAM is a lightweight, fast image segmentation model designed for mobile applications. It retains the same pipeline as the original SAM but replaces the heavyweight ViT-H encoder (632M parameters) with a smaller Tiny-ViT encoder (5M parameters). As a result, the whole MobileSAM pipeline has about 9.66M parameters versus 615M for the original SAM, and runs at about 12ms per image compared to the original SAM's 456ms. The often-quoted figures of approximately 5 times smaller and 7 times faster compare MobileSAM with FastSAM, not with the original SAM. MobileSAM is already used in various projects, including Grounding-SAM, AnyLabeling, and Segment Anything in 3D.

How can I test MobileSAM using Ultralytics?

Testing MobileSAM in Ultralytics can be accomplished through straightforward methods. You can use Point and Box prompts to predict segments. Here's an example using a Point prompt:

```py
from ultralytics import SAM

# Load the model
model = SAM("mobile_sam.pt")

# Predict a segment based on a point prompt
model.predict("ultralytics/assets/zidane.jpg", points=[900, 370], labels=[1])
```

You can also refer to the Testing MobileSAM section for more details.

Why should I use MobileSAM for my mobile application?

MobileSAM is well suited to mobile applications because of its lightweight architecture and fast inference speed. Compared to the original SAM, the whole MobileSAM pipeline has more than 60 times fewer parameters (9.66M vs. 615M) and runs about 38 times faster (12ms vs. 456ms per image on a single GPU), making it suitable for environments where computational resources are limited. This efficiency makes low-latency image segmentation practical on resource-constrained devices. Note that within Ultralytics, MobileSAM currently supports Inference mode only.

How was MobileSAM trained, and is the training code available?

MobileSAM was trained on a single GPU with a 100k-image dataset (1% of the original images) in less than a day. The training code has not yet been released but will be made available in the future; in the meantime, you can explore other aspects of MobileSAM in the MobileSAM GitHub repository, which includes pre-trained weights and implementation details for various applications.

What are the primary use cases for MobileSAM?

MobileSAM is designed for fast and efficient image segmentation in mobile environments. Primary use cases include:

- Real-time object detection and segmentation for mobile applications.

- Low-latency image processing in devices with limited computational resources.

- Integration in AI-driven mobile apps for tasks such as augmented reality (AR) and real-time analytics.

For more detailed use cases and performance comparisons, see the section on Adapting from SAM to MobileSAM.


description: Explore Baidu's RT-DETR, a Vision Transformer-based real-time object detector offering high accuracy and adaptable inference speed. Learn more with Ultralytics.

keywords: RT-DETR, Baidu, Vision Transformer, real-time object detection, PaddlePaddle, Ultralytics, pre-trained models, AI, machine learning, computer vision

Baidu's RT-DETR: A Vision Transformer-Based Real-Time Object Detector

Overview

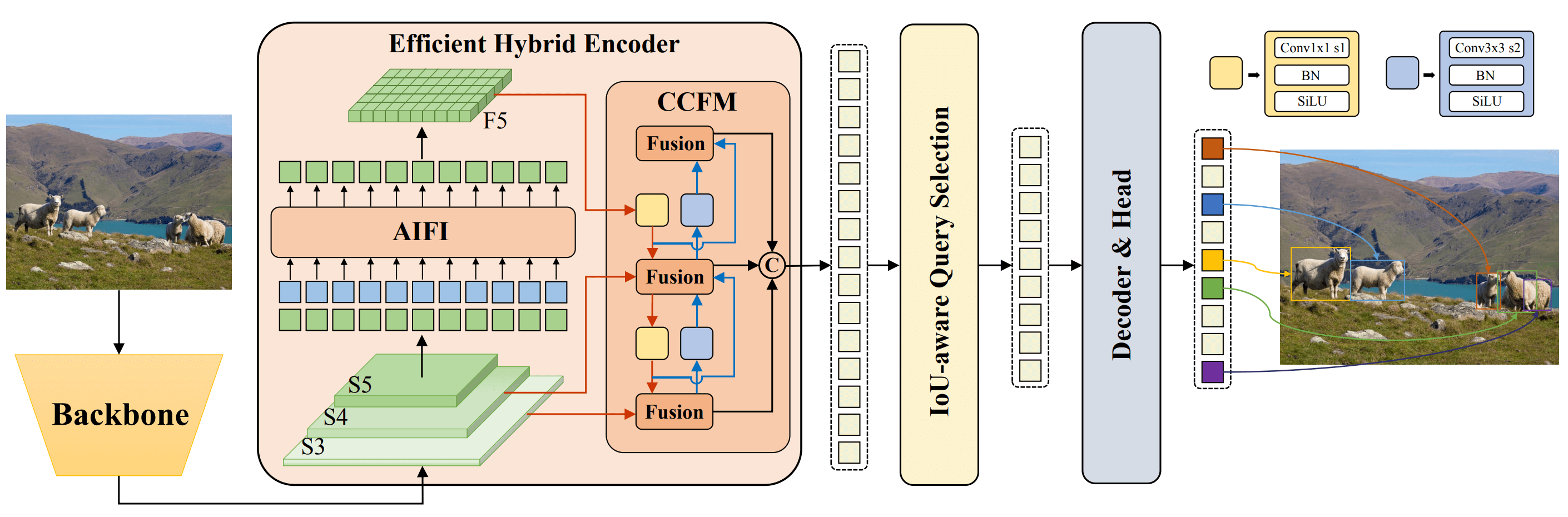

Real-Time Detection Transformer (RT-DETR), developed by Baidu, is a cutting-edge end-to-end object detector that provides real-time performance while maintaining high accuracy. It builds on DETR (the NMS-free framework) while introducing a conv-based backbone and an efficient hybrid encoder to achieve real-time speed. RT-DETR efficiently processes multiscale features by decoupling intra-scale interaction and cross-scale fusion. The model is highly adaptable, supporting flexible adjustment of inference speed by using different numbers of decoder layers without retraining. RT-DETR excels on accelerated backends like CUDA with TensorRT, outperforming many other real-time object detectors.


Overview of Baidu's RT-DETR. The RT-DETR model architecture diagram shows the last three stages of the backbone {S3, S4, S5} as the input to the encoder. The efficient hybrid encoder transforms multiscale features into a sequence of image features through intrascale feature interaction (AIFI) and cross-scale feature-fusion module (CCFM). The IoU-aware query selection is employed to select a fixed number of image features to serve as initial object queries for the decoder. Finally, the decoder with auxiliary prediction heads iteratively optimizes object queries to generate boxes and confidence scores (source).
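The query-selection step described above amounts to a top-k selection over the encoder's output features. The NumPy sketch below assumes a per-feature score has already been predicted (in RT-DETR these scores are trained to be IoU-aware, which this sketch does not model) and simply keeps the k best-scoring features as initial object queries. `select_initial_queries` is a hypothetical name, and the toy features are not real encoder outputs.

```py
import numpy as np


def select_initial_queries(features: np.ndarray, scores: np.ndarray, k: int) -> np.ndarray:
    """Keep the k highest-scoring encoder features as initial decoder queries.

    features: (L, D) flattened multiscale encoder features.
    scores:   (L,) per-feature scores (IoU-aware in RT-DETR; given as input here).
    """
    top_idx = np.argsort(-scores)[:k]  # indices of the k largest scores
    return features[top_idx]


features = np.arange(12).reshape(6, 2)  # 6 toy features of dimension 2
scores = np.array([0.1, 0.9, 0.3, 0.8, 0.2, 0.7])
print(select_initial_queries(features, scores, k=3))
```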


Key Features

- Efficient Hybrid Encoder: Baidu's RT-DETR uses an efficient hybrid encoder that processes multiscale features by decoupling intra-scale interaction and cross-scale fusion. This unique Vision Transformers-based design reduces computational costs and allows for real-time object detection.

- IoU-aware Query Selection: Baidu's RT-DETR improves object query initialization by utilizing IoU-aware query selection. This allows the model to focus on the most relevant objects in the scene, enhancing the detection accuracy.

- Adaptable Inference Speed: Baidu's RT-DETR supports flexible adjustment of inference speed by using different numbers of decoder layers without the need for retraining. This adaptability facilitates practical application in various real-time object detection scenarios.
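One way to see why decoder layers can be dropped without retraining: assuming, as the architecture description suggests, that every decoder layer has an auxiliary prediction head, the output after any layer is already a usable prediction. The toy sketch below illustrates the resulting speed/accuracy trade-off. Each hypothetical "layer" moves a box a fixed fraction of the way toward a target, so running fewer layers is cheaper but leaves more residual error. The refinement rule is invented purely for illustration and is not RT-DETR's decoder.

```py
import numpy as np

# Hypothetical ground-truth box (x1, y1, x2, y2) that the toy decoder refines toward
TARGET = np.array([10.0, 20.0, 50.0, 80.0])


def make_layer(step: float = 0.5):
    """Toy decoder layer: move the box `step` of the way toward TARGET."""
    return lambda box: box + step * (TARGET - box)


def run_decoder(box: np.ndarray, layers: list, num_layers: int) -> np.ndarray:
    """Apply only the first `num_layers` layers; each intermediate output is a valid prediction."""
    for layer in layers[:num_layers]:
        box = layer(box)
    return box


layers = [make_layer() for _ in range(6)]
initial_box = np.zeros(4)
for n in (3, 6):
    error = np.abs(run_decoder(initial_box, layers, n) - TARGET).max()
    print(f"{n} layers -> max coordinate error {error:.2f}")
```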

Pre-trained Models

The Ultralytics Python API provides pre-trained PaddlePaddle RT-DETR models with different scales:

- RT-DETR-L: 53.0% AP on COCO val2017, 114 FPS on T4 GPU

- RT-DETR-X: 54.8% AP on COCO val2017, 74 FPS on T4 GPU
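To relate the FPS figures above to per-image latency, invert them. This assumes each FPS value corresponds to processing one image at a time; the page does not state batch size or precision, so treat the results as rough estimates. `fps_to_ms` is a hypothetical helper.

```py
def fps_to_ms(fps: float) -> float:
    """Average per-image latency in milliseconds for a given throughput in FPS."""
    return 1000.0 / fps


for name, fps in {"RT-DETR-L": 114, "RT-DETR-X": 74}.items():
    print(f"{name}: {fps_to_ms(fps):.1f} ms per image")
```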

Usage Examples

This example provides simple RT-DETR training and inference examples. For full documentation on these and other modes see the Predict, Train, Val and Export docs pages.

!!! Example

=== "Python"

```py
from ultralytics import RTDETR

# Load a COCO-pretrained RT-DETR-l model
model = RTDETR("rtdetr-l.pt")

# Display model information (optional)
model.info()

# Train the model on the COCO8 example dataset for 100 epochs
results = model.train(data="coco8.yaml", epochs=100, imgsz=640)

# Run inference with the RT-DETR-l model on the 'bus.jpg' image
results = model("path/to/bus.jpg")
```

=== "CLI"

```bash
# Load a COCO-pretrained RT-DETR-l model and train it on the COCO8 example dataset for 100 epochs
yolo train model=rtdetr-l.pt data=coco8.yaml epochs=100 imgsz=640

# Load a COCO-pretrained RT-DETR-l model and run inference on the 'bus.jpg' image
yolo predict model=rtdetr-l.pt source=path/to/bus.jpg
```

Supported Tasks and Modes

This table presents the model types, the specific pre-trained weights, the tasks supported by each model, and the various modes (Train, Val, Predict, Export) that are supported, indicated by ✅ emojis.

| Model Type | Pre-trained Weights | Tasks Supported | Inference | Validation | Training | Export |
|---|---|---|---|---|---|---|
| RT-DETR Large | rtdetr-l.pt | Object Detection | ✅ | ✅ | ✅ | ✅ |
| RT-DETR Extra-Large | rtdetr-x.pt | Object Detection | ✅ | ✅ | ✅ | ✅ |

Citations and Acknowledgements

If you use Baidu's RT-DETR in your research or development work, please cite the original paper:

!!! Quote ""

=== "BibTeX"

```bibtex
@misc{lv2023detrs,
      title={DETRs Beat YOLOs on Real-time Object Detection},
      author={Wenyu Lv and Shangliang Xu and Yian Zhao and Guanzhong Wang and Jinman Wei and Cheng Cui and Yuning Du and Qingqing Dang and Yi Liu},
      year={2023},
      eprint={2304.08069},
      archivePrefix={arXiv},
      primaryClass={cs.CV}
}
```

We would like to acknowledge Baidu and the PaddlePaddle team for creating and maintaining this valuable resource for the computer vision community. Their contribution to the field with the development of the Vision Transformers-based real-time object detector, RT-DETR, is greatly appreciated.

FAQ

What is Baidu's RT-DETR model and how does it work?

Baidu's RT-DETR (Real-Time Detection Transformer) is an advanced real-time object detector built upon the Vision Transformer architecture. It efficiently processes multiscale features by decoupling intra-scale interaction and cross-scale fusion through its efficient hybrid encoder. By employing IoU-aware query selection, the model focuses on the most relevant objects, enhancing detection accuracy. Its adaptable inference speed, achieved by adjusting decoder layers without retraining, makes RT-DETR suitable for various real-time object detection scenarios. Learn more about RT-DETR features here.
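The query-selection step can be illustrated with a small, dependency-free sketch. It assumes each encoder feature already has a confidence score and a candidate box. In RT-DETR those scores come from a head trained with an IoU-aware objective, so that high scores correlate with well-localized boxes. This sketch does not reproduce that training. It shows only the IoU measure and the top-K selection. `box_iou`, `select_queries`, and the value of `k` are illustrative names, not part of the Ultralytics API.

```py
import heapq
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def box_iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes in xyxy format."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def select_queries(scores: List[float], boxes: List[Box], k: int) -> List[Tuple[float, Box]]:
    """Keep the k highest-scoring encoder features as initial decoder queries."""
    top = heapq.nlargest(k, range(len(scores)), key=lambda i: scores[i])
    return [(scores[i], boxes[i]) for i in top]


# During training, an IoU-aware objective pushes a matched feature's score toward
# box_iou(predicted_box, ground_truth_box), so ranking by score favors well-localized boxes.
scores = [0.1, 0.9, 0.4, 0.75]
boxes = [(0, 0, 10, 10), (5, 5, 50, 50), (20, 20, 30, 30), (6, 6, 48, 52)]
print(select_queries(scores, boxes, k=2))
# [(0.9, (5, 5, 50, 50)), (0.75, (6, 6, 48, 52))]
```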

How can I use the pre-trained RT-DETR models provided by Ultralytics?

You can use the Ultralytics Python API to load pre-trained PaddlePaddle RT-DETR models. For instance, the following example loads an RT-DETR-l model pre-trained on COCO (53.0% AP on COCO val2017, 114 FPS on a T4 GPU), trains it, and runs inference:

!!! Example

=== "Python"

```py
from ultralytics import RTDETR

# Load a COCO-pretrained RT-DETR-l model
model = RTDETR("rtdetr-l.pt")

# Display model information (optional)
model.info()

# Train the model on the COCO8 example dataset for 100 epochs
results = model.train(data="coco8.yaml", epochs=100, imgsz=640)

# Run inference with the RT-DETR-l model on the 'bus.jpg' image
results = model("path/to/bus.jpg")
```

=== "CLI"

```bash
# Load a COCO-pretrained RT-DETR-l model and train it on the COCO8 example dataset for 100 epochs
yolo train model=rtdetr-l.pt data=coco8.yaml epochs=100 imgsz=640

# Load a COCO-pretrained RT-DETR-l model and run inference on the 'bus.jpg' image
yolo predict model=rtdetr-l.pt source=path/to/bus.jpg
```

Why should I choose Baidu's RT-DETR over other real-time object detectors?

Baidu's RT-DETR stands out due to its efficient hybrid encoder and IoU-aware query selection, which drastically reduce computational costs while maintaining high accuracy. Its unique ability to adjust inference speed by using different decoder layers without retraining adds significant flexibility. This makes it particularly advantageous for applications requiring real-time performance on accelerated backends like CUDA with TensorRT, outclassing many other real-time object detectors.

How does RT-DETR support adaptable inference speed for different real-time applications?

Baidu's RT-DETR lets you adjust inference speed by using different numbers of decoder layers, without retraining. This adaptability is crucial for scaling performance across various real-time object detection tasks. Whether you need faster processing at some cost in accuracy or slower, more accurate detections, RT-DETR can be tailored to your specific requirements.
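Every decoder layer in RT-DETR produces a usable set of predictions, so speed can be traded for accuracy by stopping after fewer layers. The toy sketch below shows only that control flow. `layers` is a hypothetical list of refinement functions standing in for decoder layers, and `num_layers` is an illustrative parameter, not an Ultralytics argument.

```py
from typing import Callable, List, Sequence

Boxes = List[List[float]]
Layer = Callable[[Boxes], Boxes]


def run_decoder(queries: Boxes, layers: Sequence[Layer], num_layers: int) -> Boxes:
    """Apply only the first `num_layers` decoder layers; the weights stay unchanged.

    Each layer refines the previous layer's boxes, so any prefix of the stack
    yields a valid, if coarser, prediction.
    """
    if not 1 <= num_layers <= len(layers):
        raise ValueError(f"num_layers must be in [1, {len(layers)}]")
    boxes = queries
    for layer in layers[:num_layers]:
        boxes = layer(boxes)
    return boxes


# Toy layer: moves every coordinate halfway toward a fixed target box.
TARGET = [10.0, 10.0, 50.0, 50.0]


def refine(boxes: Boxes) -> Boxes:
    return [[c + 0.5 * (t - c) for c, t in zip(box, TARGET)] for box in boxes]


layers = [refine] * 6
start = [[0.0, 0.0, 0.0, 0.0]]
fast = run_decoder(start, layers, num_layers=3)  # cheaper, coarser
full = run_decoder(start, layers, num_layers=6)  # slower, closer to TARGET
print(fast)  # [[8.75, 8.75, 43.75, 43.75]]
```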

Can I use RT-DETR models with other Ultralytics modes, such as training, validation, and export?

Yes, RT-DETR models are compatible with various Ultralytics modes including training, validation, prediction, and export. You can refer to the respective documentation for detailed instructions on how to utilize these modes: Train, Val, Predict, and Export. This ensures a comprehensive workflow for developing and deploying your object detection solutions.

comments: true

description: Explore the revolutionary Segment Anything Model (SAM) for promptable image segmentation with zero-shot performance. Discover key features, datasets, and usage tips.

keywords: Segment Anything, SAM, image segmentation, promptable segmentation, zero-shot performance, SA-1B dataset, advanced architecture, auto-annotation, Ultralytics, pre-trained models, instance segmentation, computer vision, AI, machine learning

Segment Anything Model (SAM)

Welcome to the frontier of image segmentation with the Segment Anything Model, or SAM. This revolutionary model has changed the game by introducing promptable image segmentation with real-time performance, setting new standards in the field.

Introduction to SAM: The Segment Anything Model

The Segment Anything Model, or SAM, is a cutting-edge image segmentation model that allows for promptable segmentation, providing unparalleled versatility in image analysis tasks. SAM forms the heart of the Segment Anything initiative, a groundbreaking project that introduces a novel model, task, and dataset for image segmentation.

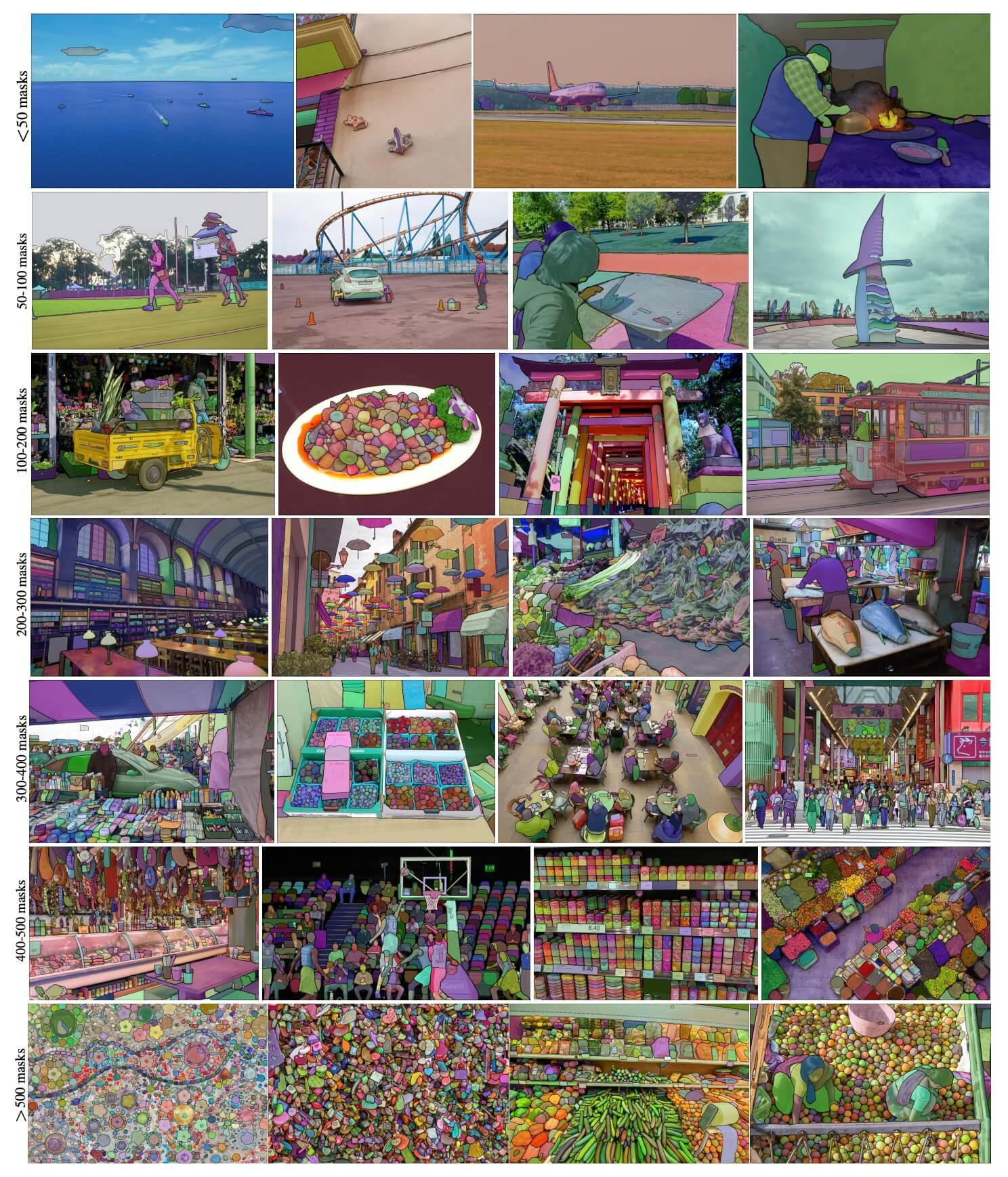

SAM's advanced design allows it to adapt to new image distributions and tasks without prior knowledge, a feature known as zero-shot transfer. Trained on the expansive SA-1B dataset, which contains more than 1 billion masks spread over 11 million carefully curated images, SAM has displayed impressive zero-shot performance, surpassing previous fully supervised results in many cases.

SA-1B example images. Dataset images with overlaid masks from the newly introduced SA-1B dataset. SA-1B contains 11M diverse, high-resolution, licensed, and privacy-protecting images and 1.1B high-quality segmentation masks. These masks were annotated fully automatically by SAM and, as verified by human ratings and numerous experiments, are of high quality and diversity. Images are grouped by number of masks per image for visualization (there are ∼100 masks per image on average).


Key Features of the Segment Anything Model (SAM)

- Promptable Segmentation Task: SAM was designed with a promptable segmentation task in mind, allowing it to generate valid segmentation masks from any given prompt, such as spatial or text cues identifying an object.

- Advanced Architecture: The Segment Anything Model employs a powerful image encoder, a prompt encoder, and a lightweight mask decoder. This unique architecture enables flexible prompting, real-time mask computation, and ambiguity awareness in segmentation tasks.

- The SA-1B Dataset: Introduced by the Segment Anything project, the SA-1B dataset features over 1 billion masks on 11 million images. As the largest segmentation dataset to date, it provides SAM with a diverse and large-scale training data source.

- Zero-Shot Performance: SAM displays outstanding zero-shot performance across various segmentation tasks, making it a ready-to-use tool for diverse applications with minimal need for prompt engineering.

For an in-depth look at the Segment Anything Model and the SA-1B dataset, please visit the Segment Anything website and check out the research paper Segment Anything.

Available Models, Supported Tasks, and Operating Modes

This table presents the available models with their specific pre-trained weights, the tasks they support, and their compatibility with different operating modes like Inference, Validation, Training, and Export, indicated by ✅ emojis for supported modes and ❌ emojis for unsupported modes.

| Model Type | Pre-trained Weights | Tasks Supported | Inference | Validation | Training | Export |
|---|---|---|---|---|---|---|
| SAM base | sam_b.pt | Instance Segmentation | ✅ | ❌ | ❌ | ❌ |
| SAM large | sam_l.pt | Instance Segmentation | ✅ | ❌ | ❌ | ❌ |

How to Use SAM: Versatility and Power in Image Segmentation

The Segment Anything Model can be employed for a multitude of downstream tasks that go beyond its training data. This includes edge detection, object proposal generation, instance segmentation, and preliminary text-to-mask prediction. With prompt engineering, SAM can swiftly adapt to new tasks and data distributions in a zero-shot manner, establishing it as a versatile and potent tool for all your image segmentation needs.

SAM prediction example

!!! Example "Segment with prompts"

Segment image with given prompts.

=== "Python"

```py
from ultralytics import SAM

# Load a model
model = SAM("sam_b.pt")

# Display model information (optional)
model.info()

# Run inference with bboxes prompt
results = model("ultralytics/assets/zidane.jpg", bboxes=[439, 437, 524, 709])

# Run inference with points prompt
results = model("ultralytics/assets/zidane.jpg", points=[900, 370], labels=[1])
```

!!! Example "Segment everything"

Segment the whole image.

=== "Python"

```py
from ultralytics import SAM

# Load a model
model = SAM("sam_b.pt")

# Display model information (optional)
model.info()

# Run inference
model("path/to/image.jpg")
```

=== "CLI"

```bash
# Run inference with a SAM model
yolo predict model=sam_b.pt source=path/to/image.jpg
```

- The logic here is to segment the whole image if you don't pass any prompts (bboxes/points/masks).

!!! Example "SAMPredictor example"

This way, you can set the image once and run prompt inference multiple times without re-running the image encoder each time.

=== "Prompt inference"

```py
import cv2

from ultralytics.models.sam import Predictor as SAMPredictor

# Create SAMPredictor
overrides = dict(conf=0.25, task="segment", mode="predict", imgsz=1024, model="mobile_sam.pt")
predictor = SAMPredictor(overrides=overrides)

# Set image
predictor.set_image("ultralytics/assets/zidane.jpg")  # set with image file
predictor.set_image(cv2.imread("ultralytics/assets/zidane.jpg"))  # set with np.ndarray
results = predictor(bboxes=[439, 437, 524, 709])
results = predictor(points=[900, 370], labels=[1])

# Reset image
predictor.reset_image()
```

Segment everything with additional args.

=== "Segment everything"

```py
from ultralytics.models.sam import Predictor as SAMPredictor

# Create SAMPredictor
overrides = dict(conf=0.25, task="segment", mode="predict", imgsz=1024, model="mobile_sam.pt")
predictor = SAMPredictor(overrides=overrides)

# Segment with additional args
results = predictor(source="ultralytics/assets/zidane.jpg", crop_n_layers=1, points_stride=64)
```
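The benefit of `set_image` comes from caching. The heavy image encoder runs once per image, and each prompt runs only the lightweight prompt encoder and mask decoder. The sketch below illustrates that pattern with stand-in functions. `CachedPromptSegmenter`, `encode_image`, and `decode` are hypothetical names and do not describe the internals of the Ultralytics `Predictor`.

```py
from typing import Any, Callable, Optional


class CachedPromptSegmenter:
    """Hypothetical illustration of the set-image-once, prompt-many-times pattern."""

    def __init__(self, encode_image: Callable[[Any], Any], decode: Callable[[Any, dict], Any]):
        self.encode_image = encode_image  # expensive: runs once per image
        self.decode = decode  # cheap: runs once per prompt
        self.features: Optional[Any] = None
        self.encoder_calls = 0

    def set_image(self, image: Any) -> None:
        self.features = self.encode_image(image)
        self.encoder_calls += 1

    def __call__(self, **prompt: Any) -> Any:
        if self.features is None:
            raise RuntimeError("call set_image() before prompting")
        return self.decode(self.features, prompt)

    def reset_image(self) -> None:
        self.features = None


# Stand-in encoder/decoder so the sketch runs without any model weights.
seg = CachedPromptSegmenter(
    encode_image=lambda img: f"features({img})",
    decode=lambda feats, prompt: (feats, sorted(prompt)),
)
seg.set_image("zidane.jpg")
r1 = seg(bboxes=[439, 437, 524, 709])
r2 = seg(points=[900, 370], labels=[1])
print(seg.encoder_calls)  # 1: two prompts, one encoder pass
```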

!!! Note

All the `results` returned in the examples above are [Results](../modes/predict.md#working-with-results) objects, which provide easy access to the predicted masks and the source image.

- For more arguments available in `Segment everything` mode, see the `Predictor/generate` Reference.
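In "segment everything" mode, SAM-style automatic mask generation prompts the model with a regular grid of points instead of user prompts. The sketch below mirrors the grid construction used by the original SAM automatic mask generator. It assumes that `points_stride` means the number of points sampled per side, so 32 would yield 1,024 point prompts. Treat it as an illustration, not the exact Ultralytics code. The helper names are hypothetical.

```py
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def build_point_grid(n_per_side: int) -> List[Point]:
    """Evenly spaced (x, y) points in normalized [0, 1] coordinates.

    Points sit at the centers of an n x n tiling, so none lies on the image border.
    """
    offset = 1.0 / (2 * n_per_side)
    step = (1.0 - 2 * offset) / (n_per_side - 1) if n_per_side > 1 else 0.0
    coords = [offset + i * step for i in range(n_per_side)]
    return [(x, y) for y in coords for x in coords]  # row by row, left to right


def to_pixels(points: Sequence[Point], width: int, height: int) -> List[Point]:
    """Scale normalized grid points to pixel coordinates for a given image size."""
    return [(x * width, y * height) for x, y in points]


grid = build_point_grid(4)
print(len(grid))  # 16
print(grid[:2])  # [(0.125, 0.125), (0.375, 0.125)]
```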

SAM comparison vs YOLOv8

Here we compare Meta's smallest SAM model, SAM-b, with Ultralytics' smallest segmentation model, YOLOv8n-seg, alongside MobileSAM and FastSAM-s:

| Model | Size | Parameters | Speed (CPU) |
|---|---|---|---|
| Meta's SAM-b | 358 MB | 94.7 M | 51096 ms/im |
| MobileSAM | 40.7 MB | 10.1 M | 46122 ms/im |
| FastSAM-s with YOLOv8 backbone | 23.7 MB | 11.8 M | 115 ms/im |
| Ultralytics YOLOv8n-seg | 6.7 MB (53.4x smaller) | 3.4 M (27.9x fewer) | 59 ms/im (866x faster) |

This comparison shows the order-of-magnitude differences in model size and speed between these models. While SAM offers unique capabilities for automatic segmentation, it is not a direct competitor to YOLOv8 segment models, which are smaller, faster, and more efficient.
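The multipliers in the YOLOv8n-seg row are plain ratios against SAM-b. This snippet recomputes them from the figures in the comparison table above so you can check the rounding.

```py
# Figures copied from the comparison table above (SAM-b vs YOLOv8n-seg).
sam_b = {"size_mb": 358, "params_m": 94.7, "cpu_ms": 51096}
yolov8n_seg = {"size_mb": 6.7, "params_m": 3.4, "cpu_ms": 59}

# Each ratio says how many times larger (or slower) SAM-b is on that axis.
ratios = {key: sam_b[key] / yolov8n_seg[key] for key in sam_b}
print({key: round(value, 1) for key, value in ratios.items()})
# {'size_mb': 53.4, 'params_m': 27.9, 'cpu_ms': 866.0}
```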

Tests were run on a 2023 Apple M2 MacBook with 16GB of RAM. To reproduce this test:

!!! Example

=== "Python"

```py
from ultralytics import SAM, YOLO, FastSAM

# Profile SAM-b
model = SAM("sam_b.pt")
model.info()
model("ultralytics/assets")

# Profile MobileSAM
model = SAM("mobile_sam.pt")
model.info()
model("ultralytics/assets")

# Profile FastSAM-s
model = FastSAM("FastSAM-s.pt")
model.info()
model("ultralytics/assets")

# Profile YOLOv8n-seg
model = YOLO("yolov8n-seg.pt")
model.info()
model("ultralytics/assets")
```

Auto-Annotation: A Quick Path to Segmentation Datasets

Auto-annotation is a key feature of SAM, allowing users to generate a segmentation dataset using a pre-trained detection model. This feature enables rapid and accurate annotation of a large number of images, bypassing the need for time-consuming manual labeling.

Generate Your Segmentation Dataset Using a Detection Model

To auto-annotate your dataset with the Ultralytics framework, use the auto_annotate function as shown below:

!!! Example

=== "Python"

```py
from ultralytics.data.annotator import auto_annotate

auto_annotate(data="path/to/images", det_model="yolov8x.pt", sam_model="sam_b.pt")
```

| Argument | Type | Description | Default |
|---|---|---|---|
| `data` | `str` | Path to a folder containing images to be annotated. | |
| `det_model` | `str`, optional | Pre-trained YOLO detection model. Defaults to 'yolov8x.pt'. | `'yolov8x.pt'` |
| `sam_model` | `str`, optional | Pre-trained SAM segmentation model. Defaults to 'sam_b.pt'. | `'sam_b.pt'` |
| `device` | `str`, optional | Device to run the models on. Defaults to an empty string (CPU or GPU, if available). | `''` |
| `output_dir` | `str`, `None`, optional | Directory to save the annotated results. Defaults to a 'labels' folder in the same directory as 'data'. | `None` |

The auto_annotate function takes the path to your images, with optional arguments for specifying the pre-trained detection and SAM segmentation models, the device to run the models on, and the output directory for saving the annotated results.
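Conceptually, auto-annotation chains the two models. The detector proposes boxes and class IDs, SAM turns each box prompt into a mask, and each mask outline is written as a polygon label. The sketch below covers only that last formatting step. It assumes the YOLO segmentation label format: one object per line, written as `class x1 y1 x2 y2 ...`, with coordinates normalized to [0, 1]. `format_seg_label` and `write_labels` are hypothetical helpers, not functions from `ultralytics.data.annotator`.

```py
from pathlib import Path
from typing import Iterable, List, Sequence, Tuple

Point = Tuple[float, float]


def format_seg_label(class_id: int, polygon: Sequence[Point]) -> str:
    """One YOLO-style segmentation label line: class id followed by normalized x y pairs."""
    if len(polygon) < 3:
        raise ValueError("a polygon needs at least 3 points")
    for x, y in polygon:
        if not (0.0 <= x <= 1.0 and 0.0 <= y <= 1.0):
            raise ValueError(f"point ({x}, {y}) is not normalized to [0, 1]")
    coords = " ".join(f"{v:.6g}" for point in polygon for v in point)
    return f"{class_id} {coords}"


def write_labels(label_path: Path, annotations: Iterable[Tuple[int, Sequence[Point]]]) -> None:
    """Write one line per (class_id, polygon) pair, forming the label file for one image."""
    lines: List[str] = [format_seg_label(cls, poly) for cls, poly in annotations]
    label_path.parent.mkdir(parents=True, exist_ok=True)
    label_path.write_text("\n".join(lines) + "\n")


line = format_seg_label(0, [(0.1, 0.2), (0.5, 0.2), (0.5, 0.8)])
print(line)  # 0 0.1 0.2 0.5 0.2 0.5 0.8
```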

Auto-annotation with pre-trained models can dramatically cut down the time and effort required for creating high-quality segmentation datasets. This feature is especially beneficial for researchers and developers dealing with large image collections, as it allows them to focus on model development and evaluation rather than manual annotation.

Citations and Acknowledgements

If you find SAM useful in your research or development work, please consider citing the original paper:

!!! Quote ""

=== "BibTeX"

```bibtex
@misc{kirillov2023segment,
  title={Segment Anything},
  author={Alexander Kirillov and Eric Mintun and Nikhila Ravi and Hanzi Mao and Chloe Rolland and Laura Gustafson and Tete Xiao and Spencer Whitehead and Alexander C. Berg and Wan-Yen Lo and Piotr Dollár and Ross Girshick},
  year={2023},
  eprint={2304.02643},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}
```

We would like to express our gratitude to Meta AI for creating and maintaining this valuable resource for the computer vision community.

FAQ

What is the Segment Anything Model (SAM)?

The Segment Anything Model (SAM), developed by Meta AI and available through Ultralytics, is a revolutionary image segmentation model designed for promptable segmentation tasks. It combines an image encoder and a prompt encoder with a lightweight mask decoder to generate high-quality segmentation masks from prompts such as spatial or text cues. Trained on the expansive SA-1B dataset, SAM delivers strong zero-shot performance, adapting to new image distributions and tasks without prior knowledge. Learn more here.

How can I use the Segment Anything Model (SAM) for image segmentation?

You can use the Segment Anything Model (SAM) for image segmentation by running inference with various prompts such as bounding boxes or points. Here's an example using Python:

```py
from ultralytics import SAM

# Load a model
model = SAM("sam_b.pt")

# Segment with bounding box prompt
model("ultralytics/assets/zidane.jpg", bboxes=[439, 437, 524, 709])

# Segment with points prompt
model("ultralytics/assets/zidane.jpg", points=[900, 370], labels=[1])
```

Alternatively, you can run inference with SAM in the command line interface (CLI):

```bash
yolo predict model=sam_b.pt source=path/to/image.jpg
```

For more detailed usage instructions, visit the Segmentation section.

How do SAM and YOLOv8 compare in terms of performance?

Compared to YOLOv8, segment-anything models such as SAM-b and FastSAM-s are larger and slower but offer unique capabilities for automatic segmentation. For instance, Ultralytics YOLOv8n-seg is 53.4 times smaller and 866 times faster than SAM-b. However, SAM's zero-shot performance makes it highly flexible across diverse tasks it was not explicitly trained for. Learn more about performance comparisons between SAM and YOLOv8 here.

How can I auto-annotate my dataset using SAM?

Ultralytics provides an auto-annotation feature that uses SAM together with a pre-trained detection model to generate segmentation datasets. Here's an example in Python:

```py
from ultralytics.data.annotator import auto_annotate

auto_annotate(data="path/to/images", det_model="yolov8x.pt", sam_model="sam_b.pt")
```

This function takes the path to your images and optional arguments for pre-trained detection and SAM segmentation models, along with device and output directory specifications. For a complete guide, see Auto-Annotation.

What datasets are used to train the Segment Anything Model (SAM)?

SAM is trained on the extensive SA-1B dataset, which comprises over 1 billion masks across 11 million images. SA-1B is the largest segmentation dataset to date, and its high-quality, diverse training data underpins SAM's zero-shot performance across varied segmentation tasks. For more details, visit the Dataset section.

comments: true

description: Discover SAM2, the next generation of Meta's Segment Anything Model, supporting real-time promptable segmentation in both images and videos with state-of-the-art performance. Learn about its key features, datasets, and how to use it.

keywords: SAM2, Segment Anything, video segmentation, image segmentation, promptable segmentation, zero-shot performance, SA-V dataset, Ultralytics, real-time segmentation, AI, machine learning

SAM2: Segment Anything Model 2

!!! Note "🚧 SAM2 Integration In Progress 🚧"

The SAM2 features described in this documentation are currently not enabled in the `ultralytics` package. The Ultralytics team is actively working on integrating SAM2, and these capabilities should be available soon. We appreciate your patience as we work to implement this exciting new model.

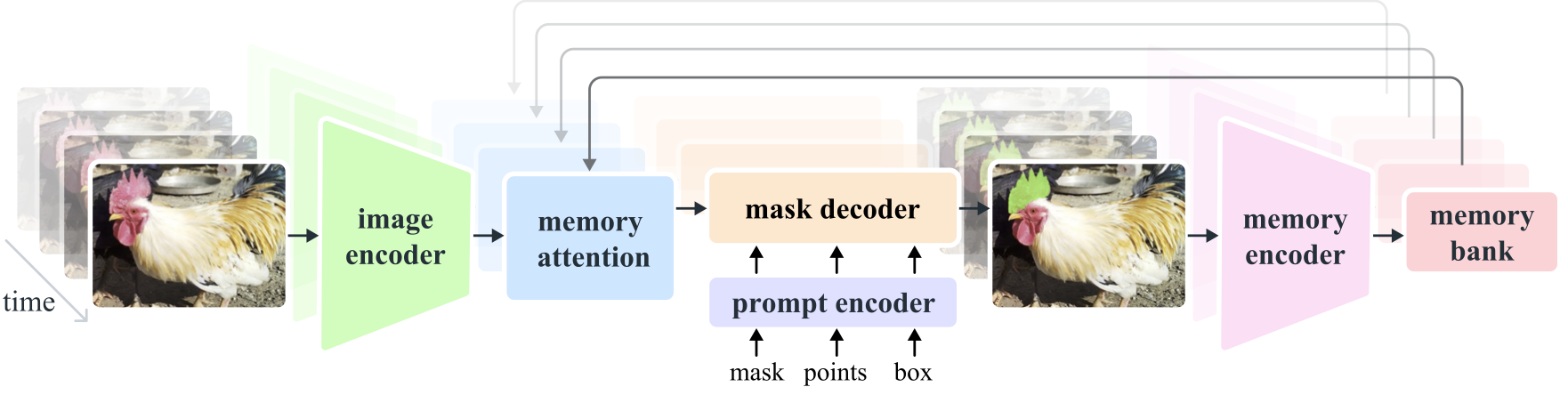

SAM2, the successor to Meta's Segment Anything Model (SAM), is a cutting-edge tool designed for comprehensive object segmentation in both images and videos. It excels in handling complex visual data through a unified, promptable model architecture that supports real-time processing and zero-shot generalization.

Key Features

Unified Model Architecture

SAM2 combines the capabilities of image and video segmentation in a single model. This unification simplifies deployment and allows for consistent performance across different media types. It leverages a flexible prompt-based interface, enabling users to specify objects of interest through various prompt types, such as points, bounding boxes, or masks.

Real-Time Performance

The model achieves real-time inference speeds, processing approximately 44 frames per second. This makes SAM2 suitable for applications requiring immediate feedback, such as video editing and augmented reality.

Zero-Shot Generalization

SAM2 can segment objects it has never encountered before, demonstrating strong zero-shot generalization. This is particularly useful in diverse or evolving visual domains where pre-defined categories may not cover all possible objects.

Interactive Refinement

Users can iteratively refine the segmentation results by providing additional prompts, allowing for precise control over the output. This interactivity is essential for fine-tuning results in applications like video annotation or medical imaging.
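Iterative refinement amounts to accumulating clicks. Positive points (label `1`) mark the object, negative points (label `0`) mark regions to exclude, and the whole set is re-submitted as one prompt. The helper below is a hypothetical sketch of that bookkeeping. It assumes that the prompt call accepts a list of `[x, y]` points with a matching `labels` list, extending the single-point examples in this document. Check the installed version before relying on multi-point prompts.

```py
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ClickSession:
    """Hypothetical accumulator for interactive point prompts (1 = foreground, 0 = background)."""

    points: List[List[int]] = field(default_factory=list)
    labels: List[int] = field(default_factory=list)

    def add(self, x: int, y: int, positive: bool = True) -> None:
        self.points.append([x, y])
        self.labels.append(1 if positive else 0)

    def undo(self) -> None:
        """Drop the most recent click, if any."""
        if self.points:
            self.points.pop()
            self.labels.pop()

    def as_prompt(self) -> Dict[str, list]:
        # Return copies so later clicks do not mutate a prompt that was already sent.
        return {"points": [p[:] for p in self.points], "labels": self.labels[:]}


session = ClickSession()
session.add(150, 150)  # first click on the object
session.add(190, 160, positive=False)  # exclude a region the mask leaked into
prompt = session.as_prompt()
# e.g. results = model("path/to/image.jpg", **prompt)  (assumes multi-point prompts are accepted)
```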

Advanced Handling of Visual Challenges

SAM2 includes mechanisms to manage common video segmentation challenges, such as object occlusion and reappearance. It uses a sophisticated memory mechanism to keep track of objects across frames, ensuring continuity even when objects are temporarily obscured or exit and re-enter the scene.

For a deeper understanding of SAM2's architecture and capabilities, explore the SAM2 research paper.

Performance and Technical Details

SAM2 sets a new benchmark in the field, outperforming previous models on various metrics:

| Metric | SAM2 | Previous SOTA |
|---|---|---|
| Interactive Video Segmentation | Best | - |
| Human Interactions Required | 3x fewer | Baseline |
| Image Segmentation Accuracy | Improved | SAM |
| Inference Speed | 6x faster | SAM |

Model Architecture

Core Components

- Image and Video Encoder: Utilizes a transformer-based architecture to extract high-level features from both images and video frames. This component is responsible for understanding the visual content at each timestep.

- Prompt Encoder: Processes user-provided prompts (points, boxes, masks) to guide the segmentation task. This allows SAM2 to adapt to user input and target specific objects within a scene.

- Memory Mechanism: Includes a memory encoder, memory bank, and memory attention module. These components collectively store and utilize information from past frames, enabling the model to maintain consistent object tracking over time.

- Mask Decoder: Generates the final segmentation masks based on the encoded image features and prompts. In video, it also uses memory context to ensure accurate tracking across frames.

Memory Mechanism and Occlusion Handling

The memory mechanism allows SAM2 to handle temporal dependencies and occlusions in video data. As objects move and interact, SAM2 records their features in a memory bank. When an object becomes occluded, the model can rely on this memory to predict its position and appearance when it reappears. The occlusion head specifically handles scenarios where objects are not visible, predicting the likelihood of an object being occluded.
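The memory idea can be made concrete with a toy sketch: a fixed-size FIFO bank of per-frame feature vectors, read with dot-product attention. This is a simplification for illustration only. SAM2's actual memory encoder, memory bank, and memory attention work on spatial feature maps with learned projections. The class and parameter names here are hypothetical.

```py
import math
from collections import deque
from typing import Deque, List

Vector = List[float]


class ToyMemoryBank:
    """FIFO store of recent frame features, read with softmax dot-product attention."""

    def __init__(self, max_frames: int):
        self.memories: Deque[Vector] = deque(maxlen=max_frames)  # oldest frame is evicted first

    def write(self, frame_features: Vector) -> None:
        self.memories.append(frame_features)

    def read(self, query: Vector) -> Vector:
        """Blend stored memories, weighting each by its similarity to the current frame."""
        if not self.memories:
            return list(query)  # nothing remembered yet: fall back to the current frame
        scale = 1.0 / math.sqrt(len(query))
        logits = [scale * sum(q * m for q, m in zip(query, mem)) for mem in self.memories]
        peak = max(logits)
        weights = [math.exp(x - peak) for x in logits]  # numerically stable softmax
        total = sum(weights)
        return [
            sum(w * mem[d] for w, mem in zip(weights, self.memories)) / total
            for d in range(len(query))
        ]


bank = ToyMemoryBank(max_frames=2)
bank.write([1.0, 0.0])
bank.write([0.0, 1.0])
bank.write([1.0, 1.0])  # evicts [1.0, 0.0]
print(len(bank.memories))  # 2
```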

Multi-Mask Ambiguity Resolution

In situations with ambiguity (e.g., overlapping objects), SAM2 can generate multiple mask predictions. This feature is crucial for accurately representing complex scenes where a single mask might not sufficiently describe the scene's nuances.
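When the decoder returns several candidate masks, a common way to choose one is the model's own quality estimate. The original SAM predicts an IoU score for each output mask. The helper below is a hypothetical sketch of that selection. Masks are represented as sets of pixel coordinates so the example needs no dependencies.

```py
from typing import List, Set, Tuple

Mask = Set[Tuple[int, int]]  # set of (row, col) pixels belonging to the mask


def pick_best_mask(masks: List[Mask], predicted_iou: List[float]) -> Tuple[int, Mask]:
    """Return (index, mask) of the candidate with the highest predicted IoU."""
    if not masks or len(masks) != len(predicted_iou):
        raise ValueError("need one predicted IoU score per candidate mask")
    best = max(range(len(masks)), key=lambda i: predicted_iou[i])
    return best, masks[best]


# Three nested candidates for one click: a part, the whole object, and object plus background.
part = {(0, 0)}
whole = {(0, 0), (0, 1), (1, 0), (1, 1)}
too_big = whole | {(2, 2), (2, 3)}
index, mask = pick_best_mask([part, whole, too_big], [0.62, 0.91, 0.55])
print(index)  # 1
```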

SA-V Dataset

The SA-V dataset, developed for SAM2's training, is one of the largest and most diverse video segmentation datasets available. It includes:

- 51,000+ Videos: Captured across 47 countries, providing a wide range of real-world scenarios.

- 600,000+ Mask Annotations: Detailed spatio-temporal mask annotations, referred to as "masklets," covering whole objects and parts.

- Dataset Scale: It features 4.5 times more videos and 53 times more annotations than the previous largest datasets, offering unprecedented diversity and complexity.

Benchmarks

Video Object Segmentation

SAM2 has demonstrated superior performance across major video segmentation benchmarks:

| Dataset | J&F | J | F |
|---|---|---|---|
| DAVIS 2017 | 82.5 | 79.8 | 85.2 |
| YouTube-VOS | 81.2 | 78.9 | 83.5 |
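In these benchmarks, J is region similarity: the Jaccard index, or IoU, between the predicted and ground-truth masks. F is contour accuracy, a boundary F-measure. J&F is the mean of the two. The sketch below computes J for masks stored as pixel sets and checks that the J&F column equals the average of the J and F columns. Computing F requires boundary extraction and matching, so it is omitted. The function names are illustrative.

```py
from typing import Set, Tuple

Mask = Set[Tuple[int, int]]


def jaccard(pred: Mask, gt: Mask) -> float:
    """Region similarity J: intersection over union (defined as 1.0 when both masks are empty)."""
    union = pred | gt
    return len(pred & gt) / len(union) if union else 1.0


def j_and_f(j: float, f: float) -> float:
    """J&F is the arithmetic mean of region similarity and contour accuracy."""
    return (j + f) / 2


# Rows from the table above: name, J, F, reported J&F
for name, j, f, reported in [("DAVIS 2017", 79.8, 85.2, 82.5), ("YouTube-VOS", 78.9, 83.5, 81.2)]:
    print(name, round(j_and_f(j, f), 1), reported)
```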

Interactive Segmentation

In interactive segmentation tasks, SAM2 shows significant efficiency and accuracy:

| Dataset | NoC@90 | AUC |
|---|---|---|
| DAVIS Interactive | 1.54 | 0.872 |
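NoC@90 is the number of clicks needed to reach 90% IoU, averaged over objects. Lower is better. The minimal sketch below assumes you already have the IoU after each successive click for every object. It also assumes that an object which never reaches the threshold is charged the full click budget. That is a common convention in interactive segmentation benchmarks, but it is an assumption here, as is the budget of 20.

```py
from typing import List, Sequence


def clicks_to_reach(ious: Sequence[float], threshold: float = 0.90, max_clicks: int = 20) -> int:
    """Clicks needed until IoU >= threshold; objects that never get there count as max_clicks."""
    for click, iou in enumerate(ious[:max_clicks], start=1):
        if iou >= threshold:
            return click
    return max_clicks


def noc(per_object_ious: List[Sequence[float]], threshold: float = 0.90, max_clicks: int = 20) -> float:
    """Mean number of clicks (NoC@threshold) over all objects."""
    counts = [clicks_to_reach(ious, threshold, max_clicks) for ious in per_object_ious]
    return sum(counts) / len(counts)


# IoU after click 1, 2, 3, ... for three hypothetical objects
history = [[0.93], [0.81, 0.92], [0.70, 0.85, 0.88, 0.91]]
print(noc(history))  # (1 + 2 + 4) / 3, about 2.33
```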

Installation

To install SAM2, use the following command. All SAM2 models will automatically download on first use.

```bash
pip install ultralytics
```

How to Use SAM2: Versatility in Image and Video Segmentation

!!! Note "🚧 SAM2 Integration In Progress 🚧"

The SAM2 features described in this documentation are currently not enabled in the `ultralytics` package. The Ultralytics team is actively working on integrating SAM2, and these capabilities should be available soon. We appreciate your patience as we work to implement this exciting new model.

The following table details the available SAM2 models, their pre-trained weights, supported tasks, and compatibility with different operating modes like Inference, Validation, Training, and Export.

| Model Type | Pre-trained Weights | Tasks Supported | Inference | Validation | Training | Export |
|---|---|---|---|---|---|---|
| SAM2 base | sam2_b.pt | Instance Segmentation | ✅ | ❌ | ❌ | ❌ |
| SAM2 large | sam2_l.pt | Instance Segmentation | ✅ | ❌ | ❌ | ❌ |

SAM2 Prediction Examples

SAM2 can be utilized across a broad spectrum of tasks, including real-time video editing, medical imaging, and autonomous systems. Its ability to segment both static and dynamic visual data makes it a versatile tool for researchers and developers.

Segment with Prompts

!!! Example "Segment with Prompts"

Use prompts to segment specific objects in images or videos.

=== "Python"

```py
from ultralytics import SAM2

# Load a model
model = SAM2("sam2_b.pt")

# Display model information (optional)
model.info()

# Segment with bounding box prompt
results = model("path/to/image.jpg", bboxes=[100, 100, 200, 200])

# Segment with point prompt
results = model("path/to/image.jpg", points=[150, 150], labels=[1])
```

Segment Everything

!!! Example "Segment Everything"

Segment the entire image or video content without specific prompts.

=== "Python"

```py
from ultralytics import SAM2

# Load a model
model = SAM2("sam2_b.pt")

# Display model information (optional)
model.info()

# Run inference
model("path/to/video.mp4")
```

=== "CLI"

```bash
# Run inference with a SAM2 model
yolo predict model=sam2_b.pt source=path/to/video.mp4
```

- This example demonstrates how SAM2 can be used to segment the entire content of an image or video if no prompts (bboxes/points/masks) are provided.

SAM comparison vs YOLOv8

Here we compare Meta's smallest SAM model, SAM-b, with Ultralytics' smallest segmentation model, YOLOv8n-seg, alongside MobileSAM and FastSAM-s:

| Model | Size | Parameters | Speed (CPU) |
|---|---|---|---|
| Meta's SAM-b | 358 MB | 94.7 M | 51096 ms/im |
| MobileSAM | 40.7 MB | 10.1 M | 46122 ms/im |
| FastSAM-s with YOLOv8 backbone | 23.7 MB | 11.8 M | 115 ms/im |
| Ultralytics YOLOv8n-seg | 6.7 MB (53.4x smaller) | 3.4 M (27.9x fewer) | 59 ms/im (866x faster) |

This comparison shows the order-of-magnitude differences in model size and speed between these models. While SAM offers unique capabilities for automatic segmentation, it is not a direct competitor to YOLOv8 segment models, which are smaller, faster, and more efficient.

Tests were run on a 2023 Apple M2 MacBook with 16GB of RAM. To reproduce this test:

!!! Example

=== "Python"

```py
from ultralytics import SAM, YOLO, FastSAM

# Profile SAM-b
model = SAM("sam_b.pt")
model.info()
model("ultralytics/assets")

# Profile MobileSAM
model = SAM("mobile_sam.pt")
model.info()
model("ultralytics/assets")

# Profile FastSAM-s
model = FastSAM("FastSAM-s.pt")
model.info()
model("ultralytics/assets")

# Profile YOLOv8n-seg
model = YOLO("yolov8n-seg.pt")
model.info()
model("ultralytics/assets")
```

Auto-Annotation: Efficient Dataset Creation

Auto-annotation is a powerful feature of SAM2, enabling users to generate segmentation datasets quickly and accurately by leveraging pre-trained models. This capability is particularly useful for creating large, high-quality datasets without extensive manual effort.

How to Auto-Annotate with SAM2

To auto-annotate your dataset using SAM2, follow this example:

!!! Example "Auto-Annotation Example"

```py
from ultralytics.data.annotator import auto_annotate

auto_annotate(data="path/to/images", det_model="yolov8x.pt", sam_model="sam2_b.pt")
```

| Argument | Type | Description | Default |
|---|---|---|---|
| `data` | `str` | Path to a folder containing images to be annotated. | |
| `det_model` | `str`, optional | Pre-trained YOLO detection model. Defaults to 'yolov8x.pt'. | `'yolov8x.pt'` |
| `sam_model` | `str`, optional | Pre-trained SAM2 segmentation model. Defaults to 'sam2_b.pt'. | `'sam2_b.pt'` |
| `device` | `str`, optional | Device to run the models on. Defaults to an empty string (CPU or GPU, if available). | `''` |
| `output_dir` | `str`, `None`, optional | Directory to save the annotated results. Defaults to a 'labels' folder in the same directory as 'data'. | `None` |

This function facilitates the rapid creation of high-quality segmentation datasets, ideal for researchers and developers aiming to accelerate their projects.

Limitations

Despite its strengths, SAM2 has certain limitations:

- Tracking Stability: SAM2 may lose track of objects during extended sequences or significant viewpoint changes.

- Object Confusion: The model can sometimes confuse similar-looking objects, particularly in crowded scenes.

- Efficiency with Multiple Objects: Segmentation efficiency decreases when processing multiple objects simultaneously due to the lack of inter-object communication.

- Detail Accuracy: May miss fine details, especially with fast-moving objects. Additional prompts can partially address this issue, but temporal smoothness is not guaranteed.

Citations and Acknowledgements

If SAM2 is a crucial part of your research or development work, please cite it using the following reference:

!!! Quote ""

=== "BibTeX"

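```bibtex
@article{ravi2024sam2,
  title={SAM 2: Segment Anything in Images and Videos},
  author={Nikhila Ravi and Valentin Gabeur and Yuan-Ting Hu and Ronghang Hu and Chaitanya Ryali and Tengyu Ma and Haitham Khedr and Roman Rädle and Chloe Rolland and Laura Gustafson and Eric Mintun and Junting Pan and Kalyan Vasudev Alwala and Nicolas Carion and Chao-Yuan Wu and Ross Girshick and Piotr Dollár and Christoph Feichtenhofer},
  journal={arXiv preprint arXiv:2408.00714},
  year={2024}
}
```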

We extend our gratitude to Meta AI for their contributions to the AI community with this groundbreaking model and dataset.

FAQ

What is SAM2 and how does it improve upon the original Segment Anything Model (SAM)?

SAM2, the successor to Meta's Segment Anything Model (SAM), is a cutting-edge tool designed for comprehensive object segmentation in both images and videos. It excels in handling complex visual data through a unified, promptable model architecture that supports real-time processing and zero-shot generalization. SAM2 offers several improvements over the original SAM, including:

- Unified Model Architecture: Combines image and video segmentation capabilities in a single model.

- Real-Time Performance: Processes approximately 44 frames per second, making it suitable for applications requiring immediate feedback.

- Zero-Shot Generalization: Segments objects it has never encountered before, useful in diverse visual domains.

- Interactive Refinement: Allows users to iteratively refine segmentation results by providing additional prompts.

- Advanced Handling of Visual Challenges: Manages common video segmentation challenges like object occlusion and reappearance.

For more details on SAM2's architecture and capabilities, explore the SAM2 research paper.

How can I use SAM2 for real-time video segmentation?

SAM2 can be utilized for real-time video segmentation by leveraging its promptable interface and real-time inference capabilities. Here's a basic example:

!!! Example "Segment with Prompts"

Use prompts to segment specific objects in images or videos.

=== "Python"

```py
from ultralytics import SAM2

# Load a model
model = SAM2("sam2_b.pt")

# Display model information (optional)
model.info()

# Segment with bounding box prompt
results = model("path/to/image.jpg", bboxes=[100, 100, 200, 200])

# Segment with point prompt
results = model("path/to/image.jpg", points=[150, 150], labels=[1])
```

For more comprehensive usage, refer to the How to Use SAM2 section.

What datasets are used to train SAM2, and how do they enhance its performance?

SAM2 is trained on the SA-V dataset, one of the largest and most diverse video segmentation datasets available. The SA-V dataset includes:

- 51,000+ Videos: Captured across 47 countries, providing a wide range of real-world scenarios.

- 600,000+ Mask Annotations: Detailed spatio-temporal mask annotations, referred to as "masklets," covering whole objects and parts.

- Dataset Scale: Features 4.5 times more videos and 53 times more annotations than the previous largest datasets, offering unprecedented diversity and complexity.

This extensive dataset allows SAM2 to achieve superior performance across major video segmentation benchmarks and enhances its zero-shot generalization capabilities. For more information, see the SA-V Dataset section.

How does SAM2 handle occlusions and object reappearances in video segmentation?

SAM2 includes a sophisticated memory mechanism to manage temporal dependencies and occlusions in video data. The memory mechanism consists of:

- Memory Encoder and Memory Bank: Stores features from past frames.

- Memory Attention Module: Utilizes stored information to maintain consistent object tracking over time.

- Occlusion Head: Specifically handles scenarios where objects are not visible, predicting the likelihood of an object being occluded.

This mechanism ensures continuity even when objects are temporarily obscured or exit and re-enter the scene. For more details, refer to the Memory Mechanism and Occlusion Handling section.
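The memory read described above can be illustrated with a minimal pure-Python sketch. It uses a fixed-size FIFO bank of past-frame features and scaled dot-product attention from the current frame's query over those memories. The `MemoryBank` class, its `max_frames` size, and the tiny 2-D vectors are hypothetical simplifications. SAM2's actual memory encoder and memory attention module operate on spatial feature maps and are considerably more involved.

```python
import math
from collections import deque


def softmax(scores):
    # Subtract the max before exponentiating for numerical stability
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def memory_attention(query, keys, values):
    """Scaled dot-product attention of one query vector over stored (key, value) memories."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # Output is the attention-weighted sum of the stored value vectors
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return output, weights


class MemoryBank:
    """Hypothetical fixed-size FIFO memory of per-frame features (oldest frames are evicted)."""

    def __init__(self, max_frames=6):
        self.keys = deque(maxlen=max_frames)
        self.values = deque(maxlen=max_frames)

    def add(self, key, value):
        self.keys.append(key)
        self.values.append(value)

    def read(self, query):
        # With an empty memory (e.g. the first frame) there is nothing to attend to
        if not self.keys:
            return None, []
        return memory_attention(query, list(self.keys), list(self.values))


# Usage: store three frames in a bank that only keeps the two most recent
bank = MemoryBank(max_frames=2)
bank.add([1.0, 0.0], [10.0, 0.0])  # frame 1 (evicted when frame 3 arrives)
bank.add([0.0, 1.0], [0.0, 10.0])  # frame 2
bank.add([1.0, 1.0], [5.0, 5.0])   # frame 3
output, weights = bank.read([1.0, 0.0])  # query from the current frame
```

Because the bank holds several past frames rather than only the previous one, a query can still match features stored before an object was hidden. This is the intuition behind recovering objects that reappear.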

How does SAM2 compare to other segmentation models like YOLOv8?

SAM2 and Ultralytics YOLOv8 serve different purposes and excel in different areas. SAM2 is designed for comprehensive, promptable object segmentation with advanced features like zero-shot generalization and real-time performance, whereas YOLOv8 is optimized for speed and efficiency in object detection and segmentation tasks. The table below compares model size, parameter count, and CPU speed for Meta's SAM-b, MobileSAM, FastSAM-s, and YOLOv8n-seg:

| Model | Size | Parameters | Speed (CPU) |
|---|---|---|---|
| Meta's SAM-b | 358 MB | 94.7 M | 51096 ms/im |
| MobileSAM | 40.7 MB | 10.1 M | 46122 ms/im |
| FastSAM-s with YOLOv8 backbone | 23.7 MB | 11.8 M | 115 ms/im |
| Ultralytics YOLOv8n-seg | 6.7 MB (53.4x smaller) | 3.4 M (27.9x fewer) | 59 ms/im (866x faster) |
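The multipliers in the YOLOv8n-seg row are relative to Meta's SAM-b. The short snippet below recomputes them from the table's own numbers, which is a quick way to sanity-check this kind of comparison. The `relative_to` helper is illustrative and is not part of the `ultralytics` package.

```python
# Model size (MB), parameters (M) and CPU speed (ms/image), copied from the table above
MODELS = {
    "SAM-b": (358.0, 94.7, 51096.0),
    "MobileSAM": (40.7, 10.1, 46122.0),
    "FastSAM-s": (23.7, 11.8, 115.0),
    "YOLOv8n-seg": (6.7, 3.4, 59.0),
}


def relative_to(baseline, target, table=MODELS):
    """Return how many times smaller, fewer-parameter and faster `target` is than `baseline`."""
    b_size, b_params, b_ms = table[baseline]
    t_size, t_params, t_ms = table[target]
    return b_size / t_size, b_params / t_params, b_ms / t_ms


size_x, params_x, speed_x = relative_to("SAM-b", "YOLOv8n-seg")
print(f"{size_x:.1f}x smaller, {params_x:.1f}x fewer params, {speed_x:.0f}x faster")
# prints "53.4x smaller, 27.9x fewer params, 866x faster"
```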

For more details, see the SAM comparison vs YOLOv8 section.

comments: true

description: Discover YOLO-NAS by Deci AI - a state-of-the-art object detection model with quantization support. Explore features, pretrained models, and implementation examples.

keywords: YOLO-NAS, Deci AI, object detection, deep learning, Neural Architecture Search, Ultralytics, Python API, YOLO model, SuperGradients, pretrained models, quantization, AutoNAC

YOLO-NAS

Overview

Developed by Deci AI, YOLO-NAS is a groundbreaking object detection foundational model. It is the product of advanced Neural Architecture Search technology, meticulously designed to address the limitations of previous YOLO models. With significant improvements in quantization support and accuracy-latency trade-offs, YOLO-NAS represents a major leap in object detection.

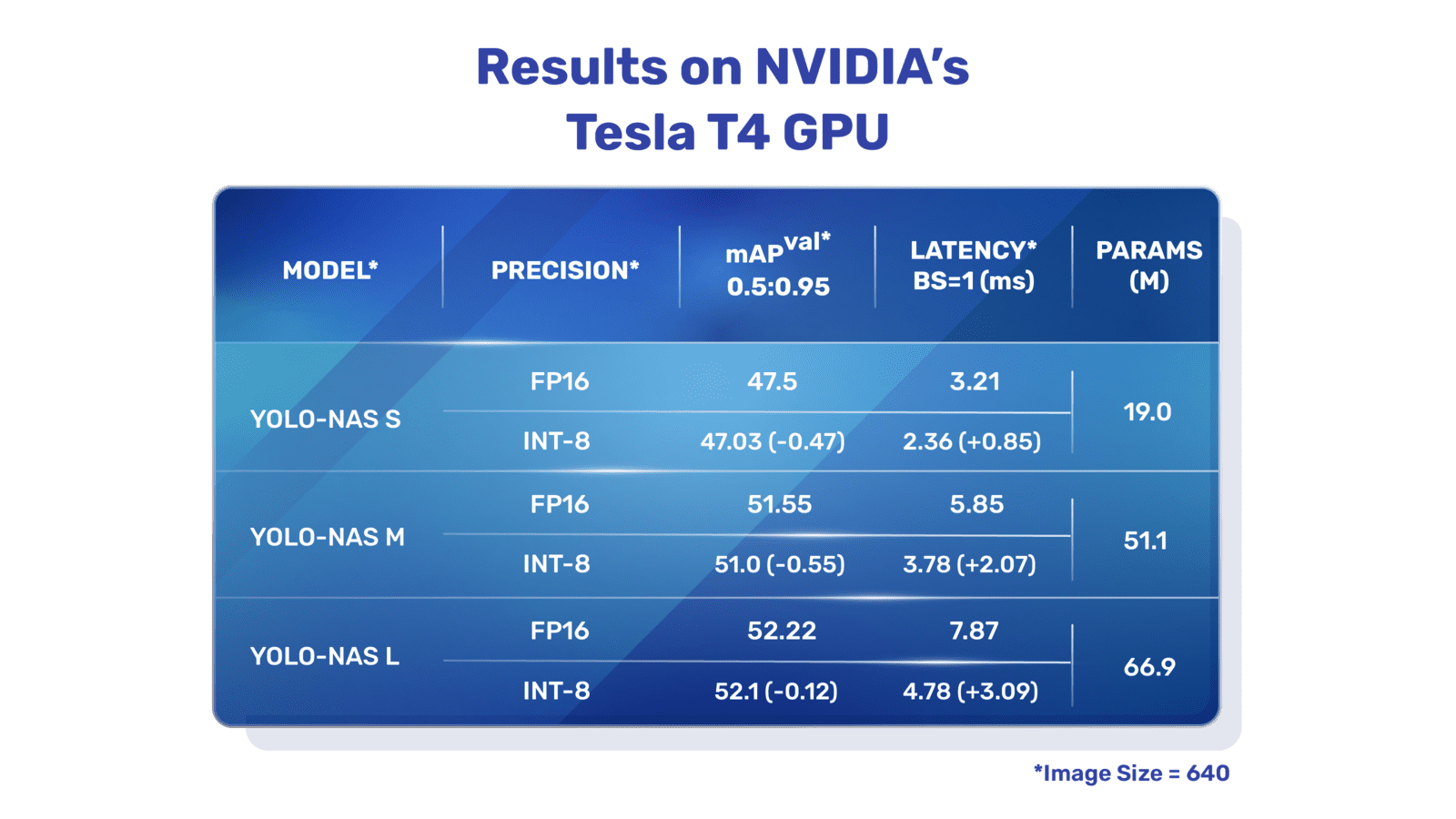

Overview of YOLO-NAS. YOLO-NAS employs quantization-aware blocks and selective quantization for optimal performance. The model, when converted to its INT8 quantized version, experiences a minimal precision drop, a significant improvement over other models. These advancements culminate in a superior architecture with unprecedented object detection capabilities and outstanding performance.

Key Features

- Quantization-Friendly Basic Block: YOLO-NAS introduces a new basic block that is friendly to quantization, addressing one of the significant limitations of previous YOLO models.

- Sophisticated Training and Quantization: YOLO-NAS leverages advanced training schemes and post-training quantization to enhance performance.

- AutoNAC Optimization and Pre-training: YOLO-NAS utilizes AutoNAC optimization and is pre-trained on prominent datasets such as COCO, Objects365, and Roboflow 100. This pre-training makes it extremely suitable for downstream object detection tasks in production environments.
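The quantization features above rely on converting floating-point weights and activations to 8-bit integers. The following is a generic illustration, not YOLO-NAS's specific scheme, which this page does not detail. It is a minimal sketch of symmetric per-tensor INT8 quantization in plain Python, and the helper names are hypothetical. The round-trip error per value is bounded by half a quantization step. This is the kind of precision loss that quantization-friendly designs try to keep small.

```python
def quantize_int8(values):
    """Symmetric per-tensor INT8 quantization: floats -> integers in [-127, 127] plus one scale."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize_int8(q, scale):
    """Map the integers back to approximate float values."""
    return [x * scale for x in q]


weights = [0.52, -1.27, 0.003, 0.9, -0.31]
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
# Rounding error per value is at most half a quantization step (scale / 2);
# very small weights such as 0.003 collapse to 0
max_err = max(abs(w - r) for w, r in zip(weights, restored))
print(q, round(max_err, 4))
```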

Pre-trained Models

Experience the power of next-generation object detection with the pre-trained YOLO-NAS models provided by Ultralytics. These models are designed to deliver top-notch performance in terms of both speed and accuracy. Choose from a variety of options tailored to your specific needs:

| Model | mAP | Latency (ms) |
|---|---|---|
| YOLO-NAS S | 47.5 | 3.21 |
| YOLO-NAS M | 51.55 | 5.85 |
| YOLO-NAS L | 52.22 | 7.87 |
| YOLO-NAS S INT-8 | 47.03 | 2.36 |
| YOLO-NAS M INT-8 | 51.0 | 3.78 |
| YOLO-NAS L INT-8 | 52.1 | 4.78 |

Each model variant is designed to offer a balance between Mean Average Precision (mAP) and latency, helping you optimize your object detection tasks for both performance and speed.
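To make the INT-8 trade-off concrete, the sketch below computes, for each size, how much lower the INT-8 latency is and how many mAP points are lost, using only the numbers in the table. The `int8_tradeoff` helper is illustrative, and "base" simply denotes the non-INT-8 rows, whose numeric precision this page does not state.

```python
# (mAP, latency in ms) for each variant, copied from the Pre-trained Models table
BASE = {"S": (47.5, 3.21), "M": (51.55, 5.85), "L": (52.22, 7.87)}
INT8 = {"S": (47.03, 2.36), "M": (51.0, 3.78), "L": (52.1, 4.78)}


def int8_tradeoff(base, quantized):
    """Return {variant: (latency speedup, mAP drop)} of each INT-8 model relative to its base model."""
    result = {}
    for name, (map_base, ms_base) in base.items():
        map_q, ms_q = quantized[name]
        result[name] = (ms_base / ms_q, map_base - map_q)
    return result


for name, (speedup, drop) in int8_tradeoff(BASE, INT8).items():
    print(f"YOLO-NAS {name} INT-8: {speedup:.2f}x lower latency, {drop:.2f} mAP lower")
```

From these figures, INT-8 lowers latency by about 1.36x (S) to 1.65x (L) while costing between 0.12 and 0.55 mAP points.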

Usage Examples

Ultralytics has made YOLO-NAS models easy to integrate into your Python applications via our `ultralytics` Python package, which provides a user-friendly Python API to streamline the process.

The following examples show how to use YOLO-NAS models with the ultralytics package for inference and validation:

Inference and Validation Examples

In this example, we validate YOLO-NAS-s on the COCO8 dataset and run inference on a sample image.

!!! Example

This example provides simple inference and validation code for YOLO-NAS. For handling inference results, see [Predict](../modes/predict.md) mode. For using YOLO-NAS with additional modes, see [Val](../modes/val.md) and [Export](../modes/export.md). YOLO-NAS in the `ultralytics` package does not support training.

=== "Python"

PyTorch pretrained `*.pt` model files can be passed to the `NAS()` class to create a model instance in Python:

```py
from ultralytics import NAS

# Load a COCO-pretrained YOLO-NAS-s model
model = NAS("yolo_nas_s.pt")

# Display model information (optional)
model.info()

# Validate the model on the COCO8 example dataset
results = model.val(data="coco8.yaml")

# Run inference with the YOLO-NAS-s model on the 'bus.jpg' image
results = model("path/to/bus.jpg")
```

=== "CLI"

CLI commands are available to directly run the models:

```bash
# Load a COCO-pretrained YOLO-NAS-s model and validate its performance on the COCO8 example dataset
yolo val model=yolo_nas_s.pt data=coco8.yaml

# Load a COCO-pretrained YOLO-NAS-s model and run inference on the 'bus.jpg' image
yolo predict model=yolo_nas_s.pt source=path/to/bus.jpg
```

Supported Tasks and Modes

We offer three variants of the YOLO-NAS models: Small (s), Medium (m), and Large (l). Each variant is designed to cater to different computational and performance needs:

- YOLO-NAS-s: Optimized for environments where computational resources are limited but efficiency is key.

- YOLO-NAS-m: Offers a balanced approach, suitable for general-purpose object detection with higher accuracy.

- YOLO-NAS-l: Tailored for scenarios requiring the highest accuracy, where computational resources are less of a constraint.
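One way to act on these trade-offs is to pick the most accurate variant that fits a latency budget. The sketch below does this with the mAP and latency figures from the Pre-trained Models table. `pick_variant` and the example budgets are illustrative. Because this page does not state the hardware behind those latencies, budgets are best set against your own measurements.

```python
# (name, mAP, latency in ms), copied from the Pre-trained Models table
VARIANTS = [
    ("YOLO-NAS S", 47.5, 3.21),
    ("YOLO-NAS M", 51.55, 5.85),
    ("YOLO-NAS L", 52.22, 7.87),
    ("YOLO-NAS S INT-8", 47.03, 2.36),
    ("YOLO-NAS M INT-8", 51.0, 3.78),
    ("YOLO-NAS L INT-8", 52.1, 4.78),
]


def pick_variant(latency_budget_ms, variants=VARIANTS):
    """Return the highest-mAP variant whose latency fits the budget, or None if none fits."""
    candidates = [v for v in variants if v[2] <= latency_budget_ms]
    return max(candidates, key=lambda v: v[1]) if candidates else None


print(pick_variant(4.0))  # fastest-fitting candidates are S, S INT-8 and M INT-8
```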

Below is a detailed overview of each model, including links to their pre-trained weights, the tasks they support, and their compatibility with different operating modes.

| Model Type | Pre-trained Weights | Tasks Supported | Inference | Validation | Training | Export |
|---|---|---|---|---|---|---|
| YOLO-NAS-s | yolo_nas_s.pt | Object Detection | ✅ | ✅ | ❌ | ✅ |
| YOLO-NAS-m | yolo_nas_m.pt | Object Detection | ✅ | ✅ | ❌ | ✅ |
| YOLO-NAS-l | yolo_nas_l.pt | Object Detection | ✅ | ✅ | ❌ | ✅ |

Citations and Acknowledgements

If you employ YOLO-NAS in your research or development work, please cite SuperGradients:

!!! Quote ""

=== "BibTeX"

```bibtex
@misc{supergradients,
      doi = {10.5281/ZENODO.7789328},
      url = {https://zenodo.org/record/7789328},
      author = {Aharon, Shay and {Louis-Dupont} and {Ofri Masad} and Yurkova, Kate and {Lotem Fridman} and {Lkdci} and Khvedchenya, Eugene and Rubin, Ran and Bagrov, Natan and Tymchenko, Borys and Keren, Tomer and Zhilko, Alexander and {Eran-Deci}},
      title = {Super-Gradients},
      publisher = {GitHub},
      journal = {GitHub repository},
      year = {2021},
}
```

We express our gratitude to Deci AI's SuperGradients team for their efforts in creating and maintaining this valuable resource for the computer vision community. We believe YOLO-NAS, with its innovative architecture and superior object detection capabilities, will become a critical tool for developers and researchers alike.

FAQ

What is YOLO-NAS and how does it improve over previous YOLO models?

YOLO-NAS, developed by Deci AI, is a state-of-the-art object detection model leveraging advanced Neural Architecture Search (NAS) technology. It addresses the limitations of previous YOLO models by introducing features like quantization-friendly basic blocks and sophisticated training schemes. This results in significant improvements in performance, particularly in environments with limited computational resources. YOLO-NAS also supports quantization, maintaining high accuracy even when converted to its INT8 version, enhancing its suitability for production environments. For more details, see the Overview section.

How can I integrate YOLO-NAS models into my Python application?

You can easily integrate YOLO-NAS models into your Python application using the `ultralytics` package. Here's a simple example of how to load a pre-trained YOLO-NAS model, validate it, and run inference:

```py
from ultralytics import NAS

# Load a COCO-pretrained YOLO-NAS-s model
model = NAS("yolo_nas_s.pt")

# Validate the model on the COCO8 example dataset
results = model.val(data="coco8.yaml")

# Run inference with the YOLO-NAS-s model on the 'bus.jpg' image
results = model("path/to/bus.jpg")
```

For more information, refer to the Inference and Validation Examples.

What are the key features of YOLO-NAS and why should I consider using it?

YOLO-NAS introduces several key features that make it a superior choice for object detection tasks:

- Quantization-Friendly Basic Block: Enhanced architecture that improves model performance with minimal precision drop after quantization.

- Sophisticated Training and Quantization: Employs advanced training schemes and post-training quantization techniques.

- AutoNAC Optimization and Pre-training: Utilizes AutoNAC optimization and is pre-trained on prominent datasets like COCO, Objects365, and Roboflow 100.

These features contribute to its high accuracy, efficient performance, and suitability for deployment in production environments. Learn more in the Key Features section.

Which tasks and modes are supported by YOLO-NAS models?

YOLO-NAS models support the object detection task in inference, validation, and export modes. They do not support training. The available models are YOLO-NAS-s, YOLO-NAS-m, and YOLO-NAS-l, each tailored to different computational capacities and performance needs. For a detailed overview, refer to the Supported Tasks and Modes section.

Are there pre-trained YOLO-NAS models available and how do I access them?

Yes, Ultralytics provides pre-trained YOLO-NAS models that you can access directly. These models are pre-trained on datasets like COCO, ensuring high performance in terms of both speed and accuracy. You can download these models using the links provided in the Pre-trained Models section. Here are some examples:

comments: true

description: Explore the YOLO-World Model for efficient, real-time open-vocabulary object detection using Ultralytics YOLOv8 advancements. Achieve top performance with minimal computation.

keywords: YOLO-World, Ultralytics, open-vocabulary detection, YOLOv8, real-time object detection, machine learning, computer vision, AI, deep learning, model training

YOLO-World Model