YOLOv8 Source Code Analysis (Part 16)

comments: true

description: Discover YOLOv10, the latest in real-time object detection, eliminating NMS and boosting efficiency. Achieve top performance with a low computational cost.

keywords: YOLOv10, real-time object detection, NMS-free, deep learning, Tsinghua University, Ultralytics, machine learning, neural networks, performance optimization

YOLOv10: Real-Time End-to-End Object Detection

YOLOv10, built on the Ultralytics Python package by researchers at Tsinghua University, introduces a new approach to real-time object detection, addressing both the post-processing and model architecture deficiencies found in previous YOLO versions. By eliminating non-maximum suppression (NMS) and optimizing various model components, YOLOv10 achieves state-of-the-art performance with significantly reduced computational overhead. Extensive experiments demonstrate its superior accuracy-latency trade-offs across multiple model scales.

Overview

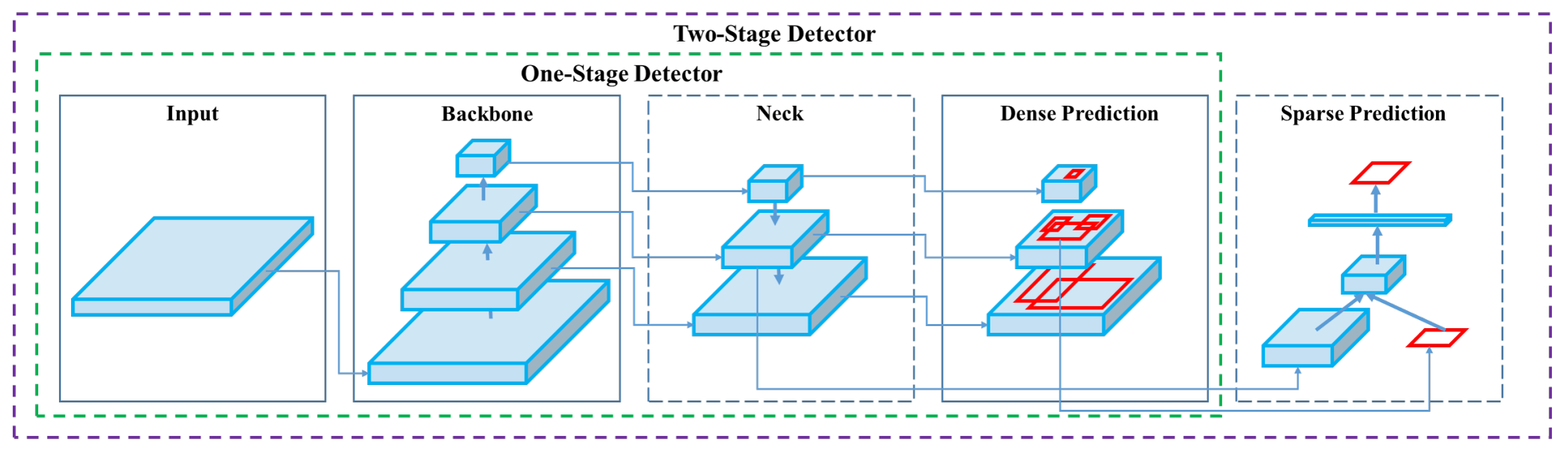

Real-time object detection aims to accurately predict object categories and positions in images with low latency. The YOLO series has been at the forefront of this research due to its balance between performance and efficiency. However, reliance on NMS and architectural inefficiencies have hindered optimal performance. YOLOv10 addresses these issues by introducing consistent dual assignments for NMS-free training and a holistic efficiency-accuracy driven model design strategy.

Architecture

The architecture of YOLOv10 builds upon the strengths of previous YOLO models while introducing several key innovations. The model architecture consists of the following components:

- Backbone: Responsible for feature extraction, the backbone in YOLOv10 uses an enhanced version of CSPNet (Cross Stage Partial Network) to improve gradient flow and reduce computational redundancy.

- Neck: The neck aggregates features from different scales and passes them to the head. It includes PAN (Path Aggregation Network) layers for effective multiscale feature fusion.

- One-to-Many Head: Generates multiple predictions per object during training to provide rich supervisory signals and improve learning accuracy.

- One-to-One Head: Generates a single best prediction per object during inference to eliminate the need for NMS, thereby reducing latency and improving efficiency.

Key Features

- NMS-Free Training: Utilizes consistent dual assignments to eliminate the need for NMS, reducing inference latency.

- Holistic Model Design: Comprehensive optimization of various components from both efficiency and accuracy perspectives, including lightweight classification heads, spatial-channel decoupled downsampling, and rank-guided block design.

- Enhanced Model Capabilities: Incorporates large-kernel convolutions and partial self-attention modules to improve performance without significant computational cost.

Model Variants

YOLOv10 comes in various model scales to cater to different application needs:

- YOLOv10-N: Nano version for extremely resource-constrained environments.

- YOLOv10-S: Small version balancing speed and accuracy.

- YOLOv10-M: Medium version for general-purpose use.

- YOLOv10-B: Balanced version with increased width for higher accuracy.

- YOLOv10-L: Large version for higher accuracy at the cost of increased computational resources.

- YOLOv10-X: Extra-large version for maximum accuracy and performance.

Performance

YOLOv10 outperforms previous YOLO versions and other state-of-the-art models in terms of accuracy and efficiency. For example, YOLOv10-S is 1.8x faster than RT-DETR-R18 with similar AP on the COCO dataset, and YOLOv10-B has 46% less latency and 25% fewer parameters than YOLOv9-C with the same performance.

| Model | Input Size | APval | FLOPs (G) | Latency (ms) |

|---|---|---|---|---|

| YOLOv10-N | 640 | 38.5 | 6.7 | 1.84 |

| YOLOv10-S | 640 | 46.3 | 21.6 | 2.49 |

| YOLOv10-M | 640 | 51.1 | 59.1 | 4.74 |

| YOLOv10-B | 640 | 52.5 | 92.0 | 5.74 |

| YOLOv10-L | 640 | 53.2 | 120.3 | 7.28 |

| YOLOv10-X | 640 | 54.4 | 160.4 | 10.70 |

Latency measured with TensorRT FP16 on T4 GPU.

Methodology

Consistent Dual Assignments for NMS-Free Training

YOLOv10 employs dual label assignments, combining one-to-many and one-to-one strategies during training to ensure rich supervision and efficient end-to-end deployment. The consistent matching metric aligns the supervision between both strategies, enhancing the quality of predictions during inference.
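
The idea of a shared matching metric can be sketched in a few lines of plain Python. This is a simplified illustration, not the Ultralytics implementation: it assumes the metric has the form `m = s * p**alpha * IoU**beta` described in the YOLOv10 paper, the `alpha` and `beta` values are illustrative defaults, the candidate predictions are made up, and the helper names `matching_metric` and `assign` are hypothetical. It also handles only a single ground-truth object, so conflicts between objects are ignored.

```python
def matching_metric(cls_score, iou, spatial_prior=1.0, alpha=0.5, beta=6.0):
    """Score one (prediction, ground-truth) pair: m = s * p**alpha * IoU**beta.

    alpha and beta are illustrative values chosen for this sketch.
    """
    return spatial_prior * (cls_score ** alpha) * (iou ** beta)


def assign(predictions, top_k):
    """Return the indices of the top_k predictions ranked by the matching metric."""
    scores = [matching_metric(p["cls_score"], p["iou"]) for p in predictions]
    ranked = sorted(range(len(predictions)), key=lambda i: scores[i], reverse=True)
    return ranked[:top_k]


# Hypothetical candidate predictions for a single ground-truth object.
candidates = [
    {"cls_score": 0.90, "iou": 0.60},
    {"cls_score": 0.70, "iou": 0.85},
    {"cls_score": 0.40, "iou": 0.90},
    {"cls_score": 0.80, "iou": 0.30},
]

# One-to-many branch: several positives per object give rich supervision in training.
one_to_many = assign(candidates, top_k=3)

# One-to-one branch: a single positive per object, so no NMS is needed at inference.
one_to_one = assign(candidates, top_k=1)

print("one-to-many positives:", one_to_many)
print("one-to-one positive:  ", one_to_one)
```

Because both branches rank candidates with the same metric, the single positive chosen by the one-to-one branch is also the best positive of the one-to-many branch, which is the sense in which the two forms of supervision stay aligned.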

Holistic Efficiency-Accuracy Driven Model Design

Efficiency Enhancements

- Lightweight Classification Head: Reduces the computational overhead of the classification head by using depth-wise separable convolutions.

- Spatial-Channel Decoupled Downsampling: Decouples spatial reduction and channel modulation to minimize information loss and computational cost.

- Rank-Guided Block Design: Adapts block design based on intrinsic stage redundancy, ensuring optimal parameter utilization.
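
To get a feel for why decoupling spatial reduction from channel modulation saves computation, the parameter counts can be compared directly. The sketch below assumes the arrangement described in the YOLOv10 paper (a 1x1 pointwise convolution that doubles the channels, followed by a 3x3 stride-2 depthwise convolution) and compares it with a single 3x3 stride-2 convolution. Biases and normalization layers are ignored, and the function names are hypothetical.

```python
def standard_downsample_params(c):
    """Weights of one 3x3 stride-2 convolution mapping c channels to 2c channels."""
    return 3 * 3 * c * (2 * c)


def decoupled_downsample_params(c):
    """Weights of a 1x1 pointwise conv (c -> 2c) plus a 3x3 stride-2 depthwise conv on 2c channels."""
    pointwise = 1 * 1 * c * (2 * c)   # channel modulation
    depthwise = 3 * 3 * (2 * c)       # spatial reduction, one 3x3 filter per channel
    return pointwise + depthwise


for c in (64, 128, 256):
    std = standard_downsample_params(c)
    dec = decoupled_downsample_params(c)
    print(f"C={c}: standard={std:,}  decoupled={dec:,}  ratio={std / dec:.1f}x")
```

The standard layer grows as 18C² while the decoupled pair grows as 2C² + 18C, so the saving approaches 9x as the channel count increases.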

Accuracy Enhancements

- Large-Kernel Convolution: Enlarges the receptive field to enhance feature extraction capability.

- Partial Self-Attention (PSA): Incorporates self-attention modules to improve global representation learning with minimal overhead.

Experiments and Results

YOLOv10 has been extensively tested on standard benchmarks like COCO, demonstrating superior performance and efficiency. The model achieves state-of-the-art results across different variants, showcasing significant improvements in latency and accuracy compared to previous versions and other contemporary detectors.

Comparisons

Compared to other state-of-the-art detectors:

- YOLOv10-S / X are 1.8× / 1.3× faster than RT-DETR-R18 / R101 with similar accuracy

- YOLOv10-B has 25% fewer parameters and 46% lower latency than YOLOv9-C at the same accuracy

- YOLOv10-L / X outperform YOLOv8-L / X by 0.3 AP / 0.5 AP with 1.8× / 2.3× fewer parameters

Here is a detailed comparison of YOLOv10 variants with other state-of-the-art models:

| Model | Params (M) | FLOPs (G) | mAPval 50-95 | Latency (ms) | Latency-forward (ms) |

|---|---|---|---|---|---|

| YOLOv6-3.0-N | 4.7 | 11.4 | 37.0 | 2.69 | 1.76 |

| Gold-YOLO-N | 5.6 | 12.1 | 39.6 | 2.92 | 1.82 |

| YOLOv8-N | 3.2 | 8.7 | 37.3 | 6.16 | 1.77 |

| YOLOv10-N | 2.3 | 6.7 | 39.5 | 1.84 | 1.79 |

| YOLOv6-3.0-S | 18.5 | 45.3 | 44.3 | 3.42 | 2.35 |

| Gold-YOLO-S | 21.5 | 46.0 | 45.4 | 3.82 | 2.73 |

| YOLOv8-S | 11.2 | 28.6 | 44.9 | 7.07 | 2.33 |

| YOLOv10-S | 7.2 | 21.6 | 46.8 | 2.49 | 2.39 |

| RT-DETR-R18 | 20.0 | 60.0 | 46.5 | 4.58 | 4.49 |

| YOLOv6-3.0-M | 34.9 | 85.8 | 49.1 | 5.63 | 4.56 |

| Gold-YOLO-M | 41.3 | 87.5 | 49.8 | 6.38 | 5.45 |

| YOLOv8-M | 25.9 | 78.9 | 50.6 | 9.50 | 5.09 |

| YOLOv10-M | 15.4 | 59.1 | 51.3 | 4.74 | 4.63 |

| YOLOv6-3.0-L | 59.6 | 150.7 | 51.8 | 9.02 | 7.90 |

| Gold-YOLO-L | 75.1 | 151.7 | 51.8 | 10.65 | 9.78 |

| YOLOv8-L | 43.7 | 165.2 | 52.9 | 12.39 | 8.06 |

| RT-DETR-R50 | 42.0 | 136.0 | 53.1 | 9.20 | 9.07 |

| YOLOv10-L | 24.4 | 120.3 | 53.4 | 7.28 | 7.21 |

| YOLOv8-X | 68.2 | 257.8 | 53.9 | 16.86 | 12.83 |

| RT-DETR-R101 | 76.0 | 259.0 | 54.3 | 13.71 | 13.58 |

| YOLOv10-X | 29.5 | 160.4 | 54.4 | 10.70 | 10.60 |
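
The speed and size ratios quoted in the comparison list can be reproduced from the table rows. The snippet below copies the parameter counts and end-to-end latencies for the relevant models and recomputes the ratios. The helper names are hypothetical, and YOLOv9-C is left out because it does not appear in the table.

```python
# (params in millions, latency in ms) copied from the comparison table.
TABLE = {
    "YOLOv10-S": (7.2, 2.49),
    "RT-DETR-R18": (20.0, 4.58),
    "YOLOv10-X": (29.5, 10.70),
    "RT-DETR-R101": (76.0, 13.71),
    "YOLOv10-L": (24.4, 7.28),
    "YOLOv8-L": (43.7, 12.39),
    "YOLOv8-X": (68.2, 16.86),
}


def speedup(baseline, model):
    """How many times faster `model` is than `baseline`, based on latency."""
    return round(TABLE[baseline][1] / TABLE[model][1], 1)


def param_ratio(baseline, model):
    """How many times fewer parameters `model` has than `baseline`."""
    return round(TABLE[baseline][0] / TABLE[model][0], 1)


print("YOLOv10-S vs RT-DETR-R18 speedup: ", speedup("RT-DETR-R18", "YOLOv10-S"))
print("YOLOv10-X vs RT-DETR-R101 speedup:", speedup("RT-DETR-R101", "YOLOv10-X"))
print("YOLOv8-L / YOLOv10-L parameters:  ", param_ratio("YOLOv8-L", "YOLOv10-L"))
print("YOLOv8-X / YOLOv10-X parameters:  ", param_ratio("YOLOv8-X", "YOLOv10-X"))
```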

Usage Examples

For predicting new images with YOLOv10:

!!! Example

=== "Python"

```py

from ultralytics import YOLO

# Load a pre-trained YOLOv10n model

model = YOLO("yolov10n.pt")

# Perform object detection on an image

results = model("image.jpg")

# Display the results

results[0].show()

```

=== "CLI"

```bash

# Load a COCO-pretrained YOLOv10n model and run inference on the 'bus.jpg' image

yolo detect predict model=yolov10n.pt source=path/to/bus.jpg

```

For training YOLOv10 on a custom dataset:

!!! Example

=== "Python"

```py

from ultralytics import YOLO

# Load YOLOv10n model from scratch

model = YOLO("yolov10n.yaml")

# Train the model

model.train(data="coco8.yaml", epochs=100, imgsz=640)

```

=== "CLI"

```bash

# Build a YOLOv10n model from scratch and train it on the COCO8 example dataset for 100 epochs

yolo train model=yolov10n.yaml data=coco8.yaml epochs=100 imgsz=640

# Build a YOLOv10n model from scratch and run inference on the 'bus.jpg' image

yolo predict model=yolov10n.yaml source=path/to/bus.jpg

```

Supported Tasks and Modes

The YOLOv10 model series offers a range of models, each optimized for high-performance Object Detection. These models cater to varying computational needs and accuracy requirements, making them versatile for a wide array of applications.

| Model | Filenames | Tasks | Inference | Validation | Training | Export |

|---|---|---|---|---|---|---|

| YOLOv10 | yolov10n.pt yolov10s.pt yolov10m.pt yolov10l.pt yolov10x.pt | Object Detection | ✅ | ✅ | ✅ | ✅ |

Exporting YOLOv10

Due to the new operations introduced with YOLOv10, not all export formats provided by Ultralytics are currently supported. The following table outlines which formats have been successfully converted using Ultralytics for YOLOv10. If you can add export support for additional formats, feel free to open a pull request.

| Export Format | Supported |

|---|---|

| TorchScript | ✅ |

| ONNX | ✅ |

| OpenVINO | ✅ |

| TensorRT | ✅ |

| CoreML | ❌ |

| TF SavedModel | ✅ |

| TF GraphDef | ✅ |

| TF Lite | ✅ |

| TF Edge TPU | ❌ |

| TF.js | ❌ |

| PaddlePaddle | ❌ |

| NCNN | ❌ |
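
As a rough sketch, the table can be turned into a guard that checks a format before calling the Ultralytics `export()` method. The dictionary keys are the `format=` argument names used by the Ultralytics exporter, and the support flags are copied from the table at the time of writing. The names `YOLOV10_EXPORT_SUPPORT`, `is_export_supported`, and `export_yolov10` are hypothetical helpers, not part of the library.

```python
# Support flags copied from the export table; keys are Ultralytics `format=` names.
YOLOV10_EXPORT_SUPPORT = {
    "torchscript": True,
    "onnx": True,
    "openvino": True,
    "engine": True,        # TensorRT
    "coreml": False,
    "saved_model": True,   # TF SavedModel
    "pb": True,            # TF GraphDef
    "tflite": True,
    "edgetpu": False,      # TF Edge TPU
    "tfjs": False,
    "paddle": False,
    "ncnn": False,
}


def is_export_supported(fmt: str) -> bool:
    """Return True if the table lists the format as supported for YOLOv10."""
    return YOLOV10_EXPORT_SUPPORT.get(fmt.lower(), False)


def export_yolov10(weights: str = "yolov10n.pt", fmt: str = "onnx") -> str:
    """Export a YOLOv10 model, refusing formats the table marks as unsupported."""
    if not is_export_supported(fmt):
        raise ValueError(f"Export format '{fmt}' is not listed as supported for YOLOv10")
    # Imported lazily so the support check can be used without the package installed.
    from ultralytics import YOLO

    model = YOLO(weights)
    return model.export(format=fmt)


print(is_export_supported("onnx"))   # supported according to the table
print(is_export_supported("ncnn"))   # not supported according to the table
```

Calling `export_yolov10(fmt="onnx")` requires the `ultralytics` package and downloads the weights if they are not present locally.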

Conclusion

YOLOv10 sets a new standard in real-time object detection by addressing the shortcomings of previous YOLO versions and incorporating innovative design strategies. Its ability to deliver high accuracy with low computational cost makes it an ideal choice for a wide range of real-world applications.

Citations and Acknowledgements

We would like to acknowledge the YOLOv10 authors from Tsinghua University for their extensive research and significant contributions to the Ultralytics framework:

!!! Quote ""

=== "BibTeX"

```bibtex

@article{THU-MIGyolov10,

title={YOLOv10: Real-Time End-to-End Object Detection},

author={Ao Wang, Hui Chen, Lihao Liu, et al.},

journal={arXiv preprint arXiv:2405.14458},

year={2024},

institution={Tsinghua University},

license = {AGPL-3.0}

}

```

For detailed implementation, architectural innovations, and experimental results, please refer to the YOLOv10 research paper and GitHub repository by the Tsinghua University team.

FAQ

What is YOLOv10 and how does it differ from previous YOLO versions?

YOLOv10, developed by researchers at Tsinghua University, introduces several key innovations to real-time object detection. It eliminates the need for non-maximum suppression (NMS) by employing consistent dual assignments during training and optimized model components for superior performance with reduced computational overhead. For more details on its architecture and key features, check out the YOLOv10 overview section.

How can I get started with running inference using YOLOv10?

For easy inference, you can use the Ultralytics YOLO Python library or the command line interface (CLI). Below are examples of predicting new images using YOLOv10:

!!! Example

=== "Python"

```py

from ultralytics import YOLO

# Load the pre-trained YOLOv10-N model

model = YOLO("yolov10n.pt")

results = model("image.jpg")

results[0].show()

```

=== "CLI"

```bash

yolo detect predict model=yolov10n.pt source=path/to/image.jpg

```

For more usage examples, visit our Usage Examples section.

Which model variants does YOLOv10 offer and what are their use cases?

YOLOv10 offers several model variants to cater to different use cases:

- YOLOv10-N: Suitable for extremely resource-constrained environments

- YOLOv10-S: Balances speed and accuracy

- YOLOv10-M: General-purpose use

- YOLOv10-B: Higher accuracy with increased width

- YOLOv10-L: High accuracy at the cost of computational resources

- YOLOv10-X: Maximum accuracy and performance

Each variant is designed for different computational needs and accuracy requirements, making them versatile for a variety of applications. Explore the Model Variants section for more information.

How does the NMS-free approach in YOLOv10 improve performance?

YOLOv10 eliminates the need for non-maximum suppression (NMS) during inference by employing consistent dual assignments for training. This approach reduces inference latency and enhances prediction efficiency. The architecture also includes a one-to-one head for inference, ensuring that each object gets a single best prediction. For a detailed explanation, see the Consistent Dual Assignments for NMS-Free Training section.

Where can I find the export options for YOLOv10 models?

YOLOv10 supports several export formats, including TorchScript, ONNX, OpenVINO, and TensorRT. However, not all export formats provided by Ultralytics are currently supported for YOLOv10 due to its new operations. For details on the supported formats and instructions on exporting, visit the Exporting YOLOv10 section.

What are the performance benchmarks for YOLOv10 models?

YOLOv10 outperforms previous YOLO versions and other state-of-the-art models in both accuracy and efficiency. For example, YOLOv10-S is 1.8x faster than RT-DETR-R18 with a similar AP on the COCO dataset. YOLOv10-B shows 46% less latency and 25% fewer parameters than YOLOv9-C with the same performance. Detailed benchmarks can be found in the Comparisons section.

comments: true

description: Discover YOLOv3 and its variants YOLOv3-Ultralytics and YOLOv3u. Learn about their features, implementations, and support for object detection tasks.

keywords: YOLOv3, YOLOv3-Ultralytics, YOLOv3u, object detection, Ultralytics, computer vision, AI models, deep learning

YOLOv3, YOLOv3-Ultralytics, and YOLOv3u

Overview

This document presents an overview of three closely related object detection models, namely YOLOv3, YOLOv3-Ultralytics, and YOLOv3u.

- YOLOv3: This is the third version of the You Only Look Once (YOLO) object detection algorithm. Originally developed by Joseph Redmon, YOLOv3 improved on its predecessors by introducing features such as multiscale predictions and three different sizes of detection kernels.

- YOLOv3-Ultralytics: This is Ultralytics' implementation of the YOLOv3 model. It reproduces the original YOLOv3 architecture and offers additional functionalities, such as support for more pre-trained models and easier customization options.

- YOLOv3u: This is an updated version of YOLOv3-Ultralytics that incorporates the anchor-free, objectness-free split head used in YOLOv8 models. YOLOv3u maintains the same backbone and neck architecture as YOLOv3 but with the updated detection head from YOLOv8.

Key Features

- YOLOv3: Introduced detection at three different scales, producing output grids of 13x13, 26x26, and 52x52 for a 416x416 input. This significantly improved detection accuracy for objects of different sizes. Additionally, YOLOv3 added features such as multi-label predictions for each bounding box and a better feature extractor network.

- YOLOv3-Ultralytics: Ultralytics' implementation of YOLOv3 provides the same performance as the original model but comes with added support for more pre-trained models, additional training methods, and easier customization options. This makes it more versatile and user-friendly for practical applications.

- YOLOv3u: This updated model incorporates the anchor-free, objectness-free split head from YOLOv8. By eliminating the need for pre-defined anchor boxes and objectness scores, this detection head design can improve the model's ability to detect objects of varying sizes and shapes. This makes YOLOv3u more robust and accurate for object detection tasks.
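
The three detection scales, and the effect of dropping anchor boxes, can be made concrete with a little arithmetic. The sketch below assumes output strides of 32, 16, and 8, a 416x416 input with three anchors per grid cell for the original YOLOv3, and a 640x640 input with one prediction per cell for an anchor-free head such as the one in YOLOv3u. The function names are hypothetical.

```python
STRIDES = (32, 16, 8)  # downsampling factor of each detection scale


def grid_sizes(img_size):
    """Side length of the output grid at each detection scale."""
    return [img_size // stride for stride in STRIDES]


def num_predictions(img_size, per_cell):
    """Total number of box predictions across all three scales."""
    return sum(g * g for g in grid_sizes(img_size)) * per_cell


# Anchor-based YOLOv3: three anchors per cell on a 416x416 input.
print(grid_sizes(416))                    # [13, 26, 52]
print(num_predictions(416, per_cell=3))   # 10647

# Anchor-free head: one prediction per cell on a 640x640 input.
print(grid_sizes(640))                    # [20, 40, 80]
print(num_predictions(640, per_cell=1))   # 8400
```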

Supported Tasks and Modes

The YOLOv3 series, including YOLOv3, YOLOv3-Ultralytics, and YOLOv3u, is designed specifically for object detection tasks. These models are renowned for their effectiveness in various real-world scenarios, balancing accuracy and speed. Each variant offers unique features and optimizations, making them suitable for a range of applications.

All three models support a comprehensive set of modes, ensuring versatility in various stages of model deployment and development. These modes include Inference, Validation, Training, and Export, providing users with a complete toolkit for effective object detection.

| Model Type | Tasks Supported | Inference | Validation | Training | Export |

|---|---|---|---|---|---|

| YOLOv3 | Object Detection | ✅ | ✅ | ✅ | ✅ |

| YOLOv3-Ultralytics | Object Detection | ✅ | ✅ | ✅ | ✅ |

| YOLOv3u | Object Detection | ✅ | ✅ | ✅ | ✅ |

This table provides an at-a-glance view of the capabilities of each YOLOv3 variant, highlighting their versatility and suitability for various tasks and operational modes in object detection workflows.

Usage Examples

This section provides simple YOLOv3 training and inference examples. For full documentation on these and other modes, see the Predict, Train, Val and Export docs pages.

!!! Example

=== "Python"

PyTorch pretrained `*.pt` models as well as configuration `*.yaml` files can be passed to the `YOLO()` class to create a model instance in Python:

```py

from ultralytics import YOLO

# Load a COCO-pretrained YOLOv3n model

model = YOLO("yolov3n.pt")

# Display model information (optional)

model.info()

# Train the model on the COCO8 example dataset for 100 epochs

results = model.train(data="coco8.yaml", epochs=100, imgsz=640)

# Run inference with the YOLOv3n model on the 'bus.jpg' image

results = model("path/to/bus.jpg")

```

=== "CLI"

CLI commands are available to directly run the models:

```bash

# Load a COCO-pretrained YOLOv3n model and train it on the COCO8 example dataset for 100 epochs

yolo train model=yolov3n.pt data=coco8.yaml epochs=100 imgsz=640

# Load a COCO-pretrained YOLOv3n model and run inference on the 'bus.jpg' image

yolo predict model=yolov3n.pt source=path/to/bus.jpg

```

Citations and Acknowledgements

If you use YOLOv3 in your research, please cite the original YOLO papers and the Ultralytics YOLOv3 repository:

!!! Quote ""

=== "BibTeX"

```bibtex

@article{redmon2018yolov3,

title={YOLOv3: An Incremental Improvement},

author={Redmon, Joseph and Farhadi, Ali},

journal={arXiv preprint arXiv:1804.02767},

year={2018}

}

```

Thank you to Joseph Redmon and Ali Farhadi for developing the original YOLOv3.

FAQ

What are the differences between YOLOv3, YOLOv3-Ultralytics, and YOLOv3u?

YOLOv3 is the third iteration of the YOLO (You Only Look Once) object detection algorithm developed by Joseph Redmon, known for its balance of accuracy and speed, utilizing three different scales (13x13, 26x26, and 52x52) for detections. YOLOv3-Ultralytics is Ultralytics' adaptation of YOLOv3 that adds support for more pre-trained models and facilitates easier model customization. YOLOv3u is an upgraded variant of YOLOv3-Ultralytics, integrating the anchor-free, objectness-free split head from YOLOv8, improving detection robustness and accuracy for various object sizes. For more details on the variants, refer to the YOLOv3 series.

How can I train a YOLOv3 model using Ultralytics?

Training a YOLOv3 model with Ultralytics is straightforward. You can train the model using either Python or CLI:

!!! Example

=== "Python"

```py

from ultralytics import YOLO

# Load a COCO-pretrained YOLOv3n model

model = YOLO("yolov3n.pt")

# Train the model on the COCO8 example dataset for 100 epochs

results = model.train(data="coco8.yaml", epochs=100, imgsz=640)

```

=== "CLI"

```bash

# Load a COCO-pretrained YOLOv3n model and train it on the COCO8 example dataset for 100 epochs

yolo train model=yolov3n.pt data=coco8.yaml epochs=100 imgsz=640

```

For more comprehensive training options and guidelines, visit our Train mode documentation.

What makes YOLOv3u more accurate for object detection tasks?

YOLOv3u improves upon YOLOv3 and YOLOv3-Ultralytics by incorporating the anchor-free, objectness-free split head used in YOLOv8 models. This upgrade eliminates the need for pre-defined anchor boxes and objectness scores, enhancing its capability to detect objects of varying sizes and shapes more precisely. This makes YOLOv3u a better choice for complex and diverse object detection tasks. For more information, refer to the Key Features section.

How can I use YOLOv3 models for inference?

You can perform inference using YOLOv3 models by either Python scripts or CLI commands:

!!! Example

=== "Python"

```py

from ultralytics import YOLO

# Load a COCO-pretrained YOLOv3n model

model = YOLO("yolov3n.pt")

# Run inference with the YOLOv3n model on the 'bus.jpg' image

results = model("path/to/bus.jpg")

```

=== "CLI"

```bash

# Load a COCO-pretrained YOLOv3n model and run inference on the 'bus.jpg' image

yolo predict model=yolov3n.pt source=path/to/bus.jpg

```

Refer to the Inference mode documentation for more details on running YOLO models.

What tasks are supported by YOLOv3 and its variants?

YOLOv3, YOLOv3-Ultralytics, and YOLOv3u primarily support object detection tasks. These models can be used for various stages of model deployment and development, such as Inference, Validation, Training, and Export. For a comprehensive set of tasks supported and more in-depth details, visit our Object Detection tasks documentation.

Where can I find resources to cite YOLOv3 in my research?

If you use YOLOv3 in your research, please cite the original YOLO papers and the Ultralytics YOLOv3 repository. Example BibTeX citation:

!!! Quote ""

=== "BibTeX"

```bibtex

@article{redmon2018yolov3,

title={YOLOv3: An Incremental Improvement},

author={Redmon, Joseph and Farhadi, Ali},

journal={arXiv preprint arXiv:1804.02767},

year={2018}

}

```

For more citation details, refer to the Citations and Acknowledgements section.

comments: true

description: Explore YOLOv4, a state-of-the-art real-time object detection model by Alexey Bochkovskiy. Discover its architecture, features, and performance.

keywords: YOLOv4, object detection, real-time detection, Alexey Bochkovskiy, neural networks, machine learning, computer vision

YOLOv4: High-Speed and Precise Object Detection

Welcome to the Ultralytics documentation page for YOLOv4, a state-of-the-art, real-time object detector launched in 2020 by Alexey Bochkovskiy at https://github.com/AlexeyAB/darknet. YOLOv4 is designed to provide the optimal balance between speed and accuracy, making it an excellent choice for many applications.

YOLOv4 architecture diagram. Showcasing the intricate network design of YOLOv4, including the backbone, neck, and head components, and their interconnected layers for optimal real-time object detection.


Introduction

YOLOv4 stands for You Only Look Once version 4. It is a real-time object detection model developed to address the limitations of previous YOLO versions like YOLOv3 and other object detection models. Unlike other convolutional neural network (CNN) based object detectors, YOLOv4 is not only applicable for recommendation systems but also for standalone process management and human input reduction. Its operation on conventional graphics processing units (GPUs) allows for mass usage at an affordable price, and it is designed to work in real-time on a conventional GPU while requiring only one such GPU for training.

Architecture

YOLOv4 makes use of several innovative features that work together to optimize its performance. These include Weighted-Residual-Connections (WRC), Cross-Stage-Partial-connections (CSP), Cross mini-Batch Normalization (CmBN), Self-adversarial-training (SAT), Mish-activation, Mosaic data augmentation, DropBlock regularization, and CIoU loss. These features are combined to achieve state-of-the-art results.

A typical object detector is composed of several parts: the input, the backbone, the neck, and the head. The backbone of YOLOv4 is pre-trained on ImageNet and extracts features from the input image. In general, a backbone can be one of several models, including VGG, ResNet, ResNeXt, or DenseNet. The neck collects feature maps from different stages and usually includes several bottom-up paths and several top-down paths. The head makes the final predictions of object classes and bounding boxes.

Bag of Freebies

YOLOv4 also makes use of methods known as "bag of freebies," which are techniques that improve the accuracy of the model during training without increasing the cost of inference. Data augmentation is a common bag of freebies technique used in object detection, which increases the variability of the input images to improve the robustness of the model. Some examples of data augmentation include photometric distortions (adjusting the brightness, contrast, hue, saturation, and noise of an image) and geometric distortions (adding random scaling, cropping, flipping, and rotating). These techniques help the model to generalize better to different types of images.
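
The two families of augmentation can be illustrated with a toy example. The sketch below is not YOLOv4's augmentation pipeline. It assumes a grayscale image stored as a nested list of pixel values and bounding boxes given as `(x_min, y_min, x_max, y_max)` in pixel coordinates, and all function names are hypothetical. It shows one photometric distortion (brightness scaling) and one geometric distortion (horizontal flip), including the box update the flip requires.

```python
import random


def adjust_brightness(image, factor):
    """Photometric distortion: scale every pixel and clamp the result to [0, 255]."""
    return [[min(255, max(0, round(p * factor))) for p in row] for row in image]


def horizontal_flip(image, boxes):
    """Geometric distortion: mirror the image left to right and update the boxes."""
    width = len(image[0])
    flipped = [row[::-1] for row in image]
    flipped_boxes = [
        (width - x_max, y_min, width - x_min, y_max)
        for (x_min, y_min, x_max, y_max) in boxes
    ]
    return flipped, flipped_boxes


def augment(image, boxes, rng):
    """Apply a random brightness change and, half of the time, a horizontal flip."""
    image = adjust_brightness(image, rng.uniform(0.6, 1.4))
    if rng.random() < 0.5:
        image, boxes = horizontal_flip(image, boxes)
    return image, boxes


# Toy 2x4 image with one box covering the leftmost column.
image = [[10, 20, 30, 40], [50, 60, 70, 80]]
boxes = [(0, 0, 1, 2)]

print(horizontal_flip(image, boxes))
print(adjust_brightness(image, 2.0))
print(augment(image, boxes, random.Random(0)))
```

Photometric changes leave the labels untouched, while geometric changes must be applied to the boxes as well. Both happen only while training data is prepared, which is why they add no inference cost.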

Features and Performance

YOLOv4 is designed for optimal speed and accuracy in object detection. The architecture of YOLOv4 includes CSPDarknet53 as the backbone, PANet as the neck, and YOLOv3 as the detection head. This design allows YOLOv4 to perform object detection at an impressive speed, making it suitable for real-time applications. YOLOv4 also excels in accuracy, achieving state-of-the-art results in object detection benchmarks.

Usage Examples

At the time of writing, Ultralytics does not support YOLOv4 models. Users interested in YOLOv4 will need to refer directly to the YOLOv4 GitHub repository for installation and usage instructions.

Here is a brief overview of the typical steps you might take to use YOLOv4:

- Visit the YOLOv4 GitHub repository: https://github.com/AlexeyAB/darknet.

- Follow the instructions provided in the README file for installation. This typically involves cloning the repository, installing necessary dependencies, and setting up any necessary environment variables.

- Once installation is complete, you can train and use the model as per the usage instructions provided in the repository. This usually involves preparing your dataset, configuring the model parameters, training the model, and then using the trained model to perform object detection.

Please note that the specific steps may vary depending on your specific use case and the current state of the YOLOv4 repository. Therefore, it is strongly recommended to refer directly to the instructions provided in the YOLOv4 GitHub repository.

We regret any inconvenience this may cause and will strive to update this document with usage examples for Ultralytics once support for YOLOv4 is implemented.

Conclusion

YOLOv4 is a powerful and efficient object detection model that strikes a balance between speed and accuracy. Its use of unique features and bag of freebies techniques during training allows it to perform excellently in real-time object detection tasks. YOLOv4 can be trained and used by anyone with a conventional GPU, making it accessible and practical for a wide range of applications.

Citations and Acknowledgements

We would like to acknowledge the YOLOv4 authors for their significant contributions in the field of real-time object detection:

!!! Quote ""

=== "BibTeX"

```bibtex

@misc{bochkovskiy2020yolov4,

title={YOLOv4: Optimal Speed and Accuracy of Object Detection},

author={Alexey Bochkovskiy and Chien-Yao Wang and Hong-Yuan Mark Liao},

year={2020},

eprint={2004.10934},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

```

The original YOLOv4 paper can be found on arXiv. The authors have made their work publicly available, and the codebase can be accessed on GitHub. We appreciate their efforts in advancing the field and making their work accessible to the broader community.

FAQ

What is YOLOv4 and why should I use it for object detection?

YOLOv4, which stands for "You Only Look Once version 4," is a state-of-the-art real-time object detection model developed by Alexey Bochkovskiy in 2020. It achieves an optimal balance between speed and accuracy, making it highly suitable for real-time applications. YOLOv4's architecture incorporates several innovative features like Weighted-Residual-Connections (WRC), Cross-Stage-Partial-connections (CSP), and Self-adversarial-training (SAT), among others, to achieve state-of-the-art results. If you're looking for a high-performance model that operates efficiently on conventional GPUs, YOLOv4 is an excellent choice.

How does the architecture of YOLOv4 enhance its performance?

The architecture of YOLOv4 includes several key components: the backbone, the neck, and the head. The backbone, CSPDarknet53 (other detectors use models such as VGG or ResNet), is pre-trained on ImageNet and extracts features from the input image. The neck, utilizing PANet, aggregates feature maps from different stages. Finally, the head, which uses configurations from YOLOv3, predicts classes and bounding boxes. YOLOv4 also employs "bag of freebies" techniques like mosaic data augmentation and DropBlock regularization, which improve accuracy without adding inference cost.

What are "bag of freebies" in the context of YOLOv4?

"Bag of freebies" refers to methods that improve the training accuracy of YOLOv4 without increasing the cost of inference. These techniques include various forms of data augmentation like photometric distortions (adjusting brightness, contrast, etc.) and geometric distortions (scaling, cropping, flipping, rotating). By increasing the variability of the input images, these augmentations help YOLOv4 generalize better to different types of images, thereby improving its robustness and accuracy without compromising its real-time performance.

Why is YOLOv4 considered suitable for real-time object detection on conventional GPUs?

YOLOv4 is designed to optimize both speed and accuracy, making it ideal for real-time object detection tasks that require quick and reliable performance. It operates efficiently on conventional GPUs, needing only one for both training and inference. This makes it accessible and practical for various applications ranging from recommendation systems to standalone process management, thereby reducing the need for extensive hardware setups and making it a cost-effective solution for real-time object detection.

How can I get started with YOLOv4 if Ultralytics does not currently support it?

To get started with YOLOv4, you should visit the official YOLOv4 GitHub repository. Follow the installation instructions provided in the README file, which typically include cloning the repository, installing dependencies, and setting up environment variables. Once installed, you can train the model by preparing your dataset, configuring the model parameters, and following the usage instructions provided. Since Ultralytics does not currently support YOLOv4, it is recommended to refer directly to the YOLOv4 GitHub for the most up-to-date and detailed guidance.

comments: true

description: Explore YOLOv5u, an advanced object detection model with optimized accuracy-speed tradeoff, featuring anchor-free Ultralytics head and various pre-trained models.

keywords: YOLOv5, YOLOv5u, object detection, Ultralytics, anchor-free, pre-trained models, accuracy, speed, real-time detection

YOLOv5

Overview

YOLOv5u represents an advancement in object detection methodologies. Originating from the foundational architecture of the YOLOv5 model developed by Ultralytics, YOLOv5u integrates the anchor-free, objectness-free split head, a feature previously introduced in the YOLOv8 models. This adaptation refines the model's architecture, leading to an improved accuracy-speed tradeoff in object detection tasks. Given the empirical results and its derived features, YOLOv5u provides an efficient alternative for those seeking robust solutions in both research and practical applications.

Key Features

- Anchor-free Split Ultralytics Head: Traditional object detection models rely on predefined anchor boxes to predict object locations. YOLOv5u instead adopts an anchor-free split Ultralytics head, which gives a more flexible and adaptive detection mechanism and improves performance across diverse scenarios.

- Optimized Accuracy-Speed Tradeoff: Speed and accuracy often pull in opposite directions. YOLOv5u offers a calibrated balance between the two, enabling real-time detection without compromising accuracy. This is particularly valuable for applications that demand swift responses, such as autonomous vehicles, robotics, and real-time video analytics.

- Variety of Pre-trained Models: Because different tasks require different tools, YOLOv5u provides a range of pre-trained models in several sizes that can be used for Inference, Validation, or Training. This variety lets you pick a model suited to your accuracy and speed requirements rather than relying on a one-size-fits-all solution.
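To make the anchor-free idea more concrete, here is a minimal sketch of how a distance-based head can turn a per-cell prediction into a box without any predefined anchor shapes. It assumes each grid cell predicts four distances (left, top, right, bottom) from its center, expressed in units of the feature-map stride. The function name `decode_ltrb` and the sample numbers are hypothetical, and the actual Ultralytics head involves additional steps that are not shown here.

```python
def decode_ltrb(cell_x, cell_y, stride, ltrb):
    """Convert (left, top, right, bottom) distances into an (x1, y1, x2, y2) box.

    cell_x, cell_y: integer grid indices of the predicting cell.
    stride: size of one grid cell in input pixels (e.g. 8, 16, 32).
    ltrb: distances from the cell center to each box edge, in stride units.
    """
    # Center of the grid cell in input-image pixels
    cx = (cell_x + 0.5) * stride
    cy = (cell_y + 0.5) * stride
    # Scale the predicted distances back to pixels
    left, top, right, bottom = (d * stride for d in ltrb)
    return (cx - left, cy - top, cx + right, cy + bottom)


# Hypothetical prediction from cell (10, 5) on a stride-16 feature map
box = decode_ltrb(10, 5, 16, (2.0, 1.5, 3.0, 2.5))
print(box)  # (136.0, 64.0, 216.0, 128.0)
```

Because the box is described only by distances from the cell center, no set of anchor widths and heights has to be chosen or tuned for the dataset in advance.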

Supported Tasks and Modes

The YOLOv5u models, with various pre-trained weights, excel in Object Detection tasks. They support a comprehensive range of modes, making them suitable for diverse applications, from development to deployment.

| Model Type | Pre-trained Weights | Task | Inference | Validation | Training | Export |

|---|---|---|---|---|---|---|

| YOLOv5u | yolov5nu, yolov5su, yolov5mu, yolov5lu, yolov5xu, yolov5n6u, yolov5s6u, yolov5m6u, yolov5l6u, yolov5x6u | Object Detection | ✅ | ✅ | ✅ | ✅ |

This table provides a detailed overview of the YOLOv5u model variants, highlighting their applicability in object detection tasks and support for various operational modes such as Inference, Validation, Training, and Export. This comprehensive support ensures that users can fully leverage the capabilities of YOLOv5u models in a wide range of object detection scenarios.

Performance Metrics

!!! Performance

=== "Detection"

See [Detection Docs](../tasks/detect.md) for usage examples with these models trained on [COCO](../datasets/detect/coco.md), which include 80 pre-trained classes.

| Model | YAML | size<br><sup>(pixels) | mAP<sup>val<br>50-95 | Speed<br><sup>CPU ONNX<br>(ms) | Speed<br><sup>A100 TensorRT<br>(ms) | params<br><sup>(M) | FLOPs<br><sup>(B) |

|---------------------------------------------------------------------------------------------|----------------------------------------------------------------------------------------------------------------|-----------------------|----------------------|--------------------------------|-------------------------------------|--------------------|-------------------|

| [yolov5nu.pt](https://github.com/ultralytics/assets/releases/download/v8.2.0/yolov5nu.pt) | [yolov5n.yaml](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/models/v5/yolov5.yaml) | 640 | 34.3 | 73.6 | 1.06 | 2.6 | 7.7 |

| [yolov5su.pt](https://github.com/ultralytics/assets/releases/download/v8.2.0/yolov5su.pt) | [yolov5s.yaml](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/models/v5/yolov5.yaml) | 640 | 43.0 | 120.7 | 1.27 | 9.1 | 24.0 |

| [yolov5mu.pt](https://github.com/ultralytics/assets/releases/download/v8.2.0/yolov5mu.pt) | [yolov5m.yaml](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/models/v5/yolov5.yaml) | 640 | 49.0 | 233.9 | 1.86 | 25.1 | 64.2 |

| [yolov5lu.pt](https://github.com/ultralytics/assets/releases/download/v8.2.0/yolov5lu.pt) | [yolov5l.yaml](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/models/v5/yolov5.yaml) | 640 | 52.2 | 408.4 | 2.50 | 53.2 | 135.0 |

| [yolov5xu.pt](https://github.com/ultralytics/assets/releases/download/v8.2.0/yolov5xu.pt) | [yolov5x.yaml](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/models/v5/yolov5.yaml) | 640 | 53.2 | 763.2 | 3.81 | 97.2 | 246.4 |

| | | | | | | | |

| [yolov5n6u.pt](https://github.com/ultralytics/assets/releases/download/v8.2.0/yolov5n6u.pt) | [yolov5n6.yaml](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/models/v5/yolov5-p6.yaml) | 1280 | 42.1 | 211.0 | 1.83 | 4.3 | 7.8 |

| [yolov5s6u.pt](https://github.com/ultralytics/assets/releases/download/v8.2.0/yolov5s6u.pt) | [yolov5s6.yaml](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/models/v5/yolov5-p6.yaml) | 1280 | 48.6 | 422.6 | 2.34 | 15.3 | 24.6 |

| [yolov5m6u.pt](https://github.com/ultralytics/assets/releases/download/v8.2.0/yolov5m6u.pt) | [yolov5m6.yaml](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/models/v5/yolov5-p6.yaml) | 1280 | 53.6 | 810.9 | 4.36 | 41.2 | 65.7 |

| [yolov5l6u.pt](https://github.com/ultralytics/assets/releases/download/v8.2.0/yolov5l6u.pt) | [yolov5l6.yaml](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/models/v5/yolov5-p6.yaml) | 1280 | 55.7 | 1470.9 | 5.47 | 86.1 | 137.4 |

| [yolov5x6u.pt](https://github.com/ultralytics/assets/releases/download/v8.2.0/yolov5x6u.pt) | [yolov5x6.yaml](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/models/v5/yolov5-p6.yaml) | 1280 | 56.8 | 2436.5 | 8.98 | 155.4 | 250.7 |

Usage Examples

The examples below show simple YOLOv5 training and inference. For full documentation on these and other modes see the Predict, Train, Val and Export docs pages.

!!! Example

=== "Python"

PyTorch pretrained `*.pt` models as well as configuration `*.yaml` files can be passed to the `YOLO()` class to create a model instance in Python:

```py

from ultralytics import YOLO

# Load a COCO-pretrained YOLOv5n model

model = YOLO("yolov5n.pt")

# Display model information (optional)

model.info()

# Train the model on the COCO8 example dataset for 100 epochs

results = model.train(data="coco8.yaml", epochs=100, imgsz=640)

# Run inference with the YOLOv5n model on the 'bus.jpg' image

results = model("path/to/bus.jpg")

```

=== "CLI"

CLI commands are available to directly run the models:

```bash

# Load a COCO-pretrained YOLOv5n model and train it on the COCO8 example dataset for 100 epochs

yolo train model=yolov5n.pt data=coco8.yaml epochs=100 imgsz=640

# Load a COCO-pretrained YOLOv5n model and run inference on the 'bus.jpg' image

yolo predict model=yolov5n.pt source=path/to/bus.jpg

```

Citations and Acknowledgements

If you use YOLOv5 or YOLOv5u in your research, please cite the Ultralytics YOLOv5 repository as follows:

!!! Quote ""

=== "BibTeX"

```bibtex

@software{yolov5,

title = {Ultralytics YOLOv5},

author = {Glenn Jocher},

year = {2020},

version = {7.0},

license = {AGPL-3.0},

url = {https://github.com/ultralytics/yolov5},

doi = {10.5281/zenodo.3908559},

orcid = {0000-0001-5950-6979}

}

```

Please note that YOLOv5 models are provided under AGPL-3.0 and Enterprise licenses.

FAQ

What is Ultralytics YOLOv5u and how does it differ from YOLOv5?

Ultralytics YOLOv5u is an advanced version of YOLOv5, integrating the anchor-free, objectness-free split head that enhances the accuracy-speed tradeoff for real-time object detection tasks. Unlike the traditional YOLOv5, YOLOv5u adopts an anchor-free detection mechanism, making it more flexible and adaptive in diverse scenarios. For more detailed information on its features, you can refer to the YOLOv5 Overview.

How does the anchor-free Ultralytics head improve object detection performance in YOLOv5u?

The anchor-free Ultralytics head in YOLOv5u improves object detection performance by eliminating the dependency on predefined anchor boxes. This results in a more flexible and adaptive detection mechanism that can handle various object sizes and shapes with greater efficiency. This enhancement directly contributes to a balanced tradeoff between accuracy and speed, making YOLOv5u suitable for real-time applications. Learn more about its architecture in the Key Features section.

Can I use pre-trained YOLOv5u models for different tasks and modes?

Yes, you can use pre-trained YOLOv5u models for various tasks such as Object Detection. These models support multiple modes, including Inference, Validation, Training, and Export. This flexibility allows users to leverage the capabilities of YOLOv5u models across different operational requirements. For a detailed overview, check the Supported Tasks and Modes section.

How do the performance metrics of YOLOv5u models compare on different platforms?

The performance metrics of YOLOv5u models vary with the platform and hardware used. For example, the YOLOv5nu model achieves 34.3 mAP on the COCO dataset, with a speed of 73.6 ms on CPU (ONNX) and 1.06 ms on A100 TensorRT. Detailed metrics for the other YOLOv5u models can be found in the Performance Metrics section, which compares them across both platforms.
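As a quick worked example, the latencies quoted above can be converted into throughput to see how far apart the two platforms are. This is only a sketch: it assumes the figures are per-image latencies and that throughput is simply their reciprocal, which ignores batching and any pre- or post-processing. The helper name `images_per_second` is hypothetical.

```python
# Latencies (ms per image) quoted above for YOLOv5nu at 640 px
latency_ms = {"CPU ONNX": 73.6, "A100 TensorRT": 1.06}


def images_per_second(ms_per_image):
    """Convert a per-image latency in milliseconds to images per second."""
    return 1000.0 / ms_per_image


for platform, ms in latency_ms.items():
    print(f"{platform}: {images_per_second(ms):.1f} images/s")

# Ratio of the two latencies, i.e. how much faster the GPU path is
speedup = latency_ms["CPU ONNX"] / latency_ms["A100 TensorRT"]
print(f"A100 TensorRT is about {speedup:.0f}x faster than CPU ONNX")
```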

How can I train a YOLOv5u model using the Ultralytics Python API?

You can train a YOLOv5u model by loading a pre-trained model and running the training command with your dataset. Here's a quick example:

!!! Example

=== "Python"

```py

from ultralytics import YOLO

# Load a COCO-pretrained YOLOv5n model

model = YOLO("yolov5n.pt")

# Display model information (optional)

model.info()

# Train the model on the COCO8 example dataset for 100 epochs

results = model.train(data="coco8.yaml", epochs=100, imgsz=640)

```

=== "CLI"

```bash

# Load a COCO-pretrained YOLOv5n model and train it on the COCO8 example dataset for 100 epochs

yolo train model=yolov5n.pt data=coco8.yaml epochs=100 imgsz=640

```

For more detailed instructions, visit the Usage Examples section.

comments: true

description: Explore Meituan YOLOv6, a top-tier object detector balancing speed and accuracy. Learn about its unique features and performance metrics on Ultralytics Docs.

keywords: Meituan YOLOv6, object detection, real-time applications, BiC module, Anchor-Aided Training, COCO dataset, high-performance models, Ultralytics Docs

Meituan YOLOv6

Overview

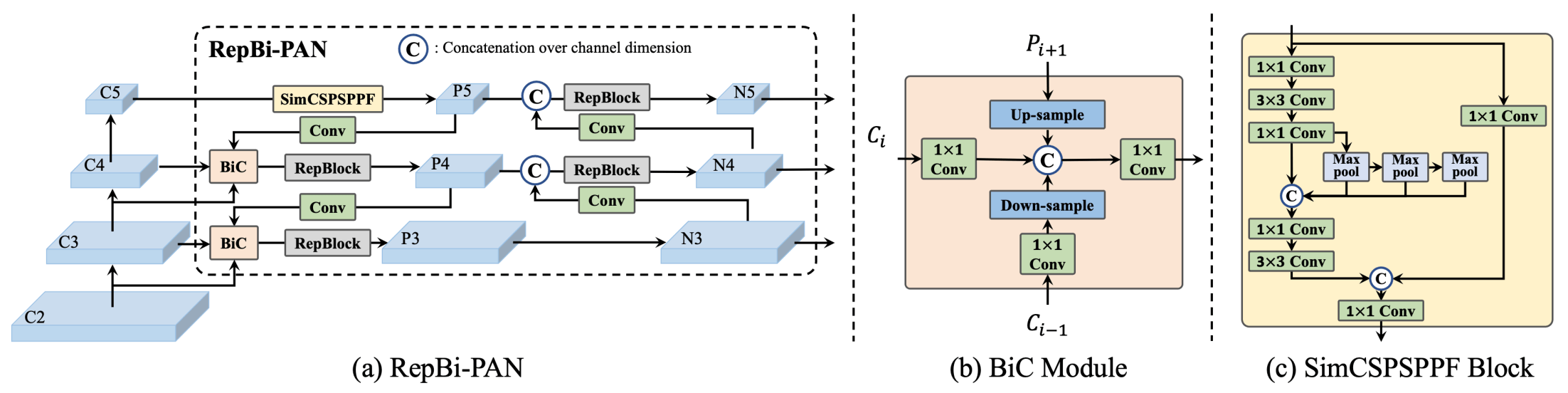

Meituan YOLOv6 is a cutting-edge object detector that offers a remarkable balance between speed and accuracy, making it a popular choice for real-time applications. This model introduces several notable enhancements to its architecture and training scheme, including a Bi-directional Concatenation (BiC) module, an anchor-aided training (AAT) strategy, and an improved backbone and neck design for state-of-the-art accuracy on the COCO dataset.

Overview of YOLOv6. Model architecture diagram showing the redesigned network components and training strategies that have led to significant performance improvements. (a) The neck of YOLOv6 (N and S are shown). Note for M/L, RepBlocks is replaced with CSPStackRep. (b) The structure of a BiC module. (c) A SimCSPSPPF block. (source).


Key Features

- Bi-directional Concatenation (BiC) Module: YOLOv6 introduces a BiC module in the neck of the detector, enhancing localization signals and delivering performance gains with negligible speed degradation.

- Anchor-Aided Training (AAT) Strategy: This model proposes AAT to enjoy the benefits of both anchor-based and anchor-free paradigms without compromising inference efficiency.

- Enhanced Backbone and Neck Design: By deepening YOLOv6 to include another stage in the backbone and neck, this model achieves state-of-the-art performance on the COCO dataset at high-resolution input.

- Self-Distillation Strategy: A new self-distillation strategy is implemented to boost the performance of smaller models of YOLOv6, enhancing the auxiliary regression branch during training and removing it at inference to avoid a marked speed decline.

Performance Metrics

YOLOv6 provides various pre-trained models with different scales:

- YOLOv6-N: 37.5% AP on COCO val2017 at 1187 FPS with NVIDIA Tesla T4 GPU.

- YOLOv6-S: 45.0% AP at 484 FPS.

- YOLOv6-M: 50.0% AP at 226 FPS.

- YOLOv6-L: 52.8% AP at 116 FPS.

- YOLOv6-L6: State-of-the-art accuracy in real-time.

YOLOv6 also provides quantized models for different precisions and models optimized for mobile platforms.

Usage Examples

The examples below show simple YOLOv6 training and inference. For full documentation on these and other modes see the Predict, Train, Val and Export docs pages.

!!! Example

=== "Python"

PyTorch pretrained `*.pt` models as well as configuration `*.yaml` files can be passed to the `YOLO()` class to create a model instance in Python:

```py

from ultralytics import YOLO

# Build a YOLOv6n model from scratch

model = YOLO("yolov6n.yaml")

# Display model information (optional)

model.info()

# Train the model on the COCO8 example dataset for 100 epochs

results = model.train(data="coco8.yaml", epochs=100, imgsz=640)

# Run inference with the YOLOv6n model on the 'bus.jpg' image

results = model("path/to/bus.jpg")

```

=== "CLI"

CLI commands are available to directly run the models:

```bash

# Build a YOLOv6n model from scratch and train it on the COCO8 example dataset for 100 epochs

yolo train model=yolov6n.yaml data=coco8.yaml epochs=100 imgsz=640

# Build a YOLOv6n model from scratch and run inference on the 'bus.jpg' image

yolo predict model=yolov6n.yaml source=path/to/bus.jpg

```

Supported Tasks and Modes

The YOLOv6 series offers a range of models, each optimized for high-performance Object Detection. These models cater to varying computational needs and accuracy requirements, making them versatile for a wide array of applications.

| Model Type | Pre-trained Weights | Tasks Supported | Inference | Validation | Training | Export |

|---|---|---|---|---|---|---|

| YOLOv6-N | yolov6-n.pt | Object Detection | ✅ | ✅ | ✅ | ✅ |

| YOLOv6-S | yolov6-s.pt | Object Detection | ✅ | ✅ | ✅ | ✅ |

| YOLOv6-M | yolov6-m.pt | Object Detection | ✅ | ✅ | ✅ | ✅ |

| YOLOv6-L | yolov6-l.pt | Object Detection | ✅ | ✅ | ✅ | ✅ |

| YOLOv6-L6 | yolov6-l6.pt | Object Detection | ✅ | ✅ | ✅ | ✅ |

This table provides a detailed overview of the YOLOv6 model variants, highlighting their capabilities in object detection tasks and their compatibility with various operational modes such as Inference, Validation, Training, and Export. This comprehensive support ensures that users can fully leverage the capabilities of YOLOv6 models in a broad range of object detection scenarios.

Citations and Acknowledgements

We would like to acknowledge the authors for their significant contributions in the field of real-time object detection:

!!! Quote ""

=== "BibTeX"

```bibtex

@misc{li2023yolov6,

title={YOLOv6 v3.0: A Full-Scale Reloading},

author={Chuyi Li and Lulu Li and Yifei Geng and Hongliang Jiang and Meng Cheng and Bo Zhang and Zaidan Ke and Xiaoming Xu and Xiangxiang Chu},

year={2023},

eprint={2301.05586},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

```

The original YOLOv6 paper can be found on arXiv. The authors have made their work publicly available, and the codebase can be accessed on GitHub. We appreciate their efforts in advancing the field and making their work accessible to the broader community.

FAQ

What is Meituan YOLOv6 and what makes it unique?

Meituan YOLOv6 is a state-of-the-art object detector that balances speed and accuracy, ideal for real-time applications. It features notable architectural enhancements like the Bi-directional Concatenation (BiC) module and an Anchor-Aided Training (AAT) strategy. These innovations provide substantial performance gains with minimal speed degradation, making YOLOv6 a competitive choice for object detection tasks.

How does the Bi-directional Concatenation (BiC) Module in YOLOv6 improve performance?

The Bi-directional Concatenation (BiC) module in YOLOv6 enhances localization signals in the detector's neck, delivering performance improvements with negligible speed impact. This module effectively combines different feature maps, increasing the model's ability to detect objects accurately. For more details on YOLOv6's features, refer to the Key Features section.

How can I train a YOLOv6 model using Ultralytics?

You can train a YOLOv6 model using Ultralytics with simple Python or CLI commands. For instance:

!!! Example

=== "Python"

```py

from ultralytics import YOLO

# Build a YOLOv6n model from scratch

model = YOLO("yolov6n.yaml")

# Train the model on the COCO8 example dataset for 100 epochs

results = model.train(data="coco8.yaml", epochs=100, imgsz=640)

```

=== "CLI"

```bash

yolo train model=yolov6n.yaml data=coco8.yaml epochs=100 imgsz=640

```

For more information, visit the Train page.

What are the different versions of YOLOv6 and their performance metrics?

YOLOv6 offers multiple versions, each optimized for different performance requirements:

- YOLOv6-N: 37.5% AP at 1187 FPS

- YOLOv6-S: 45.0% AP at 484 FPS

- YOLOv6-M: 50.0% AP at 226 FPS

- YOLOv6-L: 52.8% AP at 116 FPS

- YOLOv6-L6: State-of-the-art accuracy in real-time scenarios

These models are evaluated on the COCO dataset using an NVIDIA Tesla T4 GPU. For more on performance metrics, see the Performance Metrics section.
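The figures above can be used to choose a variant for a given speed budget. The sketch below picks the most accurate model that still reaches a required frame rate on the same Tesla T4 setup. YOLOv6-L6 is left out because no numeric AP or FPS is listed for it here, and the helper name `most_accurate_at` is hypothetical.

```python
# (AP %, FPS) figures listed above for COCO val2017 on an NVIDIA Tesla T4
YOLOV6_MODELS = {
    "YOLOv6-N": (37.5, 1187),
    "YOLOv6-S": (45.0, 484),
    "YOLOv6-M": (50.0, 226),
    "YOLOv6-L": (52.8, 116),
}


def most_accurate_at(min_fps):
    """Return the highest-AP variant that still reaches min_fps, or None."""
    candidates = [
        (ap, name) for name, (ap, fps) in YOLOV6_MODELS.items() if fps >= min_fps
    ]
    return max(candidates)[1] if candidates else None


print(most_accurate_at(200))   # YOLOv6-M
print(most_accurate_at(2000))  # None
```

Frame rates on other hardware will differ, so the table should be re-measured on the target device before relying on a choice like this.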

How does the Anchor-Aided Training (AAT) strategy benefit YOLOv6?

Anchor-Aided Training (AAT) in YOLOv6 combines elements of anchor-based and anchor-free approaches, enhancing the model's detection capabilities without compromising inference efficiency. This strategy leverages anchors during training to improve bounding box predictions, making YOLOv6 effective in diverse object detection tasks.

Which operational modes are supported by YOLOv6 models in Ultralytics?

YOLOv6 supports various operational modes including Inference, Validation, Training, and Export. This flexibility allows users to fully exploit the model's capabilities in different scenarios. Check out the Supported Tasks and Modes section for a detailed overview of each mode.

comments: true

description: Discover YOLOv7, the breakthrough real-time object detector with top speed and accuracy. Learn about key features, usage, and performance metrics.

keywords: YOLOv7, real-time object detection, Ultralytics, AI, computer vision, model training, object detector

YOLOv7: Trainable Bag-of-Freebies

YOLOv7 is a state-of-the-art real-time object detector that surpasses all known object detectors in both speed and accuracy in the range from 5 FPS to 160 FPS. It has the highest accuracy (56.8% AP) among all known real-time object detectors running at 30 FPS or higher on a V100 GPU. Moreover, YOLOv7 outperforms other object detectors such as YOLOR, YOLOX, Scaled-YOLOv4, YOLOv5, and many others in speed and accuracy. The model is trained on the MS COCO dataset from scratch without using any other datasets or pre-trained weights. Source code for YOLOv7 is available on GitHub.

Comparison of SOTA object detectors

The results in the YOLO comparison table show that the proposed method has the best overall speed-accuracy trade-off. Compared with YOLOv5-N (r6.1), YOLOv7-tiny-SiLU is 127 fps faster and 10.7% more accurate on AP. In addition, YOLOv7 reaches 51.4% AP at a frame rate of 161 fps, while PPYOLOE-L with the same AP runs at only 78 fps. In terms of parameter usage, YOLOv7 is 41% less than PPYOLOE-L. Compared with YOLOv5-L (r6.1) at 99 fps, YOLOv7-X at 114 fps improves AP by 3.9%. Compared with YOLOv5-X (r6.1), which is of similar scale, YOLOv7-X is 31 fps faster. In terms of the amount of parameters and computation, YOLOv7-X reduces parameters by 22% and computation by 8% compared to YOLOv5-X (r6.1), while improving AP by 2.2% (Source).
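The comparisons in this paragraph can be reproduced from the comparison table in this section. The sketch below copies the relevant parameter counts, frame rates, and validation AP values and recomputes the differences; the helper name `compare` is hypothetical. The FPS and AP gains match the quoted figures. The parameter reductions computed relative to the baseline come out at roughly 29% and 18%, so the quoted 41% and 22% appear to correspond to the ratio taken the other way round (52.2 / 36.9 ≈ 1.41 and 86.7 / 71.3 ≈ 1.22).

```python
# (params in millions, FPS, AP on val in %) taken from the comparison table
rows = {
    "YOLOv5-N (r6.1)": (1.9, 159, 28.0),
    "YOLOv5-L (r6.1)": (46.5, 99, 49.0),
    "YOLOv5-X (r6.1)": (86.7, 83, 50.7),
    "PPYOLOE-L": (52.2, 78, 50.9),
    "YOLOv7-tiny-SiLU": (6.2, 286, 38.7),
    "YOLOv7": (36.9, 161, 51.2),
    "YOLOv7-X": (71.3, 114, 52.9),
}


def compare(ours, baseline):
    """Return (FPS gain, AP gain in points, parameter reduction in %)."""
    p1, f1, a1 = rows[ours]
    p0, f0, a0 = rows[baseline]
    return f1 - f0, round(a1 - a0, 1), round(100 * (1 - p1 / p0), 1)


# FPS and AP gains only, since the tiny model has more parameters than YOLOv5-N
print(compare("YOLOv7-tiny-SiLU", "YOLOv5-N (r6.1)")[:2])  # (127, 10.7)
print(compare("YOLOv7-X", "YOLOv5-L (r6.1)")[:2])          # (15, 3.9)
print(compare("YOLOv7-X", "YOLOv5-X (r6.1)"))              # (31, 2.2, 17.8)
print(compare("YOLOv7", "PPYOLOE-L")[2])                   # 29.3
```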

| Model | Params (M) | FLOPs (G) | Size (pixels) | FPS | APtest / val 50-95 | APtest 50 | APtest 75 | APtest S | APtest M | APtest L |

|---|---|---|---|---|---|---|---|---|---|---|

| YOLOX-S | 9.0M | 26.8G | 640 | 102 | 40.5% / 40.5% | - | - | - | - | - |

| YOLOX-M | 25.3M | 73.8G | 640 | 81 | 47.2% / 46.9% | - | - | - | - | - |

| YOLOX-L | 54.2M | 155.6G | 640 | 69 | 50.1% / 49.7% | - | - | - | - | - |

| YOLOX-X | 99.1M | 281.9G | 640 | 58 | 51.5% / 51.1% | - | - | - | - | - |

| PPYOLOE-S | 7.9M | 17.4G | 640 | 208 | 43.1% / 42.7% | 60.5% | 46.6% | 23.2% | 46.4% | 56.9% |

| PPYOLOE-M | 23.4M | 49.9G | 640 | 123 | 48.9% / 48.6% | 66.5% | 53.0% | 28.6% | 52.9% | 63.8% |

| PPYOLOE-L | 52.2M | 110.1G | 640 | 78 | 51.4% / 50.9% | 68.9% | 55.6% | 31.4% | 55.3% | 66.1% |

| PPYOLOE-X | 98.4M | 206.6G | 640 | 45 | 52.2% / 51.9% | 69.9% | 56.5% | 33.3% | 56.3% | 66.4% |

| YOLOv5-N (r6.1) | 1.9M | 4.5G | 640 | 159 | - / 28.0% | - | - | - | - | - |

| YOLOv5-S (r6.1) | 7.2M | 16.5G | 640 | 156 | - / 37.4% | - | - | - | - | - |

| YOLOv5-M (r6.1) | 21.2M | 49.0G | 640 | 122 | - / 45.4% | - | - | - | - | - |

| YOLOv5-L (r6.1) | 46.5M | 109.1G | 640 | 99 | - / 49.0% | - | - | - | - | - |

| YOLOv5-X (r6.1) | 86.7M | 205.7G | 640 | 83 | - / 50.7% | - | - | - | - | - |

| YOLOR-CSP | 52.9M | 120.4G | 640 | 106 | 51.1% / 50.8% | 69.6% | 55.7% | 31.7% | 55.3% | 64.7% |

| YOLOR-CSP-X | 96.9M | 226.8G | 640 | 87 | 53.0% / 52.7% | 71.4% | 57.9% | 33.7% | 57.1% | 66.8% |

| YOLOv7-tiny-SiLU | 6.2M | 13.8G | 640 | 286 | 38.7% / 38.7% | 56.7% | 41.7% | 18.8% | 42.4% | 51.9% |

| YOLOv7 | 36.9M | 104.7G | 640 | 161 | 51.4% / 51.2% | 69.7% | 55.9% | 31.8% | 55.5% | 65.0% |

| YOLOv7-X | 71.3M | 189.9G | 640 | 114 | 53.1% / 52.9% | 71.2% | 57.8% | 33.8% | 57.1% | 67.4% |

| YOLOv5-N6 (r6.1) | 3.2M | 18.4G | 1280 | 123 | - / 36.0% | - | - | - | - | - |

| YOLOv5-S6 (r6.1) | 12.6M | 67.2G | 1280 | 122 | - / 44.8% | - | - | - | - | - |

| YOLOv5-M6 (r6.1) | 35.7M | 200.0G | 1280 | 90 | - / 51.3% | - | - | - | - | - |

| YOLOv5-L6 (r6.1) | 76.8M | 445.6G | 1280 | 63 | - / 53.7% | - | - | - | - | - |

| YOLOv5-X6 (r6.1) | 140.7M | 839.2G | 1280 | 38 | - / 55.0% | - | - | - | - | - |

| YOLOR-P6 | 37.2M | 325.6G | 1280 | 76 | 53.9% / 53.5% | 71.4% | 58.9% | 36.1% | 57.7% | 65.6% |

| YOLOR-W6 | 79.8M | 453.2G | 1280 | 66 | 55.2% / 54.8% | 72.7% | 60.5% | 37.7% | 59.1% | 67.1% |

| YOLOR-E6 | 115.8M | 683.2G | 1280 | 45 | 55.8% / 55.7% | 73.4% | 61.1% | 38.4% | 59.7% | 67.7% |

| YOLOR-D6 | 151.7M | 935.6G | 1280 | 34 | 56.5% / 56.1% | 74.1% | 61.9% | 38.9% | 60.4% | 68.7% |

| YOLOv7-W6 | 70.4M | 360.0G | 1280 | 84 | 54.9% / 54.6% | 72.6% | 60.1% | 37.3% | 58.7% | 67.1% |

| YOLOv7-E6 | 97.2M | 515.2G | 1280 | 56 | 56.0% / 55.9% | 73.5% | 61.2% | 38.0% | 59.9% | 68.4% |

| YOLOv7-D6 | 154.7M | 806.8G | 1280 | 44 | 56.6% / 56.3% | 74.0% | 61.8% | 38.8% | 60.1% | 69.5% |

| YOLOv7-E6E | 151.7M | 843.2G | 1280 | 36 | 56.8% / 56.8% | 74.4% | 62.1% | 39.3% | 60.5% | 69.0% |

Overview

Real-time object detection is an important component in many computer vision systems, including multi-object tracking, autonomous driving, robotics, and medical image analysis. In recent years, real-time object detection development has focused on designing efficient architectures and improving inference speed on various CPUs, GPUs, and neural processing units (NPUs). YOLOv7 supports both mobile GPU and GPU devices, from the edge to the cloud.

Unlike traditional real-time object detectors that focus on architecture optimization, YOLOv7 introduces a focus on the optimization of the training process. This includes modules and optimization methods designed to improve the accuracy of object detection without increasing the inference cost, a concept known as the "trainable bag-of-freebies".

Key Features

YOLOv7 introduces several key features:

- Model Re-parameterization: YOLOv7 proposes a planned re-parameterized model, a strategy applicable to layers in different networks that is based on the concept of gradient propagation paths.

- Dynamic Label Assignment: Training a model with multiple output layers raises a new question: "How to assign dynamic targets for the outputs of different branches?" To solve this problem, YOLOv7 introduces a new label assignment method called coarse-to-fine lead guided label assignment.

- Extended and Compound Scaling: YOLOv7 proposes "extend" and "compound scaling" methods for real-time object detectors that make effective use of parameters and computation.

- Efficiency: The method proposed by YOLOv7 can reduce the parameters of a state-of-the-art real-time object detector by about 40% and its computation by about 50%, while achieving faster inference and higher detection accuracy.
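The general idea behind re-parameterization is that a structure used during training can be folded into a simpler, mathematically equivalent one for inference. The sketch below illustrates this with the familiar case of merging a batch-normalization step into the preceding layer, reduced to scalars for readability. It is not YOLOv7's planned re-parameterization scheme itself, and all function names and parameter values are hypothetical.

```python
import math


def conv_bn(x, w, b, gamma, beta, mean, var, eps=1e-5):
    """Training-time form: a (scalar) convolution followed by batch norm."""
    y = w * x + b
    return gamma * (y - mean) / math.sqrt(var + eps) + beta


def fuse_conv_bn(w, b, gamma, beta, mean, var, eps=1e-5):
    """Fold the batch-norm statistics into a single weight and bias."""
    scale = gamma / math.sqrt(var + eps)
    return w * scale, (b - mean) * scale + beta


# Hypothetical parameter values, for illustration only
params = dict(w=0.8, b=0.1, gamma=1.5, beta=-0.2, mean=0.3, var=0.25)
w_fused, b_fused = fuse_conv_bn(**params)

for x in (-1.0, 0.0, 2.5):
    # The fused layer reproduces the two-step output up to rounding error
    assert math.isclose(conv_bn(x, **params), w_fused * x + b_fused, abs_tol=1e-9)
```

After fusion the inference path evaluates one multiply-add instead of two stages, which is why this kind of change can help accuracy during training without adding inference cost.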

Usage Examples

At the time of writing, Ultralytics does not support YOLOv7 models. Users interested in YOLOv7 will therefore need to refer directly to the YOLOv7 GitHub repository for installation and usage instructions.

Here is a brief overview of the typical steps you might take to use YOLOv7:

- Visit the YOLOv7 GitHub repository: https://github.com/WongKinYiu/yolov7.

- Follow the instructions provided in the README file for installation. This typically involves cloning the repository, installing necessary dependencies, and setting up any necessary environment variables.

- Once installation is complete, you can train and use the model as per the usage instructions provided in the repository. This usually involves preparing your dataset, configuring the model parameters, training the model, and then using the trained model to perform object detection.

Please note that the specific steps may vary depending on your specific use case and the current state of the YOLOv7 repository. Therefore, it is strongly recommended to refer directly to the instructions provided in the YOLOv7 GitHub repository.

We regret any inconvenience this may cause and will strive to update this document with usage examples for Ultralytics once support for YOLOv7 is implemented.

Citations and Acknowledgements

We would like to acknowledge the YOLOv7 authors for their significant contributions in the field of real-time object detection:

!!! Quote ""

=== "BibTeX"

```bibtex

@article{wang2022yolov7,

title={{YOLOv7}: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors},

author={Wang, Chien-Yao and Bochkovskiy, Alexey and Liao, Hong-Yuan Mark},

journal={arXiv preprint arXiv:2207.02696},

year={2022}

}

```

The original YOLOv7 paper can be found on arXiv. The authors have made their work publicly available, and the codebase can be accessed on GitHub. We appreciate their efforts in advancing the field and making their work accessible to the broader community.

FAQ

What is YOLOv7 and why is it considered a breakthrough in real-time object detection?

YOLOv7 is a cutting-edge real-time object detection model that achieves unparalleled speed and accuracy. It surpasses other models, such as YOLOX, YOLOv5, and PPYOLOE, in both parameter usage and inference speed. YOLOv7's distinguishing features include its model re-parameterization and dynamic label assignment, which optimize its performance without increasing inference costs. For more technical details about its architecture and comparison metrics with other state-of-the-art object detectors, refer to the YOLOv7 paper.

How does YOLOv7 improve on previous YOLO models like YOLOv4 and YOLOv5?

YOLOv7 introduces several innovations, including model re-parameterization and dynamic label assignment, which enhance the training process and improve inference accuracy. Compared to YOLOv5, YOLOv7 significantly boosts speed and accuracy. For instance, YOLOv7-X improves accuracy by 2.2% and reduces parameters by 22% compared to YOLOv5-X. Detailed comparisons can be found in the Comparison of SOTA object detectors table.

Can I use YOLOv7 with Ultralytics tools and platforms?

As of now, Ultralytics does not directly support YOLOv7 in its tools and platforms. Users interested in using YOLOv7 need to follow the installation and usage instructions provided in the YOLOv7 GitHub repository. For other state-of-the-art models, you can explore and train using Ultralytics tools like Ultralytics HUB.

How do I install and run YOLOv7 for a custom object detection project?

To install and run YOLOv7, follow these steps:

- Clone the YOLOv7 repository: `git clone https://github.com/WongKinYiu/yolov7`

- Navigate to the cloned directory and install dependencies: `cd yolov7`, then `pip install -r requirements.txt`

- Prepare your dataset and configure the model parameters according to the usage instructions provided in the repository.

For further guidance, visit the YOLOv7 GitHub repository for the latest information and updates.

What are the key features and optimizations introduced in YOLOv7?

YOLOv7 offers several key features that revolutionize real-time object detection:

- Model Re-parameterization: Enhances the model's performance by optimizing gradient propagation paths.

- Dynamic Label Assignment: Uses a coarse-to-fine lead guided method to assign dynamic targets for outputs across different branches, improving accuracy.

- Extended and Compound Scaling: Efficiently utilizes parameters and computation to scale the model for various real-time applications.

- Efficiency: Reduces parameter count by about 40% and computation by about 50% compared to other state-of-the-art real-time detectors while achieving faster inference speeds.

For further details on these features, see the YOLOv7 Overview section.

comments: true

description: Discover YOLOv8, the latest advancement in real-time object detection, optimizing performance with an array of pre-trained models for diverse tasks.

keywords: YOLOv8, real-time object detection, YOLO series, Ultralytics, computer vision, advanced object detection, AI, machine learning, deep learning

YOLOv8

Overview

YOLOv8 is the latest iteration in the YOLO series of real-time object detectors, offering cutting-edge performance in terms of accuracy and speed. Building upon the advancements of previous YOLO versions, YOLOv8 introduces new features and optimizations that make it an ideal choice for various object detection tasks in a wide range of applications.


Key Features

- Advanced Backbone and Neck Architectures: YOLOv8 employs state-of-the-art backbone and neck architectures, resulting in improved feature extraction and object detection performance.

- Anchor-free Split Ultralytics Head: YOLOv8 adopts an anchor-free split Ultralytics head, which contributes to better accuracy and a more efficient detection process compared to anchor-based approaches.

- Optimized Accuracy-Speed Tradeoff: With a focus on maintaining an optimal balance between accuracy and speed, YOLOv8 is suitable for real-time object detection tasks in diverse application areas.

- Variety of Pre-trained Models: YOLOv8 offers a range of pre-trained models to cater to various tasks and performance requirements, making it easier to find the right model for your specific use case.

Supported Tasks and Modes

The YOLOv8 series offers a diverse range of models, each specialized for specific tasks in computer vision. These models are designed to cater to various requirements, from object detection to more complex tasks like instance segmentation, pose/keypoints detection, oriented object detection, and classification.

Each variant of the YOLOv8 series is optimized for its respective task, ensuring high performance and accuracy. Additionally, these models are compatible with various operational modes including Inference, Validation, Training, and Export, facilitating their use in different stages of deployment and development.

| Model | Filenames | Task | Inference | Validation | Training | Export |

|---|---|---|---|---|---|---|

| YOLOv8 | yolov8n.pt yolov8s.pt yolov8m.pt yolov8l.pt yolov8x.pt | Detection | ✅ | ✅ | ✅ | ✅ |

| YOLOv8-seg | yolov8n-seg.pt yolov8s-seg.pt yolov8m-seg.pt yolov8l-seg.pt yolov8x-seg.pt | Instance Segmentation | ✅ | ✅ | ✅ | ✅ |

| YOLOv8-pose | yolov8n-pose.pt yolov8s-pose.pt yolov8m-pose.pt yolov8l-pose.pt yolov8x-pose.pt yolov8x-pose-p6.pt | Pose/Keypoints | ✅ | ✅ | ✅ | ✅ |

| YOLOv8-obb | yolov8n-obb.pt yolov8s-obb.pt yolov8m-obb.pt yolov8l-obb.pt yolov8x-obb.pt | Oriented Detection | ✅ | ✅ | ✅ | ✅ |

| YOLOv8-cls | yolov8n-cls.pt yolov8s-cls.pt yolov8m-cls.pt yolov8l-cls.pt yolov8x-cls.pt | Classification | ✅ | ✅ | ✅ | ✅ |

This table provides an overview of the YOLOv8 model variants, highlighting their applicability in specific tasks and their compatibility with various operational modes such as Inference, Validation, Training, and Export. It showcases the versatility and robustness of the YOLOv8 series, making them suitable for a variety of applications in computer vision.
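The filenames in the table follow a regular pattern: `yolov8`, a size letter (`n`, `s`, `m`, `l`, `x`), an optional task suffix, and `.pt`. The sketch below encodes that pattern. The helper `weight_filename` and the task keys (`detect`, `segment`, and so on) are hypothetical names chosen for illustration and are not part of the Ultralytics API. The `yolov8x-pose-p6.pt` variant does not fit the pattern and is deliberately left out.

```python
# Hypothetical helper (not an Ultralytics API): derives a pretrained weight
# filename from the naming pattern visible in the table above.
TASK_SUFFIXES = {
    "detect": "",        # yolov8n.pt
    "segment": "-seg",   # yolov8n-seg.pt
    "pose": "-pose",     # yolov8n-pose.pt
    "obb": "-obb",       # yolov8n-obb.pt
    "classify": "-cls",  # yolov8n-cls.pt
}
SIZES = ("n", "s", "m", "l", "x")


def weight_filename(size: str, task: str = "detect") -> str:
    """Return the weight filename for a model size and task."""
    if size not in SIZES:
        raise ValueError(f"unknown size {size!r}; expected one of {SIZES}")
    if task not in TASK_SUFFIXES:
        raise ValueError(f"unknown task {task!r}; expected one of {sorted(TASK_SUFFIXES)}")
    return f"yolov8{size}{TASK_SUFFIXES[task]}.pt"


if __name__ == "__main__":
    print(weight_filename("n"))             # detection weights, nano size
    print(weight_filename("x", "segment"))  # segmentation weights, extra-large size
```

The resulting string can be passed to `YOLO()` in the same way as the literal filenames used in the usage examples.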

Performance Metrics

!!! Performance

=== "Detection (COCO)"

See [Detection Docs](../tasks/detect.md) for usage examples with these models trained on [COCO](../datasets/detect/coco.md), which include 80 pre-trained classes.

| Model | size<br><sup>(pixels) | mAP<sup>val<br>50-95 | Speed<br><sup>CPU ONNX<br>(ms) | Speed<br><sup>A100 TensorRT<br>(ms) | params<br><sup>(M) | FLOPs<br><sup>(B) |
| ------------------------------------------------------------------------------------ | --------------------- | -------------------- | ------------------------------ | ----------------------------------- | ------------------ | ----------------- |
| [YOLOv8n](https://github.com/ultralytics/assets/releases/download/v8.2.0/yolov8n.pt) | 640 | 37.3 | 80.4 | 0.99 | 3.2 | 8.7 |
| [YOLOv8s](https://github.com/ultralytics/assets/releases/download/v8.2.0/yolov8s.pt) | 640 | 44.9 | 128.4 | 1.20 | 11.2 | 28.6 |
| [YOLOv8m](https://github.com/ultralytics/assets/releases/download/v8.2.0/yolov8m.pt) | 640 | 50.2 | 234.7 | 1.83 | 25.9 | 78.9 |
| [YOLOv8l](https://github.com/ultralytics/assets/releases/download/v8.2.0/yolov8l.pt) | 640 | 52.9 | 375.2 | 2.39 | 43.7 | 165.2 |
| [YOLOv8x](https://github.com/ultralytics/assets/releases/download/v8.2.0/yolov8x.pt) | 640 | 53.9 | 479.1 | 3.53 | 68.2 | 257.8 |

=== "Detection (Open Images V7)"

See [Detection Docs](../tasks/detect.md) for usage examples with these models trained on [Open Images V7](../datasets/detect/open-images-v7.md), which include 600 pre-trained classes.

| Model | size<br><sup>(pixels) | mAP<sup>val<br>50-95 | Speed<br><sup>CPU ONNX<br>(ms) | Speed<br><sup>A100 TensorRT<br>(ms) | params<br><sup>(M) | FLOPs<br><sup>(B) |
| ----------------------------------------------------------------------------------------- | --------------------- | -------------------- | ------------------------------ | ----------------------------------- | ------------------ | ----------------- |
| [YOLOv8n](https://github.com/ultralytics/assets/releases/download/v8.2.0/yolov8n-oiv7.pt) | 640 | 18.4 | 142.4 | 1.21 | 3.5 | 10.5 |
| [YOLOv8s](https://github.com/ultralytics/assets/releases/download/v8.2.0/yolov8s-oiv7.pt) | 640 | 27.7 | 183.1 | 1.40 | 11.4 | 29.7 |
| [YOLOv8m](https://github.com/ultralytics/assets/releases/download/v8.2.0/yolov8m-oiv7.pt) | 640 | 33.6 | 408.5 | 2.26 | 26.2 | 80.6 |
| [YOLOv8l](https://github.com/ultralytics/assets/releases/download/v8.2.0/yolov8l-oiv7.pt) | 640 | 34.9 | 596.9 | 2.43 | 44.1 | 167.4 |
| [YOLOv8x](https://github.com/ultralytics/assets/releases/download/v8.2.0/yolov8x-oiv7.pt) | 640 | 36.3 | 860.6 | 3.56 | 68.7 | 260.6 |

=== "Segmentation (COCO)"

See [Segmentation Docs](../tasks/segment.md) for usage examples with these models trained on [COCO](../datasets/segment/coco.md), which include 80 pre-trained classes.

| Model | size<br><sup>(pixels) | mAP<sup>box<br>50-95 | mAP<sup>mask<br>50-95 | Speed<br><sup>CPU ONNX<br>(ms) | Speed<br><sup>A100 TensorRT<br>(ms) | params<br><sup>(M) | FLOPs<br><sup>(B) |
| -------------------------------------------------------------------------------------------- | --------------------- | -------------------- | --------------------- | ------------------------------ | ----------------------------------- | ------------------ | ----------------- |
| [YOLOv8n-seg](https://github.com/ultralytics/assets/releases/download/v8.2.0/yolov8n-seg.pt) | 640 | 36.7 | 30.5 | 96.1 | 1.21 | 3.4 | 12.6 |
| [YOLOv8s-seg](https://github.com/ultralytics/assets/releases/download/v8.2.0/yolov8s-seg.pt) | 640 | 44.6 | 36.8 | 155.7 | 1.47 | 11.8 | 42.6 |
| [YOLOv8m-seg](https://github.com/ultralytics/assets/releases/download/v8.2.0/yolov8m-seg.pt) | 640 | 49.9 | 40.8 | 317.0 | 2.18 | 27.3 | 110.2 |
| [YOLOv8l-seg](https://github.com/ultralytics/assets/releases/download/v8.2.0/yolov8l-seg.pt) | 640 | 52.3 | 42.6 | 572.4 | 2.79 | 46.0 | 220.5 |
| [YOLOv8x-seg](https://github.com/ultralytics/assets/releases/download/v8.2.0/yolov8x-seg.pt) | 640 | 53.4 | 43.4 | 712.1 | 4.02 | 71.8 | 344.1 |

=== "Classification (ImageNet)"

See [Classification Docs](../tasks/classify.md) for usage examples with these models trained on [ImageNet](../datasets/classify/imagenet.md), which include 1000 pre-trained classes.

| Model | size<br><sup>(pixels) | acc<br><sup>top1 | acc<br><sup>top5 | Speed<br><sup>CPU ONNX<br>(ms) | Speed<br><sup>A100 TensorRT<br>(ms) | params<br><sup>(M) | FLOPs<br><sup>(B) at 640 |
| -------------------------------------------------------------------------------------------- | --------------------- | ---------------- | ---------------- | ------------------------------ | ----------------------------------- | ------------------ | ------------------------ |
| [YOLOv8n-cls](https://github.com/ultralytics/assets/releases/download/v8.2.0/yolov8n-cls.pt) | 224 | 69.0 | 88.3 | 12.9 | 0.31 | 2.7 | 4.3 |
| [YOLOv8s-cls](https://github.com/ultralytics/assets/releases/download/v8.2.0/yolov8s-cls.pt) | 224 | 73.8 | 91.7 | 23.4 | 0.35 | 6.4 | 13.5 |
| [YOLOv8m-cls](https://github.com/ultralytics/assets/releases/download/v8.2.0/yolov8m-cls.pt) | 224 | 76.8 | 93.5 | 85.4 | 0.62 | 17.0 | 42.7 |
| [YOLOv8l-cls](https://github.com/ultralytics/assets/releases/download/v8.2.0/yolov8l-cls.pt) | 224 | 76.8 | 93.5 | 163.0 | 0.87 | 37.5 | 99.7 |
| [YOLOv8x-cls](https://github.com/ultralytics/assets/releases/download/v8.2.0/yolov8x-cls.pt) | 224 | 79.0 | 94.6 | 232.0 | 1.01 | 57.4 | 154.8 |

=== "Pose (COCO)"

See [Pose Estimation Docs](../tasks/pose.md) for usage examples with these models trained on [COCO](../datasets/pose/coco.md), which include 1 pre-trained class, 'person'.

| Model | size<br><sup>(pixels) | mAP<sup>pose<br>50-95 | mAP<sup>pose<br>50 | Speed<br><sup>CPU ONNX<br>(ms) | Speed<br><sup>A100 TensorRT<br>(ms) | params<br><sup>(M) | FLOPs<br><sup>(B) |
| ---------------------------------------------------------------------------------------------------- | --------------------- | --------------------- | ------------------ | ------------------------------ | ----------------------------------- | ------------------ | ----------------- |
| [YOLOv8n-pose](https://github.com/ultralytics/assets/releases/download/v8.2.0/yolov8n-pose.pt) | 640 | 50.4 | 80.1 | 131.8 | 1.18 | 3.3 | 9.2 |
| [YOLOv8s-pose](https://github.com/ultralytics/assets/releases/download/v8.2.0/yolov8s-pose.pt) | 640 | 60.0 | 86.2 | 233.2 | 1.42 | 11.6 | 30.2 |
| [YOLOv8m-pose](https://github.com/ultralytics/assets/releases/download/v8.2.0/yolov8m-pose.pt) | 640 | 65.0 | 88.8 | 456.3 | 2.00 | 26.4 | 81.0 |
| [YOLOv8l-pose](https://github.com/ultralytics/assets/releases/download/v8.2.0/yolov8l-pose.pt) | 640 | 67.6 | 90.0 | 784.5 | 2.59 | 44.4 | 168.6 |
| [YOLOv8x-pose](https://github.com/ultralytics/assets/releases/download/v8.2.0/yolov8x-pose.pt) | 640 | 69.2 | 90.2 | 1607.1 | 3.73 | 69.4 | 263.2 |
| [YOLOv8x-pose-p6](https://github.com/ultralytics/assets/releases/download/v8.2.0/yolov8x-pose-p6.pt) | 1280 | 71.6 | 91.2 | 4088.7 | 10.04 | 99.1 | 1066.4 |

=== "OBB (DOTAv1)"

See [Oriented Detection Docs](../tasks/obb.md) for usage examples with these models trained on [DOTAv1](../datasets/obb/dota-v2.md#dota-v10), which include 15 pre-trained classes.

| Model | size<br><sup>(pixels) | mAP<sup>test<br>50 | Speed<br><sup>CPU ONNX<br>(ms) | Speed<br><sup>A100 TensorRT<br>(ms) | params<br><sup>(M) | FLOPs<br><sup>(B) |
|----------------------------------------------------------------------------------------------|-----------------------| -------------------- | -------------------------------- | ------------------------------------- | -------------------- | ----------------- |
| [YOLOv8n-obb](https://github.com/ultralytics/assets/releases/download/v8.2.0/yolov8n-obb.pt) | 1024 | 78.0 | 204.77 | 3.57 | 3.1 | 23.3 |
| [YOLOv8s-obb](https://github.com/ultralytics/assets/releases/download/v8.2.0/yolov8s-obb.pt) | 1024 | 79.5 | 424.88 | 4.07 | 11.4 | 76.3 |
| [YOLOv8m-obb](https://github.com/ultralytics/assets/releases/download/v8.2.0/yolov8m-obb.pt) | 1024 | 80.5 | 763.48 | 7.61 | 26.4 | 208.6 |
| [YOLOv8l-obb](https://github.com/ultralytics/assets/releases/download/v8.2.0/yolov8l-obb.pt) | 1024 | 80.7 | 1278.42 | 11.83 | 44.5 | 433.8 |
| [YOLOv8x-obb](https://github.com/ultralytics/assets/releases/download/v8.2.0/yolov8x-obb.pt) | 1024 | 81.36 | 1759.10 | 13.23 | 69.5 | 676.7 |
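One way to read the performance tables is to fix a latency budget and pick the most accurate model that fits it. The sketch below does this for the Detection (COCO) table, using its mAP 50-95 and A100 TensorRT columns. The names `Entry`, `images_per_second`, and `best_within_budget` are hypothetical. The sketch also treats the per-image latency in the table as the only cost, which is a simplification: real throughput depends on batch size, pre- and post-processing, and hardware.

```python
from typing import List, NamedTuple, Optional


class Entry(NamedTuple):
    name: str
    map_50_95: float   # mAP val 50-95 from the Detection (COCO) table
    latency_ms: float  # A100 TensorRT latency (ms) from the same table


# Values copied from the Detection (COCO) table above.
DETECTION_COCO: List[Entry] = [
    Entry("YOLOv8n", 37.3, 0.99),
    Entry("YOLOv8s", 44.9, 1.20),
    Entry("YOLOv8m", 50.2, 1.83),
    Entry("YOLOv8l", 52.9, 2.39),
    Entry("YOLOv8x", 53.9, 3.53),
]


def images_per_second(latency_ms: float) -> float:
    """Convert a per-image latency in milliseconds to images per second."""
    return 1000.0 / latency_ms


def best_within_budget(
    budget_ms: float, entries: List[Entry] = DETECTION_COCO
) -> Optional[Entry]:
    """Return the highest-mAP entry whose latency fits the budget, or None."""
    candidates = [e for e in entries if e.latency_ms <= budget_ms]
    return max(candidates, key=lambda e: e.map_50_95, default=None)


if __name__ == "__main__":
    choice = best_within_budget(2.0)
    if choice is not None:
        print(choice.name, round(images_per_second(choice.latency_ms), 1))
```

With a 2.0 ms budget the selection is YOLOv8m, since YOLOv8l (2.39 ms) exceeds the budget. The same pattern applies to the other tables if their rows are substituted.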

Usage Examples

This section provides simple YOLOv8 training and inference examples. For full documentation on these and other modes, see the Predict, Train, Val, and Export docs pages.

Note that the example below is for YOLOv8 Detect models for object detection. For additional supported tasks, see the Segment, Classify, OBB, and Pose docs.

!!! Example

=== "Python"

PyTorch pretrained `*.pt` models as well as configuration `*.yaml` files can be passed to the `YOLO()` class to create a model instance in Python:

```py
from ultralytics import YOLO

# Load a COCO-pretrained YOLOv8n model
model = YOLO("yolov8n.pt")

# Display model information (optional)
model.info()

# Train the model on the COCO8 example dataset for 100 epochs
results = model.train(data="coco8.yaml", epochs=100, imgsz=640)

# Run inference with the YOLOv8n model on the 'bus.jpg' image
results = model("path/to/bus.jpg")
```

=== "CLI"

CLI commands are available to directly run the models:

```bash
# Load a COCO-pretrained YOLOv8n model and train it on the COCO8 example dataset for 100 epochs
yolo train model=yolov8n.pt data=coco8.yaml epochs=100 imgsz=640

# Load a COCO-pretrained YOLOv8n model and run inference on the 'bus.jpg' image
yolo predict model=yolov8n.pt source=path/to/bus.jpg
```
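The CLI commands above share one shape: `yolo`, a mode, then `key=value` arguments. When such commands are generated from a script, a small builder keeps that shape in one place. The sketch below is an illustration only: `build_yolo_command` is a hypothetical helper, not part of the Ultralytics package, and it only assembles a string without running anything.

```python
import shlex


def build_yolo_command(mode: str, **overrides: object) -> str:
    """Assemble a `yolo MODE key=value ...` command line as a string.

    Hypothetical helper: it mirrors the argument shape of the CLI examples
    above and shell-quotes each part so values containing spaces stay intact.
    """
    parts = ["yolo", mode]
    parts += [f"{key}={value}" for key, value in overrides.items()]
    return " ".join(shlex.quote(part) for part in parts)


if __name__ == "__main__":
    # Reproduces the two commands shown in the CLI example.
    print(build_yolo_command("train", model="yolov8n.pt", data="coco8.yaml", epochs=100, imgsz=640))
    print(build_yolo_command("predict", model="yolov8n.pt", source="path/to/bus.jpg"))
```

The returned string can be passed to a shell, or split back into a list with `shlex.split` for use with `subprocess.run`.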

Citations and Acknowledgements

If you use the YOLOv8 model or any other software from this repository in your work, please cite it using the following format:

!!! Quote ""

=== "BibTeX"

```bibtex
@software{yolov8_ultralytics,
  author = {Glenn Jocher and Ayush Chaurasia and Jing Qiu},
  title = {Ultralytics YOLOv8},
  version = {8.0.0},
  year = {2023},
  url = {https://github.com/ultralytics/ultralytics},
  orcid = {0000-0001-5950-6979, 0000-0002-7603-6750, 0000-0003-3783-7069},
  license = {AGPL-3.0}
}
```

Please note that the DOI is pending and will be added to the citation once it is available. YOLOv8 models are provided under AGPL-3.0 and Enterprise licenses.

FAQ

What is YOLOv8 and how does it differ from previous YOLO versions?

YOLOv8 is the latest iteration in the Ultralytics YOLO series, designed to improve real-time object detection performance with advanced features. Unlike earlier versions, YOLOv8 incorporates an anchor-free split Ultralytics head and state-of-the-art backbone and neck architectures, and it offers an optimized accuracy-speed tradeoff, making it well suited to diverse applications. For more details, check the Overview and Key Features sections.

How can I use YOLOv8 for different computer vision tasks?