
Lucidrains Series Project Source Code Analysis (Part 42)

.\lucidrains\st-moe-pytorch\st_moe_pytorch\__init__.py

# Import the MoE and SparseMoEBlock classes from the st_moe_pytorch.st_moe_pytorch module
from st_moe_pytorch.st_moe_pytorch import (
    MoE,
    SparseMoEBlock
)

STAM - Pytorch

Implementation of STAM (Space Time Attention Model), yet another pure and simple SOTA attention model that bests all previous models in video classification. This corroborates the finding of TimeSformer. Attention is all we need.

Install

$ pip install stam-pytorch

Usage

import torch
from stam_pytorch import STAM

model = STAM(
    dim = 512,
    image_size = 256,     # size of image
    patch_size = 32,      # patch size
    num_frames = 5,       # number of image frames, selected out of video
    space_depth = 12,     # depth of vision transformer
    space_heads = 8,      # heads of vision transformer
    space_mlp_dim = 2048, # feedforward hidden dimension of vision transformer
    time_depth = 6,       # depth of time transformer (in paper, it was shallower, 6)
    time_heads = 8,       # heads of time transformer
    time_mlp_dim = 2048,  # feedforward hidden dimension of time transformer
    num_classes = 100,    # number of output classes
    space_dim_head = 64,  # space transformer head dimension
    time_dim_head = 64,   # time transformer head dimension
    dropout = 0.,         # dropout
    emb_dropout = 0.      # embedding dropout
)

frames = torch.randn(2, 5, 3, 256, 256) # (batch x frames x channels x height x width)
pred = model(frames) # (2, 100)
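The usage example fixes image_size = 256 and patch_size = 32. The arithmetic in stam.py then decides how many tokens each transformer processes: num_patches = (image_size // patch_size) ** 2 and patch_dim = 3 * patch_size ** 2. The helper below is a hypothetical illustration and not part of stam-pytorch. Like stam.py, it assumes 3-channel frames.

```python
def stam_token_shapes(image_size, patch_size, num_frames, channels=3):
    """Hypothetical helper: token counts implied by the STAM constructor arguments."""
    # stam.py asserts that the image divides evenly into patches
    assert image_size % patch_size == 0, "image_size must be divisible by patch_size"
    num_patches = (image_size // patch_size) ** 2  # patches per frame
    patch_dim = channels * patch_size ** 2          # flattened values per patch
    return {
        "num_patches": num_patches,
        "patch_dim": patch_dim,
        # the space transformer sees one frame's patches plus one space CLS token
        "space_seq_len": num_patches + 1,
        # the time transformer sees one CLS summary per frame plus one time CLS token
        "time_seq_len": num_frames + 1,
    }

shapes = stam_token_shapes(image_size=256, patch_size=32, num_frames=5)
print(shapes)  # {'num_patches': 64, 'patch_dim': 3072, 'space_seq_len': 65, 'time_seq_len': 6}
```

Each of the 2 x 5 frames in the example therefore becomes a sequence of 65 tokens for the space transformer. Each of the 2 videos becomes a sequence of 6 tokens for the time transformer.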

Citations

@misc{sharir2021image,

title = {An Image is Worth 16x16 Words, What is a Video Worth?},

author = {Gilad Sharir and Asaf Noy and Lihi Zelnik-Manor},

year = {2021},

eprint = {2103.13915},

archivePrefix = {arXiv},

primaryClass = {cs.CV}

}

.\lucidrains\STAM-pytorch\setup.py

# Import the setup and find_packages functions from setuptools
from setuptools import setup, find_packages

# Package metadata
setup(
    name = 'stam-pytorch',                  # package name
    packages = find_packages(),             # find all packages
    version = '0.0.4',                      # version number
    license='MIT',                          # license
    description = 'Space Time Attention Model (STAM) - Pytorch',  # description
    author = 'Phil Wang',                   # author
    author_email = 'lucidrains@gmail.com',  # author email
    url = 'https://github.com/lucidrains/STAM-pytorch',  # project URL
    keywords = [                            # keyword list
        'artificial intelligence',
        'attention mechanism',
        'image recognition'
    ],
    install_requires=[                      # installation dependencies
        'torch>=1.6',
        'einops>=0.3'
    ],
    classifiers=[                           # PyPI trove classifiers
        'Development Status :: 4 - Beta',
        'Intended Audience :: Developers',
        'Topic :: Scientific/Engineering :: Artificial Intelligence',
        'License :: OSI Approved :: MIT License',
        'Programming Language :: Python :: 3.6',
    ],
)

.\lucidrains\STAM-pytorch\stam_pytorch\stam.py

# Import torch
import torch
# Import the nn module and the einsum function from torch
from torch import nn, einsum
# Import rearrange and repeat from einops, and the Rearrange layer from einops.layers.torch
from einops import rearrange, repeat
from einops.layers.torch import Rearrange

# PreNorm: apply LayerNorm to the input before calling the wrapped module
class PreNorm(nn.Module):
    # Takes the feature dimension dim and the wrapped module fn
    def __init__(self, dim, fn):
        super().__init__()
        # LayerNorm over the last dimension
        self.norm = nn.LayerNorm(dim)
        # Store the wrapped module
        self.fn = fn

    # Forward pass: takes input x plus any keyword arguments for fn
    def forward(self, x, **kwargs):
        # Normalize x, then pass it to fn
        return self.fn(self.norm(x), **kwargs)

# FeedForward: a two-layer MLP with GELU activation and dropout
class FeedForward(nn.Module):
    # Takes the dimension dim, the hidden dimension hidden_dim and a dropout rate (default 0.)
    def __init__(self, dim, hidden_dim, dropout = 0.):
        super().__init__()
        # Network structure
        self.net = nn.Sequential(
            nn.Linear(dim, hidden_dim),
            nn.GELU(),
            nn.Dropout(dropout),
            nn.Linear(hidden_dim, dim),
            nn.Dropout(dropout)
        )

    # Forward pass: takes input x
    def forward(self, x):
        # Pass x through the network
        return self.net(x)

# Attention: multi-head self-attention
class Attention(nn.Module):
    # Takes the dimension dim, the number of heads, the per-head dimension dim_head and a dropout rate (default 0.)
    def __init__(self, dim, heads = 8, dim_head = 64, dropout = 0.):
        super().__init__()
        inner_dim = dim_head * heads
        self.heads = heads
        self.scale = dim_head ** -0.5

        # A single linear layer that produces Q, K and V
        self.to_qkv = nn.Linear(dim, inner_dim * 3, bias = False)

        # Output projection
        self.to_out = nn.Sequential(
            nn.Linear(inner_dim, dim),
            nn.Dropout(dropout)
        )

    # Forward pass: takes input x
    def forward(self, x):
        b, n, _, h = *x.shape, self.heads
        # Project x to Q, K and V, then split into per-head tensors
        qkv = self.to_qkv(x).chunk(3, dim = -1)
        q, k, v = map(lambda t: rearrange(t, 'b n (h d) -> b h n d', h = h), qkv)

        # Compute the attention weights
        dots = einsum('b h i d, b h j d -> b h i j', q, k) * self.scale
        attn = dots.softmax(dim=-1)

        # Compute the output
        out = einsum('b h i j, b h j d -> b h i d', attn, v)
        out = rearrange(out, 'b h n d -> b n (h d)')
        return self.to_out(out)

# Transformer: a stack of pre-norm attention and feedforward blocks
class Transformer(nn.Module):
    # Takes the dimension dim, the number of layers depth, the number of heads, the per-head dimension dim_head,
    # the MLP hidden dimension mlp_dim and a dropout rate (default 0.)
    def __init__(self, dim, depth, heads, dim_head, mlp_dim, dropout = 0.):
        super().__init__()
        self.layers = nn.ModuleList([])
        self.norm = nn.LayerNorm(dim)
        # Build depth layers, each with an attention block and a feedforward block
        for _ in range(depth):
            self.layers.append(nn.ModuleList([
                PreNorm(dim, Attention(dim, heads = heads, dim_head = dim_head, dropout = dropout)),
                PreNorm(dim, FeedForward(dim, mlp_dim, dropout = dropout))
            ]))

    # Forward pass: takes input x
    def forward(self, x):
        # Apply every layer with residual connections
        for attn, ff in self.layers:
            x = attn(x) + x
            x = ff(x) + x
        # Final LayerNorm
        return self.norm(x)

# STAM: a space transformer applied to each frame, followed by a time transformer across frames
class STAM(nn.Module):
    # Keyword-only arguments, including the dimension dim, image size image_size, patch size patch_size,
    # number of frames num_frames and number of classes num_classes
    def __init__(
        self,
        *,
        dim,
        image_size,
        patch_size,
        num_frames,
        num_classes,
        space_depth,
        space_heads,
        space_mlp_dim,
        time_depth,
        time_heads,
        time_mlp_dim,
        space_dim_head = 64,
        time_dim_head = 64,
        dropout = 0.,
        emb_dropout = 0.
    ):
        super().__init__()
        assert image_size % patch_size == 0, 'Image dimensions must be divisible by the patch size.'

        num_patches = (image_size // patch_size) ** 2
        patch_dim = 3 * patch_size ** 2

        # Map image patches to embedding vectors
        self.to_patch_embedding = nn.Sequential(
            Rearrange('b f c (h p1) (w p2) -> b f (h w) (p1 p2 c)', p1 = patch_size, p2 = patch_size),
            nn.Linear(patch_dim, dim),
        )

        # Learned positional embeddings, plus the space and time CLS tokens
        self.pos_embedding = nn.Parameter(torch.randn(1, num_frames, num_patches + 1, dim))
        self.space_cls_token = nn.Parameter(torch.randn(1, dim))
        self.time_cls_token = nn.Parameter(torch.randn(1, dim))
        self.dropout = nn.Dropout(emb_dropout)

        # Space transformer and time transformer
        self.space_transformer = Transformer(dim, space_depth, space_heads, space_dim_head, space_mlp_dim, dropout)
        self.time_transformer = Transformer(dim, time_depth, time_heads, time_dim_head, time_mlp_dim, dropout)
        self.mlp_head = nn.Linear(dim, num_classes)

    # Forward pass: takes a video tensor of shape (batch, frames, channels, height, width)
    def forward(self, video):
        # Convert the video frames into patch embeddings
        x = self.to_patch_embedding(video)
        b, f, n, *_ = x.shape

        # Concatenate the space CLS tokens
        # Repeat the space CLS token for every frame of every sample
        space_cls_tokens = repeat(self.space_cls_token, 'n d -> b f n d', b = b, f = f)
        # Prepend the space CLS token to each frame's patch embeddings
        x = torch.cat((space_cls_tokens, x), dim = -2)

        # Positional embedding
        # Add the positional embeddings to the tokens
        x += self.pos_embedding[:, :, :(n + 1)]
        # Apply embedding dropout
        x = self.dropout(x)

        # Space attention
        # Fold the frames into the batch dimension so each frame is processed independently
        x = rearrange(x, 'b f ... -> (b f) ...')
        # Run the space transformer
        x = self.space_transformer(x)
        # Select the CLS token of each frame
        x = rearrange(x[:, 0], '(b f) ... -> b f ...', b = b)

        # Concatenate the time CLS token
        # Repeat the time CLS token for every sample in the batch
        time_cls_tokens = repeat(self.time_cls_token, 'n d -> b n d', b = b)
        # Prepend the time CLS token to the per-frame CLS outputs
        x = torch.cat((time_cls_tokens, x), dim = -2)

        # Time attention
        # Run the time transformer
        x = self.time_transformer(x)

        # Final MLP head
        # Classify from the time CLS token (position 0) of each sample
        return self.mlp_head(x[:, 0])
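The Attention module computes, for each head, softmax(q k^T * dim_head ** -0.5) applied to v. The sketch below reproduces that computation for a single head in plain Python, so the two einsum patterns can be checked by hand. It leaves out the learned to_qkv and to_out projections, the multiple heads and dropout. The function names are hypothetical.

```python
import math

def softmax(row):
    # subtract the row maximum before exponentiating, for numerical stability
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def single_head_attention(q, k, v):
    """q, k: n vectors of length d; v: n value vectors of length d_v."""
    scale = len(q[0]) ** -0.5  # same role as self.scale = dim_head ** -0.5
    # dots[i][j] = scale * <q_i, k_j>, like einsum('b h i d, b h j d -> b h i j')
    dots = [[scale * sum(a * b for a, b in zip(qi, kj)) for kj in k] for qi in q]
    attn = [softmax(row) for row in dots]
    # out[i] = sum_j attn[i][j] * v_j, like einsum('b h i j, b h j d -> b h i d')
    out = []
    for weights in attn:
        out.append([sum(w * vj[c] for w, vj in zip(weights, v)) for c in range(len(v[0]))])
    return out, attn

q = [[1.0, 0.0], [0.0, 1.0]]
k = [[1.0, 0.0], [1.0, 0.0]]  # identical keys, so every query attends uniformly
v = [[2.0, 0.0], [0.0, 4.0]]
out, attn = single_head_attention(q, k, v)
print(out)  # [[1.0, 2.0], [1.0, 2.0]], the mean of the value vectors
```

Each row of attention weights sums to 1. Every output vector is therefore a convex combination of the value vectors.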

.\lucidrains\STAM-pytorch\stam_pytorch\__init__.py

# Import the STAM class from the stam_pytorch.stam module
from stam_pytorch.stam import STAM

Simple StyleGan2 for Pytorch

Simple Pytorch implementation of Stylegan2 based on https://arxiv.org/abs/1912.04958 that can be completely trained from the command-line, no coding needed.

Below are some flowers that do not exist.

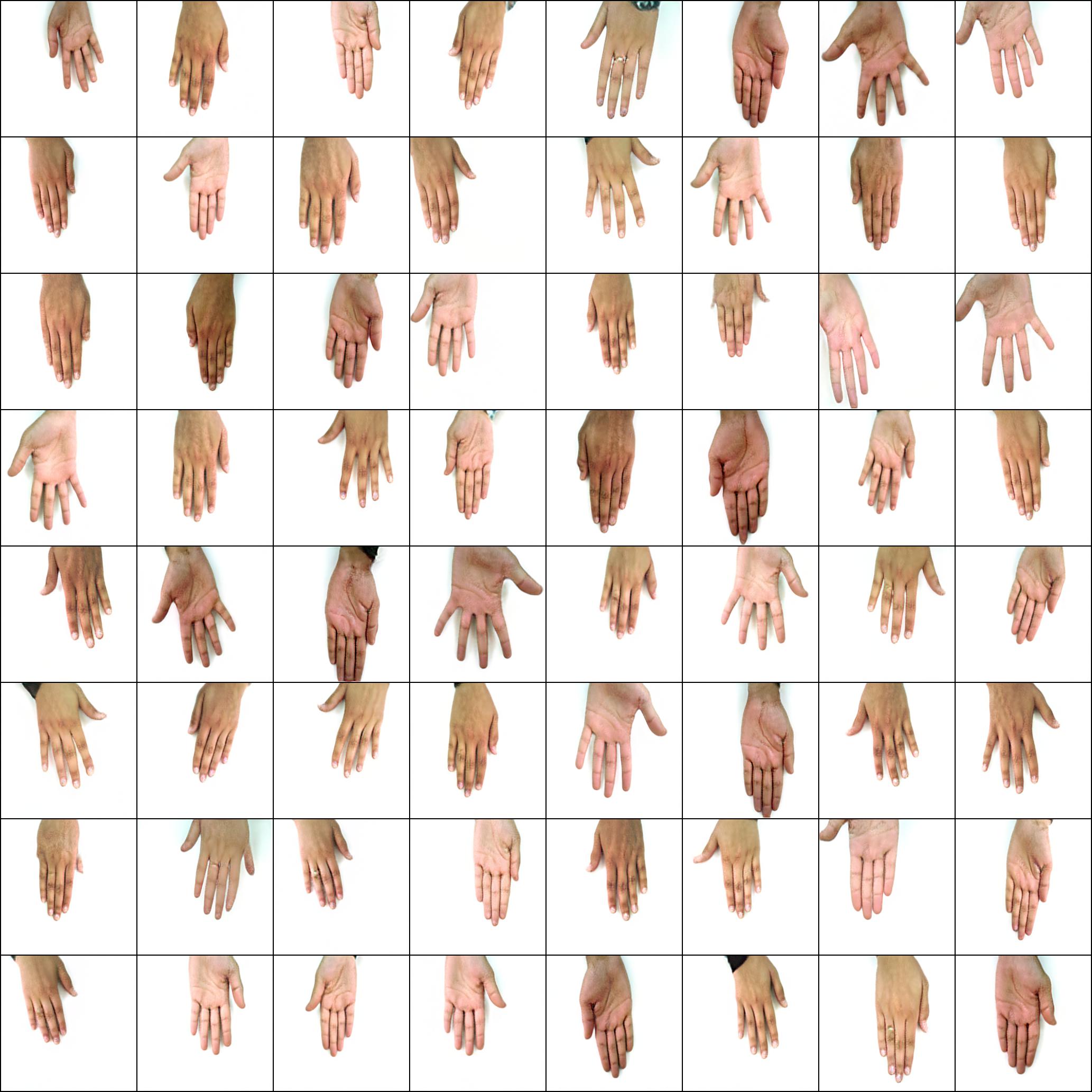

Neither do these hands

Nor these cities

Nor these celebrities (trained by @yoniker)

Install

You will need a machine with a GPU and CUDA installed. Then pip install the package like this:

$ pip install stylegan2_pytorch

If you are using a Windows machine, the following commands reportedly work.

$ conda install pytorch torchvision -c pytorch

$ pip install stylegan2_pytorch

Use

$ stylegan2_pytorch --data /path/to/images

That's it. Sample images will be saved to results/default and models will be saved periodically to models/default.

Advanced Use

You can specify the name of your project with

$ stylegan2_pytorch --data /path/to/images --name my-project-name

You can also specify the location where intermediate results and model checkpoints should be stored with

$ stylegan2_pytorch --data /path/to/images --name my-project-name --results_dir /path/to/results/dir --models_dir /path/to/models/dir

You can increase the network capacity (which defaults to 16) to improve generation results, at the cost of more memory.

$ stylegan2_pytorch --data /path/to/images --network-capacity 256

By default, if the training gets cut off, it will automatically resume from the last checkpointed file. If you want to restart with new settings, just add the --new flag:

$ stylegan2_pytorch --new --data /path/to/images --name my-project-name --image-size 512 --batch-size 1 --gradient-accumulate-every 16 --network-capacity 10

Once you have finished training, you can generate images from your latest checkpoint like so.

$ stylegan2_pytorch --generate

To generate a video of an interpolation between two random points in latent space:

$ stylegan2_pytorch --generate-interpolation --interpolation-num-steps 100
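Conceptually, --interpolation-num-steps sets how many latent points are sampled on the path between the two random endpoints. Each point produces one frame. The sketch below uses plain linear interpolation with hypothetical helper names. The CLI's own implementation may use a different scheme, such as spherical interpolation.

```python
import random

def lerp(a, b, t):
    # the point a fraction t of the way from a to b
    return [(1 - t) * x + t * y for x, y in zip(a, b)]

def interpolation_path(start, end, num_steps):
    # num_steps evenly spaced latents from start to end, both endpoints included
    assert num_steps >= 2, "need at least the two endpoints"
    return [lerp(start, end, i / (num_steps - 1)) for i in range(num_steps)]

rng = random.Random(0)
z0 = [rng.gauss(0, 1) for _ in range(4)]  # 4 dimensions for readability only
z1 = [rng.gauss(0, 1) for _ in range(4)]
path = interpolation_path(z0, z1, num_steps=100)  # one latent per video frame
print(len(path))  # 100
```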

To save each individual frame of the interpolation:

$ stylegan2_pytorch --generate-interpolation --save-frames

If a previous checkpoint contained a better generator (which often happens as generators start degrading towards the end of training), you can load from a previous checkpoint with another flag:

$ stylegan2_pytorch --generate --load-from {checkpoint number}

A technique used in both StyleGAN and BigGAN is truncating the latent values so that they fall close to the mean. The smaller the truncation value, the better the samples will appear, at the cost of sample variety. You can control this with --trunc-psi, where values typically fall between 0.5 and 1. It defaults to 0.75.

$ stylegan2_pytorch --generate --trunc-psi 0.5
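The usual form of the truncation trick pulls each style vector w toward an average style w_mean: w' = w_mean + psi * (w - w_mean). The sketch below applies that formula to plain lists. It assumes w_mean is an average of mapping-network outputs. The function name is hypothetical and does not come from stylegan2_pytorch.

```python
def truncate(w, w_mean, trunc_psi=0.75):
    # psi = 1 leaves w unchanged; psi = 0 collapses every sample onto the mean style
    return [m + trunc_psi * (x - m) for x, m in zip(w, w_mean)]

w_mean = [0.0, 1.0]
w = [2.0, 3.0]
print(truncate(w, w_mean, trunc_psi=0.5))  # [1.0, 2.0]
```

Lowering psi shrinks every sample's distance to the mean by the same factor. This is why quality improves while variety drops.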

Multi-GPU training

If you have one machine with multiple GPUs, the repository offers a way to utilize all of them for training. With multiple GPUs, each batch will be divided evenly amongst the GPUs available. For example, for 2 GPUs, with a batch size of 32, each GPU will see 16 samples.

You simply have to add a --multi-gpus flag; everything else is taken care of. If you would like to restrict training to specific GPUs, use the CUDA_VISIBLE_DEVICES environment variable to control which devices can be used (e.g. with CUDA_VISIBLE_DEVICES=0,2,3, only devices 0, 2 and 3 are available).

$ stylegan2_pytorch --data ./data --multi-gpus --batch-size 32 --gradient-accumulate-every 1
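The even split described above is simple arithmetic, and the helpers below only check it. Both are hypothetical and not part of the package. In cli.py the number of processes comes from torch.cuda.device_count(), which only counts the devices listed in CUDA_VISIBLE_DEVICES.

```python
def per_gpu_batch(batch_size, num_gpus):
    # the README describes the batch as divided evenly across the available GPUs
    if num_gpus < 1 or batch_size % num_gpus != 0:
        raise ValueError("batch_size should be divisible by a positive number of GPUs")
    return batch_size // num_gpus

def visible_gpu_count(cuda_visible_devices):
    # count the comma-separated device ids, e.g. "0,2,3" gives 3
    return len([d for d in cuda_visible_devices.split(",") if d.strip()])

print(per_gpu_batch(32, 2))                           # 16
print(per_gpu_batch(48, visible_gpu_count("0,2,3")))  # 16
```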

Low amounts of Training Data

In the past, GANs needed a lot of data to learn how to generate well. The faces model took 70k high quality images from Flickr, as an example.

However, in the month of May 2020, researchers all across the world independently converged on a simple technique to reduce that number to as low as 1-2k. That simple idea was to differentiably augment all images, generated or real, going into the discriminator during training.

If one were to augment at a low enough probability, the augmentations would not 'leak' into the generations.

In the setting of low data, you can use the feature with a simple flag.

# find a suitable probability between 0. -> 0.7 at maximum

$ stylegan2_pytorch --data ./data --aug-prob 0.25
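The idea above is that every image entering the discriminator, generated or real, goes through the same augmentation policy, applied with probability --aug-prob. The plain-Python sketch below shows only that control flow. The helper names are hypothetical. It decides once per batch, which is an assumption: the library may decide per sample. A toy list reversal stands in for the real augmentations, which are built from differentiable tensor operations so gradients reach the generator.

```python
import random

def maybe_augment(batch, augment, prob, rng=random):
    # with probability `prob`, augment every image in the batch; otherwise pass it through
    if rng.random() < prob:
        return [augment(img) for img in batch]
    return batch

def discriminator_inputs(real, fake, augment, prob, rng=random):
    # real and generated batches follow the same augmentation policy
    return maybe_augment(real, augment, prob, rng), maybe_augment(fake, augment, prob, rng)

def flip(img):
    # toy stand-in for a differentiable augmentation
    return img[::-1]

real, fake = [[1, 2, 3]], [[4, 5, 6]]
print(discriminator_inputs(real, fake, flip, prob=0.0))  # ([[1, 2, 3]], [[4, 5, 6]])
print(discriminator_inputs(real, fake, flip, prob=1.0))  # ([[3, 2, 1]], [[6, 5, 4]])
```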

By default, the augmentations used are translation and cutout. If you would like to add color, you can do so with the --aug-types argument.

# make sure there are no spaces between items!

$ stylegan2_pytorch --data ./data --aug-prob 0.25 --aug-types [translation,cutout,color]

You can customize it to any combination of the three you would like. The differentiable augmentation code was copied and slightly modified from here.
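The default types, translation and cutout, correspond to rand_translation and rand_cutout in diff_augment.py, listed further below. The plain-Python sketch below applies the same two ideas to a single-channel image stored as a list of rows. Translation shifts the image and fills the uncovered border with zeros, as the zero padding in rand_translation does. Cutout zeroes a square patch. The function names and the explicit offsets are illustrative assumptions; the library samples offsets at random per image.

```python
import random

def translate(img, dx, dy):
    # out[i][j] = img[i + dx][j + dy]; positions outside the image become 0
    h, w = len(img), len(img[0])
    return [[img[i + dx][j + dy] if 0 <= i + dx < h and 0 <= j + dy < w else 0
             for j in range(w)] for i in range(h)]

def cutout(img, top, left, size):
    # zero a size x size square whose top-left corner is (top, left), clipped to the image
    return [[0 if top <= i < top + size and left <= j < left + size else v
             for j, v in enumerate(row)] for i, row in enumerate(img)]

def random_translate(img, ratio=0.125, rng=random):
    # shift range as in rand_translation: int(size * ratio + 0.5) pixels either way
    max_dx = int(len(img) * ratio + 0.5)
    max_dy = int(len(img[0]) * ratio + 0.5)
    return translate(img, rng.randint(-max_dx, max_dx), rng.randint(-max_dy, max_dy))

img = [[1, 2, 3],
       [4, 5, 6],
       [7, 8, 9]]
print(translate(img, 1, 0))  # [[4, 5, 6], [7, 8, 9], [0, 0, 0]]
print(cutout(img, 0, 0, 2))  # [[0, 0, 3], [0, 0, 6], [7, 8, 9]]
```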

When do I stop training?

Train for as long as possible, until the adversarial game between the two neural nets falls apart (we call this divergence). By default, the number of training steps is set to 150000 for 128x128 images. You will certainly want this number to be higher if the GAN doesn't diverge by the end of training, or if you are training at a higher resolution.

$ stylegan2_pytorch --data ./data --image-size 512 --num-train-steps 1000000

Attention

This framework also allows you to add an efficient form of self-attention to the designated layers of the discriminator (and the symmetric layers of the generator), which will greatly improve results. The more attention you can afford, the better!

# add self attention after the output of layer 1

$ stylegan2_pytorch --data ./data --attn-layers 1

# add self attention after the output of layers 1 and 2

# do not put a space after the comma in the list!

$ stylegan2_pytorch --data ./data --attn-layers [1,2]

Bonus

Training on transparent images

$ stylegan2_pytorch --data ./transparent/images/path --transparent

Memory considerations

The more GPU memory you have, the bigger and better the image generation will be. Nvidia recommended having up to 16GB for training 1024x1024 images. If you have less than that, there are a couple settings you can play with so that the model fits.

$ stylegan2_pytorch --data /path/to/data \
    --batch-size 3 \
    --gradient-accumulate-every 5 \
    --network-capacity 16

- Batch size - You can decrease the batch-size down to 1, but you should increase the gradient-accumulate-every correspondingly so that the mini-batch the network sees is not too small. This may be confusing to a layperson, so I'll think about how I would automate the choice of gradient-accumulate-every going forward.

- Network capacity - You can decrease the neural network capacity to lessen the memory requirements. Just be aware that this has been shown to degrade generation performance.
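On a single GPU, the mini-batch behind one optimizer step is roughly batch_size x gradient_accumulate_every, because gradients from several smaller passes are accumulated before the update. The hypothetical helpers below compute that product. They also pick an accumulation count that keeps it near a target when the batch size is lowered.

```python
import math

def effective_batch_size(batch_size, gradient_accumulate_every):
    # samples whose gradients are accumulated into one optimizer step
    return batch_size * gradient_accumulate_every

def accumulation_for(target_batch, batch_size):
    # smallest accumulation count whose effective batch reaches target_batch
    return math.ceil(target_batch / batch_size)

print(effective_batch_size(3, 5))  # 15, the memory-saving command above
print(effective_batch_size(5, 6))  # 30, the defaults in cli.py
print(accumulation_for(30, 1))     # 30
```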

If none of this works, you can settle for 'Lightweight' GAN, which will allow you to tradeoff quality to train at greater resolutions in reasonable amount of time.

Deployment on AWS

Below are some steps which may be helpful for deployment using Amazon Web Services. In order to use this, you will have to provision a GPU-backed EC2 instance. An appropriate instance type would be from the p2 or p3 series. I (iboates) tried a p2.xlarge (the cheapest option), and it was quite slow, in fact slower than using Google Colab. More powerful instance types may be better, but they are more expensive. You can read more about them here.

Setup steps

- Archive your training data and upload it to an S3 bucket

- Provision your EC2 instance (I used an Ubuntu AMI)

- Log into your EC2 instance via SSH

- Install the aws CLI client and configure it:

sudo snap install aws-cli --classic

aws configure

You will then have to enter your AWS access keys, which you can retrieve from the management console under AWS Management Console > Profile > My Security Credentials > Access Keys.

Then, run these commands, or maybe put them in a shell script and execute that:

mkdir data

curl -O https://bootstrap.pypa.io/get-pip.py

sudo apt-get install python3-distutils

python3 get-pip.py

pip3 install stylegan2_pytorch

export PATH=$PATH:/home/ubuntu/.local/bin

aws s3 sync s3://<Your bucket name> ~/data

cd data

tar -xf ../train.tar.gz

Now you should be able to train by simply calling stylegan2_pytorch [args].

Notes:

- If you have a lot of training data, you may need to provision extra block storage via EBS.

- Also, you may need to spread your data across multiple archives.

- You should run this in a screen window so it won't terminate once you log out of the SSH session.

Research

FID Scores

Thanks to GetsEclectic, you can now calculate the FID score periodically! Again, made super simple with one extra argument, as shown below.

Firstly, install the pytorch_fid package

$ pip install pytorch-fid

Followed by

$ stylegan2_pytorch --data ./data --calculate-fid-every 5000

FID results will be logged to ./results/{name}/fid_scores.txt
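FID fits a Gaussian to the feature statistics of real images and another to those of generated images, then measures the Frechet distance between them: ||mu_r - mu_g||^2 + Tr(Sigma_r + Sigma_g - 2 (Sigma_r Sigma_g)^(1/2)). pytorch-fid computes it on Inception-v3 activations. The toy sketch below reduces the formula to one-dimensional features so that no matrix square root is needed. The function name is hypothetical, and for round numbers it uses the population variance.

```python
import math
from statistics import fmean, pvariance

def fid_1d(real, fake):
    # Frechet distance between 1-D Gaussians fitted to the two samples:
    # (mu_r - mu_g)^2 + var_r + var_g - 2 * sqrt(var_r * var_g)
    mu_r, mu_g = fmean(real), fmean(fake)
    var_r, var_g = pvariance(real), pvariance(fake)
    return (mu_r - mu_g) ** 2 + var_r + var_g - 2 * math.sqrt(var_r * var_g)

real = [0.0, 1.0, 2.0, 3.0]
print(fid_1d(real, real))                   # 0.0
print(fid_1d(real, [x + 2 for x in real]))  # 4.0
```

Identical statistics give 0. Shifting every feature by 2 adds exactly 2^2 = 4, so lower FID means the generated statistics sit closer to the real ones.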

Coding

If you would like to sample images programmatically, you can do so with the following simple ModelLoader class.

import torch
from torchvision.utils import save_image
from stylegan2_pytorch import ModelLoader

loader = ModelLoader(
    base_dir = '/path/to/directory',   # path to where you invoked the command line tool
    name = 'default'                   # the project name, defaults to 'default'
)

noise   = torch.randn(1, 512).cuda() # noise
styles  = loader.noise_to_styles(noise, trunc_psi = 0.7)  # pass through mapping network
images  = loader.styles_to_images(styles) # call the generator on intermediate style vectors

save_image(images, './sample.jpg') # save your images, or do whatever you desire

Logging to experiment tracker

To log the losses to an open source experiment tracker (Aim), you simply need to pass an extra flag like so.

$ stylegan2_pytorch --data ./data --log

Then make sure you have Docker installed. Following the instructions at Aim, run the following in your terminal.

$ aim up

Then open up your browser to the address and you should see

Experimental

Top-k Training for Generator

A new paper has produced evidence that by simply zeroing out the gradient contributions from samples that are deemed fake by the discriminator, the generator learns significantly better, achieving a new state of the art.

$ stylegan2_pytorch --data ./data --top-k-training

Gamma is a decay schedule that slowly decreases the top-k from the full batch size to the target fraction of 50% (also a modifiable hyperparameter).

$ stylegan2_pytorch --data ./data --top-k-training --generator-top-k-frac 0.5 --generator-top-k-gamma 0.99
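The sketch below shows one plausible reading of the schedule described above. The kept fraction starts at the full batch, decays geometrically by gamma each step, and then stays at the target fraction. Only the k generated samples the discriminator scores as most real contribute to the generator loss. The geometric schedule, the "higher score means more real" convention and the helper names are all assumptions, not the repository's code.

```python
import math

def top_k_fraction(step, gamma=0.99, target_frac=0.5):
    # assumed schedule: decays from 1.0 toward target_frac, then stays there
    return max(gamma ** step, target_frac)

def top_k_indices(scores, step, gamma=0.99, target_frac=0.5):
    # keep the k generated samples rated most real (assumes higher score = more real);
    # the rest would contribute no gradient to the generator
    k = math.ceil(len(scores) * top_k_fraction(step, gamma, target_frac))
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return sorted(ranked[:k])

scores = [0.9, -1.2, 0.3, 2.0]
print(top_k_indices(scores, step=0))     # [0, 1, 2, 3], the full batch at the start
print(top_k_indices(scores, step=1000))  # [0, 3], the fraction has reached 0.5
```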

Feature Quantization

A recent paper reported improved results if intermediate representations of the discriminator are vector quantized. Although I have not noticed any dramatic changes, I have decided to add this as a feature, so other minds out there can investigate. To use, you have to specify which layer(s) you would like to vector quantize. Default dictionary size is 256 and is also tunable.

# feature quantize layers 1 and 2, with a dictionary size of 512 each

# do not put a space after the comma in the list!

$ stylegan2_pytorch --data ./data --fq-layers [1,2] --fq-dict-size 512
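Vector quantization replaces each intermediate feature vector with its nearest entry in a dictionary (codebook). --fq-dict-size sets the number of entries. The sketch below shows only that nearest-neighbour lookup, on plain lists with a fixed toy codebook. It does not show how the codebook is learned during training, which this package delegates to its vector-quantize-pytorch dependency. The function name is hypothetical.

```python
def quantize(features, codebook):
    # replace every feature vector by its nearest codebook entry (squared L2 distance)
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    indices = [min(range(len(codebook)), key=lambda c: sq_dist(f, codebook[c])) for f in features]
    return [codebook[i] for i in indices], indices

codebook = [[0.0, 0.0], [1.0, 1.0], [-1.0, 2.0]]  # a 3-entry toy dictionary
features = [[0.9, 1.2], [0.1, -0.2], [-0.8, 1.5]]
quantized, idx = quantize(features, codebook)
print(idx)  # [1, 0, 2]
```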

Contrastive Loss Regularization

I have tried contrastive learning on the discriminator (in step with the usual GAN training) and possibly observed improved stability and quality of final results. You can turn on this experimental feature with a simple flag as shown below.

$ stylegan2_pytorch --data ./data --cl-reg

Relativistic Discriminator Loss

This was proposed in the Relativistic GAN paper to stabilize training. I have had mixed results, but will include the feature for those who want to experiment with it.

$ stylegan2_pytorch --data ./data --rel-disc-loss
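In the relativistic formulation of the cited Jolicoeur-Martineau paper (RSGAN), the discriminator no longer scores samples in isolation. It estimates how much more realistic a real sample is than a paired fake one, sigmoid(C(x_r) - C(x_f)). The sketch below computes those two losses on plain lists of critic scores. It follows the paper's standard relativistic loss. What --rel-disc-loss computes in this repository may be a different variant, and the function names are hypothetical.

```python
import math

def softplus(z):
    # numerically stable log(1 + exp(z)); note -log(sigmoid(z)) == softplus(-z)
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))

def rsgan_losses(real_scores, fake_scores):
    # differences C(x_r) - C(x_f) for paired real and fake samples
    diffs = [r - f for r, f in zip(real_scores, fake_scores)]
    d_loss = sum(softplus(-d) for d in diffs) / len(diffs)  # -log sigmoid(C(x_r) - C(x_f))
    g_loss = sum(softplus(d) for d in diffs) / len(diffs)   # -log sigmoid(C(x_f) - C(x_r))
    return d_loss, g_loss

d_loss, g_loss = rsgan_losses([0.0], [0.0])
print(round(d_loss, 4), round(g_loss, 4))  # 0.6931 0.6931, i.e. log 2 when the scores tie
```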

Non-constant 4x4 Block

By default, the StyleGAN architecture styles a constant learned 4x4 block as it is progressively upsampled. This is an experimental feature that makes it so the 4x4 block is learned from the style vector w instead.

$ stylegan2_pytorch --data ./data --no-const

Dual Contrastive Loss

A recent paper has proposed that a novel contrastive loss between the real and fake logits can improve quality over other types of losses. (The default in this repository is hinge loss, and the paper shows a slight improvement.)

$ stylegan2_pytorch --data ./data --dual-contrast-loss
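For reference against the default mentioned above, the standard hinge formulation is shown below. The discriminator is penalised when real scores fall below +1 or fake scores rise above -1. The generator tries to raise the discriminator's score on its samples. This sketch uses plain lists and the convention that a higher score means "more real". The repository's implementation may use the opposite sign convention, and the function names are hypothetical.

```python
def relu(z):
    return max(z, 0.0)

def hinge_d_loss(real_scores, fake_scores):
    # penalise real scores below +1 and fake scores above -1
    real_term = sum(relu(1.0 - r) for r in real_scores) / len(real_scores)
    fake_term = sum(relu(1.0 + f) for f in fake_scores) / len(fake_scores)
    return real_term + fake_term

def hinge_g_loss(fake_scores):
    # the generator minimises the negated mean score of its samples
    return -sum(fake_scores) / len(fake_scores)

print(hinge_d_loss([2.0, 0.5], [-3.0, 0.0]))  # 0.75
```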

Alternatives

Stylegan2 + Unet Discriminator

I have gotten really good results with a unet discriminator, but the architectural change was too big to fit as an option in this repository. If you are aiming for perfection, feel free to try it.

If you would like me to give the royal treatment to some other GAN architecture (BigGAN), feel free to reach out at my email. Happy to hear your pitch.

Appreciation

Thank you to Matthew Mann for his inspiring simple port for Tensorflow 2.0

References

@article{Karras2019stylegan2,

title = {Analyzing and Improving the Image Quality of {StyleGAN}},

author = {Tero Karras and Samuli Laine and Miika Aittala and Janne Hellsten and Jaakko Lehtinen and Timo Aila},

journal = {CoRR},

volume = {abs/1912.04958},

year = {2019},

}

@misc{zhao2020feature,

title = {Feature Quantization Improves GAN Training},

author = {Yang Zhao and Chunyuan Li and Ping Yu and Jianfeng Gao and Changyou Chen},

year = {2020}

}

@misc{chen2020simple,

title = {A Simple Framework for Contrastive Learning of Visual Representations},

author = {Ting Chen and Simon Kornblith and Mohammad Norouzi and Geoffrey Hinton},

year = {2020}

}

@article{,

title = {Oxford 102 Flowers},

author = {Nilsback, M-E. and Zisserman, A.},

year = {2008},

abstract = {A 102 category dataset consisting of 102 flower categories, commonly occurring in the United Kingdom. Each class consists of 40 to 258 images. The images have large scale, pose and light variations.}

}

@article{afifi201911k,

title = {11K Hands: gender recognition and biometric identification using a large dataset of hand images},

author = {Afifi, Mahmoud},

journal = {Multimedia Tools and Applications}

}

@misc{zhang2018selfattention,

title = {Self-Attention Generative Adversarial Networks},

author = {Han Zhang and Ian Goodfellow and Dimitris Metaxas and Augustus Odena},

year = {2018},

eprint = {1805.08318},

archivePrefix = {arXiv}

}

@article{shen2019efficient,

author = {Zhuoran Shen and

Mingyuan Zhang and

Haiyu Zhao and

Shuai Yi and

Hongsheng Li},

title = {Efficient Attention: Attention with Linear Complexities},

journal = {CoRR},

year = {2018},

url = {http://arxiv.org/abs/1812.01243},

}

@article{zhao2020diffaugment,

title = {Differentiable Augmentation for Data-Efficient GAN Training},

author = {Zhao, Shengyu and Liu, Zhijian and Lin, Ji and Zhu, Jun-Yan and Han, Song},

journal = {arXiv preprint arXiv:2006.10738},

year = {2020}

}

@misc{zhao2020image,

title = {Image Augmentations for GAN Training},

author = {Zhengli Zhao and Zizhao Zhang and Ting Chen and Sameer Singh and Han Zhang},

year = {2020},

eprint = {2006.02595},

archivePrefix = {arXiv}

}

@misc{karras2020training,

title = {Training Generative Adversarial Networks with Limited Data},

author = {Tero Karras and Miika Aittala and Janne Hellsten and Samuli Laine and Jaakko Lehtinen and Timo Aila},

year = {2020},

eprint = {2006.06676},

archivePrefix = {arXiv},

primaryClass = {cs.CV}

}

@misc{jolicoeurmartineau2018relativistic,

title = {The relativistic discriminator: a key element missing from standard GAN},

author = {Alexia Jolicoeur-Martineau},

year = {2018},

eprint = {1807.00734},

archivePrefix = {arXiv},

primaryClass = {cs.LG}

}

@misc{sinha2020topk,

title = {Top-k Training of GANs: Improving GAN Performance by Throwing Away Bad Samples},

author = {Samarth Sinha and Zhengli Zhao and Anirudh Goyal and Colin Raffel and Augustus Odena},

year = {2020},

eprint = {2002.06224},

archivePrefix = {arXiv},

primaryClass = {stat.ML}

}

@misc{yu2021dual,

title = {Dual Contrastive Loss and Attention for GANs},

author = {Ning Yu and Guilin Liu and Aysegul Dundar and Andrew Tao and Bryan Catanzaro and Larry Davis and Mario Fritz},

year = {2021},

eprint = {2103.16748},

archivePrefix = {arXiv},

primaryClass = {cs.CV}

}

.\lucidrains\stylegan2-pytorch\setup.py

# Import the sys module
import sys
# Import setup and find_packages from setuptools
from setuptools import setup, find_packages

# Prepend the stylegan2_pytorch directory to sys.path
sys.path[0:0] = ['stylegan2_pytorch']
# Import __version__ from the version module
from version import __version__

# Package metadata
setup(
    # Package name
    name = 'stylegan2_pytorch',
    # Find and include all packages
    packages = find_packages(),
    # Entry point: installs the stylegan2_pytorch command-line script
    entry_points={
        'console_scripts': [
            'stylegan2_pytorch = stylegan2_pytorch.cli:main',
        ],
    },
    # Version number, taken from the imported __version__
    version = __version__,
    # License: GPLv3+
    license='GPLv3+',
    # Short description
    description = 'StyleGan2 in Pytorch',
    # The long description is written in Markdown
    long_description_content_type = 'text/markdown',
    # Author
    author = 'Phil Wang',
    # Author email
    author_email = 'lucidrains@gmail.com',
    # Project URL
    url = 'https://github.com/lucidrains/stylegan2-pytorch',
    # Download URL
    download_url = 'https://github.com/lucidrains/stylegan2-pytorch/archive/v_036.tar.gz',
    # Keywords
    keywords = ['generative adversarial networks', 'artificial intelligence'],
    # Dependencies
    install_requires=[
        'aim',
        'einops',
        'contrastive_learner>=0.1.0',
        'fire',
        'kornia>=0.5.4',
        'numpy',
        'retry',
        'tqdm',
        'torch',
        'torchvision',
        'pillow',
        'vector-quantize-pytorch==0.1.0'
    ],
    # Classifiers
    classifiers=[
        'Development Status :: 4 - Beta',
        'Intended Audience :: Developers',
        'Topic :: Scientific/Engineering :: Artificial Intelligence',
        'License :: OSI Approved :: MIT License',
        'Programming Language :: Python :: 3.6',
    ],
)

.\lucidrains\stylegan2-pytorch\stylegan2_pytorch\cli.py

# Import the required libraries
import os
import fire
import random
from retry.api import retry_call
from tqdm import tqdm
from datetime import datetime
from functools import wraps
from stylegan2_pytorch import Trainer, NanException

import torch
import torch.multiprocessing as mp
import torch.distributed as dist

import numpy as np

# Wrap a single value in a list; lists pass through unchanged
def cast_list(el):
    return el if isinstance(el, list) else [el]

# Build a file name from a prefix plus the current timestamp
def timestamped_filename(prefix = 'generated-'):
    now = datetime.now()
    timestamp = now.strftime("%m-%d-%Y_%H-%M-%S")
    return f'{prefix}{timestamp}'

# Seed all random number generators and make cuDNN deterministic
def set_seed(seed):
    torch.manual_seed(seed)
    torch.backends.cudnn.deterministic = True
    torch.backends.cudnn.benchmark = False
    np.random.seed(seed)
    random.seed(seed)

# Run the training loop in one process (rank) out of world_size processes
def run_training(rank, world_size, model_args, data, load_from, new, num_train_steps, name, seed):
    is_main = rank == 0
    is_ddp = world_size > 1

    if is_ddp:
        # Set up distributed data parallel training on this machine
        set_seed(seed)
        os.environ['MASTER_ADDR'] = 'localhost'
        os.environ['MASTER_PORT'] = '12355'
        dist.init_process_group('nccl', rank=rank, world_size=world_size)

        print(f"{rank + 1}/{world_size} process initialized.")

    model_args.update(
        is_ddp = is_ddp,
        rank = rank,
        world_size = world_size
    )

    model = Trainer(**model_args)

    # Resume from a checkpoint, unless --new was given, in which case start from scratch
    if not new:
        model.load(load_from)
    else:
        model.clear()

    model.set_data_src(data)

    progress_bar = tqdm(initial = model.steps, total = num_train_steps, mininterval=10., desc=f'{name}<{data}>')
    while model.steps < num_train_steps:
        # Retry a training step up to 3 times if it raises NanException
        retry_call(model.train, tries=3, exceptions=NanException)
        progress_bar.n = model.steps
        progress_bar.refresh()
        if is_main and model.steps % 50 == 0:
            model.print_log()

    model.save(model.checkpoint_num)

    if is_ddp:
        dist.destroy_process_group()

# Train a model from a folder of images (exposed as the command-line interface through Fire)
def train_from_folder(
    data = './data',
    results_dir = './results',
    models_dir = './models',
    name = 'default',
    new = False,
    load_from = -1,
    image_size = 128,
    network_capacity = 16,
    fmap_max = 512,
    transparent = False,
    batch_size = 5,
    gradient_accumulate_every = 6,
    num_train_steps = 150000,
    learning_rate = 2e-4,
    lr_mlp = 0.1,
    ttur_mult = 1.5,
    rel_disc_loss = False,
    num_workers = None,
    save_every = 1000,
    evaluate_every = 1000,
    generate = False,
    num_generate = 1,
    generate_interpolation = False,
    interpolation_num_steps = 100,
    save_frames = False,
    num_image_tiles = 8,
    trunc_psi = 0.75,
    mixed_prob = 0.9,
    fp16 = False,
    no_pl_reg = False,
    cl_reg = False,
    fq_layers = [],
    fq_dict_size = 256,
    attn_layers = [],
    no_const = False,
    aug_prob = 0.,
    aug_types = ['translation', 'cutout'],
    top_k_training = False,
    generator_top_k_gamma = 0.99,
    generator_top_k_frac = 0.5,
    dual_contrast_loss = False,
    dataset_aug_prob = 0.,
    multi_gpus = False,
    calculate_fid_every = None,
    calculate_fid_num_images = 12800,
    clear_fid_cache = False,
    seed = 42,
    log = False
):
    model_args = dict(
        name = name,                                            # project name
        results_dir = results_dir,                              # directory for results
        models_dir = models_dir,                                # directory for model checkpoints
        batch_size = batch_size,                                # batch size
        gradient_accumulate_every = gradient_accumulate_every,  # gradient accumulation steps
        image_size = image_size,                                # image size
        network_capacity = network_capacity,                    # network capacity
        fmap_max = fmap_max,                                    # maximum number of feature maps
        transparent = transparent,                              # whether the images are transparent
        lr = learning_rate,                                     # learning rate
        lr_mlp = lr_mlp,                                        # learning-rate multiplier for the mapping MLP
        ttur_mult = ttur_mult,                                  # two time-scale update rule (TTUR) multiplier
        rel_disc_loss = rel_disc_loss,                          # relativistic discriminator loss
        num_workers = num_workers,                              # number of data-loading workers
        save_every = save_every,                                # checkpoint frequency
        evaluate_every = evaluate_every,                        # evaluation frequency
        num_image_tiles = num_image_tiles,                      # number of image tiles in sample grids
        trunc_psi = trunc_psi,                                  # truncation psi
        fp16 = fp16,                                            # whether to use FP16
        no_pl_reg = no_pl_reg,                                  # disable path length regularization
        cl_reg = cl_reg,                                        # contrastive loss regularization
        fq_layers = fq_layers,                                  # feature-quantized layers
        fq_dict_size = fq_dict_size,                            # feature quantization dictionary size
        attn_layers = attn_layers,                              # layers with self-attention
        no_const = no_const,                                    # non-constant 4x4 initial block
        aug_prob = aug_prob,                                    # augmentation probability
        aug_types = cast_list(aug_types),                       # augmentation types
        top_k_training = top_k_training,                        # top-k training
        generator_top_k_gamma = generator_top_k_gamma,          # top-k decay gamma for the generator
        generator_top_k_frac = generator_top_k_frac,            # target top-k fraction for the generator
        dual_contrast_loss = dual_contrast_loss,                # dual contrastive loss
        dataset_aug_prob = dataset_aug_prob,                    # dataset augmentation probability
        calculate_fid_every = calculate_fid_every,              # how often to compute FID
        calculate_fid_num_images = calculate_fid_num_images,    # number of images used for FID
        clear_fid_cache = clear_fid_cache,                      # clear the FID cache
        mixed_prob = mixed_prob,                                # style-mixing probability
        log = log                                               # log to the experiment tracker
    )

    if generate:
        model = Trainer(**model_args)              # create the Trainer
        model.load(load_from)                      # load the checkpoint
        samples_name = timestamped_filename()      # timestamped file name
        for num in tqdm(range(num_generate)):      # generate the requested number of samples
            model.evaluate(f'{samples_name}-{num}', num_image_tiles)  # generate and save a sample grid
        print(f'sample images generated at {results_dir}/{name}/{samples_name}')  # print where the samples were saved
        return

    if generate_interpolation:
        model = Trainer(**model_args)              # create the Trainer
        model.load(load_from)                      # load the checkpoint
        samples_name = timestamped_filename()      # timestamped file name
        model.generate_interpolation(samples_name, num_image_tiles, num_steps = interpolation_num_steps, save_frames = save_frames)  # generate the interpolation
        print(f'interpolation generated at {results_dir}/{name}/{samples_name}')  # print where the interpolation was saved
        return

    world_size = torch.cuda.device_count()         # number of visible GPUs

    if world_size == 1 or not multi_gpus:
        run_training(0, 1, model_args, data, load_from, new, num_train_steps, name, seed)  # single-process training
        return

    mp.spawn(run_training,
        args=(world_size, model_args, data, load_from, new, num_train_steps, name, seed),
        nprocs=world_size,
        join=True)                                 # multi-GPU training, one process per GPU

# Entry point for the console script
def main():
    # Use Fire to expose train_from_folder as a command-line interface
    fire.Fire(train_from_folder)

.\lucidrains\stylegan2-pytorch\stylegan2_pytorch\diff_augment.py

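# import the required libraries

from functools import partial
import random

import torch
import torch.nn.functional as F

# apply a sequence of augmentation policies to a batch x of shape (batch, channels, height, width)

def DiffAugment(x, types=[]):
    # iterate over the requested policy names
    for p in types:
        # apply every function registered for that policy, in order
        for f in AUGMENT_FNS[p]:
            x = f(x)
    # return the augmented batch as a contiguous tensor
    return x.contiguous()

# individual augmentation functions

# random brightness: add a per-sample offset drawn uniformly from [-scale/2, scale/2)

def rand_brightness(x, scale):
    x = x + (torch.rand(x.size(0), 1, 1, 1, dtype=x.dtype, device=x.device) - 0.5) * scale
    return x

# random saturation: scale each pixel's deviation from its mean over channels by a per-sample factor in [1 - scale, 1 + scale)

def rand_saturation(x, scale):
    x_mean = x.mean(dim=1, keepdim=True)
    x = (x - x_mean) * (((torch.rand(x.size(0), 1, 1, 1, dtype=x.dtype, device=x.device) - 0.5) * 2.0 * scale) + 1.0) + x_mean
    return x

# random contrast: same kind of factor, applied relative to the mean over all channels and pixels of each sample

def rand_contrast(x, scale):
    x_mean = x.mean(dim=[1, 2, 3], keepdim=True)
    x = (x - x_mean) * (((torch.rand(x.size(0), 1, 1, 1, dtype=x.dtype, device=x.device) - 0.5) * 2.0 * scale) + 1.0) + x_mean
    return x

# random translation: shift each sample by up to ratio * size pixels along each spatial axis, filling with zeros

def rand_translation(x, ratio=0.125):
    # maximum shift in pixels along each spatial axis
    shift_x, shift_y = int(x.size(2) * ratio + 0.5), int(x.size(3) * ratio + 0.5)
    # per-sample random shifts in [-shift, shift]
    translation_x = torch.randint(-shift_x, shift_x + 1, size=[x.size(0), 1, 1], device=x.device)
    translation_y = torch.randint(-shift_y, shift_y + 1, size=[x.size(0), 1, 1], device=x.device)
    # index grids over (batch, height, width)
    grid_batch, grid_x, grid_y = torch.meshgrid(
        torch.arange(x.size(0), dtype=torch.long, device=x.device),
        torch.arange(x.size(2), dtype=torch.long, device=x.device),
        torch.arange(x.size(3), dtype=torch.long, device=x.device),
    )
    # +1 accounts for the 1-pixel zero padding below; clamping sends out-of-range reads into that padding
    grid_x = torch.clamp(grid_x + translation_x + 1, 0, x.size(2) + 1)
    grid_y = torch.clamp(grid_y + translation_y + 1, 0, x.size(3) + 1)
    x_pad = F.pad(x, [1, 1, 1, 1, 0, 0, 0, 0])
    # gather the shifted pixels in channels-last layout, then restore (batch, channels, height, width)
    x = x_pad.permute(0, 2, 3, 1).contiguous()[grid_batch, grid_x, grid_y].permute(0, 3, 1, 2)
    return x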
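The index arithmetic in `rand_translation` is easier to follow in one dimension. The sketch below uses a hypothetical stdlib helper, `translate_1d` (not part of the library), and assumes a single row with a fixed shift instead of random per-sample shifts; it mirrors the pad-by-one, offset-by-one and clamp steps, so pixels shifted in from outside the image read the zero padding.

```python
def translate_1d(row, shift):
    """Hypothetical 1-D analogue of rand_translation's indexing for one row and one fixed shift."""
    size = len(row)
    padded = [0] + list(row) + [0]  # one zero on each side, like F.pad(x, [1, 1, ...])
    out = []
    for i in range(size):
        # same bounds as torch.clamp(grid + translation + 1, 0, size + 1)
        j = min(max(i + shift + 1, 0), size + 1)
        out.append(padded[j])
    return out

print(translate_1d([1, 2, 3, 4], 1))   # [2, 3, 4, 0]
print(translate_1d([1, 2, 3, 4], -1))  # [0, 1, 2, 3]
print(translate_1d([1, 2, 3, 4], 3))   # [4, 0, 0, 0]
```

With this indexing `out[i] = row[i + shift]`, so a positive shift moves content toward lower indices, and any index far outside the image is clamped onto one of the two zero pads.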

# random offset: circularly roll each image (torch.roll wraps pixels around instead of zero-filling)

def rand_offset(x, ratio=1, ratio_h=1, ratio_v=1):
    # note: dims 2 and 3 of x are (height, width); the names w, h are swapped, which only matters for non-square images
    w, h = x.size(2), x.size(3)
    imgs = []
    for img in x.unbind(dim = 0):
        max_h = int(w * ratio * ratio_h)
        max_v = int(h * ratio * ratio_v)
        # offsets in [-max, max], spaced two apart
        value_h = random.randint(0, max_h) * 2 - max_h
        value_v = random.randint(0, max_v) * 2 - max_v
        if abs(value_h) > 0:
            img = torch.roll(img, value_h, 2)
        if abs(value_v) > 0:
            img = torch.roll(img, value_v, 1)
        imgs.append(img)
    return torch.stack(imgs)

# horizontal-only random offset

def rand_offset_h(x, ratio=1):
    return rand_offset(x, ratio=1, ratio_h=ratio, ratio_v=0)

# vertical-only random offset

def rand_offset_v(x, ratio=1):
    return rand_offset(x, ratio=1, ratio_h=0, ratio_v=ratio)
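Unlike `rand_translation`, `rand_offset` wraps pixels around with `torch.roll`, and because the offset is computed as `random.randint(0, max) * 2 - max`, it only takes values with the same parity as `max`, two apart. A small stdlib sketch; `possible_offsets` and `roll` are illustrative helpers, and `roll` is written to follow `torch.roll`'s direction convention on a single axis.

```python
def possible_offsets(max_shift):
    """Every value random.randint(0, max_shift) * 2 - max_shift can produce."""
    return sorted({k * 2 - max_shift for k in range(max_shift + 1)})

def roll(seq, shift):
    """Circular shift of a list: positive shift moves elements toward higher indices."""
    seq = list(seq)
    shift %= len(seq)
    return seq[-shift:] + seq[:-shift] if shift else seq

print(possible_offsets(3))      # [-3, -1, 1, 3]
print(possible_offsets(2))      # [-2, 0, 2]
print(roll([1, 2, 3, 4], 1))    # [4, 1, 2, 3]
print(roll([1, 2, 3, 4], -1))   # [2, 3, 4, 1]
```

Note that with the default `ratio=1`, `max` equals the full image size, and a roll by the full size is the identity.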

# random cutout: zero out one patch of (ratio * height) x (ratio * width) per sample, centred at a random position

def rand_cutout(x, ratio=0.5):
    cutout_size = int(x.size(2) * ratio + 0.5), int(x.size(3) * ratio + 0.5)
    # random patch centres, one per sample
    offset_x = torch.randint(0, x.size(2) + (1 - cutout_size[0] % 2), size=[x.size(0), 1, 1], device=x.device)
    offset_y = torch.randint(0, x.size(3) + (1 - cutout_size[1] % 2), size=[x.size(0), 1, 1], device=x.device)
    grid_batch, grid_x, grid_y = torch.meshgrid(
        torch.arange(x.size(0), dtype=torch.long, device=x.device),
        torch.arange(cutout_size[0], dtype=torch.long, device=x.device),
        torch.arange(cutout_size[1], dtype=torch.long, device=x.device),
    )
    # patch coordinates, clamped to the image so patches near the border are cropped rather than wrapped
    grid_x = torch.clamp(grid_x + offset_x - cutout_size[0] // 2, min=0, max=x.size(2) - 1)
    grid_y = torch.clamp(grid_y + offset_y - cutout_size[1] // 2, min=0, max=x.size(3) - 1)
    mask = torch.ones(x.size(0), x.size(2), x.size(3), dtype=x.dtype, device=x.device)
    mask[grid_batch, grid_x, grid_y] = 0
    # broadcast the mask over the channel dimension
    x = x * mask.unsqueeze(1)
    return x
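The clamp in `rand_cutout` means a patch centred near a border is cropped instead of wrapping. The sketch below is a hypothetical stdlib helper, `cutout_mask`, that builds the mask for one sample with a fixed (not random) centre, using the same size rounding and clamping as the source.

```python
def cutout_mask(height, width, ratio, centre_x, centre_y):
    """Hypothetical single-sample version of rand_cutout's mask with a fixed centre."""
    cut_h, cut_w = int(height * ratio + 0.5), int(width * ratio + 0.5)
    mask = [[1] * width for _ in range(height)]
    for dx in range(cut_h):
        for dy in range(cut_w):
            # same clamp as the source: min=0, max=size - 1
            i = min(max(dx + centre_x - cut_h // 2, 0), height - 1)
            j = min(max(dy + centre_y - cut_w // 2, 0), width - 1)
            mask[i][j] = 0
    return mask

def count_zeros(mask):
    return sum(row.count(0) for row in mask)

print(count_zeros(cutout_mask(4, 4, 0.5, 2, 2)))  # 4: a full 2x2 patch
print(count_zeros(cutout_mask(4, 4, 0.5, 0, 0)))  # 1: the patch is clipped at the corner
```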

# map each policy name to the list of augmentation functions it applies

AUGMENT_FNS = {
    'brightness': [partial(rand_brightness, scale=1.)],
    'lightbrightness': [partial(rand_brightness, scale=.65)],
    'contrast': [partial(rand_contrast, scale=.5)],
    'lightcontrast': [partial(rand_contrast, scale=.25)],
    'saturation': [partial(rand_saturation, scale=1.)],
    'lightsaturation': [partial(rand_saturation, scale=.5)],
    # random brightness, saturation and contrast together
    'color': [partial(rand_brightness, scale=1.), partial(rand_saturation, scale=1.), partial(rand_contrast, scale=0.5)],
    # milder version of 'color'
    'lightcolor': [partial(rand_brightness, scale=0.65), partial(rand_saturation, scale=.5), partial(rand_contrast, scale=0.5)],
    # circular shift along both spatial axes
    'offset': [rand_offset],
    # circular shift along one axis only
    'offset_h': [rand_offset_h],
    'offset_v': [rand_offset_v],
    # zero-filled random translation
    'translation': [rand_translation],
    # random rectangular occlusion
    'cutout': [rand_cutout],
}
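`AUGMENT_FNS` is a registry of `functools.partial` objects, and `DiffAugment` applies the policies in the order given, each policy's functions in list order. A toy stdlib sketch of the same pattern on plain numbers; `add`, `scale`, `TOY_FNS` and `toy_augment` are hypothetical stand-ins, not library names.

```python
from functools import partial

def add(x, amount):
    return x + amount

def scale(x, factor):
    return x * factor

# hypothetical toy registry with the same shape as AUGMENT_FNS
TOY_FNS = {
    'shift': [partial(add, amount=1)],
    'double': [partial(scale, factor=2)],
    'both': [partial(add, amount=1), partial(scale, factor=2)],
}

def toy_augment(x, types=()):
    # same nested loop as DiffAugment: policies in order, then each policy's functions in order
    for p in types:
        for f in TOY_FNS[p]:
            x = f(x)
    return x

print(toy_augment(3, ['both']))             # (3 + 1) * 2 = 8
print(toy_augment(3, ['double', 'shift']))  # 3 * 2 + 1 = 7
```

Because order matters, `['translation', 'cutout']` (the Trainer default) translates first and then cuts out a patch of the translated image.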

.\lucidrains\stylegan2-pytorch\stylegan2_pytorch\stylegan2_pytorch.py

# import the required libraries

import os
import sys
import math
import fire
import json

from tqdm import tqdm
from math import floor, log2
from random import random
from shutil import rmtree
from functools import partial
import multiprocessing
from contextlib import contextmanager, ExitStack

import numpy as np

import torch
from torch import nn, einsum
from torch.utils import data
from torch.optim import Adam
import torch.nn.functional as F
from torch.autograd import grad as torch_grad
from torch.utils.data.distributed import DistributedSampler
from torch.nn.parallel import DistributedDataParallel as DDP

from einops import rearrange, repeat
from kornia.filters import filter2d

import torchvision
from torchvision import transforms

from stylegan2_pytorch.version import __version__
from stylegan2_pytorch.diff_augment import DiffAugment

from vector_quantize_pytorch import VectorQuantize

from PIL import Image
from pathlib import Path

# NVIDIA apex is optional; it is only needed for fp16 (mixed-precision) training
try:
    from apex import amp
    APEX_AVAILABLE = True
except Exception:
    APEX_AVAILABLE = False

import aim

# a CUDA-capable NVIDIA GPU is required

assert torch.cuda.is_available(), 'You need to have an Nvidia GPU with CUDA installed.'

# constants

NUM_CORES = multiprocessing.cpu_count()
EXTS = ['jpg', 'jpeg', 'png']

# helper classes

# raised when a loss becomes NaN

class NanException(Exception):
    pass

# exponential moving average of parameters

class EMA():
    def __init__(self, beta):
        super().__init__()
        self.beta = beta

    def update_average(self, old, new):
        # on the first call there is no running average yet
        if not exists(old):
            return new
        return old * self.beta + (1 - self.beta) * new
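With `beta = 0.995` (the value `StyleGAN2` passes to `EMA`), the running average covers half of any sudden change in the live weights after about `ln(0.5) / ln(0.995)` updates. A scalar stdlib sketch; `ToyEMA` is a hypothetical copy of `update_average` used only for illustration.

```python
import math

class ToyEMA:
    """Scalar copy of EMA.update_average (hypothetical name, for illustration only)."""
    def __init__(self, beta):
        self.beta = beta

    def update_average(self, old, new):
        if old is None:  # first call: no running average yet
            return new
        return old * self.beta + (1 - self.beta) * new

ema = ToyEMA(0.995)
avg = ema.update_average(None, 0.0)   # initialised from the first value
for _ in range(138):
    avg = ema.update_average(avg, 1.0)  # live weight jumps to 1.0 and stays there

half_life = math.log(0.5) / math.log(0.995)
print(avg, half_life)  # avg ≈ 0.499, half_life ≈ 138.28
```

After `n` updates toward a constant target the average equals `1 - beta ** n`, which is why the EMA generator `GE` lags behind `G` by a few hundred steps.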

# flatten every dimension except the batch dimension

class Flatten(nn.Module):
    def forward(self, x):
        return x.reshape(x.shape[0], -1)

# apply fn with probability prob, otherwise fn_else (identity by default)

class RandomApply(nn.Module):
    def __init__(self, prob, fn, fn_else = lambda x: x):
        super().__init__()
        self.fn = fn
        self.fn_else = fn_else
        self.prob = prob

    def forward(self, x):
        fn = self.fn if random() < self.prob else self.fn_else
        return fn(x)

# residual connection: fn(x) + x

class Residual(nn.Module):
    def __init__(self, fn):
        super().__init__()
        self.fn = fn

    def forward(self, x):
        return self.fn(x) + x

# channel normalization: normalize each pixel across the channel dimension, then apply a learned per-channel scale g and bias b

class ChanNorm(nn.Module):
    def __init__(self, dim, eps = 1e-5):
        super().__init__()
        self.eps = eps
        self.g = nn.Parameter(torch.ones(1, dim, 1, 1))
        self.b = nn.Parameter(torch.zeros(1, dim, 1, 1))

    def forward(self, x):
        var = torch.var(x, dim = 1, unbiased = False, keepdim = True)
        mean = torch.mean(x, dim = 1, keepdim = True)
        return (x - mean) / (var + self.eps).sqrt() * self.g + self.b

# pre-normalization: apply ChanNorm before fn

class PreNorm(nn.Module):
    def __init__(self, dim, fn):
        super().__init__()
        self.fn = fn
        self.norm = ChanNorm(dim)

    def forward(self, x):
        return self.fn(self.norm(x))

# move channels last before calling fn (e.g. a vector quantizer), then move them back; fn must return (output, ..., loss)

class PermuteToFrom(nn.Module):
    def __init__(self, fn):
        super().__init__()
        self.fn = fn

    def forward(self, x):
        x = x.permute(0, 2, 3, 1)
        out, *_, loss = self.fn(x)
        out = out.permute(0, 3, 1, 2)
        return out, loss

# blur with the separable [1, 2, 1] binomial filter

class Blur(nn.Module):
    def __init__(self):
        super().__init__()
        f = torch.Tensor([1, 2, 1])
        self.register_buffer('f', f)

    def forward(self, x):
        f = self.f
        # outer product gives the 3x3 kernel [[1, 2, 1], [2, 4, 2], [1, 2, 1]] with shape (1, 3, 3)
        f = f[None, None, :] * f[None, :, None]
        return filter2d(x, f, normalized=True)
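The 3x3 kernel that `Blur` builds is the outer product of `[1, 2, 1]` with itself, and it is passed to kornia's `filter2d` with `normalized=True`, which, as we understand it, rescales the kernel so its entries sum to 1. A stdlib sketch of the kernel construction; `blur_kernel` and `normalize` are illustrative helper names.

```python
def blur_kernel(taps=(1, 2, 1)):
    """Outer product of a 1-D binomial filter with itself, as in Blur.forward."""
    return [[a * b for b in taps] for a in taps]

def normalize(kernel):
    """Rescale so the entries sum to 1 (assumed equivalent to filter2d's normalized=True)."""
    total = sum(sum(row) for row in kernel)
    return [[v / total for v in row] for row in kernel]

k = blur_kernel()
print(k)                   # [[1, 2, 1], [2, 4, 2], [1, 2, 1]]
print(normalize(k)[1][1])  # 0.25, i.e. 4 / 16
```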

# attention

# depthwise separable convolution: a per-channel (grouped) convolution followed by a 1x1 pointwise convolution

class DepthWiseConv2d(nn.Module):
    def __init__(self, dim_in, dim_out, kernel_size, padding = 0, stride = 1, bias = True):
        super().__init__()
        self.net = nn.Sequential(
            nn.Conv2d(dim_in, dim_in, kernel_size = kernel_size, padding = padding, groups = dim_in, stride = stride, bias = bias),
            nn.Conv2d(dim_in, dim_out, kernel_size = 1, bias = bias)
        )

    def forward(self, x):
        return self.net(x)

# linear attention over the pixels of a feature map

class LinearAttention(nn.Module):
    def __init__(self, dim, dim_head = 64, heads = 8):
        super().__init__()
        # scaling factor for the queries
        self.scale = dim_head ** -0.5
        self.heads = heads
        inner_dim = dim_head * heads
        # GELU nonlinearity applied to the attention output
        self.nonlin = nn.GELU()
        # 1x1 convolution producing the queries
        self.to_q = nn.Conv2d(dim, inner_dim, 1, bias = False)
        # depthwise separable 3x3 convolution producing keys and values
        self.to_kv = DepthWiseConv2d(dim, inner_dim * 2, 3, padding = 1, bias = False)
        # 1x1 output projection
        self.to_out = nn.Conv2d(inner_dim, dim, 1)

    def forward(self, fmap):
        # number of heads and the spatial size (x, y) of the feature map
        h, x, y = self.heads, *fmap.shape[-2:]
        # queries from to_q; keys and values by splitting the to_kv output along channels
        q, k, v = (self.to_q(fmap), *self.to_kv(fmap).chunk(2, dim = 1))
        # fold heads into the batch and flatten pixels into a sequence: (b*h, x*y, c)
        q, k, v = map(lambda t: rearrange(t, 'b (h c) x y -> (b h) (x y) c', h = h), (q, k, v))
        # softmax the queries over the feature dimension
        q = q.softmax(dim = -1)
        # softmax the keys over the sequence (pixel) dimension
        k = k.softmax(dim = -2)
        q = q * self.scale
        # global context: a (d x e) summary of all positions, computed in time linear in the number of pixels
        context = einsum('b n d, b n e -> b d e', k, v)
        # every query reads from the shared context
        out = einsum('b n d, b d e -> b n e', q, context)
        # restore (b, h*d, x, y)
        out = rearrange(out, '(b h) (x y) d -> b (h d) x y', h = h, x = x, y = y)
        out = self.nonlin(out)
        return self.to_out(out)

# attention + feedforward block for a feature map with chan channels

attn_and_ff = lambda chan: nn.Sequential(*[
    # residual, pre-normalized linear attention
    Residual(PreNorm(chan, LinearAttention(chan))),
    # residual, pre-normalized feedforward of 1x1 convolutions (expand to 2x channels, then project back)
    Residual(PreNorm(chan, nn.Sequential(nn.Conv2d(chan, chan * 2, 1), leaky_relu(), nn.Conv2d(chan * 2, chan, 1))))
])
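`LinearAttention` avoids the n x n attention matrix: with queries softmaxed over features and keys softmaxed over positions, it first builds a d x e context from keys and values and then multiplies the queries into it. By associativity this equals `(q k^T) v`. A single-head stdlib sketch that checks that identity; it is a simplification that leaves out the convolutions, the query scale and the GELU, and all helper names are illustrative.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(v - m) for v in xs]
    s = sum(exps)
    return [e / s for e in exps]

def transpose(a):
    return [list(col) for col in zip(*a)]

def matmul(a, b):
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def linear_attention(q, k, v):
    """Single-head sketch on n x d lists: returns the output plus the normalized q and k."""
    q = [softmax(row) for row in q]                        # softmax over features
    k = transpose([softmax(col) for col in transpose(k)])  # softmax over positions
    context = matmul(transpose(k), v)                      # d x e, size independent of n
    return matmul(q, context), q, k

q = [[0.1, 0.2], [0.3, -0.1], [0.0, 0.5]]
k = [[0.2, 0.1], [-0.3, 0.4], [0.5, 0.0]]
v = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, qs, ks = linear_attention(q, k, v)

# the n x n route that linear attention never materializes
quadratic = matmul(matmul(qs, transpose(ks)), v)
```

The context costs O(n·d·e) to build instead of the O(n²) score matrix, which is what makes attention affordable on full-resolution feature maps.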

# helper functions

# True if val is not None

def exists(val):
    return val is not None

# context manager that does nothing

@contextmanager
def null_context():
    yield

# combine several context-manager factories into a single one

def combine_contexts(contexts):
    @contextmanager
    def multi_contexts():
        with ExitStack() as stack:
            yield [stack.enter_context(ctx()) for ctx in contexts]
    return multi_contexts

# return value if it is not None, otherwise the default d

def default(value, d):
    return value if exists(value) else d

# iterate over an iterable forever (e.g. a DataLoader)

def cycle(iterable):
    while True:
        for i in iterable:
            yield i

# wrap a non-list value in a list

def cast_list(el):
    return el if isinstance(el, list) else [el]

# True for an empty tensor or for None

def is_empty(t):
    if isinstance(t, torch.Tensor):
        return t.nelement() == 0
    return not exists(t)

# raise NanException if a (scalar) tensor is NaN

def raise_if_nan(t):
    if torch.isnan(t):
        raise NanException

# yield once per gradient-accumulation step; under DDP every step except the last runs inside no_sync(), so gradients are all-reduced only once

def gradient_accumulate_contexts(gradient_accumulate_every, is_ddp, ddps):
    if is_ddp:
        num_no_syncs = gradient_accumulate_every - 1
        # a list, not a one-shot map iterator, so the combined context can be re-entered on every step
        head = [combine_contexts([ddp.no_sync for ddp in ddps])] * num_no_syncs
        tail = [null_context]
        contexts = head + tail
    else:
        contexts = [null_context] * gradient_accumulate_every

    for context in contexts:
        with context():
            yield
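The accumulation loop can be checked without GPUs. The sketch below replaces DDP with a hypothetical `FakeDDP` class whose `no_sync()` only flips a flag, and copies the helper logic into stdlib code. Note that `combine_contexts` must receive a re-iterable collection: a one-shot `map` iterator would be exhausted after the first micro-step, so the sketch materializes it with `list(...)`.

```python
from contextlib import contextmanager, ExitStack

class FakeDDP:
    """Hypothetical stand-in for a DDP module: logs whether each backward ran inside no_sync()."""
    def __init__(self):
        self.syncing = True
        self.log = []

    @contextmanager
    def no_sync(self):
        self.syncing = False
        try:
            yield
        finally:
            self.syncing = True

    def backward(self):
        self.log.append('sync' if self.syncing else 'no_sync')

@contextmanager
def null_context():
    yield

def combine_contexts(contexts):
    contexts = list(contexts)  # materialize so the combined context can be entered repeatedly
    @contextmanager
    def multi_contexts():
        with ExitStack() as stack:
            yield [stack.enter_context(ctx()) for ctx in contexts]
    return multi_contexts

def gradient_accumulate_contexts(gradient_accumulate_every, is_ddp, ddps):
    if is_ddp:
        head = [combine_contexts(ddp.no_sync for ddp in ddps)] * (gradient_accumulate_every - 1)
        contexts = head + [null_context]
    else:
        contexts = [null_context] * gradient_accumulate_every
    for context in contexts:
        with context():
            yield

ddps = [FakeDDP(), FakeDDP()]
for _ in gradient_accumulate_contexts(4, True, ddps):
    for ddp in ddps:
        ddp.backward()

print(ddps[0].log)  # ['no_sync', 'no_sync', 'no_sync', 'sync']
```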

# backpropagate a loss, scaling it through apex amp when fp16 is enabled

def loss_backwards(fp16, loss, optimizer, loss_id, **kwargs):
    if fp16:
        with amp.scale_loss(loss, optimizer, loss_id) as scaled_loss:
            scaled_loss.backward(**kwargs)
    else:
        loss.backward(**kwargs)

# gradient penalty: push the norm of d(output)/d(images) toward 1 (WGAN-GP style)

def gradient_penalty(images, output, weight = 10):
    batch_size = images.shape[0]
    gradients = torch_grad(outputs=output, inputs=images,
                           grad_outputs=torch.ones(output.size(), device=images.device),
                           create_graph=True, retain_graph=True, only_inputs=True)[0]

    gradients = gradients.reshape(batch_size, -1)
    return weight * ((gradients.norm(2, dim=1) - 1) ** 2).mean()

# path lengths for path length regularization: the norm of the gradient of (images * random noise) with respect to the styles

def calc_pl_lengths(styles, images):
    device = images.device
    num_pixels = images.shape[2] * images.shape[3]
    # noise scaled by 1/sqrt(number of pixels) so the result does not grow with resolution
    pl_noise = torch.randn(images.shape, device=device) / math.sqrt(num_pixels)
    outputs = (images * pl_noise).sum()

    pl_grads = torch_grad(outputs=outputs, inputs=styles,
                          grad_outputs=torch.ones(outputs.shape, device=device),
                          create_graph=True, retain_graph=True, only_inputs=True)[0]

    return (pl_grads ** 2).sum(dim=2).mean(dim=1).sqrt()

# latent vectors z of shape (n, latent_dim) on the given GPU

def noise(n, latent_dim, device):
    return torch.randn(n, latent_dim).cuda(device)

# a single latent shared by all `layers` generator layers

def noise_list(n, layers, latent_dim, device):
    return [(noise(n, latent_dim, device), layers)]

# style mixing: two latents, the first driving the first tt layers and the second the remaining layers - tt

def mixed_list(n, layers, latent_dim, device):
    tt = int(torch.rand(()).numpy() * layers)
    return noise_list(n, tt, latent_dim, device) + noise_list(n, layers - tt, latent_dim, device)

# map each latent z through the style network to w, keeping its layer count

def latent_to_w(style_vectorizer, latent_descr):
    return [(style_vectorizer(z), num_layers) for z, num_layers in latent_descr]

# per-pixel noise images of shape (n, im_size, im_size, 1), uniform in [0, 1)

def image_noise(n, im_size, device):
    return torch.FloatTensor(n, im_size, im_size, 1).uniform_(0., 1.).cuda(device)

# leaky ReLU activation (negative slope 0.2, in place)

def leaky_relu(p=0.2):
    return nn.LeakyReLU(p, inplace=True)

# run the model on its inputs in chunks of at most max_batch_size and concatenate the outputs

def evaluate_in_chunks(max_batch_size, model, *args):
    split_args = list(zip(*list(map(lambda x: x.split(max_batch_size, dim=0), args))))
    chunked_outputs = [model(*i) for i in split_args]
    if len(chunked_outputs) == 1:
        return chunked_outputs[0]
    return torch.cat(chunked_outputs, dim=0)

# expand a style definition [(w, num_layers), ...] into a tensor of shape (batch, total_layers, latent_dim)

def styles_def_to_tensor(styles_def):
    return torch.cat([t[:, None, :].expand(-1, n, -1) for t, n in styles_def], dim=1)
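Together, `mixed_list` and `styles_def_to_tensor` give every generator layer its own style vector, switching from the first latent to the second at a random crossover. A stdlib sketch for a single sample, assuming a 6-layer generator (the layer count for 128 px images); `mixed_layer_counts` and `expand_styles` are illustrative names, and the strings `'w1'`, `'w2'` stand in for the style vectors.

```python
import random

def mixed_layer_counts(layers, rng=random):
    """Same split as mixed_list: the first latent drives tt layers, the second the rest."""
    tt = int(rng.random() * layers)
    return [tt, layers - tt]

def expand_styles(styles_def):
    """Stdlib analogue of styles_def_to_tensor for one sample: repeat each w for its layer count."""
    per_layer = []
    for w, n in styles_def:
        per_layer.extend([w] * n)
    return per_layer

rng = random.Random(0)
counts = mixed_layer_counts(6, rng)
per_layer = expand_styles([('w1', counts[0]), ('w2', counts[1])])
print(counts, per_layer)  # one entry per layer, switching from 'w1' to 'w2' at the crossover
```

Since `tt` can be 0, a "mixed" batch occasionally uses only the second latent.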

# enable or disable gradients for all parameters of a model

def set_requires_grad(model, bool):
    for p in model.parameters():
        p.requires_grad = bool

# spherical linear interpolation (slerp) between the rows of low and high

def slerp(val, low, high):
    low_norm = low / torch.norm(low, dim=1, keepdim=True)
    high_norm = high / torch.norm(high, dim=1, keepdim=True)
    # angle between the two directions: acos of their cosine similarity
    omega = torch.acos((low_norm * high_norm).sum(1))
    so = torch.sin(omega)
    # weights sin((1 - val) * omega) / sin(omega) and sin(val * omega) / sin(omega)
    res = (torch.sin((1.0 - val) * omega) / so).unsqueeze(1) * low + (torch.sin(val * omega) / so).unsqueeze(1) * high
    return res
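A stdlib version of the same slerp formula for two plain vectors shows why it is used for latent interpolation: the midpoint of two orthogonal unit vectors stays on the unit circle, whereas the linear midpoint `[0.5, 0.5]` has norm about 0.707. Like the source, it divides by `sin(omega)` and so is undefined for parallel vectors.

```python
import math

def slerp(val, low, high):
    """Stdlib slerp for two vectors given as lists (assumes they are not parallel)."""
    norm = lambda v: math.sqrt(sum(x * x for x in v))
    cos = sum(a * b for a, b in zip(low, high)) / (norm(low) * norm(high))
    omega = math.acos(cos)
    so = math.sin(omega)
    w_low = math.sin((1.0 - val) * omega) / so
    w_high = math.sin(val * omega) / so
    return [w_low * a + w_high * b for a, b in zip(low, high)]

mid = slerp(0.5, [1.0, 0.0], [0.0, 1.0])
print(mid)  # ≈ [0.7071, 0.7071]
```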

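# losses

# generator hinge loss: the mean discriminator score of the fakes (lower scores mean "more real" in this code, so the generator minimizes it)

def gen_hinge_loss(fake, real):
    return fake.mean()

# discriminator hinge loss: pushes real scores to -1 or below and fake scores to +1 or above

def hinge_loss(real, fake):
    return (F.relu(1 + real) + F.relu(1 - fake)).mean()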
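Plugging numbers into `hinge_loss` makes the sign convention concrete: the discriminator loss is zero once reals score at or below -1 and fakes at or above +1, and each sample still inside the margin contributes linearly. A stdlib sketch over lists of scores (elementwise, then mean, as the tensor version does for equally shaped inputs):

```python
def relu(x):
    return max(0.0, x)

def hinge_loss(real, fake):
    """Stdlib version of hinge_loss for equal-length lists of scores."""
    return sum(relu(1 + r) + relu(1 - f) for r, f in zip(real, fake)) / len(real)

def gen_hinge_loss(fake, real):
    return sum(fake) / len(fake)

print(hinge_loss([-2.0, -1.5], [1.0, 3.0]))  # 0.0: every score is outside the margin
print(hinge_loss([0.0, 0.0], [0.0, 0.0]))    # 2.0: each pair contributes 1 + 1
```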

# dual contrastive loss: each real logit must stand out against all fake logits, and each negated fake logit against all negated real logits

def dual_contrastive_loss(real_logits, fake_logits):
    device = real_logits.device
    # flatten both sets of logits to 1-D
    real_logits, fake_logits = map(lambda t: rearrange(t, '... -> (...)'), (real_logits, fake_logits))

    # cross-entropy where, for each t1[i], the correct "class" is t1[i] itself (index 0) and the competitors are all of t2
    def loss_half(t1, t2):
        t1 = rearrange(t1, 'i -> i ()')
        t2 = repeat(t2, 'j -> i j', i = t1.shape[0])
        t = torch.cat((t1, t2), dim = -1)
        return F.cross_entropy(t, torch.zeros(t1.shape[0], device = device, dtype = torch.long))

    # sum of the two halves
    return loss_half(real_logits, fake_logits) + loss_half(-fake_logits, -real_logits)
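Each `loss_half` is an ordinary cross-entropy whose target is always column 0. A stdlib sketch of the same computation (mean reduction, as in `F.cross_entropy`'s default): with all logits equal, each row is uniform over 1 + len(t2) entries, so each half equals log(3) for two reals and two fakes; well-separated logits drive the loss toward 0.

```python
import math

def cross_entropy_index0(row):
    """-log softmax(row)[0], computed stably."""
    m = max(row)
    log_sum = m + math.log(sum(math.exp(v - m) for v in row))
    return log_sum - row[0]

def loss_half(t1, t2):
    # each t1[i] competes against every element of t2; mean over i
    return sum(cross_entropy_index0([a] + list(t2)) for a in t1) / len(t1)

def dual_contrastive_loss(real_logits, fake_logits):
    return (loss_half(real_logits, fake_logits)
            + loss_half([-f for f in fake_logits], [-r for r in real_logits]))

print(dual_contrastive_loss([0.0, 0.0], [0.0, 0.0]))    # 2 * log(3) ≈ 2.1972
print(dual_contrastive_loss([5.0, 5.0], [-5.0, -5.0]))  # close to 0
```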

# dataset

# convert an image to RGBA if it is not already

def convert_rgb_to_transparent(image):
    if image.mode != 'RGBA':
        return image.convert('RGBA')
    return image

# convert an image to RGB if it is not already

def convert_transparent_to_rgb(image):
    if image.mode != 'RGB':
        return image.convert('RGB')
    return image

# expand greyscale tensors (1 channel, or greyscale + alpha) to 3 colour channels, adding or keeping an alpha channel when transparent is set

class expand_greyscale(object):
    def __init__(self, transparent):
        self.transparent = transparent

    def __call__(self, tensor):
        channels = tensor.shape[0]
        num_target_channels = 4 if self.transparent else 3

        if channels == num_target_channels:
            return tensor

        alpha = None
        if channels == 1:
            color = tensor.expand(3, -1, -1)
        elif channels == 2:
            color = tensor[:1].expand(3, -1, -1)
            alpha = tensor[1:]
        else:
            raise Exception(f'image with invalid number of channels given {channels}')

        if not exists(alpha) and self.transparent:
            alpha = torch.ones(1, *tensor.shape[1:], device=tensor.device)

        return color if not self.transparent else torch.cat((color, alpha))

# upscale images whose longer side is smaller than min_size

def resize_to_minimum_size(min_size, image):
    if max(*image.size) < min_size:
        return torchvision.transforms.functional.resize(image, min_size)
    return image

# image-folder dataset

class Dataset(data.Dataset):
    def __init__(self, folder, image_size, transparent = False, aug_prob = 0.):
        super().__init__()
        self.folder = folder
        self.image_size = image_size
        # recursively collect every file with a supported extension
        self.paths = [p for ext in EXTS for p in Path(f'{folder}').glob(f'**/*.{ext}')]
        assert len(self.paths) > 0, f'No images were found in {folder} for training'

        convert_image_fn = convert_transparent_to_rgb if not transparent else convert_rgb_to_transparent
        num_channels = 3 if not transparent else 4

        # convert the mode, resize, then take a random resized crop with probability aug_prob (otherwise a centre crop)
        self.transform = transforms.Compose([
            transforms.Lambda(convert_image_fn),
            transforms.Lambda(partial(resize_to_minimum_size, image_size)),
            transforms.Resize(image_size),
            RandomApply(aug_prob, transforms.RandomResizedCrop(image_size, scale=(0.5, 1.0), ratio=(0.98, 1.02)), transforms.CenterCrop(image_size)),
            transforms.ToTensor(),
            transforms.Lambda(expand_greyscale(transparent))
        ])

    def __len__(self):
        return len(self.paths)

    def __getitem__(self, index):
        path = self.paths[index]
        img = Image.open(path)
        return self.transform(img)

# augmentations

# flip a batch horizontally (along the width axis) with probability prob

def random_hflip(tensor, prob):
    if prob < random():
        return tensor
    return torch.flip(tensor, dims=(3,))

# wrap the discriminator D: with probability prob, augment its input (random flip + DiffAugment) before scoring it

class AugWrapper(nn.Module):
    def __init__(self, D, image_size):
        super().__init__()
        self.D = D

    def forward(self, images, prob = 0., types = [], detach = False):
        if random() < prob:
            images = random_hflip(images, prob=0.5)
            images = DiffAugment(images, types=types)

        if detach:
            images = images.detach()

        return self.D(images)

# stylegan2 classes

# linear layer whose weight and bias are multiplied by lr_mul at run time (used with lr_mul = lr_mlp in the mapping network)

class EqualLinear(nn.Module):
    def __init__(self, in_dim, out_dim, lr_mul = 1, bias = True):
        super().__init__()
        self.weight = nn.Parameter(torch.randn(out_dim, in_dim))
        # store None when the bias is disabled, so forward does not fail on a missing attribute
        self.bias = nn.Parameter(torch.zeros(out_dim)) if bias else None
        self.lr_mul = lr_mul

    def forward(self, input):
        # scale the weight and (if present) the bias by the learning-rate multiplier
        bias = self.bias * self.lr_mul if exists(self.bias) else None
        return F.linear(input, self.weight * self.lr_mul, bias=bias)


# style vectorizer (mapping network): depth x (EqualLinear + leaky ReLU), mapping a normalized latent z to a style vector w

class StyleVectorizer(nn.Module):
    def __init__(self, emb, depth, lr_mul = 0.1):
        super().__init__()
        layers = []
        for i in range(depth):
            layers.extend([EqualLinear(emb, emb, lr_mul), leaky_relu()])
        self.net = nn.Sequential(*layers)

    def forward(self, x):
        # L2-normalize the input latent along the feature dimension
        x = F.normalize(x, dim=1)
        return self.net(x)


# RGB block: a 1x1 modulated convolution (without demodulation) that turns features into an RGB(A) image, adds the previous RGB output and optionally upsamples

class RGBBlock(nn.Module):
    def __init__(self, latent_dim, input_channel, upsample, rgba = False):
        super().__init__()
        self.input_channel = input_channel
        self.to_style = nn.Linear(latent_dim, input_channel)

        # 3 output channels, or 4 when an alpha channel is used
        out_filters = 3 if not rgba else 4
        self.conv = Conv2DMod(input_channel, out_filters, 1, demod=False)

        # bilinear 2x upsampling followed by a blur, only if upsampling is requested
        self.upsample = nn.Sequential(
            nn.Upsample(scale_factor = 2, mode='bilinear', align_corners=False),
            Blur()
        ) if upsample else None

    def forward(self, x, prev_rgb, istyle):
        b, c, h, w = x.shape
        style = self.to_style(istyle)
        x = self.conv(x, style)

        # skip connection: add the RGB output of the previous block
        if exists(prev_rgb):
            x = x + prev_rgb

        if exists(self.upsample):
            x = self.upsample(x)

        return x


# modulated convolution: per-sample weights are the shared weight scaled by (style + 1), optionally demodulated

class Conv2DMod(nn.Module):
    def __init__(self, in_chan, out_chan, kernel, demod=True, stride=1, dilation=1, eps = 1e-8, **kwargs):
        super().__init__()
        self.filters = out_chan
        self.demod = demod
        self.kernel = kernel
        self.stride = stride
        self.dilation = dilation
        self.weight = nn.Parameter(torch.randn((out_chan, in_chan, kernel, kernel)))
        self.eps = eps
        nn.init.kaiming_normal_(self.weight, a=0, mode='fan_in', nonlinearity='leaky_relu')

    # padding that keeps the spatial size unchanged (stride only enters this formula; the convolution below runs with stride 1)
    def _get_same_padding(self, size, kernel, dilation, stride):
        return ((size - 1) * (stride - 1) + dilation * (kernel - 1)) // 2

    def forward(self, x, y):
        b, c, h, w = x.shape

        # modulation: scale the input channels of the weight by (style + 1), separately for each sample
        w1 = y[:, None, :, None, None]
        w2 = self.weight[None, :, :, :, :]
        weights = w2 * (w1 + 1)

        # demodulation: rescale each output filter of each sample to unit L2 norm
        if self.demod:
            d = torch.rsqrt((weights ** 2).sum(dim=(2, 3, 4), keepdim=True) + self.eps)
            weights = weights * d

        # grouped-convolution trick: fold the batch into the channel axis so each sample uses its own weights
        x = x.reshape(1, -1, h, w)

        _, _, *ws = weights.shape
        weights = weights.reshape(b * self.filters, *ws)

        padding = self._get_same_padding(h, self.kernel, self.dilation, self.stride)
        x = F.conv2d(x, weights, padding=padding, groups=b)

        x = x.reshape(-1, self.filters, h, w)
        return x
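The two weight transformations in `Conv2DMod.forward` can be checked on small lists. The sketch below assumes one sample and a kernel flattened to a list of taps; `same_padding` and `modulate` are illustrative names. After demodulation every output filter has (almost exactly) unit L2 norm, which is what keeps activation magnitudes stable regardless of the style; a zero style leaves the weights unmodulated because of the `+ 1`.

```python
import math

def same_padding(size, kernel, dilation=1, stride=1):
    # same formula as Conv2DMod._get_same_padding
    return ((size - 1) * (stride - 1) + dilation * (kernel - 1)) // 2

def modulate(weight, style, demod=True, eps=1e-8):
    """weight: out_chan x in_chan x taps (flattened kernel); style: in_chan values for ONE sample."""
    out = [[[w * (s + 1) for w in taps] for taps, s in zip(filt, style)] for filt in weight]
    if demod:
        for filt in out:
            d = 1 / math.sqrt(sum(w * w for taps in filt for w in taps) + eps)
            for taps in filt:
                taps[:] = [w * d for w in taps]
    return out

weight = [[[1.0, 2.0], [0.5, -1.0]], [[0.0, 1.0], [3.0, 0.0]]]  # 2 filters, 2 input channels, 2 taps
mod = modulate(weight, style=[0.5, -0.2])
norms = [math.sqrt(sum(w * w for taps in filt for w in taps)) for filt in mod]
print([same_padding(64, k) for k in (1, 3)])  # [0, 1]
print(norms)  # each ≈ 1.0
```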


# generator block: optional 2x upsampling, two modulated 3x3 convolutions each followed by added noise and leaky ReLU, then an RGB output

class GeneratorBlock(nn.Module):
    def __init__(self, latent_dim, input_channels, filters, upsample = True, upsample_rgb = True, rgba = False):
        super().__init__()
        self.upsample = nn.Upsample(scale_factor=2, mode='bilinear', align_corners=False) if upsample else None

        self.to_style1 = nn.Linear(latent_dim, input_channels)
        self.to_noise1 = nn.Linear(1, filters)
        self.conv1 = Conv2DMod(input_channels, filters, 3)

        self.to_style2 = nn.Linear(latent_dim, filters)
        self.to_noise2 = nn.Linear(1, filters)
        self.conv2 = Conv2DMod(filters, filters, 3)

        self.activation = leaky_relu()
        self.to_rgb = RGBBlock(latent_dim, filters, upsample_rgb, rgba)

    def forward(self, x, prev_rgb, istyle, inoise):
        if exists(self.upsample):
            x = self.upsample(x)

        # crop the (n, size, size, 1) noise image to the current resolution
        inoise = inoise[:, :x.shape[2], :x.shape[3], :]
        # project the single noise channel to per-channel noise, then move channels first
        noise1 = self.to_noise1(inoise).permute((0, 3, 2, 1))
        noise2 = self.to_noise2(inoise).permute((0, 3, 2, 1))

        style1 = self.to_style1(istyle)
        x = self.conv1(x, style1)
        x = self.activation(x + noise1)

        style2 = self.to_style2(istyle)
        x = self.conv2(x, style2)
        x = self.activation(x + noise2)

        rgb = self.to_rgb(x, prev_rgb, istyle)
        return x, rgb


# discriminator block: two 3x3 convolutions, optional blurred stride-2 downsampling, and a 1x1 residual shortcut

class DiscriminatorBlock(nn.Module):
    def __init__(self, input_channels, filters, downsample=True):
        super().__init__()
        # residual shortcut: 1x1 convolution matching the channel count, with stride 2 when downsampling
        self.conv_res = nn.Conv2d(input_channels, filters, 1, stride = (2 if downsample else 1))

        # main branch: two 3x3 convolutions with leaky ReLU
        self.net = nn.Sequential(
            nn.Conv2d(input_channels, filters, 3, padding=1),
            leaky_relu(),
            nn.Conv2d(filters, filters, 3, padding=1),
            leaky_relu()
        )

        # downsampling: blur, then a stride-2 3x3 convolution
        self.downsample = nn.Sequential(
            Blur(),
            nn.Conv2d(filters, filters, 3, padding = 1, stride = 2)
        ) if downsample else None

    def forward(self, x):
        res = self.conv_res(x)
        x = self.net(x)
        if exists(self.downsample):
            x = self.downsample(x)
        # add the shortcut and scale by 1/sqrt(2) to keep the variance of the sum roughly unchanged
        x = (x + res) * (1 / math.sqrt(2))
        return x
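The `1 / sqrt(2)` factor is a variance argument: adding two independent unit-variance signals doubles the variance, and dividing by `sqrt(2)` brings it back to 1. The sketch below simulates that with Gaussian samples standing in for the two branches; the independence is an assumption of the illustration, since the real branches are computed from the same input.

```python
import math
import random

def variance(xs):
    m = sum(xs) / len(xs)
    return sum((x - m) ** 2 for x in xs) / len(xs)

rng = random.Random(0)
n = 100_000
a = [rng.gauss(0, 1) for _ in range(n)]  # stand-in for the main branch
b = [rng.gauss(0, 1) for _ in range(n)]  # stand-in for the shortcut, assumed independent

plain = [x + y for x, y in zip(a, b)]
scaled = [(x + y) / math.sqrt(2) for x, y in zip(a, b)]

print(round(variance(plain), 2), round(variance(scaled), 2))  # close to 2 and close to 1
```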

class Generator(nn.Module):
    # image_size: output resolution; latent_dim: style dimension; network_capacity: base channel multiplier;
    # transparent: output RGBA; attn_layers: layer numbers that get attention;
    # no_const: derive the 4x4 input from the styles instead of a learned constant; fmap_max: channel cap
    def __init__(self, image_size, latent_dim, network_capacity = 16, transparent = False, attn_layers = [], no_const = False, fmap_max = 512):
        super().__init__()
        self.image_size = image_size
        self.latent_dim = latent_dim
        # one block per resolution, from 4x4 up to image_size
        self.num_layers = int(log2(image_size) - 1)

        # channels per block, largest at low resolution, capped at fmap_max
        filters = [network_capacity * (2 ** (i + 1)) for i in range(self.num_layers)][::-1]

        set_fmap_max = partial(min, fmap_max)
        filters = list(map(set_fmap_max, filters))
        init_channels = filters[0]
        filters = [init_channels, *filters]

        in_out_pairs = zip(filters[:-1], filters[1:])
        self.no_const = no_const

        if no_const:
            # project the averaged style to a 4x4 feature map
            self.to_initial_block = nn.ConvTranspose2d(latent_dim, init_channels, 4, 1, 0, bias=False)
        else:
            # learned constant 4x4 input
            self.initial_block = nn.Parameter(torch.randn((1, init_channels, 4, 4)))

        self.initial_conv = nn.Conv2d(filters[0], filters[0], 3, padding=1)
        self.blocks = nn.ModuleList([])
        self.attns = nn.ModuleList([])

        for ind, (in_chan, out_chan) in enumerate(in_out_pairs):
            not_first = ind != 0
            not_last = ind != (self.num_layers - 1)
            # layers are numbered from the output end: the last (highest-resolution) block is layer 1
            num_layer = self.num_layers - ind

            attn_fn = attn_and_ff(in_chan) if num_layer in attn_layers else None
            self.attns.append(attn_fn)

            block = GeneratorBlock(
                latent_dim,
                in_chan,
                out_chan,
                upsample = not_first,
                upsample_rgb = not_last,
                rgba = transparent
            )
            self.blocks.append(block)

    # styles: (batch, num_layers, latent_dim); input_noise: (batch, image_size, image_size, 1)
    def forward(self, styles, input_noise):
        batch_size = styles.shape[0]
        image_size = self.image_size

        if self.no_const:
            avg_style = styles.mean(dim=1)[:, :, None, None]
            x = self.to_initial_block(avg_style)
        else:
            x = self.initial_block.expand(batch_size, -1, -1, -1)

        rgb = None
        # iterate over layers: (num_layers, batch, latent_dim)
        styles = styles.transpose(0, 1)
        x = self.initial_conv(x)

        for style, block, attn in zip(styles, self.blocks, self.attns):
            if exists(attn):
                x = attn(x)
            x, rgb = block(x, rgb, style, input_noise)

        return rgb
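The channel schedule and layer numbering in `Generator.__init__` can be recomputed without PyTorch. The stdlib sketch below (`generator_schedule` is an illustrative name) repeats the same arithmetic and tracks the resolution each block outputs, starting from the 4x4 input; the layer numbers are the ones `attn_layers` refers to.

```python
from math import log2

def generator_schedule(image_size, network_capacity=16, fmap_max=512):
    """Recompute Generator's (in_chan, out_chan, output resolution, layer number) per block."""
    num_layers = int(log2(image_size) - 1)
    filters = [network_capacity * (2 ** (i + 1)) for i in range(num_layers)][::-1]
    filters = [min(fmap_max, f) for f in filters]
    filters = [filters[0], *filters]
    schedule, res = [], 4
    for ind, (in_chan, out_chan) in enumerate(zip(filters[:-1], filters[1:])):
        if ind != 0:  # every block except the first upsamples 2x
            res *= 2
        schedule.append((in_chan, out_chan, res, num_layers - ind))
    return schedule

for row in generator_schedule(128):
    print(row)
# (512, 512, 4, 6)
# (512, 512, 8, 5)
# (512, 256, 16, 4)
# (256, 128, 32, 3)
# (128, 64, 64, 2)
# (64, 32, 128, 1)
```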

class Discriminator(nn.Module):
    # fq_layers: layer numbers followed by vector quantization; fq_dict_size: codebook size; the other arguments mirror Generator
    def __init__(self, image_size, network_capacity = 16, fq_layers = [], fq_dict_size = 256, attn_layers = [], transparent = False, fmap_max = 512):
        super().__init__()
        num_layers = int(log2(image_size) - 1)
        num_init_filters = 3 if not transparent else 4

        # channels double at every block, starting at 4 * network_capacity, capped at fmap_max
        filters = [num_init_filters] + [(network_capacity * 4) * (2 ** i) for i in range(num_layers + 1)]

        set_fmap_max = partial(min, fmap_max)
        filters = list(map(set_fmap_max, filters))
        chan_in_out = list(zip(filters[:-1], filters[1:]))

        blocks = []
        attn_blocks = []
        quantize_blocks = []

        for ind, (in_chan, out_chan) in enumerate(chan_in_out):
            # layers are numbered from the input end, starting at 1
            num_layer = ind + 1
            is_not_last = ind != (len(chan_in_out) - 1)

            # every block except the last halves the resolution
            block = DiscriminatorBlock(in_chan, out_chan, downsample = is_not_last)
            blocks.append(block)

            attn_fn = attn_and_ff(out_chan) if num_layer in attn_layers else None
            attn_blocks.append(attn_fn)

            quantize_fn = PermuteToFrom(VectorQuantize(out_chan, fq_dict_size)) if num_layer in fq_layers else None
            quantize_blocks.append(quantize_fn)

        self.blocks = nn.ModuleList(blocks)
        self.attn_blocks = nn.ModuleList(attn_blocks)
        self.quantize_blocks = nn.ModuleList(quantize_blocks)

        chan_last = filters[-1]
        # the final feature map is 2x2 spatially
        latent_dim = 2 * 2 * chan_last

        self.final_conv = nn.Conv2d(chan_last, chan_last, 3, padding=1)
        self.flatten = Flatten()
        self.to_logit = nn.Linear(latent_dim, 1)

    def forward(self, x):
        b, *_ = x.shape
        # accumulated vector-quantization loss, on the same device and with the same dtype as x
        quantize_loss = torch.zeros(1).to(x)

        for (block, attn_block, q_block) in zip(self.blocks, self.attn_blocks, self.quantize_blocks):
            x = block(x)

            if exists(attn_block):
                x = attn_block(x)

            if exists(q_block):
                x, loss = q_block(x)
                quantize_loss += loss

        x = self.final_conv(x)
        x = self.flatten(x)
        x = self.to_logit(x)
        # one logit per sample: squeeze (b, 1) to (b,)
        return x.squeeze(), quantize_loss
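The same kind of recomputation explains `latent_dim = 2 * 2 * chan_last` in the discriminator: there are `log2(image_size)` blocks, all but the last halve the resolution, so the final feature map is always 2x2. A stdlib sketch (`discriminator_schedule` is an illustrative name) listing (layer number, in_chan, out_chan, output resolution) and the input size of `to_logit`:

```python
from math import log2

def discriminator_schedule(image_size, network_capacity=16, fmap_max=512, transparent=False):
    num_layers = int(log2(image_size) - 1)
    filters = [4 if transparent else 3] + [(network_capacity * 4) * (2 ** i) for i in range(num_layers + 1)]
    filters = [min(fmap_max, f) for f in filters]
    pairs = list(zip(filters[:-1], filters[1:]))
    res, rows = image_size, []
    for ind, (in_chan, out_chan) in enumerate(pairs):
        if ind != len(pairs) - 1:  # every block except the last downsamples 2x
            res //= 2
        rows.append((ind + 1, in_chan, out_chan, res))
    return rows, 2 * 2 * filters[-1]

rows, to_logit_in = discriminator_schedule(128)
for row in rows:
    print(row)
print(to_logit_in)
# (1, 3, 64, 64)
# (2, 64, 128, 32)
# (3, 128, 256, 16)
# (4, 256, 512, 8)
# (5, 512, 512, 4)
# (6, 512, 512, 2)
# (7, 512, 512, 2)
# 2048
```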

# StyleGAN2: bundles the mapping network S, generator G, discriminator D, their EMA copies SE and GE, and the optimizers

class StyleGAN2(nn.Module):
    def __init__(self, image_size, latent_dim = 512, fmap_max = 512, style_depth = 8, network_capacity = 16, transparent = False, fp16 = False, cl_reg = False, steps = 1, lr = 1e-4, ttur_mult = 2, fq_layers = [], fq_dict_size = 256, attn_layers = [], no_const = False, lr_mlp = 0.1, rank = 0):
        super().__init__()
        # learning rate and training step counter
        self.lr = lr
        self.steps = steps
        # EMA helper with decay 0.995
        self.ema_updater = EMA(0.995)

        # mapping network, generator and discriminator
        self.S = StyleVectorizer(latent_dim, style_depth, lr_mul = lr_mlp)
        self.G = Generator(image_size, latent_dim, network_capacity, transparent = transparent, attn_layers = attn_layers, no_const = no_const, fmap_max = fmap_max)
        self.D = Discriminator(image_size, network_capacity, fq_layers = fq_layers, fq_dict_size = fq_dict_size, attn_layers = attn_layers, transparent = transparent, fmap_max = fmap_max)

        # EMA copies of the mapping network and generator; GE needs the same fmap_max as G, otherwise loading G's state dict into it fails
        self.SE = StyleVectorizer(latent_dim, style_depth, lr_mul = lr_mlp)
        self.GE = Generator(image_size, latent_dim, network_capacity, transparent = transparent, attn_layers = attn_layers, no_const = no_const, fmap_max = fmap_max)

        self.D_cl = None

        if cl_reg:
            # optional contrastive-learning regularization of the discriminator
            from contrastive_learner import ContrastiveLearner
            assert not transparent, 'contrastive loss regularization does not work with transparent images yet'
            self.D_cl = ContrastiveLearner(self.D, image_size, hidden_layer='flatten')

        # discriminator wrapper that applies augmentations
        self.D_aug = AugWrapper(self.D, image_size)

        # the EMA copies are never trained directly
        set_requires_grad(self.SE, False)
        set_requires_grad(self.GE, False)

        # optimizers: G and S share one; D's learning rate is multiplied by ttur_mult (two time-scale update rule)
        generator_params = list(self.G.parameters()) + list(self.S.parameters())
        self.G_opt = Adam(generator_params, lr = self.lr, betas=(0.5, 0.9))
        self.D_opt = Adam(self.D.parameters(), lr = self.lr * ttur_mult, betas=(0.5, 0.9))

        # initialize the weights, then copy them into the EMA models
        self._init_weights()
        self.reset_parameter_averaging()

        # move everything to this process's GPU
        self.cuda(rank)

        # mixed-precision training via apex amp
        if fp16:
            (self.S, self.G, self.D, self.SE, self.GE), (self.G_opt, self.D_opt) = amp.initialize([self.S, self.G, self.D, self.SE, self.GE], [self.G_opt, self.D_opt], opt_level='O1', num_losses=3)

    def _init_weights(self):
        # Kaiming-normal initialization for every Conv2d and Linear layer
        for m in self.modules():
            if type(m) in {nn.Conv2d, nn.Linear}:
                nn.init.kaiming_normal_(m.weight, a=0, mode='fan_in', nonlinearity='leaky_relu')

        # zero the noise projections, so the generator starts without injected noise
        for block in self.G.blocks:
            nn.init.zeros_(block.to_noise1.weight)
            nn.init.zeros_(block.to_noise2.weight)
            nn.init.zeros_(block.to_noise1.bias)
            nn.init.zeros_(block.to_noise2.bias)

    def EMA(self):
        # move every EMA parameter toward the corresponding live parameter
        def update_moving_average(ma_model, current_model):
            for current_params, ma_params in zip(current_model.parameters(), ma_model.parameters()):
                old_weight, up_weight = ma_params.data, current_params.data
                ma_params.data = self.ema_updater.update_average(old_weight, up_weight)

        update_moving_average(self.SE, self.S)
        update_moving_average(self.GE, self.G)

    def reset_parameter_averaging(self):
        # copy the current weights into the EMA models
        self.SE.load_state_dict(self.S.state_dict())
        self.GE.load_state_dict(self.G.state_dict())

    def forward(self, x):
        # identity placeholder: training calls the sub-modules directly
        return x

class Trainer():
    # Holds the training configuration and builds the StyleGAN2 model
    def __init__(
        self,
        name = 'default',                    # run name; also names the per-run subfolders
        results_dir = 'results',             # directory for generated samples
        models_dir = 'models',               # directory for checkpoints and config
        base_dir = './',                     # base directory for all of the above
        image_size = 128,                    # image resolution; must be a power of 2
        network_capacity = 16,               # base channel multiplier of the networks
        fmap_max = 512,                      # maximum number of feature-map channels
        transparent = False,                 # whether images have an alpha channel
        batch_size = 4,                      # batch size
        mixed_prob = 0.9,                    # probability of style mixing
        gradient_accumulate_every = 1,       # gradient-accumulation steps
        lr = 2e-4,                           # learning rate
        lr_mlp = 0.1,                        # learning-rate multiplier for the mapping network (MLP)
        ttur_mult = 2,                       # two time-scale update rule (TTUR) multiplier
        rel_disc_loss = False,               # use a relativistic discriminator loss
        num_workers = None,                  # data-loader workers (None = choose automatically)
        save_every = 1000,                   # save a checkpoint every N steps
        evaluate_every = 1000,               # generate evaluation samples every N steps
        num_image_tiles = 8,                 # number of image tiles in sample grids
        trunc_psi = 0.6,                     # truncation-trick psi
        fp16 = False,                        # mixed-precision training (requires Apex)
        cl_reg = False,                      # contrastive-loss regularization
        no_pl_reg = False,                   # disable path-length regularization
        fq_layers = [],                      # layers that use feature quantization (FQ)
        fq_dict_size = 256,                  # FQ codebook size
        attn_layers = [],                    # layers that get attention
        no_const = False,                    # don't use a learned constant as the generator input
        aug_prob = 0.,                       # probability of applying augmentation
        aug_types = ['translation', 'cutout'],  # augmentation types
        top_k_training = False,              # enable top-k training
        generator_top_k_gamma = 0.99,        # top-k decay rate
        generator_top_k_frac = 0.5,          # minimum fraction kept by top-k
        dual_contrast_loss = False,          # use the dual contrastive loss
        dataset_aug_prob = 0.,               # augmentation probability inside the Dataset
        calculate_fid_every = None,          # compute FID every N steps (None = never)
        calculate_fid_num_images = 12800,    # number of images used for FID
        clear_fid_cache = False,             # clear cached FID data
        is_ddp = False,                      # use DistributedDataParallel (multi-GPU)
        rank = 0,                            # process rank
        world_size = 1,                      # total number of processes
        log = False,                         # log the run with Aim
        *args,                               # extra positional args, forwarded to StyleGAN2
        **kwargs                             # extra keyword args, forwarded to StyleGAN2
    ):

        self.GAN_params = [args, kwargs]  # forwarded to StyleGAN2 in init_GAN
        self.GAN = None                   # built later by init_GAN

        self.name = name
        base_dir = Path(base_dir)
        self.base_dir = base_dir
        self.results_dir = base_dir / results_dir
        self.models_dir = base_dir / models_dir
        self.fid_dir = base_dir / 'fid' / name
        self.config_path = self.models_dir / name / '.config.json'

        assert log2(image_size).is_integer(), 'image size must be a power of 2 (64, 128, 256, 512, 1024)'
        self.image_size = image_size
        self.network_capacity = network_capacity
        self.fmap_max = fmap_max
        self.transparent = transparent

        self.fq_layers = cast_list(fq_layers)
        self.fq_dict_size = fq_dict_size
        self.has_fq = len(self.fq_layers) > 0

        self.attn_layers = cast_list(attn_layers)
        self.no_const = no_const

        self.aug_prob = aug_prob
        self.aug_types = aug_types

        self.lr = lr
        self.lr_mlp = lr_mlp
        self.ttur_mult = ttur_mult
        self.rel_disc_loss = rel_disc_loss
        self.batch_size = batch_size
        self.num_workers = num_workers
        self.mixed_prob = mixed_prob

        self.num_image_tiles = num_image_tiles
        self.evaluate_every = evaluate_every
        self.save_every = save_every
        self.steps = 0

        self.av = None                    # average style vector, used by the truncation trick
        self.trunc_psi = trunc_psi

        self.no_pl_reg = no_pl_reg
        self.pl_mean = None               # running mean of the path length

        self.gradient_accumulate_every = gradient_accumulate_every

        assert not fp16 or APEX_AVAILABLE, 'Apex is not available for you to use mixed precision training'
        self.fp16 = fp16

        self.cl_reg = cl_reg

        self.d_loss = 0
        self.g_loss = 0
        self.q_loss = None                # feature-quantization loss
        self.last_gp_loss = None          # most recent gradient-penalty loss
        self.last_cr_loss = None          # most recent contrastive-regularization loss
        self.last_fid = None              # most recent FID score

        self.pl_length_ma = EMA(0.99)     # moving average of the path length

        self.init_folders()

        self.loader = None
        self.dataset_aug_prob = dataset_aug_prob

        self.calculate_fid_every = calculate_fid_every
        self.calculate_fid_num_images = calculate_fid_num_images
        self.clear_fid_cache = clear_fid_cache

        self.top_k_training = top_k_training
        self.generator_top_k_gamma = generator_top_k_gamma
        self.generator_top_k_frac = generator_top_k_frac

        self.dual_contrast_loss = dual_contrast_loss

        assert not (is_ddp and cl_reg), 'Contrastive loss regularization does not work well with multi GPUs yet'
        self.is_ddp = is_ddp
        self.is_main = rank == 0          # rank 0 is the main process
        self.rank = rank
        self.world_size = world_size

        self.logger = aim.Session(experiment=name) if log else None  # optional Aim logger
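The constructor only stores `top_k_training`, `generator_top_k_gamma` and `generator_top_k_frac`; how the training loop uses them is not part of this excerpt. Assuming they follow the top-k GAN training idea (the generator learns only from the fake samples the discriminator rates as most realistic, with a kept fraction that decays from 1 toward a floor), a sketch could look like this. The function names, the per-epoch schedule and the "higher score means more real" convention are all assumptions.

```python
import math
import torch

def top_k_fraction(epoch, gamma=0.99, min_frac=0.5):
    # Hypothetical schedule: decay geometrically, but never below min_frac
    return max(gamma ** epoch, min_frac)

def top_k_generator_scores(fake_scores, epoch, gamma=0.99, min_frac=0.5):
    """Keep the k fake samples with the highest discriminator score.

    Assumes a higher score means "more real" (a sign-convention assumption).
    """
    batch_size = fake_scores.shape[0]
    k = math.ceil(batch_size * top_k_fraction(epoch, gamma, min_frac))
    kept, _ = fake_scores.topk(k)  # the generator loss would use only these
    return kept

scores = torch.tensor([0.1, 0.9, -0.3, 0.5])
print(top_k_fraction(0))                           # 1.0 (keep everything at first)
print(top_k_generator_scores(scores, epoch=1000))  # the two highest scores, 0.9 and 0.5
```

Early on every sample contributes; later only the better half does, so poor samples stop pulling the generator's gradient.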

    @property
    def image_extension(self):
        # File extension for saved images: png for transparent images, jpg otherwise
        return 'jpg' if not self.transparent else 'png'

    @property
    def checkpoint_num(self):
        # Current checkpoint number: completed steps divided by the save interval
        return floor(self.steps // self.save_every)

    @property
    def hparams(self):
        # Hyperparameters reported to the logger
        return {'image_size': self.image_size, 'network_capacity': self.network_capacity}
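A quick worked example of the two properties, rewritten as hypothetical free functions: with `save_every = 1000`, step 2500 falls in checkpoint 2. Because `//` already floors non-negative integers, the outer `floor()` does not change the result.

```python
from math import floor

def checkpoint_num(steps, save_every):
    # `//` already floors for non-negative ints; floor() is a no-op here
    return floor(steps // save_every)

def image_extension(transparent):
    # png keeps the alpha channel, jpg does not
    return 'png' if transparent else 'jpg'

print(checkpoint_num(2500, 1000))  # 2
print(image_extension(True))       # png
```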

    def init_GAN(self):
        # Build StyleGAN2 from the stored settings plus any extra args/kwargs
        args, kwargs = self.GAN_params
        self.GAN = StyleGAN2(
            *args,
            lr = self.lr,
            lr_mlp = self.lr_mlp,
            ttur_mult = self.ttur_mult,
            image_size = self.image_size,
            network_capacity = self.network_capacity,
            fmap_max = self.fmap_max,
            transparent = self.transparent,
            fq_layers = self.fq_layers,
            fq_dict_size = self.fq_dict_size,
            attn_layers = self.attn_layers,
            fp16 = self.fp16,
            cl_reg = self.cl_reg,
            no_const = self.no_const,
            rank = self.rank,
            **kwargs
        )

        # For distributed training, wrap each sub-network in DistributedDataParallel
        if self.is_ddp:
            ddp_kwargs = {'device_ids': [self.rank]}
            self.S_ddp = DDP(self.GAN.S, **ddp_kwargs)
            self.G_ddp = DDP(self.GAN.G, **ddp_kwargs)
            self.D_ddp = DDP(self.GAN.D, **ddp_kwargs)
            self.D_aug_ddp = DDP(self.GAN.D_aug, **ddp_kwargs)

        # If a logger is configured, record the hyperparameters
        if exists(self.logger):
            self.logger.set_params(self.hparams)
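`ttur_mult` is passed straight through to `StyleGAN2`, whose optimizer setup is not shown in this excerpt. Assuming it implements the two time-scale update rule by scaling the discriminator's learning rate, the optimizers would be configured roughly as below; the `nn.Linear` stand-ins and the Adam `betas` are illustrative assumptions.

```python
from torch import nn
from torch.optim import Adam

# Hypothetical stand-ins for the generator and discriminator
G = nn.Linear(4, 4)
D = nn.Linear(4, 1)

lr, ttur_mult = 2e-4, 2

# TTUR: the discriminator trains with a larger learning rate than the generator
G_opt = Adam(G.parameters(), lr=lr, betas=(0.5, 0.9))
D_opt = Adam(D.parameters(), lr=lr * ttur_mult, betas=(0.5, 0.9))

print(G_opt.param_groups[0]['lr'], D_opt.param_groups[0]['lr'])  # 0.0002 0.0004
```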

    def write_config(self):
        # Save the current configuration to the JSON config file
        self.config_path.write_text(json.dumps(self.config()))

    def load_config(self):
        # Read the JSON config file, or fall back to the current settings if it doesn't exist
        config = self.config() if not self.config_path.exists() else json.loads(self.config_path.read_text())

        # Restore the settings; keys read with .pop(key, default) fall back to the default when missing
        self.image_size = config['image_size']
        self.network_capacity = config['network_capacity']
        self.transparent = config['transparent']
        self.fq_layers = config['fq_layers']
        self.fq_dict_size = config['fq_dict_size']
        self.fmap_max = config.pop('fmap_max', 512)
        self.attn_layers = config.pop('attn_layers', [])
        self.no_const = config.pop('no_const', False)
        self.lr_mlp = config.pop('lr_mlp', 0.1)

        # Rebuild the GAN with the restored settings
        del self.GAN
        self.init_GAN()

    def config(self):
        # Configuration dictionary written to and read from the config file
        return {
            'image_size': self.image_size,
            'network_capacity': self.network_capacity,
            'lr_mlp': self.lr_mlp,
            'transparent': self.transparent,
            'fq_layers': self.fq_layers,
            'fq_dict_size': self.fq_dict_size,
            'attn_layers': self.attn_layers,
            'no_const': self.no_const
        }
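`load_config` reads the newer keys with `config.pop(key, default)`, so a config file written before those keys existed still loads. The sketch below shows the same backward-compatible round trip using a dict merge instead of `pop`; `DEFAULTS`, `write_config` and `load_config` here are hypothetical helpers, and the default values are the ones used in the method above.

```python
import json
import tempfile
from pathlib import Path

# Defaults for keys that older config files may not contain
DEFAULTS = {'fmap_max': 512, 'attn_layers': [], 'no_const': False, 'lr_mlp': 0.1}

def write_config(path, config):
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(json.dumps(config))

def load_config(path, current):
    # Fall back to the in-memory config when no file has been written yet
    config = current if not path.exists() else json.loads(path.read_text())
    # Values from the file win; missing keys take their defaults
    return {**DEFAULTS, **config}

with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / 'models' / 'default' / '.config.json'
    old_style = {'image_size': 128, 'network_capacity': 16, 'transparent': False,
                 'fq_layers': [], 'fq_dict_size': 256}
    write_config(path, old_style)
    loaded = load_config(path, current={})
    print(loaded['image_size'], loaded['fmap_max'])  # 128 512
```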

    def set_data_src(self, folder):
        # Build the dataset from an image folder
        self.dataset = Dataset(folder, self.image_size, transparent = self.transparent, aug_prob = self.dataset_aug_prob)
        # Unless num_workers was given, use NUM_CORES workers (0 under DDP)
        num_workers = default(self.num_workers, NUM_CORES if not self.is_ddp else 0)

        # Create the data loader