NGINX Load Balancing

In /etc/nginx/nginx.conf:

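```nginx
http {
    # Upstream group named "backend"
    upstream backend {
        server backend1.example.com;
        server backend2.example.com;
        # Receives requests only when the primary servers are unavailable
        server 192.0.0.1 backup;
    }

    server {
        location / {
            # Forward all requests to the "backend" upstream group
            proxy_pass http://backend;
        }
    }
}
```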

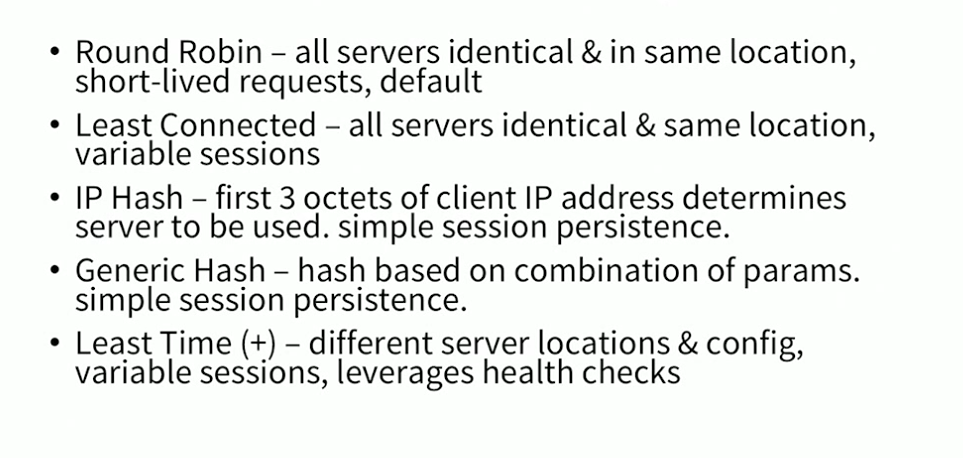

By default, NGINX distributes client requests across the backend servers using round robin. It can also use the following methods:

Least Connections – A request is sent to the server with the least number of active connections, with server weights taken into consideration.
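
A minimal sketch of enabling this method, assuming the same hypothetical backend hosts as the first example; the weight value is illustrative only:

```nginx
upstream backend {
    # Send each request to the server with the fewest active connections;
    # server weights are still taken into account
    least_conn;
    server backend1.example.com weight=5;  # illustrative weight: larger share of traffic
    server backend2.example.com;           # default weight is 1
}
```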

IP Hash – The server to which a request is sent is determined from the client IP address. In this case, either the first three octets of the IPv4 address or the whole IPv6 address are used to calculate the hash value. The method guarantees that requests from the same address get to the same server unless it is not available.
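
A sketch of IP hash, assuming a hypothetical third host backend3.example.com. Marking a server down takes it out of rotation while preserving the current mapping of client addresses. Note that the backup parameter from the first example cannot be combined with ip_hash.

```nginx
upstream backend {
    # Choose the server from a hash of the client IP address
    ip_hash;
    server backend1.example.com;
    server backend2.example.com;
    # Temporarily removed from rotation; existing hash mapping is kept
    server backend3.example.com down;
}
```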

Generic Hash – The server to which a request is sent is determined from a user-defined key, which can be a text string, a variable, or a combination. For example, the key may be a paired source IP address and port, or a URI.
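
A sketch using the request URI as the key. The optional consistent parameter enables ketama consistent hashing, so adding or removing a server remaps only a small share of keys. A combined key such as $remote_addr$remote_port could be used instead to pair source IP and port.

```nginx
upstream backend {
    # Hash on the request URI; "consistent" switches to ketama consistent hashing
    hash $request_uri consistent;
    server backend1.example.com;
    server backend2.example.com;
}
```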

Random – Each request will be passed to a randomly selected server.
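
A plain `random;` picks one server at random (weights considered). The sketch below uses the optional two parameter, which picks two servers at random and then sends the request to the one chosen by the given method. backend3.example.com is a hypothetical host.

```nginx
upstream backend {
    # Pick two servers at random, then use the one with fewer active connections
    random two least_conn;
    server backend1.example.com;
    server backend2.example.com;
    server backend3.example.com;
}
```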

Least Time (NGINX Plus only) – For each request, NGINX Plus selects the server with the lowest average latency and the lowest number of active connections, where the lowest average latency is calculated based on which of the following parameters to the least_time directive is included:

- header – Time to receive the first byte from the server
- last_byte – Time to receive the full response from the server
- last_byte inflight – Time to receive the full response from the server, taking into account incomplete requests
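
A minimal sketch for NGINX Plus; replacing header with last_byte or last_byte inflight switches to the other latency measurements listed above.

```nginx
upstream backend {
    # NGINX Plus only: rank servers by time to first byte and active connections
    least_time header;
    server backend1.example.com;
    server backend2.example.com;
}
```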

Session Persistence

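- IP hash – requests from the same client IP address always arrive at the same server.

- Generic hash – requests are routed to the same server when their user-defined key matches, e.g. a combination of client IP address, port, URI, etc.

- Sticky cookie – NGINX Plus adds a session cookie to the first response from the upstream group, identifying the server that sent it. When a later client request contains this cookie, NGINX Plus routes it to the same server.

- Sticky route – NGINX Plus assigns a "route" to the client on its first request. Subsequent requests are compared with the route parameter of the server directive to select the server; the route information is taken from a cookie or the request URI.

- Sticky learn method – NGINX Plus first finds session identifiers by inspecting requests and responses. Then NGINX Plus “learns” which upstream server corresponds to which session identifier. Generally, these identifiers are passed in an HTTP cookie. If a request contains a session identifier already “learned”, NGINX Plus forwards the request to the corresponding server.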
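
The two cookie-based NGINX Plus methods can be sketched as follows, assuming both blocks sit in the http context. The upstream names backend_cookie and backend_learn, the cookie names srv_id and examplecookie, and the zone name client_sessions are illustrative placeholders.

```nginx
# NGINX Plus only: sticky cookie
upstream backend_cookie {
    server backend1.example.com;
    server backend2.example.com;
    # Cookie "srv_id" records which server answered; it expires after 1 hour
    sticky cookie srv_id expires=1h domain=.example.com path=/;
}

# NGINX Plus only: sticky learn
upstream backend_learn {
    server backend1.example.com;
    server backend2.example.com;
    # Learn session IDs from the upstream's "examplecookie" Set-Cookie,
    # match them against the client's request cookie, and keep the
    # session-to-server mapping in a 1 MB shared zone
    sticky learn
        create=$upstream_cookie_examplecookie
        lookup=$cookie_examplecookie
        zone=client_sessions:1m;
}
```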

Limiting the Number of Connections

With NGINX Plus, it is possible to limit the number of connections to an upstream server by specifying the maximum number with the max_conns parameter.

If the max_conns limit has been reached, the request is placed in a queue for further processing, provided that the queue directive is also included to set the maximum number of requests that can be simultaneously in the queue:

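```nginx
upstream backend {
    # At most 3 simultaneous connections to backend1
    server backend1.example.com max_conns=3;
    server backend2.example.com;
    # Up to 100 requests may wait in the queue; if a server cannot be
    # selected within 70 seconds, the client receives an error
    queue 100 timeout=70;
}
```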

Sharing Data with Multiple Worker Processes

Because the load balancer runs as several worker processes, by default each process keeps its own in-memory copy of the upstream state, such as connection counts and failure counters. This causes problems: for example, worker process 1 may know that server1 is overloaded, while worker process 2 is unaware of it and keeps sending requests to server1.

When the zone directive is included in an upstream block, the configuration of the upstream group is kept in a memory area shared among all worker processes. As a result, the group is dynamically configurable, because the worker processes access the same copy of the group configuration and use the same related counters.
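
A minimal sketch of a shared upstream group; the zone size of 64k is an illustrative assumption and should be sized to the group. Without the zone, a max_conns limit is applied separately by each worker process; with it, the limit applies across all workers.

```nginx
upstream backend {
    # Shared memory zone "backend": all workers read and update the same
    # group configuration and counters stored here
    zone backend 64k;
    least_conn;
    # Enforced across all worker processes because the group is in the zone
    server backend1.example.com max_conns=3;
    server backend2.example.com;
}
```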

Configuring HTTP Load Balancing Using DNS

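When a server is specified by hostname in the configuration, the resolve parameter can be added to the server directive so that NGINX monitors changes to the IP addresses behind that hostname. This lets the configuration of a server group be modified at runtime using DNS: if the address changes, NGINX automatically starts using the new IP. This requires a resolver directive and a shared memory zone in the upstream block.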

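```nginx
http {
    # DNS server used for re-resolution; valid=300s overrides record TTLs
    resolver 10.0.0.1 valid=300s ipv6=off;
    resolver_timeout 10s;

    server {
        location / {
            proxy_pass http://backend;
        }
    }

    upstream backend {
        # Shared memory zone, required for the resolve parameter
        zone backend 32k;
        least_conn;
        # ...
        # Re-resolve these hostnames periodically and pick up new IPs
        server backend1.example.com resolve;
        server backend2.example.com resolve;
    }
}
```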

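In the example, the resolve parameter to the server directive tells NGINX Plus to periodically re-resolve the backend1.example.com and backend2.example.com domain names into IP addresses. By default, re-resolution follows the DNS record TTL; here the valid=300s parameter of the resolver directive overrides it, so the names are re-resolved every 300 seconds.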