k8s service

Service:

A “Service” is an abstraction that defines a logical set of Pods and a policy by which to access them. The set of Pods is determined by a label selector.

- A Service is assigned an IP address (“cluster IP”), which is used by the service proxies.

- A Service can map an incoming port to any targetPort. By default, targetPort is set to the same value as the port field. It can also be defined as a string, in which case it refers to a named port on the Pod.
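The port mapping described in the bullets above can be sketched as a manifest. This is a minimal illustration rather than a real deployment: the names `web` and `web-pod`, the label `app: web`, the port name `http`, and the image are all hypothetical. The Service listens on port 80 and refers to the target port by name, so the container port number could change without touching the Service.

```yaml
# Hypothetical Pod that exposes a named container port.
apiVersion: v1
kind: Pod
metadata:
  name: web-pod
  labels:
    app: web                  # label the Service selects on
spec:
  containers:
    - name: web
      image: example/web:1.0  # placeholder image, assumed to listen on 8080
      ports:
        - name: http
          containerPort: 8080
---
# Service that maps port 80 to the named port above.
apiVersion: v1
kind: Service
metadata:
  name: web
spec:
  selector:
    app: web
  ports:
    - protocol: TCP
      port: 80
      targetPort: http        # string form: resolves to containerPort 8080
```

If `targetPort` were omitted here, it would default to 80, the value of `port`.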

Pod to Service Communication

A service is an abstraction that routes traffic to a set of pods. A service gets its own cluster IP, and each pod also gets its own IP. When a request needs to reach the pods, it is sent to the service’s cluster IP and then forwarded to the actual IP of one of the pods.

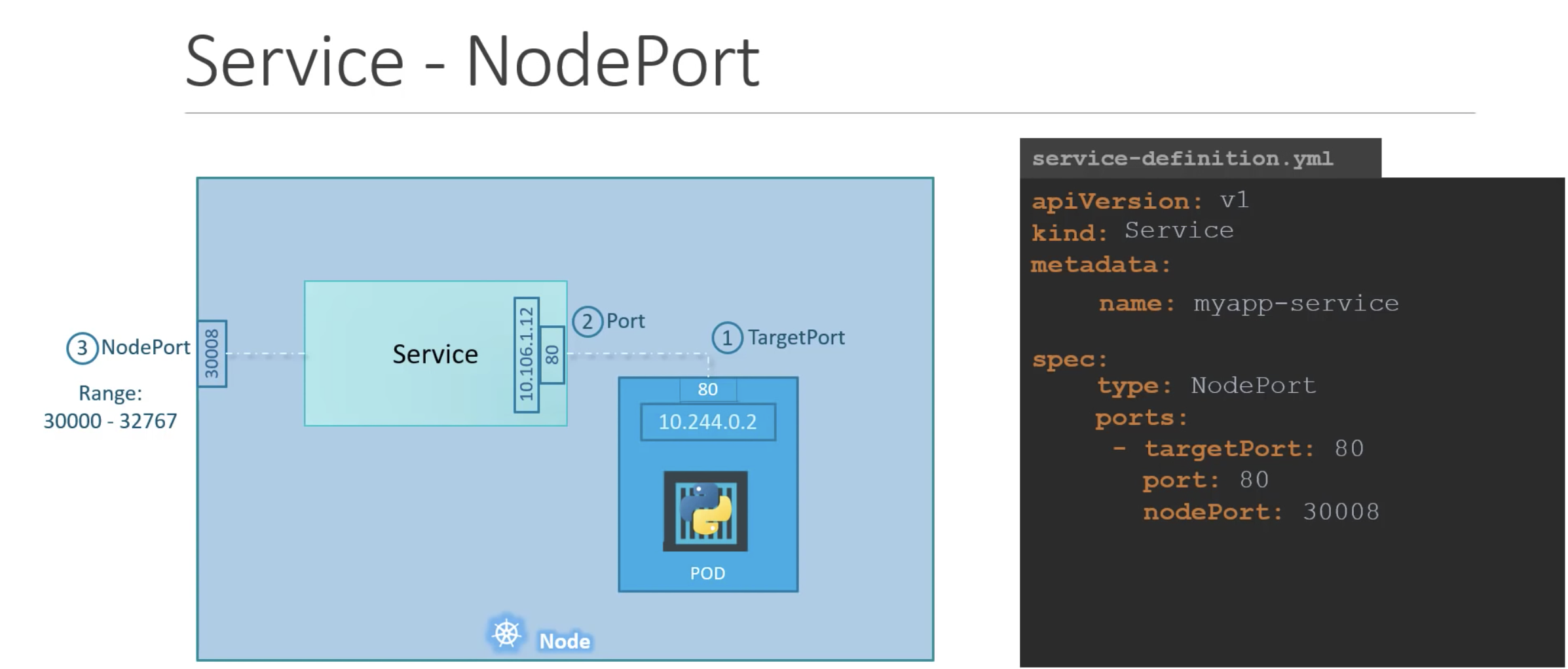

- ClusterIP – The IP address of a service. This type exposes the service on a cluster-internal IP, so the service is only reachable from within the cluster. This is the default type. The purpose of a cluster IP is to give other pods in the same cluster a fixed address to talk to: a pod’s IP may change when the pod restarts, so clients need a stable address instead. It is used for pod-to-service connections. In the example below, 10.106.1.12 is the cluster IP.

This way pods, which are mortal, can come and go without anyone having to update IPs by hand: by default the service automatically updates its endpoints with the IPs of the pods it targets as those pods change.

The way the service knows which pods to target is through its label selector. This is an important concept to be aware of, since the label selector is the only way the service finds the pods to target and keeps track of their IPs.
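To make the endpoint tracking concrete, here is a rough sketch of the Endpoints object that Kubernetes maintains for a service with a selector. It is generated automatically and has the same name as the service; you would normally only read it (for example with `kubectl get endpoints`), not write it by hand. The service name, pod IPs, and port below are made up for illustration, and newer clusters also record the same information in EndpointSlice objects.

```yaml
# Illustrative only: roughly what the auto-generated Endpoints
# object for a hypothetical service "my-service" might contain.
apiVersion: v1
kind: Endpoints
metadata:
  name: my-service          # always matches the Service name
subsets:
  - addresses:
      - ip: 192.168.1.5     # hypothetical pod IP on one node
      - ip: 192.168.2.7     # hypothetical pod IP on another node
    ports:
      - port: 8080          # the targetPort the pods listen on
        protocol: TCP
```

When a selected pod is replaced and comes back with a new IP, the address list is updated while the service’s cluster IP stays the same.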

Pod to Pod Communication

The idea is that when a pod is created, a Linux virtual Ethernet device pair (veth pair) can be used to connect the pod’s network namespace to the root network namespace. Pods on the same node can talk to each other through a bridge. A Linux Ethernet bridge is a virtual Layer 2 networking device that joins two or more network segments, working transparently to connect them.

For pods on separate nodes, assuming connectivity between the nodes, the request goes from the root namespace of one node to the root namespace of the other node. The receiving node then routes the traffic to the right pod.

For example, the pods on Node 1 might get addresses in 192.168.1.x and the pods on Node 2 addresses in 192.168.2.x. The nodes connect to each other via VXLAN, e.g. with a tool such as Flannel.
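As an illustration of this addressing, Flannel reads its network settings from a `net-conf.json` document, which is usually shipped in a ConfigMap. The sketch below assumes a cluster-wide pod range of 192.168.0.0/16 and the VXLAN backend; with a /24 carved out per node, one node could receive 192.168.1.0/24 and another 192.168.2.0/24. The ConfigMap name and namespace are assumptions based on the commonly used upstream manifest and may differ between Flannel versions and installations.

```yaml
# Sketch of a Flannel network configuration (values are assumptions).
apiVersion: v1
kind: ConfigMap
metadata:
  name: kube-flannel-cfg      # assumed name; check your installation
  namespace: kube-flannel     # assumed; older setups use kube-system
data:
  net-conf.json: |
    {
      "Network": "192.168.0.0/16",
      "SubnetLen": 24,
      "Backend": {
        "Type": "vxlan"
      }
    }
```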

There are four types of service:

- ClusterIP

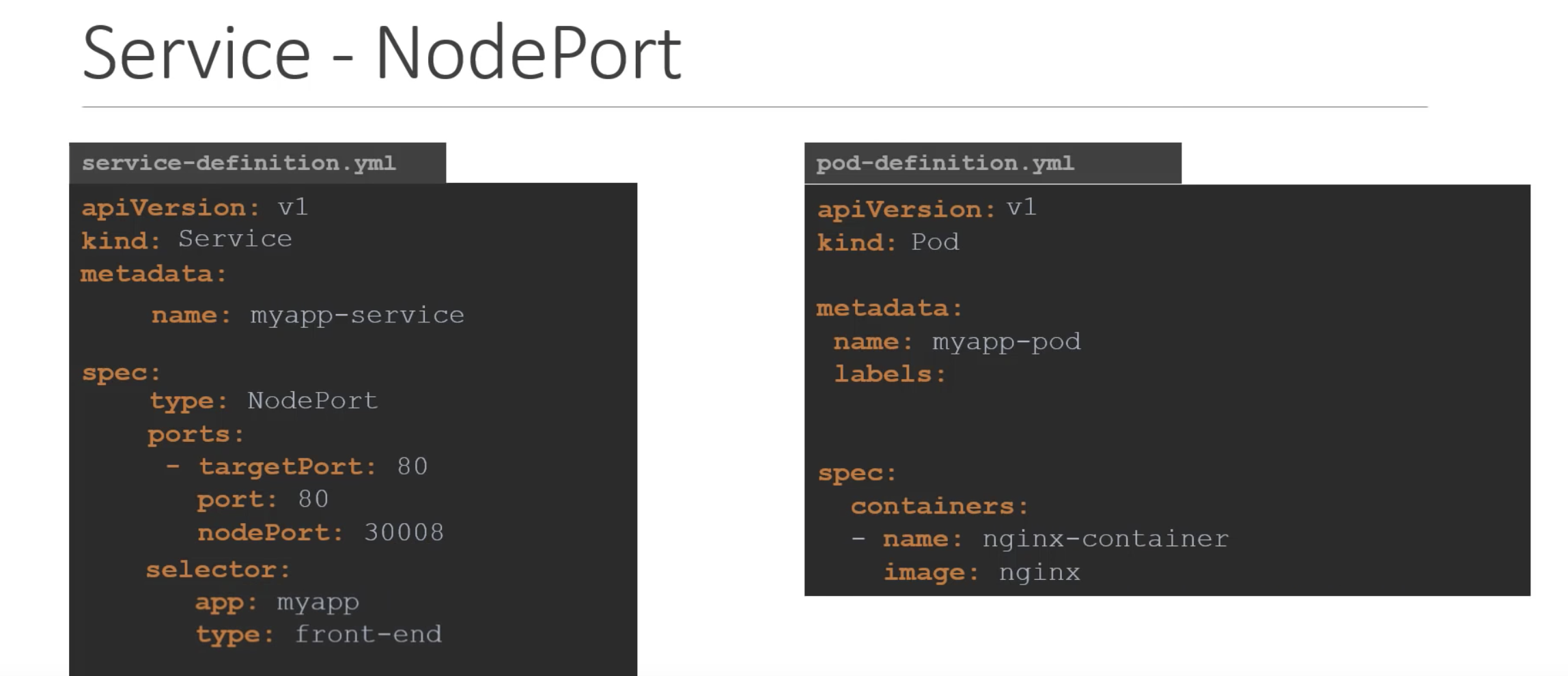

- NodePort – Exposes the service on each Node’s IP at a static port. A ClusterIP service (10.106.1.12 in the example below), to which the NodePort service routes, is automatically created. You can contact the NodePort service from outside the cluster by using “<NodeIP>:<NodePort>”. In the YAML file, port is mandatory; by default, targetPort equals port. The service uses its selector and the pods’ labels to recognise the pods it connects to.


When would you use this?

There are many downsides to this method:

- You can only have one service per port

- You can only use ports 30000–32767

- If your Node/VM IP address changes, you need to deal with that

For these reasons, I don’t recommend using this method in production to directly expose your service. If you are running a service that doesn’t have to be always available, or you are very cost sensitive, this method will work for you. A good example of such an application is a demo app or something temporary.


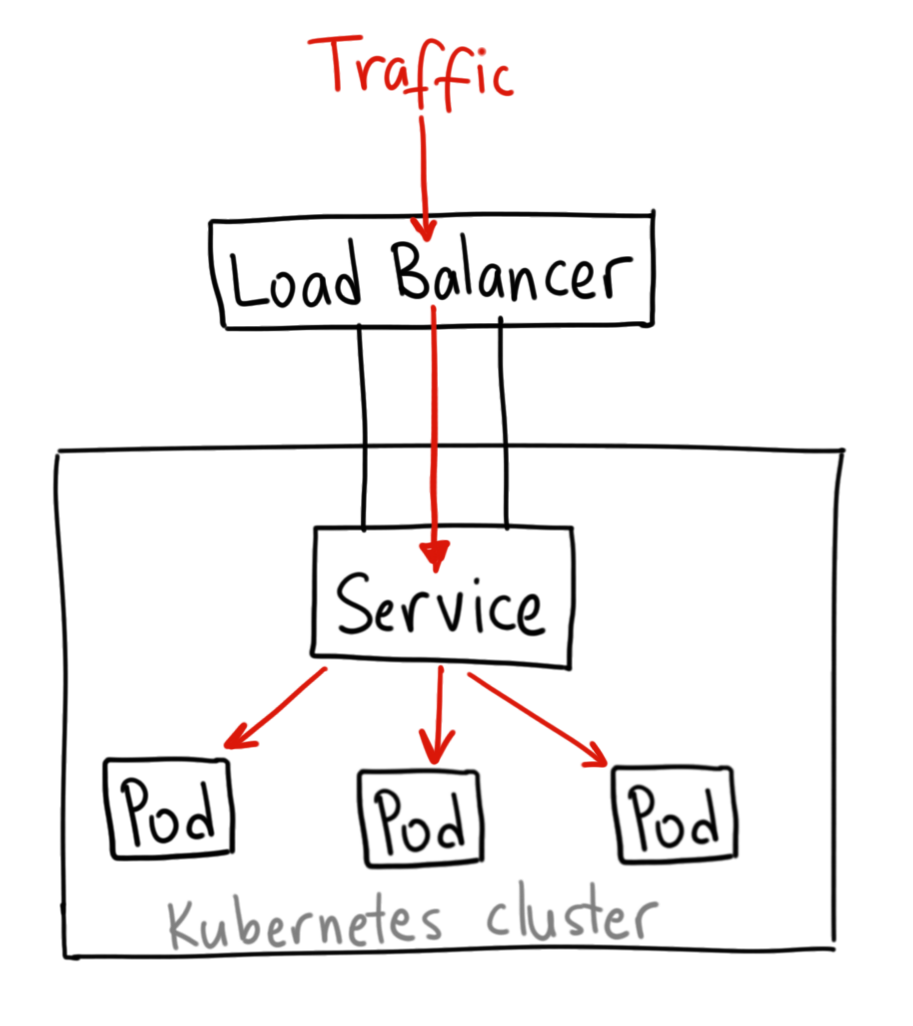

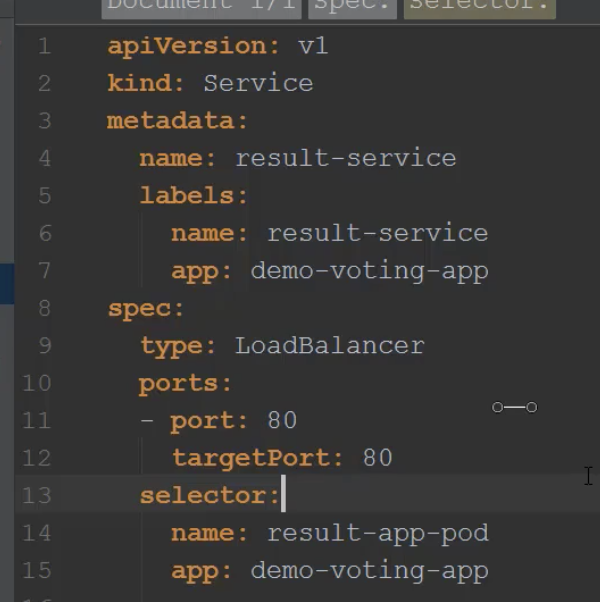

- LoadBalancer – Exposes the service externally using a cloud provider’s load balancer. NodePort and ClusterIP services, to which the external load balancer will route, are automatically created.

- When would you use this? If you want to directly expose a service, this is the default method. All traffic on the port you specify will be forwarded to the service. There is no filtering, no routing, etc. This means you can send almost any kind of traffic to it, like HTTP, TCP, UDP, WebSockets, gRPC, or whatever. The big downside is that each service you expose with a LoadBalancer will get its own IP address, and you have to pay for a LoadBalancer per exposed service, which can get expensive.

- ExternalName – Maps the service to the contents of the externalName field (e.g. foo.bar.example.com), by returning a CNAME record with its value.
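The last two service types can be sketched as manifests too. These are minimal, hedged examples: the service names, the `app: web` label, and the port numbers are hypothetical, and only `foo.bar.example.com` comes from the text above. What the LoadBalancer actually provisions depends on the cloud provider.

```yaml
# LoadBalancer: asks the cloud provider for an external load balancer.
apiVersion: v1
kind: Service
metadata:
  name: web-public            # hypothetical name
spec:
  type: LoadBalancer
  selector:
    app: web                  # hypothetical label
  ports:
    - protocol: TCP
      port: 80                # port exposed by the load balancer
      targetPort: 8080        # port the pods listen on (assumed)
---
# ExternalName: no selector and no proxying, only a DNS CNAME record.
apiVersion: v1
kind: Service
metadata:
  name: external-db           # hypothetical name
spec:
  type: ExternalName
  externalName: foo.bar.example.com
```

Pods in the cluster that look up `external-db` would get a CNAME pointing at `foo.bar.example.com`; no cluster IP or endpoints are involved.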

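```yaml
apiVersion: v1
kind: Service
metadata:
  name: order-service
spec:
  type: NodePort          # needed for nodePort to be accepted; the default type is ClusterIP
  ports:
    - port: 8080          # port exposed on the cluster IP
      targetPort: 8170    # port the container listens on
      nodePort: 32222     # port opened on every node (30000-32767)
      protocol: TCP
  selector:
    component: order-service-app
```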

- The port is 8080, which means order-service can be accessed by other services in the cluster on port 8080.

- The targetPort is 8170, which means order-service is actually listening on port 8170 in its pods.

- The nodePort is 32222, which means order-service can be accessed from outside the cluster on port 32222 of any node; kube-proxy forwards that traffic to the service’s pods.
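For the selector and targetPort in this example to have any effect, some pod must carry the label `component: order-service-app` and listen on port 8170. A minimal sketch of such a pod follows; the pod name and the image are placeholders and not part of the original example.

```yaml
# Hypothetical pod that the order-service selector would match.
apiVersion: v1
kind: Pod
metadata:
  name: order-service-app-0                 # placeholder name
  labels:
    component: order-service-app            # must match the Service selector
spec:
  containers:
    - name: order-service
      image: example/order-service:latest   # placeholder image
      ports:
        - containerPort: 8170               # matches the Service targetPort
```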

k8s network https://www.youtube.com/watch?v=WwQ62OyCNz4

The difference between LoadBalancer and NodePort is covered in https://medium.com/google-cloud/kubernetes-nodeport-vs-loadbalancer-vs-ingress-when-should-i-use-what-922f010849e0