Hadoop 2 installation

Download

https://archive.apache.org/dist/hadoop/common/hadoop-2.7.2/
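A minimal sketch of the download step, assuming `wget` and `tar` are installed and that Hadoop is unpacked into /opt/module (the location /etc/profile points at later). The file name hadoop-2.7.2.tar.gz is the binary release in the directory linked above; `hadoop_tarball_url` and `install_hadoop` are hypothetical helper names.

```shell
# Build the archive URL of a Hadoop binary release, e.g. 2.7.2.
hadoop_tarball_url() {
    echo "https://archive.apache.org/dist/hadoop/common/hadoop-$1/hadoop-$1.tar.gz"
}

# Hypothetical helper: download the release to /tmp and unpack it.
# $1 = Hadoop version, $2 = install directory (defaults to /opt/module,
# the path assumed by /etc/profile in these notes).
install_hadoop() {
    ver=$1
    dest=${2:-/opt/module}
    mkdir -p "$dest" || return 1
    wget -P /tmp "$(hadoop_tarball_url "$ver")" || return 1
    tar -zxf "/tmp/hadoop-$ver.tar.gz" -C "$dest"
}

# Usage: install_hadoop 2.7.2 /opt/module
```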

JDK installation

https://blog.csdn.net/zhwyj1019/article/details/81103531

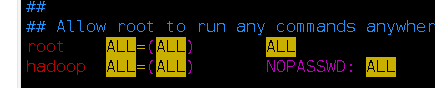
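To confirm which JDK is active, the sketch below extracts the major version from `java -version` output. It assumes the usual `... version "X"` format; the JAVA_HOME set later in these notes points at a 1.8 JDK, so the expected result there is 8. `java_major` is a hypothetical helper name.

```shell
# Print the Java major version from the text printed by `java -version`.
# Handles both the old scheme (1.8.0_121 -> 8) and the new one (11.0.2 -> 11).
java_major() {
    ver=$(printf '%s\n' "$1" | sed -n 's/.*version "\([^"]*\)".*/\1/p' | head -n 1)
    case $ver in
        1.*) echo "$ver" | cut -d. -f2 ;;
        *)   echo "$ver" | cut -d. -f1 ;;
    esac
}

# Usage (java -version writes to stderr, hence 2>&1):
#   java_major "$(java -version 2>&1)"
```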

Create a new user

useradd hadoop

passwd hadoop

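Give the hadoop user sudo rights: as root, edit /etc/sudoers and add a line such as hadoop ALL=(ALL) ALL below the existing root ALL=(ALL) ALL entry (visudo performs the same edit but checks the syntax before saving).

vim /etc/sudoers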
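An alternative to editing /etc/sudoers directly is a drop-in file under /etc/sudoers.d. This sketch assumes /etc/sudoers contains the `#includedir /etc/sudoers.d` line (the default on CentOS 7); `write_sudoers_dropin` is a hypothetical helper name.

```shell
# Write a sudoers drop-in granting full sudo rights to one user.
# $1 = user name, $2 = target directory (normally /etc/sudoers.d; assumes
# the main sudoers file includes that directory).
write_sudoers_dropin() {
    f="$2/$1"
    printf '%s ALL=(ALL) ALL\n' "$1" > "$f" || return 1
    chmod 0440 "$f"
    # Check the syntax when visudo is available; its exit status is returned.
    if command -v visudo >/dev/null 2>&1; then
        visudo -c -f "$f" >/dev/null
    fi
}

# Usage (as root): write_sudoers_dropin hadoop /etc/sudoers.d
```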

Set the hostname and the hosts file (hadoop101, hadoop102 and hadoop103 must resolve on every node)

https://www.cnblogs.com/MWCloud/p/11346867.html
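A small sketch that generates the /etc/hosts lines for the three nodes. The addresses are hypothetical (a 192.168.1. prefix with the last octet matching the host number); substitute the real ones. `hosts_entries` is a hypothetical helper name; `hostnamectl` applies to systemd-based systems such as CentOS 7.

```shell
# Print "address hostname" lines for /etc/hosts.
# $1 = address prefix (hypothetical, e.g. 192.168.1.), remaining args = last
# octets; each host is assumed to be named hadoop<octet>.
hosts_entries() {
    prefix=$1
    shift
    for n in "$@"; do
        printf '%s%s hadoop%s\n' "$prefix" "$n" "$n"
    done
}

# Usage on every node (as root):
#   hosts_entries 192.168.1. 101 102 103 >> /etc/hosts
#   hostnamectl set-hostname hadoop101    # hadoop102 / hadoop103 on the others
```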

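Change the owner of the directories the hadoop user will work in (/sss stands for the target directory; -R also changes everything inside it)

sudo chown -R hadoop:hadoop /sss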
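To confirm the ownership change took effect, this sketch lists any path that is not owned by the expected user. It reads the owner from `ls -ld` so it does not depend on GNU `stat`; `not_owned_by` is a hypothetical helper name, and /opt/module and /opt/soft in the usage line are the install paths used in /etc/profile below.

```shell
# Print every given path whose owner differs from the expected user.
# $1 = expected owner, remaining args = paths to check.
not_owned_by() {
    want=$1
    shift
    for p in "$@"; do
        owner=$(ls -ld "$p" | awk '{print $3}')
        [ "$owner" = "$want" ] || echo "$p"
    done
}

# Usage: not_owned_by hadoop /opt/module /opt/soft   # no output means all OK
```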

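Set the environment variables in /etc/profile:

vim /etc/profile

Append these lines:

JAVA_HOME=/opt/soft/jdk1.8.0_121
HADOOP_HOME=/opt/module/hadoop-2.7.2
PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin
export JAVA_HOME PATH HADOOP_HOME

Then reload the file in the current shell:

source /etc/profile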
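After reloading the profile, a quick check that the variables point at real installations and that PATH finds the Hadoop scripts. This is a sketch; `check_env` is a hypothetical helper name and it only tests for the files named in the comments.

```shell
# Verify JAVA_HOME, HADOOP_HOME and PATH; prints each problem found and
# returns non-zero if there was at least one.
check_env() {
    ok=0
    [ -x "$JAVA_HOME/bin/java" ] || { echo "missing: \$JAVA_HOME/bin/java"; ok=1; }
    [ -x "$HADOOP_HOME/bin/hadoop" ] || { echo "missing: \$HADOOP_HOME/bin/hadoop"; ok=1; }
    [ -x "$HADOOP_HOME/sbin/hadoop-daemon.sh" ] || { echo "missing: \$HADOOP_HOME/sbin/hadoop-daemon.sh"; ok=1; }
    # sbin must be on PATH for the hadoop-daemon.sh / yarn-daemon.sh steps below.
    command -v hadoop-daemon.sh >/dev/null 2>&1 || { echo "hadoop-daemon.sh not on PATH"; ok=1; }
    return $ok
}

# Usage: source /etc/profile && check_env && hadoop version
```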

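Cluster layout:

|      | hadoop101          | hadoop102                    | hadoop103                   |
|------|--------------------|------------------------------|-----------------------------|
| HDFS | NameNode, DataNode | DataNode                     | SecondaryNameNode, DataNode |
| YARN | NodeManager        | ResourceManager, NodeManager | NodeManager                 |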

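Configure core-site.xml (the configuration files below live in $HADOOP_HOME/etc/hadoop; each <property> goes inside the file's <configuration> element)

    <!-- Address of the HDFS NameNode -->
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://hadoop101:9000</value>
    </property>

    <!-- Base directory for files Hadoop creates at run time -->
    <property>
        <name>hadoop.tmp.dir</name>
        <value>/opt/module/hadoop-2.7.2/data/tmp</value>
    </property>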
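The two properties above only take effect inside the file's `<configuration>` element. A sketch that writes the complete core-site.xml in one step; note that it overwrites any existing file, and `write_core_site` is a hypothetical helper name.

```shell
# Write a complete core-site.xml (overwrites an existing one).
# $1 = configuration directory, normally $HADOOP_HOME/etc/hadoop.
write_core_site() {
    cat > "$1/core-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
    <!-- Address of the HDFS NameNode -->
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://hadoop101:9000</value>
    </property>
    <!-- Base directory for files Hadoop creates at run time -->
    <property>
        <name>hadoop.tmp.dir</name>
        <value>/opt/module/hadoop-2.7.2/data/tmp</value>
    </property>
</configuration>
EOF
}

# Usage: write_core_site "$HADOOP_HOME/etc/hadoop"
```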

Configure hdfs-site.xml

    <!-- Host and HTTP port of the SecondaryNameNode -->
    <property>
        <name>dfs.namenode.secondary.http-address</name>
        <value>hadoop103:50090</value>
    </property>
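To check what a node will actually use, a property can be read back out of a *-site.xml. This lightweight sketch assumes `<name>` and `<value>` sit on their own lines, as in these notes; on a working install, `hdfs getconf -confKey <name>` is the authoritative check. `get_prop` is a hypothetical helper name.

```shell
# Print the value of one property from a Hadoop *-site.xml file.
# $1 = file, $2 = property name. Assumes one element per line.
get_prop() {
    awk -v key="$2" '
        /<name>/ { gsub(/.*<name>|<\/name>.*/, ""); n = $0 }
        /<value>/ && n == key { gsub(/.*<value>|<\/value>.*/, ""); print; exit }
    ' "$1"
}

# Usage:
#   get_prop "$HADOOP_HOME/etc/hadoop/hdfs-site.xml" dfs.namenode.secondary.http-address
```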

Configure yarn-site.xml

    <!-- How reducers fetch data (the MapReduce shuffle service) -->
    <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
    </property>

    <!-- Host of the YARN ResourceManager; must match the host where it is started (hadoop102) -->
    <property>
        <name>yarn.resourcemanager.hostname</name>
        <value>hadoop102</value>
    </property>
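Because every *-site.xml has the same shape, a generic writer taking name=value pairs avoids hand-editing XML. A sketch: it does not escape `&` or `<` in values, and `write_site_xml` is a hypothetical helper name. In the usage line the ResourceManager host is hadoop102, the node the ResourceManager is started on in these notes.

```shell
# Write a Hadoop *-site.xml from name=value pairs (overwrites the file).
# $1 = output file, remaining args = name=value pairs. No XML escaping.
write_site_xml() {
    out=$1
    shift
    {
        echo '<?xml version="1.0"?>'
        echo '<configuration>'
        for kv in "$@"; do
            # Split at the first '=' into property name and value.
            printf '    <property>\n        <name>%s</name>\n        <value>%s</value>\n    </property>\n' \
                "${kv%%=*}" "${kv#*=}"
        done
        echo '</configuration>'
    } > "$out"
}

# Usage:
#   write_site_xml "$HADOOP_HOME/etc/hadoop/yarn-site.xml" \
#       yarn.nodemanager.aux-services=mapreduce_shuffle \
#       yarn.resourcemanager.hostname=hadoop102
```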

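Configure mapred-site.xml: create it from the bundled template, then add the property below so that MapReduce jobs run on YARN

cp mapred-site.xml.template mapred-site.xml

    <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
    </property>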
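The configuration files must be identical on all three nodes. These notes do not show how they reach hadoop102 and hadoop103; one option is rsync, sketched below under the assumptions that rsync is installed everywhere, HADOOP_HOME is the same path on every host, and the hadoop user has passwordless ssh. `sync_conf` is a hypothetical helper; set DRY_RUN=1 to print the commands instead of running them.

```shell
# Run a command, or only print it when DRY_RUN is set.
run() { if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi; }

# Copy a local config directory to the same path on other hosts.
# $1 = config directory, remaining args = target hosts.
sync_conf() {
    conf=$1
    shift
    for h in "$@"; do
        # Trailing slashes: copy the directory's contents, not the directory.
        run rsync -av "$conf/" "$h:$conf/"
    done
}

# Usage (on hadoop101): sync_conf "$HADOOP_HOME/etc/hadoop" hadoop102 hadoop103
```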

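Format the NameNode on hadoop101 (first start only):

hadoop namenode -format

(In Hadoop 2 this form prints a deprecation warning; hdfs namenode -format is the equivalent current command.) On success the output includes:

Storage directory /opt/module/hadoop-2.7.2/data/tmp/dfs/name has been successfully formatted.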
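Formatting again wipes the NameNode metadata and assigns a new clusterID, after which DataNodes that still hold the old ID fail to start. A sketch of a guard that refuses to format when the name directory (derived from hadoop.tmp.dir, as in the success message above) already contains current/VERSION. `format_once` is a hypothetical helper; DRY_RUN=1 prints the command instead of running it.

```shell
# Run a command, or only print it when DRY_RUN is set.
run() { if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi; }

# Format the NameNode only if its name directory has not been formatted yet.
# $1 = NameNode name directory, e.g. .../data/tmp/dfs/name
format_once() {
    if [ -f "$1/current/VERSION" ]; then
        echo "already formatted: $1" >&2
        return 1
    fi
    run hdfs namenode -format
}

# Usage (on hadoop101 only):
#   format_once /opt/module/hadoop-2.7.2/data/tmp/dfs/name
```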

Start the NameNode on hadoop101:

hadoop-daemon.sh start namenode

Check the running Java processes with jps.
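Rather than reading `jps` output by eye, this sketch compares it against the daemons a node is expected to run (per the cluster layout above) and prints the missing ones. It assumes the usual `<pid> <ClassName>` line format; `missing_daemons` is a hypothetical helper name.

```shell
# Print each expected daemon that does not appear in the jps output.
# $1 = space-separated expected names, $2 = output of `jps`.
missing_daemons() {
    for d in $1; do
        # Column 2 of each jps line is the main class name.
        printf '%s\n' "$2" | awk '{print $2}' | grep -qx "$d" || echo "$d"
    done
}

# Usage on hadoop101 once all its daemons are started:
#   missing_daemons "NameNode DataNode NodeManager" "$(jps)"
```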

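Start a DataNode on each of hadoop101, hadoop102 and hadoop103:

hadoop-daemon.sh start datanode

Start the SecondaryNameNode on hadoop103:

hadoop-daemon.sh start secondarynamenode

Start the ResourceManager on hadoop102:

yarn-daemon.sh start resourcemanager

Start a NodeManager on each of hadoop101, hadoop102 and hadoop103:

yarn-daemon.sh start nodemanager

To stop a daemon, run the same script with stop instead of start, for example:

hadoop-daemon.sh stop datanode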
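The per-host commands above can be collected into one function that knows which daemons each node runs under this layout (hadoop101: NameNode; hadoop102: ResourceManager; hadoop103: SecondaryNameNode; DataNode and NodeManager everywhere). This is a sketch that simply calls hadoop-daemon.sh / yarn-daemon.sh in order; `node_ctl` is a hypothetical helper, and DRY_RUN=1 prints the commands instead of running them.

```shell
# Run a command, or only print it when DRY_RUN is set.
run() { if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi; }

# $1 = start|stop, $2 = host name (defaults to this machine's hostname).
node_ctl() {
    action=$1
    host=${2:-$(hostname)}
    case $action in
        start|stop) ;;
        *) echo "usage: node_ctl start|stop [host]" >&2; return 1 ;;
    esac
    # Daemon roles per host, following the cluster layout in these notes.
    case $host in
        hadoop101) hdfs_daemons="namenode datanode";          yarn_daemons="nodemanager" ;;
        hadoop102) hdfs_daemons="datanode";                   yarn_daemons="resourcemanager nodemanager" ;;
        hadoop103) hdfs_daemons="secondarynamenode datanode"; yarn_daemons="nodemanager" ;;
        *) echo "unknown host: $host" >&2; return 1 ;;
    esac
    if [ "$action" = start ]; then
        # HDFS first, then YARN.
        for d in $hdfs_daemons; do run hadoop-daemon.sh start "$d" || return 1; done
        for d in $yarn_daemons; do run yarn-daemon.sh start "$d" || return 1; done
    else
        # Stop YARN before HDFS.
        for d in $yarn_daemons; do run yarn-daemon.sh stop "$d"; done
        for d in $hdfs_daemons; do run hadoop-daemon.sh stop "$d"; done
    fi
}

# Usage on each node: node_ctl start        (or: node_ctl stop)
```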

Web UIs:

http://hadoop101:50070/   (HDFS NameNode)

http://hadoop102:8088/   (YARN ResourceManager)
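Both UIs can also be checked from a shell. A sketch assuming curl is installed and the host names resolve from the machine running it: it prints the HTTP status code per URL, where 000 means no connection and a 200 or 3xx code means the UI is answering (the ResourceManager root typically redirects). `ui_urls` and `check_uis` are hypothetical helper names.

```shell
# The two web UIs from the notes.
ui_urls() {
    echo "http://hadoop101:50070/"
    echo "http://hadoop102:8088/"
}

# Print "<status> <url>" for each UI; curl reports 000 if it cannot connect.
check_uis() {
    for u in $(ui_urls); do
        code=$(curl -s -o /dev/null -w '%{http_code}' --max-time 5 "$u")
        echo "$code $u"
    done
}

# Usage: check_uis
```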

Test HDFS: create a directory and upload a local file named hi into it:

hadoop fs -mkdir /angdh_input

hadoop fs -put hi /angdh_input/
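The same upload test can be extended with a read-back to confirm the round trip. A sketch: `-mkdir -p` succeeds even if the directory exists, while `-put` still fails if the file is already there. `hdfs_put_check` is a hypothetical helper; DRY_RUN=1 prints the commands instead of running them.

```shell
# Run a command, or only print it when DRY_RUN is set.
run() { if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi; }

# Upload a local file to an HDFS directory, list it and print it back.
# $1 = local file, $2 = HDFS directory.
hdfs_put_check() {
    run hadoop fs -mkdir -p "$2" &&
    run hadoop fs -put "$1" "$2/" &&
    run hadoop fs -ls "$2" &&
    run hadoop fs -cat "$2/$(basename "$1")"
}

# Usage: hdfs_put_check hi /angdh_input
```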

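Test MapReduce: change to the examples directory and run wordcount on the uploaded input (the output directory must not exist yet, otherwise the job fails):

cd /opt/module/hadoop-2.7.2/share/hadoop/mapreduce

hadoop jar hadoop-mapreduce-examples-2.7.2.jar wordcount /angdh_input /angdh_output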
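A sketch that runs the same example by its full jar path (so no `cd` is needed) and then prints the reducer output files. It assumes HADOOP_HOME is set as in /etc/profile, falling back to /opt/module/hadoop-2.7.2; the quoted `part-r-*` pattern is expanded by `hadoop fs`, not the local shell. `run_wordcount` is a hypothetical helper; DRY_RUN=1 prints the commands instead of running them.

```shell
# Run a command, or only print it when DRY_RUN is set.
run() { if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi; }

# $1 = HDFS input dir, $2 = HDFS output dir (must not exist yet).
run_wordcount() {
    jar="${HADOOP_HOME:-/opt/module/hadoop-2.7.2}/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.2.jar"
    run hadoop jar "$jar" wordcount "$1" "$2" &&
    run hadoop fs -cat "$2/part-r-*"
}

# Usage: run_wordcount /angdh_input /angdh_output
```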

浙公网安备 33010602011771号