JS reverse-engineering challenges 6-10

#####

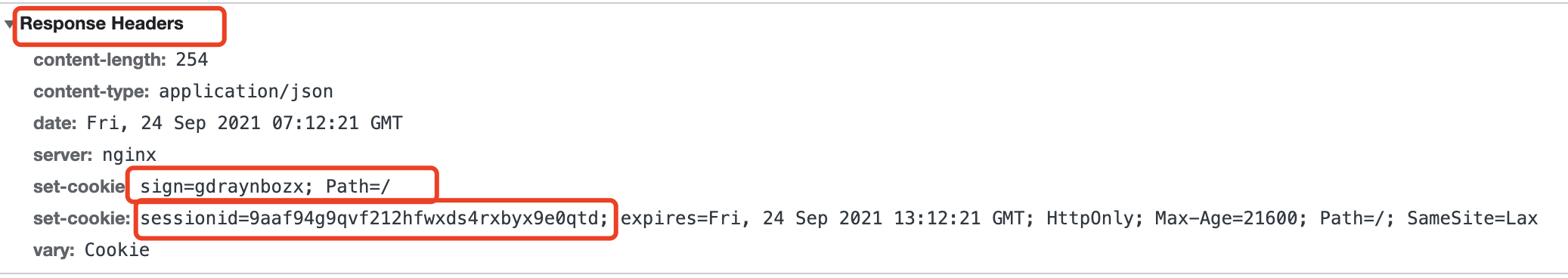

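Challenge 6: session persistence

Inspect the HTTP response of the API request.

The response carries a Set-Cookie header, which tells the browser which cookies to send back on subsequent requests. This matters a great deal: it is one of the main ways a server identifies a user and maintains a session.

Session persistence therefore means sending the cookies set by the previous response along with the next request.

How do we implement that?

We can start by printing the cookies from the response: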

    resp = requests.post(url, headers=headers, data=data, timeout=5, cookies=cookies, verify=False)
    print("resp.cookies", resp.cookies)

The result is a RequestsCookieJar:

    resp.cookies <RequestsCookieJar[<Cookie sessionid=4dmsmb7bmd583v5j6e0irz9ke2qfqy9r for www.python-spider.com/>, <Cookie sign=baxncmldiw for www.python-spider.com/>]>

    for cookie in resp.cookies:
        print(cookie.name + "\t" + cookie.value)

Iterating over the cookie jar like this prints the name and value of each cookie.
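As a minimal sketch of what that loop makes possible, the cookies in a jar can be merged into the dict that will be sent with the next request. The jar here is built by hand so the example runs offline; in the real flow it would be resp.cookies. The helper name merge_response_cookies and all cookie values are placeholders, not part of the original code.

```python
from requests.cookies import RequestsCookieJar


def merge_response_cookies(sent_cookies, jar):
    """Return a copy of sent_cookies updated with every cookie found in jar."""
    merged = dict(sent_cookies)
    for cookie in jar:
        merged[cookie.name] = cookie.value
    return merged


# Hand-built stand-in for resp.cookies (placeholder values).
jar = RequestsCookieJar()
jar.set("sessionid", "new-session-id", domain="www.python-spider.com", path="/")
jar.set("sign", "new-sign", domain="www.python-spider.com", path="/")

sent = {"sessionid": "old-session-id", "no-alert": "true"}
merged = merge_response_cookies(sent, jar)
print(merged)
```

Returning a new dict instead of mutating the input keeps the originally sent cookies available for comparison when debugging.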

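We could copy the Set-Cookie values from the first response into the cookies we send ourselves. In fact, the requests library does this automatically when the requests are made through a requests.Session; separate requests.post calls do not share cookies.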
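To illustrate the automatic handling, here is a sketch using requests.Session. Cookies stored in the session's jar are attached to every request prepared through that session. To stay offline, the cookie is placed in the jar by hand (on a live request the Set-Cookie header would fill it), and the request is only prepared, not sent. The cookie value is a placeholder.

```python
import requests

session = requests.Session()
# On a live request, the Set-Cookie response header would populate this jar.
session.cookies.set(
    "sessionid",
    "placeholder-session-id",
    domain="www.python-spider.com",
    path="/",
)

request = requests.Request(
    "POST",
    "https://www.python-spider.com/api/challenge6",
    data={"page": 1},
)
# prepare_request merges the session's cookies into the outgoing request.
prepared = session.prepare_request(request)
cookie_header = prepared.headers["Cookie"]
print(cookie_header)
```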

Here is the Python code:

    import json
    import time

    import requests
    import urllib3

    # verify=False is used below, so silence the InsecureRequestWarning.
    urllib3.disable_warnings()


    class Spider6:
        def get_cookies(self):
            # Cookie string copied from the browser.
            cookies = 'vaptchaNetway=cn; __jsl_clearance=1629437158.491|0|clD4VpfqhdaLBWywKWy%2FZyfi6d_e64c840a1ebefb2b5f8c551e84b10e073D; Hm_lvt_337e99a01a907a08d00bed4a1a52e35d=1632264338; _i=Z2N0eWZpbWpxe$; _v=WjJOMGVXWnBiV3B4ZSQ; no-alert=true; m=22d69b36a0db4c94d296e425bb14754d|1632394602000; sessionid=99bci8spz84801oemk33z2h3wgiist7c; Hm_lpvt_337e99a01a907a08d00bed4a1a52e35d=1632396991; sign=jaglcvymhi'
            # Split on the first "=" only, so values that contain "=" stay intact.
            return {i.split("=", 1)[0].strip(): i.split("=", 1)[1].strip() for i in cookies.split(";")}

        def get_url(self, url, page):
            headers = {
                'User-Agent': 'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 '
                              '(KHTML, like Gecko) Ubuntu Chromium/60.0.3112.113 Chrome/60.0.3112.113 Safari/537.36',
            }
            data = {"page": page}
            cookies = self.get_cookies()
            # First request: collect the cookies the server sets in its response.
            resp = requests.post(url, headers=headers, data=data, timeout=5, cookies=cookies, verify=False)
            for cookie in resp.cookies:
                cookies[cookie.name] = cookie.value
            # Second request: send the refreshed cookies back.
            resp = requests.post(url, headers=headers, data=data, timeout=5, cookies=cookies, verify=False)
            return resp.url, resp.status_code, resp.text

        def process_data(self, text, page):
            data_dict = json.loads(text, strict=False)
            num_list = [int(i["value"]) for i in data_dict["data"]]
            print("Sum of page {}:".format(page), sum(num_list))
            return sum(num_list)

        def start(self):
            url = "https://www.python-spider.com/api/challenge6"
            all_page_num_sum = []
            for page in range(1, 101):
                time.sleep(1)
                # Do not overwrite url with the response URL; only the body is needed.
                _, status_code, text = self.get_url(url, page)
                page_num = self.process_data(text, page)
                all_page_num_sum.append(page_num)
            print("Sum of all pages:", sum(all_page_num_sum))


    spider = Spider6()
    spider.start()
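The cookie-string parsing used in get_cookies can be pulled out into a small helper. This is only a sketch: parse_cookie_string is a hypothetical name and the sample string is made up. It splits each pair on the first = only, so values that themselves contain = are preserved, and it skips empty fragments such as the one left by a trailing semicolon.

```python
def parse_cookie_string(cookie_string):
    """Turn 'a=1; b=2' (as copied from a browser) into {'a': '1', 'b': '2'}."""
    cookies = {}
    for pair in cookie_string.split(";"):
        pair = pair.strip()
        if not pair or "=" not in pair:
            continue  # skip empty fragments and malformed pairs
        name, value = pair.split("=", 1)
        cookies[name.strip()] = value.strip()
    return cookies


sample = "sessionid=abc123; token=a=b==; no-alert=true;"
parsed = parse_cookie_string(sample)
print(parsed)
```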

###

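Challenge 7

Use a packet-capture tool to replay the request (a replay attack).

Steps:

1. Find the URL that returns the data.

2. Replay the request to that URL; it comes back with a 403.

3. Check the cookies: they are identical across the two requests, which suggests that this request depends on another request.

4. Find the preceding request, cityjson. Requesting it first and then requesting the data endpoint succeeds.

Here is the Python code: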

    import requests
    import urllib3

    # verify=False is used below, so silence the InsecureRequestWarning.
    urllib3.disable_warnings()

    url1 = "https://www.python-spider.com/cityjson"
    url2 = "https://www.python-spider.com/api/challenge7"
    cookies = "vaptchaNetway=cn; Hm_lvt_337e99a01a907a08d00bed4a1a52e35d=1628248083,1629106799; " \
              "sessionid=g1siko0evn5hmnn3pbgl0vaoqjx29cfo; Hm_lpvt_337e99a01a907a08d00bed4a1a52e35d=1629124377"
    # Split on the first "=" only, so values that contain "=" stay intact.
    cookies_dict = {cookie.split("=", 1)[0].strip(): cookie.split("=", 1)[1].strip() for cookie in cookies.split(";")}

    all_page_sum = []
    for i in range(1, 101):
        print("page: ", i)
        data = {"page": i}
        # Request this URL first; the data request below only succeeds afterwards.
        res = requests.get(url1, verify=False, cookies=cookies_dict)
        res2 = requests.post(url2, verify=False, cookies=cookies_dict, data=data)
        # print(res2.text)
        page_values = [int(item["value"]) for item in res2.json()["data"]]
        print(page_values)
        all_page_sum.append(sum(page_values))

    print("all_page_sum =", sum(all_page_sum))
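The same two-step flow can be sketched against a session-like object, which makes the request order explicit and easy to check. To keep the example runnable offline, FakeSession below is a stand-in that imitates only the get and post methods of requests.Session and returns made-up data. Its rule that every data request needs a fresh warm-up is an assumption for illustration, and fetch_page_sum is a hypothetical helper. In real use one would pass a requests.Session() instead.

```python
WARMUP_URL = "https://www.python-spider.com/cityjson"
DATA_URL = "https://www.python-spider.com/api/challenge7"


def fetch_page_sum(session, page):
    """Request the warm-up URL first, then the data URL, and sum the values."""
    session.get(WARMUP_URL)
    response = session.post(DATA_URL, data={"page": page})
    return sum(int(item["value"]) for item in response.json()["data"])


class FakeResponse:
    def __init__(self, payload):
        self._payload = payload

    def json(self):
        return self._payload


class FakeSession:
    """Offline stand-in: rejects the data URL unless the warm-up URL came first."""

    def __init__(self):
        self.warmed_up = False
        self.calls = []

    def get(self, url, **kwargs):
        self.calls.append(("GET", url))
        self.warmed_up = url == WARMUP_URL
        return FakeResponse({})

    def post(self, url, data=None, **kwargs):
        self.calls.append(("POST", url))
        if not self.warmed_up:
            raise RuntimeError("403: warm-up request missing")
        self.warmed_up = False  # assumed: each data request needs a fresh warm-up
        return FakeResponse({"data": [{"value": "1"}, {"value": "2"}, {"value": "3"}]})


fake = FakeSession()
total = sum(fetch_page_sum(fake, page) for page in range(1, 4))
print(total)
```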

#####

Challenge 8: console disabled

In this challenge, the page turns into a blank tab as soon as the console is opened.

How it works:

    <script>
    var x = document.createElement('div');
    var isOpening = false;
    Object.defineProperty(x, 'id', {
        get: function () {
            window.location='about:blank';
            window.threshold = 0;
        }
    });
    console.info(x);
    </script>

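The script creates a div element and assigns it to x.

It then defines a getter for the id property of x. While the console is closed, nothing reads id. Once the console is open, console.info(x) renders the element and reads its id, which triggers the getter and redirects the page to about:blank.

That is the whole mechanism.

How do we get around it?

Console-blocking tricks like this usually fall into two cases.

In the first case the page does not redirect, and simply opening the console manually is enough.

In the second case the page redirects: as soon as the console is opened, the page jumps somewhere else.

For the second case, the usual approach is to use the Map Local feature in Charles or the AutoResponder feature in Fiddler to serve a local copy in which the interfering JS is commented out.

I used Map Local in Charles.

Detailed instructions for these tools are easy to find online.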
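As a rough sketch of preparing the local copy that Map Local serves, the redirect can be neutralized in a saved copy of the script before the file is mapped. The helper name neutralize_redirect and the regular expression are assumptions tailored to the snippet shown in this challenge; other pages will need a different pattern.

```python
import re

# Matches assignments such as window.location='about:blank'; (pattern is an assumption).
REDIRECT_PATTERN = re.compile(r"window\.location\s*=\s*(['\"])about:blank\1\s*;?")


def neutralize_redirect(js_source):
    """Replace the about:blank redirect with a harmless JS comment."""
    return REDIRECT_PATTERN.sub("/* redirect removed */", js_source)


original = (
    "var x = document.createElement('div');"
    "Object.defineProperty(x, 'id', {get: function(){"
    "window.location='about:blank'; window.threshold = 0; }});"
    "console.info(x);"
)
patched = neutralize_redirect(original)
print(patched)
```

The patched text would then be saved to a local file and selected as the Map Local target for the original script URL.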

######

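Challenge 10

Use a packet-capture tool to replay the request (a replay attack).

Steps:

1. Find the URL that returns the data.

2. Replay the request to that URL; the data comes back directly, which shows that the request is independent, so we can issue it from Python.

3. Issue the request from Python; it fails.

4. Compare the request replayed by Charles with the one sent by the Python code: the order of the cookies differs.

5. Adjust the Python code so that the cookie order matches the replayed request, send the request again, and it succeeds.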
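One way to control the cookie order exactly, sketched here under the assumption that the server compares the raw Cookie header, is to build that header by hand from an ordered list of pairs and pass it in the headers rather than through the cookies argument. build_cookie_header is a hypothetical helper and the values are placeholders.

```python
def build_cookie_header(pairs):
    """Join (name, value) pairs into a Cookie header value, preserving their order."""
    return "; ".join("{}={}".format(name, value) for name, value in pairs)


# Order copied from the replayed request (placeholder values).
ordered_pairs = [
    ("vaptchaNetway", "cn"),
    ("sessionid", "placeholder-session-id"),
    ("sign", "placeholder-sign"),
]
headers = {"Cookie": build_cookie_header(ordered_pairs)}
print(headers["Cookie"])
```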

Here is the Python code:

    import requests
    import urllib3

    # verify=False is used below, so silence the InsecureRequestWarning.
    urllib3.disable_warnings()

    url1 = "https://www.python-spider.com/api/challenge10"
    cookies = "vaptchaNetway=cn; Hm_lvt_337e99a01a907a08d00bed4a1a52e35d=1628248083,1629106799; " \
              "sessionid=g1siko0evn5hmnn3pbgl0vaoqjx29cfo; Hm_lpvt_337e99a01a907a08d00bed4a1a52e35d=1629124377"
    headers = {
        "Host": "www.python-spider.com",
        "Connection": "keep-alive",
        "Content-Length": "0",
        "Origin": "https://www.python-spider.com",
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.108 Safari/537.36",
        "Accept": "*/*",
        "Referer": "https://www.python-spider.com/challenge/10",
        "Accept-Encoding": "gzip, deflate",
        "Accept-Language": "zh-CN,zh;q=0.9",
    }
    # Split on the first "=" only, so values that contain "=" stay intact.
    cookies_dict = {cookie.split("=", 1)[0].strip(): cookie.split("=", 1)[1].strip() for cookie in cookies.split(";")}

    all_page_sum = []
    for i in range(1, 101):
        print("page: ", i)
        data = {"page": i}
        session = requests.session()
        # The key step: replace the session's default headers so they are sent in this order.
        session.headers = headers
        res2 = session.post(url1, verify=False, cookies=cookies_dict, data=data)
        page_values = [int(item["value"]) for item in res2.json()["data"]]
        print(page_values)
        all_page_sum.append(sum(page_values))

    print("all_page_sum =", sum(all_page_sum))
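To check the header order that requests would use without touching the network, the request can be prepared and inspected. This sketch follows the same approach as the code above, assigning a plain dict to session.headers, and only repeats a few of the headers. It shows the order in the prepared request; it does not prove what the server actually checks.

```python
import requests

ordered_headers = {
    "Host": "www.python-spider.com",
    "Connection": "keep-alive",
    "Origin": "https://www.python-spider.com",
    "Accept": "*/*",
    "Referer": "https://www.python-spider.com/challenge/10",
}

session = requests.Session()
# Replace the default headers entirely so only our fields, in our order, are used.
session.headers = ordered_headers

request = requests.Request(
    "POST",
    "https://www.python-spider.com/api/challenge10",
    data={"page": 1},
)
prepared = session.prepare_request(request)
sent_order = list(prepared.headers.keys())
print(sent_order)
```

Headers that requests adds on its own for the form body, such as Content-Type, appear after the fields supplied here.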

######

