需求描述:

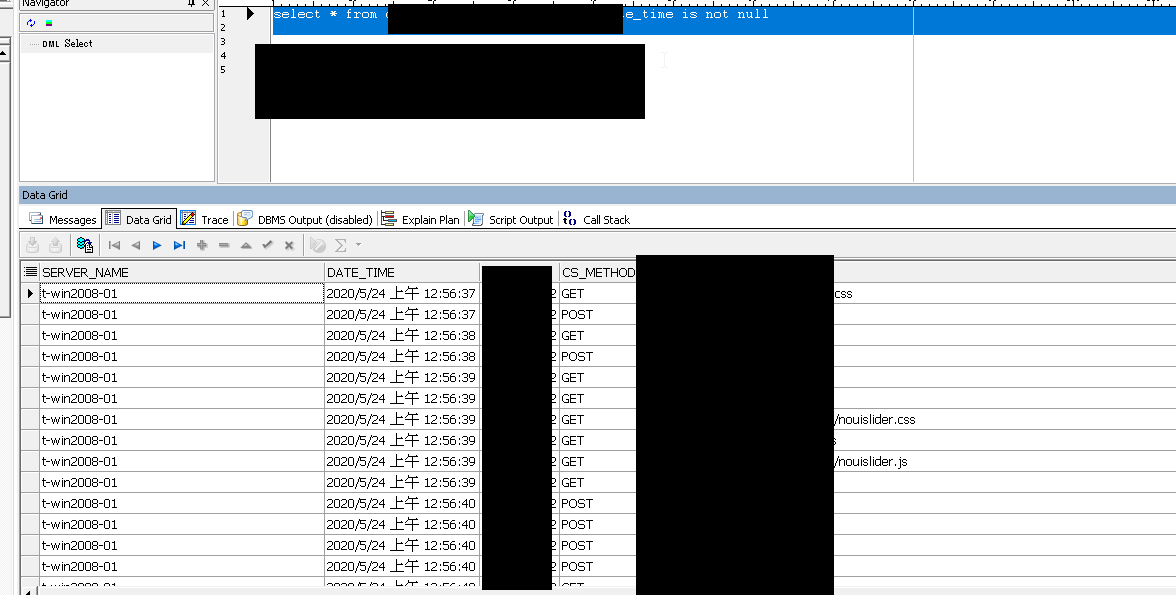

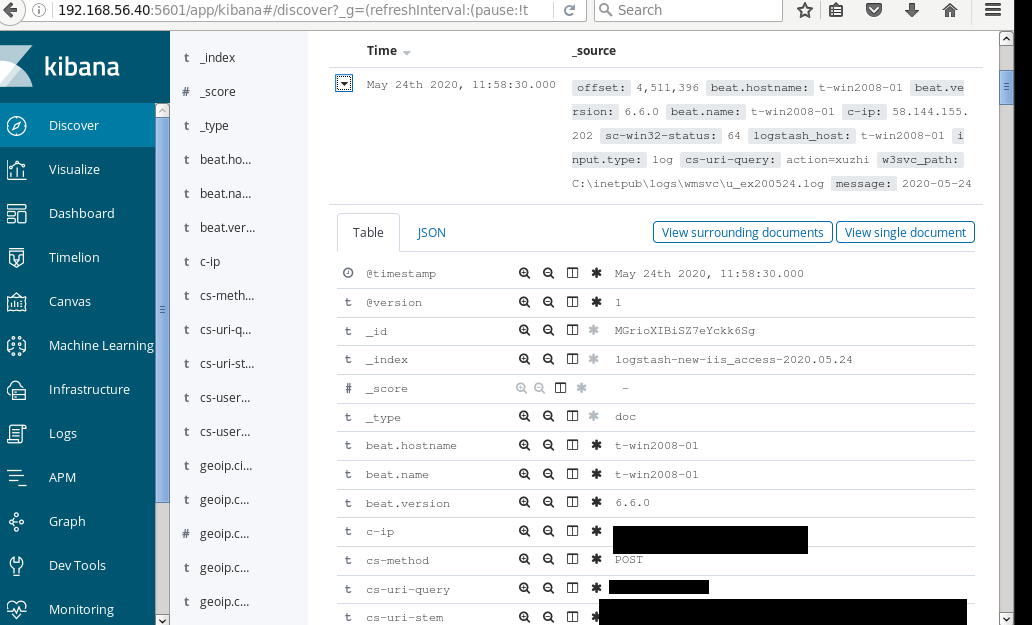

将filebeat上传的iis日志分别存放至ElasticSearch和oracle数据库

作业步骤:

1.准备logstash相关环境(tar.gz已配置完成)

2.安装logstash-output-jdbc插件,可在线安装或离线安装包

3.安装oracle jdbc驱动

4.配置logstash

5.启动logstash测试

【准备logstash环境】

/home/elastic/.bash_profile

# .bash_profile

ELASTIC_HOME=/u01/elasticsearch-6.6.0; export ELASTIC_HOME

KIBANA_HOME=/u01/kibana-6.6.0; export KIBANA_HOME

LOGSTASH_HOME=/u01/logstash-6.6.0; export LOGSTASH_HOME

JAVA_HOME=/usr/local/jdk1.8.0_202; export JAVA_HOME

CLASSPATH=$JAVA_HOME/lib:$JAVA_HOME/jre:$JAVA_HOME/jlib; export CLASSPATH

PATH=$ELASTIC_HOME/bin:$KIBANA_HOME/bin:$LOGSTASH_HOME/bin:$JAVA_HOME/bin:$PATH:$HOME/bin

export PATH

安装ruby相关组件

【安装logstash-output-jdbc插件 】

1.如安装logstash这台主机可以连接外面,可以直接安装jdbc插件

logstash-plugin install logstash-output-jdbc

2.在已安装插件的主机进行打包

#如存在代理则设定Proxy地址,因prepare-offline-pack 时会连接外部网络

export http_proxy=http://x.x.x.x:xx/

export https_proxy=http://x.x.x.x:xx/

#打包插件

[elastic@t-12c-01 logstash-6.6.0]$ logstash-plugin prepare-offline-pack --overwrite --output logstash-output-jdbc.zip logstash-output-jdbc

WARNING: A maven settings file already exist at /home/elastic/.m2/settings.xml, please review the content to make sure it include your proxies configuration.

Offline package created at: logstash-output-jdbc.zip

You can install it with this command `bin/logstash-plugin install file:///u01/logstash-6.6.0/logstash-output-jdbc.zip`

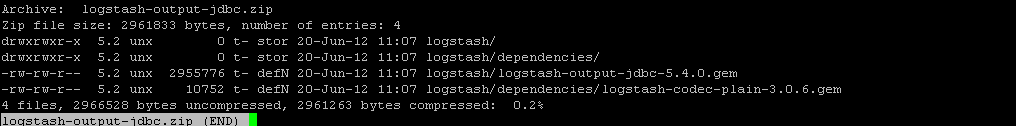

#扩展:查看打包的插件压缩包

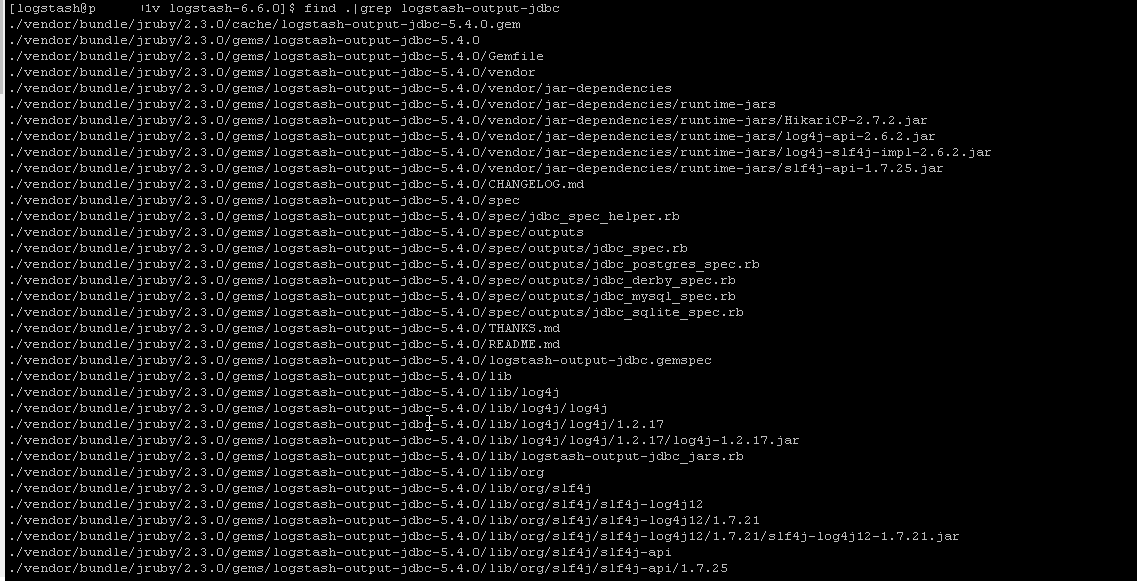

![]()

其实就是./vendor/bundle/jruby/2.3.0/cache/logstash-output-jdbc-5.4.0.gem 这个文件和一个依赖插件包logstash-codec-plain-3.0.6.gem

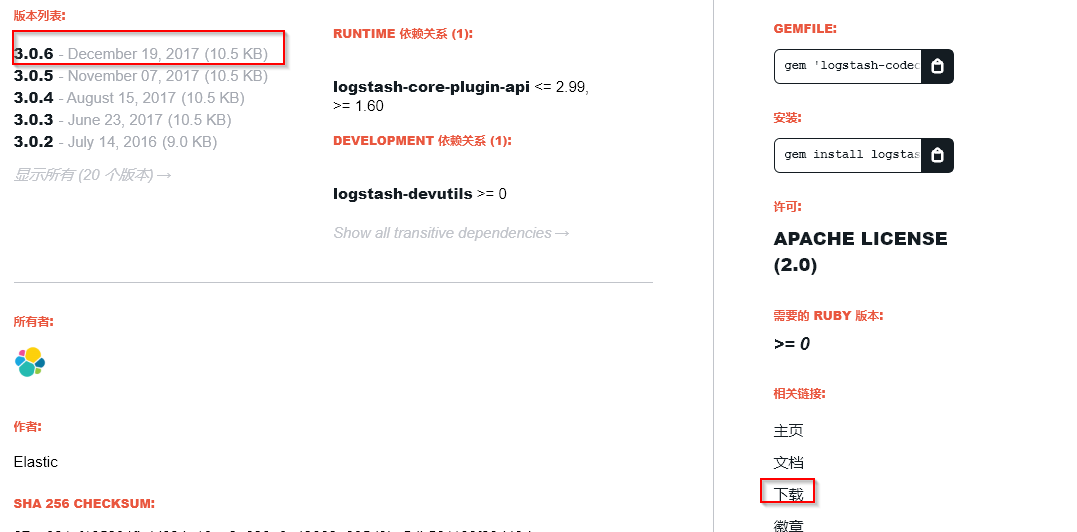

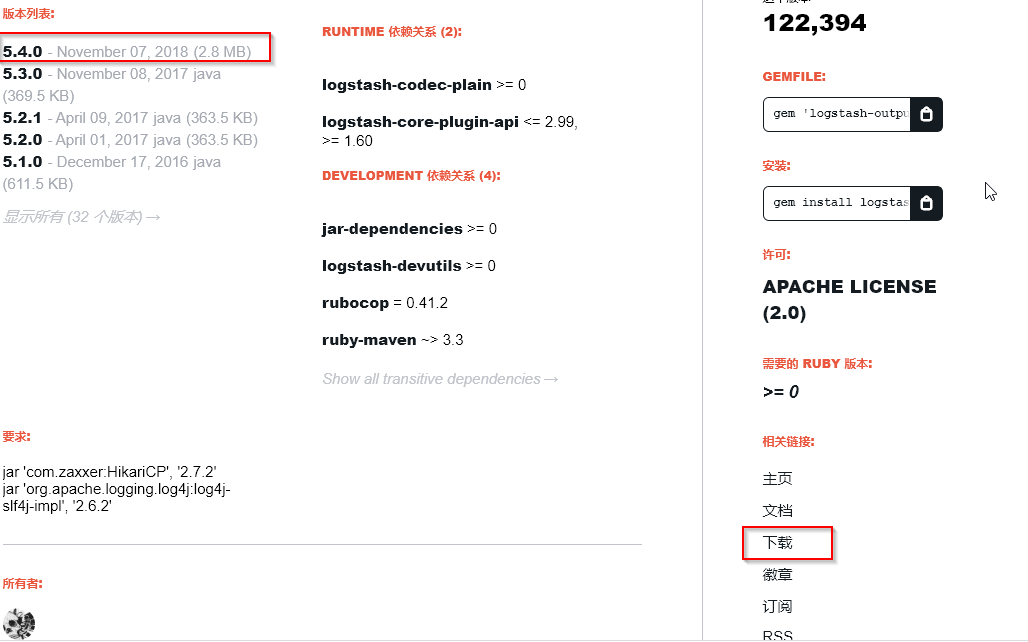

#网上下载者两个包后进行手动生成离线安装包

#创建mkdir -p logstash/dependencies 目录

logstash-output-jdbc-5.4.0.gem 放入logstash

logstash-codec-plain-3.0.6.gem 放入logstash/dependencies

[elastic@t-12c-01 soft]$ find logstash

logstash

logstash/dependencies

logstash/dependencies/logstash-codec-plain-3.0.6.gem

logstash/logstash-output-jdbc-5.4.0.gem

#压缩logstash文件夹,测试zip 需加-q -r后续才能安装成功,不然报 reason: The pack must contains at least one plugin, message: The pack must contains at least one plugin(因为需要子资料夹也要压缩)

zip -q -r logstash-output-jdbc.zip logstash

#扩展zip压缩

linux zip命令参数列表:

-a 将文件转成ASCII模式

-F 尝试修复损坏的压缩文件

-h 显示帮助界面

-m 将文件压缩之后,删除源文件

-n 特定字符串 不压缩具有特定字尾字符串的文件

-o 将压缩文件内的所有文件的最新变动时间设为压缩时候的时间

-q 安静模式,在压缩的时候不显示指令的执行过程

-r 将指定的目录下的所有子目录以及文件一起处理

-S 包含系统文件和隐含文件(S是大写)

-t 日期 把压缩文件的最后修改日期设为指定的日期,日期格式为mmddyyyy

[logstash@xxxxx logstash-6.6.0]$ bin/logstash-plugin install file:///data02/soft/logstash-output-jdbc.zip

Installing file: /data02/soft/logstash-output-jdbc.zip

[logstash@xxxxx logstash-6.6.0]$ bin/logstash-plugin list|grep jdbc

logstash-filter-jdbc_static

logstash-filter-jdbc_streaming

[logstash@xxxxx logstash-6.6.0]$ cat Gemfile|grep jdbc

gem "logstash-filter-jdbc_static"

gem "logstash-filter-jdbc_streaming"

gem "logstash-input-jdbc"

gem "logstash-output-jdbc", "= 5.4.0"

3.如本机无法连接外网,可自行下载源码包 ,此方案经过测试

失败,只是修改了Gemfile文件无实际安装

[elastic@t-12c-01 logstash-6.6.0]$ logstash-plugin list|grep jdbc

logstash-filter-jdbc_static

logstash-filter-jdbc_streaming

logstash-input-jdbc

#解压离线安装包

unzip logstash-output-jdbc-master.zip

#编辑logstash下的Gemfile文件,在其新增

安装插件的名称 离线安装包的绝对路径

gem "logstash-output-jdbc", :path => "/u01/soft/logstash-output-jdbc-master"

#安装插件

[elastic@t-12c-01 logstash-6.6.0]$ bin/logstash-plugin install --no-verify

Installing...

Installation successful

#查询已安装的jdbc插件

[elastic@t-12c-01 logstash-6.6.0]$ bin/logstash-plugin list|grep jdbc

logstash-filter-jdbc_static

logstash-filter-jdbc_streaming

logstash-input-jdbc

logstash-output-jdbc

【安装jdbc驱动】

下载jdbc驱动

https://www.oracle.com/database/technologies/jdbcdriver-ucp-downloads.html

将ojdbc6.jar放置于logstash目录下mkdir -p vendor/jar/jdbc

ls -l /u01/logstash-6.6.0/vendor/jar/jdbc/ojdbc6.jar

【配置logstash】

jdbc { connection_string => "jdbc:oracle:thin:dbuser/dbpassword@192.168.56.1:1521:SID"

statement => [ "INSERT INTO TAB(s_ip) VALUES(?)","s-ip"]

}

[elastic@t-12c-01 logstash-6.6.0]$ cat /u01/logstash-6.6.0/config/from_beat.conf

input {

beats {

port => 5044

}

}

filter {

grok {

# check that fields match your IIS log settings

match => ["message", "%{TIMESTAMP_ISO8601:log_timestamp} (%{IPORHOST:s-ip}|-) (%{WORD:cs-method}|-) %{NOTSPACE:cs-uri-stem} %{NOTSPACE:cs-uri-query} (%{NUMBER:s-port}|-) %{NOTSPACE:cs-username} (%{IPORHOST:c-ip}|-) %{NOTSPACE:cs-useragent} (%NUMBER:sc-status}|-) (%{NUMBER:sc-substatus}|-) (%{NUMBER:sc-win32-status}|-) (%{NUMBER:time-taken}|-)"]

}

date {

match => [ "log_timestamp", "YYYY-MM-dd HH:mm:ss" ]

timezone => "+00:00"

}

mutate {

add_field => { "logstash_host" => "%{[host][name]}"

"w3svc_path" => "%{[log][file][path]}"

}

}

geoip {

source => "c-ip"

target => "geoip"

database => "/u01/logstash-6.6.0/geolite2-city-mirror-master/GeoLite2-City_20191029/GeoLite2-City.mmdb"

add_field => [ "[geoip][coordinates]", "%{[geoip][longitude]}" ]

add_field => [ "[geoip][coordinates]", "%{[geoip][latitude]}" ]

}

mutate {

convert => [ "[geoip][coordinates]", "float" ]

}

}

output {

jdbc {

connection_string => "jdbc:oracle:thin:dbuser/dbpassword@192.168.56.1:1521:SID"

statement => [ "INSERT INTO TABE(SERVER_NAME,DATE_TIME,S_IP,CS_METHOD,CS_URI_STEM,CS_URI_QUERY,S_PORT,CS_USRNAME,C_IP,CS_USER_AGENT,SC_STATUS,SC_SUBSTATUS,SC_WIN32_STATUS,TIME_TAKEN,W3SVC_PATH) VALUES(?,TO_DATE(?,'YYYY-MM-DD HH24:MI:SS'),?,?,?,?,?,?,?,?,?,?,?,?,?)","logstash_host","log_timestamp","s-ip","cs-method","cs-uri-stem","cs-uri-query","s-port","cs-username","c-ip","cs-useragent","sc-status","sc-substatus","sc-win32-status","time-taken","w3svc_path"]

}

elasticsearch {

index => "logstash-new-iis_access-%{+YYYY.MM.dd}"

}

}

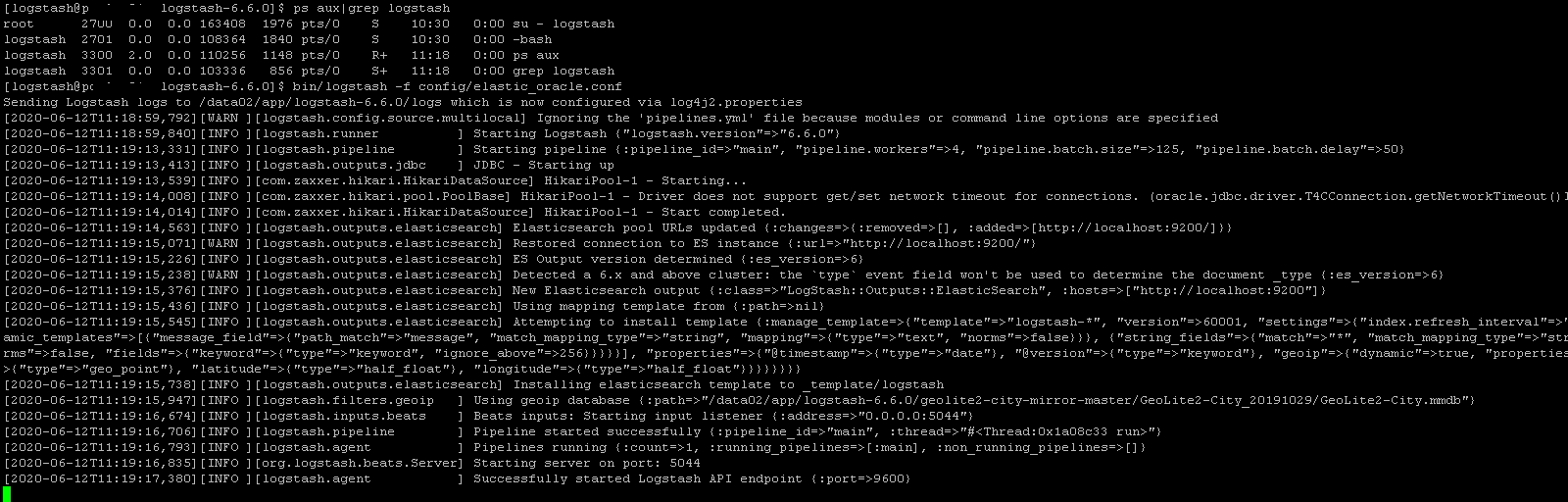

【测试logstash】

nohup logstash -f config/from_beat.conf &

Sending Logstash logs to /u01/logstash-6.6.0/logs which is now configured via log4j2.properties

[2020-06-11T12:00:37,196][WARN ][logstash.config.source.multilocal] Ignoring the 'pipelines.yml' file because modules or command line options are specified

[2020-06-11T12:00:37,247][INFO ][logstash.runner ] Starting Logstash {"logstash.version"=>"6.6.0"}

[2020-06-11T12:00:53,634][INFO ][logstash.pipeline ] Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>1, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50}

[2020-06-11T12:00:53,726][INFO ][logstash.outputs.jdbc ] JDBC - Starting up

[2020-06-11T12:00:53,840][INFO ][com.zaxxer.hikari.HikariDataSource] HikariPool-1 - Starting...

[2020-06-11T12:00:54,360][INFO ][com.zaxxer.hikari.pool.PoolBase] HikariPool-1 - Driver does not support get/set network timeout for connections. (oracle.jdbc.driver.T4CConnection.getNetworkTimeout()I)

[2020-06-11T12:00:54,378][INFO ][com.zaxxer.hikari.HikariDataSource] HikariPool-1 - Start completed.

[2020-06-11T12:00:55,579][INFO ][logstash.outputs.elasticsearch] Elasticsearch pool URLs updated {:changes=>{:removed=>[], :added=>[

http://localhost:9200/]}}

[2020-06-11T12:00:56,076][WARN ][logstash.outputs.elasticsearch] Restored connection to ES instance {:url=>"

http://localhost:9200/"}

[2020-06-11T12:00:56,215][INFO ][logstash.outputs.elasticsearch] ES Output version determined {:es_version=>6}

[2020-06-11T12:00:56,227][WARN ][logstash.outputs.elasticsearch] Detected a 6.x and above cluster: the `type` event field won't be used to determine the document _type {:es_version=>6}

[2020-06-11T12:00:56,309][INFO ][logstash.outputs.elasticsearch] New Elasticsearch output {:class=>"LogStash::Outputs::ElasticSearch", :hosts=>["

http://localhost:9200"]}

[2020-06-11T12:00:56,333][INFO ][logstash.outputs.elasticsearch] Using mapping template from {:path=>nil}

[2020-06-11T12:00:56,422][INFO ][logstash.outputs.elasticsearch] Attempting to install template {:manage_template=>{"template"=>"logstash-*", "version"=>60001, "settings"=>{"index.refresh_interval"=>"5s"}, "mappings"=>{"_default_"=>{"dynamic_templates"=>[{"message_field"=>{"path_match"=>"message", "match_mapping_type"=>"string", "mapping"=>{"type"=>"text", "norms"=>false}}}, {"string_fields"=>{"match"=>"*", "match_mapping_type"=>"string", "mapping"=>{"type"=>"text", "norms"=>false, "fields"=>{"keyword"=>{"type"=>"keyword", "ignore_above"=>256}}}}}], "properties"=>{"@timestamp"=>{"type"=>"date"}, "@version"=>{"type"=>"keyword"}, "geoip"=>{"dynamic"=>true, "properties"=>{"ip"=>{"type"=>"ip"}, "location"=>{"type"=>"geo_point"}, "latitude"=>{"type"=>"half_float"}, "longitude"=>{"type"=>"half_float"}}}}}}}}

[2020-06-11T12:00:56,903][INFO ][logstash.filters.geoip ] Using geoip database {:path=>"/u01/logstash-6.6.0/geolite2-city-mirror-master/GeoLite2-City_20191029/GeoLite2-City.mmdb"}

[2020-06-11T12:00:57,660][INFO ][logstash.inputs.beats ] Beats inputs: Starting input listener {:address=>"0.0.0.0:5044"}

[2020-06-11T12:00:57,708][INFO ][logstash.pipeline ] Pipeline started successfully {:pipeline_id=>"main", :thread=>"#<Thread:0x17f9f072 run>"}

[2020-06-11T12:00:57,863][INFO ][logstash.agent ] Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}

[2020-06-11T12:00:57,970][INFO ][org.logstash.beats.Server] Starting server on port: 5044

[2020-06-11T12:00:58,612][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}

浙公网安备 33010602011771号

浙公网安备 33010602011771号