Kubernetes Series 07: Pod Controllers in Detail

This article is part of the container technology learning series (see the series index).

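1. Pod Controllers

1.1 Introduction

A Pod controller is an intermediate layer for managing pods that keeps pod resources in the desired state. When a pod's containers fail, they are first restarted according to the pod's restart policy. If the pod itself is lost (for example, it is deleted or its node fails), the controller creates a new pod to replace it.

1.2 Types of Pod controllers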

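- ReplicationController (RC): ensures that a specified number of pod replicas are running at all times. If there are too many, the RC kills some; if there are too few, it creates more.

- ReplicaSet (RS): creates the specified number of pod replicas on the user's behalf and keeps the replica count at the desired state. It supports scaling up and down.

- Deployment (important): works on top of ReplicaSet and manages stateless applications. It is currently the best controller for that job. It supports rolling updates and rollbacks, and provides declarative configuration.

- DaemonSet: ensures that every node in the cluster (or every selected node) runs exactly one copy of a specific pod. It is typically used for system-level background tasks, such as the log collection agents of an ELK stack.

- Job: runs a task to completion and then exits. A pod that completes successfully is not restarted or recreated.

- CronJob: runs Jobs periodically on a schedule. Nothing has to keep running in the background between runs.

- StatefulSet: manages stateful applications.

This article mainly covers four of these controllers: ReplicaSet, Deployment, DaemonSet, and StatefulSet.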

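2. ReplicaSet

2.1 Understanding ReplicaSet

(1) What is a ReplicaSet?

ReplicaSet is the next-generation replication controller and an upgraded version of ReplicationController (RC). The only difference between a ReplicaSet and a ReplicationController is selector support:

- ReplicaSet supports the set-based selector requirements described in the labels user guide.
- ReplicationController only supports equality-based selector requirements.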
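The following minimal Python sketch shows how the two kinds of selector could be evaluated against a pod's labels. Equality-based requirements use matchLabels. Set-based requirements use matchExpressions with the operators In, NotIn, Exists and DoesNotExist. This is a simplified model for illustration only, not Kubernetes' own code (which is written in Go). The function name matches and the dict layout of the expressions are assumptions.

```python
from typing import Dict, List, Optional


def matches(labels: Dict[str, str],
            match_labels: Optional[Dict[str, str]] = None,
            match_expressions: Optional[List[dict]] = None) -> bool:
    """Simplified model of evaluating a label selector against pod labels.

    match_labels: equality-based requirements; every key must equal its value.
    match_expressions: set-based requirements using the operators
    In, NotIn, Exists and DoesNotExist; every requirement must hold.
    """
    for key, value in (match_labels or {}).items():
        if labels.get(key) != value:
            return False
    for expr in match_expressions or []:
        key, op = expr["key"], expr["operator"]
        if op not in ("In", "NotIn", "Exists", "DoesNotExist"):
            raise ValueError(f"unknown operator: {op}")
        values = set(expr.get("values", []))
        if op == "In" and labels.get(key) not in values:
            return False   # key missing or value not in the set
        if op == "NotIn" and key in labels and labels[key] in values:
            return False   # a missing key satisfies NotIn
        if op == "Exists" and key not in labels:
            return False
        if op == "DoesNotExist" and key in labels:
            return False
    return True


pod = {"app": "myapp", "release": "canary", "environment": "qa"}
# Equality-based: the kind of selector a ReplicationController supports
print(matches(pod, match_labels={"app": "myapp", "release": "canary"}))  # True
# Set-based: supported by ReplicaSet and the newer controllers
print(matches(pod, match_expressions=[
    {"key": "release", "operator": "In", "values": ["canary", "stable"]},
    {"key": "tier", "operator": "DoesNotExist"},
]))  # True
```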

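(2) How to use a ReplicaSet

Most kubectl commands that support ReplicationControllers also support ReplicaSets. The one exception is the rolling-update command; if you want rolling updates, use a Deployment instead.

Although ReplicaSets can be used on their own, they are mainly used by Deployments as the mechanism for creating, deleting, and updating pods. When you use a Deployment, you don't have to worry about the ReplicaSets it creates, because the Deployment manages them for you.

(3) When to use a ReplicaSet?

A ReplicaSet ensures that a specified number of pods are running. However, a Deployment is a higher-level concept that manages ReplicaSets and adds features such as pod updates. We therefore recommend using Deployments to manage ReplicaSets, unless you need custom update orchestration.

This means you may never need to manipulate ReplicaSet objects directly; use a Deployment instead. Deployments are covered in detail later.

2.2 Key fields of a ReplicaSet manifest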

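- apiVersion: apps/v1 (API version)

- kind: ReplicaSet (resource type)

- metadata: metadata

- spec: desired state

  - minReadySeconds: minimum number of seconds a newly created pod must be ready, without any of its containers crashing, before it is considered available

  - replicas: number of replicas; defaults to 1

  - selector: label selector

  - template: pod template (required)

    - metadata: metadata of the pod template

    - spec: desired state of the pods created from the template

- status: current state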

2.3 Demo: creating a simple ReplicaSet

(1) Write the YAML file and create the ReplicaSet

A simple ReplicaSet that runs 2 pods:

```
[root@master manifests]# vim rs-damo.yaml
```

```yaml
apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: myapp
  namespace: default
spec:
  replicas: 2
  selector:
    matchLabels:
      app: myapp
      release: canary
  template:
    metadata:
      name: myapp-pod
      labels:
        app: myapp
        release: canary
        environment: qa
    spec:
      containers:
      - name: myapp-container
        image: ikubernetes/myapp:v1
        ports:
        - name: http
          containerPort: 80
```

```
[root@master manifests]# kubectl create -f rs-damo.yaml
replicaset.apps/myapp created
```

(2) Verify

```
# List the ReplicaSet (rs)
[root@master manifests]# kubectl get rs
NAME DESIRED CURRENT READY AGE
myapp 2 2 2 23s

# List the pods
[root@master manifests]# kubectl get pods
NAME READY STATUS RESTARTS AGE
myapp-r4ss4 1/1 Running 0 25s
myapp-zjc5l 1/1 Running 0 26s

# Describe one pod: all labels from the template have been applied
[root@master manifests]# kubectl describe pod myapp-r4ss4
Name: myapp-r4ss4
Namespace: default
Priority: 0
PriorityClassName: <none>
Node: node2/192.168.130.105
Start Time: Thu, 06 Sep 2018 14:57:23 +0800
Labels: app=myapp
        environment=qa
        release=canary
... ...

# Test the service
[root@master manifests]# curl 10.244.2.13
Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>
```

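(3) How pods are maintained: remove extras, replace missing ones

① When a pod is deleted, the ReplicaSet immediately creates a new pod to replace it

```
[root@master manifests]# kubectl delete pods myapp-zjc5l
pod "myapp-k4j6h" deleted
[root@master ~]# kubectl get pods
NAME READY STATUS RESTARTS AGE
myapp-r4ss4 1/1 Running 0 33s
myapp-mdjvh 1/1 Running 0 10s
```

② If another pod happens to match the ReplicaSet's label selector, the ReplicaSet counts it as one of its replicas. It then deletes one of the matching pods to get back to the desired count. In the demo below, the pod that gets terminated is pod-test.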

```
# Start an arbitrary pod
[root@master manifests]# kubectl get pods --show-labels
NAME READY STATUS RESTARTS AGE LABELS
myapp-hxgbh 1/1 Running 0 7m app=myapp,environment=qa,release=canary
myapp-mdjvh 1/1 Running 0 6m app=myapp,environment=qa,release=canary
pod-test 1/1 Running 0 13s app=myapp,tier=frontend

# Add the label release=canary to pod-test
[root@master manifests]# kubectl label pods pod-test release=canary
pod/pod-test labeled

# One of the matching pods is terminated
[root@master manifests]# kubectl get pods --show-labels
NAME READY STATUS RESTARTS AGE LABELS
myapp-hxgbh 1/1 Running 0 8m app=myapp,environment=qa,release=canary
myapp-mdjvh 1/1 Running 0 7m app=myapp,environment=qa,release=canary
pod-test 0/1 Terminating 0 1m app=myapp,release=canary,tier=frontend
```
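The "remove extras, replace missing" behavior above can be modeled as a reconciliation loop. The following Python sketch is a toy model under these assumptions:

- The class and method names (ReplicaSetModel, reconcile) are hypothetical.
- Only equality-based matchLabels are supported.
- Surplus pods are removed in list order. The real controller ranks deletion candidates (for example, preferring pods that are not yet ready) and only adopts pods that have no other owner.

```python
from dataclasses import dataclass
from itertools import count
from typing import Dict, List


@dataclass
class Pod:
    name: str
    labels: Dict[str, str]


class ReplicaSetModel:
    """Toy model of a ReplicaSet: delete surplus pods, create missing ones."""

    def __init__(self, name: str, replicas: int,
                 selector: Dict[str, str], template_labels: Dict[str, str]):
        self.name = name
        self.replicas = replicas
        self.selector = selector                # equality-based matchLabels only
        self.template_labels = template_labels
        self._suffix = count(1)                 # hypothetical pod-name suffixes

    def selects(self, pod: Pod) -> bool:
        return all(pod.labels.get(k) == v for k, v in self.selector.items())

    def reconcile(self, pods: List[Pod]) -> List[Pod]:
        """Return the pod list after one reconciliation pass."""
        owned = [p for p in pods if self.selects(p)]
        if len(owned) > self.replicas:
            # Too many: delete the surplus (here: the last matches in list order).
            surplus = {id(p) for p in owned[self.replicas:]}
            return [p for p in pods if id(p) not in surplus]
        # Too few: create the missing pods from the template.
        created = [Pod(f"{self.name}-{next(self._suffix)}", dict(self.template_labels))
                   for _ in range(self.replicas - len(owned))]
        return pods + created


rs = ReplicaSetModel("myapp", 2,
                     selector={"app": "myapp", "release": "canary"},
                     template_labels={"app": "myapp", "release": "canary",
                                      "environment": "qa"})
pods = rs.reconcile([])                  # creates myapp-1 and myapp-2
pods = rs.reconcile(pods[1:])            # myapp-1 was deleted: myapp-3 is created
test = Pod("pod-test", {"app": "myapp", "tier": "frontend"})
pods = rs.reconcile(pods + [test])       # pod-test does not match yet: no change
test.labels["release"] = "canary"        # like "kubectl label pods pod-test release=canary"
pods = rs.reconcile(pods)                # 3 matching pods: one is deleted
print([p.name for p in pods])            # ['myapp-2', 'myapp-3']
```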

2.4 Scaling a ReplicaSet up/down

(1) Use kubectl edit to change the ReplicaSet's replica count to 5. The ReplicaSet scales up on the fly.

```
[root@master manifests]# kubectl edit rs myapp
... ...
spec:
  replicas: 5
... ...
replicaset.extensions/myapp edited
```

(2) Verify

```
[root@master manifests]# kubectl get pods
NAME READY STATUS RESTARTS AGE
client 0/1 Error 0 1d
myapp-bck7l 1/1 Running 0 16s
myapp-h8cqr 1/1 Running 0 16s
myapp-hfb72 1/1 Running 0 6m
myapp-r4ss4 1/1 Running 0 9m
myapp-vvpgf 1/1 Running 0 16s
```

2.5 Updating a ReplicaSet's image in place

(1) Use kubectl edit to change the container image in the ReplicaSet's pod template to v2

```
[root@master manifests]# kubectl edit rs myapp
... ...
    spec:
      containers:
      - image: ikubernetes/myapp:v2
... ...
replicaset.extensions/myapp edited
```

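(2) Query the ReplicaSet: the change has been saved

```
[root@master manifests]# kubectl get rs -o wide
NAME DESIRED CURRENT READY AGE CONTAINERS IMAGES SELECTOR
myapp 5 5 5 11m myapp-container ikubernetes/myapp:v2 app=myapp,release=canary
```

(3) However, this change does not upgrade the pods that are already running

A pod runs the new version only after it is deleted and the ReplicaSet creates a replacement from the updated template.

This can be used for a manual gray (canary) release: each pod you delete is automatically replaced by a pod running the new version.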

```
# A pod that has not been deleted still serves v1
[root@master manifests]# curl 10.244.2.15
Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

# Delete one pod; the newly created pod serves v2
[root@master manifests]# kubectl delete pod myapp-bck7l
pod "myapp-bck7l" deleted
[root@master ~]# kubectl get pods -o wide
NAME READY STATUS RESTARTS AGE IP NODE
myapp-hxgbh 1/1 Running 0 20m 10.244.1.17 node1
[root@master manifests]# curl 10.244.1.17
Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>
```
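Why does editing the template not touch running pods? A ReplicaSet uses its pod template only when it creates a pod, so existing pods keep the spec they were created with. The following Python sketch models just that point. PodTemplate and ReplicaSetSketch are hypothetical names, and a real pod carries far more than an image.

```python
import copy
from dataclasses import dataclass, field
from typing import List


@dataclass
class PodTemplate:
    image: str


@dataclass
class ReplicaSetSketch:
    """Toy model: each pod is a snapshot (copy) of the template at creation time."""
    template: PodTemplate
    pods: List[PodTemplate] = field(default_factory=list)

    def create_pod(self) -> None:
        self.pods.append(copy.deepcopy(self.template))


rs = ReplicaSetSketch(PodTemplate("ikubernetes/myapp:v1"))
rs.create_pod()
rs.create_pod()

rs.template.image = "ikubernetes/myapp:v2"   # like editing the rs template
print([p.image for p in rs.pods])            # running pods are still v1

rs.pods.pop(0)                               # like deleting one pod...
rs.create_pod()                              # ...whose replacement uses v2
print([p.image for p in rs.pods])
# ['ikubernetes/myapp:v1', 'ikubernetes/myapp:v2']
```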

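3. Deployment

3.1 Deployment overview

(1) Introduction

A Deployment provides a declarative way to define Pods and ReplicaSets. It replaces the older ReplicationController as a more convenient way to manage applications.

You only need to describe the desired target state in a Deployment, and the Deployment controller moves the actual state of the Pods and ReplicaSets to that target. You can define a new Deployment to create ReplicaSets, or delete an existing Deployment and create a new one to replace it.

Note: you should not manage the ReplicaSets created by a Deployment by hand. Doing so takes over the responsibility of the Deployment controller.

(2) Typical use cases include

- Create a Deployment to roll out a ReplicaSet. The ReplicaSet creates the pods in the background. Check the rollout status to see whether it succeeded or failed.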

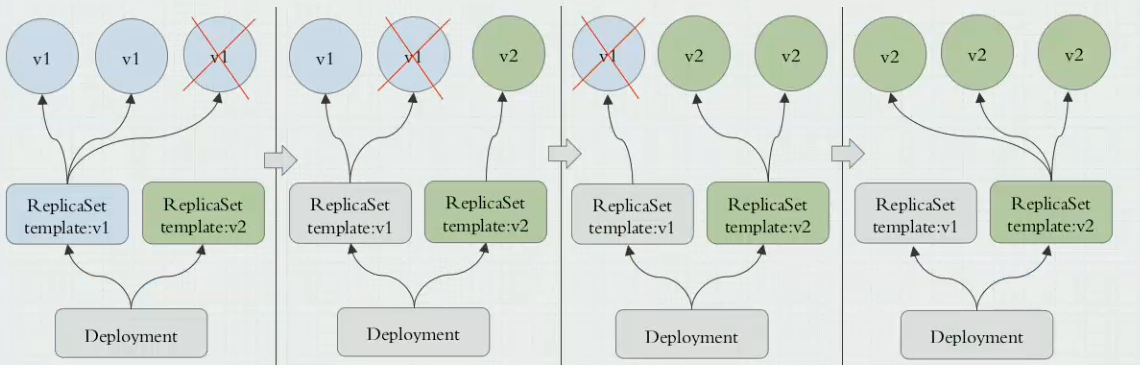

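- Declare the new state of the pods by updating the Deployment's PodTemplateSpec. This creates a new ReplicaSet, and the Deployment moves pods from the old ReplicaSet to the new one at a controlled rate.

- Rolling updates and rollbacks: if the current state is not stable, roll back to an earlier Deployment revision. Each rollback updates the Deployment's revision.

- Scaling: scale the Deployment up to handle more load.

- Pausing and resuming: pause the Deployment to apply multiple fixes to its PodTemplateSpec, then resume it to start a new rollout.

- Use the Deployment's status to tell whether a rollout is stuck.

- Clean up old ReplicaSets that are no longer needed.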

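3.2 Key fields of a Deployment manifest

- apiVersion: apps/v1 (API version)

- kind: Deployment (resource type)

- metadata: metadata

- spec: desired state

  - --------------fields also found in ReplicaSet---------------

  - minReadySeconds: minimum number of seconds a newly created pod must be ready, without any of its containers crashing, before it is considered available

  - replicas: number of replicas; defaults to 1

  - selector: label selector

  - template: pod template (required)

    - metadata: metadata of the pod template

    - spec: desired state of the pods created from the template

  - --------------fields specific to Deployment---------------

  - strategy: the deployment strategy used to replace existing pods with new ones

    - Recreate: delete all existing pods before creating the new ones

    - RollingUpdate: replace pods gradually (the default)

      - maxSurge: maximum number of pods that can be created above the desired number of pods during an update; an absolute number or a percentage, e.g. 5 or 10% (default 25%)

      - maxUnavailable: maximum number of pods that can be unavailable during an update; an absolute number or a percentage (default 25%)

  - revisionHistoryLimit: number of old ReplicaSets to keep to allow rollback; defaults to 10

  - paused: indicates that the Deployment is paused; the Deployment controller does not process it while it is paused

  - progressDeadlineSeconds: maximum time in seconds for the Deployment to make progress before it is considered failed (default 600)

- status: current state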
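maxSurge and maxUnavailable accept either an absolute number or a percentage of replicas. According to the Kubernetes documentation, both default to 25%. Percentages are rounded up for maxSurge and down for maxUnavailable.

The following Python sketch turns these two settings into the range of pod counts allowed during a rollout. The function names are hypothetical. The fallback when both values resolve to 0 reflects our understanding of the Deployment controller, not documented API behavior.

```python
from typing import Tuple, Union

IntOrPercent = Union[int, str]


def to_absolute(value: IntOrPercent, replicas: int, round_up: bool) -> int:
    """Turn an int or a percentage string such as "25%" into a pod count."""
    if isinstance(value, int):
        return value
    if not value.endswith("%"):
        raise ValueError(f"expected an int or a percentage, got {value!r}")
    scaled = int(value[:-1]) * replicas
    # Integer ceil/floor division avoids floating-point surprises.
    return -(-scaled // 100) if round_up else scaled // 100


def rollout_bounds(replicas: int,
                   max_surge: IntOrPercent = "25%",
                   max_unavailable: IntOrPercent = "25%") -> Tuple[int, int]:
    """Return (minimum available pods, maximum total pods) during a rollout."""
    surge = to_absolute(max_surge, replicas, round_up=True)
    unavailable = to_absolute(max_unavailable, replicas, round_up=False)
    if surge == 0 and unavailable == 0:
        unavailable = 1   # assumption: mirrors the controller's fallback
    return replicas - unavailable, replicas + surge


print(rollout_bounds(5))          # defaults 25%/25% -> (4, 7)
print(rollout_bounds(5, 1, 0))    # maxSurge 1, maxUnavailable 0 -> (5, 6)
```

For the 5-replica Deployment used later in this article, the defaults allow between 4 and 7 pods during an update. With maxSurge: 1 and maxUnavailable: 0, all 5 pods stay available and at most 6 exist at once.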

3.3 Demo: creating a simple Deployment

(1) Create a simple Deployment that starts 2 pods

```
[root@master manifests]# vim deploy-damo.yaml
```

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: myapp-deploy
  namespace: default
spec:
  replicas: 2
  selector:
    matchLabels:
      app: myapp
      release: canary
  template:
    metadata:
      labels:
        app: myapp
        release: canary
    spec:
      containers:
      - name: myapp
        image: ikubernetes/myapp:v1
        ports:
        - name: http
          containerPort: 80
```

```
[root@master manifests]# kubectl apply -f deploy-damo.yaml
deployment.apps/myapp-deploy configured
```

Note: apply creates or updates resources declaratively. It is similar to create, but you can run it repeatedly against the same file to apply later changes. create fails if the resource already exists.

(2) Verify

```
# Query the Deployment
[root@master manifests]# kubectl get deploy
NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE
myapp-deploy 2 2 2 2 14s

# Query the ReplicaSet: the Deployment creates a ReplicaSet first
[root@master manifests]# kubectl get rs
NAME DESIRED CURRENT READY AGE
myapp-deploy-69b47bc96d 2 2 2 28s

# Query the pods: the ReplicaSet then creates the pods
[root@master manifests]# kubectl get pods
NAME READY STATUS RESTARTS AGE
myapp-deploy-69b47bc96d-bm8zc 1/1 Running 0 18s
myapp-deploy-69b47bc96d-pjr5v 1/1 Running 0 18s
```

3.4 Scaling a Deployment up/down

There are two ways to do this.

(1) Method 1: edit the YAML file directly and change the replica count to 3

```
[root@master manifests]# vim deploy-damo.yaml
... ...
spec:
  replicas: 3
... ...
[root@master manifests]# kubectl apply -f deploy-damo.yaml
deployment.apps/myapp-deploy configured
```

Verify: there are now 3 pods

```
[root@master manifests]# kubectl get pods
NAME READY STATUS RESTARTS AGE
myapp-deploy-69b47bc96d-bcdnq 1/1 Running 0 25s
myapp-deploy-69b47bc96d-bm8zc 1/1 Running 0 2m
myapp-deploy-69b47bc96d-pjr5v 1/1 Running 0 2m
```

(2) Method 2: scale with a kubectl patch command

Unlike method 1, this doesn't require editing the YAML file, which is convenient for quick tests. However, the JSON patch syntax is fiddly and easy to get wrong, especially when lists are involved.

```
[root@master manifests]# kubectl patch deployment myapp-deploy -p '{"spec":{"replicas":5}}'
deployment.extensions/myapp-deploy patched
```

Verify: there are now 5 pods

```
[root@master ~]# kubectl get pods
NAME READY STATUS RESTARTS AGE
myapp-deploy-67f6f6b4dc-2756p 1/1 Running 0 26s
myapp-deploy-67f6f6b4dc-2lkwr 1/1 Running 0 26s
myapp-deploy-67f6f6b4dc-knttd 1/1 Running 0 21m
myapp-deploy-67f6f6b4dc-ms7t2 1/1 Running 0 21m
myapp-deploy-67f6f6b4dc-vl2th 1/1 Running 0 21m
```

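3.5 Upgrading a Deployment in place

(1) Edit deploy-damo.yaml directly, then apply it again with kubectl apply -f deploy-damo.yaml

```
[root@master manifests]# vim deploy-damo.yaml
... ...
    spec:
      containers:
      - name: myapp
        image: ikubernetes/myapp:v2
... ...
```

(2) Watch the upgrade as it happens

```
[root@master ~]# kubectl get pods -w
```

The update is a rolling one. One old pod is stopped and one new (upgraded) pod is started, then the next old pod is stopped, and so on.

(3) Verify: access the service; the upgrade succeeded

```
[root@master ~]# kubectl get pods -o wide
NAME READY STATUS RESTARTS AGE IP NODE
myapp-deploy-67f6f6b4dc-6lv66 1/1 Running 0 2m 10.244.1.75 node1
[root@master ~]# curl 10.244.1.75
Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>
```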

3.6 Changing the Deployment update strategy

(1) Method 1: edit the YAML file

```
[root@master manifests]# vim deploy-damo.yaml
... ...
  strategy:
    rollingUpdate:
      maxSurge: 1         # at most 1 pod above the desired count, i.e. one new pod at a time
      maxUnavailable: 0   # no pod may be unavailable during the update
... ...
```

(2) Method 2: change the update strategy with a patch

```
[root@master manifests]# kubectl patch deployment myapp-deploy -p '{"spec":{"strategy":{"rollingUpdate":{"maxSurge":1,"maxUnavailable":0}}}}'
deployment.extensions/myapp-deploy patched
```

(3) Verify: check the Deployment details

```
[root@master manifests]# kubectl describe deployment myapp-deploy
... ...
RollingUpdateStrategy: 0 max unavailable, 1 max surge
... ...
```
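With maxSurge: 1 and maxUnavailable: 0, the Deployment must bring up one new pod before it can remove an old one. The following Python sketch simulates that sequence for 5 replicas. It is a simplified model, not the controller's algorithm: it assumes every new pod becomes Ready instantly and ignores minReadySeconds and pausing. The function name simulate_rollout is hypothetical.

```python
from typing import List, Tuple


def simulate_rollout(replicas: int, max_surge: int,
                     max_unavailable: int) -> List[Tuple[int, int]]:
    """Return (old pods, new pods) after each step of a simplified rollout.

    Assumptions: every new pod is Ready immediately, and each step first
    scales the new ReplicaSet up as far as maxSurge allows, then scales the
    old one down as far as maxUnavailable allows.
    """
    if max_surge == 0 and max_unavailable == 0:
        raise ValueError("maxSurge and maxUnavailable cannot both be 0")
    old, new = replicas, 0
    steps = [(old, new)]
    while new < replicas or old > 0:
        # Scale up: total pods may not exceed replicas + maxSurge.
        room = replicas + max_surge - (old + new)
        new += max(0, min(room, replicas - new))
        # Scale down: available pods may not drop below replicas - maxUnavailable.
        removable = (old + new) - (replicas - max_unavailable)
        old -= max(0, min(removable, old))
        steps.append((old, new))
    return steps


for old, new in simulate_rollout(replicas=5, max_surge=1, max_unavailable=0):
    print(f"old (v2) pods: {old}, new (v3) pods: {new}")
```

Each step trades exactly one old pod for one new pod. This matches the one-at-a-time progress reported by kubectl rollout status in the demo below.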

(4) Upgrade to v3

① Canary release: update one pod and pause immediately. Once the new version has proven to run correctly, continue the rollout.

```
[root@master manifests]# kubectl set image deployment myapp-deploy myapp=ikubernetes/myapp:v3 && kubectl rollout pause deployment myapp-deploy
deployment.extensions/myapp-deploy image updated   # one pod updated
deployment.extensions/myapp-deploy paused          # update paused
```

② Once the new version runs correctly, resume the rollout to continue the update

```
[root@master manifests]# kubectl rollout resume deployment myapp-deploy
deployment.extensions/myapp-deploy resumed
```

③ You can monitor the process throughout

```
# Print the rollout progress
[root@master ~]# kubectl rollout status deployment myapp-deploy
Waiting for deployment "myapp-deploy" rollout to finish: 1 out of 5 new replicas have been updated...
Waiting for deployment spec update to be observed...
Waiting for deployment spec update to be observed...
Waiting for deployment "myapp-deploy" rollout to finish: 1 out of 5 new replicas have been updated...
Waiting for deployment "myapp-deploy" rollout to finish: 1 out of 5 new replicas have been updated...
Waiting for deployment "myapp-deploy" rollout to finish: 2 out of 5 new replicas have been updated...
Waiting for deployment "myapp-deploy" rollout to finish: 2 out of 5 new replicas have been updated...

# The update can also be followed with get
[root@master ~]# kubectl get pods -w
```

④ Verify: access the service of any pod; the upgrade succeeded

```
[root@master ~]# kubectl get pods -o wide
NAME READY STATUS RESTARTS AGE IP NODE
myapp-deploy-6bdcd6755d-2bnsl 1/1 Running 0 1m 10.244.1.77 node1
[root@master ~]# curl 10.244.1.77
Hello MyApp | Version: v3 | <a href="hostname.html">Pod Name</a>
```

3.7 Rolling back a Deployment

(1) Commands

Show the revision history:

```
$ kubectl rollout history deployment deployment_name
```

Roll back with undo. --to-revision= selects the revision to roll back to; without it, kubectl rolls back to the previous revision.

```
$ kubectl rollout undo deployment deployment_name --to-revision=N
```

(2) Demo

```
# Show the revision history
[root@master manifests]# kubectl rollout history deployment myapp-deploy
deployments "myapp-deploy"
REVISION CHANGE-CAUSE
1 <none>
2 <none>
3 <none>

# Roll back to revision 1
[root@master manifests]# kubectl rollout undo deployment myapp-deploy --to-revision=1
deployment.extensions/myapp-deploy
[root@master manifests]# kubectl rollout history deployment myapp-deploy
deployments "myapp-deploy"
REVISION CHANGE-CAUSE
2 <none>
3 <none>
4 <none>
```
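Rolling back to revision 1 did not add a fourth entry to the list. Instead, revision 1 disappeared and revision 4 appeared. A rollback re-uses the old pod template (and its ReplicaSet) and gives it the next revision number.

The following Python sketch models only this numbering. RolloutHistory is a hypothetical class, and it does not model revisionHistoryLimit or change-cause annotations.

```python
from typing import Dict, Optional


class RolloutHistory:
    """Toy model of Deployment revisions; a "template" is just an image string."""

    def __init__(self) -> None:
        self.revisions: Dict[int, str] = {}

    def apply(self, template: str) -> None:
        latest = max(self.revisions, default=0)
        if latest and self.revisions[latest] == template:
            return  # template unchanged: no new revision
        # A template that already exists is moved to the new revision number
        # instead of being recorded twice.
        for rev in [r for r, t in self.revisions.items() if t == template]:
            del self.revisions[rev]
        self.revisions[latest + 1] = template

    def undo(self, to_revision: Optional[int] = None) -> None:
        latest = max(self.revisions)
        if to_revision is None:  # default: the previous revision
            to_revision = max(r for r in self.revisions if r != latest)
        self.apply(self.revisions[to_revision])


h = RolloutHistory()
for image in ("ikubernetes/myapp:v1", "ikubernetes/myapp:v2", "ikubernetes/myapp:v3"):
    h.apply(image)
h.undo(to_revision=1)
print(sorted(h.revisions))   # [2, 3, 4]
print(h.revisions[4])        # ikubernetes/myapp:v1
```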

(3) Verify: the Deployment is back on v1 (the v1 ReplicaSet now owns all 5 pods)

```
[root@master manifests]# kubectl get rs -o wide
NAME DESIRED CURRENT READY AGE CONTAINERS IMAGES SELECTOR
myapp-deploy-67f6f6b4dc 0 0 0 18h myapp ikubernetes/myapp:v2 app=myapp,pod-template-hash=2392926087,release=canary
myapp-deploy-69b47bc96d 5 5 5 18h myapp ikubernetes/myapp:v1 app=myapp,pod-template-hash=2560367528,release=canary
myapp-deploy-6bdcd6755d 0 0 0 10m myapp ikubernetes/myapp:v3 app=myapp,pod-template-hash=2687823118,release=canary
```

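4. DaemonSet

4.1 DaemonSet overview

(1) Introduction

A DaemonSet ensures that one copy of a pod runs on every node (or on every node matched by its node selector). It is commonly used to deploy cluster-wide log collection, monitoring, and other system management applications.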
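Unlike a ReplicaSet, a DaemonSet has no replicas field: the desired count follows from the set of eligible nodes. The following Python sketch computes, per node, how many daemon pods to add or remove. It is a simplified model under these assumptions:

- daemonset_plan is a hypothetical helper.
- Node eligibility is reduced to an equality-based node selector (as set through spec.template.spec.nodeSelector).
- Taints, tolerations, and resource checks are ignored.

```python
from typing import Dict, Optional


def daemonset_plan(nodes: Dict[str, Dict[str, str]],
                   running: Dict[str, int],
                   node_selector: Optional[Dict[str, str]] = None) -> Dict[str, int]:
    """Per node, how many daemon pods to create (+) or delete (-).

    nodes maps node name -> node labels; running maps node name -> number of
    daemon pods already on it. Taints, tolerations and resources are ignored.
    """
    selector = node_selector or {}
    plan: Dict[str, int] = {}
    for name, labels in nodes.items():
        eligible = all(labels.get(k) == v for k, v in selector.items())
        delta = (1 if eligible else 0) - running.get(name, 0)
        if delta:
            plan[name] = delta
    return plan


nodes = {"node1": {"logging": "on"}, "node2": {}, "node3": {"logging": "on"}}
print(daemonset_plan(nodes, running={"node1": 1}))
# {'node2': 1, 'node3': 1}: every node without a pod gets one
print(daemonset_plan(nodes, running={"node1": 1, "node2": 1},
                     node_selector={"logging": "on"}))
# {'node2': -1, 'node3': 1}: only nodes matching the selector keep a pod
```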

(2) Typical applications include

- Log collection, such as fluentd and logstash

- System monitoring, such as Prometheus Node Exporter, collectd, New Relic agent, and Ganglia gmond

- System programs, such as kube-proxy, kube-dns, glusterd, and ceph

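4.2 Key fields of a DaemonSet manifest

- apiVersion: apps/v1 (API version)

- kind: DaemonSet (resource type)

- metadata: metadata

- spec: desired state

  - --------------fields also found in ReplicaSet---------------

  - minReadySeconds: minimum number of seconds a newly created pod must be ready, without any of its containers crashing, before it is considered available

  - selector: label selector

  - template: pod template (required)

    - metadata: metadata of the pod template

    - spec: desired state of the pods created from the template

  - --------------fields specific to DaemonSet---------------

  - revisionHistoryLimit: number of old revisions (ControllerRevisions) to keep to allow rollback; defaults to 10

  - updateStrategy: update strategy used to replace existing DaemonSet pods with new ones (RollingUpdate or OnDelete)

- status: current state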

4.3 Demo: creating a simple DaemonSet

(1) Create a simple DaemonSet whose pods run only a filebeat log collection service in the background

```
[root@master manifests]# vim ds-demo.yaml
```

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: filebeat-ds
  namespace: default
spec:
  selector:
    matchLabels:
      app: filebeat
      release: stable
  template:
    metadata:
      labels:
        app: filebeat
        release: stable
    spec:
      containers:
      - name: filebeat
        image: ikubernetes/filebeat:5.6.5-alpine
        env:
        - name: REDIS_HOST
          value: redis.default.svc.cluster.local
        - name: REDIS_LOG_LEVEL
          value: info
```

```
[root@master manifests]# kubectl apply -f ds-demo.yaml
daemonset.apps/myapp-ds created
```

(2) Verify

```
[root@master ~]# kubectl get ds
NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE
filebeat-ds 2 2 2 2 2 <none> 6m
[root@master ~]# kubectl get pods
NAME READY STATUS RESTARTS AGE
filebeat-ds-r25hh 1/1 Running 0 4m
filebeat-ds-vvntb 1/1 Running 0 4m
[root@master ~]# kubectl exec -it filebeat-ds-r25hh -- /bin/sh
/ # ps aux
PID USER TIME COMMAND
1 root 0:00 /usr/local/bin/filebeat -e -c /etc/filebeat/filebeat.yml
```

4.4 Updating a DaemonSet in place

(1) Use the kubectl set image command to update the pod image

```
[root@master ~]# kubectl set image daemonsets filebeat-ds filebeat=ikubernetes/filebeat:5.6.6-alpine
daemonset.extensions/filebeat-ds image updated
```

(2) Verify: the upgrade succeeded

```
[root@master ~]# kubectl get ds -o wide
NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE CONTAINERS IMAGES SELECTOR
filebeat-ds 2 2 2 2 2 <none> 7m filebeat ikubernetes/filebeat:5.6.6-alpine app=filebeat,release=stable
```

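5. StatefulSet

5.1 Understanding StatefulSet

(1) Introduction to StatefulSet

StatefulSet is designed for stateful services, whereas Deployments and ReplicaSets are designed for stateless ones. Its use cases include:

- Stable persistent storage: after a pod is rescheduled, it can still access the same persistent data. This is implemented with PVCs.

- Stable network identity: after a pod is rescheduled, its PodName and HostName stay the same. This is implemented with a Headless Service (a Service without a Cluster IP).

- Ordered deployment and scaling: pods are ordered, and deployment or scale-up follows the defined order (from 0 to N-1). Before the next pod is started, all previous pods must be Running and Ready. The StatefulSet controller enforces this ordering itself under the default OrderedReady pod management policy.

- Ordered scale-down and deletion (from N-1 to 0).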
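The naming and ordering rules above can be expressed in a few lines of Python. This sketch assumes the default OrderedReady pod management policy and uses the names from the demo later in this section (StatefulSet myapp, claim template myappdata). The helper functions are hypothetical.

PVC names follow the pattern template name + "-" + pod name. This is why a pod that comes back with the same ordinal finds the same claim.

```python
from typing import List


def pod_name(sts: str, ordinal: int) -> str:
    """Stable pod name: <StatefulSet name>-<ordinal>."""
    return f"{sts}-{ordinal}"


def pvc_name(claim_template: str, sts: str, ordinal: int) -> str:
    """PVC from a volumeClaimTemplate: <template name>-<pod name>."""
    return f"{claim_template}-{pod_name(sts, ordinal)}"


def scaling_order(sts: str, current: int, desired: int) -> List[str]:
    """Pods created (scale up, ascending) or deleted (scale down, descending).

    Under the default OrderedReady policy each step waits until the previous
    pod is Running and Ready (scale up) or fully terminated (scale down).
    """
    if desired >= current:
        return [pod_name(sts, i) for i in range(current, desired)]
    return [pod_name(sts, i) for i in range(current - 1, desired - 1, -1)]


print(scaling_order("myapp", 0, 3))       # ['myapp-0', 'myapp-1', 'myapp-2']
print(scaling_order("myapp", 3, 5))       # ['myapp-3', 'myapp-4']
print(scaling_order("myapp", 5, 2))       # ['myapp-4', 'myapp-3', 'myapp-2']
print(pvc_name("myappdata", "myapp", 0))  # myappdata-myapp-0
```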

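(2) Three required components

The use cases above imply that a StatefulSet consists of the following parts:

- A Headless Service, which defines the network identity (DNS domain)

- The StatefulSet controller, which defines the application itself

- volumeClaimTemplates, the storage templates from which a PersistentVolumeClaim is created for each pod

5.2 Creating pods with a StatefulSet

5.2.1 Prepare the PVs

See the article on PV and PVC for details. Create 5 PVs. This requires an NFS server, reachable here under the hostname nfs.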

```
[root@master volume]# vim pv-demo.yaml
```

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv001
  labels:
    name: pv001
spec:
  nfs:
    path: /data/volumes/v1
    server: nfs
  accessModes: ["ReadWriteMany","ReadWriteOnce"]
  capacity:
    storage: 5Gi
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv002
  labels:
    name: pv002
spec:
  nfs:
    path: /data/volumes/v2
    server: nfs
  accessModes: ["ReadWriteOnce"]
  capacity:
    storage: 5Gi
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv003
  labels:
    name: pv003
spec:
  nfs:
    path: /data/volumes/v3
    server: nfs
  accessModes: ["ReadWriteMany","ReadWriteOnce"]
  capacity:
    storage: 5Gi
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv004
  labels:
    name: pv004
spec:
  nfs:
    path: /data/volumes/v4
    server: nfs
  accessModes: ["ReadWriteMany","ReadWriteOnce"]
  capacity:
    storage: 10Gi
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv005
  labels:
    name: pv005
spec:
  nfs:
    path: /data/volumes/v5
    server: nfs
  accessModes: ["ReadWriteMany","ReadWriteOnce"]
  capacity:
    storage: 15Gi
```

```
[root@master volume]# kubectl get pv
NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE
pv001 5Gi RWO,RWX Retain Available 3s
pv002 5Gi RWO Retain Available 3s
pv003 5Gi RWO,RWX Retain Available 3s
pv004 10Gi RWO,RWX Retain Available 3s
pv005 15Gi RWO,RWX Retain Available 3s
```

5.2.2 Write a manifest that creates pods with a StatefulSet, and apply it

```
[root@master pod_controller]# vim statefulset-demo.yaml
```

```yaml
# Headless Service
apiVersion: v1
kind: Service
metadata:
  name: myapp
  labels:
    app: myapp
spec:
  ports:
  - port: 80
    name: web
  clusterIP: None
  selector:
    app: myapp-pod
---
# StatefulSet
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: myapp
spec:
  serviceName: myapp
  replicas: 3
  selector:
    matchLabels:
      app: myapp-pod
  template:
    metadata:
      labels:
        app: myapp-pod
    spec:
      containers:
      - name: myapp
        image: ikubernetes/myapp:v1
        ports:
        - containerPort: 80
          name: web
        volumeMounts:
        - name: myappdata
          mountPath: /usr/share/nginx/html
  # volumeClaimTemplates
  volumeClaimTemplates:
  - metadata:
      name: myappdata
    spec:
      accessModes: [ "ReadWriteOnce" ]
      resources:
        requests:
          storage: 5Gi
```

```
[root@master pod_controller]# kubectl apply -f statefulset-demo.yaml
service/myapp created
statefulset.apps/myapp created
```

5.2.3 Query and verify the pods

```
# The headless Service was created
[root@master pod_controller]# kubectl get svc
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 173d
myapp ClusterIP None <none> 80/TCP 3s

# The StatefulSet was created
[root@master pod_controller]# kubectl get sts
NAME DESIRED CURRENT AGE
myapp 3 3 6s

# The PVCs have been bound to PVs
[root@master pod_controller]# kubectl get pvc
NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE
myappdata-myapp-0 Bound pv002 5Gi RWO 9s
myappdata-myapp-1 Bound pv001 5Gi RWO,RWX 8s
myappdata-myapp-2 Bound pv003 5Gi RWO,RWX 6s

# Three of the PVs are now bound
[root@master pod_controller]# kubectl get pv
NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE
pv001 5Gi RWO,RWX Retain Bound default/myappdata-myapp-1 21s
pv002 5Gi RWO Retain Bound default/myappdata-myapp-0 21s
pv003 5Gi RWO,RWX Retain Bound default/myappdata-myapp-2 21s
pv004 10Gi RWO,RWX Retain Available 21s
pv005 15Gi RWO,RWX Retain Available 21s

# Three pods were started
[root@master pod_controller]# kubectl get pods -o wide
NAME READY STATUS RESTARTS AGE IP NODE
myapp-0 1/1 Running 0 16s 10.244.1.127 node1
myapp-1 1/1 Running 0 15s 10.244.2.124 node2
myapp-2 1/1 Running 0 13s 10.244.1.128 node1
```

5.3 Scaling a StatefulSet up and down

This can be done with either the scale command or a patch.

5.3.1 Scaling up

Scale from the original 3 pods to 5.

```
# ① With the scale command
[root@master ~]# kubectl scale sts myapp --replicas=5
statefulset.apps/myapp scaled

# ② Or with a patch
[root@master ~]# kubectl patch sts myapp -p '{"spec":{"replicas":5}}'
statefulset.apps/myapp patched

[root@master pod_controller]# kubectl get pods
NAME READY STATUS RESTARTS AGE
myapp-0 1/1 Running 0 11m
myapp-1 1/1 Running 0 11m
myapp-2 1/1 Running 0 11m
myapp-3 1/1 Running 0 9s
myapp-4 1/1 Running 0 7s

[root@master pod_controller]# kubectl get pvc
NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE
myappdata-myapp-0 Bound pv002 5Gi RWO 11m
myappdata-myapp-1 Bound pv001 5Gi RWO,RWX 11m
myappdata-myapp-2 Bound pv003 5Gi RWO,RWX 11m
myappdata-myapp-3 Bound pv004 10Gi RWO,RWX 13s
myappdata-myapp-4 Bound pv005 15Gi RWO,RWX 11s

[root@master pod_controller]# kubectl get pv
NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE
pv001 5Gi RWO,RWX Retain Bound default/myappdata-myapp-1 17m
pv002 5Gi RWO Retain Bound default/myappdata-myapp-0 17m
pv003 5Gi RWO,RWX Retain Bound default/myappdata-myapp-2 17m
pv004 10Gi RWO,RWX Retain Bound default/myappdata-myapp-3 17m
pv005 15Gi RWO,RWX Retain Bound default/myappdata-myapp-4 17m
```

5.3.2 Scaling down

Scale from 5 pods down to 2.

```
# ① With the scale command
[root@master ~]# kubectl scale sts myapp --replicas=2
statefulset.apps/myapp scaled

# ② Or with a patch
[root@master ~]# kubectl patch sts myapp -p '{"spec":{"replicas":2}}'
statefulset.apps/myapp patched

[root@master pod_controller]# kubectl get pods
NAME READY STATUS RESTARTS AGE
myapp-0 1/1 Running 0 15m
myapp-1 1/1 Running 0 15m

# The PVs and PVCs are not deleted, so the data persists
[root@master pod_controller]# kubectl get pvc
NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE
myappdata-myapp-0 Bound pv002 5Gi RWO 15m
myappdata-myapp-1 Bound pv001 5Gi RWO,RWX 15m
myappdata-myapp-2 Bound pv003 5Gi RWO,RWX 15m
myappdata-myapp-3 Bound pv004 10Gi RWO,RWX 4m
myappdata-myapp-4 Bound pv005 15Gi RWO,RWX 4m
```

5.4 Upgrading versions

5.4.1 Update configuration: partitioned updates with rollingUpdate.partition

```
[root@master ~]# kubectl explain sts.spec.updateStrategy.rollingUpdate.partition
KIND:     StatefulSet
VERSION:  apps/v1

FIELD:    partition <integer>

DESCRIPTION:
     Partition indicates the ordinal at which the StatefulSet should be
     partitioned. Default value is 0.
```

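Explanation: suppose partition is set to n. When the pod template changes, only pods whose ordinal is greater than or equal to n are updated; pods with an ordinal below n keep the old version. The ordinal is the pod's index number (myapp-0, myapp-1, ...), not a container. The default is 0, which means every pod is updated.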
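The partition rule can be checked against the demo below with a short Python sketch. It assumes the RollingUpdate strategy, where the pods in scope are replaced starting from the highest ordinal. update_order and images_after_update are hypothetical helpers, and the sketch ignores the readiness waits between pods.

```python
from typing import Dict, List


def update_order(sts: str, replicas: int, partition: int) -> List[str]:
    """Pods in scope of a RollingUpdate with this partition, highest ordinal first."""
    return [f"{sts}-{i}" for i in range(replicas - 1, partition - 1, -1)]


def images_after_update(sts: str, replicas: int, partition: int,
                        old_image: str, new_image: str) -> Dict[str, str]:
    """Image each pod runs once the partitioned update has finished.

    Pods with ordinal >= partition get the new template; pods below the
    partition keep (and, if recreated, are restored to) the old one.
    """
    return {f"{sts}-{i}": new_image if i >= partition else old_image
            for i in range(replicas)}


print(update_order("myapp", 5, 4))   # ['myapp-4']
print(update_order("myapp", 5, 0))   # ['myapp-4', 'myapp-3', 'myapp-2', 'myapp-1', 'myapp-0']
images = images_after_update("myapp", 5, 4,
                             "ikubernetes/myapp:v1", "ikubernetes/myapp:v2")
print(images["myapp-4"], images["myapp-3"])  # ikubernetes/myapp:v2 ikubernetes/myapp:v1
```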

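You can upgrade either by editing the YAML manifest or by patching.

5.4.2 Canary upgrade

(1) Upgrade one pod first

First scale the StatefulSet back to 5 pods.

① Patch partition to 4 so that only pods with an ordinal of 4 or higher are updated. Here that is just the fifth pod, myapp-4. If the new version has problems, roll it back immediately; if not, upgrade all pods.

```
[root@master ~]# kubectl patch sts myapp -p '{"spec":{"updateStrategy":{"rollingUpdate":{"partition":4}}}}'
statefulset.apps/myapp patched

# Verify
[root@master ~]# kubectl describe sts myapp
Name: myapp
Namespace: default
... ...
Replicas: 5 desired | 5 total
Update Strategy: RollingUpdate
  Partition: 4
... ...
```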

② Upgrade

```
[root@master ~]# kubectl set image sts/myapp myapp=ikubernetes/myapp:v2
statefulset.apps/myapp image updated

# The pod image has been changed to v2
[root@master ~]# kubectl get sts -o wide
NAME DESIRED CURRENT AGE CONTAINERS IMAGES
myapp 5 5 21h myapp ikubernetes/myapp:v2
```

③ Verify

```
# The fifth pod (myapp-4) has been upgraded
[root@master ~]# kubectl get pods myapp-4 -o yaml |grep image
  - image: ikubernetes/myapp:v2

# The first four pods are still on v1
[root@master ~]# kubectl get pods myapp-3 -o yaml |grep image
  - image: ikubernetes/myapp:v1
[root@master ~]# kubectl get pods myapp-0 -o yaml |grep image
  - image: ikubernetes/myapp:v1
```

(2) Upgrade the remaining pods

```
# Simply set partition to 0
[root@master ~]# kubectl patch sts myapp -p '{"spec":{"updateStrategy":{"rollingUpdate":{"partition":0}}}}'
statefulset.apps/myapp patched

# Verify: all pods have been upgraded
[root@master ~]# kubectl get pods myapp-0 -o yaml |grep image
  - image: ikubernetes/myapp:v2
```


Author: along阿龙

Source: http://www.cnblogs.com/along21/


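Copyright: the copyright of this article is shared by the author and cnblogs. Reposting is welcome, but without the author's consent you must keep this statement and give a link to the original article in a prominent position on the page. Otherwise, the author reserves the right to pursue legal liability.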


Zhejiang Public Security Bureau Internet Registration No. 33010602011771
