ORB Feature Extraction Algorithm (Practice, Part 1)

Oriented FAST and Rotated BRIEF (ORB)

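Object detection is one of the most challenging problems in computer vision. It means recognizing specific objects in an image and determining where those objects are located. For example, if we were detecting cars in the image below, we would need to find not only how many cars the image contains but also where each of them is.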

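To perform this kind of object-based image analysis, we will use ORB. ORB is a very fast algorithm that creates feature vectors from detected keypoints. It has several useful properties, such as invariance to rotation, to illumination changes, and to noise.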
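An ORB feature vector is a binary string built from intensity comparisons inside a patch around the keypoint (the BRIEF idea). The plain-Python sketch below illustrates that idea under stated assumptions. The patch is a made-up 8x8 list of lists, and the pixel pairs come from a hypothetical random_pairs helper with a fixed seed. Original BRIEF samples its pairs randomly, while ORB uses a learned set of pairs that it rotates by the keypoint's orientation. Neither detail is reproduced here. Each bit only records which of two pixels is darker, so adding the same brightness offset to every pixel leaves the descriptor unchanged. This is one intuition behind ORB's robustness to illumination changes.

```python
import random


def random_pairs(patch_size, n_bits, seed=0):
    """Hypothetical sampling pattern: n_bits random pixel pairs inside a square patch.
    (ORB uses a learned, rotated pattern instead; this is only an illustration.)"""
    rng = random.Random(seed)

    def point():
        return (rng.randrange(patch_size), rng.randrange(patch_size))

    return [(point(), point()) for _ in range(n_bits)]


def brief_descriptor(patch, pairs):
    """BRIEF-style binary test: bit = 1 if the first pixel of a pair is darker than the second."""
    return [1 if patch[y1][x1] < patch[y2][x2] else 0
            for (y1, x1), (y2, x2) in pairs]


# Made-up 8x8 patch (a gradient with some texture) and a uniformly brighter copy of it
patch = [[x * 20 + (x * y) % 7 for x in range(8)] for y in range(8)]
brighter = [[value + 50 for value in row] for row in patch]

pairs = random_pairs(8, 32)
d1 = brief_descriptor(patch, pairs)
d2 = brief_descriptor(brighter, pairs)
print(len(d1), d1 == d2)  # 32 True
```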

In this series we will verify these invariance properties by using ORB to detect keypoints in a face image and match them.

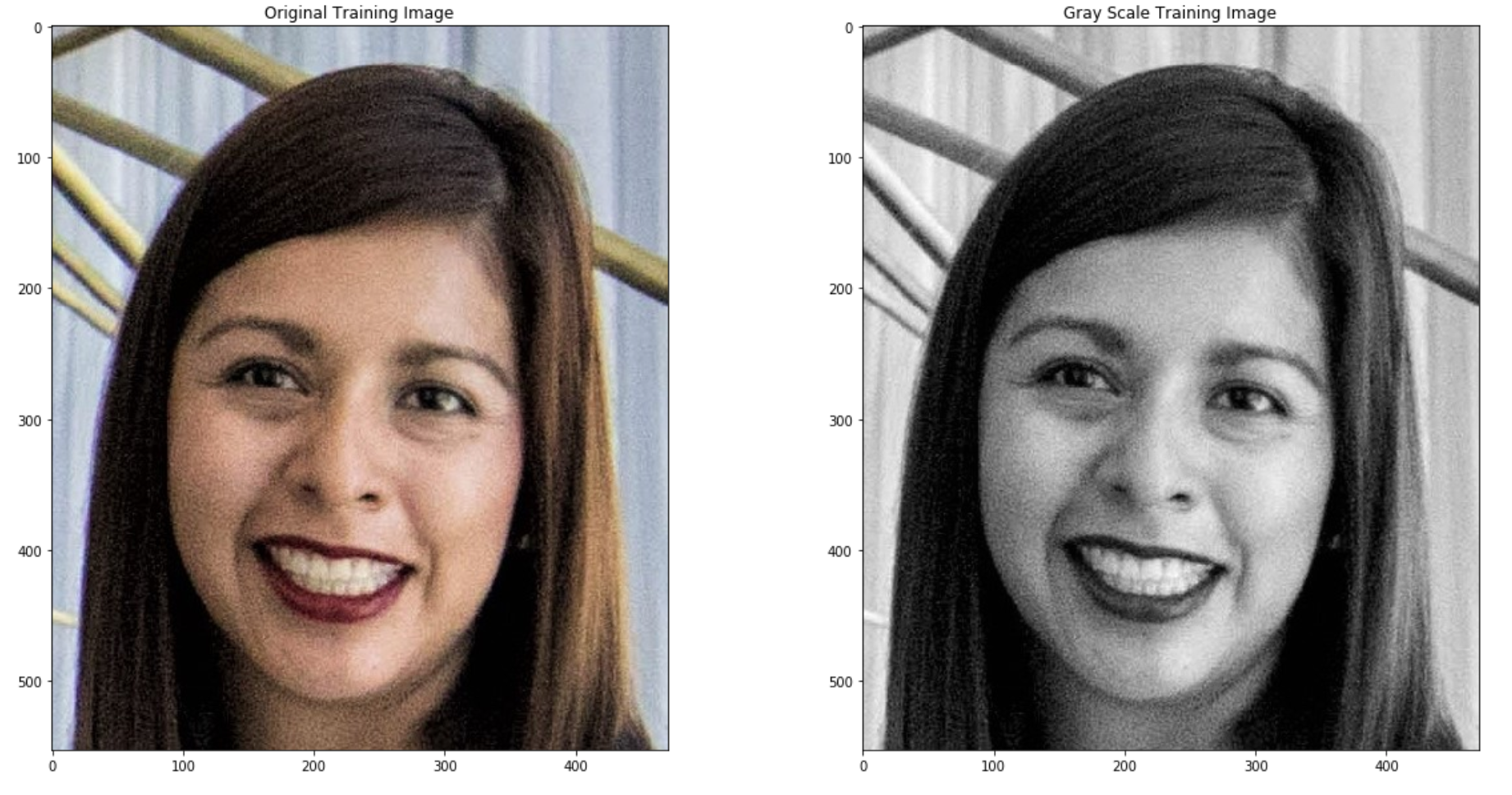

Loading the Training Image

In this workflow, the image containing the object we want to find is called the training image. The training image used here is a picture of a woman's face.

Locating Keypoints

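We will use OpenCV's ORB implementation to locate keypoints and create the corresponding ORB descriptors. The parameters of the ORB algorithm are set with the cv2.ORB_create() function. Its parameters and their default values are: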

```python
cv2.ORB_create(nfeatures=500,
               scaleFactor=1.2,
               nlevels=8,
               edgeThreshold=31,
               firstLevel=0,
               WTA_K=2,
               scoreType=cv2.ORB_HARRIS_SCORE,
               patchSize=31,
               fastThreshold=20)
```

The parameters are explained below:

- nfeatures (int): Determines the maximum number of features (keypoints) to locate.
- scaleFactor (float): Pyramid decimation ratio. It must be greater than 1. ORB uses an image pyramid to find features, so you must provide both the scale factor between consecutive layers of the pyramid and the number of levels the pyramid has. A scaleFactor of 2 gives the classical pyramid, in which each level has 4x fewer pixels than the previous one. A large scale factor reduces the number of features found.
- nlevels (int): The number of pyramid levels. The smallest level will have a linear size equal to input_image_linear_size/pow(scaleFactor, nlevels).
- edgeThreshold (int): The size of the border where features are not detected. Keypoints have a specific pixel size, so the edges of the image must be excluded from the search. edgeThreshold should be equal to or greater than patchSize.
- firstLevel (int): Determines which level is treated as the first level of the pyramid. It should be 0 in the current implementation. Usually, the pyramid level with a scale of 1 is considered the first level.
- WTA_K (int): The number of random pixels used to produce each element of the oriented BRIEF descriptor. The possible values are 2, 3, and 4, with 2 as the default. For example, a value of 3 means that three random pixels are chosen at a time and their brightness is compared. The index of the brightest pixel is returned. Since there are 3 pixels, the returned index is 0, 1, or 2.
- scoreType (int): Either HARRIS_SCORE or FAST_SCORE (cv2.ORB_HARRIS_SCORE or cv2.ORB_FAST_SCORE in Python). The default, HARRIS_SCORE, means that the Harris corner measure is used to rank features, and the score is used to retain only the best ones. FAST_SCORE produces slightly less stable keypoints but is a little faster to compute.
- patchSize (int): Size of the patch used by the oriented BRIEF descriptor. On smaller pyramid layers, the image area covered by a feature is correspondingly larger.
- fastThreshold (int): The threshold of the FAST corner detector that ORB uses to find candidate keypoints.
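The arithmetic behind scaleFactor and WTA_K can be checked with a small plain-Python sketch. The helper names are hypothetical. The sketch assumes that pyramid level i is the input downscaled by scaleFactor ** i (firstLevel = 0), so with 0-based indexing its last entry is divided by scaleFactor ** (nlevels - 1). It also picks the WTA_K pixels at random on every call purely for illustration, whereas a real descriptor uses a fixed sampling pattern.

```python
import math
import random


def pyramid_level_sizes(width, height, scale_factor=1.2, nlevels=8):
    """Approximate (width, height) of each pyramid level.
    Assumption: level i is the input downscaled by scale_factor ** i (firstLevel = 0)."""
    return [(round(width / scale_factor ** i), round(height / scale_factor ** i))
            for i in range(nlevels)]


def bits_per_element(wta_k):
    """Bits needed to store an index in 0..wta_k-1: 1 for WTA_K = 2, 2 for WTA_K = 3 or 4."""
    return math.ceil(math.log2(wta_k))


def wta_k_element(patch, wta_k, rng):
    """One descriptor element: the index of the brightest of wta_k pixels.
    Illustration only: the pixels are picked at random here, not from a fixed pattern."""
    pixels = [patch[rng.randrange(len(patch))][rng.randrange(len(patch[0]))]
              for _ in range(wta_k)]
    return max(range(wta_k), key=lambda i: pixels[i])


sizes = pyramid_level_sizes(640, 480, scale_factor=2.0, nlevels=4)
print(sizes)  # [(640, 480), (320, 240), (160, 120), (80, 60)]
print([bits_per_element(k) for k in (2, 3, 4)])  # [1, 2, 2]

patch = [[(7 * x + 3 * y) % 256 for x in range(8)] for y in range(8)]
rng = random.Random(0)
print([wta_k_element(patch, 3, rng) for _ in range(8)])  # each value is 0, 1 or 2
```

With scaleFactor = 2, each level has half the width and half the height of the previous one, so it has 4x fewer pixels. The 2-bit output for WTA_K = 3 or 4 is the reason the matcher needs a different Hamming variant later on.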

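As you can see, cv2.ORB_create() supports many parameters. The first two, nfeatures and scaleFactor, are probably the ones you will change most often. The others can usually be left at their default values and still give good results.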

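In the code below, we call ORB_create() with the maximum number of keypoints (nfeatures) set to 200 and the scale factor (scaleFactor) set to 2.0. We then use the .detectAndCompute(image, mask) method to locate the keypoints in the grayscale training image training_gray and compute their ORB descriptors. Finally, cv2.drawKeypoints() visualizes the keypoints that ORB found.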

```python
import cv2
import matplotlib.pyplot as plt

# Set the default figure size
plt.rcParams['figure.figsize'] = [14.0, 7.0]

# Load the training image, convert it to RGB for display and to grayscale for ORB
training_image = cv2.cvtColor(cv2.imread('./images/face.jpeg'), cv2.COLOR_BGR2RGB)
training_gray = cv2.cvtColor(training_image, cv2.COLOR_RGB2GRAY)

# Set the parameters of the ORB algorithm: the maximum number of keypoints to locate
# and the pyramid decimation ratio
orb = cv2.ORB_create(nfeatures=200, scaleFactor=2.0)

# Find the keypoints in the grayscale training image and compute their ORB descriptors.
# The None parameter indicates that we are not using a mask.
keypoints, descriptor = orb.detectAndCompute(training_gray, None)

# Draw the keypoints without size or orientation (default flags) on a new copy of the image
keyp_without_size = cv2.drawKeypoints(training_image, keypoints, None, color=(0, 255, 0))

# Draw the keypoints with size and orientation on another new copy of the image
keyp_with_size = cv2.drawKeypoints(training_image, keypoints, None,
                                   flags=cv2.DRAW_MATCHES_FLAGS_DRAW_RICH_KEYPOINTS)

# Display the image with the keypoints without size or orientation
plt.subplot(121)
plt.title('Keypoints Without Size or Orientation')
plt.imshow(keyp_without_size)

# Display the image with the keypoints with size and orientation
plt.subplot(122)
plt.title('Keypoints With Size and Orientation')
plt.imshow(keyp_with_size)
plt.show()

# Print the number of keypoints detected
print("\nNumber of keypoints Detected: ", len(keypoints))
```

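As the right-hand image shows, each keypoint has a center, a size, and an angle. The center gives the location of the keypoint in the image. The size is determined by the patch that BRIEF uses to build the keypoint's feature vector, scaled according to the pyramid level on which the keypoint was found. The angle gives the keypoint's orientation. ORB computes this orientation with the intensity-centroid method, and rBRIEF then uses it to rotate its sampling pattern.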
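The orientation described above is computed in ORB with the intensity-centroid method. The moments m10 = Σ x·I(x, y) and m01 = Σ y·I(x, y) of the patch, taken with coordinates relative to the keypoint, point towards the intensity centroid. The angle is then θ = atan2(m01, m10). The plain-Python sketch below is illustrative only. It assumes a square patch centred on the keypoint, whereas ORB sums over a circular region. It also uses made-up 7x7 patches, and the function name intensity_centroid_angle is hypothetical.

```python
import math


def intensity_centroid_angle(patch):
    """Orientation in degrees from the intensity centroid: theta = atan2(m01, m10).
    Moments use x, y measured from the patch centre (a square patch is assumed here)."""
    n = len(patch)
    c = (n - 1) / 2.0  # centre coordinate of an n x n patch
    m10 = sum((x - c) * patch[y][x] for y in range(n) for x in range(n))
    m01 = sum((y - c) * patch[y][x] for y in range(n) for x in range(n))
    return math.degrees(math.atan2(m01, m10))


# Made-up 7x7 patches: bright right half vs. bright bottom half (image y grows downwards)
right = [[255 if x > 3 else 0 for x in range(7)] for y in range(7)]
bottom = [[255 if y > 3 else 0 for x in range(7)] for y in range(7)]
print(round(intensity_centroid_angle(right)), round(intensity_centroid_angle(bottom)))  # 0 90
```

When the patch content rotates, its intensity centroid rotates with it. This is why steering the descriptor by this angle helps make ORB rotation invariant.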

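Once the keypoints of the training image have been found and their ORB descriptors computed, the same steps can be applied to the query image. To make ORB's behavior easier to see, the next section uses the same picture for both the training image and the query image.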

Feature Matching

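In the code below, we use OpenCV's BFMatcher class to compare the keypoints in the training and query images. The parameters of the brute-force matcher are set with the cv2.BFMatcher() function. Its parameters and their default values are: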

```python
cv2.BFMatcher(normType=cv2.NORM_L2,
              crossCheck=False)
```

Parameters:

- normType: Specifies the metric used to determine the quality of a match. By default, normType = cv2.NORM_L2, which measures the Euclidean distance between two descriptors. For binary descriptors like the ones created by ORB, however, the Hamming metric is more suitable. It determines the distance by counting the number of bits that differ between the two binary descriptors. When the ORB descriptor is created with WTA_K = 2, two random pixels are chosen and compared in brightness, and the index of the brighter pixel is returned as either 0 or 1. Such output occupies only 1 bit, so the cv2.NORM_HAMMING metric should be used. If, on the other hand, the ORB descriptor is created with WTA_K = 3, three random pixels are chosen and compared in brightness, and the index of the brightest pixel is returned as 0, 1, or 2. Such output occupies 2 bits, so a special variant of the Hamming distance, cv2.NORM_HAMMING2 (the 2 stands for 2 bits), should be used instead. The same applies to WTA_K = 4. Whichever metric is chosen, the keypoint pair with the smaller distance between the training and query images is considered the better match.
- crossCheck (bool): Can be set to either True or False. Cross-checking is very useful for eliminating false matches. It works by performing the matching procedure twice. The first pass compares the keypoints in the training image with those in the query image. The second pass compares the keypoints in the query image with those in the training image (i.e., the comparison is done backwards). When cross-checking is enabled, a match is considered valid only if keypoint A in the training image is the best match of keypoint B in the query image and vice versa (that is, keypoint B in the query image is also the best match of keypoint A in the training image).
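The difference between the two Hamming metrics can be sketched in plain Python on byte strings. The sketch assumes the common description of the two norms: NORM_HAMMING counts differing bits, and NORM_HAMMING2 counts differing 2-bit cells, each of which holds one WTA_K = 3 or 4 index. It is not OpenCV's implementation, and the function names are hypothetical.

```python
def hamming(a: bytes, b: bytes) -> int:
    """NORM_HAMMING-style distance: the number of bits that differ between a and b."""
    if len(a) != len(b):
        raise ValueError("descriptors must have the same length")
    return sum(bin(x ^ y).count("1") for x, y in zip(a, b))


def hamming2(a: bytes, b: bytes) -> int:
    """NORM_HAMMING2-style distance: the number of 2-bit cells that differ.
    Each 2-bit cell holds one WTA_K = 3 or 4 index (0..3)."""
    if len(a) != len(b):
        raise ValueError("descriptors must have the same length")
    total = 0
    for x, y in zip(a, b):
        diff = x ^ y
        # Check the four 2-bit cells of this byte; a cell counts once however many of its bits differ
        for shift in (0, 2, 4, 6):
            if (diff >> shift) & 0b11:
                total += 1
    return total


a = bytes([0b00000000])
b = bytes([0b00000011])  # a single 2-bit cell differs in both of its bits
print(hamming(a, b), hamming2(a, b))  # 2 1
```

Counting each 2-bit cell once is the point of the variant. When two descriptors disagree on a single WTA_K = 3 index, their distance increases by exactly 1, whether one or both of the cell's bits flipped.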

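Once the BFMatcher parameters are set, the .match(descriptors_train, descriptors_query) method uses the ORB descriptors to find the matching keypoints between the training and query images. Finally, the cv2.drawMatches() function visualizes the matches found by the brute-force matcher. It stacks the training and query images horizontally and draws lines from the keypoints in the training image to their best-matching keypoints in the query image. As noted above, the training and query images in the following example have identical content, which makes ORB's behavior easier to see.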
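The matching step can be sketched in plain Python as a brute-force search with cross-checking, followed by sorting by distance. This is a hypothetical illustration of what BFMatcher(cv2.NORM_HAMMING, crossCheck=True) followed by sorted(..., key=lambda x: x.distance) computes, not OpenCV's code. Match is an invented stand-in for cv2.DMatch (which exposes queryIdx, trainIdx and distance), and the one-byte descriptors are made up.

```python
from typing import List, NamedTuple


class Match(NamedTuple):
    """Hypothetical stand-in for cv2.DMatch: an index into each descriptor list plus the distance."""
    idx1: int
    idx2: int
    distance: int


def hamming(a: bytes, b: bytes) -> int:
    """Number of differing bits between two equal-length binary descriptors."""
    return sum(bin(x ^ y).count("1") for x, y in zip(a, b))


def best_index(d: bytes, candidates: List[bytes]) -> int:
    """Index of the candidate closest to d in Hamming distance (the first one wins ties)."""
    return min(range(len(candidates)), key=lambda j: hamming(d, candidates[j]))


def bf_match_cross_check(desc1: List[bytes], desc2: List[bytes]) -> List[Match]:
    """Brute-force matching with cross-checking: keep (i, j) only if desc2[j] is the best
    match of desc1[i] AND desc1[i] is the best match of desc2[j]. Sorted by distance."""
    matches = []
    for i, d in enumerate(desc1):
        j = best_index(d, desc2)              # forward pass: desc1 -> desc2
        if best_index(desc2[j], desc1) == i:  # backward pass: desc2 -> desc1
            matches.append(Match(i, j, hamming(d, desc2[j])))
    # A smaller distance means a better match, so the best matches come first
    return sorted(matches, key=lambda m: m.distance)


# Made-up one-byte descriptors for illustration
desc_a = [bytes([0b00001111]), bytes([0b11110000]), bytes([0b10101010])]
desc_b = [bytes([0b11110001]), bytes([0b00001111])]
matches = bf_match_cross_check(desc_a, desc_b)
print([(m.idx1, m.idx2, m.distance) for m in matches])  # [(0, 1, 0), (1, 0, 1)]
# desc_a[2] is dropped: its best match desc_b[1] prefers desc_a[0]
print(len(bf_match_cross_check(desc_a, desc_a)))  # 3
```

The inputs can be identical, and no two descriptors within one list may be equal. In that case every descriptor's best match is its own copy at distance 0, which is what the identical-image experiment in this section expects. Keeping only the best N matches is then just a slice of the sorted list.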

```python
import cv2
import matplotlib.pyplot as plt

# Set the default figure size
plt.rcParams['figure.figsize'] = [14.0, 7.0]

# Load the training image
image1 = cv2.imread('./images/face.jpeg')

# Load the query image
image2 = cv2.imread('./images/face.jpeg')

# Convert the training image to RGB
training_image = cv2.cvtColor(image1, cv2.COLOR_BGR2RGB)

# Convert the query image to RGB
query_image = cv2.cvtColor(image2, cv2.COLOR_BGR2RGB)

# Display the training and query images
plt.subplot(121)
plt.title('Training Image')
plt.imshow(training_image)
plt.subplot(122)
plt.title('Query Image')
plt.imshow(query_image)
plt.show()

# Convert the training and query images to grayscale (they are RGB at this point)
training_gray = cv2.cvtColor(training_image, cv2.COLOR_RGB2GRAY)
query_gray = cv2.cvtColor(query_image, cv2.COLOR_RGB2GRAY)

# Set the parameters of the ORB algorithm: the maximum number of keypoints to locate
# and the pyramid decimation ratio
orb = cv2.ORB_create(nfeatures=1000, scaleFactor=2.0)

# Find the keypoints in the grayscale training and query images and compute their ORB descriptors.
# The None parameter indicates that we are not using a mask in either case.
keypoints_train, descriptors_train = orb.detectAndCompute(training_gray, None)
keypoints_query, descriptors_query = orb.detectAndCompute(query_gray, None)

# Create a brute-force matcher using the Hamming distance (suitable for ORB with WTA_K = 2).
# crossCheck=True makes the BFMatcher return only consistent pairs, which usually gives the
# best results with a minimal number of outliers when there are enough matches.
bf = cv2.BFMatcher(cv2.NORM_HAMMING, crossCheck=True)

# Match the ORB descriptors of the training image against those of the query image
matches = bf.match(descriptors_train, descriptors_query)

# Matches with a shorter distance are better, so sort the matches by distance
matches = sorted(matches, key=lambda x: x.distance)

# Connect the keypoints in the training image with their best-matching keypoints in the query image.
# The best matches are the first elements of the sorted list, so we draw the first 300 matches.
# Keypoints are drawn without size or orientation; NOT_DRAW_SINGLE_POINTS (flags = 2) hides unmatched ones.
result = cv2.drawMatches(training_gray, keypoints_train, query_gray, keypoints_query,
                         matches[:300], None,
                         flags=cv2.DRAW_MATCHES_FLAGS_NOT_DRAW_SINGLE_POINTS)

# Display the best matching points
plt.title('Best Matching Points')
plt.imshow(result)
plt.show()

# Print the number of keypoints detected in the training and query images
print("Number of Keypoints Detected In The Training Image: ", len(keypoints_train))
print("Number of Keypoints Detected In The Query Image: ", len(keypoints_query))

# Print the total number of matching points between the training and query images
print("\nNumber of Matching Keypoints Between The Training and Query Images: ", len(matches))
```

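In the example above, the training and query images are identical. We therefore expect to find the same number of keypoints in both images and to have every keypoint matched. This is indeed what happens. ORB finds the same number of keypoints in both images, and the brute-force matcher correctly matches all the keypoints in the training image with those in the query image.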

Closing Remarks

This part used ORB for the most basic form of feature matching. The next step is to verify ORB's invariance to scale, rotation, illumination, and noise.

This article is translated and adapted from an exercise in Udacity's Computer Vision Nanodegree. Official source code:

https://github.com/udacity/CVND_Exercises/blob/master/1_4_Feature_Vectors/2. ORB.ipynb
