Kubernetes Cluster Setup (v1.20.5)

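Kubernetes is a portable, extensible, open-source platform for managing container clusters. It runs containerized workloads and services. It supports both declarative configuration and automation, and suits scenarios that need fast delivery and frequent change.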

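Kubernetes features

Portable: supports public, private, hybrid, and multi-cloud environments

Extensible: modular, pluggable, mountable, composable

Automated: automatic deployment, restart, replication, and scaling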

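Deployment methods

- kubeadm: simple to deploy; suited to development environments

- Ansible: makes adding and maintaining nodes later convenient

- Binary: more complex, but every parameter can be customized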

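1. Base environment preparation

- Minimal base system (CentOS 7.9.2009 here)

- Disable firewalld, SELinux, and swap

- Update the package repositories

- Synchronize time

- Install Docker on every node

- Use a Docker version that is compatible with your Kubernetes release; check the Kubernetes release CHANGELOG

- Kubernetes 1.20 deprecates Docker support in the kubelet (dockershim) but does not remove it yet, so Docker still works as the container runtime; this deployment uses docker-ce-3:19.03.15-3.el7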

https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/CHANGELOG-1.20.md

Deprecation

Docker support in the kubelet is now deprecated and will be removed in a future release. The kubelet uses a module called "dockershim" which implements CRI support for Docker and it has seen maintenance issues in the Kubernetes community. We encourage you to evaluate moving to a container runtime that is a full-fledged implementation of CRI (v1alpha1 or v1 compliant) as they become available. (#94624, @dims) [SIG Node]

Kubectl: deprecate --delete-local-data (#95076, @dougsland) [SIG CLI, Cloud Provider and Scalability]

1.1 Basic initialization

sed -i "s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config

setenforce 0

systemctl disable --now firewalld

swapoff -a

sed -i.bak /swap/d /etc/fstab

wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

yum -y install vim curl dnf

timedatectl set-ntp true

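# Load br_netfilter (the net.bridge.* keys below exist only while this module is loaded) and keep it loaded after reboot, then add kernel parameters

modprobe br_netfilter

echo br_netfilter > /etc/modules-load.d/k8s.conf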

cat <<EOF > /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sysctl --system
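To confirm the settings above took effect before moving on, a small check like the following can help. It is a sketch, not part of the original steps. The function names are hypothetical. It only reads /proc/swaps and /proc/sys, and both paths can be overridden, for example to point at test fixtures.

```shell
#!/usr/bin/env bash
# Hypothetical preflight helpers: verify swap is off and the k8s sysctls are active.

# swap_is_off [SWAPS_FILE]
# /proc/swaps always has one header line; any further line is an active swap device.
swap_is_off() {
  local swaps_file="${1:-/proc/swaps}"
  [ "$(wc -l < "$swaps_file")" -le 1 ]
}

# sysctl_is_one KEY [PROC_SYS_DIR]
# Maps a dotted key such as net.ipv4.ip_forward to /proc/sys/net/ipv4/ip_forward.
sysctl_is_one() {
  local key="$1" root="${2:-/proc/sys}"
  local path="$root/${key//.//}"
  [ -r "$path" ] && [ "$(cat "$path")" = "1" ]
}

# k8s_preflight [SWAPS_FILE] [PROC_SYS_DIR]: prints each failed check, returns 1 if any failed
k8s_preflight() {
  local rc=0 key
  swap_is_off "${1:-/proc/swaps}" || { echo "swap is still enabled"; rc=1; }
  for key in net.ipv4.ip_forward \
             net.bridge.bridge-nf-call-iptables \
             net.bridge.bridge-nf-call-ip6tables; do
    sysctl_is_one "$key" "${2:-/proc/sys}" || { echo "$key is not 1"; rc=1; }
  done
  return "$rc"
}
```

On a prepared node, `k8s_preflight && echo ok` should print only `ok`.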

vim /etc/hosts # add local name resolution for every node

# Configure the Aliyun Kubernetes yum mirror

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

# dnf list kubelet --showduplicates

# The upstream repository offers no sync method, so the mirror's repo index may fail the GPG check; in that case install with yum install -y --nogpgcheck kubelet kubeadm kubectl

1.2 Install Docker

wget -O /etc/yum.repos.d/docker-ce.repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# VERSION=3:20.10.5-3.el7

VERSION=3:19.03.15-3.el7

dnf -y install docker-ce-${VERSION}

systemctl enable --now docker

sudo mkdir -p /etc/docker

# Use the recommended systemd cgroup driver https://kubernetes.io/docs/setup/production-environment/container-runtimes/

sudo tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://0nth4654a.mirror.aliyuncs.com"],

"exec-opts":["native.cgroupdriver=systemd"]

}

EOF

sudo systemctl daemon-reload

sudo systemctl restart docker

systemctl enable docker

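# Alternative: set the driver in the systemd unit instead. Use only one of the two methods, because dockerd refuses to start when the same option is set both as a flag and in daemon.json.

# vim /usr/lib/systemd/system/docker.service

# ExecStart=/usr/bin/dockerd --exec-opt native.cgroupdriver=systemd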
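After restarting Docker, you can confirm that the daemon actually picked up the systemd cgroup driver. This minimal sketch uses the Go-template output of `docker info`. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical check: succeed only if Docker reports the systemd cgroup driver.
docker_uses_systemd_cgroup() {
  local driver
  # .CgroupDriver is a field of the `docker info` output
  driver="$(docker info --format '{{.CgroupDriver}}' 2>/dev/null)" || return 1
  if [ "$driver" = "systemd" ]; then
    echo "cgroup driver: systemd"
  else
    echo "cgroup driver is '${driver:-unknown}', expected systemd" >&2
    return 1
  fi
}
```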

2. Deploy Harbor and an HAProxy high-availability reverse proxy

2.1 Image mirror configuration

#kubeadm config images list --kubernetes-version v1.20.5

k8s.gcr.io/kube-apiserver:v1.20.5

k8s.gcr.io/kube-controller-manager:v1.20.5

k8s.gcr.io/kube-scheduler:v1.20.5

k8s.gcr.io/kube-proxy:v1.20.5

k8s.gcr.io/pause:3.2

k8s.gcr.io/etcd:3.4.13-0

k8s.gcr.io/coredns:1.7.0

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.20.5

...

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.20.5 harbor.alp.local/kubernetes-basic/kube-apiserver:v1.20.5

...
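The elided pull/tag steps can be scripted for the whole image list. This sketch makes several assumptions:

- The Aliyun google_containers mirror uses the same flat image names and tags as k8s.gcr.io (e.g. k8s.gcr.io/pause:3.2).
- The Harbor project harbor.alp.local/kubernetes-basic already exists.
- `docker login harbor.alp.local` has already been run.

The push step and the function names are hypothetical additions.

```shell
#!/usr/bin/env bash
SRC_REPO="registry.cn-hangzhou.aliyuncs.com/google_containers"
DST_REPO="harbor.alp.local/kubernetes-basic"

# mirror_ref IMAGE REPO: rewrite k8s.gcr.io/name:tag to REPO/name:tag
# (keeps only the part after the last "/", so it assumes flat image names)
mirror_ref() {
  local image="$1" repo="$2"
  echo "${repo}/${image##*/}"
}

# mirror_images: read image references (one per line) on stdin; pull, retag, push
mirror_images() {
  local image src dst
  while read -r image; do
    [ -z "$image" ] && continue
    src="$(mirror_ref "$image" "$SRC_REPO")"
    dst="$(mirror_ref "$image" "$DST_REPO")"
    docker pull "$src" && docker tag "$src" "$dst" && docker push "$dst" || return 1
  done
}

# Usage:
# kubeadm config images list --kubernetes-version v1.20.5 | mirror_images
```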

2.2 High-availability masters (optional)

3. Prepare the master nodes

Install the specified versions of kubeadm, kubelet, kubectl, and Docker on every master node

# dnf list kubeadm --showduplicates

version=1.20.5 && echo $version

dnf install kubeadm-$version kubelet-$version kubectl-$version

systemctl enable --now kubelet && systemctl status kubelet

4. Prepare the worker nodes

Install the specified versions of kubeadm, kubelet, and Docker on every worker node

version=1.20.5 && echo $version

dnf install kubeadm-$version kubelet-$version

systemctl enable --now kubelet

On Ubuntu, install kubeadm on all nodes with apt instead:

# for master

apt-cache madison kubeadm # list the available versions

apt install kubeadm=1.17.2-00 kubectl=1.17.2-00 kubelet=1.17.2-00

# for node

apt install kubeadm=1.17.2-00 kubelet=1.17.2-00

5. Initialize the master with kubeadm init

https://kubernetes.io/zh/docs/setup/production-environment/tools/kubeadm/install-kubeadm/

Run the initialization on any one of the master nodes, and only once.

# kubeadm init -h

# kubeadm config images list --kubernetes-version v1.20.5 # list the required images; pulling them in advance (kubeadm config images pull) helps avoid init failures

# https://kubernetes.io/zh/docs/reference/setup-tools/kubeadm/kubeadm-init/

kubeadm init \

--apiserver-advertise-address=192.168.1.113 \

--apiserver-bind-port=6443 \

--control-plane-endpoint=192.168.1.113 \

--ignore-preflight-errors=swap \

--service-dns-domain=alp.domain \

--image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers \

--kubernetes-version=1.20.5 \

--pod-network-cidr=10.0.0.0/16 \

--service-cidr=172.20.0.0/20

#################################################

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

kubeadm join 192.168.1.113:6443 --token 0ej3dg.1msgev7hznukc183 \

--discovery-token-ca-cert-hash sha256:7c901a6b1ae135e2ce1012265d9bd3887e128b7d35e147a29ecdeb5e6ca01568 \

--control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.1.113:6443 --token 0ej3dg.1msgev7hznukc183 \

--discovery-token-ca-cert-hash sha256:7c901a6b1ae135e2ce1012265d9bd3887e128b7d35e147a29ecdeb5e6ca01568
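Before choosing --pod-network-cidr and --service-cidr, check that the two ranges do not overlap each other or the node network. The node network is assumed here to be 192.168.1.0/24. The sketch is pure bash and its function names are hypothetical. Host bits are masked off, so a /20 such as 172.20.0.0/20 spans 172.20.0.0 to 172.20.15.255.

```shell
#!/usr/bin/env bash
# ip_to_int A.B.C.D: print the address as a 32-bit integer (10# forces base 10)
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (10#$a << 24) | (10#$b << 16) | (10#$c << 8) | 10#$d ))
}

# cidr_range CIDR: print "first last" as integers
cidr_range() {
  local ip="${1%/*}" prefix="${1#*/}"
  local mask=$(( prefix == 0 ? 0 : (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  local first=$(( $(ip_to_int "$ip") & mask ))
  echo "$first $(( first | (~mask & 0xFFFFFFFF) ))"
}

# cidrs_overlap A B: exit status 0 if the two ranges share any address
cidrs_overlap() {
  local a1 a2 b1 b2
  read -r a1 a2 <<< "$(cidr_range "$1")"
  read -r b1 b2 <<< "$(cidr_range "$2")"
  [ "$a1" -le "$b2" ] && [ "$b1" -le "$a2" ]
}

# Usage:
# cidrs_overlap 10.0.0.0/16 172.20.0.0/20 && echo "pod and service CIDRs overlap"
# cidrs_overlap 10.0.0.0/16 192.168.1.0/24 && echo "pod CIDR overlaps the node network"
```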

5.1 Initialize the master from a configuration file

kubeadm config print init-defaults > kubeadm-init.yml # write out the default init configuration

vim kubeadm-init.yml

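# Fields to change (+ marks fields that are not in the defaults and must be added):

# bootstrapTokens ttl

# advertiseAddress (listen address)

# kubernetesVersion

# dnsDomain

# + podSubnet

# serviceSubnet

# imageRepository

# + controlPlaneEndpoint # VIP address, e.g. 172.16.3.248:6443

# (line 40)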

kubeadm init --config kubeadm-init.yml
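The fields listed above can also be set without an editor, before running kubeadm init. This is a sketch with a hypothetical helper. It assumes each key appears exactly once as `key: value` on its own line. Missing keys (the + fields) are reported instead of added, so they still need to be inserted by hand.

```shell
#!/usr/bin/env bash
# set_yaml_scalar FILE KEY VALUE: replace the value of an existing "KEY: ..." line, keeping its indent
set_yaml_scalar() {
  local file="$1" key="$2" value="$3"
  grep -qE "^[[:space:]]*${key}:" "$file" || { echo "$key not found in $file" >&2; return 1; }
  # "|" is the sed delimiter so values containing "/" (CIDRs, image repos) need no escaping
  sed -i -E "s|^([[:space:]]*${key}:).*|\1 ${value}|" "$file"
}

# Usage (values taken from this guide):
# set_yaml_scalar kubeadm-init.yml advertiseAddress 192.168.1.113
# set_yaml_scalar kubeadm-init.yml kubernetesVersion v1.20.5
# set_yaml_scalar kubeadm-init.yml imageRepository registry.cn-hangzhou.aliyuncs.com/google_containers
# set_yaml_scalar kubeadm-init.yml serviceSubnet 172.16.0.0/20
```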

5.2 Join additional masters to the cluster (scaling out the control plane)

kubeadm init phase upload-certs --upload-certs # obtain the certificate key

kubeadm join 192.168.1.113:6443 --token gpduj8.mpejowfe7entffhk \

--discovery-token-ca-cert-hash sha256:1a56f5c63583097f19842b56195648c4df233448db80905c4c808f9f28821d82 \

--control-plane --certificate-key ${key}
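Instead of copying the certificate key into ${key} by hand, you can capture it from the upload-certs output. This sketch assumes the key is printed on its own line as 64 lowercase hex characters, as in the example in 6.4.2. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# extract_certificate_key: read upload-certs output on stdin, print the key line
extract_certificate_key() {
  grep -E '^[0-9a-f]{64}$' | tail -n 1
}

# Usage on the existing master:
# key=$(kubeadm init phase upload-certs --upload-certs | extract_certificate_key)
# echo "$key"
```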

6. Verify the master node status

6.1 Install the flannel network plugin

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

Change the default Network in net-conf.json (10.244.0.0/16) to the --pod-network-cidr used during initialization
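This edit can be done with sed. The sketch assumes the downloaded manifest still contains the default `"Network": "10.244.0.0/16"` entry in net-conf.json. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# set_flannel_network MANIFEST POD_CIDR: replace flannel's default pod network
set_flannel_network() {
  local manifest="$1" pod_cidr="$2"
  grep -q '"Network": "10.244.0.0/16"' "$manifest" || { echo "default Network not found" >&2; return 1; }
  sed -i "s|\"Network\": \"10.244.0.0/16\"|\"Network\": \"${pod_cidr}\"|" "$manifest"
}

# Usage:
# set_flannel_network kube-flannel.yml 10.0.0.0/16
```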

kubectl apply -f kube-flannel.yml

6.2 Check the cluster status

export KUBECONFIG=/etc/kubernetes/admin.conf

[0 root@master1 /root] #kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"20", GitVersion:"v1.20.5", GitCommit:"6b1d87acf3c8253c123756b9e61dac642678305f", GitTreeState:"clean", BuildDate:"2021-03-18T01:08:27Z", GoVersion:"go1.15.8", Compiler:"gc", Platform:"linux/amd64"}

[0 root@master /root] #kubectl get nodes -A

NAME STATUS ROLES AGE VERSION

master NotReady control-plane,master 7m17s v1.20.5

[0 root@master1 /root] #kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-54d67798b7-6sfsj 0/1 Pending 0 7m6s

kube-system coredns-54d67798b7-v2lh6 0/1 Pending 0 7m6s

kube-system etcd-master 1/1 Running 0 7m13s

kube-system kube-apiserver-master 1/1 Running 0 7m13s

kube-system kube-controller-manager-master 1/1 Running 0 7m13s

kube-system kube-proxy-6zfdd 1/1 Running 0 7m6s

kube-system kube-scheduler-master 1/1 Running 0 7m13s

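6.3 Make a single master node schedulable

kubectl taint node master node-role.kubernetes.io/master=:NoSchedule # add the taint: master becomes unschedulable

kubectl taint node master node-role.kubernetes.io/master- # remove the taint: master becomes schedulable

kubectl taint nodes node1 key1=value1:NoSchedule # taint node1: it becomes unschedulable

kubectl taint nodes node1 key1=value1:NoSchedule- # remove the taint: node1 becomes schedulable

# NoSchedule: pods that do not tolerate the taint are never scheduled onto the node

# PreferNoSchedule: the scheduler tries to avoid the node, but this is not guaranteed

# NoExecute: new pods are not scheduled, and pods already running on the node are evicted

kubectl describe node master | grep Taints # view the node's taints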

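6.4 Add master nodes

6.4.1 By copying certificates

# 1. Copy the certificates from the node where kubeadm init was run (create /etc/kubernetes/pki/etcd on the target first)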

scp /etc/kubernetes/pki/ca.* master-2:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/sa.* master-2:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/front-proxy-ca.* master-2:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/etcd/ca.* master-2:/etc/kubernetes/pki/etcd/
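When several masters are added, the copy can be run in a loop. This sketch assumes root with passwordless SSH from this master. Target names such as master-2 and master-3 are hypothetical. The loop also creates the target directories, which scp does not do by itself.

```shell
#!/usr/bin/env bash
# copy_control_plane_certs HOST...: copy the shared CA, SA and front-proxy certs to each new master
copy_control_plane_certs() {
  local host
  for host in "$@"; do
    # the target directories must exist before scp can write into them
    ssh "$host" 'mkdir -p /etc/kubernetes/pki/etcd' || return 1
    scp /etc/kubernetes/pki/ca.* /etc/kubernetes/pki/sa.* \
        /etc/kubernetes/pki/front-proxy-ca.* "$host":/etc/kubernetes/pki/ || return 1
    scp /etc/kubernetes/pki/etcd/ca.* "$host":/etc/kubernetes/pki/etcd/ || return 1
  done
}

# Usage:
# copy_control_plane_certs master-2 master-3
```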

# 2. Join the new master

kubeadm join 192.168.1.113:6443 --token 0ej3dg.1msgev7hznukc183 \

--discovery-token-ca-cert-hash sha256:7c901a6b1ae135e2ce1012265d9bd3887e128b7d35e147a29ecdeb5e6ca01568 \

--control-plane

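6.4.2 By uploading certificates

# 1. On the current master, upload the certificates and print the certificate key used to add new control-plane nodes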

kubeadm init phase upload-certs --upload-certs

# 2. Join the new master (the uploaded certificates are deleted after two hours; run upload-certs again if needed)

kubeadm join 192.168.1.113:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:c555fd7cdf1f08d1b4aab959417bb620010e3376c6be406d8a36ddc1fdd233eb \

--control-plane \

--certificate-key 6ff68e4e7c0255073a9f0a9d96b76fc8f4ec4b490dfdaf6a567b4dd95622f012

7. Join the worker nodes to the k8s cluster

Use kubeadm to join each worker node to the k8s master:

kubeadm join 192.168.1.113:6443 --token 0ej3dg.1msgev7hznukc183 \

--discovery-token-ca-cert-hash sha256:7c901a6b1ae135e2ce1012265d9bd3887e128b7d35e147a29ecdeb5e6ca01568

Regenerate the join command with kubeadm (bootstrap tokens expire after 24 hours by default):

# kubeadm token create --print-join-command
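If the original join command is lost but the token is still valid, you can recompute the --discovery-token-ca-cert-hash value from the cluster CA. It is the SHA-256 of the CA's DER-encoded public key. This sketch follows the openssl pipeline in the kubeadm join documentation and assumes an RSA CA key, which is the kubeadm default. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# ca_cert_hash [CA_CRT]: print "sha256:<hex>" for use with --discovery-token-ca-cert-hash
ca_cert_hash() {
  local ca_crt="${1:-/etc/kubernetes/pki/ca.crt}"
  local hex
  hex="$(openssl x509 -pubkey -noout -in "$ca_crt" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex | sed 's/^.* //')"
  # a SHA-256 digest is 64 hex characters; anything else means a step failed
  [[ "$hex" =~ ^[0-9a-f]{64}$ ]] || return 1
  echo "sha256:${hex}"
}

# Usage on a master:
# ca_cert_hash /etc/kubernetes/pki/ca.crt
```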

8. Verify the cluster status

# check node status

kubectl get node

kubectl describe node node1

# check the cluster component status (ComponentStatus is deprecated since v1.19 but still usable in 1.20)

kubectl get cs

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy {"health":"true"}

# current CSR (certificate signing request) status

kubectl get csr

NAME AGE REQUESTOR CONDITION

csr-7bzrk 14m system:bootstrap:0fpghu Approved,Issued

csr-jns87 20m system:node:kubeadm-master1.magedu.net Approved,Issued

csr-vcjdl 14m system:bootstrap:0fpghu Approved,Issued

9. Create pods and test network connectivity

kubectl create deployment net --image=busybox --replicas=3 -- sleep 36000

# kubectl scale deploy net --replicas=10

kubectl get pod -o wide

kubectl exec -it ${POD_NAME} -- sh # POD_NAME: one of the pod names listed above

# ping other pods and an external address to test connectivity
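The manual check can be scripted. This sketch pings every pod of the net deployment, plus one external address, from the first pod. It makes these assumptions:

- The pods carry the app=net label that kubectl create deployment sets.
- busybox's ping accepts -c and -W.
- 223.5.5.5 is only an example external address.

The function name is hypothetical.

```shell
#!/usr/bin/env bash
# ping_all_pods [NAMESPACE] [EXTERNAL_IP]: ping each app=net pod IP and one external IP from the first pod
ping_all_pods() {
  local ns="${1:-default}" ext="${2:-223.5.5.5}" src ips ip rc=0
  src="$(kubectl -n "$ns" get pod -l app=net -o jsonpath='{.items[0].metadata.name}')" || return 1
  ips="$(kubectl -n "$ns" get pod -l app=net -o jsonpath='{.items[*].status.podIP}')" || return 1
  # $ips is left unquoted on purpose so the space-separated IPs split into words
  for ip in $ips "$ext"; do
    if kubectl -n "$ns" exec "$src" -- ping -c 2 -W 2 "$ip" >/dev/null; then
      echo "$src -> $ip ok"
    else
      echo "$src -> $ip FAILED"; rc=1
    fi
  done
  return "$rc"
}
```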

10. Deploy the dashboard

https://github.com/kubernetes/dashboard

https://github.com/kubernetes/dashboard/blob/master/docs/user/access-control/creating-sample-user.md

10.1 Configure and install the dashboard and admin-user

wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.2.0/aio/deploy/recommended.yaml -O dashboard-v2.2.0.yml

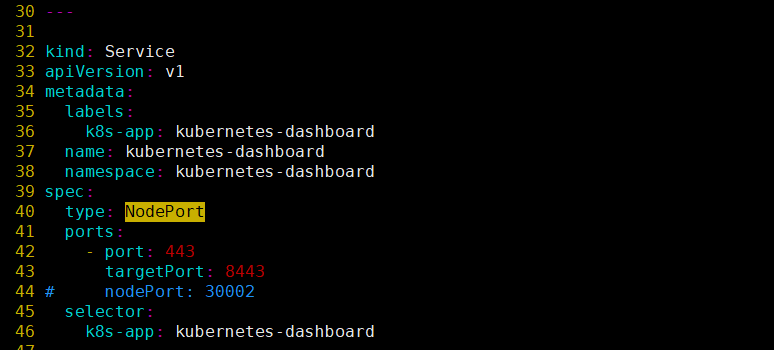

# The official manifest is meant for localhost access (e.g. via kubectl proxy); add type: NodePort (line 40) to expose the dashboard directly

# cat -n dashboard-v2.2.0.yml | sed -n 32,45p

    32  kind: Service
    33  apiVersion: v1
    34  metadata:
    35    labels:
    36      k8s-app: kubernetes-dashboard
    37    name: kubernetes-dashboard
    38    namespace: kubernetes-dashboard
    39  spec:
++> 40    type: NodePort
    41    ports:
    42      - port: 443
    43        targetPort: 8443
    44    selector:
    45      k8s-app: kubernetes-dashboard

# cat admin-user.yml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard

# kubectl apply -f dashboard-v2.2.0.yml -f admin-user.yml

10.2 Log in to the dashboard

#- 1. Get the NodePort (32241 here)

#kubectl get services -n kubernetes-dashboard

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

dashboard-metrics-scraper ClusterIP 172.16.4.15 <none> 8000/TCP 41m

kubernetes-dashboard NodePort 172.16.1.0 <none> 443:32241/TCP 41m

#- 2. Get the token

# kubectl get secret -A | grep admin-user

# kubectl describe secret admin-user-token-nzpjj -n kubernetes-dashboard
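The two token steps can be combined into one command. This sketch relies on Kubernetes 1.20 still auto-creating a token Secret for each ServiceAccount and listing it under .secrets. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# dashboard_token [SERVICEACCOUNT]: print the decoded bearer token of the ServiceAccount
dashboard_token() {
  local ns="kubernetes-dashboard" sa="${1:-admin-user}" secret
  secret="$(kubectl -n "$ns" get sa "$sa" -o jsonpath='{.secrets[0].name}')" || return 1
  [ -n "$secret" ] || { echo "no token secret for $sa" >&2; return 1; }
  # Secret data is base64-encoded
  kubectl -n "$ns" get secret "$secret" -o jsonpath='{.data.token}' | base64 -d
  echo
}
```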

#- 3. Open the dashboard

https://192.168.1.113:32241

10.3 Log in with a kubeconfig

Create a kubeconfig file
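The Dashboard's kubeconfig login accepts a kubeconfig that authenticates with a bearer token. This minimal sketch writes one. The function name, the output file name, and the user and context names are hypothetical. The server address reuses 192.168.1.113:6443 from above, and GNU base64 (-w0) is assumed.

```shell
#!/usr/bin/env bash
# make_dashboard_kubeconfig SERVER CA_FILE TOKEN OUT: write a token-based kubeconfig to OUT
make_dashboard_kubeconfig() {
  local server="$1" ca_file="$2" token="$3" out="$4"
  local ca_data
  ca_data="$(base64 -w0 < "$ca_file")" || return 1
  cat > "$out" <<EOF
apiVersion: v1
kind: Config
clusters:
- name: kubernetes
  cluster:
    server: ${server}
    certificate-authority-data: ${ca_data}
users:
- name: admin-user
  user:
    token: ${token}
contexts:
- name: admin-user@kubernetes
  context:
    cluster: kubernetes
    user: admin-user
current-context: admin-user@kubernetes
EOF
  # the file contains a cluster-admin token, so keep it private
  chmod 600 "$out"
}

# Usage:
# make_dashboard_kubeconfig https://192.168.1.113:6443 /etc/kubernetes/pki/ca.crt "$TOKEN" dashboard-admin.kubeconfig
```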

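10.4 Set the token login session timeout

# vim dashboard/kubernetes-dashboard.yaml # v1.10.1 manifest; in v2.x the same args list is in recommended.yaml

        image: harbor.alp.local/baseimages/kubernetes-dashboard-amd64:v1.10.1
        ports:
        - containerPort: 8443
          protocol: TCP
        args:
          - --auto-generate-certificates
          - --token-ttl=43200 # session timeout in seconds (12 hours)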

# kubectl apply -f .

11. Upgrade the k8s cluster

https://v1-20.docs.kubernetes.io/zh/docs/setup/release/version-skew-policy/

11.1 Upgrade kubeadm to the target version (downgrades are not supported)

dnf install kubeadm-1.20.4

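11.2 Upgrade the masters

kubeadm upgrade plan # review the planned changes

kubeadm upgrade apply v1.20.4 # start the upgrade

dnf install kubelet-1.20.4 kubectl-1.20.4 kubeadm-1.20.4 && systemctl daemon-reload && systemctl restart kubelet

11.3 Upgrade the worker nodes

kubeadm upgrade node --kubelet-version 1.20.4 # upgrade each node's configuration files

dnf install kubelet-1.20.4 kubeadm-1.20.4 # upgrade the kubelet binary package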
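The upstream kubeadm upgrade guide handles one worker at a time:

1. Drain the worker.
2. Upgrade kubeadm, then run kubeadm upgrade node.
3. Upgrade the kubelet and restart it.
4. Uncordon the worker.

This sketch follows that order for a single worker. It assumes kubectl access from a master, passwordless root SSH to the node, and the yum repo from 1.1. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# upgrade_worker NODE VERSION: drain, upgrade kubeadm and node config, upgrade kubelet, uncordon
upgrade_worker() {
  local node="$1" version="$2"
  kubectl drain "$node" --ignore-daemonsets --delete-emptydir-data || return 1
  # kubeadm must be upgraded before "kubeadm upgrade node" runs on the node
  ssh "$node" "dnf -y install kubeadm-${version} && kubeadm upgrade node" || return 1
  ssh "$node" "dnf -y install kubelet-${version} kubectl-${version} && systemctl daemon-reload && systemctl restart kubelet" || return 1
  kubectl uncordon "$node"
}

# Usage:
# upgrade_worker node01 1.20.4
```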

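12. Other deployment-related commands

kubeadm reset # reset the node (irreversible)

kubeadm token --help # token management

kubectl taint node master node-role.kubernetes.io/master- # make the master node schedulable

# rebuilding a worker node

# kubectl drain node01 --delete-emptydir-data --force --ignore-daemonsets

# drain the node (if the node is already NotReady, drain may hang and never finish); --delete-emptydir-data replaces --delete-local-data, which is deprecated in 1.20

kubectl delete node node01 # delete node node01

kubectl uncordon node01 # re-enable scheduling on node01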

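# Initialize the control-plane/master node. Flag notes:

# --apiserver-advertise-address: the IP address the API server advertises it is listening on; "0.0.0.0" uses the address of the default network interface.
#   Use only an internal IP, never a public one; on hosts with several NICs, use this option to pick the interface.
# --apiserver-bind-port: the port the API server binds to, default 6443
# --cert-dir: the path where certificates are saved and stored, default "/etc/kubernetes/pki"
# --control-plane-endpoint: a stable IP address or DNS name for the control plane;
#   kuber4s.api here is already resolved to this host's IP in /etc/hosts
# --image-repository: the registry to pull control-plane images from, default "k8s.gcr.io";
#   k8s.gcr.io is blocked in mainland China, so a domestic mirror is used
# --kubernetes-version: a specific Kubernetes version for the control plane, default "stable-1"
# --node-name: the node name; defaults to the hostname and can usually be omitted
# --pod-network-cidr: the IP address range for pods
# --service-cidr: the virtual IP address range for Services
# --service-dns-domain: an alternative domain for Services, default "cluster.local"
# --upload-certs: upload the control-plane certificates to the kubeadm-certs Secret

kubeadm init \
--apiserver-advertise-address 0.0.0.0 \
--apiserver-bind-port 6443 \
--cert-dir /etc/kubernetes/pki \
--control-plane-endpoint kuber4s.api \
--image-repository registry.cn-hangzhou.aliyuncs.com/google_containers \
--kubernetes-version 1.17.3 \
--node-name master01 \
--pod-network-cidr 10.10.0.0/16 \
--service-cidr 10.20.0.0/16 \
--service-dns-domain cluster.local \
--upload-certs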

- PS

- Step by step: building and operating a production-grade Kubernetes cluster and KubeSphere from scratch

- kubectl cmd

- Kubernetes skill map

- kubeadm-init.yml

apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 36h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.1.113
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: master
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
# controlPlaneEndpoint: 192.168.1.113:6443
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: v1.20.5
networking:
  dnsDomain: alpine.local
  podSubnet: 10.0.0.0/16
  serviceSubnet: 172.16.0.0/20
scheduler: {}