Stanford 224N: NLP with Deep Learning

Day 1

Make complex problems simple: machine learning = finding a function → f('complicated input') = output

The three steps of machine learning:

1. Define a set of functions
2. Goodness of function
3. Pick the best function

(The core is mathematical modeling.)
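The three steps can be made concrete with a toy regression. This is a minimal sketch under stated assumptions: the "set of functions" is taken to be all lines `f(x) = w*x + b`, "goodness" is mean squared error, and "pick the best" is plain gradient descent. The data and the hyperparameters `lr` and `steps` are made up for illustration.

```python
import numpy as np

# Step 1: define a set of functions. Assumption for this sketch:
# all lines f(x) = w * x + b, indexed by the parameters (w, b).
def f(x, w, b):
    return w * x + b

# Step 2: goodness of function. Mean squared error on the data
# (a lower loss means a better function).
def loss(w, b, x, y):
    return float(np.mean((f(x, w, b) - y) ** 2))

# Step 3: pick the best function by gradient descent on the loss.
def pick_best(x, y, lr=0.1, steps=2000):
    w, b = 0.0, 0.0
    for _ in range(steps):
        err = f(x, w, b) - y
        # Gradients of the MSE loss with respect to w and b
        grad_w = 2 * np.mean(err * x)
        grad_b = 2 * np.mean(err)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Hypothetical toy data generated from y = 3x + 1
x = np.linspace(-1, 1, 50)
y = 3 * x + 1
w, b = pick_best(x, y)
print(w, b, loss(w, b, x, y))  # w close to 3, b close to 1, loss near 0
```

Swapping in a different function set (e.g., a neural network) changes step 1, but steps 2 and 3 keep the same shape.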

Deep learning is an important branch of machine learning: multi-layer ANNs

1. Neural network
2. Goodness of function
3. Pick the best ANN

Deep learning can fit more line segments (piecewise-linear pieces), making it more efficient and accurate
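One way to read the "more line segments" remark: a network of ReLU units computes a piecewise-linear function, and each extra hidden unit can add one more kink. The sketch below assumes a single hidden layer on 1-d input and sets the weights by hand (they are not trained) so that the network linearly interpolates `x**2` on `K` equal segments; the helper name `relu_net_interpolant` is hypothetical.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def relu_net_interpolant(target, K, lo=0.0, hi=1.0):
    """Hand-built 1-hidden-layer ReLU net (K-1 hidden units plus a linear
    term) that linearly interpolates `target` on K equal segments."""
    knots = np.linspace(lo, hi, K + 1)
    vals = target(knots)
    slopes = np.diff(vals) / np.diff(knots)  # slope of each segment

    def net(x):
        out = vals[0] + slopes[0] * (x - knots[0])
        # Each hidden unit relu(x - knot) adds a kink that changes the slope
        for k in range(1, K):
            out = out + (slopes[k] - slopes[k - 1]) * relu(x - knots[k])
        return out

    return net

x = np.linspace(0.0, 1.0, 1001)
target = lambda t: t ** 2
errors = {}
for K in (2, 4, 16):
    net = relu_net_interpolant(target, K)
    errors[K] = float(np.max(np.abs(net(x) - target(x))))
    print(K, "segments -> max error", errors[K])
```

The maximum error shrinks as the number of segments (hidden units) grows; a trained network would find its own kink positions instead of using fixed knots.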


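Machine learning vs. deep learning:

ML: requires more manual feature extraction and representation, plus careful trial-and-error with model algorithms and training

DL: automatically extracts intrinsic features, removing much of the manual work and enabling end-to-end models (but uninterpretable)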

NLP: Sentiment analysis

Language processing (text classification, summarization, machine translation); idea: feed in a description of an algorithm → convert it into code

Human-machine dialogue

Classification of machine learning methods:

- Machine Learning: prediction and clustering

- Deep Learning: CV, NLP (speech recognition → seq2seq), intelligent decision-making (reinforcement learning → games)

- Reinforcement learning: DRL

- GAN

224N Lecture 1 (transcript notes)

NLP and Deep Learning

What is NLP? CS + AI + linguistics (vision systems are common, but humans alone have language)

Goal: for computers to process and understand natural language in order to perform useful tasks.

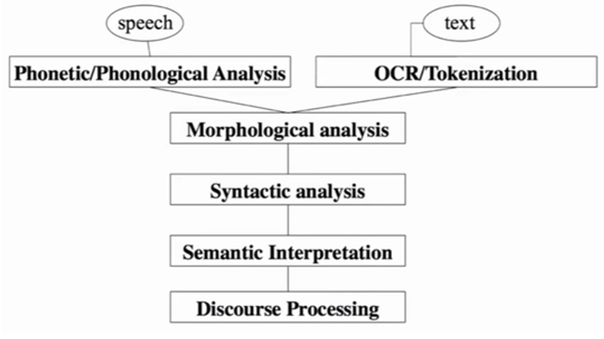

Levels of language:

Morphological analysis (word structure), interpretation + context

What's special about human language:

It is not just data processing and analysis.

A human language is a system specifically constructed to convey the speaker's meaning.

A human language is a discrete/symbolic/categorical signaling system.

Different carriers can convey the same information: the symbol is invariant across different encodings

The symbol system is projected into our brains (symbolic processors → continuous patterns of activation)

A huge sparsity problem: the vocabulary is large
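One way to see the sparsity problem: if each word is treated as a discrete symbol and encoded as a one-hot vector, the vector is as long as the vocabulary and all but one entry is zero. This is a minimal sketch with a hypothetical five-word vocabulary; real vocabularies run to hundreds of thousands of words.

```python
import numpy as np

# Hypothetical toy vocabulary; a real one would be far larger, and each
# one-hot vector would be that long.
vocab = ["hotel", "motel", "cat", "dog", "the"]
index = {w: i for i, w in enumerate(vocab)}

def one_hot(word):
    # All zeros except a single 1 at the word's index
    v = np.zeros(len(vocab))
    v[index[word]] = 1.0
    return v

hotel, motel = one_hot("hotel"), one_hot("motel")
print(hotel)                                  # [1. 0. 0. 0. 0.]
print(np.dot(hotel, motel))                   # 0.0
print(np.count_nonzero(hotel) / hotel.size)   # 0.2
```

Besides wasting space, any two distinct one-hot vectors are orthogonal, so "hotel" and "motel" look exactly as unrelated as "hotel" and "the".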

What is DL?

It's not like the traditional way of writing a program that tells the computer what to do (where a human looks at the problem and works out the solution).

Most ML methods: human-designed representations and input features

→ the machine learns almost nothing; instead, humans learn a lot about the important properties (spending time on feature engineering)

The machine only does numerical optimization, adjusting the parameters (weights) for lots of features

So we move beyond plain ML to DL: representation learning (learning from raw signals from the world)

The model invents its own features! End-to-end joint system learning

Multiple layers of learned representations are powerful (an ANN is kind of like stacked generalized linear models)

Manually designed features tend to be over-specified; learned features are easy to adapt, fast to train, and can keep on learning.

Far better performance! Compared with the 80s and 90s, we now have big data and strong compute power (parallel vector processing on GPUs)
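To illustrate "stacked generalized linear models": each layer below is a set of logistic regressions, and the second layer runs on the outputs of the first. A single logistic regression cannot compute XOR (the classes are not linearly separable), but two stacked layers can. This is a sketch with hand-set weights chosen for illustration, not weights a network learned; the names `logistic_layer`, `W1`, `W2` are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def logistic_layer(X, W, b):
    # Each column of W (with its bias) is one logistic regression;
    # the layer applies all of them to every input row.
    return sigmoid(X @ W + b)

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)

# Hand-set weights (not learned): hidden unit 1 approximates OR,
# hidden unit 2 approximates NAND, and the output unit approximates AND.
W1 = np.array([[20.0, -20.0],
               [20.0, -20.0]])
b1 = np.array([-10.0, 30.0])
W2 = np.array([[20.0], [20.0]])
b2 = np.array([-30.0])

H = logistic_layer(X, W1, b1)     # first layer of logistic regressions
out = logistic_layer(H, W2, b2)   # second layer stacked on top
print(np.round(out).ravel())      # [0. 1. 1. 0.], i.e. XOR
```

In deep learning the same stacked structure is used, but the weights of every layer are found by optimization rather than set by hand.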

NLP is hard: language is ambiguous

Every trace of phrasing, and spelling and tone and timing carries countless signals and contexts and subcontexts.

Every listener interprets these signals in their own way.

Deep NLP:

Word vectors: visualization

Place words in a high-dimensional vector space: wonderful semantic spaces!

Words with similar meanings will cluster together
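"Similar meanings cluster together" is usually measured with cosine similarity between word vectors. The sketch below uses hypothetical 4-d toy vectors written by hand so that the clustering is visible; real embeddings (e.g., 300-d) are learned from large corpora, and the helper `nearest` is made up for illustration.

```python
import numpy as np

# Hypothetical hand-written toy vectors, not learned embeddings
vectors = {
    "hotel": np.array([0.9, 0.8, 0.1, 0.0]),
    "motel": np.array([0.8, 0.9, 0.2, 0.1]),
    "cat":   np.array([0.1, 0.0, 0.9, 0.8]),
    "dog":   np.array([0.0, 0.2, 0.8, 0.9]),
}

def cosine(u, v):
    # Cosine of the angle between u and v: 1 means same direction
    return float(np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v)))

def nearest(word):
    # The most similar other word by cosine similarity
    others = [w for w in vectors if w != word]
    return max(others, key=lambda w: cosine(vectors[word], vectors[w]))

print(nearest("hotel"))  # motel
print(nearest("cat"))    # dog
```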

Directions in the vector space tell you about meaning
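A common way to show that directions carry meaning is the analogy test: "man is to king as woman is to ?" is answered by the word nearest to `king - man + woman`. This sketch uses hypothetical 3-d toy vectors built by hand so that the man→woman offset equals the king→queen offset; with learned embeddings the match is only approximate. The helper `analogy` is made up for illustration.

```python
import numpy as np

# Hypothetical hand-built vectors: the offset woman - man is the same
# as queen - king, so that direction encodes one aspect of meaning.
vectors = {
    "king":  np.array([0.9,  0.7, 0.1]),
    "queen": np.array([0.9, -0.7, 0.1]),
    "man":   np.array([0.1,  0.7, 0.2]),
    "woman": np.array([0.1, -0.7, 0.2]),
    "apple": np.array([-0.5, 0.0, 0.9]),
}

def cosine(u, v):
    return float(np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v)))

def analogy(a, b, c):
    # "a is to b as c is to ?": search near the point b - a + c,
    # excluding the three query words themselves
    target = vectors[b] - vectors[a] + vectors[c]
    candidates = [w for w in vectors if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(vectors[w], target))

print(analogy("man", "king", "woman"))  # queen
```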

What do these dimensions mean? Nothing in particular: the individual dimensions of a 300-d vector have no real meaning on their own

Morphology, sentence structure, semantics (meaning of sentences) → the ANN (e.g., an RNN) makes the decisions for us
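As a rough picture of "the RNN makes the decision": a vanilla RNN reads word vectors one at a time, updates a hidden state `h = tanh(W_x x_t + W_h h + b_h)`, and a softmax on the final state gives class probabilities (e.g., positive/negative sentiment). This is a minimal forward-pass sketch; all sizes and weights are hypothetical and untrained, and the input "sentence" is random stand-in vectors.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes for illustration
embed_dim, hidden_dim, num_classes = 4, 8, 2

# Randomly initialised (untrained) parameters
W_x = rng.normal(scale=0.1, size=(hidden_dim, embed_dim))
W_h = rng.normal(scale=0.1, size=(hidden_dim, hidden_dim))
b_h = np.zeros(hidden_dim)
W_out = rng.normal(scale=0.1, size=(num_classes, hidden_dim))
b_out = np.zeros(num_classes)

def softmax(z):
    z = z - np.max(z)  # shift for numerical stability
    e = np.exp(z)
    return e / e.sum()

def rnn_classify(word_vectors):
    h = np.zeros(hidden_dim)
    for x_t in word_vectors:
        # Vanilla RNN update: combine the new word with the running summary
        h = np.tanh(W_x @ x_t + W_h @ h + b_h)
    # Decision from the final hidden state
    return softmax(W_out @ h + b_out)

sentence = rng.normal(size=(5, embed_dim))  # 5 stand-in word vectors
probs = rnn_classify(sentence)
print(probs)
```

Training would adjust `W_x`, `W_h`, and `W_out` so that these probabilities match labeled examples; the structure of the computation stays the same.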

Sentiment Analysis