Morvan TensorFlow_05 add_layer

import tensorflow as tf

import numpy as np

def add_layer(inputs, in_size, out_size, activation_function=None):
    # Weight matrix of shape [in_size, out_size] (rows, columns)
    Weights = tf.Variable(tf.random_normal([in_size, out_size]))
    # One row of biases, initialised to 0.1
    biases = tf.Variable(tf.zeros([1, out_size]) + 0.1)
    Wx_plus_b = tf.matmul(inputs, Weights) + biases
    if activation_function is None:
        # No activation function: the layer stays linear
        outputs = Wx_plus_b
    else:
        outputs = activation_function(Wx_plus_b)
    return outputs
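To see what `add_layer` computes without building a TensorFlow graph, the same arithmetic can be sketched in plain NumPy. This is only an illustration: `add_layer_np` and `relu` are hypothetical helper names that do not appear in the tutorial, and the weights are drawn with `np.random.default_rng` instead of `tf.random_normal`.

```python
import numpy as np

def relu(z):
    # Element-wise max(0, z), the same nonlinearity tf.nn.relu applies
    return np.maximum(z, 0.0)

def add_layer_np(inputs, in_size, out_size, activation_function=None, rng=None):
    # Hypothetical NumPy counterpart of add_layer, for illustration only
    rng = np.random.default_rng(0) if rng is None else rng
    weights = rng.normal(size=(in_size, out_size))  # shape [in_size, out_size]
    biases = np.zeros((1, out_size)) + 0.1          # one row, broadcast over the batch
    wx_plus_b = inputs @ weights + biases           # shape [batch, out_size]
    if activation_function is None:
        return wx_plus_b
    return activation_function(wx_plus_b)

batch = np.ones((5, 1))  # 5 samples with 1 feature each
hidden = add_layer_np(batch, 1, 10, activation_function=relu)
print(hidden.shape)      # (5, 10)
```

The bias has shape `[1, out_size]`, so broadcasting adds the same row to every sample in the batch.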

x_data = np.linspace(-1, 1, 300)[:, np.newaxis]  # one column; [np.newaxis, :] would give one row

noise = np.random.normal(0, 0.05, x_data.shape)

y_data = np.square(x_data) - 0.5 + noise
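The effect of `np.newaxis` on the shape is easy to check directly. The short sketch below uses only NumPy; the variable names `col` and `row` are chosen here for illustration.

```python
import numpy as np

x = np.linspace(-1, 1, 300)  # 1-D array of 300 points
col = x[:, np.newaxis]       # add a trailing axis: column vector
row = x[np.newaxis, :]       # add a leading axis: row vector

print(x.shape)    # (300,)
print(col.shape)  # (300, 1)
print(row.shape)  # (1, 300)
```

The column form is the one that matches a placeholder of shape `[None, 1]`: 300 samples with one feature each.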

# input layer: 1 neuron
# hidden layer: 10 neurons
# output layer: 1 neuron

xs = tf.placeholder(tf.float32, [None, 1])  # any number of rows, exactly 1 column

ys = tf.placeholder(tf.float32, [None, 1])

l1 = add_layer(xs, 1, 10, activation_function = tf.nn.relu)

prediction = add_layer(l1, 10, 1, activation_function = None)

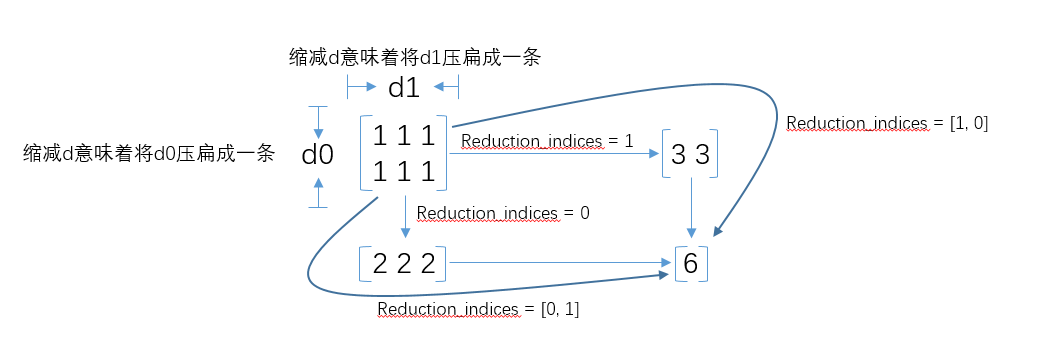

loss = tf.reduce_mean(
    tf.reduce_sum(
        tf.square(ys - prediction),
        reduction_indices=[1]
    )
)
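The loss sums the squared error across each sample's outputs (axis 1) and then averages over the batch. A small NumPy sketch with made-up numbers shows the two steps; the three `ys` and `prediction` values are invented for illustration and are not taken from the tutorial's data.

```python
import numpy as np

# Made-up targets and predictions, one output per sample
ys = np.array([[1.0], [2.0], [3.0]])
prediction = np.array([[1.5], [2.0], [2.0]])

# Step 1: sum of squared errors per sample (axis 1), giving shape (3,)
per_sample = np.sum(np.square(ys - prediction), axis=1)  # values 0.25, 0.0, 1.0

# Step 2: mean over the batch
loss = np.mean(per_sample)  # 1.25 / 3, roughly 0.4167
print(per_sample, loss)
```

With a single output column the inner sum changes nothing numerically; it only removes the trailing axis before the mean is taken.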

train_step = tf.train.GradientDescentOptimizer(0.1).minimize(loss)

init = tf.global_variables_initializer()  # replaces the deprecated tf.initialize_all_variables()

sess = tf.Session()

sess.run(init)

for i in range(1000):
    sess.run(train_step, feed_dict={xs: x_data, ys: y_data})
    if i % 50 == 0:
        # Print the loss every 50 steps
        print(sess.run(loss, feed_dict={xs: x_data, ys: y_data}))
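For readers who want to see what `GradientDescentOptimizer(0.1).minimize(loss)` does on each step, here is a rough NumPy sketch of the same 1-10-1 network trained with hand-written gradients. It is an assumption-laden stand-in, not TensorFlow's implementation: the names `forward`, `mse` and `losses` are hypothetical, the random numbers come from a seeded NumPy generator, and the loss is recorded before each update rather than after it.

```python
import numpy as np

rng = np.random.default_rng(0)

# Same toy data as the tutorial: y = x^2 - 0.5 plus Gaussian noise
x_data = np.linspace(-1, 1, 300)[:, np.newaxis]
y_data = np.square(x_data) - 0.5 + rng.normal(0, 0.05, x_data.shape)

# 1 -> 10 -> 1 network, initialised the way add_layer does it
W1 = rng.normal(size=(1, 10))
b1 = np.zeros((1, 10)) + 0.1
W2 = rng.normal(size=(10, 1))
b2 = np.zeros((1, 1)) + 0.1

def forward(x):
    z1 = x @ W1 + b1         # hidden pre-activation
    h = np.maximum(z1, 0.0)  # ReLU
    return z1, h, h @ W2 + b2

def mse(pred, y):
    # Mean over the batch of the per-sample summed squared error
    return np.mean(np.sum(np.square(y - pred), axis=1))

lr = 0.1
n = x_data.shape[0]
losses = []
for i in range(1000):
    z1, h, pred = forward(x_data)
    losses.append(mse(pred, y_data))  # loss before this step's update

    # Backward pass: gradients of the loss with respect to each parameter
    d_pred = 2.0 * (pred - y_data) / n
    dW2 = h.T @ d_pred
    db2 = d_pred.sum(axis=0, keepdims=True)
    d_z1 = (d_pred @ W2.T) * (z1 > 0)  # ReLU passes gradient only where z1 > 0
    dW1 = x_data.T @ d_z1
    db1 = d_z1.sum(axis=0, keepdims=True)

    # Plain gradient descent update
    W1 -= lr * dW1
    b1 -= lr * db1
    W2 -= lr * dW2
    b2 -= lr * db2

    if i % 50 == 0:
        print(losses[-1])
```

The printed values should shrink as training proceeds, which is the same behaviour the TensorFlow loop reports every 50 steps.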

