OpenStack base environment configuration

1. Network interface configuration

[root@controller ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens33
TYPE=Ethernet
BOOTPROTO=none
NAME=ens33
DEVICE=ens33
ONBOOT=yes
IPADDR=10.0.0.11
GATEWAY=10.0.0.2
DNS1=114.114.114.114
[root@controller ~]#

Disable SELinux

[root@controller ~]# sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config
[root@controller ~]# setenforce 0

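Configure the yum repositories

echo '[local]
name=local
baseurl=file:///mnt
gpgcheck=0
[openstack]
name=openstack
baseurl=file:///opt/repo
gpgcheck=0' >/etc/yum.repos.d/local.repo

echo 'mount /dev/cdrom /mnt' >>/etc/rc.local ## mount the installation image automatically at boot

Note: on CentOS 7, /etc/rc.d/rc.local is run at boot only if it is executable (chmod +x /etc/rc.d/rc.local).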
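The repository file can also be written with a here-document, which keeps one option per line and avoids quoting a long string. This is a minimal sketch; the function name write_local_repo and its target-path argument are illustrative and not part of the original steps.

```shell
#!/bin/sh
# Sketch: write the two local repositories to the file given as $1.
# write_local_repo is a hypothetical helper name.
write_local_repo() {
    cat > "$1" <<'EOF'
[local]
name=local
baseurl=file:///mnt
gpgcheck=0

[openstack]
name=openstack
baseurl=file:///opt/repo
gpgcheck=0
EOF
}

# Usage on the real system (as root):
#   write_local_repo /etc/yum.repos.d/local.repo
```

Writing to a scratch path first makes it easy to inspect the result before replacing the real file.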

I. Base configuration

1. Time synchronization

Install chrony on both the controller node and the compute node.

[root@controller ~]# yum install -y chrony
[root@compute1 ~]# yum install -y chrony

Server configuration

[root@controller ~]# cat /etc/chrony.conf
# Use public servers from the pool.ntp.org project.
# Please consider joining the pool (http://www.pool.ntp.org/join.html).
### Sync time from the Aliyun NTP server
server ntp1.aliyun.com iburst

# Record the rate at which the system clock gains/losses time.
driftfile /var/lib/chrony/drift

# Allow the system clock to be stepped in the first three updates
# if its offset is larger than 1 second.
makestep 1.0 3

# Enable kernel synchronization of the real-time clock (RTC).
rtcsync

# Enable hardware timestamping on all interfaces that support it.
#hwtimestamp *

# Increase the minimum number of selectable sources required to adjust
# the system clock.
#minsources 2

# Allow NTP client access from local network.
#allow 192.168.0.0/16
### Local subnet that is allowed to sync from this server
allow 10.0.0.0/24

# Serve time even if not synchronized to a time source.
#local stratum 10

# Specify file containing keys for NTP authentication.
#keyfile /etc/chrony.keys

# Specify directory for log files.
logdir /var/log/chrony

# Select which information is logged.
#log measurements statistics tracking
[root@controller ~]#

Client configuration

[root@compute1 ~]# cat /etc/chrony.conf
# Use public servers from the pool.ntp.org project.
# Please consider joining the pool (http://www.pool.ntp.org/join.html).
### Only the server to sync from needs to be set
server 10.0.0.11 iburst

# Record the rate at which the system clock gains/losses time.
driftfile /var/lib/chrony/drift

# Allow the system clock to be stepped in the first three updates
# if its offset is larger than 1 second.
makestep 1.0 3

# Enable kernel synchronization of the real-time clock (RTC).
rtcsync

# Enable hardware timestamping on all interfaces that support it.
#hwtimestamp *

# Increase the minimum number of selectable sources required to adjust
# the system clock.
#minsources 2

# Allow NTP client access from local network.
#allow 192.168.0.0/16

# Serve time even if not synchronized to a time source.
#local stratum 10

# Specify file containing keys for NTP authentication.
#keyfile /etc/chrony.keys

# Specify directory for log files.
logdir /var/log/chrony

# Select which information is logged.
#log measurements statistics tracking
[root@compute1 ~]#

Restart the service once the configuration is in place

[root@controller ~]# systemctl restart chronyd

[root@compute1 ~]# systemctl restart chronyd

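Note: if the firewall on the time server is enabled, clients will fail to synchronize. Either disable the firewall or allow NTP traffic (UDP port 123) through it.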
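Whether a node is actually synchronized can be checked from the output of chronyc sources, where the currently selected source is marked with "^*". The sketch below wraps that check in a small function; has_synced_source is a hypothetical helper name, and the firewalld commands in the comments assume firewalld is the firewall in use.

```shell
#!/bin/sh
# Sketch: succeed if `chronyc sources` output (read from stdin) contains a
# currently selected source, which chronyc marks with "^*".
# has_synced_source is a hypothetical helper name.
has_synced_source() {
    grep -q '^\^\*'
}

# Usage on a node:
#   chronyc sources | has_synced_source && echo "time is synchronized"
#
# To allow NTP through firewalld on the server instead of disabling it
# (assumes firewalld is in use):
#   firewall-cmd --permanent --add-service=ntp
#   firewall-cmd --reload
```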

2. Install the OpenStack client and openstack-selinux

First configure an online yum repository, for example from the Aliyun mirror:

https://developer.aliyun.com/mirror

Install on all nodes

[root@controller ~]# yum install -y python-openstackclient openstack-selinux

[root@compute1 ~]# yum install -y python-openstackclient openstack-selinux

3. Install the database on the controller node

[root@controller ~]# yum install -y mariadb mariadb-server python2-PyMySQL

Configuration

echo '[mysqld]
bind-address = 10.0.0.11
default-storage-engine = innodb
innodb_file_per_table
max_connections = 4096
collation-server = utf8_general_ci
character-set-server = utf8' >/etc/my.cnf.d/openstack.cnf

Start the service and enable it at boot

[root@controller ~]# systemctl start mariadb

[root@controller ~]# systemctl enable mariadb

Secure the database installation

[root@controller ~]# mysql_secure_installation

[root@controller ~]# mysql_secure_installation

NOTE: RUNNING ALL PARTS OF THIS SCRIPT IS RECOMMENDED FOR ALL MariaDB
      SERVERS IN PRODUCTION USE! PLEASE READ EACH STEP CAREFULLY!

In order to log into MariaDB to secure it, we'll need the current
password for the root user. If you've just installed MariaDB, and
you haven't set the root password yet, the password will be blank,
so you should just press enter here.

Enter current password for root (enter for none):
OK, successfully used password, moving on...

Setting the root password ensures that nobody can log into the MariaDB
root user without the proper authorisation.

Set root password? [Y/n] n
 ... skipping.

By default, a MariaDB installation has an anonymous user, allowing anyone
to log into MariaDB without having to have a user account created for
them. This is intended only for testing, and to make the installation
go a bit smoother. You should remove them before moving into a
production environment.

Remove anonymous users? [Y/n] y
 ... Success!

Normally, root should only be allowed to connect from 'localhost'. This
ensures that someone cannot guess at the root password from the network.

Disallow root login remotely? [Y/n] y
 ... Success!

By default, MariaDB comes with a database named 'test' that anyone can
access. This is also intended only for testing, and should be removed
before moving into a production environment.

Remove test database and access to it? [Y/n] y
 - Dropping test database...
 ... Success!
 - Removing privileges on test database...
 ... Success!

Reloading the privilege tables will ensure that all changes made so far
will take effect immediately.

Reload privilege tables now? [Y/n] y
 ... Success!

Cleaning up...

All done! If you've completed all of the above steps, your MariaDB
installation should now be secure.

Thanks for using MariaDB!
[root@controller ~]#

4. Install the message queue

[root@controller ~]# yum install rabbitmq-server -y

Enable the service at boot and start it

[root@controller ~]# systemctl enable rabbitmq-server.service

[root@controller ~]# systemctl start rabbitmq-server.service

Add the openstack user

[root@controller ~]# rabbitmqctl add_user openstack RABBIT_PASS

Grant the openstack user configure, write, and read permissions (the three patterns, in that order)

[root@controller ~]# rabbitmqctl set_permissions openstack ".*" ".*" ".*"

RabbitMQ now listens on two ports

[root@controller ~]# netstat -lntup
Active Internet connections (only servers)
Proto Recv-Q Send-Q Local Address      Foreign Address    State     PID/Program name
tcp        0      0 0.0.0.0:25672      0.0.0.0:*          LISTEN    24498/beam.smp    ### used for RabbitMQ clustering
tcp        0      0 0.0.0.0:4369       0.0.0.0:*          LISTEN    1/systemd
tcp        0      0 0.0.0.0:22         0.0.0.0:*          LISTEN    9537/sshd
tcp        0      0 127.0.0.1:25       0.0.0.0:*          LISTEN    11063/master
tcp6       0      0 :::5672            :::*               LISTEN    24498/beam.smp    ### used by clients
tcp6       0      0 :::3306            :::*               LISTEN    24313/mysqld
tcp6       0      0 :::22              :::*               LISTEN    9537/sshd
tcp6       0      0 ::1:25             :::*               LISTEN    11063/master
udp        0      0 0.0.0.0:123        0.0.0.0:*                    23856/chronyd
udp        0      0 127.0.0.1:323      0.0.0.0:*                    23856/chronyd
udp6       0      0 ::1:323            :::*                         23856/chronyd

Enable the RabbitMQ management plugin, which makes monitoring easier later on

[root@controller ~]# rabbitmq-plugins enable rabbitmq_management

This adds one more listening port (15672)

[root@controller ~]# netstat -lntup
Active Internet connections (only servers)
Proto Recv-Q Send-Q Local Address      Foreign Address    State     PID/Program name
tcp        0      0 0.0.0.0:25672      0.0.0.0:*          LISTEN    24498/beam.smp
tcp        0      0 0.0.0.0:4369       0.0.0.0:*          LISTEN    1/systemd
tcp        0      0 0.0.0.0:22         0.0.0.0:*          LISTEN    9537/sshd
tcp        0      0 0.0.0.0:15672      0.0.0.0:*          LISTEN    24498/beam.smp
tcp        0      0 127.0.0.1:25       0.0.0.0:*          LISTEN    11063/master
tcp6       0      0 :::5672            :::*               LISTEN    24498/beam.smp
tcp6       0      0 :::3306            :::*               LISTEN    24313/mysqld
tcp6       0      0 :::22              :::*               LISTEN    9537/sshd
tcp6       0      0 ::1:25             :::*               LISTEN    11063/master
udp        0      0 0.0.0.0:123        0.0.0.0:*                    23856/chronyd
udp        0      0 127.0.0.1:323      0.0.0.0:*                    23856/chronyd
udp6       0      0 ::1:323            :::*                         23856/chronyd
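Instead of reading the netstat listing by eye, the expected ports can be checked with a small filter. This is a sketch only; ports_listening is a hypothetical helper name, and it assumes the column layout of netstat -lnt shown above (local address in column 4, state in column 6).

```shell
#!/bin/sh
# Sketch: read `netstat -lnt` output on stdin and succeed only if every port
# given as an argument appears on a LISTEN line.
# ports_listening is a hypothetical helper name.
ports_listening() {
    # Take the text after the last ':' of the local address as the port.
    listing=$(awk '$6 == "LISTEN" { n = split($4, a, ":"); print a[n] }')
    for port in "$@"; do
        printf '%s\n' "$listing" | grep -qx "$port" || return 1
    done
}

# Usage on the controller:
#   netstat -lnt | ports_listening 5672 25672 15672 && echo "RabbitMQ ports are open"
```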

Open port 15672 in a browser to log in to the RabbitMQ management UI: http://10.0.0.11:15672

5. Install memcached on the controller node to cache tokens

[root@controller ~]# yum install -y memcached python-memcached

Edit the configuration so that memcached listens on the management IP

[root@controller ~]# sed -i 's#127.0.0.1#10.0.0.11#g' /etc/sysconfig/memcached

[root@controller ~]# cat /etc/sysconfig/memcached
PORT="11211"
USER="memcached"
MAXCONN="1024"
CACHESIZE="64"
OPTIONS="-l 10.0.0.11,::1"
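In the sed expression above the dots are regular-expression wildcards, so the pattern matches slightly more than the literal address. A stricter variant escapes them. The sketch below assumes the stock file contains OPTIONS="-l 127.0.0.1,::1"; rebind_memcached is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: replace the literal loopback address in a memcached sysconfig file.
# rebind_memcached is a hypothetical helper: rebind_memcached FILE NEW_ADDRESS
rebind_memcached() {
    # Escaped dots match only a literal "127.0.0.1".
    sed -i "s#127\.0\.0\.1#$2#g" "$1"
}

# Usage (try it on a copy first):
#   cp /etc/sysconfig/memcached /tmp/memcached.test
#   rebind_memcached /tmp/memcached.test 10.0.0.11
#   grep '^OPTIONS=' /tmp/memcached.test
```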

Enable the service at boot and start it

[root@controller ~]# systemctl enable memcached.service

[root@controller ~]# systemctl start memcached.service

Listening ports after the restart

[root@controller ~]# systemctl restart memcached.service
[root@controller ~]# netstat -lntup
Active Internet connections (only servers)
Proto Recv-Q Send-Q Local Address      Foreign Address    State     PID/Program name
tcp        0      0 0.0.0.0:25672      0.0.0.0:*          LISTEN    24498/beam.smp
tcp        0      0 10.0.0.11:11211    0.0.0.0:*          LISTEN    25732/memcached
tcp        0      0 0.0.0.0:4369       0.0.0.0:*          LISTEN    1/systemd
tcp        0      0 0.0.0.0:22         0.0.0.0:*          LISTEN    9537/sshd
tcp        0      0 0.0.0.0:15672      0.0.0.0:*          LISTEN    24498/beam.smp
tcp        0      0 127.0.0.1:25       0.0.0.0:*          LISTEN    11063/master
tcp6       0      0 :::5672            :::*               LISTEN    24498/beam.smp
tcp6       0      0 :::3306            :::*               LISTEN    24313/mysqld
tcp6       0      0 ::1:11211          :::*               LISTEN    25732/memcached
tcp6       0      0 :::22              :::*               LISTEN    9537/sshd
tcp6       0      0 ::1:25             :::*               LISTEN    11063/master
udp        0      0 10.0.0.11:11211    0.0.0.0:*                    25732/memcached
udp        0      0 0.0.0.0:123        0.0.0.0:*                    23856/chronyd
udp        0      0 127.0.0.1:323      0.0.0.0:*                    23856/chronyd
udp6       0      0 ::1:11211          :::*                         25732/memcached
udp6       0      0 ::1:323            :::*                         23856/chronyd

II. Identity service

Run the following on the controller node

1. Log in to the database, then create the keystone database and grant privileges on it

MariaDB [(none)]> CREATE DATABASE keystone;
MariaDB [(none)]> GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' IDENTIFIED BY 'KEYSTONE_DBPASS';
MariaDB [(none)]> GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' IDENTIFIED BY 'KEYSTONE_DBPASS';

[root@controller ~]# mysql
Welcome to the MariaDB monitor. Commands end with ; or \g.
Your MariaDB connection id is 2
Server version: 10.1.20-MariaDB MariaDB Server

Copyright (c) 2000, 2016, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]> CREATE DATABASE keystone;
Query OK, 1 row affected (0.00 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' IDENTIFIED BY 'KEYSTONE_DBPASS';
Query OK, 0 rows affected (0.00 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' IDENTIFIED BY 'KEYSTONE_DBPASS';
Query OK, 0 rows affected (0.00 sec)

MariaDB [(none)]> exit
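The same three statements are repeated for every service database in this guide, so they can be generated rather than retyped, which also avoids typos in the host part of a GRANT. This is a sketch under the assumption that the database name and the database user name are identical, as they are here; service_db_sql is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: print the CREATE DATABASE / GRANT statements for one service.
# service_db_sql is a hypothetical helper:
#   $1 = database name (also used as the user name), $2 = password
service_db_sql() {
    printf "CREATE DATABASE %s;\n" "$1"
    for host in localhost %; do
        printf "GRANT ALL PRIVILEGES ON %s.* TO '%s'@'%s' IDENTIFIED BY '%s';\n" \
            "$1" "$1" "$host" "$2"
    done
}

# Usage: review the output first, then pipe it to the client:
#   service_db_sql keystone KEYSTONE_DBPASS
#   service_db_sql keystone KEYSTONE_DBPASS | mysql
```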

2. Install the packages

[root@controller ~]# yum install -y openstack-keystone httpd mod_wsgi

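3. Edit the configuration file

Back up the configuration file, then regenerate it without blank lines and comments

[root@controller ~]# cp /etc/keystone/keystone.conf /etc/keystone/keystone.conf.bak

[root@controller ~]# grep -Ev '^$|#' /etc/keystone/keystone.conf.bak >/etc/keystone/keystone.conf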
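Note that the filter '^$|#' drops every line that contains a '#' anywhere, not only comment lines. That is harmless for the stock file, but a stricter filter removes only blank lines and lines whose first non-blank character is '#'. The sketch below contrasts the two; both function names are hypothetical.

```shell
#!/bin/sh
# Sketch: two ways of stripping comments from a config file.
# strip_any_hash      drops every line containing '#' (the filter used above).
# strip_comment_lines drops only blank lines and lines that start with '#'
#                     (optionally preceded by whitespace).
# Both names are hypothetical helpers taking the file path as $1.
strip_any_hash()      { grep -Ev '^$|#' "$1"; }
strip_comment_lines() { grep -Ev '^[[:space:]]*(#|$)' "$1"; }

# Usage:
#   strip_comment_lines /etc/keystone/keystone.conf.bak >/etc/keystone/keystone.conf
```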

a. Edit the configuration file manually (set admin_token, connection, and provider)

[root@controller ~]# vi /etc/keystone/keystone.conf
[DEFAULT]
admin_token = ADMIN_TOKEN
[assignment]
[auth]
[cache]
[catalog]
[cors]
[cors.subdomain]
[credential]
[database]
connection = mysql+pymysql://keystone:KEYSTONE_DBPASS@controller/keystone
[domain_config]
[endpoint_filter]
[endpoint_policy]
[eventlet_server]
[eventlet_server_ssl]
[federation]
[fernet_tokens]
[identity]
[identity_mapping]
[kvs]
[ldap]
[matchmaker_redis]
[memcache]
[oauth1]
[os_inherit]
[oslo_messaging_amqp]
[oslo_messaging_notifications]
[oslo_messaging_rabbit]
[oslo_middleware]
[oslo_policy]
[paste_deploy]
[policy]
[resource]
[revoke]
[role]
[saml]
[shadow_users]
[signing]
[ssl]
[token]
provider = fernet

b. Alternatively, install the OpenStack configuration editing tool

[root@controller ~]# yum install openstack-utils -y

Edit the configuration file with the tool

[root@controller ~]# openstack-config --set /etc/keystone/keystone.conf DEFAULT admin_token ADMIN_TOKEN
[root@controller ~]# openstack-config --set /etc/keystone/keystone.conf database connection mysql+pymysql://keystone:KEYSTONE_DBPASS@controller/keystone
[root@controller ~]# openstack-config --set /etc/keystone/keystone.conf token provider fernet

[root@controller ~]# cat /etc/keystone/keystone.conf
[DEFAULT]
admin_token = ADMIN_TOKEN
[assignment]
[auth]
[cache]
[catalog]
[cors]
[cors.subdomain]
[credential]
[database]
connection = mysql+pymysql://keystone:KEYSTONE_DBPASS@controller/keystone
[domain_config]
[endpoint_filter]
[endpoint_policy]
[eventlet_server]
[eventlet_server_ssl]
[federation]
[fernet_tokens]
[identity]
[identity_mapping]
[kvs]
[ldap]
[matchmaker_redis]
[memcache]
[oauth1]
[os_inherit]
[oslo_messaging_amqp]
[oslo_messaging_notifications]
[oslo_messaging_rabbit]
[oslo_middleware]
[oslo_policy]
[paste_deploy]
[policy]
[resource]
[revoke]
[role]
[saml]
[shadow_users]
[signing]
[ssl]
[token]
provider = fernet
[tokenless_auth]
[trust]
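Rather than scanning the whole file, a single option can be read back to confirm it was set. The sketch below does this with awk so that it works even where openstack-utils is not installed; ini_get is a hypothetical helper name, and it handles only the simple "key = value" layout shown above.

```shell
#!/bin/sh
# Sketch: print the value of KEY in [SECTION] of an INI-style file.
# ini_get is a hypothetical helper: ini_get FILE SECTION KEY
ini_get() {
    awk -v section="[$2]" -v key="$3" '
        /^\[/ { in_section = ($0 == section); next }   # track the current section
        in_section {
            split($0, kv, "=")
            name = kv[1]
            gsub(/^[ \t]+|[ \t]+$/, "", name)           # trim the key
            if (name == key) {
                value = substr($0, index($0, "=") + 1)  # everything after the first "="
                gsub(/^[ \t]+|[ \t]+$/, "", value)
                print value
            }
        }
    ' "$1"
}

# Usage:
#   ini_get /etc/keystone/keystone.conf token provider
#   ini_get /etc/keystone/keystone.conf database connection
```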

4. Sync the database

Before the sync, the keystone database contains no tables

[root@controller ~]# mysql keystone -e 'show tables;'

Run the sync

[root@controller ~]# su -s /bin/sh -c "keystone-manage db_sync" keystone

After the sync, the database contains the Keystone tables

[root@controller ~]# mysql keystone -e 'show tables;'
+------------------------+
| Tables_in_keystone     |
+------------------------+
| access_token           |
| assignment             |
| config_register        |
| consumer               |
| credential             |
| domain                 |
| endpoint               |
| endpoint_group         |
| federated_user         |
| federation_protocol    |
| group                  |
| id_mapping             |
| identity_provider      |
| idp_remote_ids         |
| implied_role           |
| local_user             |
| mapping                |
| migrate_version        |
| password               |
| policy                 |
| policy_association     |
| project                |
| project_endpoint       |
| project_endpoint_group |
| region                 |
| request_token          |
| revocation_event       |
| role                   |
| sensitive_config       |
| service                |
| service_provider       |
| token                  |
| trust                  |
| trust_role             |
| user                   |
| user_group_membership  |
| whitelisted_config     |
+------------------------+
[root@controller ~]#

5. Initialize the Fernet keys

[root@controller ~]# ll /etc/keystone/
total 104
-rw-r-----. 1 root     keystone  2303 Feb  1  2017 default_catalog.templates
-rw-r-----. 1 root     keystone   661 Dec 20 10:42 keystone.conf
-rw-r-----. 1 root     root     73101 Dec 20 10:19 keystone.conf.bak
-rw-r-----. 1 root     keystone  2400 Feb  1  2017 keystone-paste.ini
-rw-r-----. 1 root     keystone  1046 Feb  1  2017 logging.conf
-rw-r-----. 1 keystone keystone  9699 Feb  1  2017 policy.json
-rw-r-----. 1 keystone keystone   665 Feb  1  2017 sso_callback_template.html

[root@controller ~]# keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

[root@controller ~]# ll /etc/keystone/
total 104
-rw-r-----. 1 root     keystone  2303 Feb  1  2017 default_catalog.templates
drwx------. 2 keystone keystone    24 Dec 20 10:58 fernet-keys
-rw-r-----. 1 root     keystone   661 Dec 20 10:42 keystone.conf
-rw-r-----. 1 root     root     73101 Dec 20 10:19 keystone.conf.bak
-rw-r-----. 1 root     keystone  2400 Feb  1  2017 keystone-paste.ini
-rw-r-----. 1 root     keystone  1046 Feb  1  2017 logging.conf
-rw-r-----. 1 keystone keystone  9699 Feb  1  2017 policy.json
-rw-r-----. 1 keystone keystone   665 Feb  1  2017 sso_callback_template.html

6. Configure httpd

Set ServerName so that httpd starts faster

[root@controller ~]# echo "ServerName controller" >>/etc/httpd/conf/httpd.conf

Create the WSGI configuration file for Keystone

echo 'Listen 5000
Listen 35357

<VirtualHost *:5000>
    WSGIDaemonProcess keystone-public processes=5 threads=1 user=keystone group=keystone display-name=%{GROUP}
    WSGIProcessGroup keystone-public
    WSGIScriptAlias / /usr/bin/keystone-wsgi-public
    WSGIApplicationGroup %{GLOBAL}
    WSGIPassAuthorization On
    ErrorLogFormat "%{cu}t %M"
    ErrorLog /var/log/httpd/keystone-error.log
    CustomLog /var/log/httpd/keystone-access.log combined

    <Directory /usr/bin>
        Require all granted
    </Directory>
</VirtualHost>

<VirtualHost *:35357>
    WSGIDaemonProcess keystone-admin processes=5 threads=1 user=keystone group=keystone display-name=%{GROUP}
    WSGIProcessGroup keystone-admin
    WSGIScriptAlias / /usr/bin/keystone-wsgi-admin
    WSGIApplicationGroup %{GLOBAL}
    WSGIPassAuthorization On
    ErrorLogFormat "%{cu}t %M"
    ErrorLog /var/log/httpd/keystone-error.log
    CustomLog /var/log/httpd/keystone-access.log combined

    <Directory /usr/bin>
        Require all granted
    </Directory>
</VirtualHost>' >/etc/httpd/conf.d/wsgi-keystone.conf

Enable and start httpd, then confirm that ports 5000 and 35357 are listening

[root@controller ~]# systemctl enable httpd.service

[root@controller ~]# systemctl start httpd.service

[root@controller ~]# netstat -lntup
Active Internet connections (only servers)
Proto Recv-Q Send-Q Local Address      Foreign Address    State     PID/Program name
tcp        0      0 0.0.0.0:25672      0.0.0.0:*          LISTEN    9517/beam.smp
tcp        0      0 10.0.0.11:3306     0.0.0.0:*          LISTEN    20963/mysqld
tcp        0      0 10.0.0.11:11211    0.0.0.0:*          LISTEN    9519/memcached
tcp        0      0 0.0.0.0:4369       0.0.0.0:*          LISTEN    1/systemd
tcp        0      0 0.0.0.0:22         0.0.0.0:*          LISTEN    9536/sshd
tcp        0      0 0.0.0.0:15672      0.0.0.0:*          LISTEN    9517/beam.smp
tcp        0      0 127.0.0.1:25       0.0.0.0:*          LISTEN    9941/master
tcp6       0      0 :::5000            :::*               LISTEN    22202/httpd
tcp6       0      0 :::5672            :::*               LISTEN    9517/beam.smp
tcp6       0      0 ::1:11211          :::*               LISTEN    9519/memcached
tcp6       0      0 :::80              :::*               LISTEN    22202/httpd
tcp6       0      0 :::22              :::*               LISTEN    9536/sshd
tcp6       0      0 ::1:25             :::*               LISTEN    9941/master
tcp6       0      0 :::35357           :::*               LISTEN    22202/httpd
udp        0      0 10.0.0.11:11211    0.0.0.0:*                    9519/memcached
udp        0      0 0.0.0.0:123        0.0.0.0:*                    8744/chronyd
udp        0      0 127.0.0.1:323      0.0.0.0:*                    8744/chronyd
udp6       0      0 ::1:11211          :::*                         9519/memcached
udp6       0      0 ::1:323            :::*                         8744/chronyd
[root@controller ~]#

7. Register Keystone in Keystone itself (set the bootstrap token variables first)

[root@controller ~]# export OS_TOKEN=ADMIN_TOKEN
[root@controller ~]# export OS_URL=http://controller:35357/v3
[root@controller ~]# export OS_IDENTITY_API_VERSION=3

[root@controller ~]# env | grep OS
HOSTNAME=controller
OS_IDENTITY_API_VERSION=3
OS_TOKEN=ADMIN_TOKEN
OS_URL=http://controller:35357/v3

8. Create the service and its API endpoints

[root@controller ~]# openstack service create --name keystone --description "OpenStack Identity" identity

[root@controller ~]# openstack endpoint create --region RegionOne identity public http://controller:5000/v3
[root@controller ~]# openstack endpoint create --region RegionOne identity internal http://controller:5000/v3
[root@controller ~]# openstack endpoint create --region RegionOne identity admin http://controller:35357/v3

[root@controller ~]# openstack service create --name keystone --description "OpenStack Identity" identity
+-------------+----------------------------------+
| Field       | Value                            |
+-------------+----------------------------------+
| description | OpenStack Identity               |
| enabled     | True                             |
| id          | b530209fdb8f4d29b687792106fe1314 |
| name        | keystone                         |
| type        | identity                         |
+-------------+----------------------------------+
[root@controller ~]# openstack endpoint create --region RegionOne identity public http://controller:5000/v3
+--------------+----------------------------------+
| Field        | Value                            |
+--------------+----------------------------------+
| enabled      | True                             |
| id           | d0f6303bb2084b9a8592925c501ff233 |
| interface    | public                           |
| region       | RegionOne                        |
| region_id    | RegionOne                        |
| service_id   | b530209fdb8f4d29b687792106fe1314 |
| service_name | keystone                         |
| service_type | identity                         |
| url          | http://controller:5000/v3        |
+--------------+----------------------------------+
[root@controller ~]# openstack endpoint create --region RegionOne identity internal http://controller:5000/v3
+--------------+----------------------------------+
| Field        | Value                            |
+--------------+----------------------------------+
| enabled      | True                             |
| id           | d7cce885611c40828ded55bd30762e8a |
| interface    | internal                         |
| region       | RegionOne                        |
| region_id    | RegionOne                        |
| service_id   | b530209fdb8f4d29b687792106fe1314 |
| service_name | keystone                         |
| service_type | identity                         |
| url          | http://controller:5000/v3        |
+--------------+----------------------------------+
[root@controller ~]# openstack endpoint create --region RegionOne identity admin http://controller:35357/v3
+--------------+----------------------------------+
| Field        | Value                            |
+--------------+----------------------------------+
| enabled      | True                             |
| id           | 5b8f6a9fb42547bc9766d80d97146694 |
| interface    | admin                            |
| region       | RegionOne                        |
| region_id    | RegionOne                        |
| service_id   | b530209fdb8f4d29b687792106fe1314 |
| service_name | keystone                         |
| service_type | identity                         |
| url          | http://controller:35357/v3       |
+--------------+----------------------------------+

9. Create the domain, project, user, and role

[root@controller ~]# openstack domain create --description "Default Domain" default ### create the default domain
[root@controller ~]# openstack project create --domain default --description "Admin Project" admin ### create the admin project in the default domain
[root@controller ~]# openstack user create --domain default --password ADMIN_PASS admin ### create the admin user in the default domain
[root@controller ~]# openstack role create admin ### create the admin role

Associate the project, role, and user

[root@controller ~]# openstack role add --project admin --user admin admin

Note: this grants the admin role to the admin user in the admin project.

10. Create the service project, which will hold the service accounts for glance, nova, and neutron created later

[root@controller ~]# openstack project create --domain default --description "Service Project" service

[root@controller ~]# openstack project create --domain default --description "Service Project" service
+-------------+----------------------------------+
| Field       | Value                            |
+-------------+----------------------------------+
| description | Service Project                  |
| domain_id   | c9f9dbbfdfe34c45b9e18b1aca5aea1c |
| enabled     | True                             |
| id          | 2c07141fc8af4ebfa08956209b0a5b8e |
| is_domain   | False                            |
| name        | service                          |
| parent_id   | c9f9dbbfdfe34c45b9e18b1aca5aea1c |
+-------------+----------------------------------+
[root@controller ~]#

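11. Unset the bootstrap environment variables

[root@controller ~]# env | grep OS
HOSTNAME=controller
OS_IDENTITY_API_VERSION=3
OS_TOKEN=ADMIN_TOKEN
OS_URL=http://controller:35357/v3

a. Unset the token variables by hand

[root@controller ~]# unset OS_TOKEN OS_URL

b. Alternatively, log out of the current session and log in again; the environment variables of the old session are then gone.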
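To clear every OpenStack client variable in one step without logging out, the names can be collected from env. Anchoring the pattern at '^OS_' also avoids matching unrelated variables such as HOSTNAME, which the plain "grep OS" above picks up. A sketch; clear_os_env is a hypothetical helper name, and it must be defined as a function in the current shell (a separate script could not unset the caller's variables).

```shell
#!/bin/sh
# Sketch: unset every exported variable whose name starts with OS_.
# clear_os_env is a hypothetical helper name.
clear_os_env() {
    for name in $(env | grep '^OS_' | cut -d= -f1); do
        unset "$name"
    done
}

# Usage:
#   clear_os_env
#   env | grep '^OS_'    # prints nothing once the variables are gone
```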

12. Request a token as the newly created user

Set the environment variables again

[root@controller ~]# export OS_PROJECT_DOMAIN_NAME=default
[root@controller ~]# export OS_DOMAIN_NAME=default ## Note: normally not needed; it is only a workaround for the problem described below. If a command fails, check the variable names for typos first
[root@controller ~]# export OS_USER_DOMAIN_NAME=default
[root@controller ~]# export OS_PROJECT_NAME=admin
[root@controller ~]# export OS_USERNAME=admin
[root@controller ~]# export OS_PASSWORD=ADMIN_PASS
[root@controller ~]# export OS_AUTH_URL=http://controller:35357/v3
[root@controller ~]# export OS_IDENTITY_API_VERSION=3
[root@controller ~]# export OS_IMAGE_API_VERSION=2

[root@controller ~]# env | grep OS
HOSTNAME=controller
OS_IMAGE_API_VERSION=2
OS_PROJECT_NAME=admin
OS_IDENTITY_API_VERSION=3
OS_USER_DOMIAN_NAME=default ### misspelled in this session; the correct name is OS_USER_DOMAIN_NAME
OS_PASSWORD=ADMIN_PASS
OS_AUTH_URL=http://controller:35357/v3
OS_USERNAME=admin
OS_PROJECT_DOMAIN_NAME=default

Verify by requesting a token

[root@controller keystone]# openstack token issue

[root@controller keystone]# openstack token issue
+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+
| Field | Value |
+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+
| expires | 2019-12-20T09:14:39.000000Z |
| id | gAAAAABd_ILvWUSJcQbZN8QlZh8SoD_mEdIZ9-8w3JiDQJRlaS9H_bFp5UDefimg1EozBFsovz4Nh4bEE_-xz44YhQVy5vXQYFDPSvMHBqzzaxrjQVDTsmUB75H0oahUJLV_Nr6ptfFnKf5Q0GquaOgPZrXNKXQgHv7IXYJIF35vKRsKKi9FNuM |
| project_id | 45f70e0011bb4c09985709c1a5dccd0d |
| user_id | d1ec935819424b6db22198b528834b4e |
+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+
[root@controller keystone]#

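Problem: the command fails with an authentication error

[root@controller ~]# openstack token issue
The request you have made requires authentication. (HTTP 401) (Request-ID: req-16681189-ed64-4bc7-b6b3-b29fd02284ab)

Solution:

Check the Keystone log under /var/log/keystone/

2019-12-20 16:02:13.115 9663 ERROR keystone.auth.plugins.core [req-ffc8ea83-61b9-4cc5-9a1c-35ecca60252d - - - - -] Could not find domain: default

[root@controller keystone]# export OS_DOMAIN_NAME=default

Note: the env output above lists the variable as OS_USER_DOMIAN_NAME, so OS_USER_DOMAIN_NAME was probably never set in that session. That is the likely root cause; with the name spelled correctly, the OS_DOMAIN_NAME workaround should not be needed.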

Create a script so that the environment variables do not have to be re-entered at every login

[root@controller ~]# vi admin-openrc
export OS_PROJECT_DOMAIN_NAME=default
export OS_DOMAIN_NAME=default
export OS_USER_DOMAIN_NAME=default
export OS_PROJECT_NAME=admin
export OS_USERNAME=admin
export OS_PASSWORD=ADMIN_PASS
export OS_AUTH_URL=http://controller:35357/v3
export OS_IDENTITY_API_VERSION=3
export OS_IMAGE_API_VERSION=2

[root@controller ~]# source admin-openrc
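A misspelled variable name is easy to overlook in env output, so it can help to check explicitly that the expected names are set after sourcing the script. The sketch below lists the ones that are missing; missing_os_vars is a hypothetical helper name, and the list of required names is taken from the exports used in this guide.

```shell
#!/bin/sh
# Sketch: print the name of each expected OpenStack client variable that is
# unset or empty in the current shell.
# missing_os_vars is a hypothetical helper name.
missing_os_vars() {
    for name in OS_PROJECT_DOMAIN_NAME OS_USER_DOMAIN_NAME OS_PROJECT_NAME \
                OS_USERNAME OS_PASSWORD OS_AUTH_URL OS_IDENTITY_API_VERSION; do
        eval "value=\${$name:-}"     # indirect lookup of the variable called $name
        [ -n "$value" ] || printf '%s\n' "$name"
    done
}

# Usage:
#   source admin-openrc
#   missing_os_vars    # no output means every expected variable is set
```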

To run the script automatically at every login, source it from .bashrc in root's home directory

[root@controller ~]# cat .bashrc
# .bashrc

# User specific aliases and functions

alias rm='rm -i'
alias cp='cp -i'
alias mv='mv -i'

# Source global definitions
if [ -f /etc/bashrc ]; then
    . /etc/bashrc
fi
source ~/admin-openrc
[root@controller ~]#

III. Image service

1. Create the database and grant privileges

Log in to the database, create the glance database, and grant privileges on it to the glance user

MariaDB [(none)]> CREATE DATABASE glance;
MariaDB [(none)]> GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' IDENTIFIED BY 'GLANCE_DBPASS';
MariaDB [(none)]> GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' IDENTIFIED BY 'GLANCE_DBPASS';

[root@controller ~]# mysql
Welcome to the MariaDB monitor. Commands end with ; or \g.
Your MariaDB connection id is 3
Server version: 10.1.20-MariaDB MariaDB Server

Copyright (c) 2000, 2016, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]> CREATE DATABASE glance;
Query OK, 1 row affected (0.00 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' IDENTIFIED BY 'GLANCE_DBPASS';
Query OK, 0 rows affected (0.00 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' IDENTIFIED BY 'GLANCE_DBPASS';
Query OK, 0 rows affected (0.00 sec)

MariaDB [(none)]>

2. Create the glance user in Keystone and give it the admin role in the service project

[root@controller ~]# openstack user create --domain default --password GLANCE_PASS glance

[root@controller ~]# openstack role add --project service --user glance admin

[root@controller ~]# openstack user create --domain default --password GLANCE_PASS glance
+-----------+----------------------------------+
| Field     | Value                            |
+-----------+----------------------------------+
| domain_id | c9f9dbbfdfe34c45b9e18b1aca5aea1c |
| enabled   | True                             |
| id        | 69c339ae836d448fb9dae8dca4b3b623 |
| name      | glance                           |
+-----------+----------------------------------+
[root@controller ~]# openstack role add --project service --user glance admin

Review the resulting role assignments

[root@controller ~]# openstack role assignment list
+----------------------+----------------------+-------+----------------------+--------+-----------+
| Role                 | User                 | Group | Project              | Domain | Inherited |
+----------------------+----------------------+-------+----------------------+--------+-----------+
| d63b6106e1a042228b12 | 69c339ae836d448fb9da |       | 2c07141fc8af4ebfa089 |        | False     |
| 2d9d7bd4532c         | e8dca4b3b623         |       | 56209b0a5b8e         |        |           |
| d63b6106e1a042228b12 | d1ec935819424b6db221 |       | 45f70e0011bb4c099857 |        | False     |
| 2d9d7bd4532c         | 98b528834b4e         |       | 09c1a5dccd0d         |        |           |
+----------------------+----------------------+-------+----------------------+--------+-----------+
[root@controller ~]# openstack role list
+----------------------------------+-------+
| ID                               | Name  |
+----------------------------------+-------+
| d63b6106e1a042228b122d9d7bd4532c | admin |
+----------------------------------+-------+
[root@controller ~]# openstack user list
+----------------------------------+--------+
| ID                               | Name   |
+----------------------------------+--------+
| 69c339ae836d448fb9dae8dca4b3b623 | glance |
| d1ec935819424b6db22198b528834b4e | admin  |
+----------------------------------+--------+
[root@controller ~]# openstack project list
+----------------------------------+---------+
| ID                               | Name    |
+----------------------------------+---------+
| 2c07141fc8af4ebfa08956209b0a5b8e | service |
| 45f70e0011bb4c09985709c1a5dccd0d | admin   |
+----------------------------------+---------+
[root@controller ~]#

3. Create the glance service in Keystone and register its API endpoints

[root@controller ~]# openstack service create --name glance --description "OpenStack Image" image
[root@controller ~]# openstack endpoint create --region RegionOne image public http://controller:9292
[root@controller ~]# openstack endpoint create --region RegionOne image internal http://controller:9292
[root@controller ~]# openstack endpoint create --region RegionOne image admin http://controller:9292

[root@controller ~]# openstack service create --name glance --description "OpenStack Image" image
+-------------+----------------------------------+
| Field       | Value                            |
+-------------+----------------------------------+
| description | OpenStack Image                  |
| enabled     | True                             |
| id          | 0fa74611fd2c46f78e52b20231180273 |
| name        | glance                           |
| type        | image                            |
+-------------+----------------------------------+
[root@controller ~]# openstack endpoint create --region RegionOne image public http://controller:9292
+--------------+----------------------------------+
| Field        | Value                            |
+--------------+----------------------------------+
| enabled      | True                             |
| id           | dd6181342d84437884988fd0f5739d21 |
| interface    | public                           |
| region       | RegionOne                        |
| region_id    | RegionOne                        |
| service_id   | 0fa74611fd2c46f78e52b20231180273 |
| service_name | glance                           |
| service_type | image                            |
| url          | http://controller:9292           |
+--------------+----------------------------------+
[root@controller ~]# openstack endpoint create --region RegionOne image internal http://controller:9292
+--------------+----------------------------------+
| Field        | Value                            |
+--------------+----------------------------------+
| enabled      | True                             |
| id           | 55b689aac921492f9052c1a58c029a59 |
| interface    | internal                         |
| region       | RegionOne                        |
| region_id    | RegionOne                        |
| service_id   | 0fa74611fd2c46f78e52b20231180273 |
| service_name | glance                           |
| service_type | image                            |
| url          | http://controller:9292           |
+--------------+----------------------------------+
[root@controller ~]# openstack endpoint create --region RegionOne image admin http://controller:9292
+--------------+----------------------------------+
| Field        | Value                            |
+--------------+----------------------------------+
| enabled      | True                             |
| id           | ca1c5de468434cacb6ee94828351e517 |
| interface    | admin                            |
| region       | RegionOne                        |
| region_id    | RegionOne                        |
| service_id   | 0fa74611fd2c46f78e52b20231180273 |
| service_name | glance                           |
| service_type | image                            |
| url          | http://controller:9292           |
+--------------+----------------------------------+
[root@controller ~]#

4. Install the Glance package

[root@controller ~]# yum install -y openstack-glance

5. Edit the configuration files

Edit /etc/glance/glance-api.conf (back it up, then strip comments and blank lines)

[root@controller ~]# cp /etc/glance/glance-api.conf{,.bak}
[root@controller ~]# grep '^[a-z\[]' /etc/glance/glance-api.conf.bak >/etc/glance/glance-api.conf

Set the options

openstack-config --set /etc/glance/glance-api.conf database connection mysql+pymysql://glance:GLANCE_DBPASS@controller/glance

openstack-config --set /etc/glance/glance-api.conf glance_store stores file,http

openstack-config --set /etc/glance/glance-api.conf glance_store default_store file

openstack-config --set /etc/glance/glance-api.conf glance_store filesystem_store_datadir /var/lib/glance/images/

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken auth_uri http://controller:5000

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken auth_url http://controller:35357

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken memcached_servers controller:11211

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken auth_type password

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken project_domain_name default

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken user_domain_name default

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken project_name service

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken username glance

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken password GLANCE_PASS

openstack-config --set /etc/glance/glance-api.conf paste_deploy flavor keystone

[root@controller glance]# cat /etc/glance/glance-api.conf
[DEFAULT]
[cors]
[cors.subdomain]
[database]
connection = mysql+pymysql://glance:GLANCE_DBPASS@controller/glance
[glance_store]
stores = file,http
default_store = file
filesystem_store_datadir = /var/lib/glance/images/
[image_format]
[keystone_authtoken]
auth_uri = http://controller:5000
auth_url = http://controller:35357
memcached_servers = controller:11211
auth_type = password
project_domain_name = default
user_domain_name = default
project_name = service
username = glance
password = GLANCE_PASS
[matchmaker_redis]
[oslo_concurrency]
[oslo_messaging_amqp]
[oslo_messaging_notifications]
[oslo_messaging_rabbit]
[oslo_policy]
[paste_deploy]
flavor = keystone
[profiler]
[store_type_location_strategy]
[task]
[taskflow_executor]
[root@controller glance]#

Edit /etc/glance/glance-registry.conf

[root@controller ~]# cp /etc/glance/glance-registry.conf{,.bak}
[root@controller ~]# grep '^[a-z\[]' /etc/glance/glance-registry.conf.bak > /etc/glance/glance-registry.conf

Configure the options:

openstack-config --set /etc/glance/glance-registry.conf database connection mysql+pymysql://glance:GLANCE_DBPASS@controller/glance

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken auth_uri http://controller:5000

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken auth_url http://controller:35357

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken memcached_servers controller:11211

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken auth_type password

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken project_domain_name default

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken user_domain_name default

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken project_name service

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken username glance

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken password GLANCE_PASS

openstack-config --set /etc/glance/glance-registry.conf paste_deploy flavor keystone

Verify the MD5 checksums

[root@controller glance]# md5sum /etc/glance/glance-api.conf

3e1a4234c133eda11b413788e001cba3 /etc/glance/glance-api.conf

[root@controller glance]# md5sum /etc/glance/glance-registry.conf

46acabd81a65b924256f56fe34d90b8f /etc/glance/glance-registry.conf
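The checksum comparison above can also be scripted so that a mismatch is reported explicitly. The sketch below assumes GNU coreutils `md5sum`; `verify_md5` is a hypothetical helper name. The expected values are the checksums shown above, and they only match if the file is byte-for-byte identical to the author's (same passwords, same option order).

```shell
# Hypothetical helper: compare a file's MD5 checksum with an expected value.
# Returns 0 on a match; prints both values and returns 1 on a mismatch.
verify_md5() {
    file=$1 expected=$2
    actual=$(md5sum "$file" | awk '{print $1}')
    if [ "$actual" = "$expected" ]; then
        echo "OK: $file"
    else
        echo "MISMATCH: $file (expected $expected, got $actual)" >&2
        return 1
    fi
}

# Usage with the checksums shown above:
#   verify_md5 /etc/glance/glance-api.conf      3e1a4234c133eda11b413788e001cba3
#   verify_md5 /etc/glance/glance-registry.conf 46acabd81a65b924256f56fe34d90b8f
```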

[root@controller glance]# cat /etc/glance/glance-registry.conf
[DEFAULT]
[database]
connection = mysql+pymysql://glance:GLANCE_DBPASS@controller/glance
[glance_store]
[keystone_authtoken]
auth_uri = http://controller:5000
auth_url = http://controller:35357
memcached_servers = controller:11211
auth_type = password
project_domain_name = default
user_domain_name = default
project_name = service
username = glance
password = GLANCE_PASS
[matchmaker_redis]
[oslo_messaging_amqp]
[oslo_messaging_notifications]
[oslo_messaging_rabbit]
[oslo_policy]
[paste_deploy]
flavor = keystone
[profiler]

Note: the same changes can also be made by editing the configuration files directly with vi.


6. Sync the database

[root@controller ~]# su -s /bin/sh -c "glance-manage db_sync" glance

[root@controller glance]# mysql glance -e "show tables;"
+----------------------------------+
| Tables_in_glance                 |
+----------------------------------+
| artifact_blob_locations          |
| artifact_blobs                   |
| artifact_dependencies            |
| artifact_properties              |
| artifact_tags                    |
| artifacts                        |
| image_locations                  |
| image_members                    |
| image_properties                 |
| image_tags                       |
| images                           |
| metadef_namespace_resource_types |
| metadef_namespaces               |
| metadef_objects                  |
| metadef_properties               |
| metadef_resource_types           |
| metadef_tags                     |
| migrate_version                  |
| task_info                        |
| tasks                            |
+----------------------------------+

7. Start the Glance services

[root@controller ~]# systemctl enable openstack-glance-api.service openstack-glance-registry.service

[root@controller ~]# systemctl start openstack-glance-api.service openstack-glance-registry.service

Check the listening ports

[root@controller ~]# netstat -lntup
Active Internet connections (only servers)
Proto Recv-Q Send-Q Local Address           Foreign Address         State       PID/Program name
tcp        0      0 0.0.0.0:9191            0.0.0.0:*               LISTEN      20041/python2
tcp        0      0 0.0.0.0:25672           0.0.0.0:*               LISTEN      9284/beam.smp
tcp        0      0 10.0.0.11:3306          0.0.0.0:*               LISTEN      9572/mysqld
tcp        0      0 10.0.0.11:11211         0.0.0.0:*               LISTEN      9258/memcached
tcp        0      0 0.0.0.0:9292            0.0.0.0:*               LISTEN      20040/python2
tcp        0      0 0.0.0.0:4369            0.0.0.0:*               LISTEN      1/systemd
tcp        0      0 0.0.0.0:22              0.0.0.0:*               LISTEN      9291/sshd
tcp        0      0 0.0.0.0:15672           0.0.0.0:*               LISTEN      9284/beam.smp
tcp        0      0 127.0.0.1:25            0.0.0.0:*               LISTEN      9689/master
tcp6       0      0 :::5672                 :::*                    LISTEN      9284/beam.smp
tcp6       0      0 :::5000                 :::*                    LISTEN      9281/httpd
tcp6       0      0 ::1:11211               :::*                    LISTEN      9258/memcached
tcp6       0      0 :::80                   :::*                    LISTEN      9281/httpd
tcp6       0      0 :::22                   :::*                    LISTEN      9291/sshd
tcp6       0      0 ::1:25                  :::*                    LISTEN      9689/master
tcp6       0      0 :::35357                :::*                    LISTEN      9281/httpd
udp        0      0 10.0.0.11:11211         0.0.0.0:*                           9258/memcached
udp        0      0 0.0.0.0:123             0.0.0.0:*                           8694/chronyd
udp        0      0 127.0.0.1:323           0.0.0.0:*                           8694/chronyd
udp6       0      0 ::1:11211               :::*                                9258/memcached
udp6       0      0 ::1:323                 :::*                                8694/chronyd
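The two entries that matter for Glance in this listing are port 9292 (glance-api) and port 9191 (glance-registry), their default ports. A small sketch for checking them without reading the whole table; `port_listening` is a hypothetical helper that parses `netstat -lnt` style output from standard input.

```shell
# Hypothetical helper: succeed if some TCP socket is listening on the given
# port. Expects `netstat -lnt` (or `netstat -lntup`) output on standard input.
port_listening() {
    awk -v suffix=":$1" '
        $6 == "LISTEN" {
            addr = $4
            # Compare the tail of the local address with ":PORT" so that
            # port 292 does not match an address ending in :9292.
            if (substr(addr, length(addr) - length(suffix) + 1) == suffix)
                found = 1
        }
        END { exit(found ? 0 : 1) }
    '
}

# Usage:
#   for port in 9191 9292; do
#       netstat -lnt | port_listening "$port" || echo "port $port is not listening"
#   done
```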

8. Verify the service

Upload a test image and list it:

[root@controller ~]# openstack image create "cirros" \
  --file cirros-0.3.4-x86_64-disk.img \
  --disk-format qcow2 --container-format bare \
  --public
+------------------+------------------------------------------------------+
| Field            | Value                                                |
+------------------+------------------------------------------------------+
| checksum         | ee1eca47dc88f4879d8a229cc70a07c6                     |
| container_format | bare                                                 |
| created_at       | 2019-12-22T06:35:47Z                                 |
| disk_format      | qcow2                                                |
| file             | /v2/images/4492225b-eb11-4705-90dd-46c8e8cfe238/file |
| id               | 4492225b-eb11-4705-90dd-46c8e8cfe238                 |
| min_disk         | 0                                                    |
| min_ram          | 0                                                    |
| name             | cirros                                               |
| owner            | 45f70e0011bb4c09985709c1a5dccd0d                     |
| protected        | False                                                |
| schema           | /v2/schemas/image                                    |
| size             | 13287936                                             |
| status           | active                                               |
| tags             |                                                      |
| updated_at       | 2019-12-22T06:35:48Z                                 |
| virtual_size     | None                                                 |
| visibility       | public                                               |
+------------------+------------------------------------------------------+
[root@controller ~]# openstack image list
+--------------------------------------+--------+--------+
| ID                                   | Name   | Status |
+--------------------------------------+--------+--------+
| 4492225b-eb11-4705-90dd-46c8e8cfe238 | cirros | active |
+--------------------------------------+--------+--------+
[root@controller ~]#

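Tip: when you delete an image, also delete its records from the database tables. The image-related tables can be listed with: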

[root@controller ~]# mysql glance -e "show tables"| grep images

IV. Nova compute service

Install the services on the controller node

1. Create the databases and grant privileges

MariaDB [(none)]> CREATE DATABASE nova_api;

MariaDB [(none)]> CREATE DATABASE nova;

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_DBPASS';
Query OK, 0 rows affected (0.00 sec)
MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';
Query OK, 0 rows affected (0.00 sec)
MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_DBPASS';
Query OK, 0 rows affected (0.00 sec)
MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';
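The same create-and-grant pattern recurs for each service database. As a sketch, the statements can be generated by a small function and piped into the `mysql` client. `grant_sql` is a hypothetical helper, `NOVA_DBPASS` is the placeholder password used throughout this guide, and `IF NOT EXISTS` is an addition to the original statements that makes the script safe to re-run.

```shell
# Hypothetical helper: print the CREATE DATABASE and GRANT statements for one
# database, granting access from localhost and from any host ('%').
grant_sql() {
    db=$1 user=$2 pass=$3
    # IF NOT EXISTS is not in the original commands; it avoids an error
    # when the database was already created.
    echo "CREATE DATABASE IF NOT EXISTS $db;"
    for host in localhost '%'; do
        echo "GRANT ALL PRIVILEGES ON $db.* TO '$user'@'$host' IDENTIFIED BY '$pass';"
    done
}

# Usage (the function only prints SQL; pipe it into mysql to apply it):
#   { grant_sql nova_api nova NOVA_DBPASS; grant_sql nova nova NOVA_DBPASS; } | mysql
```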

2. Create the service user in Keystone and assign it a role

[root@controller ~]# openstack user create --domain default --password NOVA_PASS nova
+-----------+----------------------------------+
| Field     | Value                            |
+-----------+----------------------------------+
| domain_id | c9f9dbbfdfe34c45b9e18b1aca5aea1c |
| enabled   | True                             |
| id        | fc1e90dcf4de4e69b4a0f7353281bb3e |
| name      | nova                             |
+-----------+----------------------------------+
[root@controller ~]# openstack role add --project service --user nova admin

3. Create the service in Keystone and register the API endpoints

[root@controller ~]# openstack service create --name nova --description "OpenStack Compute" compute
+-------------+----------------------------------+
| Field       | Value                            |
+-------------+----------------------------------+
| description | OpenStack Compute                |
| enabled     | True                             |
| id          | bb74eb99fdf043c082378ddda4c4b3d6 |
| name        | nova                             |
| type        | compute                          |
+-------------+----------------------------------+
[root@controller ~]# openstack endpoint create --region RegionOne compute public http://controller:8774/v2.1/%\(tenant_id\)s
+--------------+-------------------------------------------+
| Field        | Value                                     |
+--------------+-------------------------------------------+
| enabled      | True                                      |
| id           | 40ac81e4976f4ebd84d233b38e3f3a58          |
| interface    | public                                    |
| region       | RegionOne                                 |
| region_id    | RegionOne                                 |
| service_id   | bb74eb99fdf043c082378ddda4c4b3d6          |
| service_name | nova                                      |
| service_type | compute                                   |
| url          | http://controller:8774/v2.1/%(tenant_id)s |
+--------------+-------------------------------------------+
[root@controller ~]# openstack endpoint create --region RegionOne compute internal http://controller:8774/v2.1/%\(tenant_id\)s
+--------------+-------------------------------------------+
| Field        | Value                                     |
+--------------+-------------------------------------------+
| enabled      | True                                      |
| id           | fefa53bb492e4ffb86af076dfa711961          |
| interface    | internal                                  |
| region       | RegionOne                                 |
| region_id    | RegionOne                                 |
| service_id   | bb74eb99fdf043c082378ddda4c4b3d6          |
| service_name | nova                                      |
| service_type | compute                                   |
| url          | http://controller:8774/v2.1/%(tenant_id)s |
+--------------+-------------------------------------------+
[root@controller ~]# openstack endpoint create --region RegionOne compute admin http://controller:8774/v2.1/%\(tenant_id\)s
+--------------+-------------------------------------------+
| Field        | Value                                     |
+--------------+-------------------------------------------+
| enabled      | True                                      |
| id           | 014d1119aaeb4f06b617957b4ca58410          |
| interface    | admin                                     |
| region       | RegionOne                                 |
| region_id    | RegionOne                                 |
| service_id   | bb74eb99fdf043c082378ddda4c4b3d6          |
| service_name | nova                                      |
| service_type | compute                                   |
| url          | http://controller:8774/v2.1/%(tenant_id)s |
+--------------+-------------------------------------------+
[root@controller ~]#
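The three endpoint commands differ only in the interface name, so they can be expressed as a loop. This is a sketch rather than a required step; `create_endpoints` and the `OPENSTACK_CLI` variable are hypothetical names introduced here.

```shell
# Hypothetical helper: register the public, internal, and admin endpoints
# of one service with the same URL. OPENSTACK_CLI defaults to the real
# client; set it to "echo" to preview the commands without running them.
OPENSTACK_CLI="${OPENSTACK_CLI:-openstack}"

create_endpoints() {
    service_type=$1 url=$2
    for interface in public internal admin; do
        "$OPENSTACK_CLI" endpoint create --region RegionOne \
            "$service_type" "$interface" "$url"
    done
}

# Usage (single quotes keep the parentheses literal, so the backslashes
# used in the commands above are not needed):
#   create_endpoints compute 'http://controller:8774/v2.1/%(tenant_id)s'
```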

4. Install the service packages

[root@controller ~]# yum install -y openstack-nova-api openstack-nova-conductor openstack-nova-console openstack-nova-novncproxy openstack-nova-scheduler

5. Edit the configuration file

[root@controller ~]# cp /etc/nova/nova.conf{,.bak}
[root@controller ~]# ls /etc/nova/nova.conf
nova.conf nova.conf.bak
[root@controller ~]# grep '^[a-z\[]' /etc/nova/nova.conf.bak > /etc/nova/nova.conf

openstack-config --set /etc/nova/nova.conf DEFAULT enabled_apis osapi_compute,metadata

openstack-config --set /etc/nova/nova.conf DEFAULT rpc_backend rabbit

openstack-config --set /etc/nova/nova.conf DEFAULT auth_strategy keystone

openstack-config --set /etc/nova/nova.conf DEFAULT my_ip 10.0.0.11

openstack-config --set /etc/nova/nova.conf DEFAULT use_neutron True

openstack-config --set /etc/nova/nova.conf DEFAULT firewall_driver nova.virt.firewall.NoopFirewallDriver

openstack-config --set /etc/nova/nova.conf api_database connection mysql+pymysql://nova:NOVA_DBPASS@controller/nova_api

openstack-config --set /etc/nova/nova.conf database connection mysql+pymysql://nova:NOVA_DBPASS@controller/nova

openstack-config --set /etc/nova/nova.conf glance api_servers http://controller:9292

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_uri http://controller:5000

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_url http://controller:35357

openstack-config --set /etc/nova/nova.conf keystone_authtoken memcached_servers controller:11211

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_type password

openstack-config --set /etc/nova/nova.conf keystone_authtoken project_domain_name default

openstack-config --set /etc/nova/nova.conf keystone_authtoken user_domain_name default

openstack-config --set /etc/nova/nova.conf keystone_authtoken project_name service

openstack-config --set /etc/nova/nova.conf keystone_authtoken username nova

openstack-config --set /etc/nova/nova.conf keystone_authtoken password NOVA_PASS

openstack-config --set /etc/nova/nova.conf oslo_concurrency lock_path /var/lib/nova/tmp

openstack-config --set /etc/nova/nova.conf oslo_messaging_rabbit rabbit_host controller

openstack-config --set /etc/nova/nova.conf oslo_messaging_rabbit rabbit_userid openstack

openstack-config --set /etc/nova/nova.conf oslo_messaging_rabbit rabbit_password RABBIT_PASS

openstack-config --set /etc/nova/nova.conf vnc vncserver_listen '$my_ip'

openstack-config --set /etc/nova/nova.conf vnc vncserver_proxyclient_address '$my_ip'
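The single quotes around `$my_ip` in the two `vnc` commands matter. The value has to reach nova.conf as the literal text `$my_ip`, which Nova's configuration parser then resolves against the `my_ip` option in `[DEFAULT]`. With double quotes or no quotes, the shell would substitute its own variable first. A short sketch of the difference, in which the empty shell variable is an assumption standing in for the normally unset `my_ip`:

```shell
# Demonstrates what each quoting style hands to a command.
# The shell variable my_ip is empty here, standing in for the usual case
# where it is not set in the shell at all.
my_ip=""

literal='$my_ip'      # single quotes: no expansion, nova.conf receives $my_ip
expanded="$my_ip"     # double quotes: the shell expands it to an empty string

printf 'single-quoted: [%s]\n' "$literal"
printf 'double-quoted: [%s]\n' "$expanded"
```

After running the commands above, `grep my_ip /etc/nova/nova.conf` should therefore show the literal `$my_ip` in the `[vnc]` section.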

Verify the checksum

[root@controller ~]# md5sum /etc/nova/nova.conf

47ded61fdd1a79ab91bdb37ce59ef192 /etc/nova/nova.conf

6. Sync the databases

[root@controller ~]# su -s /bin/sh -c "nova-manage api_db sync" nova
[root@controller ~]# su -s /bin/sh -c "nova-manage db sync" nova

[root@controller ~]# mysql nova_api -e 'show tables;'
+--------------------+
| Tables_in_nova_api |
+--------------------+
| build_requests     |
| cell_mappings      |
| flavor_extra_specs |
| flavor_projects    |
| flavors            |
| host_mappings      |
| instance_mappings  |
| migrate_version    |
| request_specs      |
+--------------------+
[root@controller ~]# mysql nova -e 'show tables;'
+--------------------------------------------+
| Tables_in_nova                             |
+--------------------------------------------+
| agent_builds                               |
| aggregate_hosts                            |
| aggregate_metadata                         |
| aggregates                                 |
| allocations                                |
| block_device_mapping                       |
| bw_usage_cache                             |
| cells                                      |
| certificates                               |
| compute_nodes                              |
| console_pools                              |
| consoles                                   |
| dns_domains                                |
| fixed_ips                                  |
| floating_ips                               |
| instance_actions                           |
| instance_actions_events                    |
| instance_extra                             |
| instance_faults                            |
| instance_group_member                      |
| instance_group_policy                      |
| instance_groups                            |
| instance_id_mappings                       |
| instance_info_caches                       |
| instance_metadata                          |
| instance_system_metadata                   |
| instance_type_extra_specs                  |
| instance_type_projects                     |
| instance_types                             |
| instances                                  |
| inventories                                |
| key_pairs                                  |
| migrate_version                            |
| migrations                                 |
| networks                                   |
| pci_devices                                |
| project_user_quotas                        |
| provider_fw_rules                          |
| quota_classes                              |
| quota_usages                               |
| quotas                                     |
| reservations                               |
| resource_provider_aggregates               |
| resource_providers                         |
| s3_images                                  |
| security_group_default_rules               |
| security_group_instance_association        |
| security_group_rules                       |
| security_groups                            |
| services                                   |
| shadow_agent_builds                        |
| shadow_aggregate_hosts                     |
| shadow_aggregate_metadata                  |
| shadow_aggregates                          |
| shadow_block_device_mapping                |
| shadow_bw_usage_cache                      |
| shadow_cells                               |
| shadow_certificates                        |
| shadow_compute_nodes                       |
| shadow_console_pools                       |
| shadow_consoles                            |
| shadow_dns_domains                         |
| shadow_fixed_ips                           |
| shadow_floating_ips                        |
| shadow_instance_actions                    |
| shadow_instance_actions_events             |
| shadow_instance_extra                      |
| shadow_instance_faults                     |
| shadow_instance_group_member               |
| shadow_instance_group_policy               |
| shadow_instance_groups                     |
| shadow_instance_id_mappings                |
| shadow_instance_info_caches                |
| shadow_instance_metadata                   |
| shadow_instance_system_metadata            |
| shadow_instance_type_extra_specs           |
| shadow_instance_type_projects              |
| shadow_instance_types                      |
| shadow_instances                           |
| shadow_key_pairs                           |
| shadow_migrate_version                     |
| shadow_migrations                          |
| shadow_networks                            |
| shadow_pci_devices                         |
| shadow_project_user_quotas                 |
| shadow_provider_fw_rules                   |
| shadow_quota_classes                       |
| shadow_quota_usages                        |
| shadow_quotas                              |
| shadow_reservations                        |
| shadow_s3_images                           |
| shadow_security_group_default_rules        |
| shadow_security_group_instance_association |
| shadow_security_group_rules                |
| shadow_security_groups                     |
| shadow_services                            |
| shadow_snapshot_id_mappings                |
| shadow_snapshots                           |
| shadow_task_log                            |
| shadow_virtual_interfaces                  |
| shadow_volume_id_mappings                  |
| shadow_volume_usage_cache                  |
| snapshot_id_mappings                       |
| snapshots                                  |
| tags                                       |
| task_log                                   |
| virtual_interfaces                         |
| volume_id_mappings                         |
| volume_usage_cache                         |
+--------------------------------------------+

7. Start the services

[root@controller ~]# systemctl enable openstack-nova-api.service \
  openstack-nova-consoleauth.service openstack-nova-scheduler.service \
  openstack-nova-conductor.service openstack-nova-novncproxy.service
[root@controller ~]# systemctl start openstack-nova-api.service \
  openstack-nova-consoleauth.service openstack-nova-scheduler.service \
  openstack-nova-conductor.service openstack-nova-novncproxy.service

Problem:

The services fail to start.

[root@controller ~]# journalctl -xe

Dec 22 20:40:27 controller sudo[19958]: pam_unix(sudo:account): helper binary execve failed: Permission denied

Dec 22 20:40:27 controller sudo[19955]: nova : PAM account management error: Authentication service cannot retrieve authentic

nova-api error log:

2019-12-22 19:14:01.435 18041 CRITICAL nova [-] ProcessExecutionError: Unexpected error while running command.
Command: sudo nova-rootwrap /etc/nova/rootwrap.conf iptables-save -c
Exit code: 1
Stdout: u''
Stderr: u'sudo: PAM account management error: Authentication service cannot retrieve authentication info\n'
2019-12-22 19:14:01.435 18041 ERROR nova Traceback (most recent call last):
2019-12-22 19:14:01.435 18041 ERROR nova   File "/usr/bin/nova-api", line 10, in <module>
2019-12-22 19:14:01.435 18041 ERROR nova     sys.exit(main())
2019-12-22 19:14:01.435 18041 ERROR nova   File "/usr/lib/python2.7/site-packages/nova/cmd/api.py", line 57, in main
2019-12-22 19:14:01.435 18041 ERROR nova     server = service.WSGIService(api, use_ssl=should_use_ssl)
2019-12-22 19:14:01.435 18041 ERROR nova   File "/usr/lib/python2.7/site-packages/nova/service.py", line 358, in __init__
2019-12-22 19:14:01.435 18041 ERROR nova     self.manager = self._get_manager()
2019-12-22 19:14:01.435 18041 ERROR nova   File "/usr/lib/python2.7/site-packages/nova/service.py", line 415, in _get_manager
2019-12-22 19:14:01.435 18041 ERROR nova     return manager_class()
2019-12-22 19:14:01.435 18041 ERROR nova   File "/usr/lib/python2.7/site-packages/nova/api/manager.py", line 30, in __init__
2019-12-22 19:14:01.435 18041 ERROR nova     self.network_driver.metadata_accept()
2019-12-22 19:14:01.435 18041 ERROR nova   File "/usr/lib/python2.7/site-packages/nova/network/linux_net.py", line 706, in metadata_accept
2019-12-22 19:14:01.435 18041 ERROR nova     iptables_manager.apply()
2019-12-22 19:14:01.435 18041 ERROR nova   File "/usr/lib/python2.7/site-packages/nova/network/linux_net.py", line 446, in apply
2019-12-22 19:14:01.435 18041 ERROR nova     self._apply()
2019-12-22 19:14:01.435 18041 ERROR nova   File "/usr/lib/python2.7/site-packages/oslo_concurrency/lockutils.py", line 271, in inner
2019-12-22 19:14:01.435 18041 ERROR nova     return f(*args, **kwargs)
2019-12-22 19:14:01.435 18041 ERROR nova   File "/usr/lib/python2.7/site-packages/nova/network/linux_net.py", line 466, in _apply
2019-12-22 19:14:01.435 18041 ERROR nova     attempts=5)
2019-12-22 19:14:01.435 18041 ERROR nova   File "/usr/lib/python2.7/site-packages/nova/network/linux_net.py", line 1267, in _execute
2019-12-22 19:14:01.435 18041 ERROR nova     return utils.execute(*cmd, **kwargs)
2019-12-22 19:14:01.435 18041 ERROR nova   File "/usr/lib/python2.7/site-packages/nova/utils.py", line 388, in execute
2019-12-22 19:14:01.435 18041 ERROR nova     return RootwrapProcessHelper().execute(*cmd, **kwargs)
2019-12-22 19:14:01.435 18041 ERROR nova   File "/usr/lib/python2.7/site-packages/nova/utils.py", line 271, in execute
2019-12-22 19:14:01.435 18041 ERROR nova     return processutils.execute(*cmd, **kwargs)
2019-12-22 19:14:01.435 18041 ERROR nova   File "/usr/lib/python2.7/site-packages/oslo_concurrency/processutils.py", line 389, in execute
2019-12-22 19:14:01.435 18041 ERROR nova     cmd=sanitized_cmd)
2019-12-22 19:14:01.435 18041 ERROR nova ProcessExecutionError: Unexpected error while running command.
2019-12-22 19:14:01.435 18041 ERROR nova Command: sudo nova-rootwrap /etc/nova/rootwrap.conf iptables-save -c
2019-12-22 19:14:01.435 18041 ERROR nova Exit code: 1
2019-12-22 19:14:01.435 18041 ERROR nova Stdout: u''
2019-12-22 19:14:01.435 18041 ERROR nova Stderr: u'sudo: PAM account management error: Authentication service cannot retrieve authentication info\n'

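Solution:

Disable SELinux

Edit /etc/selinux/config so the change persists across reboots, then switch the running system to permissive mode: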

[root@controller ~]# sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

[root@controller ~]# setenforce 0
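Note that `setenforce 0` only switches the running system to permissive mode, while the `sed` edit takes effect at the next boot. A sketch for confirming what the configuration file will apply after a reboot; `selinux_configured_mode` is a hypothetical helper, and its optional file argument exists only so the function can be tried on a copy of the file.

```shell
# Hypothetical helper: print the SELINUX= mode set in a config file
# (defaults to /etc/selinux/config). SELINUXTYPE= lines are not matched.
selinux_configured_mode() {
    sed -n 's/^SELINUX=//p' "${1:-/etc/selinux/config}"
}

# Usage:
#   selinux_configured_mode      # mode applied at the next boot
#   getenforce                   # mode of the running system
```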

8. Verify the service

[root@controller nova]# nova service-list

Problem: the verification command fails with the following error

ERROR (AuthorizationFailure): Authentication cannot be scoped to multiple targets. Pick one of: project, domain, trust or unscope

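Cause: the environment variables were misconfigured.

Original configuration:
[root@controller ~]# env | grep OS
HOSTNAME=controller
OS_IMAGE_API_VERSION=2
OS_PROJECT_NAME=admin
OS_IDENTITY_API_VERSION=3
OS_USER_DOMIAN_NAME=default    ## misspelled variable name
OS_PASSWORD=ADMIN_PASS
OS_DOMAIN_NAME=default
OS_AUTH_URL=http://controller:35357/v3
OS_USERNAME=admin
OS_PROJECT_DOMAIN_NAME=default

Corrected configuration:
[root@controller ~]# env | grep OS
HOSTNAME=controller
OS_USER_DOMAIN_NAME=default
OS_IMAGE_API_VERSION=2
OS_PROJECT_NAME=admin
OS_IDENTITY_API_VERSION=3
OS_PASSWORD=ADMIN_PASS
OS_AUTH_URL=http://controller:35357/v3
OS_USERNAME=admin
OS_PROJECT_DOMAIN_NAME=default

Compared with the original, the misspelled OS_USER_DOMIAN_NAME is corrected to OS_USER_DOMAIN_NAME and OS_DOMAIN_NAME is no longer set. Setting OS_DOMAIN_NAME together with OS_PROJECT_NAME asks for both a domain scope and a project scope, which is most likely what triggers the "multiple targets" error.

Fix the admin-openrc script, then log in again so that the script is re-applied and the corrected environment variables take effect.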
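Based on the corrected environment shown above, the admin-openrc script would look roughly like the sketch below. The `unset` line is an addition for illustration: it clears the two stale variables from a shell that already sourced the old script, so the fix can be applied without logging out. `ADMIN_PASS` is the placeholder password used in this guide.

```shell
# admin-openrc (sketch reconstructed from the corrected `env` output above)

# Clear leftovers from the faulty script: the misspelled variable and
# OS_DOMAIN_NAME, which the corrected environment no longer sets.
unset OS_USER_DOMIAN_NAME OS_DOMAIN_NAME

export OS_PROJECT_DOMAIN_NAME=default
export OS_USER_DOMAIN_NAME=default
export OS_PROJECT_NAME=admin
export OS_USERNAME=admin
export OS_PASSWORD=ADMIN_PASS
export OS_AUTH_URL=http://controller:35357/v3
export OS_IDENTITY_API_VERSION=3
export OS_IMAGE_API_VERSION=2
```

Apply it to the current shell with `source admin-openrc`, then confirm the result with `env | grep OS_`.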

Result

[root@controller ~]# nova service-list
+----+------------------+------------+----------+---------+-------+----------------------------+-----------------+
| Id | Binary           | Host       | Zone     | Status  | State | Updated_at                 | Disabled Reason |
+----+------------------+------------+----------+---------+-------+----------------------------+-----------------+
| 1  | nova-conductor   | controller | internal | enabled | up    | 2019-12-23T01:30:56.000000 | -               |
| 2  | nova-consoleauth | controller | internal | enabled | up    | 2019-12-23T01:30:56.000000 | -               |
| 3  | nova-scheduler   | controller | internal | enabled | up    | 2019-12-23T01:30:56.000000 | -               |
+----+------------------+------------+----------+---------+-------+----------------------------+-----------------+

The other two services do not appear in this list. The first is nova-api: the fact that the command above returns a normal listing shows that nova-api is working. The second is nova-novncproxy, which can be checked as follows:

[root@controller ~]# netstat -lntup|grep 6080
tcp        0      0 0.0.0.0:6080            0.0.0.0:*               LISTEN      9475/python2
[root@controller ~]# ps -ef | grep 9475
nova       9475      1  0 09:23 ?        00:00:01 /usr/bin/python2 /usr/bin/nova-novncproxy --web /usr/share/novnc/
root      10919  10631  0 09:47 pts/0    00:00:00 grep --color=auto 9475

Install the service on the compute node

1. Install the packages

[root@compute1 ~]# yum install -y openstack-nova-compute openstack-utils.noarch

2. Edit the configuration file

[root@compute1 ~]# cp /etc/nova/nova.conf{,.bak}
[root@compute1 ~]# grep -Ev '^$|#' /etc/nova/nova.conf.bak >/etc/nova/nova.conf

openstack-config --set /etc/nova/nova.conf DEFAULT enabled_apis osapi_compute,metadata

openstack-config --set /etc/nova/nova.conf DEFAULT rpc_backend rabbit

openstack-config --set /etc/nova/nova.conf DEFAULT auth_strategy keystone

openstack-config --set /etc/nova/nova.conf DEFAULT my_ip 10.0.0.31

openstack-config --set /etc/nova/nova.conf DEFAULT use_neutron True

openstack-config --set /etc/nova/nova.conf DEFAULT firewall_driver nova.virt.firewall.NoopFirewallDriver

openstack-config --set /etc/nova/nova.conf glance api_servers http://controller:9292

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_uri http://controller:5000

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_url http://controller:35357

openstack-config --set /etc/nova/nova.conf keystone_authtoken memcached_servers controller:11211

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_type password

openstack-config --set /etc/nova/nova.conf keystone_authtoken project_domain_name default

openstack-config --set /etc/nova/nova.conf keystone_authtoken user_domain_name default

openstack-config --set /etc/nova/nova.conf keystone_authtoken project_name service

openstack-config --set /etc/nova/nova.conf keystone_authtoken username nova

openstack-config --set /etc/nova/nova.conf keystone_authtoken password NOVA_PASS

openstack-config --set /etc/nova/nova.conf oslo_concurrency lock_path /var/lib/nova/tmp

openstack-config --set /etc/nova/nova.conf oslo_messaging_rabbit rabbit_host controller

openstack-config --set /etc/nova/nova.conf oslo_messaging_rabbit rabbit_userid openstack

openstack-config --set /etc/nova/nova.conf oslo_messaging_rabbit rabbit_password RABBIT_PASS

openstack-config --set /etc/nova/nova.conf vnc enabled True

openstack-config --set /etc/nova/nova.conf vnc vncserver_listen 0.0.0.0

openstack-config --set /etc/nova/nova.conf vnc vncserver_proxyclient_address '$my_ip'

openstack-config --set /etc/nova/nova.conf vnc novncproxy_base_url http://controller:6080/vnc_auto.html

3. Start the services

[root@compute1 ~]# systemctl enable libvirtd.service openstack-nova-compute.service

[root@compute1 ~]# systemctl start libvirtd.service openstack-nova-compute.service

4. Verify the service

Check the Nova service status from the controller node:

[root@controller ~]# nova service-list
+----+------------------+------------+----------+---------+-------+----------------------------+-----------------+
| Id | Binary           | Host       | Zone     | Status  | State | Updated_at                 | Disabled Reason |
+----+------------------+------------+----------+---------+-------+----------------------------+-----------------+
| 1  | nova-conductor   | controller | internal | enabled | up    | 2019-12-23T06:28:53.000000 | -               |
| 2  | nova-consoleauth | controller | internal | enabled | up    | 2019-12-23T06:28:50.000000 | -               |
| 3  | nova-scheduler   | controller | internal | enabled | up    | 2019-12-23T06:28:51.000000 | -               |
| 6  | nova-compute     | compute1   | nova     | enabled | up    | 2019-12-23T06:28:48.000000 | -               |
+----+------------------+------------+----------+---------+-------+----------------------------+-----------------+
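For a check that does not rely on reading the table by eye, the output can be filtered for services whose State is not `up`. This is a sketch under the assumption that the table keeps the column order shown above; `services_not_up` is a hypothetical helper that reads `nova service-list` output from standard input.

```shell
# Hypothetical helper: print "binary@host" for every row of a
# `nova service-list` table whose State column is not "up".
# Exits non-zero if any such row exists.
services_not_up() {
    awk -F'|' '
        # Data rows have a numeric Id in the second pipe-separated field;
        # header and border rows are skipped.
        $2 ~ /^ *[0-9]+ *$/ {
            binary = $3; host = $4; state = $7
            gsub(/ /, "", binary); gsub(/ /, "", host); gsub(/ /, "", state)
            if (state != "up") { print binary "@" host; bad = 1 }
        }
        END { exit(bad ? 1 : 0) }
    '
}

# Usage:
#   nova service-list | services_not_up && echo "all nova services are up"
```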

V. Neutron networking service

Install and configure on the controller node

1. Create the database and grant privileges

[root@controller ~]# mysql
Welcome to the MariaDB monitor. Commands end with ; or \g.
Your MariaDB connection id is 44
Server version: 10.1.20-MariaDB MariaDB Server
Copyright (c) 2000, 2016, Oracle, MariaDB Corporation Ab and others.
Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.
MariaDB [(none)]> CREATE DATABASE neutron;
Query OK, 1 row affected (0.00 sec)
MariaDB [(none)]> GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' IDENTIFIED BY 'NEUTRON_DBPASS';
Query OK, 0 rows affected (0.01 sec)
MariaDB [(none)]> GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY 'NEUTRON_DBPASS';
Query OK, 0 rows affected (0.00 sec)
MariaDB [(none)]> exit

2. Create the service user in Keystone and assign it a role

[root@controller ~]# openstack user create --domain default --password NEUTRON_PASS neutron
+-----------+----------------------------------+
| Field     | Value                            |
+-----------+----------------------------------+
| domain_id | c9f9dbbfdfe34c45b9e18b1aca5aea1c |
| enabled   | True                             |
| id        | 25e38bd3c4cb43069ae6f02024b00f1f |
| name      | neutron                          |
+-----------+----------------------------------+
[root@controller ~]# openstack role add --project service --user neutron admin

3. Create the service in Keystone and register the API endpoints

[root@controller ~]# openstack service create --name neutron --description "OpenStack Networking" network
+-------------+----------------------------------+
| Field       | Value                            |
+-------------+----------------------------------+
| description | OpenStack Networking             |
| enabled     | True                             |
| id          | 23811beac4d34f438aeededbe1542041 |
| name        | neutron                          |
| type        | network                          |
+-------------+----------------------------------+
[root@controller ~]# openstack endpoint create --region RegionOne network public http://controller:9696
+--------------+----------------------------------+
| Field        | Value                            |
+--------------+----------------------------------+
| enabled      | True                             |
| id           | fd99523437624934bec3b31c7f222679 |
| interface    | public                           |
| region       | RegionOne                        |
| region_id    | RegionOne                        |
| service_id   | 23811beac4d34f438aeededbe1542041 |
| service_name | neutron                          |
| service_type | network                          |
| url          | http://controller:9696           |
+--------------+----------------------------------+
[root@controller ~]# openstack endpoint create --region RegionOne network internal http://controller:9696
+--------------+----------------------------------+
| Field        | Value                            |
+--------------+----------------------------------+
| enabled      | True                             |
| id           | 7a71f08bc75d47378e9cd09bb9114dca |
| interface    | internal                         |
| region       | RegionOne                        |
| region_id    | RegionOne                        |
| service_id   | 23811beac4d34f438aeededbe1542041 |
| service_name | neutron                          |
| service_type | network                          |
| url          | http://controller:9696           |
+--------------+----------------------------------+
[root@controller ~]# openstack endpoint create --region RegionOne network admin http://controller:9696
+--------------+----------------------------------+
| Field        | Value                            |
+--------------+----------------------------------+
| enabled      | True                             |
| id           | 0a650a177ab14af79a389821c3cb4d67 |
| interface    | admin                            |
| region       | RegionOne                        |
| region_id    | RegionOne                        |
| service_id   | 23811beac4d34f438aeededbe1542041 |
| service_name | neutron                          |
| service_type | network                          |
| url          | http://controller:9696           |
+--------------+----------------------------------+

4. Install the service packages

[root@controller ~]# yum install -y openstack-neutron openstack-neutron-ml2 openstack-neutron-linuxbridge ebtables

5. Edit the configuration files

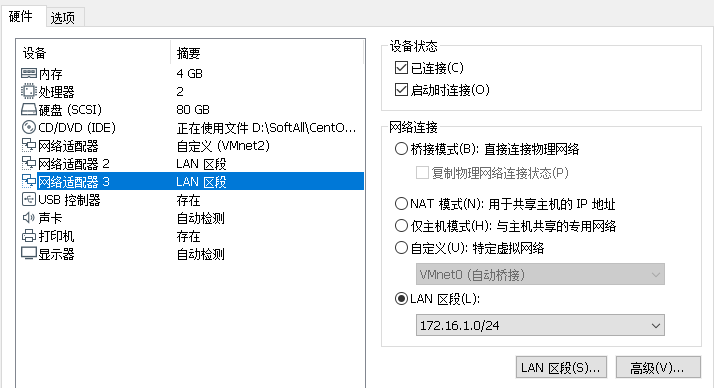

Add a second network interface and configure it

ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
        inet 10.0.0.11 netmask 255.255.255.0 broadcast 10.0.0.255
        inet6 fe80::389d:e340:ea17:3a30 prefixlen 64 scopeid 0x20<link>
        ether 00:0c:29:67:46:37 txqueuelen 1000 (Ethernet)
        RX packets 22405 bytes 7134527 (6.8 MiB)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 16055 bytes 4999491 (4.7 MiB)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0
ens37: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
        inet 10.10.100.11 netmask 255.255.255.0 broadcast 10.10.100.255
        ether 00:0c:29:67:46:41 txqueuelen 1000 (Ethernet)
        RX packets 53 bytes 9300 (9.0 KiB)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 52 bytes 8782 (8.5 KiB)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0
lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536
        inet 127.0.0.1 netmask 255.0.0.0
        inet6 ::1 prefixlen 128 scopeid 0x10<host>
        loop txqueuelen 1000 (Local Loopback)
        RX packets 165658 bytes 48334681 (46.0 MiB)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 165658 bytes 48334681 (46.0 MiB)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0
[root@controller ~]# nmcli con show
NAME                UUID                                  TYPE      DEVICE
ens33               5b7edfad-37ad-4254-a1d9-660686d0f9d7  ethernet  ens33
Wired connection 1  2fe07932-584b-33ee-9e22-abef21281914  ethernet  ens37
[root@controller ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens37
NAME=ens37
DEVICE=ens37
TYPE=Ethernet
ONBOOT="yes"
BOOTPROTO="none"
UUID=2fe07932-584b-33ee-9e22-abef21281914
HWADDR=00:0C:29:67:46:41
[root@controller ~]#

Edit the configuration files

a: Edit /etc/neutron/neutron.conf

cp /etc/neutron/neutron.conf{,.bak}
grep '^[a-z\[]' /etc/neutron/neutron.conf.bak >/etc/neutron/neutron.conf

openstack-config --set /etc/neutron/neutron.conf DEFAULT core_plugin ml2

openstack-config --set /etc/neutron/neutron.conf DEFAULT service_plugins

openstack-config --set /etc/neutron/neutron.conf DEFAULT rpc_backend rabbit

openstack-config --set /etc/neutron/neutron.conf DEFAULT auth_strategy keystone

openstack-config --set /etc/neutron/neutron.conf DEFAULT notify_nova_on_port_status_changes True

openstack-config --set /etc/neutron/neutron.conf DEFAULT notify_nova_on_port_data_changes True

openstack-config --set /etc/neutron/neutron.conf database connection mysql+pymysql://neutron:NEUTRON_DBPASS@controller/neutron

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_uri http://controller:5000

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_url http://controller:35357

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken memcached_servers controller:11211

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_type password

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken project_domain_name default

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken user_domain_name default

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken project_name service

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken username neutron

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken password NEUTRON_PASS

openstack-config --set /etc/neutron/neutron.conf nova auth_url http://controller:35357

openstack-config --set /etc/neutron/neutron.conf nova auth_type password

openstack-config --set /etc/neutron/neutron.conf nova project_domain_name default

openstack-config --set /etc/neutron/neutron.conf nova user_domain_name default

openstack-config --set /etc/neutron/neutron.conf nova region_name RegionOne

openstack-config --set /etc/neutron/neutron.conf nova project_name service

openstack-config --set /etc/neutron/neutron.conf nova username nova

openstack-config --set /etc/neutron/neutron.conf nova password NOVA_PASS

openstack-config --set /etc/neutron/neutron.conf oslo_concurrency lock_path /var/lib/neutron/tmp

openstack-config --set /etc/neutron/neutron.conf oslo_messaging_rabbit rabbit_host controller

openstack-config --set /etc/neutron/neutron.conf oslo_messaging_rabbit rabbit_userid openstack

openstack-config --set /etc/neutron/neutron.conf oslo_messaging_rabbit rabbit_password RABBIT_PASS
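After a long run of --set commands it can help to read a few values back before moving on. The sketch below uses a small awk-based reader so that it runs without any OpenStack tooling; the function name ini_get is hypothetical, and the sample file holds only a handful of the options set above.

```shell
#!/bin/sh
# Hypothetical helper: print the value of <key> inside [<section>] of an
# INI-style file. Prints nothing if the key is not found in that section.
# Usage: ini_get <file> <section> <key>
ini_get() {
    awk -v section="$2" -v key="$3" '
        /^\[/ { in_section = ($0 == ("[" section "]")); next }
        in_section {
            split($0, kv, "=")
            k = kv[1]
            gsub(/^[ \t]+|[ \t]+$/, "", k)
            if (k == key) {
                # Take everything after the first "=" so values that
                # contain "=" themselves are kept whole.
                v = substr($0, index($0, "=") + 1)
                gsub(/^[ \t]+|[ \t]+$/, "", v)
                print v
                exit
            }
        }
    ' "$1"
}

# Demo on a scratch file; on the controller the file would be
# /etc/neutron/neutron.conf.
conf=$(mktemp)
cat > "$conf" <<'EOF'
[DEFAULT]
core_plugin = ml2
auth_strategy = keystone
[nova]
auth_type = password
username = nova
EOF

ini_get "$conf" DEFAULT core_plugin
ini_get "$conf" nova username
```

Scoping the lookup to a section matters here because keys such as auth_type and username appear in both [keystone_authtoken] and [nova].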

b: Edit /etc/neutron/plugins/ml2/ml2_conf.ini

cp /etc/neutron/plugins/ml2/ml2_conf.ini{,.bak}

grep '^[a-z\[]' /etc/neutron/plugins/ml2/ml2_conf.ini.bak > /etc/neutron/plugins/ml2/ml2_conf.ini

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 type_drivers flat,vlan

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 tenant_network_types    ##value left empty on purpose: self-service (tenant) networks are disabled

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 mechanism_drivers linuxbridge

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 extension_drivers port_security

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2_type_flat flat_networks provider

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini securitygroup enable_ipset True

c: Edit /etc/neutron/plugins/ml2/linuxbridge_agent.ini

cp /etc/neutron/plugins/ml2/linuxbridge_agent.ini{,.bak}

grep '^[a-z\[]' /etc/neutron/plugins/ml2/linuxbridge_agent.ini.bak > /etc/neutron/plugins/ml2/linuxbridge_agent.ini

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini linux_bridge physical_interface_mappings provider:ens33

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini securitygroup enable_security_group True

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini securitygroup firewall_driver neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini vxlan enable_vxlan False
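The physical_interface_mappings value set above pairs the network label provider with a host interface, ens33, and that interface has to exist on the node. A quick check could look like the sketch below, which assumes a Linux host where interfaces appear under /sys/class/net; the function names are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: extract the interface name from a
# "label:interface" mapping such as "provider:ens33".
mapping_iface() {
    printf '%s\n' "${1#*:}"
}

# Hypothetical helper: succeed if the interface is known to the kernel
# (Linux exposes each interface as a directory under /sys/class/net).
iface_exists() {
    [ -e "/sys/class/net/$1" ]
}

mapping="provider:ens33"
iface=$(mapping_iface "$mapping")
if iface_exists "$iface"; then
    echo "$iface is present"
else
    echo "$iface not found on this host"
fi
```

On a node whose NIC has a different name, the mapping would need to be changed to match.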

d: Edit /etc/neutron/dhcp_agent.ini

cp /etc/neutron/dhcp_agent.ini{,.bak}

grep -Ev '^$|#' /etc/neutron/dhcp_agent.ini.bak >/etc/neutron/dhcp_agent.ini

openstack-config --set /etc/neutron/dhcp_agent.ini DEFAULT interface_driver neutron.agent.linux.interface.BridgeInterfaceDriver

openstack-config --set /etc/neutron/dhcp_agent.ini DEFAULT dhcp_driver neutron.agent.linux.dhcp.Dnsmasq

openstack-config --set /etc/neutron/dhcp_agent.ini DEFAULT enable_isolated_metadata true

e: Edit /etc/neutron/metadata_agent.ini

cp /etc/neutron/metadata_agent.ini{,.bak}

grep -Ev '^$|#' /etc/neutron/metadata_agent.ini.bak >/etc/neutron/metadata_agent.ini

openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT nova_metadata_ip controller

openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT metadata_proxy_shared_secret METADATA_SECRET

f: Edit /etc/nova/nova.conf

openstack-config --set /etc/nova/nova.conf neutron url http://controller:9696

openstack-config --set /etc/nova/nova.conf neutron auth_url http://controller:35357

openstack-config --set /etc/nova/nova.conf neutron auth_type password

openstack-config --set /etc/nova/nova.conf neutron project_domain_name default

openstack-config --set /etc/nova/nova.conf neutron user_domain_name default

openstack-config --set /etc/nova/nova.conf neutron region_name RegionOne

openstack-config --set /etc/nova/nova.conf neutron project_name service

openstack-config --set /etc/nova/nova.conf neutron username neutron

openstack-config --set /etc/nova/nova.conf neutron password NEUTRON_PASS

openstack-config --set /etc/nova/nova.conf neutron service_metadata_proxy True

openstack-config --set /etc/nova/nova.conf neutron metadata_proxy_shared_secret METADATA_SECRET
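The metadata_proxy_shared_secret value is set twice, once in metadata_agent.ini and once in the [neutron] section of nova.conf, and the two are meant to be identical. The sketch below compares them; the function names are hypothetical and the demo runs on scratch files rather than the real configuration.

```shell
#!/bin/sh
# Hypothetical helper: print the first metadata_proxy_shared_secret value
# found in a file, regardless of section.
secret_of() {
    awk -F'=' '
        { k = $1; gsub(/[ \t]/, "", k) }
        k == "metadata_proxy_shared_secret" {
            v = substr($0, index($0, "=") + 1)
            gsub(/^[ \t]+|[ \t]+$/, "", v)
            print v
            exit
        }
    ' "$1"
}

# Hypothetical helper: succeed only if both files carry the same
# non-empty secret.
secrets_match() {
    a=$(secret_of "$1")
    b=$(secret_of "$2")
    [ -n "$a" ] && [ "$a" = "$b" ]
}

# Demo on scratch files; on the controller these would be
# /etc/neutron/metadata_agent.ini and /etc/nova/nova.conf.
meta=$(mktemp)
nova=$(mktemp)
printf '[DEFAULT]\nmetadata_proxy_shared_secret = METADATA_SECRET\n' > "$meta"
printf '[neutron]\nmetadata_proxy_shared_secret = METADATA_SECRET\n' > "$nova"

if secrets_match "$meta" "$nova"; then
    echo "secrets match"
else
    echo "secrets differ"
fi
```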

6. Initialize the database

Create the symbolic link

[root@controller ~]# ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini
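A plain ln -s fails with "File exists" if the link is already there, for example when the step is repeated. A re-runnable variant might look like the sketch below; the function name link_plugin_ini is hypothetical and the demo uses a scratch directory instead of /etc/neutron.

```shell
#!/bin/sh
# Hypothetical helper: create or replace a symbolic link and print
# where it points afterwards.
# Usage: link_plugin_ini <target> <link>
link_plugin_ini() {
    target=$1; link=$2
    # -f replaces an existing link, -n avoids following it first.
    ln -sfn "$target" "$link"
    readlink "$link"
}

# Demo in a scratch directory; the real paths would be
# /etc/neutron/plugins/ml2/ml2_conf.ini and /etc/neutron/plugin.ini.
demo=$(mktemp -d)
touch "$demo/ml2_conf.ini"
link_plugin_ini "$demo/ml2_conf.ini" "$demo/plugin.ini"
# A second run succeeds as well instead of failing with "File exists".
link_plugin_ini "$demo/ml2_conf.ini" "$demo/plugin.ini"
```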

Synchronize the database

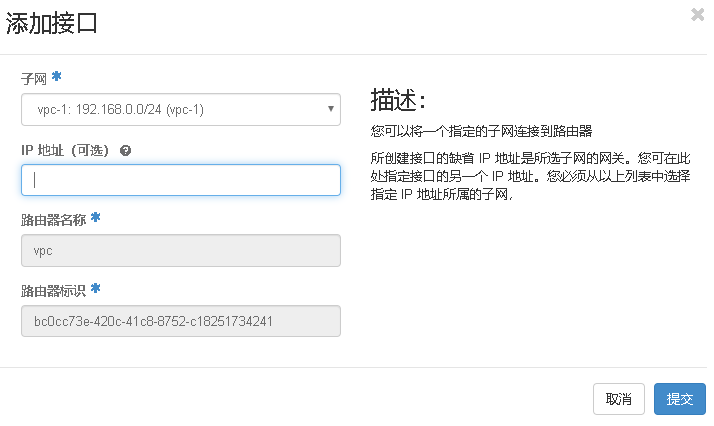

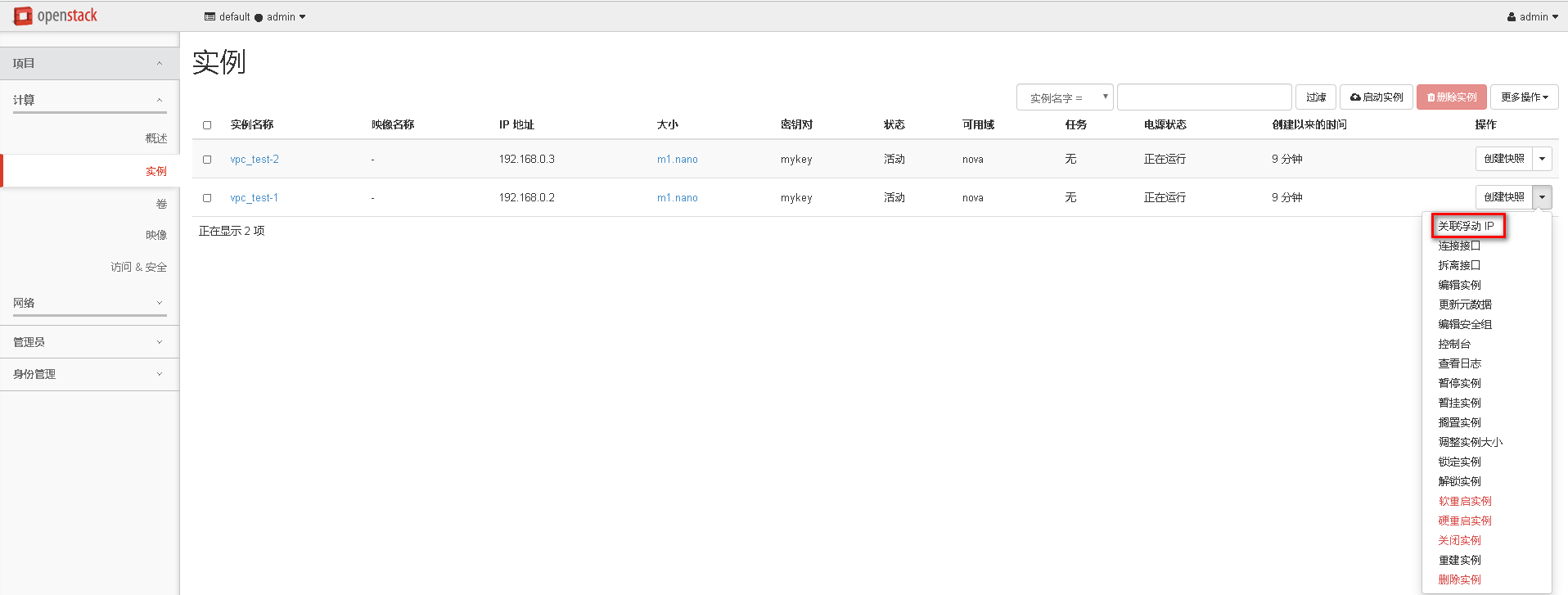

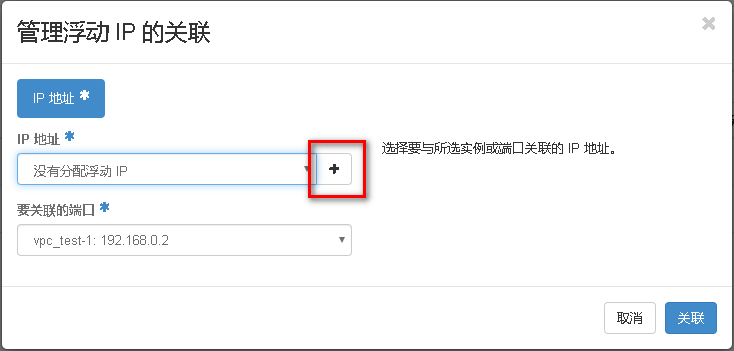

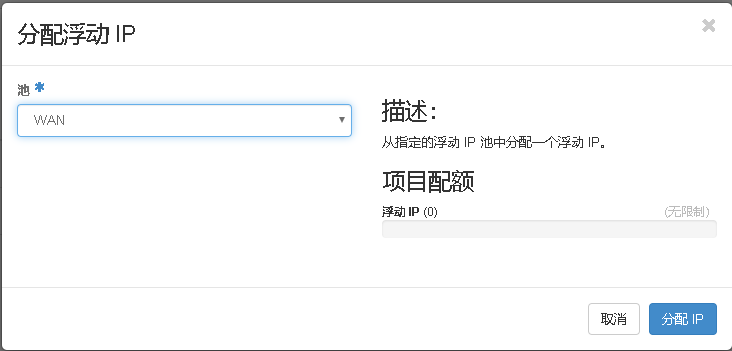

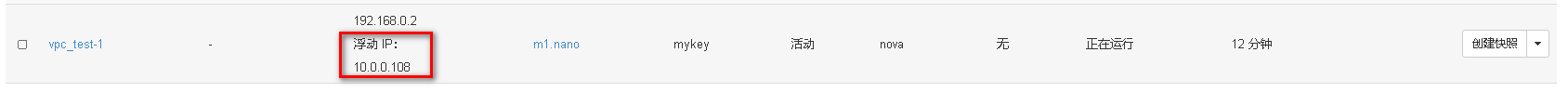

[root@controller ~]# su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron