One-Dimensional Linear Regression in PyTorch

Training code:

import torch
import numpy as np

# Toy dataset: 7 (x, y) pairs, each array has shape (7, 1)
x_train = np.array([[3.3], [4.4], [5.5], [6.71], [6.93], [4.168], [9.799]])
y_train = np.array([[1.7], [2.76], [2.09], [3.19], [1.694], [1.573], [3.366]])

# Convert to float32 tensors (np.array defaults to float64)
x_train = torch.from_numpy(x_train).float()
y_train = torch.from_numpy(y_train).float()

print('x_train:{}'.format(x_train))
print('y_train:{}'.format(y_train))

class LinearRegression(torch.nn.Module):
    def __init__(self):
        super(LinearRegression, self).__init__()
        self.linear = torch.nn.Linear(1, 1)  # one input feature, one output feature

    def forward(self, x):
        out = self.linear(x)
        return out

model = LinearRegression()  # define the model
criterion = torch.nn.MSELoss()  # define the loss function (mean squared error)
optimizer = torch.optim.SGD(model.parameters(), lr=1e-2)  # optimizer
num_epochs = 1000  # train for 1000 epochs

for epoch in range(num_epochs):
    # Since PyTorch 0.4 tensors track gradients themselves,
    # so the torch.autograd.Variable wrapper is no longer needed.
    inputs_ = x_train
    target = y_train

    # forward
    # nn.Module defines __call__, which invokes forward(), so this is
    # essentially equivalent to out = model.forward(inputs_)
    out = model(inputs_)
    loss = criterion(out, target)

    # backward
    optimizer.zero_grad()  # zero the gradients before every backward pass
    loss.backward()
    optimizer.step()  # update the parameters

    if (epoch + 1) % 20 == 0:  # print the training loss every 20 epochs
        print('Epoch[{}/{}], loss: {:.6f}'.format(epoch + 1, num_epochs, loss.item()))

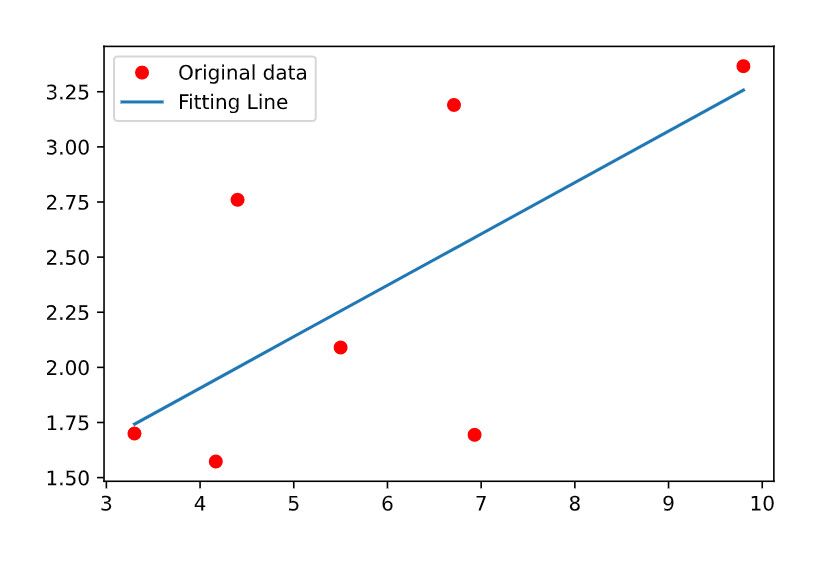
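For a model this small, the SGD result can be checked against the closed-form least-squares solution. The following is a minimal sketch in plain Python, assuming the same seven data points as the training code; the names `xs`, `ys`, `slope` and `intercept` are introduced here for illustration only.

```python
# Closed-form least squares for y = slope * x + intercept,
# using the same seven data points as the training code.
xs = [3.3, 4.4, 5.5, 6.71, 6.93, 4.168, 9.799]
ys = [1.7, 2.76, 2.09, 3.19, 1.694, 1.573, 3.366]

n = len(xs)
mean_x = sum(xs) / n
mean_y = sum(ys) / n

# slope = sum((x - mean_x) * (y - mean_y)) / sum((x - mean_x)^2)
s_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
s_xx = sum((x - mean_x) ** 2 for x in xs)

slope = s_xy / s_xx
intercept = mean_y - slope * mean_x

print('slope: {:.4f}, intercept: {:.4f}'.format(slope, intercept))
```

After training, `model.linear.weight.item()` and `model.linear.bias.item()` should move toward these two values; how close they get depends on the learning rate and the number of epochs.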

Once training is finished, we can look at the predictions:

import matplotlib.pyplot as plt

model.eval()  # switch the model to evaluation mode

with torch.no_grad():  # no gradients are needed for prediction
    predict = model(x_train)
predict = predict.numpy()

plt.plot(x_train.numpy(), y_train.numpy(), 'ro', label='Original data')
plt.plot(x_train.numpy(), predict, label='Fitting Line')
plt.legend()
plt.show()
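The same pattern works for inputs that were not in the training set. The sketch below is self-contained, so it uses a freshly initialized `torch.nn.Linear(1, 1)` in place of the trained `model`; the values in `x_new` and the fixed seed are assumptions made only for illustration. It also confirms that the layer computes `x @ W.T + b`.

```python
import torch

torch.manual_seed(0)  # assumption: fixed seed so the sketch is reproducible

# Stand-in for the trained model: the same layer type as self.linear.
linear = torch.nn.Linear(1, 1)

# Hypothetical new inputs; the shape must be (N, 1), like x_train.
x_new = torch.tensor([[7.0], [8.5]])

with torch.no_grad():  # inference only, no gradient tracking
    y_pred = linear(x_new)
    # nn.Linear applies an affine map: x @ W^T + b
    manual = x_new @ linear.weight.T + linear.bias

print(y_pred.squeeze(1).tolist())
print(torch.allclose(y_pred, manual))
```

With the trained model from the post, replace `linear(x_new)` with `model(x_new)`.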

This article is from cnblogs (博客园), written by aJream. Please credit the source when reposting: https://www.cnblogs.com/ajream/p/15383550.html

