Logistic Regression

Use case: binary classification problems.

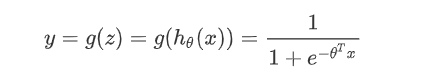

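Why logistic regression exists: linear regression can predict continuous values but cannot directly solve classification problems, where the prediction must be used to decide whether a sample belongs to the positive class or the negative class. Logistic regression therefore takes the output of linear regression and maps it into (0, 1) with the Sigmoid function.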

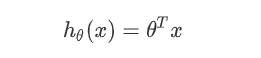

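Decision function of linear regression: the product of the data and θ, where the data matrix has shape (number of samples × number of columns) and θ has shape (number of columns × 1).

Passing this result through the Sigmoid function gives the decision function of logistic regression.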
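As a minimal sketch of the shapes described above, the following computes the linear score Xθ and passes it through the Sigmoid. The data values and the names `X`, `theta`, and `sigmoid` are illustrative assumptions, not taken from the original data set.

```python
import numpy as np

def sigmoid(z):
    """Map any real value into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

# Hypothetical data: 4 samples, 3 columns (the first column is the intercept term).
X = np.array([[1.0, 0.5, 1.2],
              [1.0, -1.0, 0.3],
              [1.0, 2.0, -0.7],
              [1.0, 0.0, 0.0]])
theta = np.array([[0.1], [0.8], [-0.5]])  # shape (columns, 1)

z = X @ theta   # linear regression decision function, shape (samples, 1)
h = sigmoid(z)  # logistic regression decision function, shape (samples, 1)

print(z.shape, h.shape)
print(h.ravel())
```

Each entry of `h` lies strictly between 0 and 1 and can be read as the estimated probability that the corresponding sample is positive.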

Reasons for using the Sigmoid function:

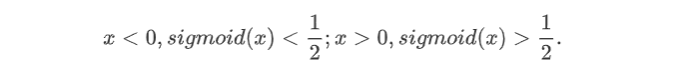

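- It maps any result in (-∞, +∞) into (0, 1), so the output can be read as a probability.

- 1/2 can serve as the decision boundary: the Sigmoid equals 1/2 exactly when its input is 0, so predicting the positive class when the probability is at least 1/2 is the same as checking whether the linear score is non-negative.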

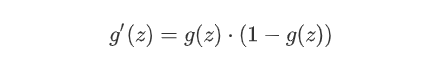

- It has convenient mathematical properties; in particular, its derivative is easy to compute.
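The three properties listed above can be checked numerically. The sketch below is an illustration on an assumed grid of inputs from -10 to 10, not a proof: it verifies that the outputs stay inside (0, 1), that the value at 0 is 1/2, and that the derivative matches the closed form σ(z)(1 - σ(z)) by comparing it with a central finite difference.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

z = np.linspace(-10.0, 10.0, 2001)  # assumed test grid
s = sigmoid(z)

# Property 1: every output lies strictly inside (0, 1) on this grid.
in_unit_interval = bool(np.all((s > 0.0) & (s < 1.0)))

# Property 2: the value at z = 0 is 1/2, which gives the decision boundary.
value_at_zero = sigmoid(0.0)

# Property 3: the derivative has the closed form sigmoid(z) * (1 - sigmoid(z)).
eps = 1e-5
numeric_grad = (sigmoid(z + eps) - sigmoid(z - eps)) / (2.0 * eps)
analytic_grad = s * (1.0 - s)
max_grad_error = float(np.max(np.abs(numeric_grad - analytic_grad)))

print(in_unit_interval, value_at_zero, max_grad_error)
```

The closed-form derivative is what keeps the gradient of the loss cheap to compute: it reuses the Sigmoid value that was already evaluated.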

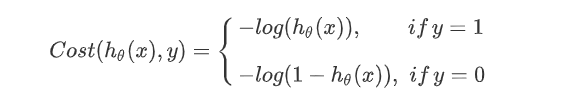

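Loss function of logistic regression

- Linear regression uses the squared loss function. Applied to logistic regression, the squared loss is badly behaved: composed with the Sigmoid it is no longer convex in θ and can have many local minima, which makes it hard for gradient descent to find the optimum.

- The log loss function is used here instead.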
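To illustrate the difference, the sketch below compares the curvature of the two losses for a single hypothetical sample (x = 1, y = 1) as a function of a scalar θ. It only shows that the squared loss has regions of negative curvature (so it is not convex) while the log loss does not; it does not by itself exhibit multiple local minima, and the function names are assumptions made for this example.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def squared_loss(theta, x=1.0, y=1.0):
    return (sigmoid(theta * x) - y) ** 2

def log_loss(theta, x=1.0, y=1.0):
    h = sigmoid(theta * x)
    return -(y * np.log(h) + (1.0 - y) * np.log(1.0 - h))

def second_difference(f, theta, step=1e-2):
    """Finite-difference estimate of the curvature sign: f'' * step**2."""
    return f(theta + step) - 2.0 * f(theta) + f(theta - step)

thetas = np.linspace(-5.0, 5.0, 101)
sq_curv = np.array([second_difference(squared_loss, t) for t in thetas])
log_curv = np.array([second_difference(log_loss, t) for t in thetas])

# A negative entry means the function bends downward there, i.e. it is not convex.
print("squared loss has negative curvature:", bool(np.any(sq_curv < 0)))
print("log loss has negative curvature:", bool(np.any(log_curv < 0)))
```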

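Explanation: the decision function value of logistic regression is the probability p that a sample is positive. For a positive sample we want p, the probability of predicting it as positive, to be as large as possible, so log p should be as large as possible. For a negative sample we want the probability of predicting it as negative, (1 - p), to be as large as possible, so log(1 - p) should be as large as possible.

Why use the logarithm: the sample set contains many samples, and their probabilities must be multiplied together. Each probability lies between 0 and 1, so the product keeps shrinking. Taking the log turns the product into a sum, which does not underflow or exceed floating-point precision.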
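The underflow problem is easy to reproduce. The sketch below uses 2000 made-up probabilities drawn uniformly from (0.1, 0.9); the sample size and range are assumptions chosen only to make the effect visible.

```python
import numpy as np

rng = np.random.default_rng(0)
probs = rng.uniform(0.1, 0.9, size=2000)  # hypothetical per-sample probabilities

product = np.prod(probs)         # direct product of all probabilities
log_sum = np.sum(np.log(probs))  # same quantity on the log scale

print("product of probabilities:", product)
print("sum of log probabilities:", log_sum)
```

The direct product collapses to 0.0 in double precision, so all information about the likelihood is lost, while the sum of logs stays a finite negative number that can still be compared and optimized.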

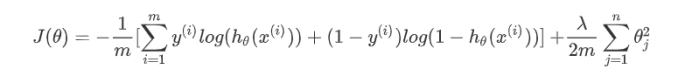

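Loss function: y(1->m) denotes the labels (number of samples × 1) and hθx(1->m) denotes the decision function values, that is, the Sigmoid outputs (number of samples × 1), so the value inside the brackets is a scalar (1 × 1).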
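For reference, the log loss matching the shapes described above can be written out as follows. This is a reconstruction under the assumption that the figure shows the usual cross-entropy form; it agrees with what `cost_func` and `gradient` compute in the code of this note, where m is the number of samples and X is the data matrix including the intercept column.

```latex
h_\theta(x) = \frac{1}{1 + e^{-\theta^{T} x}}

J(\theta) = -\frac{1}{m} \sum_{i=1}^{m} \left[ y^{(i)} \log h_\theta\!\left(x^{(i)}\right) + \left(1 - y^{(i)}\right) \log\!\left(1 - h_\theta\!\left(x^{(i)}\right)\right) \right]

\nabla_\theta J(\theta) = \frac{1}{m} X^{T} \left( h_\theta(X) - y \right)
```

In vector form the sum inside the brackets is the inner product of a (1 × m) row with an (m × 1) column, which is why the result is a 1 × 1 value.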

Code implementation: fitting the decision line of binary logistic regression

    import numpy as np
    from matplotlib import pyplot as plt
    from scipy.optimize import minimize


    class MyLogisticRegression:
        def __init__(self, path="./testSet.txt"):
            # Load the data once when the object is created
            self.data_mat, self.label_mat = self.load_data(path)

        @staticmethod
        def load_data(path):
            """Read rows of "x1 x2 label" and prepend the intercept term 1.0."""
            data_mat = []
            label_mat = []
            with open(path) as fr:
                for line in fr:
                    line_array = line.strip().split()
                    if not line_array:  # skip blank lines
                        continue
                    data_mat.append([1.0, float(line_array[0]), float(line_array[1])])
                    label_mat.append(int(line_array[2]))
            return np.array(data_mat), np.array(label_mat)

        def plot_data(self, xLabel=None, yLabel=None, negSimpleText="neg",
                      posSimpleText="pos", thetas=None):
            """
            Scatter plot of the data (binary classification).
            :param xLabel: x-axis text
            :param yLabel: y-axis text
            :param negSimpleText: legend text for samples labeled 0
            :param posSimpleText: legend text for samples labeled 1
            :param thetas: regression coefficients; if given, the decision line is drawn
            """
            data_set = self.data_mat[:, 1:3]
            # Samples labeled 0 are drawn with "+", samples labeled 1 with a circle
            neg = self.label_mat == 0
            pos = self.label_mat == 1
            fig1 = plt.figure(figsize=(12, 8))
            ax1 = fig1.add_subplot(111)
            ax1.scatter(data_set[neg][:, 0], data_set[neg][:, 1], marker="+", s=60, label=negSimpleText)
            ax1.scatter(data_set[pos][:, 0], data_set[pos][:, 1], marker="o", s=60, label=posSimpleText)
            ax1.set_xlabel(xLabel, fontsize=20)
            if yLabel is not None:
                ax1.set_ylabel(yLabel, fontsize=20)
            # Draw the decision line theta0 + theta1*x1 + theta2*x2 = 0
            if isinstance(thetas, np.ndarray):
                xPlot = np.linspace(data_set[:, 0].min(), data_set[:, 0].max(), 100)
                yPlot = (-thetas[0] - thetas[1] * xPlot) / thetas[2]
                ax1.plot(xPlot, yPlot, c="r", label="Decision Boundary")
            ax1.legend(fontsize=14)
            plt.show()
            plt.close()

        @staticmethod
        def sigmoid(z):
            return 1.0 / (1 + np.exp(-z))

        def cost_func(self, theta, x, y):
            """
            Log loss.
            :param theta: regression coefficients
            :param x: data set including the intercept column
            :param y: labels
            :return: value of the loss function
            """
            y = y.ravel()
            m = y.size
            h = self.sigmoid(x.dot(theta.ravel()))
            J = -(1 / m) * (np.log(h).dot(y) + np.log(1 - h).dot(1 - y))
            # log(0) can produce NaN; treat it as an infinitely bad loss
            if np.isnan(J):
                return np.inf
            return J

        def gradient(self, theta, x, y):
            """
            Gradient of the log loss.
            :param theta: regression coefficients
            :param x: data set including the intercept column
            :param y: labels
            :return: gradient as a flat array
            """
            m = y.size
            h = self.sigmoid(x.dot(theta.reshape(-1, 1)))
            grad = (1 / m) * x.T.dot(h - y.reshape(-1, 1))
            return grad.flatten()

        def gradient_descent(self, alpha=0.01, iterations=2000):
            """
            Fit logistic regression by gradient descent.
            :param alpha: learning rate
            :param iterations: maximum number of iterations
            :return: final coefficients and the list of coefficients per iteration
            """
            m, n = self.data_mat.shape
            label_set = self.label_mat.reshape(-1, 1)
            theta = np.zeros((n, 1))
            theta_set = []
            for i in range(iterations):
                grad = self.gradient(theta, self.data_mat, label_set)
                theta = theta - alpha * grad.reshape(-1, 1)
                theta_set.append(theta)
            return theta, theta_set

        def grad_ascent(self, alpha=0.01, max_cycles=1500):
            """Gradient ascent on the log-likelihood (same update with the sign flipped)."""
            data_mat = self.data_mat
            label_mat = self.label_mat.reshape(-1, 1)
            m, n = data_mat.shape
            weight = np.zeros((n, 1))
            for k in range(max_cycles):
                h = self.sigmoid(data_mat @ weight)
                error = label_mat - h
                weight = weight + (alpha / m) * data_mat.T @ error
            return weight

        def predict(self, theta, data_set, threshold=0.5):
            """Return 1 where the predicted probability reaches the threshold, else 0."""
            p = self.sigmoid(data_set.dot(theta.ravel())) >= threshold
            return p.astype("int")


    if __name__ == '__main__':
        my_logistic_regression = MyLogisticRegression()
        my_logistic_regression.plot_data(xLabel="Raw data")

        # Solve with gradient descent
        res, theta_set = my_logistic_regression.gradient_descent(alpha=0.1, iterations=500)
        my_logistic_regression.plot_data(xLabel="Decision boundary", thetas=res.ravel())

        # Solve with gradient ascent
        res = my_logistic_regression.grad_ascent(max_cycles=5000)
        my_logistic_regression.plot_data(xLabel="Decision boundary", thetas=res.ravel())

        # Solve by minimizing cost_func with scipy
        a = my_logistic_regression.data_mat
        b = my_logistic_regression.label_mat.reshape(-1, 1)
        thetas = np.zeros(a.shape[1])
        res = minimize(my_logistic_regression.cost_func, thetas, args=(a, b), method=None,
                       jac=my_logistic_regression.gradient, options={"maxiter": 400})
        my_logistic_regression.plot_data(xLabel="Decision boundary", thetas=res.x)

        # Test a single sample
        test = np.array([1, 2, 6])
        print("Result of [1, 2, 6]:", my_logistic_regression.predict(res.x, test))
        prob = my_logistic_regression.sigmoid(test.dot(res.x))
        print("Prob of [1, 2, 6]:", prob)

        # Error rate on the training data
        p = my_logistic_regression.predict(res.x, my_logistic_regression.data_mat)
        err = np.sum(p != my_logistic_regression.label_mat.ravel())
        print("Error Rate: %.2f%%" % ((err / p.size) * 100))
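The class above depends on a local `testSet.txt` file. For readers without that file, here is a self-contained sketch of the same gradient descent update on synthetic data. The two Gaussian clusters, the random seed, and the helper names `cost` and `gradient` are assumptions made for this illustration, not part of the original data or code.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def cost(theta, X, y):
    """Mean log loss over all samples."""
    h = sigmoid(X @ theta)
    return -np.mean(y * np.log(h) + (1 - y) * np.log(1 - h))

def gradient(theta, X, y):
    """Gradient of the mean log loss: (1/m) * X^T (h - y)."""
    return X.T @ (sigmoid(X @ theta) - y) / y.size

# Synthetic data (assumption): two Gaussian clusters, 100 samples each.
rng = np.random.default_rng(42)
n = 100
neg = rng.normal(loc=[-2.0, -2.0], scale=1.0, size=(n, 2))
pos = rng.normal(loc=[2.0, 2.0], scale=1.0, size=(n, 2))
X = np.hstack([np.ones((2 * n, 1)), np.vstack([neg, pos])])  # intercept column first
y = np.concatenate([np.zeros(n), np.ones(n)])

theta = np.zeros(3)
initial_cost = cost(theta, X, y)
for _ in range(500):
    theta = theta - 0.1 * gradient(theta, X, y)  # same update as gradient_descent
final_cost = cost(theta, X, y)

predictions = (sigmoid(X @ theta) >= 0.5).astype(int)
accuracy = np.mean(predictions == y)
print("cost before:", initial_cost, "cost after:", final_cost)
print("training accuracy:", accuracy)
```

With all-zero coefficients every sample gets probability 1/2, so the starting cost is log 2; the loop should drive it down as the decision line moves between the two clusters.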
