Tensorflow2.0之时间序列预测-单变量历史数据滚动预测

文章目录

- 项目介绍

- Tensorflow实现

项目介绍

任务:通过前n个时间点的温度来预测第n+1个时间点的温度。

数据集:2009_2016耶拿天气数据集。

Tensorflow实现

1、导入需要的库

import tensorflow as tf

import matplotlib as mpl

import matplotlib.pyplot as plt

import numpy as np

import os

import pandas as pd

mpl.rcParams['figure.figsize'] = (8, 6)

mpl.rcParams['axes.grid'] = False

12345678910

2、引入数据集

zip_path = tf.keras.utils.get_file(

origin='https://storage.googleapis.com/tensorflow/tf-keras-datasets/jena_climate_2009_2016.csv.zip',

fname='jena_climate_2009_2016.csv.zip',

extract=True)

csv_path, _ = os.path.splitext(zip_path)

df = pd.read_csv(csv_path)

df.head()

输出数据集前五行为:

3、划分特征和标签、建模预测

3.1 用单变量预测一个未来时间点

在这里,我们只是用过去的一些温度信息来预测未来的一个时间点的温度。也就是说,数据集中只包括温度信息。

3.1.1 取出只含温度的数据集

uni_data = df['T (degC)']

uni_data.index = df['Date Time']

uni_data.head()

输出数据集中的前五行:

Date Time

01.01.2009 00:10:00 -8.02

01.01.2009 00:20:00 -8.41

01.01.2009 00:30:00 -8.51

01.01.2009 00:40:00 -8.31

01.01.2009 00:50:00 -8.27

Name: T (degC), dtype: float64

1234567

3.1.2 温度随时间变化绘图

uni_data.plot(subplots=True)

1

3.1.3 将数据集转换为数组类型

uni_data = uni_data.values

uni_data.shape

12

得到:

(420551,)

1

可见数据集中包含了420551个时间点的温度信息。

3.1.4 标准化

TRAIN_SPLIT = 300000 # 只取前300000行数据

uni_train_mean = uni_data[:TRAIN_SPLIT].mean()

uni_train_std = uni_data[:TRAIN_SPLIT].std()

uni_data = (uni_data-uni_train_mean)/uni_train_std

1234

3.1.5 写函数来划分特征和标签

def univariate_data(dataset, start_index, end_index, history_size, target_size):

data = []

labels = []

start_index = start_index + history_size

if end_index is None:

end_index = len(dataset) - target_size

for i in range(start_index, end_index):

indices = range(i-history_size, i)

# Reshape data from (history_size,) to (history_size, 1)

data.append(np.reshape(dataset[indices], (history_size, 1)))

labels.append(dataset[i+target_size])

return np.array(data), np.array(labels)

以上函数表示用前history_size个时间点的温度预测第history_size+target_size+1个时间点的温度。start_index和end_index表示数据集datasets起始的时间点,我们将要从这些时间点中取出特征和标签。

3.1.6 从数据集中划分特征和标签

univariate_past_history = 20

univariate_future_target = 0

x_train_uni, y_train_uni = univariate_data(uni_data, 0, TRAIN_SPLIT,

univariate_past_history,

univariate_future_target)

x_val_uni, y_val_uni = univariate_data(uni_data, TRAIN_SPLIT, None,

univariate_past_history,

univariate_future_target)

以上代码说明:每个样本有20个特征(即20个时间点的温度信息),其标签为第21个时间点的温度值,如:

print(uni_data[:25])

print ('Single window of past history')

print (x_train_uni[0])

print ('\n Target temperature to predict')

print (y_train_uni[0])

得到:

array([-1.99766294, -2.04281897, -2.05439744, -2.0312405 , -2.02660912,

-2.00113649, -1.95134907, -1.95134907, -1.98492663, -2.04513467,

-2.08334362, -2.09723778, -2.09376424, -2.09144854, -2.07176515,

-2.07176515, -2.07639653, -2.08913285, -2.09260639, -2.10418486,

-2.10418486, -2.09492208, -2.10997409, -2.11692118, -2.13776242])

Single window of past history

[[-1.99766294]

[-2.04281897]

[-2.05439744]

[-2.0312405 ]

[-2.02660912]

[-2.00113649]

[-1.95134907]

[-1.95134907]

[-1.98492663]

[-2.04513467]

[-2.08334362]

[-2.09723778]

[-2.09376424]

[-2.09144854]

[-2.07176515]

[-2.07176515]

[-2.07639653]

[-2.08913285]

[-2.09260639]

[-2.10418486]]

Target temperature to predict

-2.1041848598100876

可见第一个样本的特征为前20个时间点的温度,其标签为第21个时间点的温度。根据同样的规律,第二个样本的特征为第2个时间点的温度值到第21个时间点的温度值,其标签为第22个时间点的温度……

3.1.7 设置绘图函数

def create_time_steps(length):

return list(range(-length, 0))

def show_plot(plot_data, delta, title):

labels = ['History', 'True Future', 'Model Prediction']

marker = ['.-', 'rx', 'go']

time_steps = create_time_steps(plot_data[0].shape[0]) # 返回-20到-1的列表

if delta:

future = delta

else:

future = 0

plt.title(title)

for i, x in enumerate(plot_data):

if i:

plt.plot(future, plot_data[i], marker[i], markersize=10,

label=labels[i])

else:

plt.plot(time_steps, plot_data[i].flatten(), marker[i], label=labels[i])

plt.legend()

plt.xlim([time_steps[0], (future+5)*2])

plt.xlabel('Time-Step')

return plt

以上代码中的create_time_steps(length) 函数即创造一个从-20到-1的列表,作为x轴的数值。

3.1.8 绘制第一个样本的特征和标签

因为此时还没有预测值,所以绘图函数中的’Model Prediction’是用不到的。

show_plot([x_train_uni[0], y_train_uni[0]], 0, 'Sample Example')

3.1.9 将特征和标签切片

BATCH_SIZE = 256

BUFFER_SIZE = 10000

train_univariate = tf.data.Dataset.from_tensor_slices((x_train_uni, y_train_uni))

train_univariate = train_univariate.cache().shuffle(BUFFER_SIZE).batch(BATCH_SIZE).repeat()

val_univariate = tf.data.Dataset.from_tensor_slices((x_val_uni, y_val_uni))

val_univariate = val_univariate.batch(BATCH_SIZE).repeat()

12345678

我们可以通过:

for x, y in val_univariate.take(1):

print(x.shape)

print(y.shape)

来查看每批样本的尺寸。

(256, 20, 1)

(256,)

3.1.10 建模

simple_lstm_model = tf.keras.models.Sequential([

tf.keras.layers.LSTM(8, input_shape=x_train_uni.shape[-2:]),

tf.keras.layers.Dense(1)

])

simple_lstm_model.compile(optimizer='adam', loss='mae')

3.1.11 训练模型

EVALUATION_INTERVAL = 200

EPOCHS = 10

simple_lstm_model.fit(train_univariate, epochs=EPOCHS,

steps_per_epoch=EVALUATION_INTERVAL,

validation_data=val_univariate, validation_steps=50)

Train for 200 steps, validate for 50 steps

Epoch 1/10

200/200 [==============================] - 5s 25ms/step - loss: 0.4075 - val_loss: 0.1351

Epoch 2/10

200/200 [==============================] - 2s 10ms/step - loss: 0.1118 - val_loss: 0.0359

Epoch 3/10

200/200 [==============================] - 2s 10ms/step - loss: 0.0489 - val_loss: 0.0290

Epoch 4/10

200/200 [==============================] - 2s 10ms/step - loss: 0.0443 - val_loss: 0.0258

Epoch 5/10

200/200 [==============================] - 2s 10ms/step - loss: 0.0299 - val_loss: 0.0235

Epoch 6/10

200/200 [==============================] - 2s 10ms/step - loss: 0.0317 - val_loss: 0.0224

Epoch 7/10

200/200 [==============================] - 2s 11ms/step - loss: 0.0286 - val_loss: 0.0207

Epoch 8/10

200/200 [==============================] - 2s 11ms/step - loss: 0.0263 - val_loss: 0.0197

Epoch 9/10

200/200 [==============================] - 2s 10ms/step - loss: 0.0253 - val_loss: 0.0181

Epoch 10/10

200/200 [==============================] - 2s 11ms/step - loss: 0.0227 - val_loss: 0.0174

3.1.12 将预测值也绘制在图上

for x, y in val_univariate.take(3):

plot = show_plot([x[0].numpy(), y[0].numpy(),

simple_lstm_model.predict(x)[0]], 0, 'Simple LSTM model')

plot.show()

3.2 用多变量预测一个未来时间点

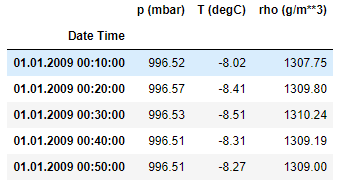

在这里,我们用过去的一些压强信息、温度信息以及密度信息来预测未来的一个时间点的温度。也就是说,数据集中应该包括压强信息、温度信息以及密度信息。

3.2.1 取出含所考虑变量的数据集

features_considered = ['p (mbar)', 'T (degC)', 'rho (g/m**3)']

features = df[features_considered]

features.index = df['Date Time']

features.head()

1234

数据集前五行数据为:

3.2.2 压强、温度、密度随时间变化绘图

features.plot(subplots=True)

1

3.2.3 将数据集转换为数组类型并标准化

dataset = features.values

data_mean = dataset[:TRAIN_SPLIT].mean(axis=0)

data_std = dataset[:TRAIN_SPLIT].std(axis=0)

dataset = (dataset-data_mean)/data_std

12345

3.2.4 写函数来划分特征和标签

在这里,我们不再像3.1中一样用到每个数据,而是在函数中加入step参数,这表明所使用的样本每step个时间点取一次特征和标签。

def multivariate_data(dataset, target, start_index, end_index, history_size,

target_size, step, single_step=False):

data = []

labels = []

start_index = start_index + history_size

if end_index is None:

end_index = len(dataset) - target_size

for i in range(start_index, end_index):

indices = range(i-history_size, i, step)

data.append(dataset[indices])

if single_step:

labels.append(target[i+target_size])

else:

labels.append(target[i:i+target_size])

return np.array(data), np.array(labels)

12345678910111213141516171819

3.2.5 从数据集中划分特征和标签

past_history = 720

future_target = 72

STEP = 6

x_train_single, y_train_single = multivariate_data(dataset, dataset[:, 1], 0,

TRAIN_SPLIT, past_history,

future_target, STEP,

single_step=True)

x_val_single, y_val_single = multivariate_data(dataset, dataset[:, 1],

TRAIN_SPLIT, None, past_history,

future_target, STEP,

single_step=True)

123456789101112

以上代码说明:每个样本的特征为过去的720个时间点的信息,但由于step被设置为6,则在过去的720个时间点的信息中,有720/6=120个时间点的信息被纳入样本特征。

我们可以打印出样本的特征信息:

print ('Single window of past history : {}'.format(x_train_single[0].shape))

1

得到单个样本的特征尺寸为:

Single window of past history : (120, 3)

1

3.2.6 将特征和标签切片

train_data_single = tf.data.Dataset.from_tensor_slices((x_train_single, y_train_single))

train_data_single = train_data_single.cache().shuffle(BUFFER_SIZE).batch(BATCH_SIZE).repeat()

val_data_single = tf.data.Dataset.from_tensor_slices((x_val_single, y_val_single))

val_data_single = val_data_single.batch(BATCH_SIZE).repeat()

12345

3.2.7 建模

single_step_model = tf.keras.models.Sequential()

single_step_model.add(tf.keras.layers.LSTM(32,

input_shape=x_train_single.shape[-2:]))

single_step_model.add(tf.keras.layers.Dense(1))

single_step_model.compile(optimizer=tf.keras.optimizers.RMSprop(), loss='mae')

123456

3.2.8 训练模型

single_step_history = single_step_model.fit(train_data_single, epochs=EPOCHS,

steps_per_epoch=EVALUATION_INTERVAL,

validation_data=val_data_single,

validation_steps=50)

1234

Train for 200 steps, validate for 50 steps

Epoch 1/10

200/200 [==============================] - 35s 176ms/step - loss: 0.3090 - val_loss: 0.2647

Epoch 2/10

200/200 [==============================] - 41s 203ms/step - loss: 0.2625 - val_loss: 0.2432

Epoch 3/10

200/200 [==============================] - 51s 256ms/step - loss: 0.2614 - val_loss: 0.2476

Epoch 4/10

200/200 [==============================] - 61s 307ms/step - loss: 0.2566 - val_loss: 0.2447

Epoch 5/10

200/200 [==============================] - 72s 361ms/step - loss: 0.2267 - val_loss: 0.2360

Epoch 6/10

200/200 [==============================] - 86s 432ms/step - loss: 0.2413 - val_loss: 0.2667

Epoch 7/10

200/200 [==============================] - 82s 412ms/step - loss: 0.2414 - val_loss: 0.2577

Epoch 8/10

200/200 [==============================] - 76s 378ms/step - loss: 0.2407 - val_loss: 0.2371

Epoch 9/10

200/200 [==============================] - 73s 365ms/step - loss: 0.2447 - val_loss: 0.2486

Epoch 10/10

200/200 [==============================] - 74s 368ms/step - loss: 0.2385 - val_loss: 0.2445

123456789101112131415161718192021

3.2.9 绘制预测图

for x, y in val_data_single.take(3):

plot = show_plot([x[0][:, 1].numpy(), y[0].numpy(),

single_step_model.predict(x)[0]], 12,

'Single Step Prediction')

plot.show()

12345

3.3 用多变量预测多个未来时间点

在这里,我们用过去的一些压强信息、温度信息以及密度信息来预测未来的多个时间点的温度。也就是说,数据集中应该包括压强信息、温度信息以及密度信息。

3.3.1 从数据集中划分特征和标签

future_target = 72

x_train_multi, y_train_multi = multivariate_data(dataset, dataset[:, 1], 0,

TRAIN_SPLIT, past_history,

future_target, STEP)

x_val_multi, y_val_multi = multivariate_data(dataset, dataset[:, 1],

TRAIN_SPLIT, None, past_history,

future_target, STEP)

1234567

以上代码说明特征的划分方式和3.2中的相同,但每个样本的标签中都包含了未来72个时间点的温度信息。

我们可以打印出样本的特征信息:

print ('Single window of past history : {}'.format(x_train_multi[0].shape))

print ('\n Target temperature to predict : {}'.format(y_train_multi[0].shape))

12

得到单个样本的特征尺寸为:

Single window of past history : (120, 3)

Target temperature to predict : (72,)

123

3.3.2 将特征和标签切片

train_data_multi = tf.data.Dataset.from_tensor_slices((x_train_multi, y_train_multi))

train_data_multi = train_data_multi.cache().shuffle(BUFFER_SIZE).batch(BATCH_SIZE).repeat()

val_data_multi = tf.data.Dataset.from_tensor_slices((x_val_multi, y_val_multi))

val_data_multi = val_data_multi.batch(BATCH_SIZE).repeat()

12345

3.3.3 编写绘图函数

def multi_step_plot(history, true_future, prediction):

plt.figure(figsize=(12, 6))

num_in = create_time_steps(len(history))

num_out = len(true_future)

plt.plot(num_in, np.array(history[:, 1]), label='History')

plt.plot(np.arange(num_out)/STEP, np.array(true_future), 'bo',

label='True Future')

if prediction.any():

plt.plot(np.arange(num_out)/STEP, np.array(prediction), 'ro',

label='Predicted Future')

plt.legend(loc='upper left')

plt.show()

12345678910111213

3.3.4 绘制温度信息(不含预测值)

for x, y in train_data_multi.take(1):

multi_step_plot(x[0], y[0], np.array([0]))

12

3.3.5 建模

multi_step_model = tf.keras.models.Sequential()

multi_step_model.add(tf.keras.layers.LSTM(32,

return_sequences=True,

input_shape=x_train_multi.shape[-2:]))

multi_step_model.add(tf.keras.layers.LSTM(16, activation='relu'))

multi_step_model.add(tf.keras.layers.Dense(72))

multi_step_model.compile(optimizer=tf.keras.optimizers.RMSprop(clipvalue=1.0), loss='mae')

12345678

3.3.6 训练模型

multi_step_history = multi_step_model.fit(train_data_multi, epochs=EPOCHS,

steps_per_epoch=EVALUATION_INTERVAL,

validation_data=val_data_multi,

validation_steps=50)

1234

Train for 200 steps, validate for 50 steps

Epoch 1/10

200/200 [==============================] - 113s 565ms/step - loss: 0.4974 - val_loss: 0.3019

Epoch 2/10

200/200 [==============================] - 119s 593ms/step - loss: 0.3480 - val_loss: 0.2845

Epoch 3/10

200/200 [==============================] - 134s 670ms/step - loss: 0.3335 - val_loss: 0.2523

Epoch 4/10

200/200 [==============================] - 177s 887ms/step - loss: 0.2438 - val_loss: 0.2093

Epoch 5/10

200/200 [==============================] - 167s 837ms/step - loss: 0.1962 - val_loss: 0.2025

Epoch 6/10

200/200 [==============================] - 174s 871ms/step - loss: 0.2062 - val_loss: 0.2108

Epoch 7/10

200/200 [==============================] - 175s 873ms/step - loss: 0.1981 - val_loss: 0.2047

Epoch 8/10

200/200 [==============================] - 169s 846ms/step - loss: 0.1965 - val_loss: 0.1983

Epoch 9/10

200/200 [==============================] - 170s 849ms/step - loss: 0.2001 - val_loss: 0.1873

Epoch 10/10

200/200 [==============================] - 172s 861ms/step - loss: 0.1913 - val_loss: 0.1828

123456789101112131415161718192021

3.3.7 绘制温度信息(含预测值)

for x, y in val_data_multi.take(3):

multi_step_plot(x[0], y[0], multi_step_model.predict(x)[0])

12

版权声明:本文为博主原创文章,遵循 CC 4.0 BY-SA 版权协议,转载请附上原文出处链接和本声明。

本文链接:https://blog.csdn.net/qq_36758914/article/details/104750077

版权声明:本文为CSDN博主「cofisher」的原创文章,遵循CC 4.0 BY-SA版权协议,转载请附上原文出处链接及本声明。

原文链接:https://blog.csdn.net/qq_36758914/article/details/104750077

本文作者:薄书

本文链接:https://www.cnblogs.com/aimoboshu/p/14566109.html

版权声明:本作品采用知识共享署名-非商业性使用-禁止演绎 2.5 中国大陆许可协议进行许可。

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步