Hive: Error: Error while processing statement: FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.DDLTask. MetaExcColumn length too big for column 'TYPE_NAME' (max = 21845)

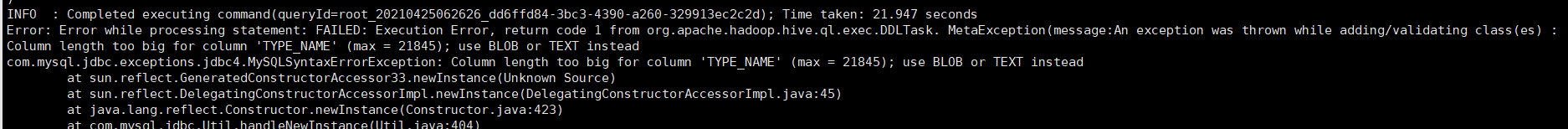

Work has kept me busy, and I hadn't touched Hive in a long time. While picking it up again, I got this error when creating a table:

```
Error: Error while processing statement: FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.DDLTask. MetaExcColumn length too big for column 'TYPE_NAME' (max = 21845)
```

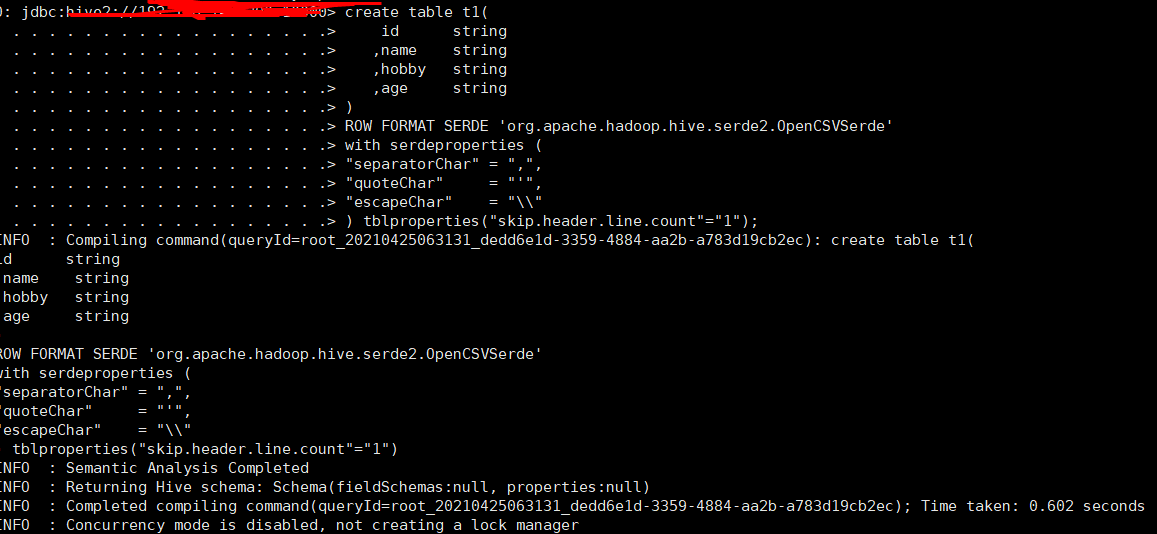

Because I plan to import CSV data into it later, the table is defined like this:

```sql
create table t1 (
  id    string,
  name  string,
  hobby string,
  age   string
)
ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.OpenCSVSerde'
with serdeproperties (
  "separatorChar" = ",",
  "quoteChar"     = "'",
  "escapeChar"    = "\\"
)
tblproperties ("skip.header.line.count" = "1");
```
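For the later CSV import, a minimal sketch might look like the following. It assumes a hypothetical file `/tmp/t1.csv` whose first line is a header (skipped because of `"skip.header.line.count"="1"`), with `,` as the separator and single quotes around fields that themselves contain commas. OpenCSVSerde treats every column as STRING, which is consistent with declaring all four columns as `string`.

```sql
-- Hypothetical file /tmp/t1.csv (replace with your own path), e.g.:
--   id,name,hobby,age
--   1,Tom,'reading,chess',20
-- LOCAL reads from the local filesystem (with Beeline, that of the
-- HiveServer2 host) instead of HDFS.
LOAD DATA LOCAL INPATH '/tmp/t1.csv' INTO TABLE t1;

-- Quick check: the header line should not show up as a data row,
-- and 'reading,chess' should land in the hobby column as one value.
SELECT * FROM t1 LIMIT 5;
```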

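Solution: the error keeps complaining about a type column (`TYPE_NAME`), even though the table's columns are all plain `string`. The real cause is the character encoding of the MySQL database in which Hive stores its metadata (the metastore): under a multi-byte character set, the `TYPE_NAME` column Hive needs is longer than MySQL allows. Switching the metastore database to `latin1` resolves it.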
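The `max = 21845` in the message is consistent with MySQL's 65,535-byte row-size limit combined with MySQL's `utf8` character set, which reserves up to 3 bytes per character; a single-byte set such as `latin1` raises the cap to the full byte count (this ignores length-prefix bytes and other columns in the row):

$$
\left\lfloor \frac{65535\ \text{bytes}}{3\ \text{bytes/char}} \right\rfloor = 21845\ \text{chars}\ (\texttt{utf8}),
\qquad
\left\lfloor \frac{65535\ \text{bytes}}{1\ \text{byte/char}} \right\rfloor = 65535\ \text{chars}\ (\texttt{latin1})
$$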

Run this statement in MySQL, against the metastore database (named `hive` here):

```sql
alter database hive character set latin1;
```
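To confirm the change, you can query MySQL's `information_schema`. Note that `ALTER DATABASE ... CHARACTER SET` only changes the database default, which applies to tables created afterwards; tables that already exist keep their own character set. So if some metastore tables were already created under `utf8`, the database-level change alone may not be enough, and the second query shows what the existing tables use. The database name `hive` is taken from the statement above; adjust it if your metastore connection URL points elsewhere.

```sql
-- Run in the MySQL client.
-- Default character set of the metastore database (should now be latin1).
SELECT SCHEMA_NAME, DEFAULT_CHARACTER_SET_NAME
FROM information_schema.SCHEMATA
WHERE SCHEMA_NAME = 'hive';

-- Collation (and therefore character set) of metastore tables that already exist.
SELECT TABLE_NAME, TABLE_COLLATION
FROM information_schema.TABLES
WHERE TABLE_SCHEMA = 'hive';
```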