Hadoop 3.1.1

Download

http://archive.apache.org/dist/hadoop/common/hadoop-3.1.1/

Upload the archive to /opt/software on the server

Extract it

[root@server1 software]# tar -zxvf hadoop-3.1.1.tar.gz -C /opt/servers

Configure environment variables

[root@server1 ~]# vim /etc/profile

Add the following variables

#HADOOP_HOME
export HADOOP_HOME=/opt/servers/hadoop-3.1.1
export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

source /etc/profile
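The same profile change can be scripted so that repeated runs do not add duplicate lines. This is a minimal sketch, not part of the original setup: `PROFILE_FILE` and `add_line_once` are hypothetical names introduced here, and the demo writes to a temporary file. Pointing `PROFILE_FILE` at /etc/profile (as root) would apply it for real.

```shell
# Sketch: append the Hadoop variables to a profile file only if they are missing.
# PROFILE_FILE defaults to a temporary file so the demo is harmless.
PROFILE_FILE="${PROFILE_FILE:-$(mktemp)}"

add_line_once() {
  # Append line $1 to file $2 unless an identical line is already there.
  local line="$1" file="$2"
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

add_line_once 'export HADOOP_HOME=/opt/servers/hadoop-3.1.1' "$PROFILE_FILE"
add_line_once 'export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin' "$PROFILE_FILE"
# A second call with the same line leaves the file unchanged.
add_line_once 'export HADOOP_HOME=/opt/servers/hadoop-3.1.1' "$PROFILE_FILE"

cat "$PROFILE_FILE"
```

The single quotes keep `$PATH` and `$HADOOP_HOME` unexpanded, so they are resolved when the profile is sourced rather than when the line is written.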

Edit hadoop-env.sh (in etc/hadoop under the Hadoop directory) and set JAVA_HOME

export JAVA_HOME=/opt/module/jdk1.8.0_231

Pseudo-distributed mode

core-site.xml

<configuration>

<!-- In hdfs://server1:8020, server1 is the hostname -->

<property>

<name>fs.defaultFS</name>

<value>hdfs://server1:8020</value>

</property>

<!-- Directory for temporary files -->

<property>

<name>hadoop.tmp.dir</name>

<value>/opt/servers/hadoop-3.1.1/datas/tmp</value>

</property>

<!-- I/O buffer size in bytes -->

<property>

<name>io.file.buffer.size</name>

<value>8192</value>

</property>

<!-- Enable the HDFS trash so that deleted data can be recovered; the interval is in minutes -->

<property>

<name>fs.trash.interval</name>

<value>10080</value>

</property>

</configuration>
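Because `fs.trash.interval` is expressed in minutes, the value 10080 above keeps deleted files for seven days. A quick check of the arithmetic (the variable names are only illustrative):

```shell
# fs.trash.interval is given in minutes; convert the value used above to days.
trash_minutes=10080
trash_days=$(( trash_minutes / 60 / 24 ))
echo "fs.trash.interval=${trash_minutes} min = ${trash_days} days"
# prints: fs.trash.interval=10080 min = 7 days
```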

hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.namenode.http-address</name>

<value>server1:50070</value>

</property>

</configuration>
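Before starting any daemon it can help to read back what a site file actually contains. The helper below is a rough sketch under stated assumptions: `get_prop` is a hypothetical name, and the lookup is line-based, so it only works when `<name>` and `<value>` each sit on a single line, as they do in the files in this guide. It is not a general XML parser. The demo runs against a temporary copy of the hdfs-site.xml shown above.

```shell
get_prop() {
  # Usage: get_prop <property-name> <site-file>
  # Remembers the most recent <name> and prints the <value> that follows it.
  awk -v want="$1" '
    /<name>/  { n = $0; sub(/.*<name>/, "", n); sub(/<\/name>.*/, "", n) }
    /<value>/ { if (n == want) {
                  v = $0; sub(/.*<value>/, "", v); sub(/<\/value>.*/, "", v)
                  print v; exit } }
  ' "$2"
}

# Demo file with the same properties as the hdfs-site.xml above.
conf="$(mktemp)"
cat > "$conf" <<'EOF'
<configuration>
<property>
<name>dfs.replication</name>
<value>1</value>
</property>
<property>
<name>dfs.namenode.http-address</name>
<value>server1:50070</value>
</property>
</configuration>
EOF

get_prop dfs.replication "$conf"
get_prop dfs.namenode.http-address "$conf"
```

On a real node the second argument would be a file under /opt/servers/hadoop-3.1.1/etc/hadoop.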

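Start the cluster

Format the NameNode (only before the first start; reformatting later creates a new cluster ID that the existing DataNode data no longer matches)

bin/hdfs namenode -format

Start the NameNode (in Hadoop 3, hadoop-daemon.sh still works but is deprecated in favor of hdfs --daemon start namenode)

[root@server1 hadoop-3.1.1]# sbin/hadoop-daemon.sh start namenode

Start the DataNode

[root@server1 hadoop-3.1.1]# sbin/hadoop-daemon.sh start datanode

Check that both daemons are running

[root@server1 hadoop-3.1.1]# jps
8001 Jps
7731 NameNode
7855 DataNode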
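Reading the jps listing by eye works for one node; a small check makes it repeatable. This is a sketch only: `check_daemons` is a hypothetical helper, and the sample listing is the one shown in this guide. On a real node the listing would come from `listing="$(jps)"`.

```shell
check_daemons() {
  # Usage: check_daemons <jps-output> <daemon>...
  # Reports the first daemon that is absent from the listing and returns 1.
  local listing="$1"; shift
  local d
  for d in "$@"; do
    # The process name is the second column of each jps line.
    if ! printf '%s\n' "$listing" | awk '{print $2}' | grep -qx -- "$d"; then
      echo "missing: $d"
      return 1
    fi
  done
  echo "all running: $*"
}

listing='8001 Jps
7731 NameNode
7855 DataNode'

check_daemons "$listing" NameNode DataNode
```

Once YARN is started, the same call can be extended with ResourceManager and NodeManager.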

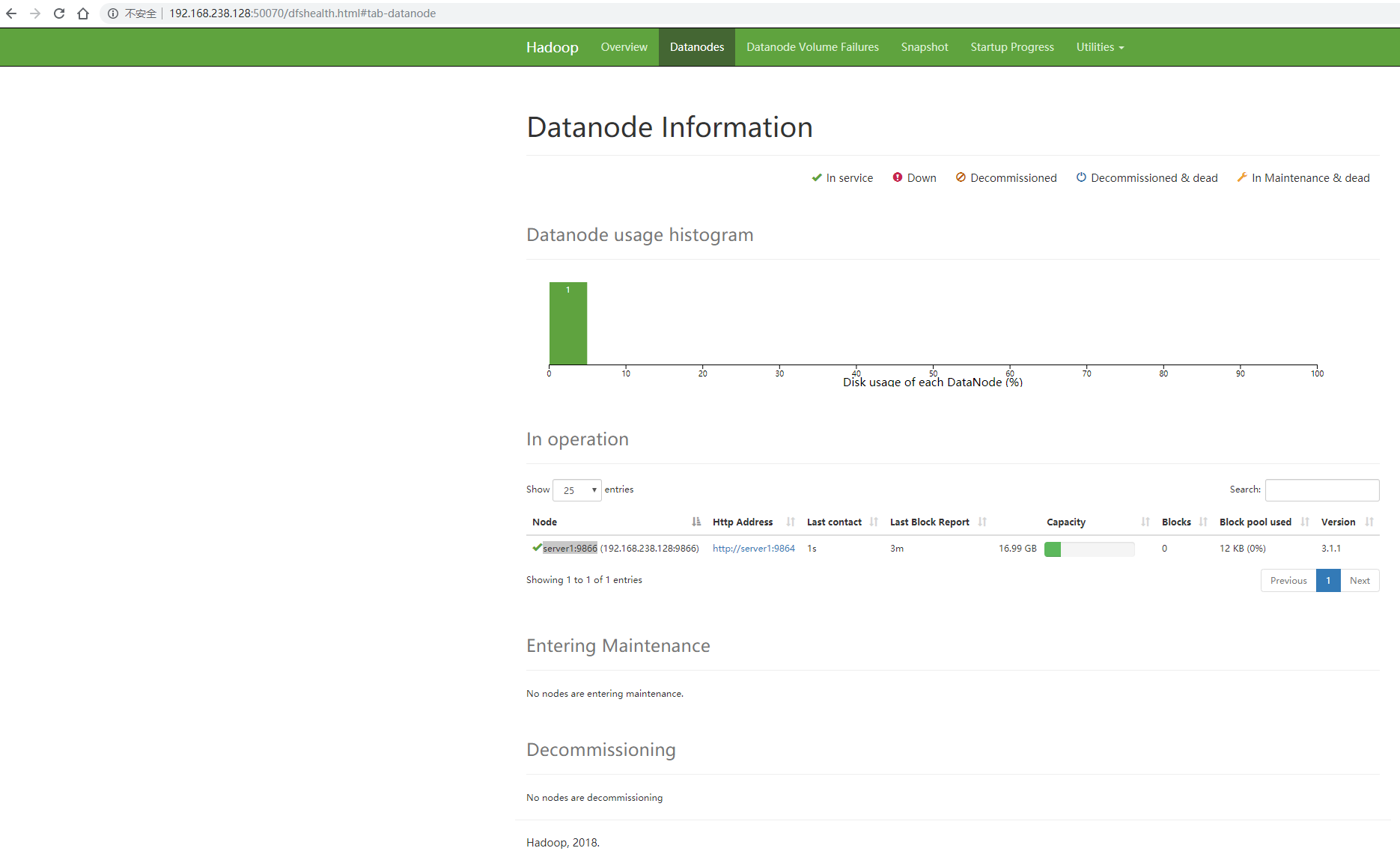

View the HDFS file system in the web UI at http://server1:50070 (the dfs.namenode.http-address configured above)

Start YARN and run a MapReduce program

Configure yarn-env.sh

Configure yarn-site.xml

<configuration>

<!-- Site specific YARN configuration properties -->

<!-- How reducers fetch data -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<!-- Hostname of the YARN ResourceManager -->

<property>

<name>yarn.resourcemanager.hostname</name>

<value>server1</value>

</property>

</configuration>

Configure mapred-site.xml

<configuration>

<!-- Run MapReduce on YARN -->

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

Start the cluster (the NameNode and DataNode must already be running)

Start the ResourceManager

yarn-daemon.sh start resourcemanager

Start the NodeManager

yarn-daemon.sh start nodemanager

Check what is running

[root@server1 hadoop-3.1.1]# jps
7731 NameNode
8660 Jps
8566 NodeManager
8268 ResourceManager
7855 DataNode

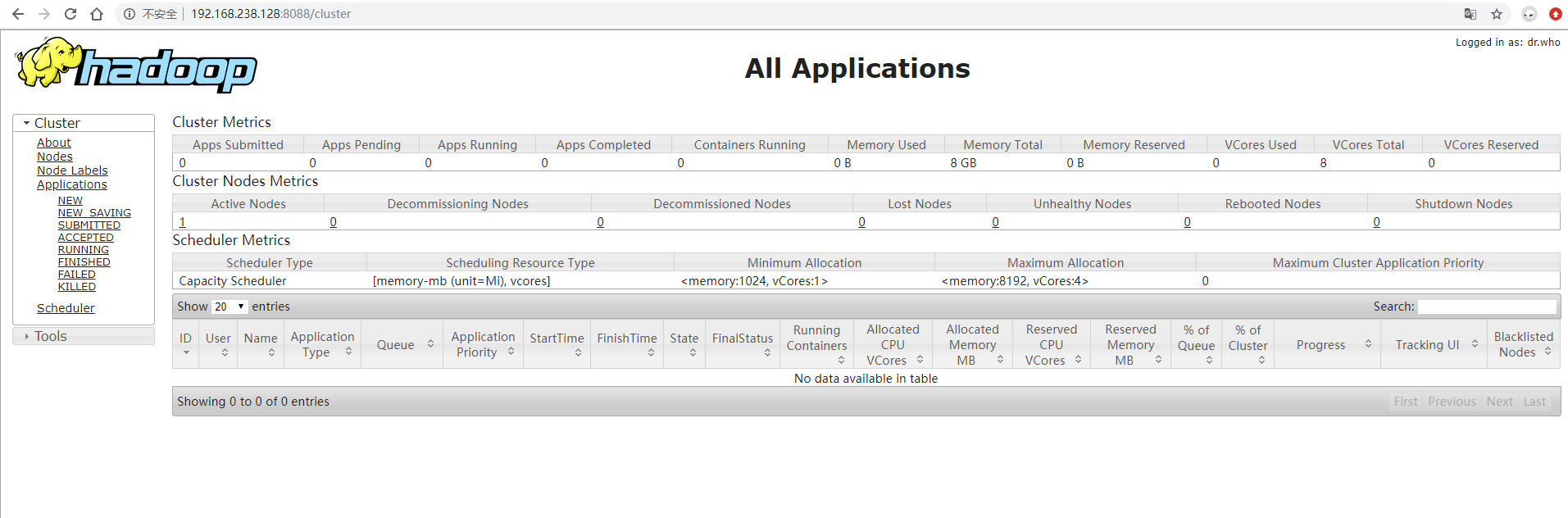

View YARN in the browser (the ResourceManager web UI listens on port 8088 by default, here http://server1:8088)

Cluster configuration

| | server1 | server2 | server3 |
|---|---|---|---|
| HDFS | NameNode, DataNode | DataNode | SecondaryNameNode, DataNode |
| YARN | NodeManager | ResourceManager, NodeManager | NodeManager |

Configure core-site.xml

<configuration>

<!-- Address of the HDFS NameNode -->

<property>

<name>fs.defaultFS</name>

<value>hdfs://server1:8020</value>

</property>

<!-- Directory for temporary files -->

<property>

<name>hadoop.tmp.dir</name>

<value>/opt/servers/hadoop-3.1.1/datas/tmp</value>

</property>

<!-- I/O buffer size in bytes -->

<property>

<name>io.file.buffer.size</name>

<value>8192</value>

</property>

<!-- Enable the HDFS trash so that deleted data can be recovered; the interval is in minutes -->

<property>

<name>fs.trash.interval</name>

<value>10080</value>

</property>

</configuration>

Configure hadoop-env.sh

export JAVA_HOME=/opt/module/jdk1.8.0_231

Configure hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

<property>

<name>dfs.namenode.http-address</name>

<value>server1:50070</value>

</property>

<!-- Host and port of the SecondaryNameNode -->

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>server3:50090</value>

</property>

</configuration>
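The replication factor rises from 1 in the pseudo-distributed setup to 3 here because the cluster has three DataNodes, and HDFS cannot place more replicas of a block than there are DataNodes. A small sanity check along those lines, with the host list written out by hand as an assumption (it mirrors the three servers in this guide):

```shell
# Sketch: compare dfs.replication with the number of DataNode hosts.
replication=3
datanodes="server1 server2 server3"   # assumed list, one entry per DataNode host
count=$(echo "$datanodes" | wc -w | tr -d ' ')

if [ "$replication" -le "$count" ]; then
  result="ok: replication=$replication, datanodes=$count"
else
  result="warning: replication=$replication exceeds $count DataNodes; blocks would stay under-replicated"
fi
echo "$result"
```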

Configure yarn-site.xml

<configuration>

<!-- Site specific YARN configuration properties -->

<!-- How reducers fetch data -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<!-- Hostname of the YARN ResourceManager -->

<property>

<name>yarn.resourcemanager.hostname</name>

<value>server2</value>

</property>

</configuration>

Configure mapred-site.xml

<configuration>

<!-- Run MapReduce on YARN -->

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<!-- Job history server -->

<property>

<name>mapreduce.jobhistory.address</name>

<value>server2:10020</value>

</property>

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>server2:19888</value>

</property>

</configuration>

Edit the workers file

vim /opt/servers/hadoop-3.1.1/etc/hadoop/workers

Delete the localhost entry

Add one hostname per line

server1

server2

server3
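Since the workers file is just one hostname per line, it can also be generated instead of edited by hand. A sketch that writes to a temporary path by default (`WORKERS_FILE` is a name introduced here; on a real node it would be /opt/servers/hadoop-3.1.1/etc/hadoop/workers):

```shell
# Sketch: write the worker hostnames, one per line, replacing any previous
# content (including the default localhost entry).
WORKERS_FILE="${WORKERS_FILE:-$(mktemp)}"
printf '%s\n' server1 server2 server3 > "$WORKERS_FILE"
cat "$WORKERS_FILE"
```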

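Copy the installation in archive mode (run on server2 and server3; the cluster distribution script described later can be used instead)

[root@server3 servers]# rsync -av server1:/opt/servers/hadoop-3.1.1 /opt/servers

If rsync is not available, install it first

[root@server3 servers]# yum install rsync -y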

Format the cluster (once, on the NameNode host)

[hadoop@server1 ~]$ hdfs namenode -format

Start

On the NameNode host

[hadoop@server1 root]$ start-dfs.sh

On the YARN (ResourceManager) host

[hadoop@server2 root]$ start-yarn.sh

[hadoop@server2 root]$ mr-jobhistory-daemon.sh start historyserver

Stop

[hadoop@server2 root]$ mr-jobhistory-daemon.sh stop historyserver

[hadoop@server2 root]$ stop-yarn.sh

[hadoop@server1 root]$ stop-dfs.sh
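The start and stop sequences above mirror each other: HDFS first and the history server last when starting, the reverse when stopping. A wrapper can encode that order. The sketch below is an illustration, not part of the original setup: `run_on`, `cluster` and `DRY_RUN` are hypothetical names, and running it for real assumes passwordless SSH to both hosts and that the Hadoop scripts are on the PATH of non-interactive SSH sessions. With `DRY_RUN=1` (the default here) it only prints what it would run.

```shell
DRY_RUN="${DRY_RUN:-1}"

run_on() {
  # Usage: run_on <host> <command...>
  local host="$1"; shift
  if [ "$DRY_RUN" = "1" ]; then
    echo "[$host] $*"
  else
    ssh "$host" "$*"
  fi
}

cluster() {
  case "$1" in
    start)
      run_on server1 start-dfs.sh
      run_on server2 start-yarn.sh
      run_on server2 mr-jobhistory-daemon.sh start historyserver
      ;;
    stop)
      run_on server2 mr-jobhistory-daemon.sh stop historyserver
      run_on server2 stop-yarn.sh
      run_on server1 stop-dfs.sh
      ;;
    *)
      echo "usage: cluster start|stop" >&2
      return 2
      ;;
  esac
}

cluster start
```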

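xsync cluster distribution script (copies a file or directory to the same path on the other nodes)

Create a bin directory under /home/hadoop and create the xsync file in it

[hadoop@server1 bin]$ vim xsync

The file contents are as follows:

#!/bin/bash
# Exit if no argument was given
pcount=$#
if ((pcount == 0)); then
  echo no args
  exit
fi
# Name of the file or directory to distribute
p1=$1
fname=$(basename "$p1")
echo fname=$fname
# Absolute path of its parent directory (symlinks resolved)
pdir=$(cd -P "$(dirname "$p1")" && pwd)
echo pdir=$pdir
# Current user, used for the remote login
user=$(whoami)
# Copy to server2 and server3
for ((host = 2; host < 4; host++)); do
  echo ------------------- server$host --------------
  rsync -rvl "$pdir/$fname" "$user@server$host:$pdir"
done

If running the script fails later, open it with vim xsync, run :set fileformat=unix in command-line mode to convert the line endings, and save with :wq. After making it executable with chmod +x xsync, the script can be run as ./xsync

Copy it to /bin so that it is on every user's PATH

[hadoop@server1 bin]$ sudo cp xsync /bin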
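Before letting the script copy anything, it can be useful to see which rsync commands it would issue. The following sketch reuses the same path logic (`basename`, then `cd -P` plus `pwd` for the parent directory) but only prints the commands. `xsync_plan` and the temporary demo directory are assumptions made for illustration, and the host names are passed as arguments instead of being generated by a loop counter.

```shell
xsync_plan() {
  # Usage: xsync_plan <path> <host>...
  # Prints one rsync command per host without running it.
  local target="$1"; shift
  local fname pdir user host
  fname="$(basename "$target")"
  # Absolute physical path of the parent directory.
  pdir="$(cd -P "$(dirname "$target")" && pwd)" || return 1
  user="$(whoami)"
  for host in "$@"; do
    echo "rsync -rvl $pdir/$fname $user@$host:$pdir"
  done
}

# Demo on a throwaway directory instead of /opt/servers.
demo_dir="$(mktemp -d)"
mkdir -p "$demo_dir/hadoop-3.1.1"
(cd "$demo_dir" && xsync_plan hadoop-3.1.1 server2 server3)
```

Because the destination is always the parent directory of the source, the same relative layout is reproduced on every node, which is what lets the script be called from any working directory.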

Usage example

[hadoop@server1 servers]$ xsync hadoop-3.1.1
fname=hadoop-3.1.1
pdir=/opt/servers
------------------- server1 --------------
sending incremental file list
sent 689,281 bytes received 2,592 bytes 461,248.67 bytes/sec
total size is 835,950,736 speedup is 1,208.24
------------------- server2 --------------
sending incremental file list
hadoop-3.1.1/datas/tmp/dfs/data/current/VERSION
hadoop-3.1.1/datas/tmp/dfs/data/current/BP-1402719487-192.168.238.128-1575364988262/scanner.cursor
hadoop-3.1.1/datas/tmp/dfs/data/current/BP-1402719487-192.168.238.128-1575364988262/current/VERSION
hadoop-3.1.1/datas/tmp/dfs/name/current/VERSION
hadoop-3.1.1/datas/tmp/dfs/name/current/edits_0000000000000000001-0000000000000000001
hadoop-3.1.1/datas/tmp/dfs/name/current/edits_0000000000000000002-0000000000000000002
hadoop-3.1.1/datas/tmp/dfs/name/current/edits_0000000000000000003-0000000000000000003
hadoop-3.1.1/datas/tmp/dfs/name/current/edits_0000000000000000004-0000000000000000005
hadoop-3.1.1/datas/tmp/dfs/name/current/edits_inprogress_0000000000000000006
hadoop-3.1.1/datas/tmp/dfs/name/current/fsimage_0000000000000000003
hadoop-3.1.1/datas/tmp/dfs/name/current/fsimage_0000000000000000003.md5
hadoop-3.1.1/datas/tmp/dfs/name/current/fsimage_0000000000000000005
hadoop-3.1.1/datas/tmp/dfs/name/current/fsimage_0000000000000000005.md5
hadoop-3.1.1/datas/tmp/dfs/name/current/seen_txid
hadoop-3.1.1/etc/hadoop/mapred-site.xml
hadoop-3.1.1/etc/hadoop/workers
hadoop-3.1.1/etc/hadoop/yarn-site.xml
hadoop-3.1.1/logs/SecurityAuth-hadoop.audit
hadoop-3.1.1/logs/hadoop-hadoop-datanode-server1.log
hadoop-3.1.1/logs/hadoop-hadoop-datanode-server1.out
hadoop-3.1.1/logs/hadoop-hadoop-datanode-server1.out.1
hadoop-3.1.1/logs/hadoop-hadoop-datanode-server1.out.2
hadoop-3.1.1/logs/hadoop-hadoop-datanode-server1.out.3
hadoop-3.1.1/logs/hadoop-hadoop-datanode-server1.out.4
hadoop-3.1.1/logs/hadoop-hadoop-datanode-server1.out.5
hadoop-3.1.1/logs/hadoop-hadoop-namenode-server1.log
hadoop-3.1.1/logs/hadoop-hadoop-namenode-server1.out
hadoop-3.1.1/logs/hadoop-hadoop-namenode-server1.out.1
hadoop-3.1.1/logs/hadoop-hadoop-namenode-server1.out.2
hadoop-3.1.1/logs/hadoop-hadoop-namenode-server1.out.3
hadoop-3.1.1/logs/hadoop-hadoop-namenode-server1.out.4
hadoop-3.1.1/logs/hadoop-hadoop-namenode-server1.out.5
hadoop-3.1.1/logs/hadoop-hadoop-nodemanager-server1.log
hadoop-3.1.1/logs/hadoop-hadoop-nodemanager-server1.out
hadoop-3.1.1/logs/hadoop-hadoop-nodemanager-server1.out.1
hadoop-3.1.1/logs/hadoop-hadoop-nodemanager-server1.out.2
hadoop-3.1.1/logs/hadoop-hadoop-nodemanager-server1.out.3
hadoop-3.1.1/logs/hadoop-hadoop-nodemanager-server1.out.4
hadoop-3.1.1/logs/hadoop-hadoop-nodemanager-server1.out.5
hadoop-3.1.1/logs/hadoop-hadoop-resourcemanager-server1.log
hadoop-3.1.1/logs/hadoop-hadoop-resourcemanager-server1.out
hadoop-3.1.1/logs/hadoop-hadoop-resourcemanager-server1.out.1
hadoop-3.1.1/logs/hadoop-hadoop-resourcemanager-server1.out.2
hadoop-3.1.1/logs/hadoop-hadoop-resourcemanager-server1.out.3
hadoop-3.1.1/logs/hadoop-hadoop-resourcemanager-server1.out.4
hadoop-3.1.1/logs/hadoop-hadoop-resourcemanager-server1.out.5
sent 715,375 bytes received 39,908 bytes 1,510,566.00 bytes/sec
total size is 835,950,736 speedup is 1,106.80
------------------- server3 --------------
sending incremental file list
hadoop-3.1.1/datas/tmp/dfs/data/current/VERSION
hadoop-3.1.1/datas/tmp/dfs/data/current/BP-1402719487-192.168.238.128-1575364988262/scanner.cursor
hadoop-3.1.1/datas/tmp/dfs/data/current/BP-1402719487-192.168.238.128-1575364988262/current/VERSION
hadoop-3.1.1/datas/tmp/dfs/name/current/VERSION
hadoop-3.1.1/datas/tmp/dfs/name/current/edits_0000000000000000001-0000000000000000001
hadoop-3.1.1/datas/tmp/dfs/name/current/edits_0000000000000000002-0000000000000000002
hadoop-3.1.1/datas/tmp/dfs/name/current/edits_0000000000000000003-0000000000000000003
hadoop-3.1.1/datas/tmp/dfs/name/current/edits_0000000000000000004-0000000000000000005
hadoop-3.1.1/datas/tmp/dfs/name/current/edits_inprogress_0000000000000000006
hadoop-3.1.1/datas/tmp/dfs/name/current/fsimage_0000000000000000003
hadoop-3.1.1/datas/tmp/dfs/name/current/fsimage_0000000000000000003.md5
hadoop-3.1.1/datas/tmp/dfs/name/current/fsimage_0000000000000000005
hadoop-3.1.1/datas/tmp/dfs/name/current/fsimage_0000000000000000005.md5
hadoop-3.1.1/datas/tmp/dfs/name/current/seen_txid
hadoop-3.1.1/etc/hadoop/mapred-site.xml
hadoop-3.1.1/etc/hadoop/workers
hadoop-3.1.1/etc/hadoop/yarn-site.xml
hadoop-3.1.1/logs/SecurityAuth-hadoop.audit
hadoop-3.1.1/logs/hadoop-hadoop-datanode-server1.log
hadoop-3.1.1/logs/hadoop-hadoop-datanode-server1.out
hadoop-3.1.1/logs/hadoop-hadoop-datanode-server1.out.1
hadoop-3.1.1/logs/hadoop-hadoop-datanode-server1.out.2
hadoop-3.1.1/logs/hadoop-hadoop-datanode-server1.out.3
hadoop-3.1.1/logs/hadoop-hadoop-datanode-server1.out.4
hadoop-3.1.1/logs/hadoop-hadoop-datanode-server1.out.5
hadoop-3.1.1/logs/hadoop-hadoop-namenode-server1.log
hadoop-3.1.1/logs/hadoop-hadoop-namenode-server1.out
hadoop-3.1.1/logs/hadoop-hadoop-namenode-server1.out.1
hadoop-3.1.1/logs/hadoop-hadoop-namenode-server1.out.2
hadoop-3.1.1/logs/hadoop-hadoop-namenode-server1.out.3
hadoop-3.1.1/logs/hadoop-hadoop-namenode-server1.out.4
hadoop-3.1.1/logs/hadoop-hadoop-namenode-server1.out.5
hadoop-3.1.1/logs/hadoop-hadoop-nodemanager-server1.log
hadoop-3.1.1/logs/hadoop-hadoop-nodemanager-server1.out
hadoop-3.1.1/logs/hadoop-hadoop-nodemanager-server1.out.1
hadoop-3.1.1/logs/hadoop-hadoop-nodemanager-server1.out.2
hadoop-3.1.1/logs/hadoop-hadoop-nodemanager-server1.out.3
hadoop-3.1.1/logs/hadoop-hadoop-nodemanager-server1.out.4
hadoop-3.1.1/logs/hadoop-hadoop-nodemanager-server1.out.5
hadoop-3.1.1/logs/hadoop-hadoop-resourcemanager-server1.log
hadoop-3.1.1/logs/hadoop-hadoop-resourcemanager-server1.out
hadoop-3.1.1/logs/hadoop-hadoop-resourcemanager-server1.out.1
hadoop-3.1.1/logs/hadoop-hadoop-resourcemanager-server1.out.2
hadoop-3.1.1/logs/hadoop-hadoop-resourcemanager-server1.out.3
hadoop-3.1.1/logs/hadoop-hadoop-resourcemanager-server1.out.4
hadoop-3.1.1/logs/hadoop-hadoop-resourcemanager-server1.out.5
sent 715,375 bytes received 39,908 bytes 503,522.00 bytes/sec
total size is 835,950,736 speedup is 1,106.80