【python】Building a Small Neural Network

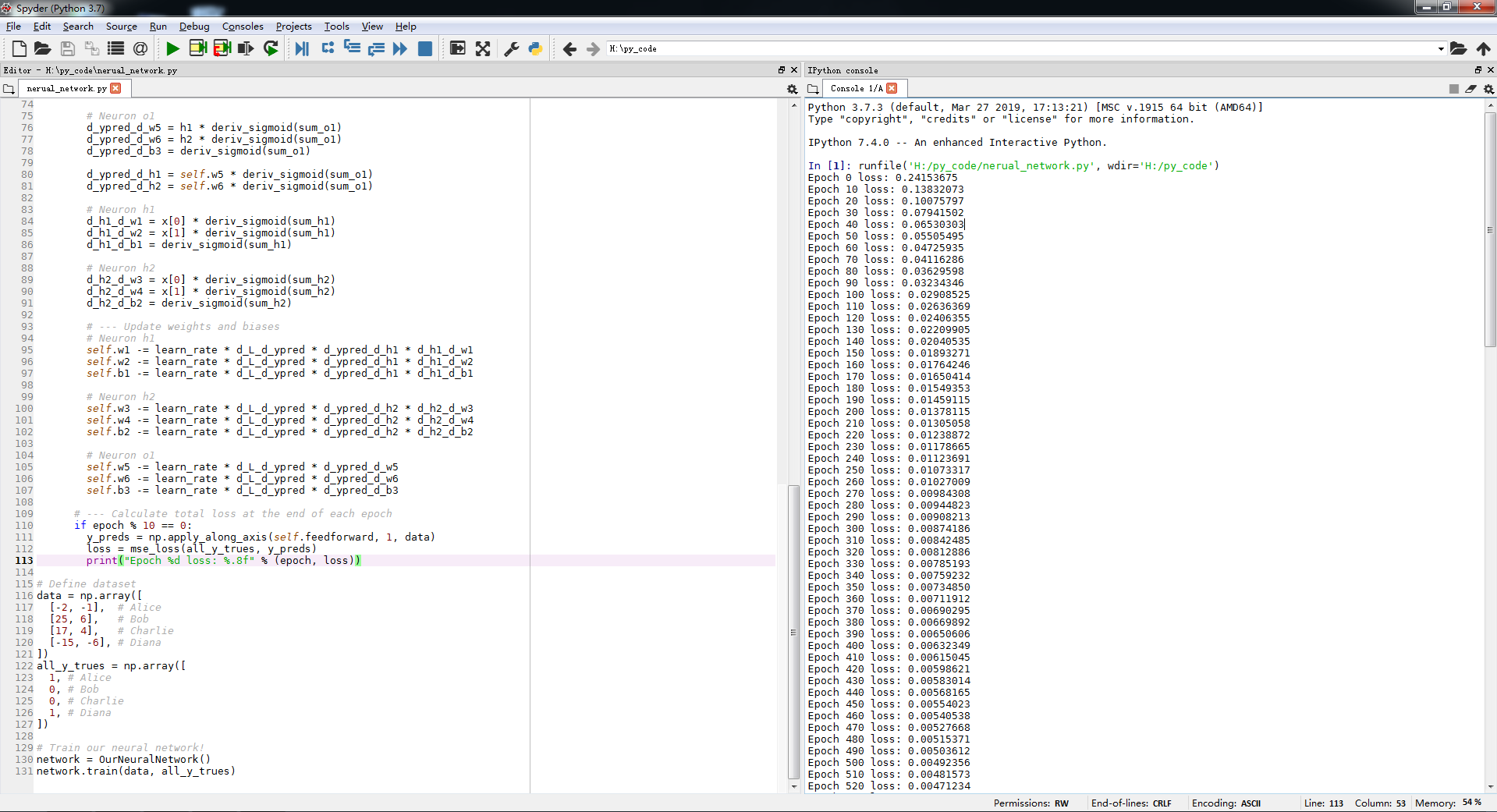

```python
import numpy as np

def sigmoid(x):
    # Sigmoid activation function: f(x) = 1 / (1 + e^(-x))
    return 1 / (1 + np.exp(-x))

def deriv_sigmoid(x):
    # Derivative of sigmoid: f'(x) = f(x) * (1 - f(x))
    fx = sigmoid(x)
    return fx * (1 - fx)

def mse_loss(y_true, y_pred):
    # y_true and y_pred are numpy arrays of the same length.
    return ((y_true - y_pred) ** 2).mean()

class OurNeuralNetwork:
    '''
    A neural network with:
      - 2 inputs
      - a hidden layer with 2 neurons (h1, h2)
      - an output layer with 1 neuron (o1)

    *** DISCLAIMER ***:
    The code below is intended to be simple and educational, NOT optimal.
    Real neural net code looks nothing like this. DO NOT use this code.
    Instead, read/run it to understand how this specific network works.
    '''
    def __init__(self):
        # Weights
        self.w1 = np.random.normal()
        self.w2 = np.random.normal()
        self.w3 = np.random.normal()
        self.w4 = np.random.normal()
        self.w5 = np.random.normal()
        self.w6 = np.random.normal()

        # Biases
        self.b1 = np.random.normal()
        self.b2 = np.random.normal()
        self.b3 = np.random.normal()

    def feedforward(self, x):
        # x is a numpy array with 2 elements.
        h1 = sigmoid(self.w1 * x[0] + self.w2 * x[1] + self.b1)
        h2 = sigmoid(self.w3 * x[0] + self.w4 * x[1] + self.b2)
        o1 = sigmoid(self.w5 * h1 + self.w6 * h2 + self.b3)
        return o1

    def train(self, data, all_y_trues):
        '''
        - data is a (n x 2) numpy array, n = # of samples in the dataset.
        - all_y_trues is a numpy array with n elements.
          Elements in all_y_trues correspond to those in data.
        '''
        learn_rate = 0.1
        epochs = 1000  # number of times to loop through the entire dataset

        for epoch in range(epochs):
            for x, y_true in zip(data, all_y_trues):
                # --- Do a feedforward (we'll need these values later)
                sum_h1 = self.w1 * x[0] + self.w2 * x[1] + self.b1
                h1 = sigmoid(sum_h1)

                sum_h2 = self.w3 * x[0] + self.w4 * x[1] + self.b2
                h2 = sigmoid(sum_h2)

                sum_o1 = self.w5 * h1 + self.w6 * h2 + self.b3
                o1 = sigmoid(sum_o1)
                y_pred = o1

                # --- Calculate partial derivatives.
                # --- Naming: d_L_d_w1 represents "partial L / partial w1"
                d_L_d_ypred = -2 * (y_true - y_pred)

                # Neuron o1
                d_ypred_d_w5 = h1 * deriv_sigmoid(sum_o1)
                d_ypred_d_w6 = h2 * deriv_sigmoid(sum_o1)
                d_ypred_d_b3 = deriv_sigmoid(sum_o1)

                d_ypred_d_h1 = self.w5 * deriv_sigmoid(sum_o1)
                d_ypred_d_h2 = self.w6 * deriv_sigmoid(sum_o1)

                # Neuron h1
                d_h1_d_w1 = x[0] * deriv_sigmoid(sum_h1)
                d_h1_d_w2 = x[1] * deriv_sigmoid(sum_h1)
                d_h1_d_b1 = deriv_sigmoid(sum_h1)

                # Neuron h2
                d_h2_d_w3 = x[0] * deriv_sigmoid(sum_h2)
                d_h2_d_w4 = x[1] * deriv_sigmoid(sum_h2)
                d_h2_d_b2 = deriv_sigmoid(sum_h2)

                # --- Update weights and biases
                # Neuron h1
                self.w1 -= learn_rate * d_L_d_ypred * d_ypred_d_h1 * d_h1_d_w1
                self.w2 -= learn_rate * d_L_d_ypred * d_ypred_d_h1 * d_h1_d_w2
                self.b1 -= learn_rate * d_L_d_ypred * d_ypred_d_h1 * d_h1_d_b1

                # Neuron h2
                self.w3 -= learn_rate * d_L_d_ypred * d_ypred_d_h2 * d_h2_d_w3
                self.w4 -= learn_rate * d_L_d_ypred * d_ypred_d_h2 * d_h2_d_w4
                self.b2 -= learn_rate * d_L_d_ypred * d_ypred_d_h2 * d_h2_d_b2

                # Neuron o1
                self.w5 -= learn_rate * d_L_d_ypred * d_ypred_d_w5
                self.w6 -= learn_rate * d_L_d_ypred * d_ypred_d_w6
                self.b3 -= learn_rate * d_L_d_ypred * d_ypred_d_b3

            # --- Calculate total loss over the whole dataset every 10 epochs
            if epoch % 10 == 0:
                y_preds = np.apply_along_axis(self.feedforward, 1, data)
                loss = mse_loss(all_y_trues, y_preds)
                print("Epoch %d loss: %.8f" % (epoch, loss))

# Define dataset
data = np.array([
    [-2, -1],   # Alice
    [25, 6],    # Bob
    [17, 4],    # Charlie
    [-15, -6],  # Diana
])
all_y_trues = np.array([
    1,  # Alice
    0,  # Bob
    0,  # Charlie
    1,  # Diana
])

# Train our neural network!
network = OurNeuralNetwork()
network.train(data, all_y_trues)
```
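The helper functions at the top of the listing rest on two small formulas: the closed-form sigmoid derivative f'(x) = f(x)(1 - f(x)) and the mean squared error. The sketch below checks the derivative against a central finite difference and works through one MSE value by hand. The sample points, the step size `h`, and the all-zero prediction are illustrative choices made for this sketch.

```python
import numpy as np

def sigmoid(x):
    # Same activation as in the post: f(x) = 1 / (1 + e^(-x))
    return 1 / (1 + np.exp(-x))

def deriv_sigmoid(x):
    # Closed form used in the post: f'(x) = f(x) * (1 - f(x))
    fx = sigmoid(x)
    return fx * (1 - fx)

def mse_loss(y_true, y_pred):
    # Mean of the squared errors
    return ((y_true - y_pred) ** 2).mean()

# Central finite difference (f(x+h) - f(x-h)) / (2h) approximates f'(x)
xs = np.linspace(-5, 5, 11)
h = 1e-5
numeric = (sigmoid(xs + h) - sigmoid(xs - h)) / (2 * h)
print(np.max(np.abs(numeric - deriv_sigmoid(xs))))  # a very small number

# At x = 0: f(0) = 0.5, so f'(0) = 0.5 * (1 - 0.5) = 0.25
print(deriv_sigmoid(0.0))  # 0.25

# The post's labels scored against an all-zero prediction:
# errors 1, 0, 0, 1 -> squares 1, 0, 0, 1 -> mean 2 / 4 = 0.5
print(mse_loss(np.array([1, 0, 0, 1]), np.array([0, 0, 0, 0])))  # 0.5
```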
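Inside `train()`, each weight update multiplies several partial derivatives together following the chain rule. For one sample, these products should equal the gradient of the per-sample loss (y_true - y_pred)^2. The sketch below recomputes them as a single gradient vector and compares it with central finite differences. The flat parameter ordering (w1..w6, b1, b2, b3), the random seed, and the step size `eps` are assumptions introduced only for this check.

```python
import numpy as np

def sigmoid(x):
    return 1 / (1 + np.exp(-x))

def deriv_sigmoid(x):
    fx = sigmoid(x)
    return fx * (1 - fx)

# Parameter order (assumed for this sketch): w1, w2, w3, w4, w5, w6, b1, b2, b3
def sample_loss(p, x, y_true):
    # Per-sample squared error, the quantity train() differentiates
    w1, w2, w3, w4, w5, w6, b1, b2, b3 = p
    h1 = sigmoid(w1 * x[0] + w2 * x[1] + b1)
    h2 = sigmoid(w3 * x[0] + w4 * x[1] + b2)
    o1 = sigmoid(w5 * h1 + w6 * h2 + b3)
    return (y_true - o1) ** 2

def analytic_grad(p, x, y_true):
    # Same chain-rule products as train(), returned instead of applied
    w1, w2, w3, w4, w5, w6, b1, b2, b3 = p
    sum_h1 = w1 * x[0] + w2 * x[1] + b1
    sum_h2 = w3 * x[0] + w4 * x[1] + b2
    h1, h2 = sigmoid(sum_h1), sigmoid(sum_h2)
    sum_o1 = w5 * h1 + w6 * h2 + b3
    y_pred = sigmoid(sum_o1)

    d_L_d_ypred = -2 * (y_true - y_pred)
    d_o1 = deriv_sigmoid(sum_o1)
    d_ypred_d_h1 = w5 * d_o1
    d_ypred_d_h2 = w6 * d_o1
    d_h1 = deriv_sigmoid(sum_h1)
    d_h2 = deriv_sigmoid(sum_h2)

    return d_L_d_ypred * np.array([
        d_ypred_d_h1 * x[0] * d_h1,  # w1
        d_ypred_d_h1 * x[1] * d_h1,  # w2
        d_ypred_d_h2 * x[0] * d_h2,  # w3
        d_ypred_d_h2 * x[1] * d_h2,  # w4
        h1 * d_o1,                   # w5
        h2 * d_o1,                   # w6
        d_ypred_d_h1 * d_h1,         # b1
        d_ypred_d_h2 * d_h2,         # b2
        d_o1,                        # b3
    ])

def numeric_grad(p, x, y_true, eps=1e-6):
    # Central differences, nudging one parameter at a time
    grad = np.zeros_like(p)
    for i in range(len(p)):
        step = np.zeros_like(p)
        step[i] = eps
        grad[i] = (sample_loss(p + step, x, y_true)
                   - sample_loss(p - step, x, y_true)) / (2 * eps)
    return grad

rng = np.random.default_rng(0)
params = rng.normal(size=9)
x, y_true = np.array([-2.0, -1.0]), 1.0  # Alice's sample from the post
diff = np.max(np.abs(analytic_grad(params, x, y_true) - numeric_grad(params, x, y_true)))
print(diff)  # should be tiny if the chain-rule products are right
```

If a product in `train()` were mistyped (for example, `x[0]` used where `x[1]` belongs), this difference would stop being negligible.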
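`feedforward()` computes the network one neuron at a time with scalar weights. The same 2-2-1 structure can be written as a 2x2 hidden weight matrix, a hidden bias vector, and an output weight vector. That matrix form also runs all n samples in one pass, which is the job `np.apply_along_axis` does row by row in `train()`. In the sketch below, the array names `W_hidden`, `b_hidden`, and `w_out` are hypothetical, and the weights are random rather than trained. It only checks that the two formulations agree.

```python
import numpy as np

def sigmoid(x):
    return 1 / (1 + np.exp(-x))

rng = np.random.default_rng(1)
w1, w2, w3, w4, w5, w6, b1, b2, b3 = rng.normal(size=9)

def feedforward_scalar(x):
    # Neuron by neuron, mirroring OurNeuralNetwork.feedforward
    h1 = sigmoid(w1 * x[0] + w2 * x[1] + b1)
    h2 = sigmoid(w3 * x[0] + w4 * x[1] + b2)
    return sigmoid(w5 * h1 + w6 * h2 + b3)

# Same weights packed into arrays: row i of W_hidden feeds hidden neuron i
W_hidden = np.array([[w1, w2],
                     [w3, w4]])
b_hidden = np.array([b1, b2])
w_out = np.array([w5, w6])

def feedforward_matrix(X):
    # X has shape (n, 2); returns all n outputs at once
    H = sigmoid(X @ W_hidden.T + b_hidden)  # shape (n, 2), columns are h1, h2
    return sigmoid(H @ w_out + b3)          # shape (n,)

data = np.array([[-2, -1], [25, 6], [17, 4], [-15, -6]])
print(feedforward_matrix(data))
print(np.array([feedforward_scalar(x) for x in data]))  # same values
```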

tz@croplab,HZAU

2019/6/19

Category: algorithm