Installing Jenkins on Kubernetes with Helm

I. First, you need a Kubernetes environment. You can deploy it with kubeadm or from binaries. (Note: the cluster in this guide was deployed with kubeadm.)

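II. Install the Helm tool:

Keep the clocks of the cluster nodes synchronized where possible:

[root@master ~]# yum -y install ntp &> /dev/null

The following steps require internet access from the machine.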

[root@master ~]# wget https://get.helm.sh/helm-v3.2.2-linux-amd64.tar.gz

[root@master ~]# tar zxf helm-v3.2.2-linux-amd64.tar.gz

[root@master ~]# cd linux-amd64 && mv helm /usr/bin/
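The download steps above hard-code the version in several places. As a minimal sketch, the URL can be built from a version string so that only one value changes on upgrade. The helper name helm_url is illustrative and is not part of Helm; the URL layout is simply the one used in the wget command above.

```shell
#!/bin/sh
# helm_url VERSION [OS] [ARCH]: print the release tarball URL on get.helm.sh.
# Hypothetical helper; OS and ARCH default to the values used in this guide.
helm_url() {
    version="$1"; os="${2:-linux}"; arch="${3:-amd64}"
    printf 'https://get.helm.sh/helm-%s-%s-%s.tar.gz\n' "$version" "$os" "$arch"
}

# Print the URL used in this guide.
helm_url v3.2.2

# To download, unpack, install, and verify (needs internet access and root):
#   wget "$(helm_url v3.2.2)" && tar zxf helm-v3.2.2-linux-amd64.tar.gz
#   mv linux-amd64/helm /usr/bin/ && helm version
```

Running helm version afterwards is a quick way to confirm that the binary is on the PATH.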

2. List the repositories

[root@master ~]# helm repo list

NAME URL

stable https://kubernetes-charts.storage.googleapis.com

elastic https://helm.elastic.co

3. Deploy the ingress with Helm

Pull the chart package:

[root@master ~]# helm pull stable/nginx-ingress

Unpack the nginx-ingress archive:

[root@master ~]# tar -xf nginx-ingress-1.30.3.tgz

[root@master ingress]# cd nginx-ingress/

[root@master nginx-ingress]# ls

Chart.yaml ci OWNERS README.md templates values.yaml

4. Label the nodes so that pods can be scheduled onto them:

[root@k8s-master nginx-ingress]# kubectl get nodes --show-labels # show all labels currently set on the nodes

NAME STATUS ROLES AGE VERSION LABELS

k8s-master Ready master 160d v1.17.0 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-master,kubernetes.io/os=linux,node-role.kubernetes.io/master=

k8s-node-01 Ready <none> 160d v1.17.0 app=traefik,beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-node-01,kubernetes.io/os=linux

k8s-node-02 Ready <none> 160d v1.17.0 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,jenkins=jenkins,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-node-02,kubernetes.io/os=linux

k8s-node-03 Ready <none> 160d v1.17.0 app=ingress,beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-node-03,kubernetes.io/os=linux

As the output shows, k8s-node-03 already carries the app=ingress label. If a node has not been labeled yet, label it with:

kubectl label node k8s-node-03 app=ingress

To remove the label from a node:

kubectl label node k8s-node-03 app-
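Reading labels out of the wide --show-labels output by eye is error-prone. The sketch below filters that output for a given label; nodes_with_label is a hypothetical helper, and it assumes the LABELS column is the last field on each line, as in the listing above.

```shell
#!/bin/sh
# nodes_with_label LABEL: read `kubectl get nodes --show-labels` output on
# stdin and print the names of nodes whose LABELS column contains LABEL.
nodes_with_label() {
    awk -v want="$1" 'NR > 1 {
        # The last field is the comma-separated label list.
        n = split($NF, labels, ",")
        for (i = 1; i <= n; i++) if (labels[i] == want) { print $1; break }
    }'
}

# Usage against a live cluster:
#   kubectl get nodes --show-labels | nodes_with_label app=ingress
```

kubectl can also do this filtering itself with a label selector, for example kubectl get nodes -l app=ingress.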

[root@master nginx-ingress]# vim values.yaml

.............................................

(preceding content omitted)

# Required for use with CNI based kubernetes installations (such as ones set up by kubeadm),

# since CNI and hostport don't mix yet. Can be deprecated once https://github.com/kubernetes/kubernetes/issues/23920

# is merged

hostNetwork: true # set hostNetwork to true so that the controller uses the host's network

.........................................

terminationGracePeriodSeconds: 60

## Node labels for controller pod assignment

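## Ref: https://kubernetes.io/docs/user-guide/node-selection/

# The chart creates two Deployments, controller and default backend, so both nodeSelector entries have to be changed. Pin the two pods to whichever node suits you, or leave nodeSelector unchanged and let the scheduler pick a node; in that case, look up the IP of the node the controller pod landed on. Because hostNetwork is enabled, that node's IP is the access entry point. (Note: the target node must be labeled before the ingress release is created, otherwise scheduling will fail.)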

##

nodeSelector: {app: ingress}

............................................

## Annotations to be added to default backend pods

## Node labels for controller pod assignment

## Ref: https://kubernetes.io/docs/user-guide/node-selection/

##

nodeSelector: {app: ingress}

...........................................

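Once the changes are made, create the Helm release.

Install command:

helm install ingeress stable/nginx-ingress -f values.yaml

After the release is created, check whether the ingress came up. Creating it starts two pods, ingress-controller and ingress-default-backend. Output like the following means the installation succeeded: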

[root@k8s-master nginx-ingress]# kubectl get pod -A |grep ingress

default ingeress-nginx-ingress-controller-58b9fbb466-b6psr 1/1 Running 0 16m

default ingeress-nginx-ingress-default-backend-6cf8bdbc5f-vczx2 1/1 Running 0 16m

Helm uninstall command (the release name must match the one used at install time, which is ingeress here): helm uninstall ingeress

5. Test that the ingress works

1. Create the YAML file for Nginx:

[root@k8s-master nginx]# kubectl create deployment nginx --image=nginx:latest -o yaml --dry-run > nginx.yaml

[root@k8s-master nginx]# cat nginx.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  creationTimestamp: null
  labels:
    app: nginx
  name: nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  strategy: {}
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: nginx
    spec:
      containers:
      - image: nginx:latest
        name: nginx
        resources: {}
status: {}

Deploy the Nginx Deployment:

[root@k8s-master nginx]# kubectl apply -f nginx.yaml

deployment.apps/nginx created

2. Create the Service

[root@k8s-master nginx]# kubectl expose deployment nginx --port=80 --target-port=80 --cluster-ip=None -o yaml --dry-run > nginx-svc.yaml

Note: a headless Service is used here. A headless Service saves one forwarding hop; look up headless Services for the details.

[root@k8s-master nginx]# cat nginx-svc.yaml
apiVersion: v1
kind: Service
metadata:
  creationTimestamp: null
  labels:
    app: nginx
  name: nginx
spec:
  clusterIP: None
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: nginx
status:
  loadBalancer: {}

[root@k8s-master nginx]# kubectl apply -f nginx-svc.yaml

service/nginx created

3. Create the Ingress

vim nginx-ingress.yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  annotations:
    kubernetes.io/ingress.class: nginx
  name: nginx
  namespace: default
spec:
  rules:
  - host: mynginx.com
    http:
      paths:
      - backend:
          serviceName: nginx
          servicePort: 80
        path: /

[root@k8s-master nginx]# kubectl apply -f nginx-ingress.yaml

ingress.extensions/nginx created

Check that the Ingress was created:

[root@k8s-master nginx]# kubectl get ingress

NAME HOSTS ADDRESS PORTS AGE

nginx mynginx.com 80 6s

Add a name resolution entry to the local hosts file.

Edit the hosts file:

C:\Windows\System32\drivers\etc\hosts

# The IP address depends on which node your ingress controller is running on

192.168.111.163 mynginx.com
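On a Linux or macOS client the same mapping goes into /etc/hosts. The sketch below appends the entry only if the host name is not already present, so it can be run repeatedly. The helper name add_host_entry is hypothetical.

```shell
#!/bin/sh
# add_host_entry FILE IP HOST: append "IP HOST" to FILE unless an uncommented
# line already maps HOST. Hypothetical helper for Linux/macOS clients.
add_host_entry() {
    file="$1"; ip="$2"; host="$3"
    if awk -v h="$host" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == h) found = 1 }
                         END { exit !found }' "$file" 2>/dev/null; then
        return 0    # entry already present, nothing to do
    fi
    printf '%s %s\n' "$ip" "$host" >> "$file"
}

# Usage (as root):
#   add_host_entry /etc/hosts 192.168.111.163 mynginx.com
# The ingress can also be tested without touching the hosts file:
#   curl -H 'Host: mynginx.com' http://192.168.111.163/
```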

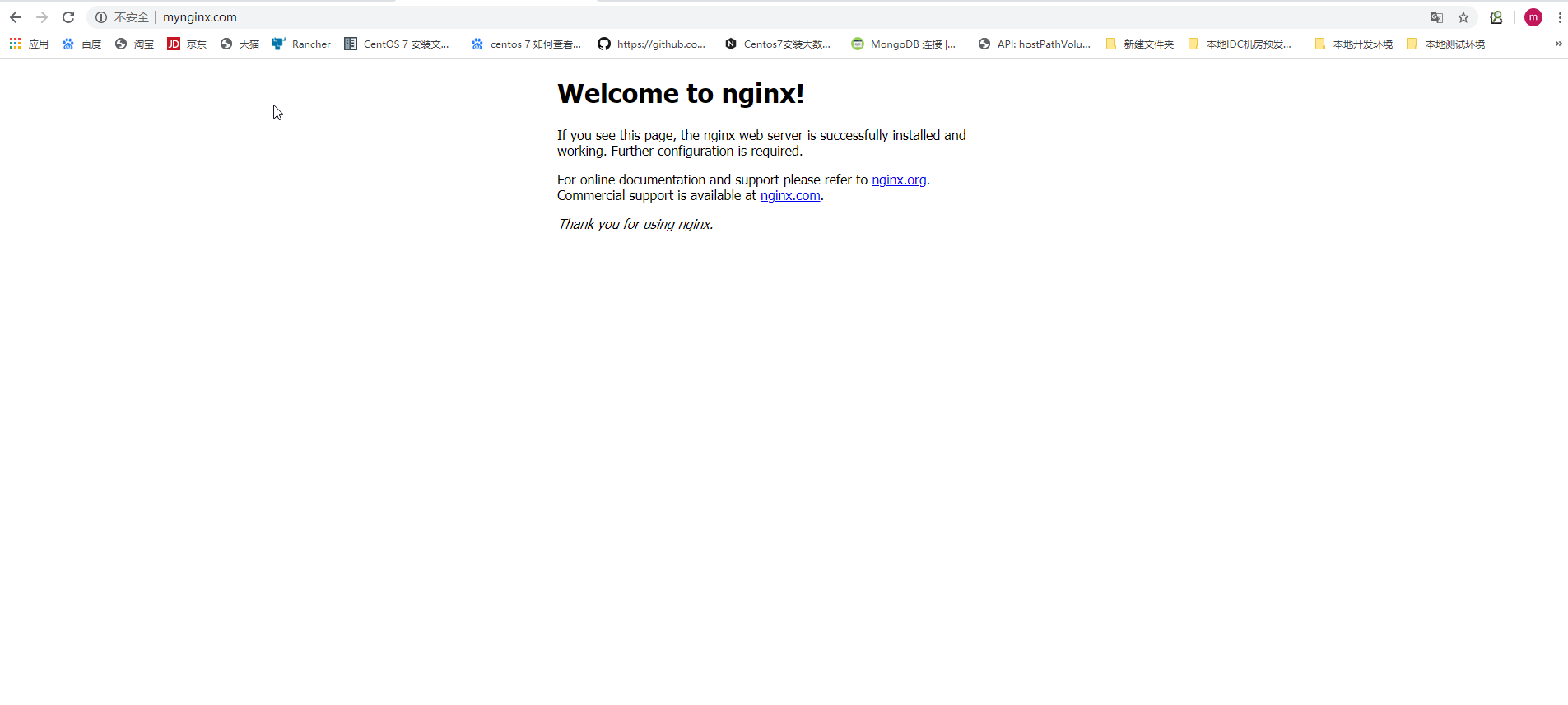

Access test:

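6. Install the NFS StorageClass:

The same approach works for the volume mounts of other software installed with Helm.

Jenkins uses a StorageClass for storage, so it has to be deployed in advance.

nfs-utils must be installed on all nodes: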

[root@master ~]# yum -y install nfs-utils rpcbind &> /dev/null

[root@master ~]# systemctl restart nfs-utils

[root@master ~]# systemctl restart rpcbind && systemctl enable rpcbind

[root@master ~]# systemctl restart nfs-server && systemctl enable nfs-server

(Install the same packages on the other nodes.)

Create the export rule on the NFS server (on every node):

[root@master ~]# echo "/data/k8s *(rw,async,no_root_squash)" >> /etc/exports

[root@master ~]# exportfs -r

Create the NFS ServiceAccount and its RBAC rules:

[root@k8s-master nfs]# vim nfs-serviceaccount.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["list", "watch", "create", "update", "patch"]
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["create", "delete", "get", "list", "watch", "patch", "update"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io

Create the NFS StorageClass:

[root@k8s-master nfs]# vim nfs-storageclass.yaml

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: course-nfs-storage
provisioner: nfs-storage

Create the NFS client provisioner:

[root@k8s-master nfs]# vim nfs-client.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
spec:
  selector:
    matchLabels:
      app: nfs-client-provisioner
  replicas: 1
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: quay.io/external_storage/nfs-client-provisioner:latest
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: nfs-storage            # must match the provisioner field of the StorageClass
            - name: NFS_SERVER
              value: 192.168.111.158        # IP address of the NFS server
            - name: NFS_PATH
              value: /data/k8s              # exported storage path
      volumes:
        - name: nfs-client-root
          nfs:
            server: 192.168.111.158         # IP address of the NFS server
            path: /data/k8s

Apply the three YAML files:

[root@k8s-master nfs]# kubectl apply -f nfs-serviceaccount.yaml

[root@k8s-master nfs]# kubectl apply -f nfs-client.yaml

[root@k8s-master nfs]# kubectl apply -f nfs-storageclass.yaml
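Before installing Jenkins it can help to confirm that the provisioner really creates volumes. The following is a minimal sketch that writes a throwaway claim against the course-nfs-storage StorageClass; the claim name test-claim, the file name, and the 1Mi size are illustrative and do not come from the original setup.

```shell
#!/bin/sh
# Write a small test claim that asks the course-nfs-storage StorageClass
# for a volume. Name and size are placeholders for this sketch.
cat > test-claim.yaml <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: test-claim
spec:
  storageClassName: course-nfs-storage
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Mi
EOF

# Then, against the cluster:
#   kubectl apply -f test-claim.yaml
#   kubectl get pvc test-claim        # STATUS should become Bound
#   kubectl delete -f test-claim.yaml
```

If the claim stays Pending, the logs of the nfs-client-provisioner pod are usually the first place to look.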

7. Install Jenkins

This installation uses the default namespace.

Download the Jenkins chart:

[root@k8s-master nfs]# helm pull stable/jenkins

[root@k8s-master k8s]# ls

jenkins-1.9.17.tgz

[root@k8s-master k8s]# tar -xf jenkins-1.9.17.tgz

[root@k8s-master k8s]# cd jenkins/

[root@k8s-master jenkins]# ls

CHANGELOG.md Chart.yaml OWNERS README.md templates values.yaml

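Edit the values.yaml file.

Only the parts that need changing are excerpted below.

Scheduling:

# ref: https://kubernetes.io/docs/concepts/configuration……

nodeSelector: {jenkins: jenkins } # pins Jenkins to a specific node; the node label must be created beforehand (see the node labeling commands earlier)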

tolerations: []

# Leverage a priorityClass to ensure your

Ingress:

ingress:

enabled: true # set to true to expose Jenkins through the ingress; set to false if that is not needed

# For Kubernetes v1.14+, use 'networking.k8s.io/v1beta1'

apiVersion: "extensions/v1beta1"

labels: {}

annotations: {kubernetes.io/ingress.class: nginx}

# kubernetes.io/ingress.class: nginx

# kubernetes.io/tls-acme: "true"

# Set this path to jenkinsUriPrefix above or use annotations to rewrite path

# path: "/jenkins"

# configures the hostname e.g. jenkins.example.com

hostName: myjenkins.com # the domain name used to access Jenkins

For storage, use the NFS StorageClass created earlier:

storageClass:

annotations: {volume.beta.kubernetes.io/storage-class: course-nfs-storage} # points at the NFS StorageClass created earlier

accessMode: "ReadWriteOnce"

size: "15Gi"

Once values.yaml has been edited, install Jenkins.

The first Jenkins installation is slow and takes about 15 minutes.

[root@k8s-master k8s]# helm install jenkins stable/jenkins -f values.yaml

NAME: jenkins

LAST DEPLOYED: Thu Jun 4 07:01:02 2020

NAMESPACE: default

STATUS: deployed

REVISION: 1

NOTES:

1. Get your 'admin' user password by running: # shows the Jenkins login password

printf $(kubectl get secret --namespace default jenkins -o jsonpath="{.data.jenkins-admin-password}" | base64 --decode);echo

2. Visit http://myjenkins.com # ingress is enabled in values.yaml; after adding a hosts entry, access Jenkins by domain name
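The password command in the NOTES works in two stages: jsonpath pulls one field out of the Secret, and because Secret data is stored base64-encoded, the value is then decoded. A small sketch of the decoding step, using a made-up value rather than a real password (decode_secret is a hypothetical helper):

```shell
#!/bin/sh
# decode_secret VALUE: decode one base64-encoded field of a Secret.
decode_secret() {
    printf '%s' "$1" | base64 --decode
}

# Example with a made-up value (the base64 encoding of "admin"):
decode_secret 'YWRtaW4='; echo

# Against the cluster, the encoded value comes from jsonpath:
#   decode_secret "$(kubectl get secret --namespace default jenkins \
#       -o jsonpath='{.data.jenkins-admin-password}')"; echo
```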

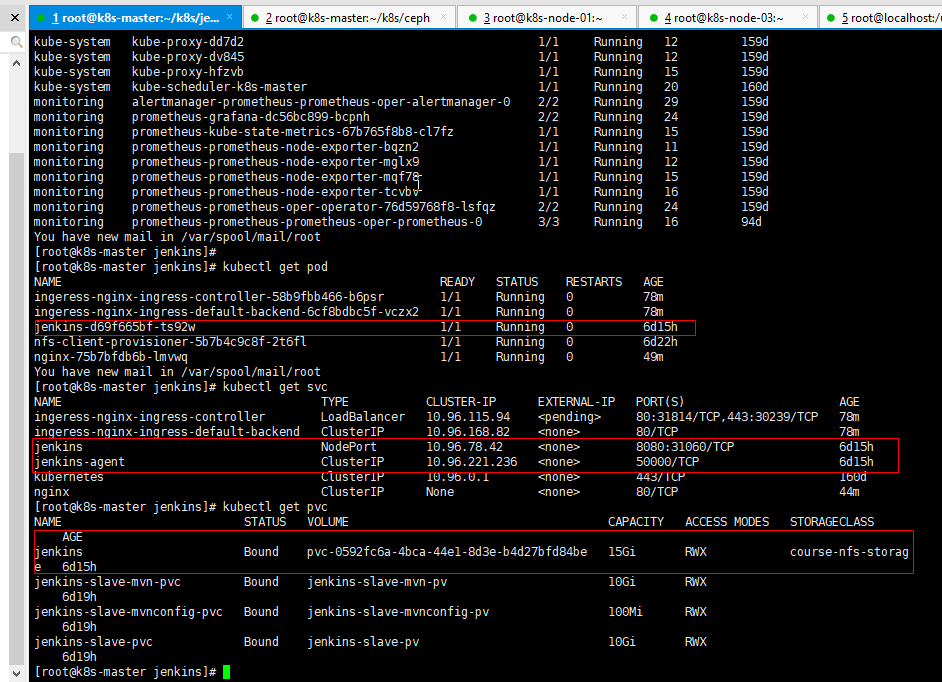

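Check what Helm has installed.

Note: my own deployment predates this setup and does not use the ingress as a proxy; it exposes Jenkins directly through a NodePort. With the ingress enabled, Jenkins is reachable directly by domain name.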

8. Preparation for using Jenkins-slave (Harbor private registry)

[root@k8s-master k8s]# wget https://storage.googleapis.com/harbor-releases/release-1.9.0/harbor-offline-installer-v1.9.1.tgz

[root@k8s-master k8s]# tar zxvf harbor-offline-installer-v1.9.1.tgz

[root@k8s-master k8s]# cd harbor

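Create the certificate directory:

[root@localhost harbor]# mkdir -p key

Create the CA certificate:

[root@master harbor]# openssl req -newkey rsa:4096 -nodes -sha256 -keyout ca.key -x509 -days 365 -out ca.crt

Where: req creates a certificate signing request; -newkey generates a new key; -x509 makes req output a self-signed certificate instead of a request (the X.509 tooling is otherwise used to display certificates, convert formats, sign CSRs, and so on; here it is used for self-signing).

Press Enter through the prompts; when Common Name appears, enter the IP address or domain name.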

Common Name (eg, your name or your server's hostname) []:192.168.111.161

Generate the certificate signing request:

[root@localhost harbor]# openssl req -newkey rsa:4096 -nodes -sha256 -keyout 192.168.111.161.key -out 192.168.111.161.csr

Press Enter through the prompts; when Common Name appears, enter the IP address or domain name.

Common Name (eg, your name or your server's hostname) []:192.168.111.161

Generate the certificate:

[root@localhost harbor]# echo subjectAltName = IP:192.168.111.161 > extfile.cnf

[root@localhost harbor]# openssl x509 -req -days 365 -in 192.168.111.161.csr -CA ca.crt -CAkey ca.key -CAcreateserial -extfile extfile.cnf -out 192.168.111.161.crt
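The interactive prompts make these steps hard to repeat. As a sketch, the same sequence can be run non-interactively by passing the subject with -subj and then checking the result with openssl verify. The function name make_harbor_cert, the default key directory, and the CA subject harbor-ca are assumptions of this sketch; the steps above type the IP address at the Common Name prompt instead.

```shell
#!/bin/sh
# make_harbor_cert IP [DIR] [BITS]: create a CA and a server certificate for
# IP inside DIR without prompts, then verify the certificate against the CA.
# The body runs in a subshell so the caller's working directory is unchanged.
make_harbor_cert() (
    ip="$1"; dir="${2:-key}"; bits="${3:-4096}"
    mkdir -p "$dir" && cd "$dir" &&
    # Self-signed CA (the subject "harbor-ca" is an assumed placeholder).
    openssl req -newkey "rsa:$bits" -nodes -sha256 -keyout ca.key \
        -x509 -days 365 -subj "/CN=harbor-ca" -out ca.crt &&
    # Server key and signing request.
    openssl req -newkey "rsa:$bits" -nodes -sha256 -keyout "$ip.key" \
        -subj "/CN=$ip" -out "$ip.csr" &&
    # The subjectAltName extension carries the IP address.
    printf 'subjectAltName = IP:%s\n' "$ip" > extfile.cnf &&
    openssl x509 -req -days 365 -in "$ip.csr" -CA ca.crt -CAkey ca.key \
        -CAcreateserial -extfile extfile.cnf -out "$ip.crt" &&
    openssl verify -CAfile ca.crt "$ip.crt"
)

# Usage, from the harbor directory:
#   make_harbor_cert 192.168.111.161 key
```

Writing the files straight into the key directory also keeps them where harbor.yml expects to find them.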

[root@localhost habor] # vim harbor.yml

hostname: 192.168.111.161

# http related config

http:

# port for http, default is 80. If https enabled, this port will redirect to https port

port: 80 # HTTP port; can be changed

# https related config

https:

# # https port for harbor, default is 443

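port: 443 # HTTPS port; best left at the default

# # The path of cert and key files for nginx

certificate: /root/harbor/key/192.168.111.161.crt # absolute path of the .crt file generated above (copy it into the key directory first if it was generated elsewhere)

private_key: /root/harbor/key/192.168.111.161.key # absolute path of the .key file generated above

Run the installer:

[root@localhost harbor]# ./install.sh

Logging in from the nodes: because the certificate is self-signed, the CA certificate created earlier has to be added to the trusted certificates.

Distribute the ca.crt file to all nodes:

[root@k8s-master /]# mkdir -p /etc/docker/certs.d/192.168.111.161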

[root@localhost habor] # scp ca.crt root@192.168.111.158:/etc/docker/certs.d/192.168.111.161/

[root@k8s-master /]# systemctl restart docker

Do the same on the other k8s nodes.

[root@k8s-master /]# docker login 192.168.111.161 -u admin -p Harbor12345
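Docker looks for a registry's CA certificate under /etc/docker/certs.d/<registry>/ca.crt, which is what the mkdir and scp steps set up. The sketch below wraps that placement in one function; install_registry_ca is a hypothetical helper, and its optional third argument is only a path prefix so that the function can be tried out in a scratch directory.

```shell
#!/bin/sh
# install_registry_ca CA_FILE REGISTRY [ROOT]: copy CA_FILE to
# ROOT/etc/docker/certs.d/REGISTRY/ca.crt. ROOT defaults to empty, meaning
# the real filesystem; it exists only to allow a dry run elsewhere.
install_registry_ca() {
    ca="$1"; registry="$2"; root="${3:-}"
    target="$root/etc/docker/certs.d/$registry"
    mkdir -p "$target" && cp "$ca" "$target/ca.crt"
}

# Usage on each node (as root), followed by the restart used in this guide:
#   install_registry_ca ca.crt 192.168.111.161 && systemctl restart docker
# To keep the password out of the shell history, docker login can read it
# from stdin (HARBOR_PASSWORD is a placeholder variable):
#   printf '%s' "$HARBOR_PASSWORD" | docker login 192.168.111.161 -u admin --password-stdin
```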

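Open https://192.168.111.161 (Firefox works best). Note: the project that will hold the images has to be created manually; if none is created, the default library project is used.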

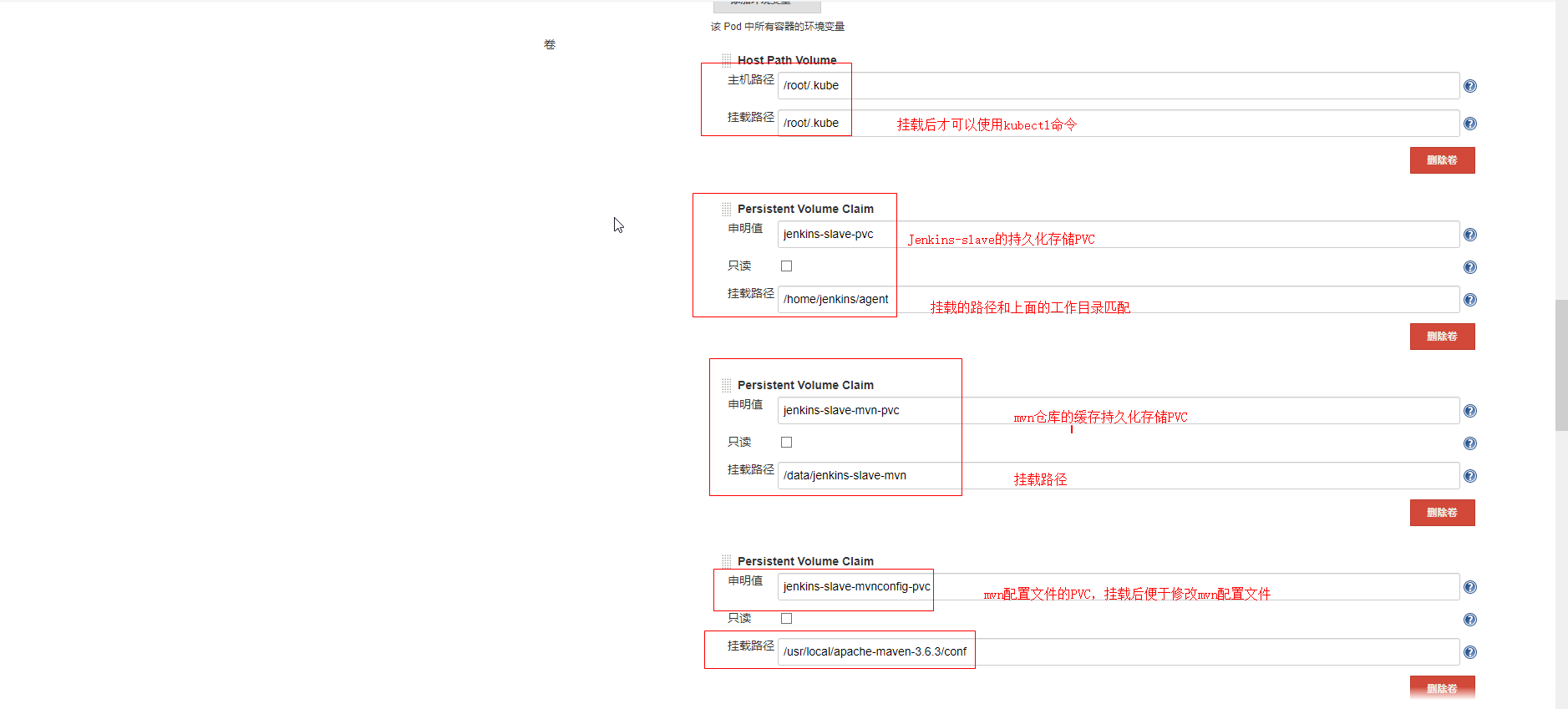

9. PVCs used by Jenkins-slave

These PVCs serve the following purposes:

1. Persist the jenkins-slave working directory

2. Persist the mvn build cache

3. Persist the mvn configuration files

[root@k8s-master k8s]# vim jenkins-slave-pvc.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: jenkins-slave-pv
  labels:
    apps: jenkins-slave
spec:
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  #persistentVolumeReclaimPolicy: Delete
  nfs:
    path: /data/jenkins-slave
    server: 192.168.111.158
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: jenkins-slave-pvc            # PVC for the jenkins-slave working directory
spec:
  accessModes:
    - ReadWriteMany
  volumeMode: Filesystem
  resources:
    requests:
      storage: 10Gi
  selector:
    matchLabels:
      apps: jenkins-slave
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: jenkins-slave-mvn-pv
  labels:
    apps: jenkins-slave-mvn
spec:
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  #persistentVolumeReclaimPolicy: Delete
  nfs:
    path: /data/jenkins-slave-mvn
    server: 192.168.111.158          # IP address of the k8s master
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: jenkins-slave-mvn-pvc        # PVC for the jenkins-slave mvn cache
spec:
  accessModes:
    - ReadWriteMany
  volumeMode: Filesystem
  resources:
    requests:
      storage: 10Gi
  selector:
    matchLabels:
      apps: jenkins-slave-mvn
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: jenkins-slave-mvnconfig-pv
  labels:
    apps: jenkins-slave-mvnconfig
spec:
  capacity:
    storage: 100Mi
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  #persistentVolumeReclaimPolicy: Delete
  nfs:
    path: /data/jenkins-slave-mvnconfig
    server: 192.168.111.158          # IP address of the k8s master
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: jenkins-slave-mvnconfig-pvc  # PVC for the jenkins-slave mvn configuration files
spec:
  accessModes:
    - ReadWriteMany
  volumeMode: Filesystem
  resources:
    requests:
      storage: 100Mi
  selector:
    matchLabels:
      apps: jenkins-slave-mvnconfig

Create the matching storage directories:

[root@k8s-master k8s]# mkdir -p /data/jenkins-slave

[root@k8s-master k8s]# mkdir -p /data/jenkins-slave-mvn

[root@k8s-master k8s]# mkdir -p /data/jenkins-slave-mvnconfig

Create the PVs and PVCs, and export the directories over NFS:

[root@k8s-master k8s]# kubectl apply -f jenkins-slave-pvc.yaml

[root@k8s-master k8s]# cat /etc/exports

/data/k8s *(rw,async,no_root_squash)

/data/jenkins-slave *(rw,async,no_root_squash)

/data/jenkins-slave-mvn *(rw,async,no_root_squash)

/data/jenkins-slave-mvnconfig *(rw,async,no_root_squash)

Reload the exports so that they take effect:

[root@k8s-master k8s]# exportfs -r
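The /etc/exports listing above shows the end state but not how the three new lines got there. One way to produce them, sketched with a hypothetical helper export_lines that reuses the export options from the listing:

```shell
#!/bin/sh
# export_lines DIR...: print one /etc/exports line per directory, using the
# same options as the existing /data/k8s export.
export_lines() {
    for dir in "$@"; do
        printf '%s *(rw,async,no_root_squash)\n' "$dir"
    done
}

# Preview the lines for the three jenkins-slave directories.
export_lines /data/jenkins-slave /data/jenkins-slave-mvn /data/jenkins-slave-mvnconfig

# To apply on the NFS server (as root), append them and reload:
#   export_lines /data/jenkins-slave /data/jenkins-slave-mvn \
#       /data/jenkins-slave-mvnconfig >> /etc/exports && exportfs -r
```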

RBAC used by Jenkins-slave

[root@k8s-master k8s]# vim jenkins-slave-rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: jenkins
  namespace: default
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: jenkins
rules:
  - apiGroups: ["extensions", "apps"]
    resources: ["deployments"]
    verbs: ["create", "delete", "get", "list", "watch", "patch", "update"]
  - apiGroups: [""]
    resources: ["services", "namespaces"]
    verbs: ["create", "delete", "get", "list", "watch", "patch", "update"]
  - apiGroups: [""]
    resources: ["pods"]
    verbs: ["create", "delete", "get", "list", "patch", "update", "watch"]
  - apiGroups: [""]
    resources: ["pods/exec"]
    verbs: ["create", "delete", "get", "list", "patch", "update", "watch"]
  - apiGroups: [""]
    resources: ["pods/log"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["secrets"]
    verbs: ["get"]
  - apiGroups: ["apiextensions.k8s.io"]
    resources: ["customresourcedefinitions"]
    verbs: ["create", "delete", "get", "list", "patch", "update", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: jenkins
  namespace: default
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: jenkins
subjects:
  - kind: ServiceAccount
    name: jenkins
    namespace: default

Build the jenkins-slave image

[root@k8s-master k8s]#wget http://apache.communilink.net/maven/maven-3/3.6.3/binaries/apache-maven-3.6.3-bin.tar.gz

[root@k8s-master k8s]# cat Dockerfile

FROM jenkinsci/jnlp-slave:4.3-1

ADD apache-maven-3.6.3-bin.tar.gz /usr/local/

ENV PATH="/usr/local/apache-maven-3.6.3/bin:${PATH}"

Build the image from the Dockerfile:

docker build -t 192.168.111.161/jenkins/slave:v1 -f Dockerfile .

Prepare the mvn configuration

[root@k8s-master k8s]# tar -xf apache-maven-3.6.3-bin.tar.gz

[root@k8s-master k8s]# cd apache-maven-3.6.3

[root@k8s-master apache-maven-3.6.3]# cp -rf conf/* /data/jenkins-slave-mvnconfig/

[root@k8s-master apache-maven-3.6.3]# cd /data/jenkins-slave-mvnconfig/

[root@k8s-master jenkins-slave-mvnconfig]# vim settings.xml

Set the mvn cache path to /data/jenkins-slave-mvn; this directory will be mounted later.

| Default: ${user.home}/.m2/repository

<localRepository>/path/to/local/repo</localRepository>

-->

<localRepository>/data/jenkins-slave-mvn</localRepository>

<!-- interactiveMode

| This will determine
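Because settings.xml ships with a commented-out sample localRepository, it is easy to edit the wrong line. The sketch below prints the value that is actually active. It relies on a heuristic, namely that the commented sample comes first and the real entry last, and it is not a real XML parser; active_local_repo is a hypothetical helper.

```shell
#!/bin/sh
# active_local_repo FILE: print the last <localRepository> value in FILE.
# Heuristic only: in the stock settings.xml the commented-out sample appears
# before the active entry, so the last match is taken as the active one.
active_local_repo() {
    grep -o '<localRepository>[^<]*</localRepository>' "$1" |
        tail -n 1 |
        sed -e 's#<localRepository>##' -e 's#</localRepository>##'
}

# Usage:
#   active_local_repo /data/jenkins-slave-mvnconfig/settings.xml
```

For this guide the printed value should be /data/jenkins-slave-mvn.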

Push the image, tagged with the private registry address, to Harbor (this assumes you have already logged in to the Harbor registry):

[root@k8s-master jenkins-slave-mvnconfig]# docker push 192.168.111.161/jenkins/slave:v1

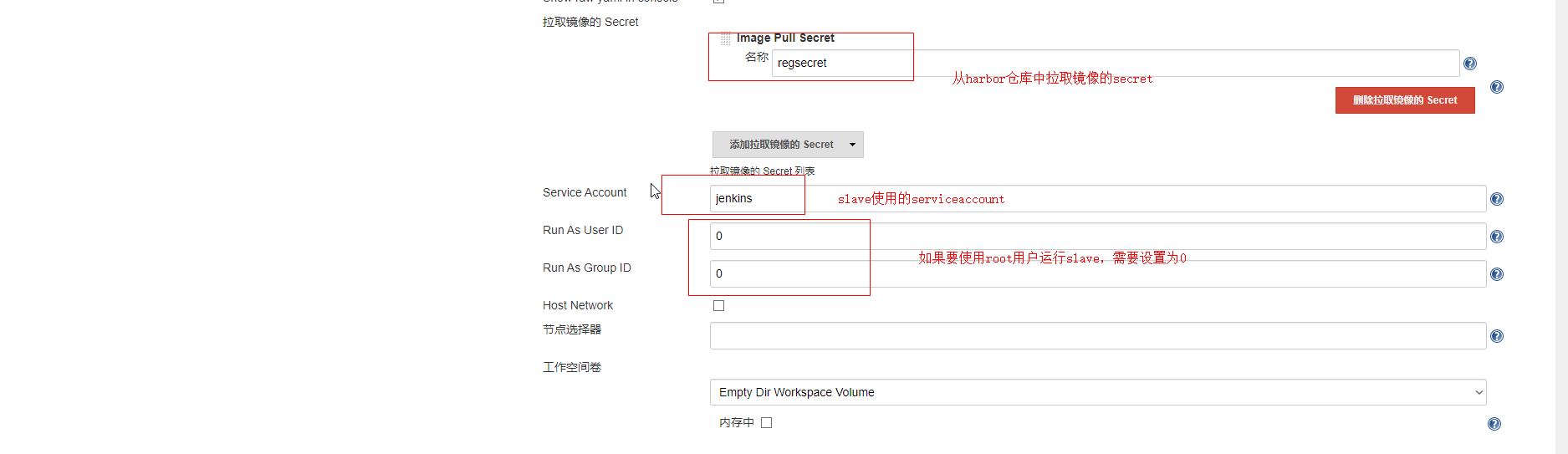

Create the registry secret for jenkins-slave

It lets jenkins-slave pull images from the private registry.

[root@k8s-master k8s]# kubectl create secret docker-registry regsecret --docker-server=192.168.111.161 --docker-username=admin --docker-password=Harbor12345 --docker-email=11@qq.com -n default

Note: a secret only applies to the namespace it is created in; each namespace needs its own secret.

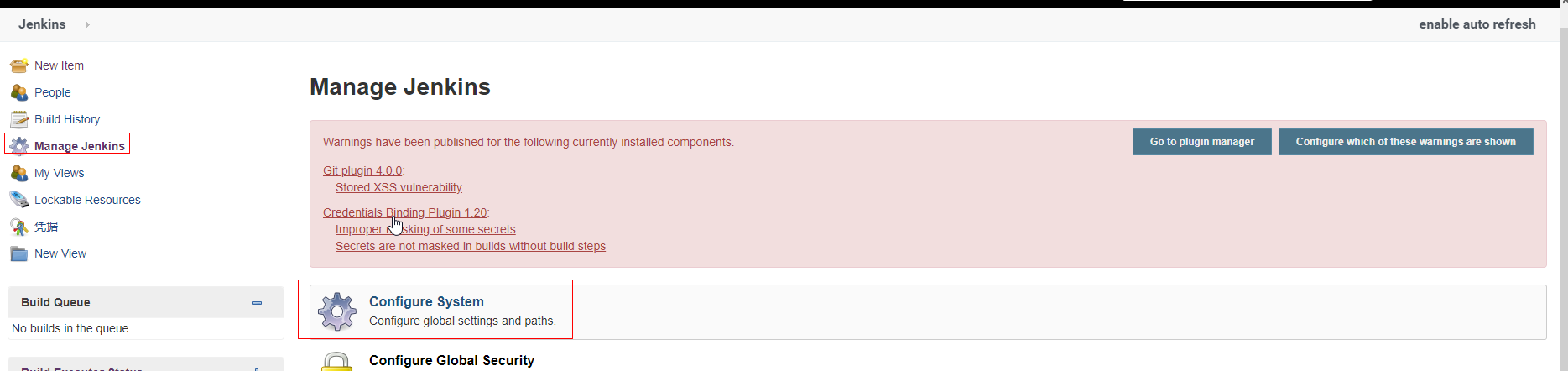

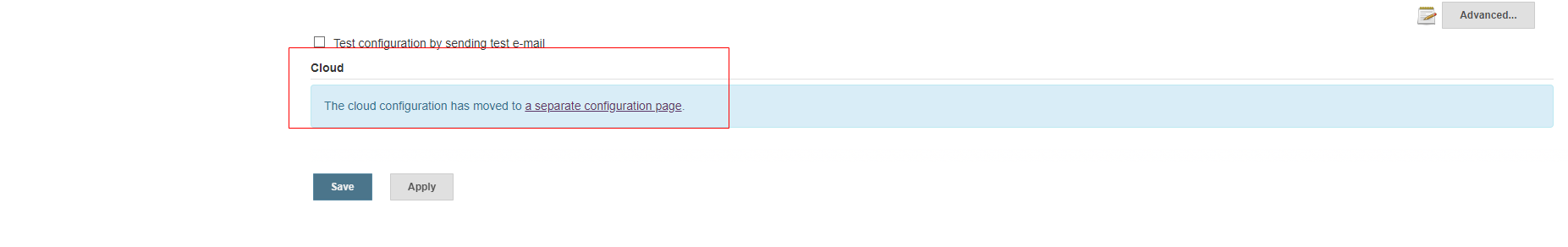

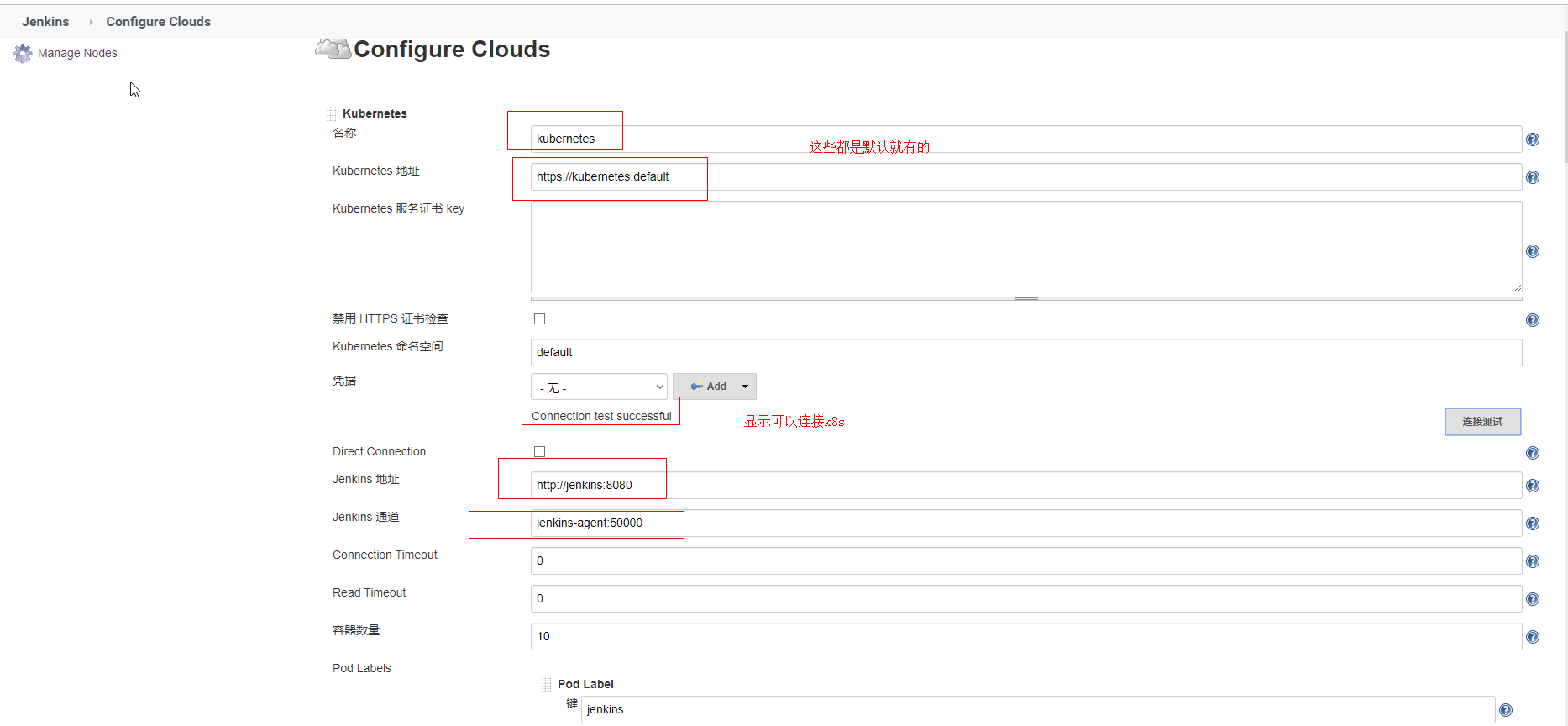

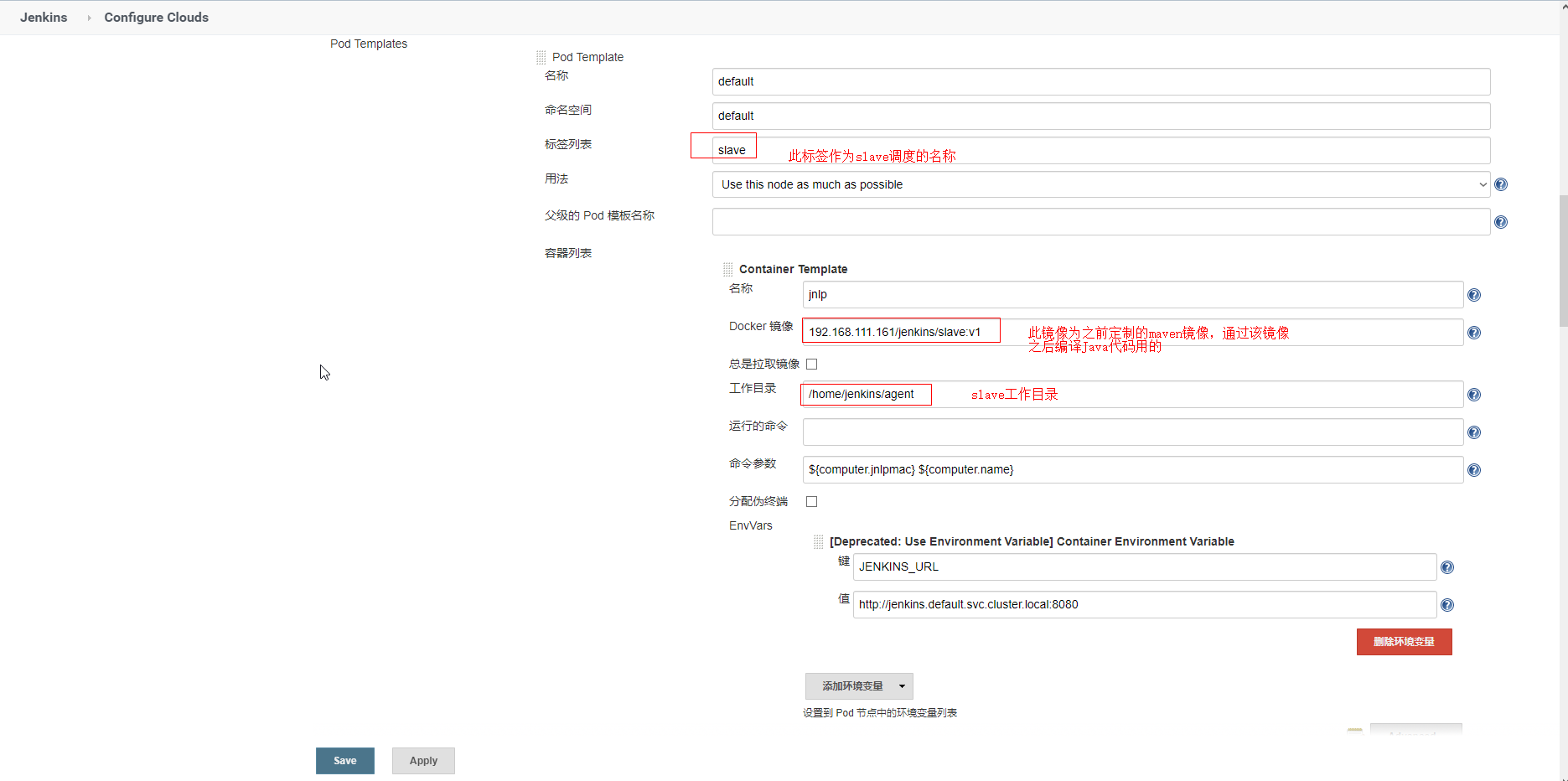

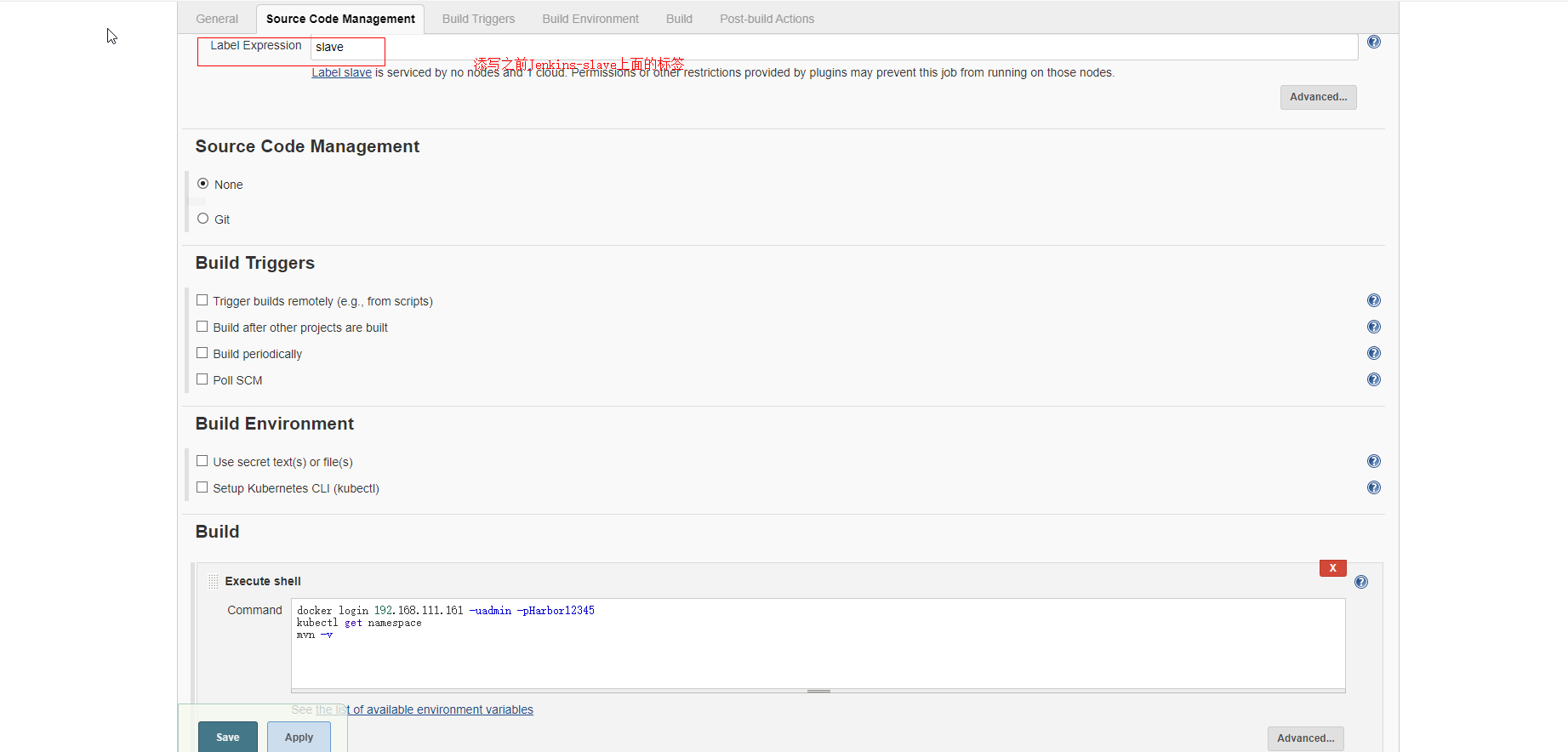

Adjust the jenkins-slave configuration

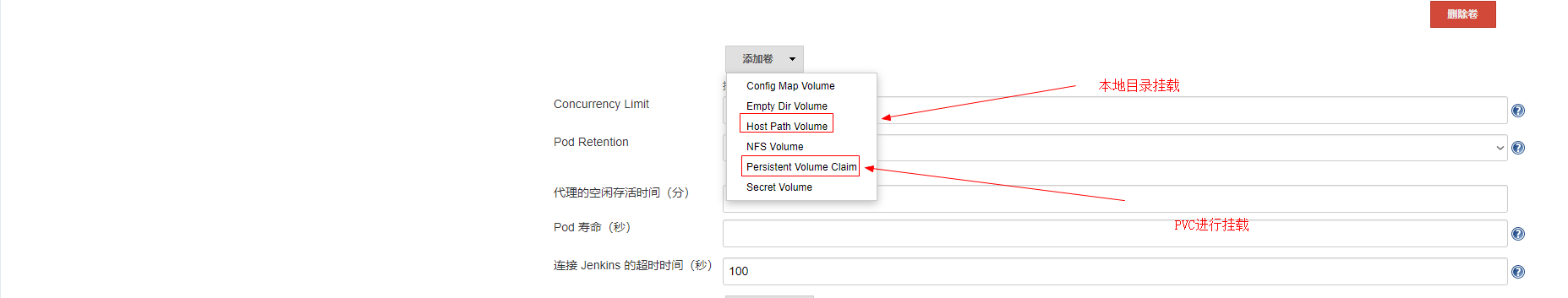

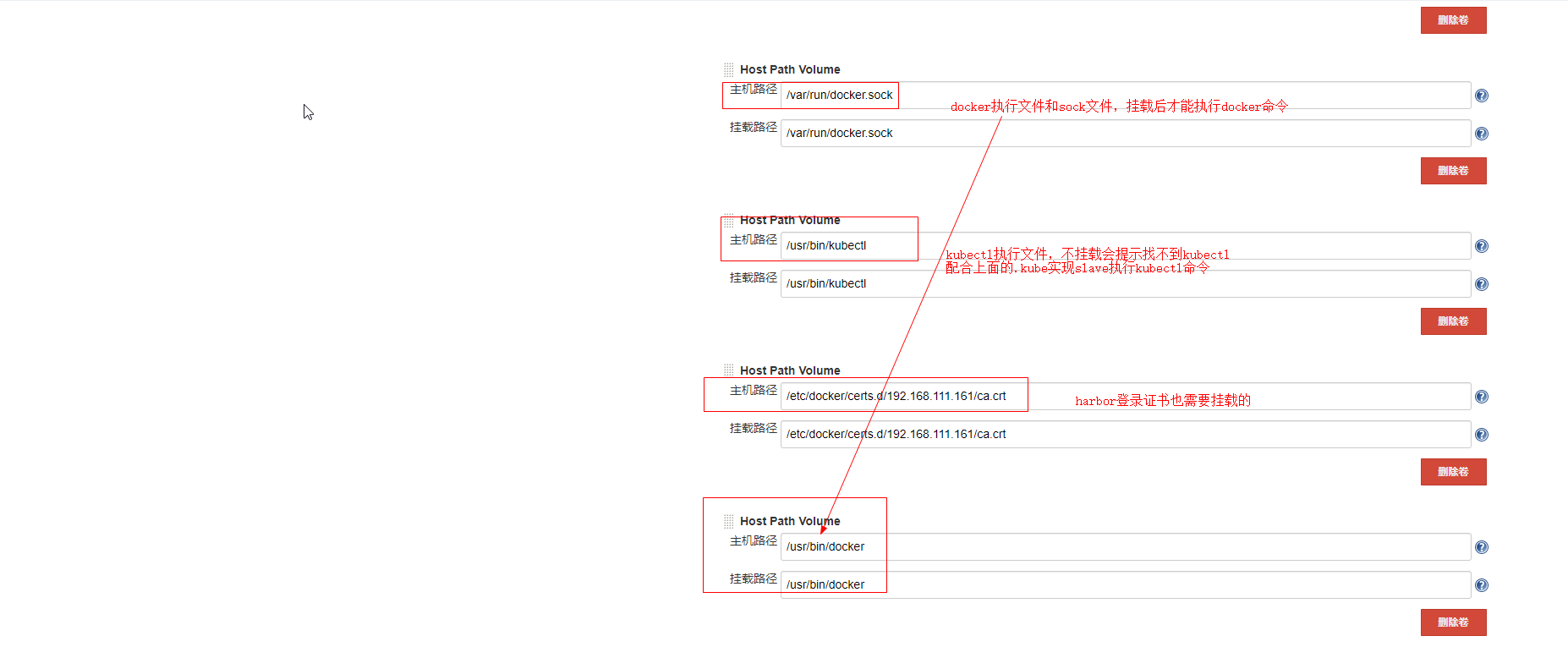

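Mount notes:

Mount the host's docker socket file into the slave, so that the slave can talk to the host's Docker daemon directly.

Mount the host's /root/.kube directory into the slave, so that the slave can manage k8s directly and create and deploy Deployments.

Mount the host's Jenkins working directory into the slave.

Mount the Maven local repository into the slave. By default a slave does not keep downloaded packages once a job finishes; with the m2 repository persisted, the local Maven repository no longer has to be downloaded again on every build.

Mount the host's Maven configuration files into the slave.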
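The five mounts above are configured through the Jenkins UI, but it can be easier to see them side by side as a pod spec. The following is only a sketch of an equivalent pod: the pod name, the file name, and the container mount paths for the working directory and the Maven conf directory are assumptions, while the image, secret, ServiceAccount, and PVC names are the ones created earlier.

```shell
#!/bin/sh
# Write a pod spec that mirrors the five mounts described above.
cat > jenkins-slave-pod.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: jenkins-slave-sketch
spec:
  serviceAccountName: jenkins
  imagePullSecrets:
    - name: regsecret
  containers:
    - name: jnlp
      image: 192.168.111.161/jenkins/slave:v1
      volumeMounts:
        - name: docker-sock
          mountPath: /var/run/docker.sock
        - name: kubeconfig
          mountPath: /root/.kube
        - name: workdir
          mountPath: /home/jenkins/agent        # assumed agent working directory
        - name: mvn-repo
          mountPath: /data/jenkins-slave-mvn    # localRepository from settings.xml
        - name: mvn-conf
          mountPath: /usr/local/apache-maven-3.6.3/conf   # assumed from the Dockerfile
  volumes:
    - name: docker-sock
      hostPath:
        path: /var/run/docker.sock
    - name: kubeconfig
      hostPath:
        path: /root/.kube
    - name: workdir
      persistentVolumeClaim:
        claimName: jenkins-slave-pvc
    - name: mvn-repo
      persistentVolumeClaim:
        claimName: jenkins-slave-mvn-pvc
    - name: mvn-conf
      persistentVolumeClaim:
        claimName: jenkins-slave-mvnconfig-pvc
EOF

# To check the manifest without creating anything on this guide's kubectl version:
#   kubectl apply -f jenkins-slave-pod.yaml --dry-run
# (newer kubectl versions spell this --dry-run=client)
```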

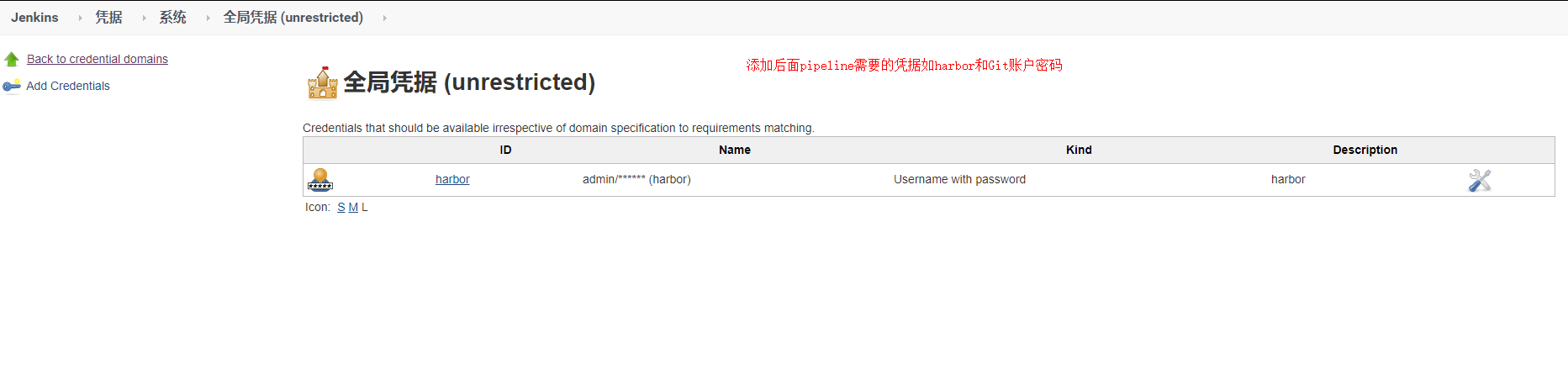

Create the credentials needed by the pipeline

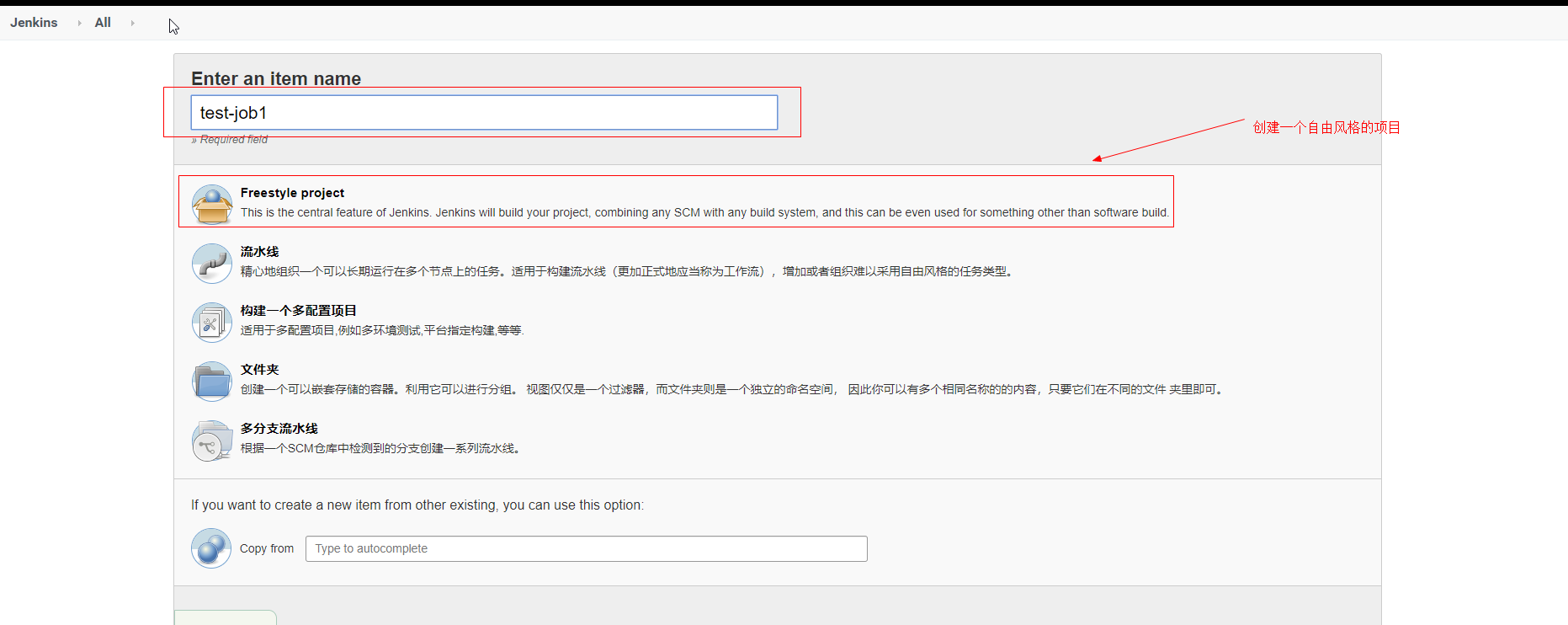

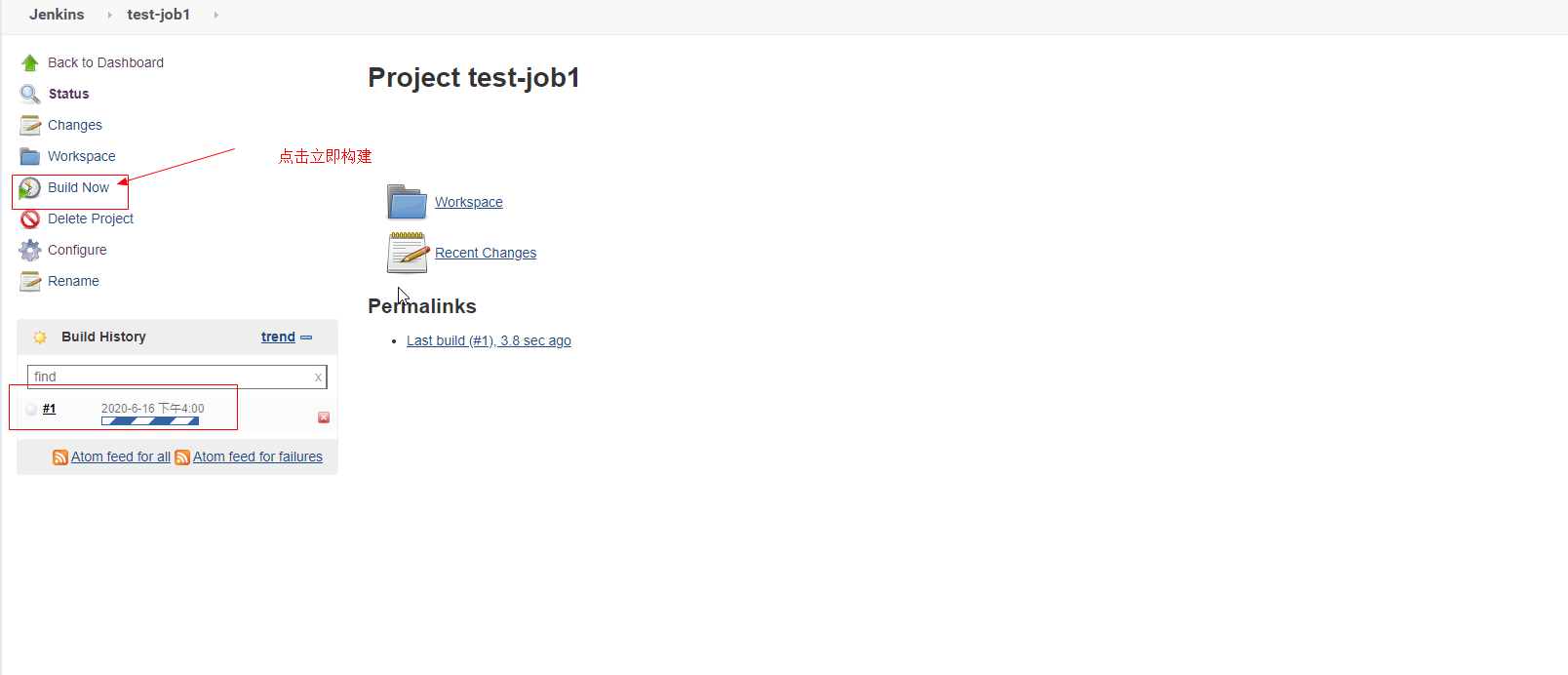

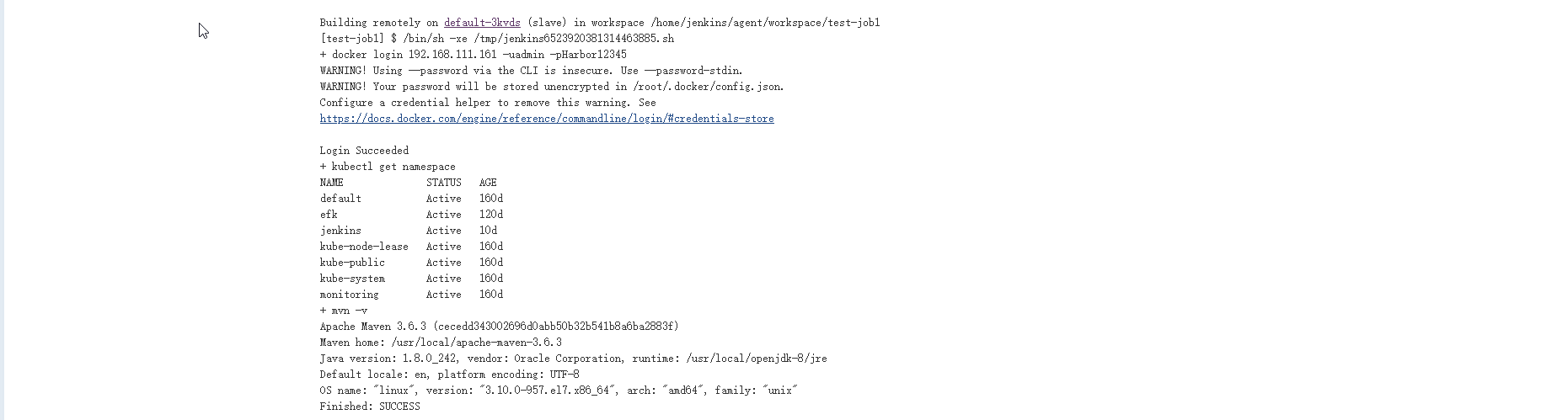

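Test that every configuration item works:

Test that docker login, kubectl, and mvn are all usable:

Seeing the expected output for each command means the setup is working.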
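A quick first check, before running the real commands inside a slave, is to confirm that the three tools are on the PATH at all. A minimal sketch with a hypothetical helper check_tools:

```shell
#!/bin/sh
# check_tools NAME...: report each command as found or missing and return
# non-zero if any of them is missing.
check_tools() {
    missing=0
    for tool in "$@"; do
        if command -v "$tool" >/dev/null 2>&1; then
            echo "ok: $tool"
        else
            echo "missing: $tool"
            missing=1
        fi
    done
    return "$missing"
}

# Inside a slave container:
#   check_tools docker kubectl mvn
# followed by the functional checks themselves, for example:
#   docker login 192.168.111.161 && kubectl get nodes && mvn -v
```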
