Fully Connected Layer

Outline

- Matmul

- Neural Network

- Deep Learning

- Multi-Layer

Recap

-

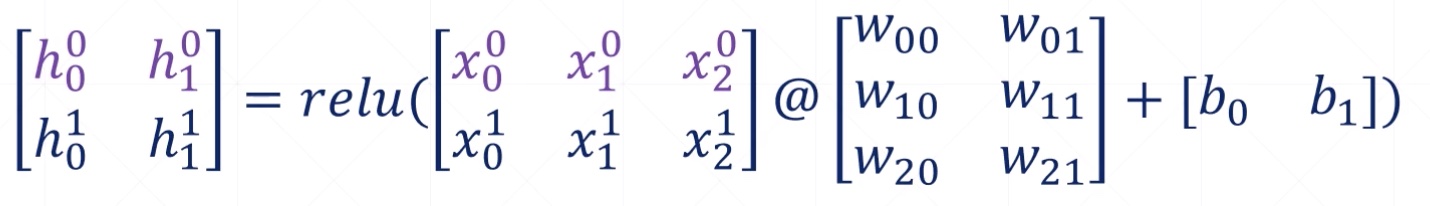

out = f(X@W + b)

-

out = relut(X@W + b)

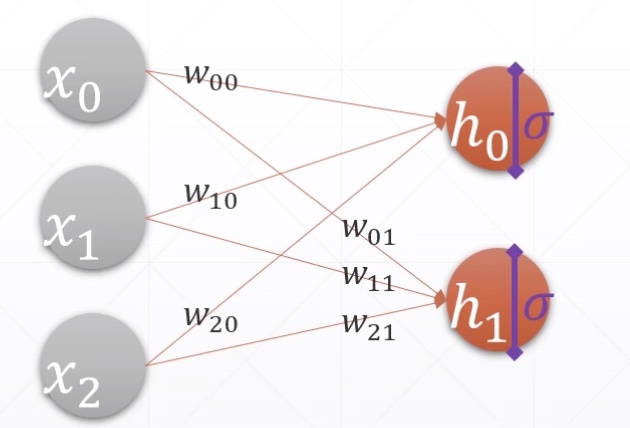
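As a minimal sketch of the recap formula, the snippet below evaluates out = relu(X@W + b) by hand with NumPy. The shapes (4 samples, 784 inputs, 512 outputs), the fixed seed, and the 0.01 weight scale are assumptions chosen for illustration only.

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed, only for reproducibility

x = rng.normal(size=(4, 784))            # batch of 4 inputs, 784 features each
w = rng.normal(size=(784, 512)) * 0.01   # weight matrix: in_dim x out_dim
b = np.zeros(512)                        # bias, broadcast across the batch

z = x @ w + b             # affine part: [4, 784] @ [784, 512] -> [4, 512]
out = np.maximum(z, 0.0)  # ReLU: clamp negative values to zero

print(out.shape)
```

The bias has shape [512] and is added to every row of the [4, 512] product through broadcasting.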

Neural Network

- h = relu(X@W + b)

- Input

- Hidden

- Output
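The input, hidden, and output stages can be sketched as the same h = relu(X@W + b) step applied repeatedly. The layer widths (784, 256, 128, 10) and the helper names `relu` and `init_layer` are hypothetical; leaving the output layer without an activation is also an assumption, not something the slide specifies.

```python
import numpy as np

def relu(z):
    """Element-wise ReLU."""
    return np.maximum(z, 0.0)

def init_layer(rng, in_dim, out_dim):
    """Return (W, b) for one layer: small random weights, zero bias."""
    return rng.normal(size=(in_dim, out_dim)) * 0.1, np.zeros(out_dim)

rng = np.random.default_rng(0)
x = rng.normal(size=(4, 784))      # hypothetical batch of 4 samples

w1, b1 = init_layer(rng, 784, 256)
w2, b2 = init_layer(rng, 256, 128)
w3, b3 = init_layer(rng, 128, 10)

h1 = relu(x @ w1 + b1)    # input layer:  [4, 784] -> [4, 256]
h2 = relu(h1 @ w2 + b2)   # hidden layer: [4, 256] -> [4, 128]
out = h2 @ w3 + b3        # output layer: [4, 128] -> [4, 10], raw scores

print(h1.shape, h2.shape, out.shape)
```

Each layer's output width has to match the next layer's input width, which is the only constraint linking the three weight matrices.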

Neural Network in the 1980s

- 3~5 layers

Here comes Deep Learning

- Deep Learning now

Heroes

Key contributors to the rise of deep learning:

- Big Data

- ReLU

- Dropout

- BatchNorm

- ResNet

- Xavier Initialization

- Caffe/TensorFlow/PyTorch

- ...

Fully connected layer

import tensorflow as tf

x = tf.random.normal([4, 784])

net = tf.keras.layers.Dense(512)

out = net(x)

out.shape

TensorShape([4, 512])

net.kernel.shape, net.bias.shape

(TensorShape([784, 512]), TensorShape([512]))
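To connect the layer back to the matmul formula, the sketch below checks that a `Dense` layer created without an activation should compute `x @ kernel + bias`. The tolerance passed to `np.allclose` is an assumption to absorb floating-point differences.

```python
import numpy as np
import tensorflow as tf

x = tf.random.normal([4, 784])
net = tf.keras.layers.Dense(512)   # no activation argument, so the layer is purely affine
out = net(x)                       # the first call creates kernel and bias

# Recompute the same result by hand from the layer's own variables
manual = tf.matmul(x, net.kernel) + net.bias

same = np.allclose(out.numpy(), manual.numpy(), atol=1e-4)
print(same)
```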

# A freshly constructed layer has no weights until it is built or called
net = tf.keras.layers.Dense(10)

try:
    net.bias
except Exception as e:
    print(e)

'Dense' object has no attribute 'bias'

net.get_weights()

[]

net.weights

[]

net.build(input_shape=(None, 4))

net.kernel.shape, net.bias.shape

(TensorShape([4, 10]), TensorShape([10]))

net.build(input_shape=(None, 20))

net.kernel.shape, net.bias.shape

(TensorShape([20, 10]), TensorShape([10]))

net.build(input_shape=(2, 4))

net.kernel

<tf.Variable 'kernel:0' shape=(4, 10) dtype=float32, numpy=
array([[ 0.39824653, -0.56459695,  0.15540016, -0.25054374, -0.33711377,
        -0.49766102, -0.27644783, -0.4385618 ,  0.6163305 ,  0.40391672],
       [ 0.14267981,  0.04587489, -0.34641156,  0.41443396,  0.5877181 ,
        -0.58475596,  0.6121434 ,  0.3081839 , -0.29890376,  0.54232216],
       [-0.61803645,  0.31125462,  0.40059066, -0.54361427, -0.6469191 ,
         0.39140797, -0.53628796,  0.59679496,  0.41008878, -0.45868778],
       [ 0.07785475, -0.45004582, -0.42372018, -0.39478874,  0.08843976,
         0.09751028,  0.625625  ,  0.2192722 , -0.527462  ,  0.5550728 ]],
      dtype=float32)>
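Besides calling `build` explicitly, a layer is also built implicitly the first time it is called on data. The sketch below illustrates this under the assumption of a [2, 4] input; the flag `mismatch_rejected` is a hypothetical name used only to record that a later input with a different width is refused.

```python
import tensorflow as tf

net = tf.keras.layers.Dense(10)
built_before = net.built               # nothing has been created yet
weights_before = len(net.weights)

net(tf.zeros([2, 4]))                  # first call infers in_dim = 4 and builds the layer
print(net.built, net.kernel.shape, net.bias.shape)

# Once built for in_dim = 4, an input with 20 features no longer fits the kernel
mismatch_rejected = False
try:
    net(tf.zeros([2, 20]))
except Exception as e:
    mismatch_rejected = True
    print(type(e).__name__)
```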

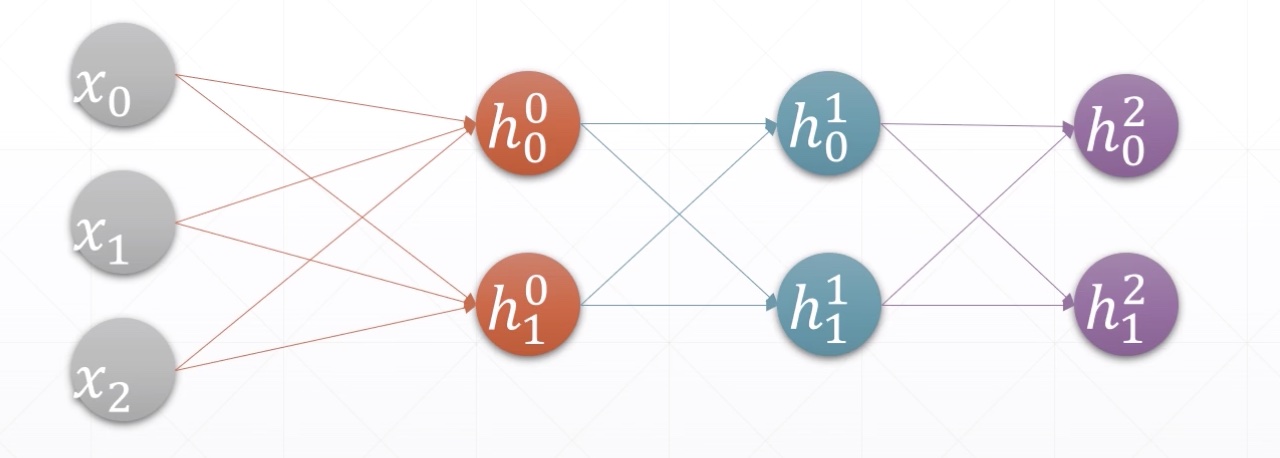

Multi-Layers

- keras.Sequential([layer1,layer2,layer3])

from tensorflow import keras

x = tf.random.normal([2, 3])

model = keras.Sequential([
    keras.layers.Dense(2, activation='relu'),
    keras.layers.Dense(2, activation='relu'),
    keras.layers.Dense(2)
])

model.build(input_shape=[None, 3])

model.summary()

# [w1, b1, w2, b2, w3, b3]
for p in model.trainable_variables:
    print(p.name, p.shape)

Model: "sequential_3"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

dense_13 (Dense) multiple 8

_________________________________________________________________

dense_14 (Dense) multiple 6

_________________________________________________________________

dense_15 (Dense) multiple 6

=================================================================

Total params: 20

Trainable params: 20

Non-trainable params: 0

_________________________________________________________________

dense_13/kernel:0 (3, 2)

dense_13/bias:0 (2,)

dense_14/kernel:0 (2, 2)

dense_14/bias:0 (2,)

dense_15/kernel:0 (2, 2)

dense_15/bias:0 (2,)
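The Param # column in the summary can be reproduced by hand: a dense layer has in_dim * out_dim kernel entries plus out_dim bias entries. The helper `dense_params` below is a hypothetical name, and the dimensions are read off the kernel shapes printed above.

```python
def dense_params(in_dim, out_dim):
    """Parameters in one dense layer: in_dim * out_dim kernel entries plus out_dim biases."""
    return in_dim * out_dim + out_dim

# (in_dim, out_dim) per layer, taken from the printed kernel shapes
layer_dims = [(3, 2), (2, 2), (2, 2)]

per_layer = [dense_params(i, o) for i, o in layer_dims]
total = sum(per_layer)

print(per_layer)  # [8, 6, 6]
print(total)      # 20
```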