使用mysql shell将MariaDB迁移到MySQL

2023-06-28 11:23 abce 阅读(217) 评论(0) 收藏 举报源库:mariadb 10.6

目标库:mysql 8.0.32

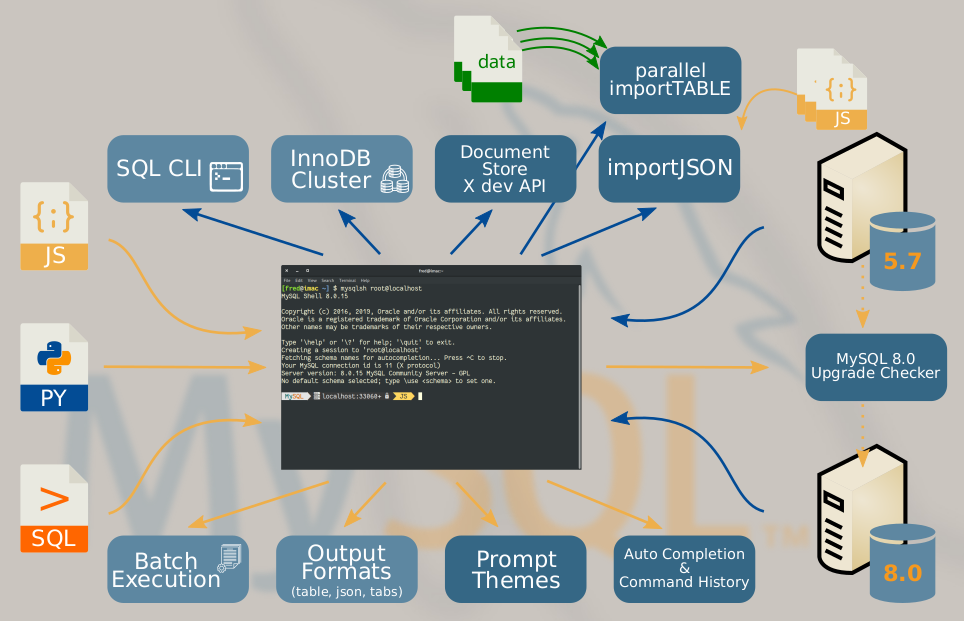

MySQL Shell Overview:

1.查看源库大小

SELECT sys.format_bytes(sum(data_length)) DATA,

sys.format_bytes(sum(index_length)) INDEXES,

sys.format_bytes(sum(data_length + index_length)) 'TOTAL SIZE'

FROM information_schema.TABLES ORDER BY data_length + index_length

;

如果是低版本:

SELECT

CONCAT( SUM( ROUND( DATA_LENGTH / (1024 / 1024) ,2)), 'MB' ) AS Data,

CONCAT( SUM( ROUND( INDEX_LENGTH / (1024 / 1024) ,2)), 'MB' ) AS Indexes,

CONCAT( SUM( ROUND( (DATA_LENGTH + INDEX_LENGTH) / (1024 * 1024) ,2)), 'MB' ) AS 'Total Size'

FROM information_schema.TABLES ORDER BY DATA_LENGTH + INDEX_LENGTH

;

2.查看源库使用了哪些存储引擎

SELECT count(*) as '# TABLES', sys.format_bytes(sum(data_length)) DATA,

sys.format_bytes(sum(index_length)) INDEXES,

sys.format_bytes(sum(data_length + index_length)) 'TOTAL SIZE',

engine `ENGINE` FROM information_schema.TABLES

WHERE TABLE_SCHEMA NOT IN ('sys','mysql', 'information_schema', 'performance_schema', 'mysql_innodb_cluster_metadata')

GROUP BY engine

ORDER BY engine;

如果是低版本:

SELECT

count(*) as '# TABLES',

CONCAT( SUM( ROUND( DATA_LENGTH / (1024 / 1024) ,2)), 'MB' ) AS Data,

CONCAT( SUM( ROUND( INDEX_LENGTH / (1024 / 1024) ,2)), 'MB' ) AS Indexes,

CONCAT( SUM( ROUND( (DATA_LENGTH + INDEX_LENGTH) / (1024 * 1024) ,2)), 'MB' ) AS 'Total Size',

engine `ENGINE` FROM information_schema.TABLES

WHERE TABLE_SCHEMA NOT IN ('sys','mysql', 'information_schema', 'performance_schema', 'mysql_innodb_cluster_metadata')

GROUP BY engine

ORDER BY engine

;

3.检查没有主键的表

SELECT tables.table_schema , tables.table_name , tables.engine

FROM information_schema.tables LEFT JOIN (

SELECT table_schema , table_name

FROM information_schema.statistics

GROUP BY table_schema, table_name, index_name

HAVING SUM(

case when non_unique = 0 and nullable != 'YES' then 1 else 0 end ) = count(*) ) puks

ON tables.table_schema = puks.table_schema

AND tables.table_name = puks.table_name

WHERE puks.table_name IS null

AND tables.table_type = 'BASE TABLE'

AND tables.TABLE_SCHEMA NOT IN ('mysql', 'performance_schema', 'information_schema', 'sys');

4.迁移数据

如果迁移整个实例,用dumpInstance。一个重要的选项是compatibility:

·强制使用innodb存储引擎(force_innodb)

·忽略主键缺失(ignore_missing_pks)

·自动添加主键等(create_invisible_pks)

导出整个实例:

JS>

util.dumpInstance("/data/dump", {threads: 8, compatibility: ["force_innodb"]})

导出部分库:

JS > util.dumpSchemas(['pm1','abce','abce_dev'],"/data/dump",{threads: 8, compatibility: ["force_innodb"]})

NOTE: Backup lock is not supported in MySQL 5.6 and DDL changes will not be blocked. The dump may fail with an error if schema changes are made while dumping.

Acquiring global read lock

Global read lock acquired

Initializing - done

WARNING: Failed to fetch value of @@GLOBAL.GTID_EXECUTED.

3 schemas will be dumped and within them 102 tables, 2 views.

Gathering information - done

All transactions have been started

Global read lock has been released

Writing global DDL files

Running data dump using 8 threads.

NOTE: Progress information uses estimated values and may not be accurate.

NOTE: Table statistics not available for `abce_dev`.`tab1`, chunking operation may be not optimal. Please consider running 'ANALYZE TABLE `abce_dev`.`tab1`;' first.

Writing schema metadata - done

Writing DDL - done

Writing table metadata - done

Starting data dump

115% (222.30M rows / ~192.98M rows), 5.78M rows/s, 481.51 MB/s uncompressed, 59.56 MB/s compressed

Dump duration: 00:00:36s

Total duration: 00:00:36s

Schemas dumped: 3

Tables dumped: 102

Uncompressed data size: 18.01 GB

Compressed data size: 2.14 GB

Compression ratio: 8.4

Rows written: 222295249

Bytes written: 2.14 GB

Average uncompressed throughput: 491.26 MB/s

Average compressed throughput: 58.46 MB/s

5.导入数据

JS>

\sql SET GLOBAL local_infile=1; --支持本地数据加载

\sql ALTER INSTANCE DISABLE INNODB REDO_LOG; --关闭redo日志,加速导入

util.loadDump("/data/dump", {threads: 8, ignoreVersion: true , skipBinlog: true, loadUsers: false})

\sql ALTER INSTANCE ENABLE INNODB REDO_LOG;

\sql SET GLOBAL local_infile=0;

浙公网安备 33010602011771号

浙公网安备 33010602011771号