Controlling a pygame Sprite with Gesture Recognition

Steps:

- Write a simple pygame sprite game (keyboard arrow-key control only)

- Solve the core OpenCV gesture recognition problem

- Connect the two parts

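For the pygame part, we only load a background, place a single turtle sprite on it, and move the sprite with the keyboard arrow keys. Here is the code.

Turtle sprite code (DemoSpirit.py):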

    import pygame


    class DemoSpirit(pygame.sprite.Sprite):
        """A turtle sprite that moves in 10-pixel steps and stays on screen."""

        def __init__(self, target, screen_size, position):
            pygame.sprite.Sprite.__init__(self)
            self.target_surface = target    # surface the sprite is drawn onto
            self.screen_size = screen_size  # (width, height) of the screen
            self.position = position        # top-left corner of the sprite
            # Forward slashes work on every platform, unlike "resources\\wugui.png".
            self.image = pygame.image.load("resources/wugui.png").convert_alpha()
            self.image = pygame.transform.smoothscale(self.image, (50, 50))

        def draw(self):
            self.target_surface.blit(self.image, self.position)

        def move_left(self):
            if self.position[0] - 10 > 0:
                self.position = (self.position[0] - 10, self.position[1])

        def move_right(self):
            if self.position[0] + 10 < self.screen_size[0]:
                self.position = (self.position[0] + 10, self.position[1])

        def move_up(self):
            if self.position[1] - 10 > 0:
                self.position = (self.position[0], self.position[1] - 10)

        def move_down(self):
            if self.position[1] + 10 < self.screen_size[1]:
                self.position = (self.position[0], self.position[1] + 10)
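
The four move_* methods above share one rule: take a 10-pixel step unless it would cross a screen edge. A minimal sketch of that rule as a pure function, which can be checked without opening a pygame window. `step_position` and its parameters are illustrative names, not part of the original class.

```python
def step_position(position, screen_size, dx, dy):
    """Return the position after a step, or the old one if the step leaves the screen."""
    x, y = position[0] + dx, position[1] + dy
    # Same bounds as the move_* methods: strictly inside (0, width) and (0, height).
    if 0 < x < screen_size[0] and 0 < y < screen_size[1]:
        return (x, y)
    return position


print(step_position((100, 100), (640, 480), -10, 0))  # (90, 100)
print(step_position((5, 100), (640, 480), -10, 0))    # (5, 100): blocked at the left edge
```

Because the position is the sprite's top-left corner, these bounds still let the 50-pixel image hang partly over the right and bottom edges; subtracting the image size from the upper bounds would be one way to tighten them.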

Main game loop code (game-main.py):

    import sys

    import pygame
    from pygame.locals import *

    from cvgame.DemoSpirit import DemoSpirit

    background_image_filename = 'resources/back.jpg'

    pygame.init()  # initialize pygame before touching the display

    screen_list = pygame.display.list_modes()
    # The original indexes screen_list[16] directly; clamping the index avoids
    # an IndexError on displays that report fewer modes.
    screen_size = screen_list[min(16, len(screen_list) - 1)]
    screen = pygame.display.set_mode(screen_size)

    background = pygame.image.load(background_image_filename).convert()
    background = pygame.transform.scale(background, screen_size)

    clock = pygame.time.Clock()

    pos = (int(screen_size[0] * 0.6), int(screen_size[1] * 0.3))
    s1 = DemoSpirit(screen, screen_size, pos)

    # Game loop
    while True:
        for event in pygame.event.get():
            if event.type == QUIT:  # window close button
                pygame.quit()
                sys.exit()
            if event.type == KEYDOWN:
                if event.key == K_UP:
                    s1.move_up()
                elif event.key == K_DOWN:
                    s1.move_down()
                elif event.key == K_LEFT:
                    s1.move_left()
                elif event.key == K_RIGHT:
                    s1.move_right()
                elif event.key == K_q:  # press q to quit
                    pygame.quit()
                    sys.exit()

        screen.blit(background, (0, 0))
        s1.draw()
        pygame.display.update()  # refresh the screen
        clock.tick(100)  # cap the loop at 100 frames per second

Result:

Next, we move on to gesture recognition.

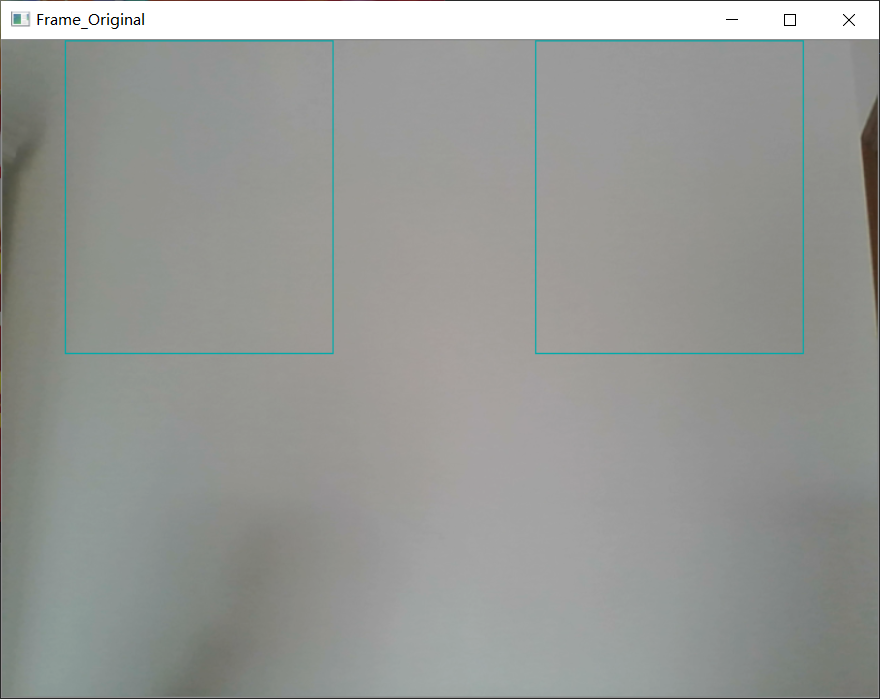

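We use a small trick to sidestep the hand tracking problem. As the figure below shows, the monitoring regions for the left and right hands are fixed in advance, so there is no need to track each hand's rect dynamically: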

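    # Fragment: cap (an opened cv2.VideoCapture), grdetect, fgbg_left and
    # fgbg_right are defined elsewhere in the module.
    while True:
        success, img = cap.read()
        if not success:  # stop when no frame can be read
            break
        frame = imutils.resize(img, width=700)
        # Copy the regions before drawing so the rectangle borders stay out of them.
        # The second slice stops at row 210 (its rectangle reaches 250); it must match training.
        rightHand = frame[0:250, 50:264].copy()
        leftHand = frame[0:210, 426:640].copy()
        cv2.rectangle(frame, (50, 0), (264, 250), (170, 170, 0))   # left-side region (feeds rightHand)
        cv2.rectangle(frame, (426, 0), (640, 250), (170, 170, 0))  # right-side region (feeds leftHand)
        cv2.imshow("Frame_Original", frame)  # show the annotated frame
        left_hand_event = grdetect(leftHand, fgbg_left, verbose=True)     # detect the left-hand gesture
        right_hand_event = grdetect(rightHand, fgbg_right, verbose=True)  # detect the right-hand gesture
        print('left hand: ', left_hand_event, 'right hand: ', right_hand_event)  # print the results
        cv2.waitKey(1)  # give the imshow window time to refresh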
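
Once each region yields an event, the last step from the list at the top, connecting the two parts, comes down to a lookup from event type to a move method. A minimal sketch, assuming a hypothetical mapping (left hand: '2' moves left, '3' moves right; right hand: '2' moves up, '3' moves down) and a stand-in `FakeSprite` in place of `DemoSpirit` so that it runs without a camera or a window. The mapping tables and the `dispatch` helper are assumptions, not the author's code.

```python
class FakeSprite:
    """Stand-in with the same move_* methods as DemoSpirit; it only records calls."""

    def __init__(self):
        self.calls = []

    def move_left(self):
        self.calls.append("left")

    def move_right(self):
        self.calls.append("right")

    def move_up(self):
        self.calls.append("up")

    def move_down(self):
        self.calls.append("down")


# Hypothetical gesture-to-action tables; any assignment would work.
LEFT_HAND_ACTIONS = {"2": "move_left", "3": "move_right"}
RIGHT_HAND_ACTIONS = {"2": "move_up", "3": "move_down"}


def dispatch(sprite, left_event, right_event):
    """Call the sprite method mapped to each hand's event type, if there is one."""
    for event, table in ((left_event, LEFT_HAND_ACTIONS),
                         (right_event, RIGHT_HAND_ACTIONS)):
        method = table.get(event.get("type"))
        if method is not None:
            getattr(sprite, method)()


sprite = FakeSprite()
dispatch(sprite, {"type": "2"}, {"type": "none"})
dispatch(sprite, {"type": "0"}, {"type": "3"})
print(sprite.calls)  # ['left', 'down']
```

In the real program, a call such as `dispatch(s1, left_hand_event, right_hand_event)` could take the place of the `print`, with the pygame drawing calls running in the same loop.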

Let's look at grdetect and fgbg_left/fgbg_right:

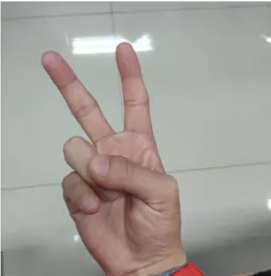

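As the figure above shows, the frame contains a large background besides the hand. The background has to be removed before the foreground (the hand) can be recognized. That is the job of fgbg_left and fgbg_right: they are the background subtractors for the left-hand and right-hand regions respectively.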

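    import cv2
    import imutils

    # Assumption: the original does not show where cap is created;
    # the default webcam is used here.
    cap = cv2.VideoCapture(0)

    fgbg_left = cv2.createBackgroundSubtractorMOG2()
    fgbg_right = cv2.createBackgroundSubtractorMOG2()


    def train_bg(fgbg, roi):
        fgbg.apply(roi)  # default learning rate: the model absorbs this frame


    def start():
        trainingBackgroundCount = 200  # number of frames used to learn the background
        while trainingBackgroundCount > 0:
            success, img = cap.read()
            if not success:  # stop when no frame can be read
                break
            frame = imutils.resize(img, width=700)
            cv2.imshow("Frame_Original", frame)
            rightHand = frame[0:250, 50:264]
            leftHand = frame[0:210, 426:640]
            train_bg(fgbg_left, leftHand)    # train on the left-hand region
            train_bg(fgbg_right, rightHand)  # train on the right-hand region
            cv2.waitKey(1)  # give the imshow window time to refresh
            trainingBackgroundCount -= 1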
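
The train-then-freeze pattern is the key idea here: learn the empty scene first, then stop learning so that a hand held still is not absorbed into the background. The sketch below illustrates that pattern with a deliberately simplified stand-in (a per-pixel running mean and a fixed threshold). It is not MOG2, which models each pixel as a mixture of Gaussians. `SimpleBackground`, `alpha`, and `threshold` are illustrative names and values.

```python
import numpy as np


class SimpleBackground:
    """Toy background model: per-pixel running mean plus a fixed threshold."""

    def __init__(self, alpha=0.1, threshold=30):
        self.alpha = alpha          # how quickly the mean follows new frames
        self.threshold = threshold  # minimum difference counted as foreground
        self.mean = None

    def apply(self, frame, learn=True):
        frame = frame.astype(np.float32)
        if self.mean is None:
            self.mean = frame.copy()
        mask = (np.abs(frame - self.mean) > self.threshold).astype(np.uint8) * 255
        if learn:  # learn=False plays the role of learningRate=0
            self.mean = (1 - self.alpha) * self.mean + self.alpha * frame
        return mask


model = SimpleBackground()
empty = np.full((4, 4), 50, dtype=np.uint8)
for _ in range(200):            # training phase: empty scene only
    model.apply(empty)

with_hand = empty.copy()
with_hand[1:3, 1:3] = 200       # a bright "hand" enters the region
mask = model.apply(with_hand, learn=False)
print(int(mask.sum() // 255))   # 4 foreground pixels
```

With `learn=False` the model is left untouched, so applying the same frame again yields the same mask however long the hand stays in place.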

Now for the core function:

    def grdetect(array, fgbg, verbose=False):
        event = {'type': 'none'}
        copy = array.copy()
        array = _remove_background(array, fgbg)  # remove the background with the trained subtractor
        thresh = _bodyskin_detetc(array)  # Gaussian blur + binarization
        contours = _get_contours(thresh.copy())  # contour points; several contours may be returned
        if not contours:  # empty region: max() would fail on an empty sequence
            return event
        largecont = max(contours, key=cv2.contourArea)  # keep the contour with the largest area
        hull = cv2.convexHull(largecont, returnPoints=False)  # convex hull, as indices into the contour
        defects = cv2.convexityDefects(largecont, hull)  # convexity defects (the valleys of the contour)
        if defects is not None:
            # Use the defect coordinates and the law of cosines to count the acute angles
            copy, ndefects = _get_defects_count(copy, largecont, defects, verbose=verbose)
            # Map the number of acute angles to a gesture; some error is expected
            if ndefects == 0:
                event['type'] = '0'
            elif ndefects == 1:
                event['type'] = '2'
            elif ndefects == 2:
                event['type'] = '3'
            elif ndefects == 3:
                event['type'] = '4'
            elif ndefects == 4:
                event['type'] = '5'
        return event

What remains are the support functions used above.

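    import math

    import cv2
    import numpy as np


    def _remove_background(frame, fgbg):
        # learningRate=0 freezes the background model: it is applied but not updated
        fgmask = fgbg.apply(frame, learningRate=0)
        kernel = np.ones((3, 3), np.uint8)
        fgmask = cv2.erode(fgmask, kernel, iterations=1)  # erode away small specks of noise
        res = cv2.bitwise_and(frame, frame, mask=fgmask)  # keep only the foreground pixels
        return res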

    def _bodyskin_detetc(frame):
        # Skin detection: Cr channel of YCrCb + Otsu thresholding
        ycrcb = cv2.cvtColor(frame, cv2.COLOR_BGR2YCrCb)  # convert to YCrCb
        (_, cr, _) = cv2.split(ycrcb)  # keep the Cr channel
        cr1 = cv2.GaussianBlur(cr, (5, 5), 0)  # Gaussian blur
        _, skin = cv2.threshold(cr1, 0, 255, cv2.THRESH_BINARY + cv2.THRESH_OTSU)  # Otsu binarization
        return skin

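    # Find all contours in the binary image
    def _get_contours(array):
        # findContours returns (contours, hierarchy) in OpenCV 2.x/4.x and
        # (image, contours, hierarchy) in 3.x; [-2] selects the contours in both cases.
        contours = cv2.findContours(array, cv2.RETR_TREE, cv2.CHAIN_APPROX_NONE)[-2]
        return contours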

    _COLOR_RED = (0, 0, 255)


    def _get_eucledian_distance(beg, end):  # Euclidean distance between two points
        # Use the coordinates directly instead of parsing str(tuple), which
        # breaks when the values are NumPy integers with a different repr.
        (x1, y1), (x2, y2) = beg, end
        return math.hypot(float(x1) - float(x2), float(y1) - float(y2))

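    # From the (start, end, far) points of each convexity defect, compute the angle
    # between two fingers with the law of cosines. A gap between two fingers gives an
    # acute angle, so the number of acute angles identifies the gesture.
    def _get_defects_count(array, contour, defects, verbose=False):
        ndefects = 0
        for i in range(defects.shape[0]):
            s, e, f, _ = defects[i, 0]
            # Plain Python ints, which the cv2 drawing functions expect
            beg = tuple(int(v) for v in contour[s][0])
            end = tuple(int(v) for v in contour[e][0])
            far = tuple(int(v) for v in contour[f][0])
            a = _get_eucledian_distance(beg, end)
            b = _get_eucledian_distance(beg, far)
            c = _get_eucledian_distance(end, far)
            if b == 0 or c == 0:  # degenerate triangle: avoid dividing by zero
                continue
            cos_angle = (b ** 2 + c ** 2 - a ** 2) / (2 * b * c)
            angle = math.acos(max(-1.0, min(1.0, cos_angle)))  # clamp against rounding error
            if angle <= math.pi / 2:  # at most 90 degrees
                ndefects = ndefects + 1
                if verbose:
                    cv2.circle(array, far, 3, _COLOR_RED, -1)
            if verbose:
                cv2.line(array, beg, end, _COLOR_RED, 1)
        return array, ndefects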
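
To see why an acute angle signals a gap between two fingers, the law of cosines can be checked on hand-picked points. With a = |beg - end|, b = |beg - far| and c = |end - far|, the angle at the far point is arccos((b² + c² - a²) / (2bc)). A minimal sketch with made-up coordinates; `angle_at_far` is an illustrative helper, not part of the original module.

```python
import math


def angle_at_far(beg, end, far):
    """Angle in radians at `far` in the triangle (beg, end, far)."""
    a = math.dist(beg, end)
    b = math.dist(beg, far)
    c = math.dist(end, far)
    cos_angle = (b ** 2 + c ** 2 - a ** 2) / (2 * b * c)
    return math.acos(max(-1.0, min(1.0, cos_angle)))


# Two fingertips close together above a deep valley: a narrow, acute angle.
narrow = angle_at_far((40, 0), (60, 0), (50, 80))
# Two contour points far apart around a shallow dent: an obtuse angle.
wide = angle_at_far((0, 0), (100, 0), (50, 10))

print(narrow <= math.pi / 2)  # True: counted as a gap between fingers
print(wide <= math.pi / 2)    # False: ignored
```

Shallow dents along the palm or wrist produce wide angles and are filtered out, which is why only the acute angles are counted.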

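Self-reflection drives progress; vision determines the future.

Hold on to lofty ideals.

Work hard for your family's happiness.

For business cooperation, see: https://www.magicube.ai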
