Scraping the Chinese University Rankings

The code is as follows:

1 # -*- coding: utf-8 -*-

2 '''

3 获取中国大学的排名

4 @author: hjx

5 '''

6 import requests

7 from bs4 import BeautifulSoup

8 import pandas

9 # 1. 获取网页内容

10 def getHTMLText(url):

11 try:

12 r = requests.get(url, timeout = 30)

13 r.raise_for_status()

14 r.encoding = 'utf-8'

15 return r.text

16 except Exception as e:

17 print("Error:", e)

18 return ""

19

20 # 2. 分析网页内容并提取有用数据

21 def fillTabelList(soup): # 获取表格的数据

22 tabel_list = [] # 存储整个表格数据

23 Tr = soup.find_all('tr')

24 for tr in Tr:

25 Td = tr.find_all('td')

26 if len(Td) == 0:

27 continue

28 tr_list = [] # 存储一行的数据

29 for td in Td:

30 tr_list.append(td.string)

31 tabel_list.append(tr_list)

32 return tabel_list

33

34 # 3. 可视化展示数据

35 def PrintTableList(tabel_list, num):

36 # 输出前num行数据

37 print("{1:^2}{2:{0}^10}{3:{0}^5}{4:{0}^5}{5:{0}^8}".format(chr(12288), "排名", "学校名称", "省市", "总分", "生涯质量"))

38 for i in range(num):

39 text = tabel_list[i]

40 print("{1:{0}^2}{2:{0}^10}{3:{0}^5}{4:{0}^8}{5:{0}^10}".format(chr(12288), *text))

41

42 # 4. 将数据存储为csv文件

43 def saveAsCsv(filename, tabel_list):

44 FormData = pandas.DataFrame(tabel_list)

45 FormData.columns = ["排名", "学校名称", "省市", "总分", "生涯质量", "培养结果", "科研规模", "科研质量", "顶尖成果", "顶尖人才", "科技服务", "产学研合作", "成果转化"]

46 FormData.to_csv(filename, encoding='utf-8', index=False)

47

48 if __name__ == "__main__":

49 url = "http://www.zuihaodaxue.cn/zuihaodaxuepaiming2016.html"

50 html = getHTMLText(url)

51 soup = BeautifulSoup(html, features="html.parser")

52 data = fillTabelList(soup)

53 #print(data)

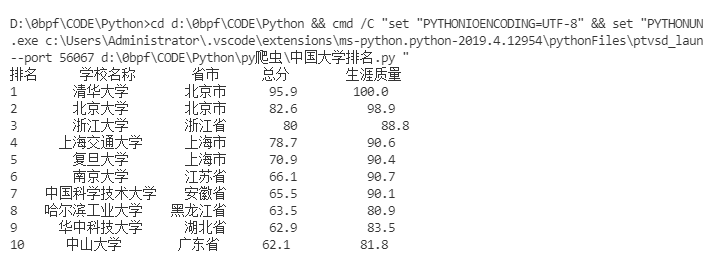

54 PrintTableList(data, 10) # 输出前10行数据

55 saveAsCsv("D:\\University_Rank.csv", data)

运行结果:
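The `chr(12288)` passed to `format` in `PrintTableList` is the full-width (ideographic) space, U+3000. Using it as the fill character tends to keep columns of Chinese text aligned, because an ASCII space is usually only about half as wide as a CJK character in a monospaced font. Below is a minimal sketch of that idea on its own; the helper name `pad_cjk` and the sample names are assumptions made for illustration and are not part of the original script.

```python
FULLWIDTH_SPACE = chr(12288)  # U+3000, the ideographic (full-width) space


def pad_cjk(text, width):
    """Center text in a field of `width` characters, filled with full-width spaces.

    Hypothetical helper for illustration; it uses the same nested format
    spec as the original script ({0} supplies the fill character).
    """
    return "{1:{0}^{2}}".format(FULLWIDTH_SPACE, text, width)


if __name__ == "__main__":
    # Illustrative names only; each line is padded to 10 characters
    for name in ["清华大学", "北京大学", "上海交通大学"]:
        print("|" + pad_cjk(name, 10) + "|")
```

Whether the columns line up exactly still depends on the terminal font, so treat this as a display aid rather than a guarantee.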

Saving the scraped rankings:

# -*- coding: utf-8 -*-
"""
Created on Wed May 29 11:23:44 2019
@author: history
"""
import requests
import pandas
from bs4 import BeautifulSoup

allUniv = []  # every scraped row, one list per university

def getHTMLText(url):
    try:
        r = requests.get(url, timeout=30)
        r.raise_for_status()
        r.encoding = 'utf-8'
        return r.text
    except requests.RequestException:
        return ""

def fillUnivList(soup):
    data = soup.find_all('tr')
    for tr in data:
        ltd = tr.find_all('td')
        if len(ltd) == 0:  # header rows contain no <td>, skip them
            continue
        singleUniv = []
        for td in ltd:
            singleUniv.append(td.string)
        allUniv.append(singleUniv)

def printUnivList(num):
    # u[6] is the research-scale (科研规模) column
    print("{:^4}{:^10}{:^5}{:^8}{:^10}".format("排名", "学校名称", "省市", "总分", "科研规模"))
    for u in allUniv[:num]:
        print("{:^4}{:^10}{:^5}{:^8}{:^10}".format(u[0], u[1], u[2], u[3], u[6]))

def saveAsCsv(filename, rows):
    # Write the rows to a CSV file, as saveAsCsv does in the first script
    pandas.DataFrame(rows).to_csv(filename, encoding='utf-8', index=False)

def main():
    url = 'http://www.zuihaodaxue.cn/zuihaodaxuepaiming2016.html'
    html = getHTMLText(url)
    soup = BeautifulSoup(html, "html.parser")
    fillUnivList(soup)
    printUnivList(10)
    saveAsCsv("D:/Python文件/工作簿1.xlsx", allUniv)

if __name__ == "__main__":
    main()
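The row extraction in `fillUnivList` (skip rows without `<td>`, then collect the text of each cell) can also be sketched with only the standard library, which makes it easy to try offline. This swaps BeautifulSoup for `html.parser.HTMLParser`; the class name `TableRowParser`, the function `parse_rows`, and the sample HTML are all assumptions made up for this illustration and do not reproduce the real page.

```python
from html.parser import HTMLParser


class TableRowParser(HTMLParser):
    """Collect the text of every <td> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []     # finished rows, one list of cell strings each
        self._row = None   # cells of the row being read, or None outside <tr>
        self._cell = None  # text fragments of the cell being read, or None

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag == "td" and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        # Text nested inside other tags within a <td> is collected too
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag == "td" and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            if self._row:  # rows with no <td> (header rows) are skipped
                self.rows.append(self._row)
            self._row = None


def parse_rows(html):
    parser = TableRowParser()
    parser.feed(html)
    parser.close()
    return parser.rows


# Made-up sample markup for illustration only
SAMPLE = """
<table>
  <tr><th>排名</th><th>学校名称</th><th>省市</th></tr>
  <tr><td>1</td><td><div align="left">清华大学</div></td><td>北京市</td></tr>
  <tr><td>2</td><td><div align="left">北京大学</div></td><td>北京市</td></tr>
</table>
"""

if __name__ == "__main__":
    for row in parse_rows(SAMPLE):
        print(row)
```

Unlike `td.string`, which is `None` when a cell has more than one child node, this sketch always yields a string for each cell.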

Processing the scraped data:

# -*- coding: utf-8 -*-
"""
Created on Wed May 29 11:30:32 2019
@author: history
"""
import ast
import sqlite3
from pandas import DataFrame

class SQL_method:
    '''
    function: basic operations on a SQLite database
    '''
    def __init__(self, dbName, tableName, data, columns, COLUMNS, Read_All=True):
        '''
        function: initialise the parameters
        dbName: database file name
        tableName: name of the table in the database
        data: processed rows read from the CSV file
        columns: column definitions used to create the table
        COLUMNS: header row used for formatted output
        Read_All: whether to read back all rows after the table is created
        '''
        self.dbName = dbName
        self.tableName = tableName
        self.data = data
        self.columns = columns
        self.COLUMNS = COLUMNS
        self.Read_All = Read_All

    def creatTable(self):
        connect = sqlite3.connect(self.dbName)
        connect.execute("CREATE TABLE {}({})".format(self.tableName, self.columns))
        connect.commit()
        connect.close()

    def destroyTable(self):
        connect = sqlite3.connect(self.dbName)
        connect.execute("DROP TABLE {}".format(self.tableName))
        connect.commit()
        connect.close()

    def insertDataS(self):
        connect = sqlite3.connect(self.dbName)
        # One placeholder per column (13 in total)
        connect.executemany("INSERT INTO {} VALUES(?,?,?,?,?,?,?,?,?,?,?,?,?)".format(self.tableName), self.data)
        connect.commit()
        connect.close()

    def getAllData(self):
        connect = sqlite3.connect(self.dbName)
        cursor = connect.cursor()
        cursor.execute("SELECT * FROM {}".format(self.tableName))
        dataList = cursor.fetchall()
        connect.close()
        return dataList

    def searchData(self, conditions, IfPrint=True):
        # conditions is inserted into the SQL text verbatim, so only pass trusted strings
        connect = sqlite3.connect(self.dbName)
        cursor = connect.cursor()
        cursor.execute("SELECT * FROM {} WHERE {}".format(self.tableName, conditions))
        data = cursor.fetchall()
        cursor.close()
        connect.close()
        if IfPrint:
            self.printData(data)
        return data

    def deleteData(self, conditions):
        connect = sqlite3.connect(self.dbName)
        connect.execute("DELETE FROM {} WHERE {}".format(self.tableName, conditions))
        connect.commit()
        connect.close()

    def printData(self, data):
        # chr(12288) is the full-width space, used as fill so Chinese columns line up
        print("{1:{0}^3}{2:{0}<11}{3:{0}<4}{4:{0}<4}{5:{0}<5}{6:{0}<5}{7:{0}^5}{8:{0}^5}{9:{0}^5}{10:{0}^5}{11:{0}^5}{12:{0}^6}{13:{0}^5}".format(chr(12288), *self.COLUMNS))
        for row in data:
            print("{1:{0}<4.0f}{2:{0}<10}{3:{0}<5}{4:{0}<6}{5:{0}<7}{6:{0}<8}{7:{0}<7.0f}{8:{0}<8}{9:{0}<7.0f}{10:{0}<6.0f}{11:{0}<9.0f}{12:{0}<6.0f}{13:{0}<6.0f}".format(chr(12288), *row))

    def run(self):
        try:
            self.creatTable()
            print(">>> Database created successfully")
            self.insertDataS()
            print(">>> Table created and data inserted successfully!")
        except sqlite3.Error as e:
            # Typically raised because the table already exists
            print(">>> Database already created!", e)
        if self.Read_All:
            self.printData(self.getAllData())

def get_data(fileName):
    data = []
    with open(fileName, 'r', encoding='utf-8') as f:
        for line in f:
            line = line.replace('\n', '')
            line = line.replace('%', '')
            line = line.split(',')
            for i in range(len(line)):
                if line[i] == '':
                    line[i] = '0'
                try:
                    # Convert numeric fields; ast.literal_eval only accepts literals,
                    # unlike eval, so names and provinces simply stay strings
                    line[i] = ast.literal_eval(line[i])
                except (ValueError, SyntaxError):
                    continue
            data.append(tuple(line))
    EN_columns = ("Rank real, University text, Province text, Grade real, SourseQuality real, "
                  "TrainingResult real, ResearchScale real, ReserchQuality real, TopResult real, "
                  "TopTalent real, TechnologyService real, Cooperation real, TransformationResults real")
    CH_columns = ["排名", "学校名称", "省市", "总分", "生源质量", "培养结果(%)", "科研规模", "科研质量", "顶尖成果", "顶尖人才", "科技服务", "产学研合作", "成果转化"]
    return data[1:], EN_columns, CH_columns  # data[0] is the CSV header row

if __name__ == "__main__":
    fileName = "D:/Python文件/工作簿1.xlsx.csv"
    data, EN_columns, CH_columns = get_data(fileName)
    dbName = "university.db"
    tableName = "university"
    SQL = SQL_method(dbName, tableName, data, EN_columns, CH_columns, False)
    SQL.run()

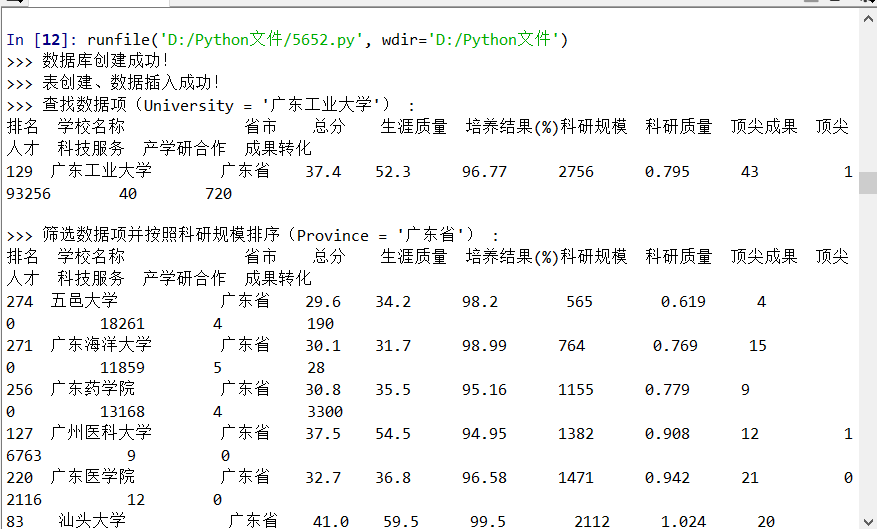

    print(">>> Look up a record (University = '广东工业大学'):")
    SQL.searchData("University = '广东工业大学'", True)
    print("\n>>> Filter records and sort by research scale (Province = '广东省'):")
    SQL.searchData("Province = '广东省' ORDER BY ResearchScale", True)

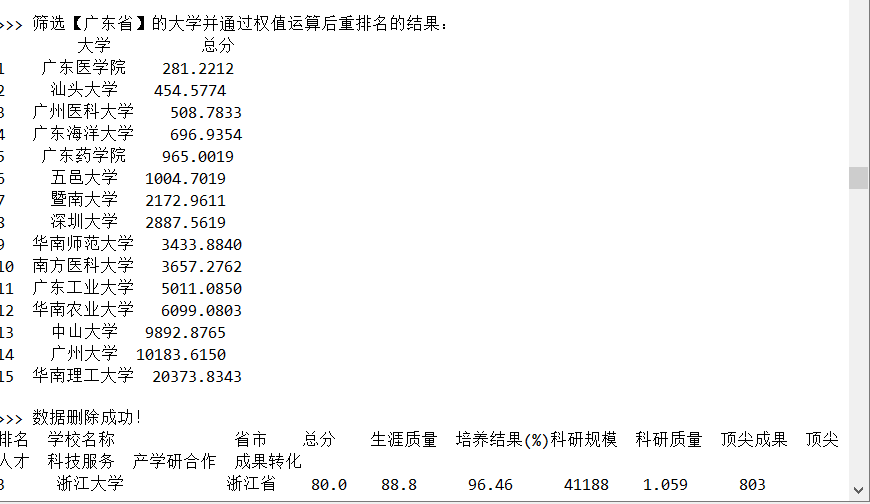

    # One weight per indicator column (row[4] .. row[12]); the weights sum to 1
    Weight = [0.3, 0.15, 0.1, 0.1, 0.1, 0.1, 0.05, 0.05, 0.05]
    sample = SQL.searchData("Province = '广东省'", False)
    value = []
    for row in sample:
        total = 0  # named total so the built-in sum() is not shadowed
        for j in range(len(Weight)):
            total += row[4+j] * Weight[j]
        value.append(total)
    university = [row[1] for row in sample]
    uv = [[university[i], value[i]] for i in range(len(university))]
    df = DataFrame(uv, columns=["大学", "总分"])
    df = df.sort_values('总分', ascending=False)  # highest weighted score gets rank 1
    df.index = list(range(1, len(uv)+1))
    print("\n>>> Universities in 广东省 re-ranked by weighted score:\n", df)
    SQL.deleteData("Province = '北京市'")
    SQL.deleteData("Province = '广东省'")
    SQL.deleteData("Province = '山东省'")
    SQL.deleteData("Province = '山西省'")
    SQL.deleteData("Province = '江西省'")
    SQL.deleteData("Province = '河南省'")
    print("\n>>> Data deleted successfully!")
    SQL.printData(SQL.getAllData())
    SQL.destroyTable()
    print(">>> Table dropped successfully!")
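The province filter and weighted re-ranking in the script above can be tried without the scraped CSV. The sketch below uses an in-memory SQLite database and binds the province with a `?` placeholder instead of formatting it into the SQL text. The weights are copied from the script; the table layout (two text columns plus nine indicator columns `i1` to `i9`), the function names, and the rows are assumptions invented for this example, not the real ranking data.

```python
import sqlite3

# Weights copied from the script above, one per indicator column
WEIGHTS = [0.3, 0.15, 0.1, 0.1, 0.1, 0.1, 0.05, 0.05, 0.05]


def build_demo_db():
    """Create an in-memory table with made-up rows (illustration only)."""
    conn = sqlite3.connect(":memory:")
    indicator_cols = ", ".join("i{} real".format(k) for k in range(1, 10))
    conn.execute(
        "CREATE TABLE university (University text, Province text, {})".format(indicator_cols)
    )
    rows = [
        ("A", "X", 10, 10, 10, 10, 10, 10, 10, 10, 10),
        ("B", "X", 20, 0, 0, 0, 0, 0, 0, 0, 0),
        ("C", "Y", 99, 99, 99, 99, 99, 99, 99, 99, 99),
    ]
    placeholders = ",".join("?" * 11)  # one "?" per column
    conn.executemany("INSERT INTO university VALUES ({})".format(placeholders), rows)
    return conn


def weighted_score(indicators, weights=WEIGHTS):
    """Sum of indicator * weight over the nine indicator columns."""
    return sum(v * w for v, w in zip(indicators, weights))


def rerank(conn, province):
    """Return (name, score) pairs for one province, highest score first."""
    # The province value is bound by sqlite3, not pasted into the SQL string
    rows = conn.execute(
        "SELECT * FROM university WHERE Province = ?", (province,)
    ).fetchall()
    scored = [(row[0], weighted_score(row[2:])) for row in rows]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    conn = build_demo_db()
    for rank, (name, score) in enumerate(rerank(conn, "X"), start=1):
        print(rank, name, round(score, 2))
    conn.close()
```

Placeholders only work for values; table and column names still have to be written into the SQL text, which is why the original class formats `tableName` directly.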