1. Cluster installation

https://www.cnblogs.com/a120608yby/p/17295938.html

2. Create the business and management networks

https://www.cnblogs.com/a120608yby/p/17140953.html

3. Create the Ceph network

# Configure the multi-NIC bond for Ceph

# vim /etc/network/interfaces

...

auto bond1

iface bond1 inet manual

ovs_bridge vmbr1

ovs_type OVSBond

ovs_bonds enp2s0f2 enp2s0f3

ovs_options bond_mode=balance-tcp lacp=active other_config:lacp-time=fast

...

# Create the Ceph network

# vim /etc/network/interfaces

...

auto ceph

iface ceph inet static

address 10.10.10.101/24

ovs_type OVSIntPort

ovs_bridge vmbr1

ovs_options tag=111

...

# Create the OVS bridge for the Ceph network

# vim /etc/network/interfaces

auto vmbr1

iface vmbr1 inet manual

ovs_type OVSBridge

ovs_ports bond1 ceph

# Final configuration

# vim /etc/network/interfaces

# network interface settings; autogenerated

# Please do NOT modify this file directly, unless you know what

# you're doing.

#

# If you want to manage parts of the network configuration manually,

# please utilize the 'source' or 'source-directory' directives to do

# so.

# PVE will preserve these directives, but will NOT read its network

# configuration from sourced files, so do not attempt to move any of

# the PVE managed interfaces into external files!

auto lo

iface lo inet loopback

auto enp2s0f0

iface enp2s0f0 inet manual

auto enp2s0f1

iface enp2s0f1 inet manual

auto enp2s0f2

iface enp2s0f2 inet manual

auto enp2s0f3

iface enp2s0f3 inet manual

iface enp11s0 inet manual

iface enp12s0 inet manual

auto mgt

iface mgt inet static

address 192.168.0.101/24

gateway 192.168.0.254

ovs_type OVSIntPort

ovs_bridge vmbr0

ovs_options tag=110

#mgt

auto ceph

iface ceph inet static

address 10.10.10.101/24

ovs_type OVSIntPort

ovs_bridge vmbr1

ovs_options tag=111

#ceph

auto bond0

iface bond0 inet manual

ovs_bridge vmbr0

ovs_type OVSBond

ovs_bonds enp2s0f0 enp2s0f1

ovs_options bond_mode=balance-tcp lacp=active other_config:lacp-time=fast

auto bond1

iface bond1 inet manual

ovs_bridge vmbr1

ovs_type OVSBond

ovs_bonds enp2s0f2 enp2s0f3

ovs_options bond_mode=balance-tcp lacp=active other_config:lacp-time=fast

#ceph

auto vmbr0

iface vmbr0 inet manual

ovs_type OVSBridge

ovs_ports bond0 mgt

auto vmbr1

iface vmbr1 inet manual

ovs_type OVSBridge

ovs_ports bond1 ceph
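In this layout every OVS port names its bridge with `ovs_bridge`, and every bridge lists its members with `ovs_ports`; the two must agree or the port ends up on the wrong bridge. The following is a minimal sketch of a consistency check, not a Proxmox tool: the function names `parse_interfaces` and `check_ovs` are made up for illustration, and the parser only understands the simple `iface` stanzas used in this post. The sample text is a shortened copy of the Ceph part of the final configuration.

```python
def parse_interfaces(text):
    """Return {iface_name: {option: value}} for each `iface` stanza."""
    stanzas = {}
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        parts = line.split()
        if parts[0] == "iface":
            current = stanzas.setdefault(parts[1], {})
        elif parts[0] in ("auto", "allow-hotplug", "source", "source-directory"):
            current = None  # these lines do not belong to a stanza
        elif current is not None:
            current[parts[0]] = " ".join(parts[1:])
    return stanzas


def check_ovs(stanzas):
    """List mismatches between ovs_bridge (on ports) and ovs_ports (on bridges)."""
    problems = []
    # Every port must be listed by the bridge it points to.
    for name, opts in stanzas.items():
        bridge = opts.get("ovs_bridge")
        if bridge is None:
            continue
        members = stanzas.get(bridge, {}).get("ovs_ports", "").split()
        if name not in members:
            problems.append(f"{name}: bridge {bridge} does not list it in ovs_ports")
    # Every listed member must point back to the bridge.
    for name, opts in stanzas.items():
        if opts.get("ovs_type") != "OVSBridge":
            continue
        for port in opts.get("ovs_ports", "").split():
            if stanzas.get(port, {}).get("ovs_bridge") != name:
                problems.append(f"{name}: ovs_ports lists {port}, whose ovs_bridge is not {name}")
    return problems


SAMPLE = """\
auto ceph
iface ceph inet static
    address 10.10.10.101/24
    ovs_type OVSIntPort
    ovs_bridge vmbr1
    ovs_options tag=111

auto bond1
iface bond1 inet manual
    ovs_bridge vmbr1
    ovs_type OVSBond
    ovs_bonds enp2s0f2 enp2s0f3

auto vmbr1
iface vmbr1 inet manual
    ovs_type OVSBridge
    ovs_ports bond1 ceph
"""

for message in check_ovs(parse_interfaces(SAMPLE)) or ["no mismatches found"]:
    print(message)
```

To check a real node, the contents of `/etc/network/interfaces` could be read and passed to `parse_interfaces` in place of `SAMPLE`.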

4. Huawei switch configuration

interface Eth-Trunk1

description link_to_pve01

port link-type trunk

undo port trunk allow-pass vlan 1

port trunk allow-pass vlan 2 to 4094

mode lacp

interface Eth-Trunk2

description link_to_pve01_ceph

port link-type access

port default vlan 111

mode lacp

interface GigabitEthernet0/0/7

description link_to_pve01

eth-trunk 1

interface GigabitEthernet0/0/8

description link_to_pve01

eth-trunk 1

interface GigabitEthernet0/0/9

description link_to_pve01_ceph

eth-trunk 2

interface GigabitEthernet0/0/10

description link_to_pve01_ceph

eth-trunk 2

5. Configure the Ceph package repository

# vim /etc/apt/sources.list.d/ceph.list

deb https://mirrors.ustc.edu.cn/proxmox/debian/ceph-quincy bookworm no-subscription

deb http://download.proxmox.com/debian/ceph-reef bookworm no-subscription

6. Install Ceph

pveceph install

7. Initialize the Ceph configuration

pveceph init --network 10.10.10.0/24
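The subnet passed to `--network` should contain the `ceph` interface address of every node. A minimal sketch of that check with Python's standard `ipaddress` module follows. Only `10.10.10.101/24` comes from the configuration above; the `.102` and `.103` addresses for the other two nodes are assumptions made for illustration, and `outside_network` is a hypothetical helper name.

```python
import ipaddress


def outside_network(cidr, addresses):
    """Return the interface addresses that are not inside the given network."""
    network = ipaddress.ip_network(cidr)
    return [a for a in addresses if ipaddress.ip_interface(a).ip not in network]


# First address is from this post; the other two are assumed.
ceph_addresses = ["10.10.10.101/24", "10.10.10.102/24", "10.10.10.103/24"]

strays = outside_network("10.10.10.0/24", ceph_addresses)
print("all nodes inside the Ceph network" if not strays else f"outside: {strays}")
```

An address from the management network, such as `192.168.0.101/24`, would be reported as outside.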

8. Create Monitors (on all three nodes)

pveceph mon create

9. Create Managers (on the other two nodes)

pveceph mgr create

10. Create OSDs (on all three nodes)

pveceph osd create /dev/sdb

pveceph osd create /dev/sdc

11. Create a pool (on one node only)

pveceph pool create vm --add_storages

12. Configure the PG Autoscaler

ceph mgr module enable pg_autoscaler

13. Create CephFS (on all three nodes)

# Create the MDS service

pveceph mds create

# Add the following setting

# cat /etc/pve/ceph.conf

[global]

...

mds standby replay = true

...

# Create the CephFS

pveceph fs create --pg_num 128 --add-storage

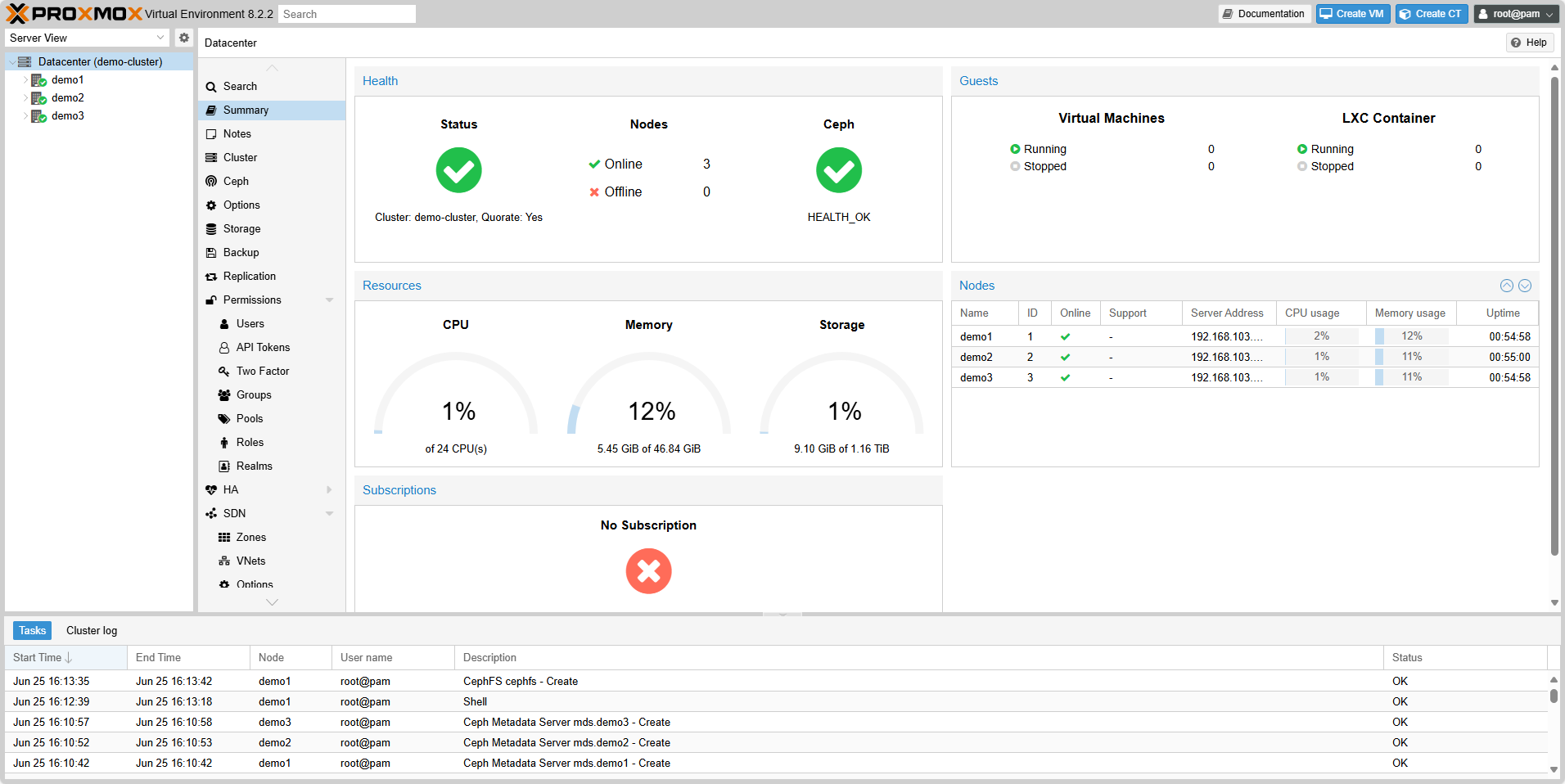

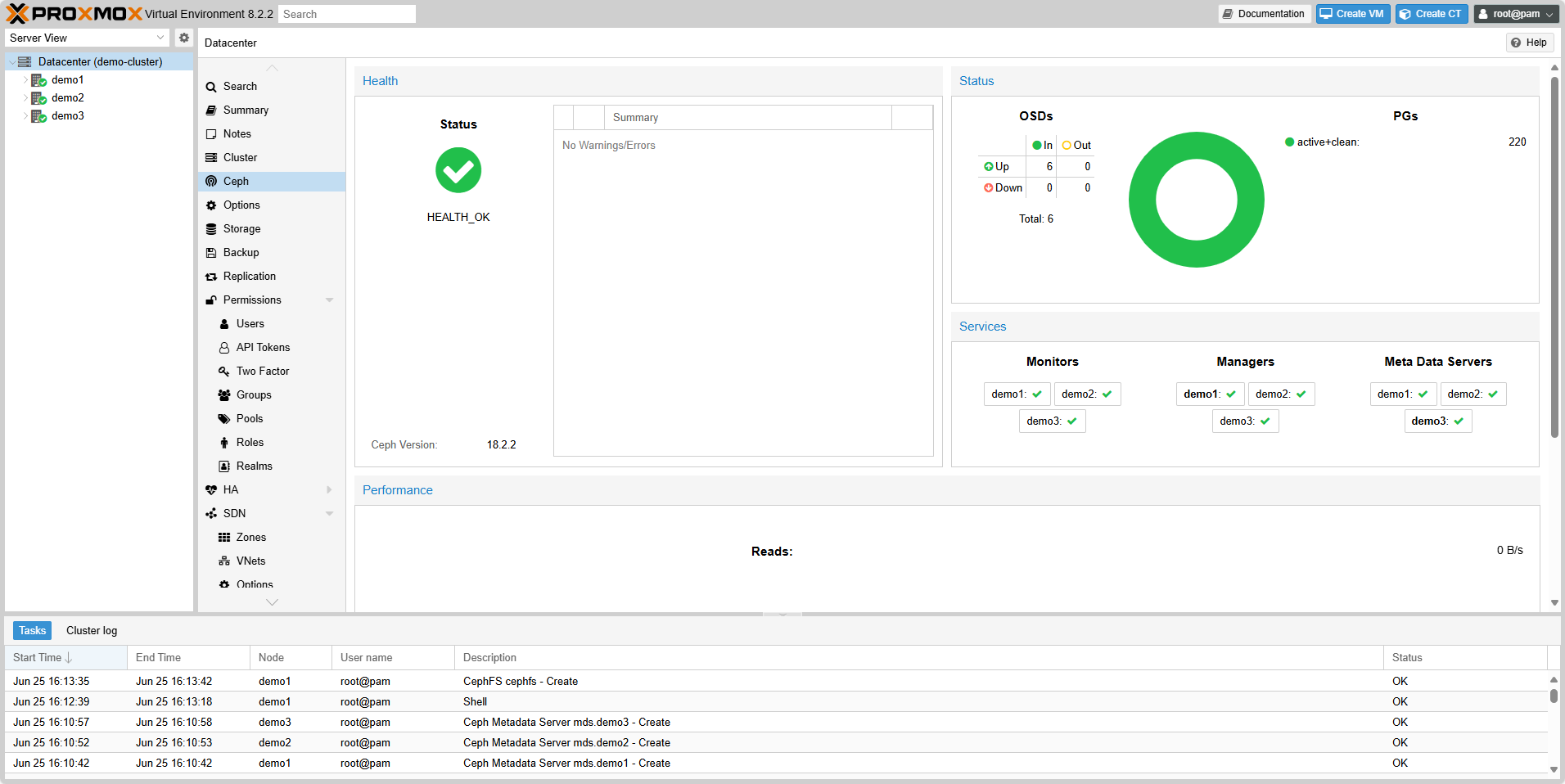

14. Cluster dashboard

15. Ceph performance test

# Create a test pool

pveceph pool create test

# Performance test

# rados bench -p test 10 write --no-cleanup

hints = 1

Maintaining 16 concurrent writes of 4194304 bytes to objects of size 4194304 for up to 10 seconds or 0 objects

Object prefix: benchmark_data_demo1_31123

sec Cur ops started finished avg MB/s cur MB/s last lat(s) avg lat(s)

0 0 0 0 0 0 - 0

1 16 18 2 7.99946 8 0.732808 0.73241

2 16 37 21 41.9949 76 1.26651 1.06485

3 16 53 37 49.3263 64 1.61704 1.06462

4 16 95 79 78.9883 168 0.0773377 0.757924

5 16 115 99 79.1883 80 0.0567698 0.679525

6 16 160 144 95.9856 180 0.413174 0.647237

7 16 219 203 115.982 236 0.248087 0.525356

8 16 300 284 141.978 324 0.241769 0.441897

9 16 368 352 156.42 272 0.127854 0.403269

10 16 440 424 169.574 288 0.131217 0.373617

Total time run: 10.223

Total writes made: 440

Write size: 4194304

Object size: 4194304

Bandwidth (MB/sec): 172.161

Stddev Bandwidth: 108.995

Max bandwidth (MB/sec): 324

Min bandwidth (MB/sec): 8

Average IOPS: 43

Stddev IOPS: 27.2486

Max IOPS: 81

Min IOPS: 2

Average Latency(s): 0.36814

Stddev Latency(s): 0.368086

Max latency(s): 2.25004

Min latency(s): 0.0247644
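The summary figures can be cross-checked from the totals: bandwidth is the number of completed writes times the object size divided by the run time, and average IOPS is the number of writes divided by the run time. The sketch below redoes that arithmetic with the numbers from this run. `bench_summary` is a hypothetical helper, and it assumes "MB" in the output means 1024 × 1024 bytes, which is what makes the totals above add up.

```python
def bench_summary(ops, object_bytes, seconds):
    """Return (bandwidth in MB/s, operations per second) for a bench run."""
    megabytes = ops * object_bytes / (1024 * 1024)
    return megabytes / seconds, ops / seconds


# Totals taken from the write run above.
bandwidth, iops = bench_summary(ops=440, object_bytes=4194304, seconds=10.223)
print(f"bandwidth: {bandwidth:.3f} MB/s, average IOPS: {int(iops)}")
```

The result agrees with the reported 172.161 MB/sec and 43 IOPS. The large bandwidth standard deviation (108.995) reflects the slow first seconds of a short 10-second run, so a longer run would probably give a steadier figure.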

# rados bench -p test 10 seq

hints = 1

sec Cur ops started finished avg MB/s cur MB/s last lat(s) avg lat(s)

0 0 0 0 0 0 - 0

1 16 112 96 383.885 384 0.283408 0.149436

2 16 201 185 369.845 356 0.115177 0.144904

3 16 300 284 378.527 396 0.0560456 0.149155

4 16 412 396 395.877 448 0.0107278 0.157255

Total time run: 4.12085

Total reads made: 440

Read size: 4194304

Object size: 4194304

Bandwidth (MB/sec): 427.097

Average IOPS: 106

Stddev IOPS: 9.62635

Max IOPS: 112

Min IOPS: 89

Average Latency(s): 0.148398

Max latency(s): 1.28936

Min latency(s): 0.00723618
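To compare runs without reading the numbers by eye, the `Key: value` lines of the summary can be collected into a dictionary. This is only a sketch: `parse_summary` is a hypothetical helper, it keeps numeric values only, and the two text snippets are shortened copies of the summaries above.

```python
def parse_summary(text):
    """Collect numeric `Key: value` lines from a rados bench summary."""
    values = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if not sep:
            continue
        try:
            values[key.strip()] = float(value)
        except ValueError:
            pass  # non-numeric values such as the object prefix are skipped
    return values


WRITE_SUMMARY = """\
Total time run: 10.223
Total writes made: 440
Bandwidth (MB/sec): 172.161
Average Latency(s): 0.36814
"""

SEQ_SUMMARY = """\
Total time run: 4.12085
Total reads made: 440
Bandwidth (MB/sec): 427.097
Average Latency(s): 0.148398
"""

write = parse_summary(WRITE_SUMMARY)
seq = parse_summary(SEQ_SUMMARY)
ratio = seq["Bandwidth (MB/sec)"] / write["Bandwidth (MB/sec)"]
print(f"sequential read is about {ratio:.1f}x the write bandwidth")
```

Note that the sequential read finished after about 4 seconds because it had read back all 440 objects left by the write run. Since that run used `--no-cleanup`, the benchmark objects stay in the `test` pool until they are removed, for example with `rados -p test cleanup`.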

Reference:

https://pve.proxmox.com/wiki/Deploy_Hyper-Converged_Ceph_Cluster