数据采集与融合技术实践作业三

gitee仓库链接:gitee仓库链接

102102141 周嘉辉

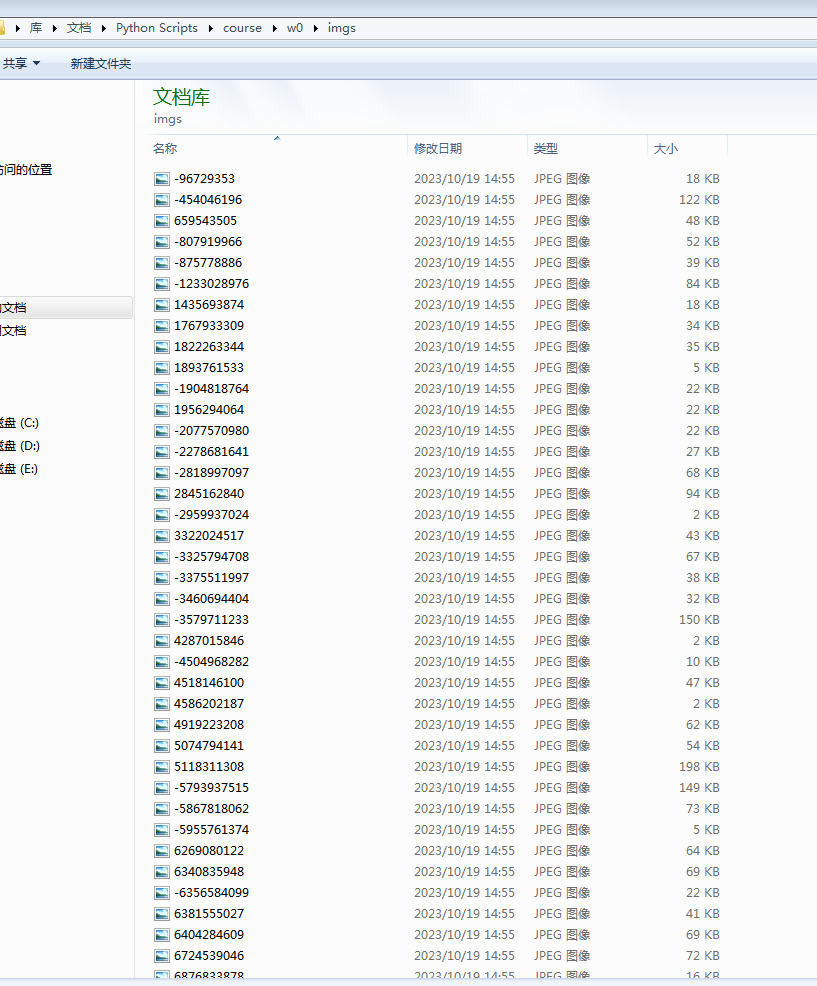

作业①

- 指定一个网站,爬取这个网站中的所有的所有图片,例如:中国气象网(http://www.weather.com.cn)。

使用scrapy框架分别实现单线程和多线程的方式爬取。

部分代码:

class weatherSpider(scrapy.Spider):

name = "w0"

allowed_domains = ["weather.com.cn"]

start_urls = ["http://www.weather.com.cn/"]

def parse(self, response):

# filename = "teacher.html"

# open(filename, 'w').write(response.body)

print('=====================================================================')

items = []

soup = BeautifulSoup(response.body, "html.parser")

for img_tag in soup.find_all("img"):

url = img_tag["src"]

i = W0Item()

i['url'] = url

print(url)

items.append(i)

# yield i

print('=====================================================================')

return items

class Img_downloadPipeline:

def process_item(self,item,spider):

print('download...')

print(item)

url = item['url']#.get('src')

filename = '.\\imgs\\'+str(randint(-9999999999,9999999999))+'.jpg'

urllib.request.urlretrieve(url,filename)

结果:

心得体会:后缀名直接加.jpg并不是很好的写法。

gitee仓库链接:gitee仓库链接

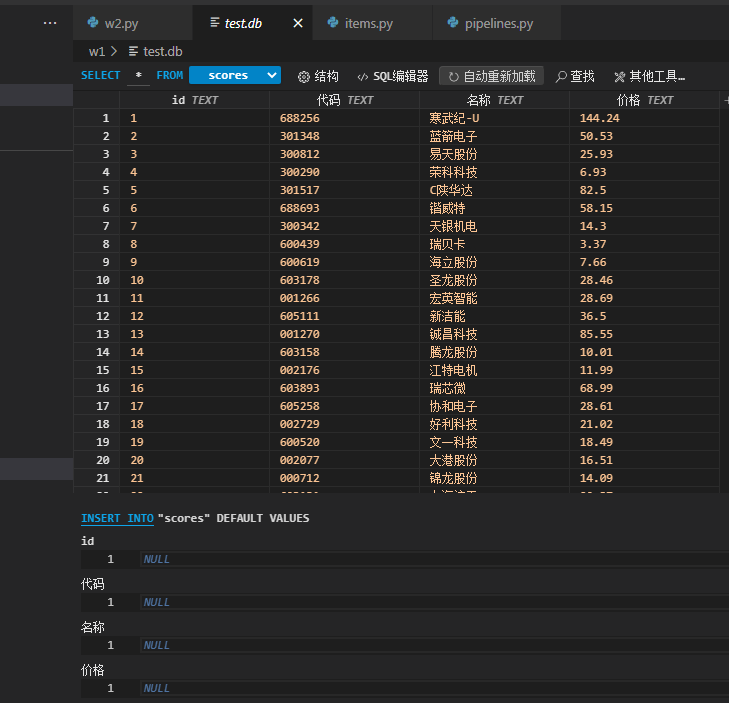

作业②

- 熟练掌握 scrapy 中 Item、Pipeline 数据的序列化输出方法;使用scrapy框架+Xpath+MySQL数据库存储技术路线爬取股票相关信息。

class eastmoneySpider(scrapy.Spider):

name = "w1"

allowed_domains = ["eastmoney.com"]

start_urls = ["http://54.push2.eastmoney.com/api/qt/clist/get?cb=jQuery1124015380571520090935_1602750256400&pn=1&pz=20&po=1&np=1&ut=bd1d9ddb04089700cf9c27f6f7426281&fltt=2&invt=2&fid=f3&fs=m:0+t:6,m:0+t:13,m:0+t:80,m:1+t:2,m:1+t:23&fields=f1,f2,f3,f4,f5,f6,f7,f8,f9,f10,f12,f13,f14,f15,f16,f17,f18,f20,f21,f23,f24,f25,f22,f11,f62,f128,f136,f115,f152&_=1602750256401"]

count = 1

# header = {

# "args": {},

# "headers": {

# "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,*/*;q=0.8",

# "Accept-Encoding": "gzip, deflate",

# "Accept-Language": "zh-CN,zh;q=0.8,zh-TW;q=0.7,zh-HK;q=0.5,en-US;q=0.3,en;q=0.2",

# "Host": "httpbin.org",

# "Upgrade-Insecure-Requests": "1",

# "User-Agent": "Mozilla/5.0 (Windows NT 6.1; Win64; x64; rv:109.0) Gecko/20100101 Firefox/113.0",

# "X-Amzn-Trace-Id": "Root=1-6530dac1-20e651693f4025e634bacb25"

# },

# "origin": "112.49.6.23",

# "url": "http://httpbin.org/get"

# }

def parse(self, response):

# filename = "teacher.html"

# open(filename, 'w').write(response.body)

print('==============================begin=======================================')

data = response.text

# 去除多余信息并转换成json

start = data.index('{')

data = json.loads(data[start:len(data) - 2])

if data['data']:

# 选择和处理数据格式

for stock in data['data']['diff']:

item = W1Item()

item['id'] = str(self.count)

self.count+=1

item["number"] = str(stock['f12'])

item["name"] = stock['f14']

item["value"] = None if stock['f2'] == "-" else str(stock['f2'])

yield item

# 查询当前页面并翻页

pn = re.compile("pn=[0-9]*").findall(response.url)[0]

page = int(pn[3:])

url = response.url.replace("pn="+str(page), "pn="+str(page+1))

yield scrapy.Request(url=url, callback=self.parse)

print('===============================end======================================')

# return items

class writeDB:

def open_spider(self,spider):

self.fp = sqlite3.connect('test.db')

# 建表的sql语句

sql_text_1 = '''CREATE TABLE scores

(id TEXT,

代码 TEXT,

名称 TEXT,

价格 TEXT);'''

# 执行sql语句

self.fp.execute(sql_text_1)

self.fp.commit()

def close_spider(self,spider):

self.fp.close()

def process_item(self, item, spider):

sql_text_1 = "INSERT INTO scores VALUES('"+item['id']+"', '"+item['number']+"', '"+item['name']+"','"+item['value']+"')"

# 执行sql语句

self.fp.execute(sql_text_1)

self.fp.commit()

return item

结果:

心得体会:robots.txt只是个君子协议。

gitee仓库链接:gitee仓库链接

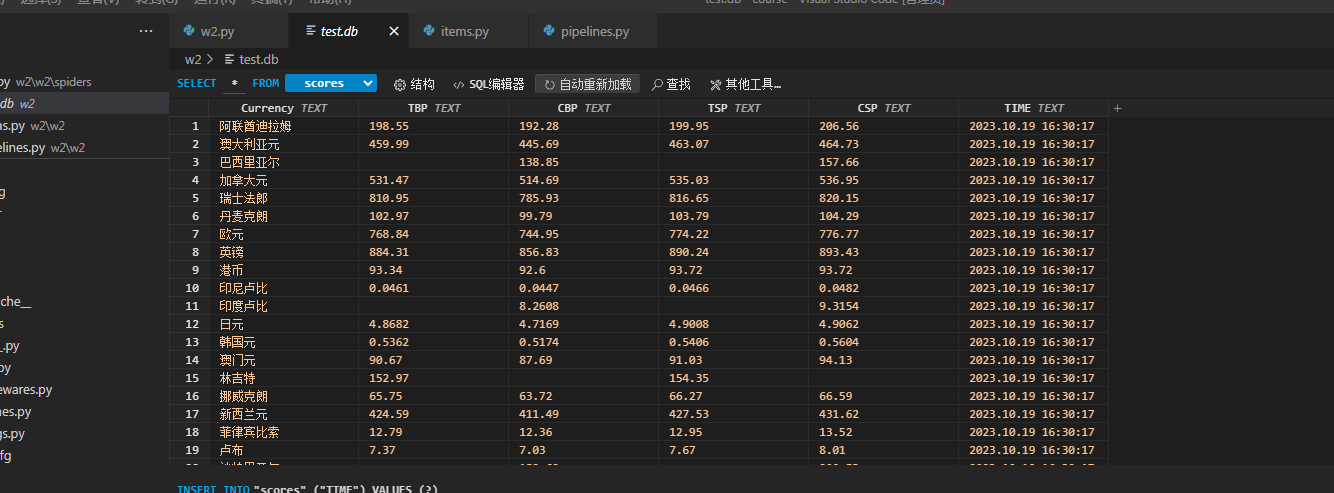

作业③

- 熟练掌握 scrapy 中 Item、Pipeline 数据的序列化输出方法;使用scrapy框架+Xpath+MySQL数据库存储技术路线爬取外汇网站数据。

完成代码:

class bocSpider(scrapy.Spider):

name = "w2"

allowed_domains = ["boc.cn"]

start_urls = ["https://www.boc.cn/sourcedb/whpj/"]

count = 0

def parse(self, response):

print('==============================begin=======================================')

# print(response.xpath('/html/body/div/div[5]/div[1]/div[2]/table'))

# print(response.text)

# print(response.xpath('/html/body/div'))

bs_obj=BeautifulSoup(response.body,features='lxml')

t=bs_obj.find_all('table')[1]

all_tr=t.find_all('tr')

all_tr.pop(0)

for r in all_tr:

item = W2Item()

all_td=r.find_all('td')

item['currency']=all_td[0].text

item['tbp']=all_td[1].text

item['cbp']=all_td[2].text

item['tsp']=all_td[3].text

item['csp']=all_td[4].text

item['time']=all_td[6].text

print(all_td)

yield item

self.count+=1

url = 'http://www.boc.cn/sourcedb/whpj/index_{}.html'.format(self.count)

if self.count != 5:

yield scrapy.Request(url=url, callback=self.parse)

print('===============================end======================================')

class writeDB:

def open_spider(self,spider):

self.fp = sqlite3.connect('test.db')

# 建表的sql语句

sql_text_1 = '''CREATE TABLE scores

(Currency TEXT,

TBP TEXT,

CBP TEXT,

TSP TEXT,

CSP TEXT,

TIME TEXT);'''

# 执行sql语句

self.fp.execute(sql_text_1)

self.fp.commit()

def close_spider(self,spider):

self.fp.close()

def process_item(self, item, spider):

sql_text_1 = "INSERT INTO scores VALUES('"+item['currency']+"', '"+item['tbp']+"', '"+item['cbp']+"', '"+item['tsp']+"', '"+item['csp']+"', '"+item['time']+"')"

# 执行sql语句

self.fp.execute(sql_text_1)

self.fp.commit()

return item

结果:

心得体会:有参考网上历年学长的代码,XPath真的难用。。。。

gitee仓库链接:gitee仓库链接