关于python 的实时口罩佩戴识别+接上篇

接求助

关于上次自己做的那个有些问题在查找一些opencv 的实例之后发现原来是自己的技术没有学好

看一篇获取摄像头的代码

#coding:utf-8 #Created by Pyangthon import cv2 # 获取视频流 cap = cv2.VideoCapture(0) # 指定编码格式 fourcc = cv2.VideoWriter_fourcc(*'MJPG') # cv2.VideoWriter的参数分别为:保存路径,编码格式,帧率,帧大小 out = cv2.VideoWriter("try.avi", fourcc, 20.0, (640,480)) while(cap.isOpened()): ret, frame = cap.read() if ret==True: # 如果帧的大小与上述的(640, 480)不一致,需要resize out.write(frame) cv2.imshow('frame',frame) if cv2.waitKey(1) & 0xFF == ord('q'): break else: break cap.release() out.release() cv2.destroyAllWindows()

我们看到是可以获取摄像头功能的

再来看看口罩识别

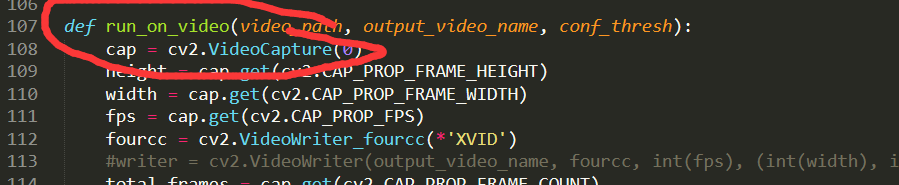

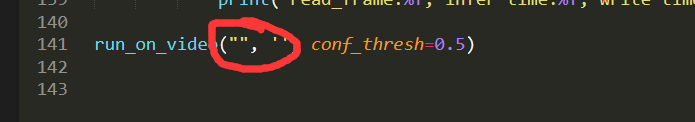

# -*- coding:utf-8 -*- import cv2 import time import argparse import numpy as np from PIL import Image from keras.models import model_from_json from utils.anchor_generator import generate_anchors from utils.anchor_decode import decode_bbox from utils.nms import single_class_non_max_suppression from load_model.tensorflow_loader import load_tf_model, tf_inference #导入口罩智能识别模型 sess, graph = load_tf_model('models\face_mask_detection.pb') # anchor configuration锚点设置 feature_map_sizes = [[33, 33], [17, 17], [9, 9], [5, 5], [3, 3]] anchor_sizes = [[0.04, 0.056], [0.08, 0.11], [0.16, 0.22], [0.32, 0.45], [0.64, 0.72]] anchor_ratios = [[1, 0.62, 0.42]] * 5 # generate anchors生成锚点 anchors = generate_anchors(feature_map_sizes, anchor_sizes, anchor_ratios) #以下用于推断(inference),批大小为1,模型输出形状为[1,N,4],因此将锚点的dim扩展为[1,anchor_num,4] anchors_exp = np.expand_dims(anchors, axis=0) #将推断结果分为两类,一类Mask,表示佩戴了口罩,另外一类是NoMask,表示没有佩戴口罩 id2class = {0: 'Mask', 1: 'NoMask'} def inference(image, conf_thresh=0.5, iou_thresh=0.4, target_shape=(160, 160), draw_result=True, show_result=True): ''' 检测推理的主要功能 # :param image:3D numpy图片数组 # :param conf_thresh:分类概率的最小阈值。 # :param iou_thresh:网管的IOU门限 # :param target_shape:模型输入大小。 # :param draw_result:是否将边框拖入图像。 # :param show_result:是否显示图像。 ''' # image = np.copy(image) output_info = [] height, width, _ = image.shape image_resized = cv2.resize(image, target_shape) image_np = image_resized / 255.0 # 归一化到0~1 image_exp = np.expand_dims(image_np, axis=0) y_bboxes_output, y_cls_output = tf_inference(sess, graph, image_exp) # remove the batch dimension, for batch is always 1 for inference. y_bboxes = decode_bbox(anchors_exp, y_bboxes_output)[0] y_cls = y_cls_output[0] # 为了加快速度,请执行单类NMS,而不是多类NMS。 bbox_max_scores = np.max(y_cls, axis=1) bbox_max_score_classes = np.argmax(y_cls, axis=1) # keep_idx是nms之后的活动边界框。 keep_idxs = single_class_non_max_suppression(y_bboxes, bbox_max_scores, conf_thresh=conf_thresh,iou_thresh=iou_thresh) for idx in keep_idxs: conf = float(bbox_max_scores[idx]) class_id = bbox_max_score_classes[idx] bbox = y_bboxes[idx] # 裁剪坐标,避免该值超出图像边界。 xmin = max(0, int(bbox[0] * width)) ymin = max(0, int(bbox[1] * height)) xmax = min(int(bbox[2] * width), width) ymax = min(int(bbox[3] * height), height) if draw_result: if class_id == 0: color = (0, 255, 0) else: color = (255, 0, 0) cv2.rectangle(image, (xmin, ymin), (xmax, ymax), color, 2) cv2.putText(image, "%s: %.2f" % (id2class[class_id], conf), (xmin + 2, ymin - 2), cv2.FONT_HERSHEY_SIMPLEX, 1, color) output_info.append([class_id, conf, xmin, ymin, xmax, ymax]) if show_result: Image.fromarray(image).show() return output_info def run_on_video(video_path, output_video_name, conf_thresh): cap = cv2.VideoCapture(video_path) height = cap.get(cv2.CAP_PROP_FRAME_HEIGHT) width = cap.get(cv2.CAP_PROP_FRAME_WIDTH) fps = cap.get(cv2.CAP_PROP_FPS) fourcc = cv2.VideoWriter_fourcc(*'XVID') #writer = cv2.VideoWriter(output_video_name, fourcc, int(fps), (int(width), int(height))) total_frames = cap.get(cv2.CAP_PROP_FRAME_COUNT) if not cap.isOpened(): raise ValueError("Video open failed.") return status = True idx = 0 while status: start_stamp = time.time() status, img_raw = cap.read() img_raw = cv2.cvtColor(img_raw, cv2.COLOR_BGR2RGB) read_frame_stamp = time.time() if (status): inference(img_raw, conf_thresh, iou_thresh=0.5, target_shape=(260, 260), draw_result=True, show_result=False) cv2.imshow('image', img_raw[:, :, ::-1]) cv2.waitKey(1) inference_stamp = time.time() # writer.write(img_raw) write_frame_stamp = time.time() idx += 1 print("%d of %d" % (idx, total_frames)) print("read_frame:%f, infer time:%f, write time:%f" % (read_frame_stamp - start_stamp, inference_stamp - read_frame_stamp, write_frame_stamp - inference_stamp)) run_on_video("mp4/test.mp4", '', conf_thresh=0.5)

其实就是路径的问题和摄像头调用的问题修改口罩佩戴py里面的两处就好了

就可以接着本人上次的代码改就行吧!

接下来就是我的文件夹

链接:https://pan.baidu.com/s/1-JGCDS8i3BsEKe0Dd3NLNg

下载后调试几下就可以用了

这里附上我做成功的视频

本文作者:Yang-blackSun

本文链接:https://www.cnblogs.com/Yang-blackSun/p/18025381

版权声明:本作品采用知识共享署名-非商业性使用-禁止演绎 2.5 中国大陆许可协议进行许可。

版权声明:本作品采用知识共享署名-非商业性使用-禁止演绎 2.5 中国大陆许可协议进行许可。

本文作者:Yang-blackSun

本文链接:https://www.cnblogs.com/Yang-blackSun/p/18025381

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步