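This post follows LangChain's official tutorial "Build a Simple LLM Application". The order of the material and the explanations have been reworked to make it easier for beginners to follow.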


pip install langchain

# For a local model served by Ollama

pip install langchain-ollama

# For Chinese hosted models that expose an OpenAI-compatible API

pip install langchain-openai

Prompts

Input

- Prompts use three main roles (system, user and assistant), and LangChain provides a matching Message class for each.

from langchain_core.messages import SystemMessage, HumanMessage, AIMessage

# Raw form: a list of role/content dicts
messages = [
    {"role": "system", "content": "Translate the following text into English"},
    {"role": "user", "content": "你好,吃了吗?"},
    {"role": "assistant", "content": "Hello, have you eaten yet?"},
]

# Using the Message classes
messages = [
    SystemMessage(content="Translate the following text into English"),
    HumanMessage(content="你好,吃了吗?"),
    AIMessage(content="Hello, have you eaten yet?"),
]

# Another form: (role, content) tuples
messages = [
    ("system", "Translate the following text into English"),
    ("human", "你好,吃了吗?"),
    ("ai", "Hello, have you eaten yet?"),
]
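
The three forms above describe the same conversation. As a rough illustration of how they line up, the sketch below converts the dict form into the tuple form using only the standard library. The helper `dicts_to_tuples` and the `ROLE_ALIASES` table are hypothetical names made up for this example and are not part of LangChain; the mapping simply mirrors the role names used in the snippets above (`user` becomes `human`, `assistant` becomes `ai`).

```python
# Hypothetical helper, not a LangChain API: maps the role names used in the
# dict form to the short role names used in the (role, content) tuple form.
ROLE_ALIASES = {"system": "system", "user": "human", "assistant": "ai"}


def dicts_to_tuples(messages):
    """Convert [{"role": ..., "content": ...}] into [(role, content)]."""
    converted = []
    for message in messages:
        role = ROLE_ALIASES[message["role"]]  # raises KeyError on an unknown role
        converted.append((role, message["content"]))
    return converted


raw_messages = [
    {"role": "system", "content": "Translate the following text into English"},
    {"role": "user", "content": "你好,吃了吗?"},
    {"role": "assistant", "content": "Hello, have you eaten yet?"},
]

tuple_messages = dicts_to_tuples(raw_messages)
print(tuple_messages)
```

In real code there is usually no need for such a helper, since the forms shown above can be used directly; the sketch is only meant to make the role correspondence explicit.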

from langchain_core.messages import SystemMessage, HumanMessage, AIMessage

message = AIMessage(content="Hello, have you eaten yet?")

# Both methods below work on SystemMessage, HumanMessage and AIMessage alike

# Pretty-print the message
message.pretty_print()

# Message classes are Pydantic models, so Pydantic methods are available
print(message.model_dump_json())

Templates

from langchain_core.prompts import ChatPromptTemplate

# Input
inputs = {"language": "English", "text": "你好,吃了吗?"}

# Define the template
template = ChatPromptTemplate(
    [("system", "Translate the following text into {language}"), ("human", "{text}")]
)

# Fill in the template
result = template.invoke(inputs)

# result is a ChatPromptValue whose messages are:
# messages = [
#     SystemMessage(content="Translate the following text into English", additional_kwargs={}, response_metadata={}),
#     HumanMessage(content="你好,吃了吗?", additional_kwargs={}, response_metadata={}),
# ]
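
To make the "fill in the template" step less of a black box, here is a minimal standard-library sketch of the same idea: every `{variable}` in each message is replaced with the matching value from the input dict. `fill_template` is a hypothetical name invented for this illustration. It is not how `ChatPromptTemplate` is implemented, and it returns plain tuples rather than Message objects.

```python
# Simplified illustration only. fill_template is a made-up helper;
# ChatPromptTemplate does more than this (for example, it builds Message objects).
def fill_template(template_messages, inputs):
    """Substitute {variables} in each (role, text) pair using str.format."""
    return [(role, text.format(**inputs)) for role, text in template_messages]


template_messages = [
    ("system", "Translate the following text into {language}"),
    ("human", "{text}"),
]
inputs = {"language": "English", "text": "你好,吃了吗?"}

filled = fill_template(template_messages, inputs)
for role, text in filled:
    print(f"{role}: {text}")
```

In this sketch a variable that is missing from `inputs` raises a `KeyError`, which is a reminder that every variable named in the template needs a value.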

Connecting a model

Instantiating the model

from langchain_ollama import ChatOllama

# Ollama model
llm = ChatOllama(base_url="http://localhost:11434", model="qwen2.5:latest")

from langchain_openai import ChatOpenAI

# Chinese hosted models with an OpenAI-compatible API (e.g. Alibaba Cloud, Volcano Engine, Tencent Cloud)
llm = ChatOpenAI(
    openai_api_base="the platform's OpenAI-compatible base URL",
    openai_api_key="xxx-xxx",
    model_name="model name, endpoint ID, etc.",
)
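
Hard-coding an API key in source code is easy to leak, so one common option is to read the connection settings from environment variables. The sketch below only builds the keyword arguments and does not import LangChain. The function name `openai_compatible_kwargs` and the variable names `LLM_BASE_URL`, `LLM_API_KEY` and `LLM_MODEL` are assumptions chosen for this example; the keyword names are the ones used in the `ChatOpenAI(...)` call above.

```python
import os


def openai_compatible_kwargs(env=os.environ):
    """Collect ChatOpenAI keyword arguments from environment variables.

    LLM_BASE_URL, LLM_API_KEY and LLM_MODEL are hypothetical variable names
    chosen for this sketch; use whatever names fit your deployment.
    """
    return {
        "openai_api_base": env["LLM_BASE_URL"],
        "openai_api_key": env["LLM_API_KEY"],
        "model_name": env["LLM_MODEL"],
    }


# Demo with a plain dict standing in for the real environment.
demo_env = {
    "LLM_BASE_URL": "https://example.invalid/v1",
    "LLM_API_KEY": "xxx-xxx",
    "LLM_MODEL": "example-model",
}
kwargs = openai_compatible_kwargs(demo_env)
print(sorted(kwargs))
```

With `langchain-openai` installed, the result could then be passed on as `ChatOpenAI(**kwargs)`.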

from langchain_ollama import ChatOllama

from langchain_core.messages import HumanMessage

# Ollama model
llm = ChatOllama(base_url="http://localhost:11434", model="qwen2.5:latest")

# Prompt
messages = [HumanMessage(content="你好,吃了吗?")]

# result is an AIMessage; the reply text is in result.content
result = llm.invoke(messages)

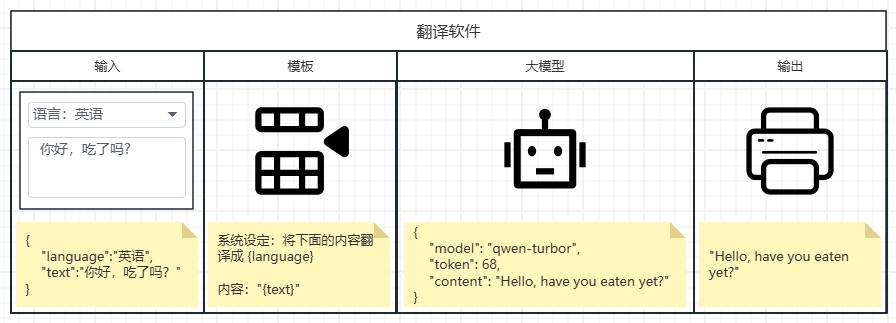

Main flow (template + model)

from langchain_ollama import ChatOllama

from langchain_core.prompts import ChatPromptTemplate

# Input
inputs = {"language": "English", "text": "你好,吃了吗?"}

# Template
template = ChatPromptTemplate(
    [("system", "Translate the following text into {language}"), ("human", "{text}")]
)

# Model
llm = ChatOllama(base_url="http://localhost:11434", model="qwen2.5:latest")

# Step-by-step invocation
# result = template.invoke(inputs)
# result = llm.invoke(result)

# LangChain style: compose the steps with the | operator
chain = template | llm
result = chain.invoke(inputs)
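
The `|` syntax can look like magic at first. The toy class below shows the general idea of operator-based composition in plain Python: `a | b` produces a new object whose `invoke` runs `a` and then feeds the result to `b`. `Step`, `fake_template` and `fake_llm` are hypothetical stand-ins written for this illustration. This is not LangChain's actual implementation, and no real model is called.

```python
class Step:
    """Toy stand-in for a runnable: wraps a function and supports `|`."""

    def __init__(self, func):
        self.func = func

    def invoke(self, value):
        return self.func(value)

    def __or__(self, other):
        # a | b builds a new Step that runs a first, then passes its result to b.
        return Step(lambda value: other.invoke(self.invoke(value)))


# Stand-ins for the template and the model (no real LLM is involved).
fake_template = Step(
    lambda inputs: f"Translate into {inputs['language']}: {inputs['text']}"
)
fake_llm = Step(lambda prompt: f"[model reply to] {prompt}")

chain = fake_template | fake_llm
result = chain.invoke({"language": "English", "text": "你好"})
print(result)
```

Read this way, the chained call is just the two commented-out step-by-step calls written as one expression.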

Output conversion

Output parsers

from langchain_core.output_parsers import StrOutputParser, JsonOutputParser

from langchain_core.messages import AIMessage

# Simulate a text message returned by the model
message = AIMessage(content='{"name": "Alice", "age": 30}')

# String output parser
str_parser = StrOutputParser()
result = str_parser.invoke(message)
print(type(result))  # <class 'str'>
print(result)  # {"name": "Alice", "age": 30}

# JSON output parser (the result is a dict in code)
json_parser = JsonOutputParser()
result = json_parser.invoke(message)
print(type(result))  # <class 'dict'>
print(result)  # {'name': 'Alice', 'age': 30}
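
The difference between the two results can be reproduced with the standard library alone: the string case keeps the text as it is, while the JSON case turns it into a Python dict. The sketch below also guards against text that is not valid JSON, which can happen with model output. `try_parse` is a hypothetical helper written for this example; the real `JsonOutputParser` may handle errors differently.

```python
import json

raw_text = '{"name": "Alice", "age": 30}'

# String case: the text is kept unchanged.
as_text = raw_text

# JSON case: the text becomes a Python dict.
as_dict = json.loads(raw_text)
print(type(as_dict), as_dict["age"])


def try_parse(text):
    """Return the parsed JSON value, or None if the text is not valid JSON."""
    try:
        return json.loads(text)
    except json.JSONDecodeError:
        return None


print(try_parse("Sure! Here is the JSON you asked for"))
```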

Main flow (template + model + output parser)

from langchain_ollama import ChatOllama

from langchain_core.prompts import ChatPromptTemplate

from langchain_core.output_parsers import StrOutputParser

# Input
inputs = {"language": "English", "text": "你好,吃了吗?"}

# Template
template = ChatPromptTemplate(
    [("system", "Translate the following text into {language}"), ("human", "{text}")]
)

# Model
llm = ChatOllama(base_url="http://localhost:11434", model="qwen2.5:latest")

# Output parser
parser = StrOutputParser()

# Step-by-step invocation
# result = template.invoke(inputs)
# result = llm.invoke(result)
# result = parser.invoke(result)

# LangChain style: compose the steps with the | operator
chain = template | llm | parser
result = chain.invoke(inputs)

Additional notes

Templates

from langchain_core.prompts import ChatPromptTemplate

template = ChatPromptTemplate(
    [("system", "You are a tour guide. Answer the user's questions."), ("placeholder", "{conversation}")]
)

inputs = {
    "conversation": [
        ("human", "Fuzhou"),
        ("ai", "Fuzhou is a ....."),
        ("human", "Which season is best for a visit?"),
    ],
}

# Fill in the template: the placeholder is replaced by the whole conversation
messages = template.invoke(inputs)

# messages = [
#     SystemMessage(content="You are a tour guide. Answer the user's questions.", additional_kwargs={}, response_metadata={}),
#     HumanMessage(content="Fuzhou", additional_kwargs={}, response_metadata={}),
#     AIMessage(content="Fuzhou is a .....", additional_kwargs={}, response_metadata={}),
#     HumanMessage(content="Which season is best for a visit?", additional_kwargs={}, response_metadata={}),
# ]

from langchain_core.prompts import ChatPromptTemplate

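# A template can combine ordinary variables with a placeholder
template = ChatPromptTemplate(
    [("system", "You are a {role}. Answer the user's questions."), ("placeholder", "{conversation}")]
)

inputs = {
    "role": "tour guide",
    "conversation": [
        ("human", "Fuzhou"),
        ("ai", "Fuzhou is a ....."),
        ("human", "Which season is best for a visit?"),
    ],
}

messages = template.invoke(inputs)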
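
As a rough mental model of what the placeholder does, the sketch below expands a template in plain Python: ordinary entries get their `{variables}` substituted, while a `placeholder` entry is replaced by the whole list of messages stored under that key. `expand_template` is a hypothetical name made up for this illustration. It returns tuples rather than Message objects and is not LangChain's implementation; treating a missing placeholder key as an empty list is a choice made for this sketch.

```python
# Simplified illustration; expand_template is a made-up helper.
def expand_template(template_messages, inputs):
    expanded = []
    for role, text in template_messages:
        if role == "placeholder":
            # "{conversation}" -> "conversation": splice that list in as-is.
            key = text.strip("{}")
            expanded.extend(inputs.get(key, []))  # missing key -> nothing added
        else:
            expanded.append((role, text.format(**inputs)))
    return expanded


template_messages = [
    ("system", "You are a {role}. Answer the user's questions."),
    ("placeholder", "{conversation}"),
]
inputs = {
    "role": "tour guide",
    "conversation": [
        ("human", "Fuzhou"),
        ("ai", "Fuzhou is a ....."),
        ("human", "Which season is best for a visit?"),
    ],
}

messages = expand_template(template_messages, inputs)
for role, text in messages:
    print(f"{role}: {text}")
```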
