Data Collection and Fusion Technology: Assignment 3

| Student ID / Name | 102202103 王文豪 (Wang Wenhao) |
|---|---|
| Gitee repository | https://gitee.com/wwhpower/project_wwh.git |

Assignment ①:

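Requirement: Pick a website and crawl all of its images, for example China Weather (http://www.weather.com.cn). Use the Scrapy framework to implement both a single-threaded and a multi-threaded crawl. Be sure to limit the crawl, e.g. cap the total number of pages (the last 2 digits of the student ID) and the total number of downloaded images (the last 3 digits). Output: print the URLs of the downloaded images to the console, store the downloaded images in an images subfolder, and include screenshots.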
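The two caps in the requirement are plain arithmetic on the student ID. As a sketch, a hypothetical helper `crawl_limits` makes the rule explicit; for ID 102202103 it gives the 3 pages and 103 images that the spider hard-codes as `total_pages` and `total_images`.

```python
def crawl_limits(student_id: str) -> tuple[int, int]:
    """Derive (max_pages, max_images) from a student ID string.

    max_pages  = value of the last 2 digits
    max_images = value of the last 3 digits
    """
    if len(student_id) < 3 or not student_id.isdigit():
        raise ValueError(f"expected a numeric student ID, got {student_id!r}")
    return int(student_id[-2:]), int(student_id[-3:])


if __name__ == "__main__":
    pages, images = crawl_limits("102202103")
    print(pages, images)
```

Note that an ID ending in "00" would yield a page cap of 0, so a real crawl might want a minimum of 1.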

(1) Code:

#items.py
import scrapy

class WeatherImageItem(scrapy.Item):
    image_urls = scrapy.Field()  # URLs of the images to download (read by ImagesPipeline)
    images = scrapy.Field()  # storage paths of the downloaded images

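#spider.py
import scrapy
from weather_image_scraper.items import WeatherImageItem

class WeatherSpiderSpider(scrapy.Spider):
    name = 'weather_images'
    allowed_domains = ['weather.com.cn']
    start_urls = ['http://www.weather.com.cn/']

    # Crawl limits: total pages (last 2 digits of the student ID) and total images (last 3 digits)
    total_pages = 3
    total_images = 103
    images_crawled = 0
    current_page = 1

    def parse(self, response):
        # Extract the image links on the current page
        img_urls = response.css('img::attr(src)').getall()
        for img_url in img_urls:
            if self.images_crawled >= self.total_images:
                return  # image limit reached: stop yielding items and further page requests
            full_url = response.urljoin(img_url)  # turn relative src values into absolute URLs
            print(full_url)  # print the URL of each image to be downloaded to the console
            self.images_crawled += 1
            # ImagesPipeline expects a list of URLs in the image_urls field
            yield WeatherImageItem(image_urls=[full_url])

        # Decide whether to crawl the next page
        if self.current_page < self.total_pages:
            self.current_page += 1
            next_page_url = f'http://www.weather.com.cn/page/{self.current_page}'  # assumed pagination URL pattern
            yield scrapy.Request(url=next_page_url, callback=self.parse)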
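`<img src>` values are often relative or protocol-relative (`//host/x.jpg`), and Scrapy's `Response.urljoin()` is a wrapper around `urllib.parse.urljoin` with the response URL as the base. The stdlib sketch below resolves each `src`, skips inline `data:` images and repeated URLs, and enforces a crawl-wide cap through a shared set, so the 103-image limit would count distinct files only. The function name and the de-duplication rule are assumptions, not part of the original spider.

```python
from urllib.parse import urljoin


def collect_image_urls(page_url, srcs, already_seen, limit):
    """Resolve <img src> values against page_url, skip empty/data: URIs and
    duplicates, and stop once `limit` distinct URLs have been collected overall.

    `already_seen` is a set shared across pages, so the cap covers the whole crawl.
    """
    picked = []
    for src in srcs:
        if len(already_seen) >= limit:
            break  # crawl-wide cap reached
        src = (src or "").strip()
        if not src or src.startswith("data:"):
            continue  # empty attribute or embedded image: nothing to download
        full_url = urljoin(page_url, src)
        if full_url in already_seen:
            continue  # same image already counted (e.g. a repeated logo)
        already_seen.add(full_url)
        picked.append(full_url)
    return picked
```

In `parse()`, the shared set could live on the spider (for example a hypothetical `self.seen_urls`), with one item yielded per returned URL.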

#pipelines.py
from scrapy.pipelines.images import ImagesPipeline
import scrapy

class WeatherImagesPipeline(ImagesPipeline):
    def get_media_requests(self, item, info):
        # Schedule one download request per image URL in the item
        for image_url in item['image_urls']:
            yield scrapy.Request(image_url)

    def item_completed(self, results, item, info):
        # results is a list of (success, info) tuples; keep the storage paths of successful downloads
        image_paths = [x['path'] for ok, x in results if ok]
        item['images'] = image_paths
        return item

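#settings.py
ITEM_PIPELINES = {
    'weather_image_scraper.pipelines.WeatherImagesPipeline': 1,
}
IMAGES_STORE = 'C:/Users/86158/examples'  # directory where the downloaded images are saved
ROBOTSTXT_OBEY = False
CONCURRENT_REQUESTS = 1  # "single-threaded": one request in flight at a time
# CONCURRENT_REQUESTS = 10  # "multi-threaded": up to 10 requests in flight at once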
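Where do the files end up? As far as I know, Scrapy's `ImagesPipeline` does not write images directly into `IMAGES_STORE`: its default `file_path()` stores each one as `full/<SHA-1 of the image URL>.jpg` (images are converted to JPEG). The stand-alone function below only imitates that naming for illustration; it is not Scrapy's code. With a relative setting such as `IMAGES_STORE = 'images'`, the files would land under an `images` folder in the directory the crawl is started from.

```python
import hashlib
import os


def default_image_path(url: str, images_store: str) -> str:
    """Imitate ImagesPipeline's default naming: <IMAGES_STORE>/full/<sha1(url)>.jpg."""
    guid = hashlib.sha1(url.encode("utf-8")).hexdigest()  # 40 hex characters
    return os.path.join(images_store, "full", f"{guid}.jpg")


if __name__ == "__main__":
    print(default_image_path("http://www.weather.com.cn/a.png", "images"))
```

Because the name depends only on the URL, downloading the same URL again maps to the same file rather than creating a copy.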

Results:

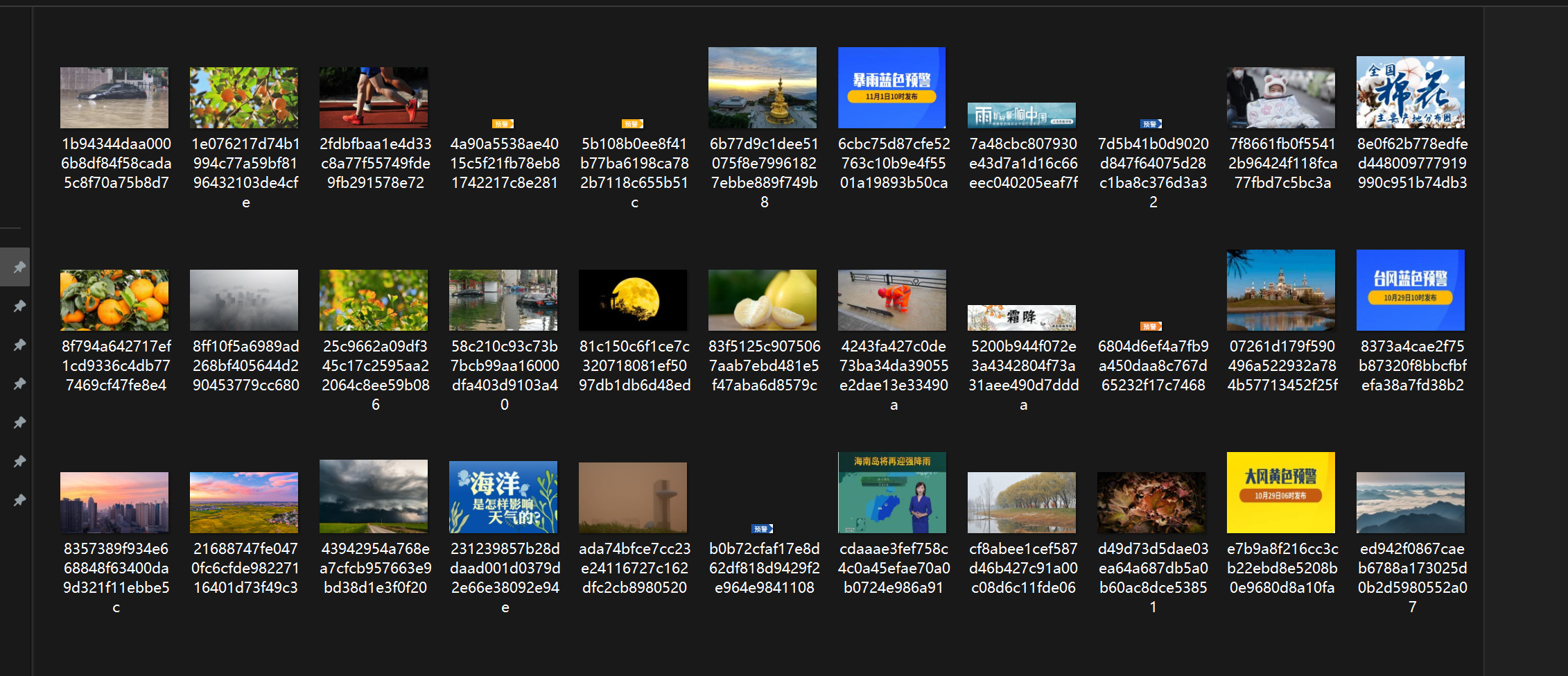

Downloaded images:

(The site offered only a limited number of images, so this is everything that was crawled.)

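(2) Reflections: Implementing both the single-threaded and the multi-threaded crawl made the speed advantage of the multi-threaded version obvious. Strictly speaking, Scrapy does not download in parallel threads: it runs on Twisted's asynchronous event loop, and raising CONCURRENT_REQUESTS from 1 to 10 lets it keep up to 10 requests in flight at once, which is what made the crawl noticeably faster.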
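To see why raising the concurrency limit shortens an I/O-bound crawl, the sketch below simulates it with `asyncio` (not Scrapy code; Scrapy itself is built on Twisted). A semaphore plays the role of `CONCURRENT_REQUESTS`, and each fake request just sleeps for a fixed latency, so with 10 URLs a limit of 1 takes roughly 10 times the latency while a limit of 10 takes roughly one latency.

```python
import asyncio
import time


async def fake_fetch(url: str, latency: float) -> str:
    # Stand-in for a network request: the coroutine just waits, like network I/O would
    await asyncio.sleep(latency)
    return url


async def crawl(urls, concurrency: int, latency: float) -> float:
    """Fetch all URLs with at most `concurrency` requests in flight; return elapsed seconds."""
    limit = asyncio.Semaphore(concurrency)

    async def bounded(url):
        async with limit:  # waits here while `concurrency` requests are already running
            return await fake_fetch(url, latency)

    start = time.perf_counter()
    await asyncio.gather(*(bounded(u) for u in urls))
    return time.perf_counter() - start


if __name__ == "__main__":
    urls = [f"page-{i}" for i in range(10)]
    for c in (1, 10):
        print(f"concurrency={c}: {asyncio.run(crawl(urls, c, 0.05)):.2f} s")
```

Everything runs in a single thread; the speed-up comes purely from overlapping the waiting time.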

Assignment ②:

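Requirement: Become proficient with serializing output data through Scrapy's Item and Pipeline; crawl stock information using the Scrapy + XPath + MySQL storage stack.

Candidate website: East Money (东方财富网): https://www.eastmoney.com/

Output: store the data in MySQL; the storage and output format is as follows:

Column headers use English names designed by each student, e.g. serial number: id, stock code: bStockNo, ……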

(1) Code:

#items.py
import scrapy

class StockItem(scrapy.Item):
    id = scrapy.Field()
    code = scrapy.Field()
    name = scrapy.Field()
    newPrice = scrapy.Field()
    price_change_amplitude = scrapy.Field()
    price_change_Lines = scrapy.Field()
    volume = scrapy.Field()
    turnover = scrapy.Field()
    amplitude = scrapy.Field()
    highest = scrapy.Field()
    lowest = scrapy.Field()
    today = scrapy.Field()
    yesterday = scrapy.Field()

#spider.py
import scrapy
from stock_scraper.items import StockItem

class StockSpider(scrapy.Spider):
    name = 'stock_spider'
    # 'eastmoney.com' also covers subdomains such as quote.eastmoney.com
    allowed_domains = ['eastmoney.com']
    # The quote table is filled in by JavaScript; SeleniumMiddleware renders it before parse() runs
    start_urls = ['https://quote.eastmoney.com/center/gridlist.html#hs_a_board']

    def parse(self, response):
        # One <tr> per stock in the rendered quote table
        stocks = response.xpath("//tbody//tr")
        for stock in stocks:
            item = StockItem()
            item['id'] = stock.xpath('.//td[1]//text()').get()
            item['code'] = stock.xpath('.//td[2]//text()').get()
            item['name'] = stock.xpath('.//td[3]//text()').get()
            item['newPrice'] = stock.xpath('.//td[5]//text()').get()
            item['price_change_amplitude'] = stock.xpath('.//td[6]//text()').get()
            item['price_change_Lines'] = stock.xpath('.//td[7]//text()').get()
            item['volume'] = stock.xpath('.//td[8]//text()').get()
            item['turnover'] = stock.xpath('.//td[9]//text()').get()
            item['amplitude'] = stock.xpath('.//td[10]//text()').get()
            item['highest'] = stock.xpath('.//td[11]//text()').get()
            item['lowest'] = stock.xpath('.//td[12]//text()').get()
            item['today'] = stock.xpath('.//td[13]//text()').get()
            item['yesterday'] = stock.xpath('.//td[14]//text()').get()
            yield item
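The spider maps fixed column positions to item fields. The stdlib-only sketch below applies the same position-to-field mapping to a small, made-up, well-formed table with `xml.etree.ElementTree`, whose XPath support is only a subset; a real page needs Scrapy selectors (or lxml) as in the spider. The column numbers are copied from the spider; the function name and the sample row are illustrative.

```python
import xml.etree.ElementTree as ET

# Column positions (1-based, as in the spider's td[n] XPaths) mapped to item field names
COLUMNS = {
    1: "id", 2: "code", 3: "name", 5: "newPrice",
    6: "price_change_amplitude", 7: "price_change_Lines", 8: "volume",
    9: "turnover", 10: "amplitude", 11: "highest", 12: "lowest",
    13: "today", 14: "yesterday",
}


def rows_to_items(table_xml: str) -> list[dict]:
    """Turn every <tbody>/<tr> into a dict keyed by the item field names."""
    root = ET.fromstring(table_xml)
    items = []
    for tr in root.iterfind(".//tbody/tr"):
        # itertext() also collects nested text such as <td><a>000001</a></td>
        cells = ["".join(td.itertext()).strip() for td in tr.findall("td")]
        items.append({field: (cells[pos - 1] if pos <= len(cells) else None)
                      for pos, field in COLUMNS.items()})
    return items
```

Columns not listed in `COLUMNS` (here column 4) are simply ignored, just as the spider never reads `td[4]`.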

#pipelines.py
import mysql.connector
from mysql.connector import Error

class MySQLPipeline:
    def open_spider(self, spider):
        try:
            # Connection parameters are read from settings.py, falling back to the original values
            self.connection = mysql.connector.connect(
                host=spider.settings.get('MYSQL_HOST', '127.0.0.1'),
                database=spider.settings.get('MYSQL_DATABASE', 'wwh'),  # your database name
                user=spider.settings.get('MYSQL_USER', 'root'),
                password=spider.settings.get('MYSQL_PASSWORD', '123456')  # your password
            )
            self.cursor = self.connection.cursor()
            # code is UNIQUE so that ON DUPLICATE KEY UPDATE refreshes an existing stock
            # instead of inserting a duplicate row on every run
            self.cursor.execute('''
                CREATE TABLE IF NOT EXISTS stockData (
                    id INTEGER PRIMARY KEY AUTO_INCREMENT,
                    code VARCHAR(255) UNIQUE,
                    name VARCHAR(255),
                    newPrice VARCHAR(255),
                    price_change_amplitude VARCHAR(255),
                    price_change_Lines VARCHAR(255),
                    volume VARCHAR(255),
                    turnover VARCHAR(255),
                    amplitude VARCHAR(255),
                    highest VARCHAR(255),
                    lowest VARCHAR(255),
                    today VARCHAR(255),
                    yesterday VARCHAR(255)
                )
            ''')
        except Error as e:
            spider.logger.error(f"Error connecting to MySQL: {e}")

    def close_spider(self, spider):
        try:
            self.connection.commit()
        except Error as e:
            spider.logger.error(f"Error committing to MySQL: {e}")
        finally:
            self.cursor.close()
            self.connection.close()

    def process_item(self, item, spider):
        try:
            # Reuse the cursor opened in open_spider
            self.cursor.execute('''
                INSERT INTO stockData (code, name, newPrice, price_change_amplitude, price_change_Lines, volume, turnover, amplitude, highest, lowest, today, yesterday)
                VALUES (%s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s)
                ON DUPLICATE KEY UPDATE
                    newPrice=VALUES(newPrice),
                    price_change_amplitude=VALUES(price_change_amplitude),
                    price_change_Lines=VALUES(price_change_Lines),
                    volume=VALUES(volume),
                    turnover=VALUES(turnover),
                    amplitude=VALUES(amplitude),
                    highest=VALUES(highest),
                    lowest=VALUES(lowest),
                    today=VALUES(today),
                    yesterday=VALUES(yesterday)
            ''', (
                item['code'],
                item['name'],
                item['newPrice'],
                item['price_change_amplitude'],
                item['price_change_Lines'],
                item['volume'],
                item['turnover'],
                item['amplitude'],
                item['highest'],
                item['lowest'],
                item['today'],
                item['yesterday']
            ))
            self.connection.commit()
        except Error as e:
            spider.logger.error(f"Error inserting data into MySQL: {e}")
        return item
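MySQL's `ON DUPLICATE KEY UPDATE` only fires when the new row collides with a PRIMARY KEY or UNIQUE index; an auto-generated `id` never collides, so without a unique `code` every run would append duplicate rows. To make that behavior testable without a MySQL server, the sketch below uses Python's built-in `sqlite3` as a stand-in. SQLite spells the upsert `ON CONFLICT(code) DO UPDATE SET ... = excluded.col` rather than MySQL's syntax, but the UNIQUE constraint plays the same role. The function name and the two-column table are illustrative.

```python
import sqlite3


def upsert_demo(rows):
    """Insert (code, newPrice) pairs into an in-memory table whose `code` column is UNIQUE;
    a repeated code updates the existing row instead of adding a new one."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE stockData ("
        " id INTEGER PRIMARY KEY AUTOINCREMENT,"
        " code TEXT UNIQUE,"  # the conflict target; without it every insert adds a row
        " newPrice TEXT)"
    )
    for code, price in rows:
        conn.execute(
            "INSERT INTO stockData (code, newPrice) VALUES (?, ?) "
            "ON CONFLICT(code) DO UPDATE SET newPrice = excluded.newPrice",
            (code, price),
        )
    conn.commit()
    result = conn.execute("SELECT code, newPrice FROM stockData ORDER BY id").fetchall()
    conn.close()
    return result
```

The upsert syntax needs SQLite 3.24 or later, which current Python builds bundle.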

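#middlewares.py
import time
from selenium import webdriver
from scrapy.http import HtmlResponse

class SeleniumMiddleware:
    def process_request(self, request, spider):
        # Start a Selenium WebDriver (Edge); note that a new browser is launched for every request
        driver = webdriver.Edge()
        try:
            # Open the URL
            driver.get(request.url)
            # Wait for the JavaScript-rendered content to load
            time.sleep(3)
            # Grab the rendered page source
            data = driver.page_source
        finally:
            # Always close the browser, even if loading fails
            driver.quit()
        # Returning a response here makes Scrapy skip its own download and hand this page to the spider
        return HtmlResponse(url=request.url, body=data.encode('utf-8'), encoding='utf-8', request=request)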
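A fixed `time.sleep(3)` always costs three seconds and can still be too short on a slow connection. Selenium's explicit waits, e.g. `WebDriverWait(driver, 10).until(expected_conditions.presence_of_element_located((By.XPATH, '//tbody/tr')))` from `selenium.webdriver.support.ui`, poll until the element appears instead. The stdlib-only sketch below shows that polling idea; in the middleware the condition might be something like `lambda: driver.find_elements(By.XPATH, '//tbody/tr')`, which is an assumption about how it would be wired up. The helper name `wait_until` is hypothetical.

```python
import time


def wait_until(condition, timeout=10.0, interval=0.5):
    """Call `condition()` every `interval` seconds until it returns a truthy value
    (which is returned) or `timeout` seconds pass (then TimeoutError is raised)."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} s")
        time.sleep(interval)
```

Returning the condition's value lets the caller reuse it, e.g. the list of rows that were found.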

#settings.py
ITEM_PIPELINES = {
    'stock_scraper.pipelines.MySQLPipeline': 300,
}
DOWNLOADER_MIDDLEWARES = {
    'stock_scraper.middlewares.SeleniumMiddleware': 543,
}
MYSQL_HOST = '127.0.0.1'
MYSQL_DATABASE = 'wwh'
MYSQL_USER = 'root'
MYSQL_PASSWORD = '123456'
ROBOTSTXT_OBEY = False
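Scrapy's usual way to pass values such as `MYSQL_HOST` from settings.py into a pipeline is the `from_crawler` classmethod, which Scrapy calls with the running crawler when the pipeline defines it. The skeleton below is hypothetical (class name, defaults) and is exercised with a fake crawler whose `settings` is a plain dict; Scrapy's `Settings` object offers the same `.get(name, default)`.

```python
from types import SimpleNamespace


class MySQLSettingsPipeline:
    """Hypothetical pipeline skeleton: Scrapy builds the instance via from_crawler(),
    so values from settings.py can be read there."""

    def __init__(self, host, database, user, password):
        # Kept as a dict so it could later be passed as mysql.connector.connect(**self.db_config)
        self.db_config = {"host": host, "database": database,
                          "user": user, "password": password}

    @classmethod
    def from_crawler(cls, crawler):
        settings = crawler.settings
        return cls(
            host=settings.get("MYSQL_HOST", "127.0.0.1"),
            database=settings.get("MYSQL_DATABASE"),
            user=settings.get("MYSQL_USER"),
            password=settings.get("MYSQL_PASSWORD"),
        )


if __name__ == "__main__":
    # Fake crawler for illustration only
    fake_crawler = SimpleNamespace(settings={"MYSQL_HOST": "127.0.0.1", "MYSQL_DATABASE": "wwh",
                                             "MYSQL_USER": "root", "MYSQL_PASSWORD": "123456"})
    print(MySQLSettingsPipeline.from_crawler(fake_crawler).db_config)
```

Keeping credentials in settings (or environment variables) means the pipeline code no longer needs editing when the database changes.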

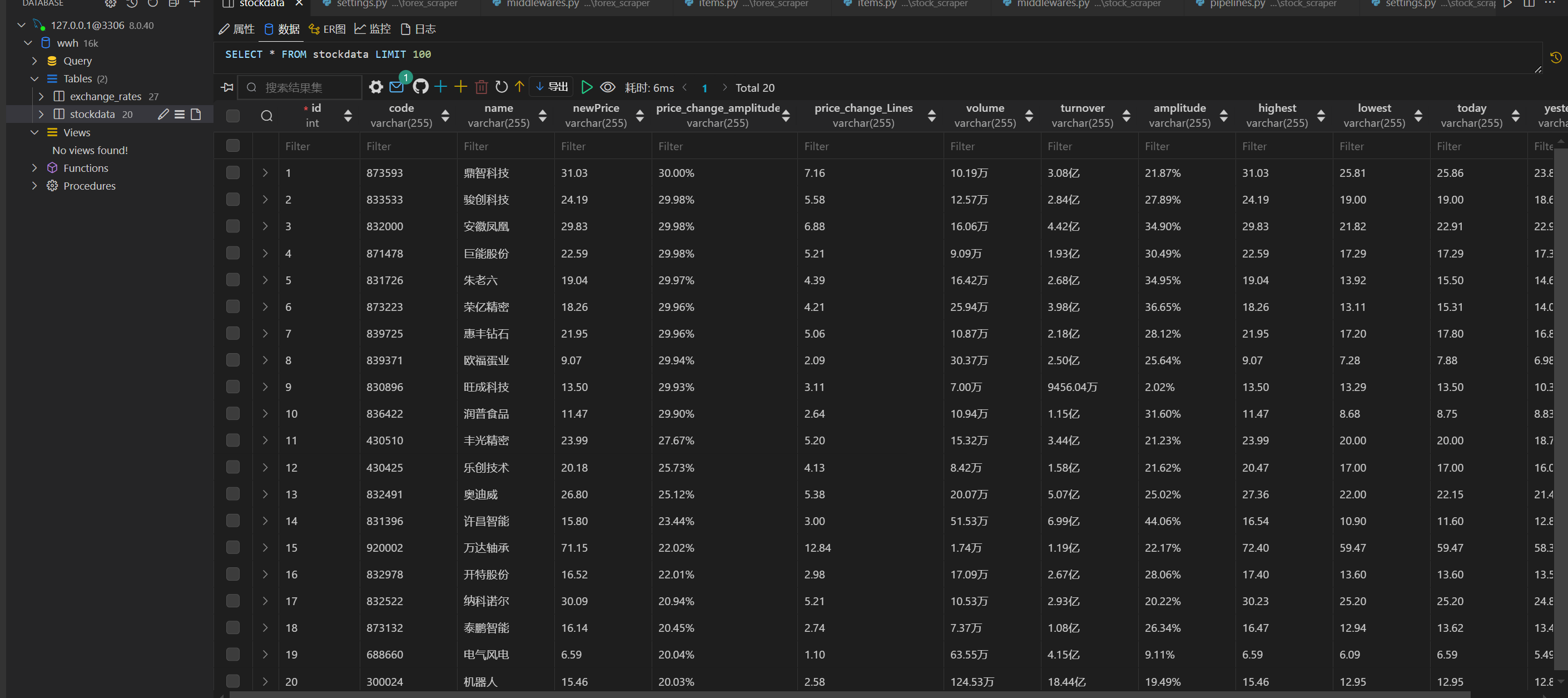

Crawl results:

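(2) Reflections: This experiment deepened my understanding of parsing HTML documents with XPath. In Scrapy, XPath selectors can pinpoint exactly the data needed, for example selecting each row with //tbody//tr and then each cell with the relative path .//td[n]//text().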

Assignment ③:

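Requirement: Become proficient with serializing output data through Scrapy's Item and Pipeline; crawl foreign-exchange data using the Scrapy + XPath + MySQL storage stack.

Candidate website: Bank of China (中国银行): https://www.boc.cn/sourcedb/whpj/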

(1) Code:

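#items.py
import scrapy

class ForexItem(scrapy.Item):
    currency = scrapy.Field()  # currency name
    tbp = scrapy.Field()  # telegraphic (spot exchange) buying price
    cbp = scrapy.Field()  # cash buying price
    tsp = scrapy.Field()  # telegraphic (spot exchange) selling price
    csp = scrapy.Field()  # cash selling price
    time = scrapy.Field()  # publication time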

#spider.py
import scrapy
from forex_scraper.items import ForexItem

class BankSpider(scrapy.Spider):
    name = "forex_spider"
    allowed_domains = ["www.boc.cn"]
    start_urls = ["https://www.boc.cn/sourcedb/whpj/"]

    def parse(self, response):
        # Rows of every <tbody> that is the first tbody child of its parent
        # ((//tbody)[1]/tr would select only the first tbody in the whole document)
        rows = response.xpath('//tbody[1]/tr')
        # Skip the first two and the last two rows to iterate over the relevant rows only
        for row in rows[2:-2]:
            item = ForexItem()
            item['currency'] = row.xpath(".//td[1]//text()").get()  # .get() returns the first match or None
            item['tbp'] = row.xpath(".//td[2]//text()").get()
            item['cbp'] = row.xpath(".//td[3]//text()").get()
            item['tsp'] = row.xpath(".//td[4]//text()").get()
            item['csp'] = row.xpath(".//td[5]//text()").get()
            item['time'] = row.xpath(".//td[8]//text()").get()
            yield item
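The spider stores every rate as raw cell text. If the rates are to be compared or used in calculations later, a conversion step along the lines of the sketch below could run before storage: empty cells (should a currency have no quote in some column) become `None` and the rest become floats. The helper names and the sample row are made up for illustration; the column positions follow the spider's `td[1]` to `td[5]` and `td[8]`. Note also that `rows[2:-2]` drops the first two and the last two rows.

```python
RATE_FIELDS = ("tbp", "cbp", "tsp", "csp")


def to_rate(text):
    """Return the cell as a float, or None for a missing or blank cell."""
    if text is None or not text.strip():
        return None
    return float(text.strip())


def parse_rate_row(cells):
    """cells: cell texts in column order (td[1] .. td[8]).
    Column 1 -> currency, columns 2..5 -> the four rates, column 8 -> time."""
    item = {"currency": (cells[0] or "").strip()}
    for field, text in zip(RATE_FIELDS, cells[1:5]):
        item[field] = to_rate(text)
    item["time"] = cells[7].strip() if len(cells) > 7 and cells[7] else None
    return item
```

Storing `None` (SQL NULL) for an empty cell keeps "no quote" distinguishable from a real value.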

#pipelines.py
import mysql.connector
from mysql.connector import Error

class MySQLPipeline:
    def open_spider(self, spider):
        try:
            self.connection = mysql.connector.connect(
                host='127.0.0.1',
                user='root',  # replace with your MySQL user name
                password='123456',  # replace with your MySQL password
                database='wwh',  # replace with your database name
                charset='utf8mb4',
                use_unicode=True
            )
            self.cursor = self.connection.cursor()
            # id is generated by MySQL, so the INSERT below does not need to supply it
            self.cursor.execute('''
                CREATE TABLE IF NOT EXISTS exchange_rates (
                    id INTEGER PRIMARY KEY AUTO_INCREMENT,
                    currency VARCHAR(255),
                    tbp VARCHAR(255),
                    cbp VARCHAR(255),
                    tsp VARCHAR(255),
                    csp VARCHAR(255),
                    time VARCHAR(255)
                )
            ''')
            self.connection.commit()
        except Error as e:
            spider.logger.error(f"Error connecting to MySQL: {e}")

    def close_spider(self, spider):
        # The connection may not exist if open_spider failed
        connection = getattr(self, 'connection', None)
        if connection is not None and connection.is_connected():
            self.cursor.close()
            connection.close()

    def process_item(self, item, spider):
        try:
            self.cursor.execute('''
                INSERT INTO exchange_rates (currency, tbp, cbp, tsp, csp, time)
                VALUES (%s, %s, %s, %s, %s, %s)
            ''', (item['currency'], item['tbp'], item['cbp'], item['tsp'], item['csp'], item['time']))
            self.connection.commit()
        except Error as e:
            spider.logger.error(f"Error inserting item into MySQL: {e}")
        return item
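The pipeline commits once per item. A common alternative is to buffer rows and write them in batches; the hypothetical `BufferedRateWriter` below sketches that with Python's built-in `sqlite3` as a stand-in so it runs without a MySQL server (SQLite uses `?` placeholders where mysql.connector uses `%s`; mysql.connector cursors also provide `executemany()`). In a Scrapy pipeline, `add()` would be called from `process_item` and a final `flush()` from `close_spider`.

```python
import sqlite3


class BufferedRateWriter:
    """Hypothetical helper: collect rows and write them with executemany()
    every `batch_size` rows, instead of committing after each item."""

    def __init__(self, conn, batch_size=50):
        self.conn = conn
        self.batch_size = batch_size
        self.buffer = []

    def add(self, item):
        self.buffer.append((item["currency"], item["tbp"], item["cbp"],
                            item["tsp"], item["csp"], item["time"]))
        if len(self.buffer) >= self.batch_size:
            self.flush()

    def flush(self):
        # Write any buffered rows in one statement and one commit
        if self.buffer:
            self.conn.executemany(
                "INSERT INTO exchange_rates (currency, tbp, cbp, tsp, csp, time) "
                "VALUES (?, ?, ?, ?, ?, ?)",
                self.buffer,
            )
            self.conn.commit()
            self.buffer.clear()
```

The trade-off: fewer round trips and commits, but rows still in the buffer are lost if the process dies before the final `flush()`.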

The remaining files are similar to those of the previous task.

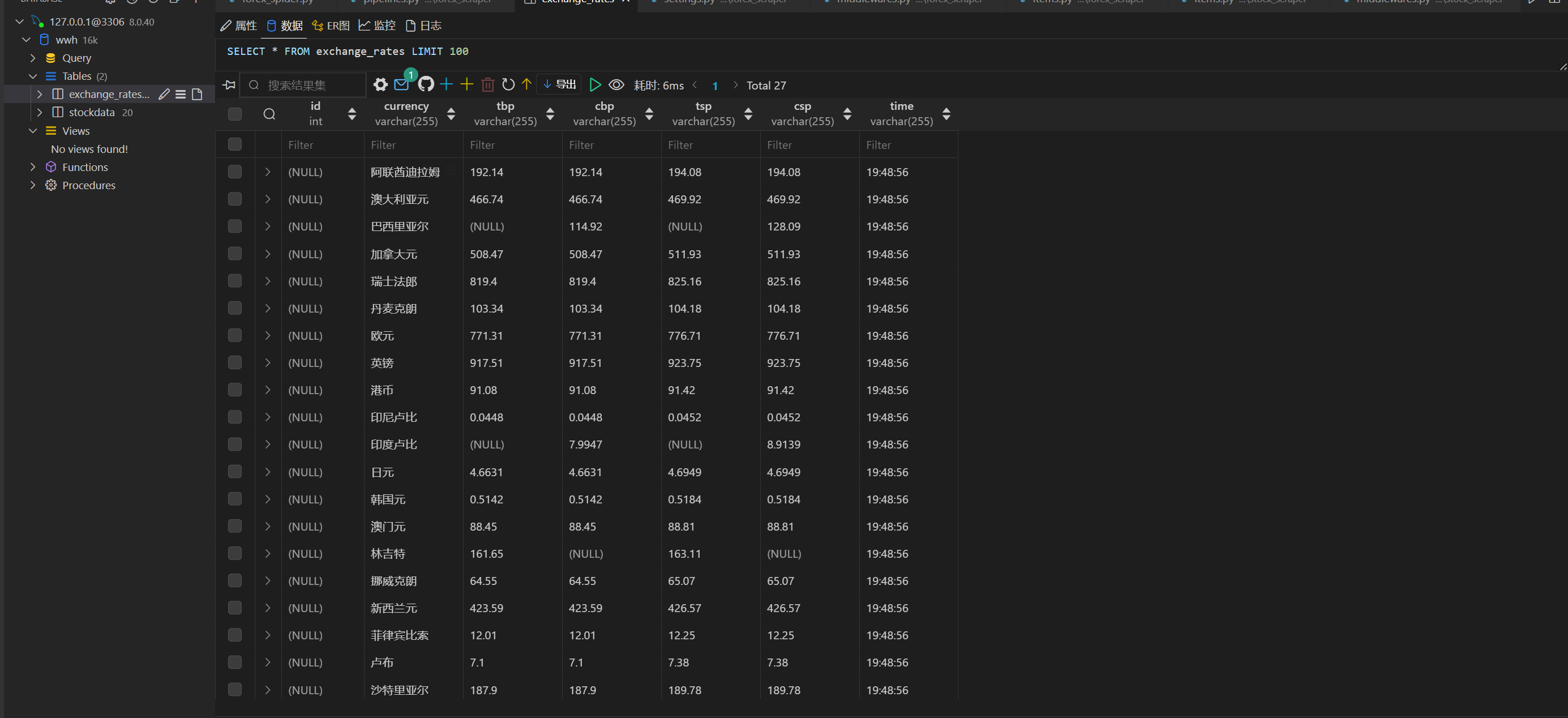

Crawl results:

(2) Reflections: My takeaways were essentially the same as for the previous task.