Data Collection and Fusion Technology: Assignment 6

Task ①:

Requirements:

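- Use the requests and BeautifulSoup libraries to crawl the Douban Movie Top250 data.

- Download each film's poster with multiple threads, naming each image after the film.

- Learn how to use regular expressions.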
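The crawler uses regular expressions to pull the director, lead actor, year, country and genre out of each film's info paragraph. A minimal sketch of that step on its own, assuming the info text is laid out like the hypothetical `SAMPLE_INFO` string (the real Douban markup may differ); `parse_info` is an illustrative helper, not part of the original code:

```python
import re

# Hypothetical sample of one entry's info text (names are placeholders);
# the layout is an assumption modeled on the Top250 list page.
SAMPLE_INFO = (
    "导演: 张三 Zhang San\xa0\xa0\xa0主演: 李四 Li Si / 王五\n"
    "1994\xa0/\xa0美国\xa0/\xa0犯罪 剧情"
)


def parse_info(info: str) -> dict:
    """Extract director, lead actor, year, country and genre with regexes."""
    # Non-greedy group: stop at the first space after the label
    director = re.search(r"导演: (.*?) ", info)
    actor = re.search(r"主演: (.*?) ", info)
    # The second line starts with the year; '.' does not cross the newline
    year_line = re.search(r"\d{4}.+", info)
    parts = [p.strip() for p in year_line.group(0).split("/")] if year_line else []
    return {
        "director": director.group(1) if director else "",
        "actor": actor.group(1) if actor else "",
        "year": parts[0] if len(parts) > 0 else "",
        "country": parts[1] if len(parts) > 1 else "",
        "genre": parts[2] if len(parts) > 2 else "",
    }


print(parse_info(SAMPLE_INFO)["year"])  # → 1994
```

`str.strip()` also removes the non-breaking spaces (`\xa0`) around the slashes, which is why no extra cleanup is needed.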

Code:

import datetime
import os
import re
import threading

import pymysql
import requests
from bs4 import BeautifulSoup

# Browser-like request header; Douban rejects requests without one
HEADERS = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) '
                  'AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.183 Safari/537.36'}
# Folder for the poster images
IMG_DIR = "E:/imgs/movies_imgs/"


class Myspiders:

    def get_html(self, start_url):
        res = requests.get(start_url, headers=HEADERS)
        res.encoding = res.apparent_encoding
        self.parse(res.text)

    def start(self):
        # Connect to MySQL (fill in your own password)
        self.con = pymysql.connect(host='127.0.0.1', port=3306, user='root', password='your_password',
                                   database='test', charset='utf8')
        self.cursor = self.con.cursor(pymysql.cursors.DictCursor)
        # Drop the table if it already exists, then create it again
        self.cursor.execute("drop table if exists movie")
        self.cursor.execute(
            "create table movie(mNo int, mName varchar(64), director varchar(64), actor varchar(64), "
            "time varchar(32), country varchar(64), type varchar(64), score varchar(16), num varchar(32), "
            "quote varchar(255), mFile varchar(128))")

    def parse(self, html):
        soup = BeautifulSoup(html, "html.parser")
        # Each film is one <li> inside the ranking list <ol>
        for li in soup.find('ol').find_all('li'):
            try:
                mNo = li.em.string
                mName = li.find('span').text
                info = li.find('p').text
                director = re.findall(r'导演: (.*?) ', info)
                actor = re.findall(r'主演: (.*?) ', info)
                # The "year / country / genre" line is the first one starting with digits
                array = re.findall(r'\d+.+', info)[0].split('/')
                year = array[0].strip()
                country = array[1].strip()
                genre = array[2].strip()
                rating = li.find('span', attrs={"class": "rating_num"})
                score = rating.text
                # The last <span> next to the score holds the "N人评价" vote count
                num = rating.parent.find_all('span')[-1].text
                # Some films have no one-line quote
                inq = li.find('span', attrs={"class": "inq"})
                quote = inq.text if inq else ''
                mFile = mName + ".jpg"
                # findall returns lists, so store the first match as a string
                self.cursor.execute(
                    "insert into movie(mNo,mName,director,actor,time,country,type,score,num,quote,mFile) "
                    "values(%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s)",
                    (mNo, mName, director[0] if director else '', actor[0] if actor else '',
                     year, country, genre, score, num, quote, mFile))
            except Exception as err:
                print(err)
        # Download every poster in the list, one thread per image, skipping repeated URLs
        for image in soup.select("ol img"):
            url = image.get('src')
            if not url or url in self.urls:
                continue
            self.urls.add(url)
            t = threading.Thread(target=self.download, args=(image.get('alt'), url))
            t.start()
            self.thread.append(t)

    def closeup(self):
        try:
            self.con.commit()
            self.con.close()
        except Exception as err:
            print(err)

    def download(self, pic_name, img):
        try:
            data = requests.get(img, headers=HEADERS, timeout=100).content
            # Replace characters that are not allowed in Windows file names
            file_name = re.sub(r'[\\/:*?"<>|]', '_', str(pic_name))
            with open(os.path.join(IMG_DIR, file_name + ".jpg"), "wb") as fobj:
                fobj.write(data)
        except Exception as err:
            print(err)

    def executespider(self, url):
        # Start crawling
        starttime = datetime.datetime.now()
        print("Start crawling")
        print("Connecting to the database")
        self.start()
        os.makedirs(IMG_DIR, exist_ok=True)
        self.thread = []
        self.urls = set()
        # Top250 has 10 pages of 25 films: start=0, 25, ..., 225
        for page in range(10):
            self.get_html(url + "?start=" + str(page * 25) + "&filter=")
        # Wait for every download thread before closing the database
        for t in self.thread:
            t.join()
        self.closeup()
        # Crawling finished
        print("Crawling finished!")
        endtime = datetime.datetime.now()  # measure how long the crawl took
        elapsed = (endtime - starttime).seconds
        print("Total time:", elapsed, "seconds")


def main():
    url = 'https://movie.douban.com/top250'
    spider = Myspiders()
    spider.executespider(url)


if __name__ == '__main__':
    main()
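The crawler above starts one thread per poster with no upper limit. A bounded alternative is `concurrent.futures.ThreadPoolExecutor`; the sketch below shows one way to do it and is not the author's code. `download_all`, `safe_filename` and the injected `fetch` callable are hypothetical names; passing `fetch` in keeps the function testable without network access.

```python
import os
import re
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable, List, Tuple

# Characters that Windows does not allow in file names
_ILLEGAL = re.compile(r'[\\/:*?"<>|]')


def safe_filename(name: str) -> str:
    """Replace illegal characters so a film title can be used as a file name."""
    return _ILLEGAL.sub("_", name).strip() or "untitled"


def download_all(images: Iterable[Tuple[str, str]],
                 fetch: Callable[[str], bytes],
                 out_dir: str,
                 max_workers: int = 8) -> List[str]:
    """Download (title, url) pairs concurrently and save each as <title>.jpg."""
    os.makedirs(out_dir, exist_ok=True)
    seen = set()
    jobs = []
    for title, url in images:
        if url in seen:  # skip duplicate URLs
            continue
        seen.add(url)
        jobs.append((title, url))

    def save(job: Tuple[str, str]) -> str:
        title, url = job
        path = os.path.join(out_dir, safe_filename(title) + ".jpg")
        with open(path, "wb") as fp:
            fp.write(fetch(url))
        return path

    # The pool caps concurrency; leaving the with-block waits for all workers
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(save, jobs))
```

In the crawler, `fetch` could be `lambda u: requests.get(u, headers=headers, timeout=10).content`, reusing the same `headers` dict as the page requests. `pool.map` returns the paths in the same order as the input jobs.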

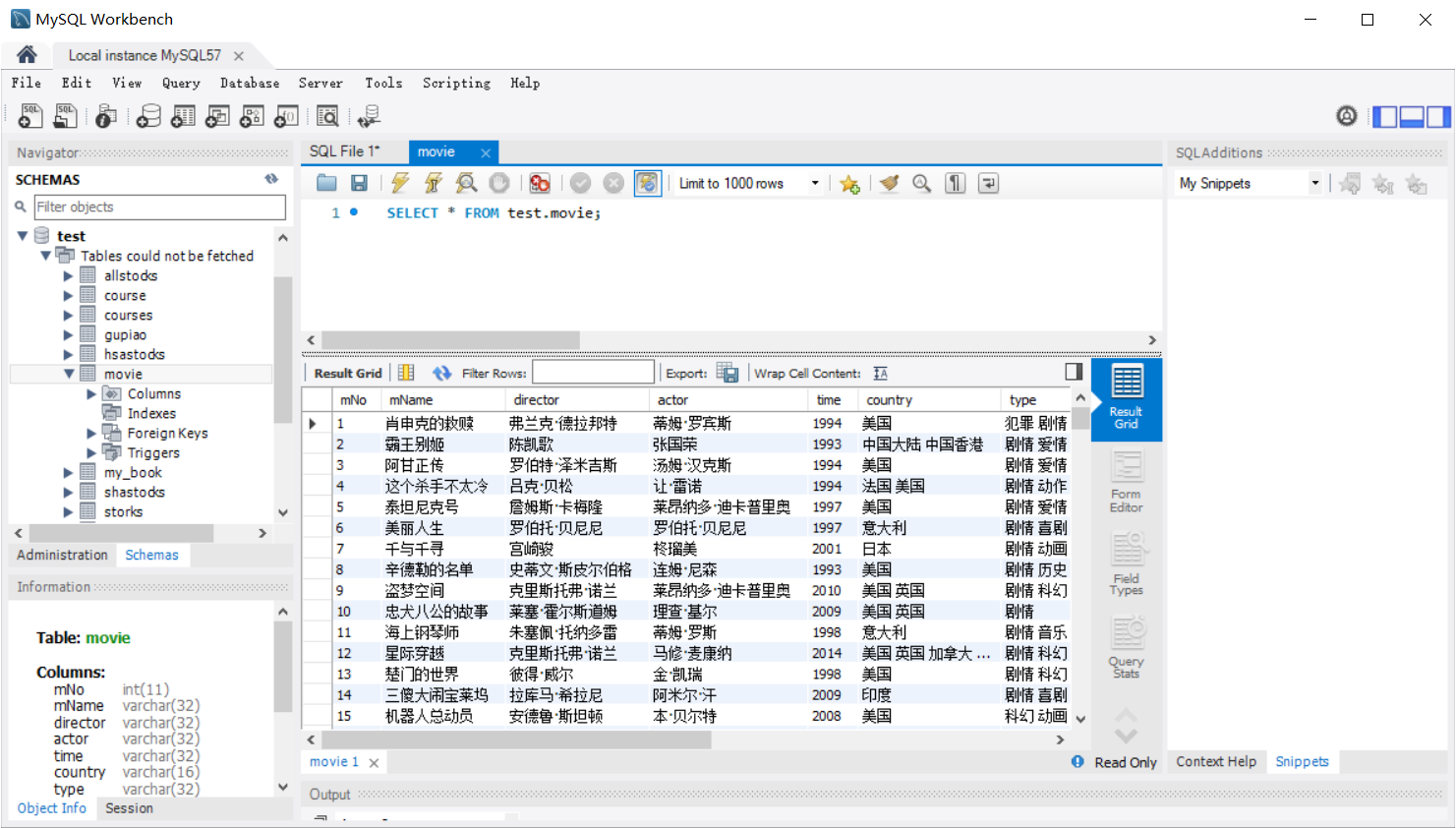

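Results:

Reflections: When I first started crawling, I ran into Douban's anti-scraping mechanism; after logging in to an account and trying again, it worked.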
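One way to reproduce the "log in and retry" fix in code is to send the logged-in browser's Cookie header along with the User-Agent and to pause between page requests. A minimal sketch, assuming the cookie string is copied by hand from the browser's developer tools; `top250_page_urls`, `build_headers` and `crawl_politely` are hypothetical helpers, and `fetch` is injected so the loop can be tested offline:

```python
import time
from typing import Callable, Dict, List


def top250_page_urls(base: str = "https://movie.douban.com/top250",
                     total: int = 250, per_page: int = 25) -> List[str]:
    """Top250 is split into pages of 25, addressed by start=0, 25, ..., 225."""
    return [f"{base}?start={start}&filter=" for start in range(0, total, per_page)]


def build_headers(cookie: str) -> Dict[str, str]:
    """Headers that make the request look like the logged-in browser."""
    return {
        "User-Agent": ("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
                       "(KHTML, like Gecko) Chrome/86.0.4240.183 Safari/537.36"),
        # Assumption: value copied from the browser after logging in
        "Cookie": cookie,
    }


def crawl_politely(fetch: Callable[[str, Dict[str, str]], str],
                   urls: List[str], headers: Dict[str, str],
                   delay: float = 2.0) -> List[str]:
    """Fetch pages one at a time, sleeping between consecutive requests."""
    pages = []
    for i, url in enumerate(urls):
        if i:
            time.sleep(delay)  # pause before every request except the first
        pages.append(fetch(url, headers))
    return pages
```

With requests, `fetch` could be `lambda u, h: requests.get(u, headers=h).text`. Whether a given delay is enough to avoid blocking is not something this sketch can guarantee.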

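Task ②

Requirements:

- Master serializing and outputting data through Items and Pipelines in Scrapy; use the Scrapy + XPath + MySQL storage approach to crawl the ShanghaiRanking (软科) ranking.

- Crawl the ShanghaiRanking university ranking, get each university's detail link, then open it to download and save the university logo and collect the official website URL, the introduction, and other information.

- universitys: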

import os
from urllib.parse import urljoin

import requests
import scrapy
from bs4 import BeautifulSoup, UnicodeDammit

from ..items import UniversityGetsItem


class UniversitysSpider(scrapy.Spider):
    name = 'universitys'
    # allowed_domains takes bare domain names, not URLs
    allowed_domains = ['www.shanghairanking.cn']
    start_urls = ['https://www.shanghairanking.cn/rankings/bcur/2020']

    def start_requests(self):
        for start_url in self.start_urls:
            yield scrapy.Request(url=start_url, callback=self.parse)

    def parse(self, response):
        dammit = UnicodeDammit(response.body, ["utf-8", "gbk"])
        selector = scrapy.Selector(text=dammit.unicode_markup)
        trs = selector.xpath("//div[@class='rk-table-box']/table/tbody/tr")
        path = 'E:/imgs/university/'
        os.makedirs(path, exist_ok=True)
        for tr in trs:
            try:
                no = tr.xpath("./td[position()=1]/text()").extract_first().strip()
                name = (tr.xpath("./td[position()=2]/a/text()").extract_first() or '').strip()
                href = tr.xpath("./td[position()=2]/a/@href").extract_first()
                city = tr.xpath("./td[position()=3]/text()").extract_first().strip()
                # The detail link is site-relative, so resolve it against the page URL
                detail_url = response.urljoin(href)
                html = requests.get(url=detail_url)
                newdata = UnicodeDammit(html.content, ['utf-8', 'gbk']).unicode_markup
                soup = BeautifulSoup(newdata, 'lxml')
                # Defaults, so the item can still be built if a field is missing
                url, brief, File = '', '', ''
                try:
                    url = soup.select("div[class='univ-website'] a")[0].text
                    # Download the logo and save it as <rank>.jpg
                    logo_src = soup.select("td[class='univ-logo'] img")[0]["src"]
                    File = str(no) + '.jpg'
                    logodata = requests.get(url=urljoin(detail_url, logo_src)).content
                    with open(os.path.join(path, File), 'wb') as fp:
                        fp.write(logodata)
                    brief = soup.select("div[class='univ-introduce'] p")[0].text
                except Exception as err:
                    print(err)
                item = UniversityGetsItem()
                item["sNo"] = no
                # Must match the field declared in items.py (schoolName)
                item["schoolName"] = name
                item["city"] = city
                item["officalUrl"] = url
                item["info"] = brief
                item["mFile"] = File
                yield item
            except Exception as err:
                print(err)
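The detail links in the ranking table are site-relative paths. Gluing them onto `'https://www.shanghairanking.cn/'` by string concatenation produces a double slash when the path already starts with `/`; `urllib.parse.urljoin` resolves them properly, and Scrapy's `Response.urljoin` does the same against the response URL. The hrefs below are hypothetical examples:

```python
from urllib.parse import urljoin

BASE = "https://www.shanghairanking.cn/rankings/bcur/2020"


def absolute(href: str, base: str = BASE) -> str:
    """Resolve a link found on the ranking page against the page URL."""
    return urljoin(base, href)


# Hypothetical hrefs of the kind found in the table and on detail pages
print(absolute("/institution/tsinghua-university"))
# → https://www.shanghairanking.cn/institution/tsinghua-university
print(absolute("https://example.com/logo.png"))
# → https://example.com/logo.png
```

Absolute URLs pass through unchanged, so the same call is safe for logo `src` attributes whether or not they are relative.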

- pipelines:

# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html

# useful for handling different item types with a single interface
from itemadapter import ItemAdapter
import pymysql


class UniversityGetsPipeline:

    def open_spider(self, spider):
        print("Connecting to the database")
        self.open = False
        try:
            # Fill in your own password
            self.con = pymysql.connect(host="localhost", port=3306, user="root", password='your_password',
                                       database='test', charset='utf8')
            self.cursor = self.con.cursor(pymysql.cursors.DictCursor)
            try:
                self.cursor.execute("drop table if exists university")
                sql = """create table university(
                    sNo varchar(32) primary key,
                    schoolName varchar(32),
                    city varchar(32),
                    officalUrl varchar(64),
                    info text,
                    mFile varchar(32)
                ) character set = utf8
                """
                self.cursor.execute(sql)
            except Exception as err:
                print(err)
                print("Failed to create the table")
            self.open = True
            self.count = 1
        except Exception as err:
            print(err)
            print("Failed to connect to the database")

    def process_item(self, item, spider):
        print(item['sNo'], item['schoolName'], item['city'], item['officalUrl'], item['info'], item['mFile'])
        if self.open:
            try:
                self.cursor.execute(
                    "insert into university (sNo,schoolName,city,officalUrl,info,mFile) "
                    "values(%s,%s,%s,%s,%s,%s)",
                    (item['sNo'], item['schoolName'], item['city'], item['officalUrl'], item['info'],
                     item['mFile']))
                self.count += 1
            except Exception as err:
                print(err)
                print("Failed to insert the row")
        else:
            print("Database not connected")
        return item

    def close_spider(self, spider):
        if self.open:
            self.con.commit()
            self.con.close()
            self.open = False
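The pipeline's pattern (create the table in `open_spider`, insert one parameterized row per item in `process_item`, commit in `close_spider`) can be tried without a MySQL server. A minimal sketch using the standard-library `sqlite3` module as a stand-in for pymysql; sqlite3 uses `?` placeholders where pymysql uses `%s`. `SqlitePipeline` is a hypothetical class, and plain dicts stand in for Scrapy items:

```python
import sqlite3


class SqlitePipeline:
    """Same open/process/close life cycle as a Scrapy item pipeline."""

    def __init__(self, path: str = ":memory:"):
        self.path = path

    def open_spider(self, spider=None):
        self.con = sqlite3.connect(self.path)
        self.con.execute("drop table if exists university")
        self.con.execute(
            "create table university(sNo text primary key, schoolName text, city text, "
            "officalUrl text, info text, mFile text)")

    def process_item(self, item, spider=None):
        # Parameterized insert: the driver escapes the values, not string formatting
        self.con.execute(
            "insert into university (sNo, schoolName, city, officalUrl, info, mFile) "
            "values (?, ?, ?, ?, ?, ?)",
            (item["sNo"], item["schoolName"], item["city"],
             item["officalUrl"], item["info"], item["mFile"]))
        return item  # pass the item on to any later pipeline

    def close_spider(self, spider=None):
        self.con.commit()
        self.con.close()
```

Returning the item from `process_item` mirrors what Scrapy expects, so later pipelines (if any) still receive it.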

- items:

import scrapy


class UniversityGetsItem(scrapy.Item):
    sNo = scrapy.Field()
    schoolName = scrapy.Field()
    city = scrapy.Field()
    officalUrl = scrapy.Field()
    info = scrapy.Field()
    mFile = scrapy.Field()
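As the template comment in the pipelines file says, the pipeline only runs once it is registered in `settings.py`. A minimal fragment, assuming the Scrapy project package is named `university_gets` (hypothetical; substitute the project's real package name):

```python
# settings.py (fragment)
# Assumption: the project package is called "university_gets"
ITEM_PIPELINES = {
    # Items pass through lower numbers first; values are customarily 0-1000
    "university_gets.pipelines.UniversityGetsPipeline": 300,
}
```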

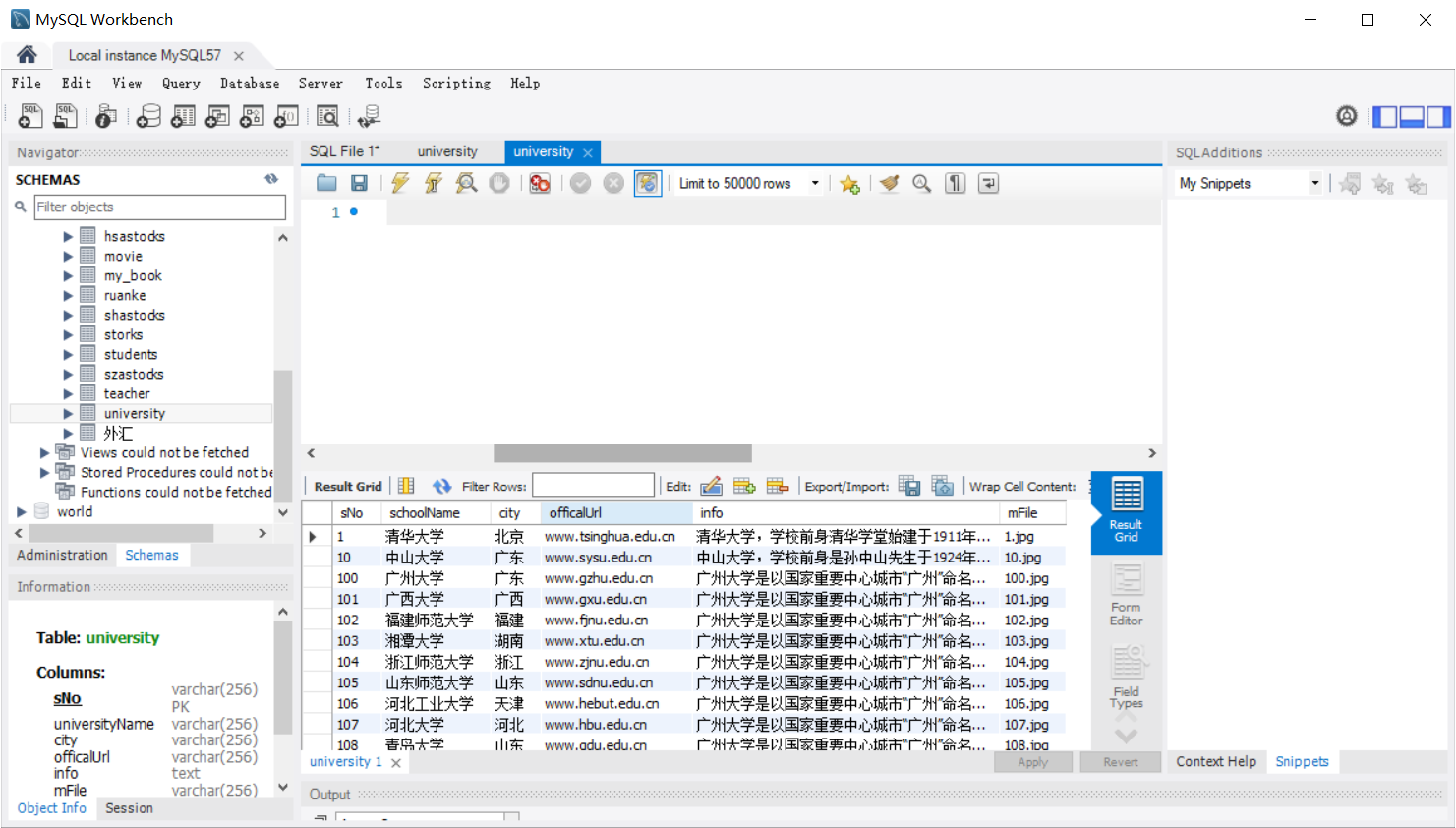

- Results:

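Task ③

Requirements:

- Master Selenium: locating HTML elements, scraping Ajax-loaded page data, waiting for HTML elements to load, and navigating between pages.

- Use Selenium + MySQL storage to simulate logging in to the MOOC site (icourse163), fetch the courses the student has taken in their own account, and save them in MySQL.

- Record a GIF of the simulated login step.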
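The crawler that follows waits with fixed `time.sleep` calls. Selenium's explicit waits (`WebDriverWait(driver, 10).until(...)` with conditions from `selenium.webdriver.support.expected_conditions`) instead poll until a condition holds or a timeout expires. The standard-library sketch below only illustrates that polling idea so it runs without a browser; `wait_until` is a hypothetical helper, not part of Selenium:

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def wait_until(condition: Callable[[], T], timeout: float = 10.0,
               poll: float = 0.5) -> T:
    """Call condition() until it returns a truthy value or timeout seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result  # e.g. the element that finally appeared
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} s")
        time.sleep(poll)
```

With Selenium itself, waiting for the login button would look like `WebDriverWait(driver, 10).until(EC.element_to_be_clickable((By.XPATH, '//*[@id="submitBtn"]')))`, which returns as soon as the button is clickable instead of always sleeping for a fixed time.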

Code:

import time

import pymysql
from selenium import webdriver
from selenium.webdriver.chrome.options import Options
from selenium.webdriver.common.by import By

# XPaths of the pager's "next" button (disabled on the last page)
NEXT_DISABLED = ('//ul[@class="ux-pager"]/li[@class="ux-pager_btn ux-pager_btn__next"]'
                 '/a[@class="th-bk-disable-gh"]')
NEXT_ENABLED = ('//ul[@class="ux-pager"]/li[@class="ux-pager_btn ux-pager_btn__next"]'
                '/a[@class="th-bk-main-gh"]')


class MOOC:

    def Load_in(self):
        time.sleep(1)
        # Click "Log in"
        self.driver.find_element(By.XPATH, '//*[@id="j-topnav"]/div').click()
        time.sleep(1)
        # Choose "other login methods"
        self.driver.find_element(By.XPATH, '//div[@class="ux-login-set-scan-code_ft"]/span').click()
        time.sleep(1)
        # Choose login by phone number
        self.driver.find_element(By.XPATH, '//ul[@class="ux-tabs-underline_hd"]/li[2]').click()
        time.sleep(1)
        frame = self.driver.find_element(
            By.XPATH, "/html/body/div[13]/div[2]/div/div/div/div/div/div[1]/div/div[1]/div[2]/div[2]/div/iframe")
        # Switch into the login pop-up's iframe
        self.driver.switch_to.frame(frame)
        # Fill in your own phone number and password
        self.driver.find_element(By.XPATH, '//input[@type="tel"]').send_keys('your_phone_number')
        time.sleep(1)
        self.driver.find_element(By.XPATH, '//input[@class="j-inputtext dlemail"]').send_keys('your_password')
        time.sleep(1)
        self.driver.find_element(By.XPATH, '//*[@id="submitBtn"]').click()
        # Return to the main document before working on the page again
        self.driver.switch_to.default_content()

    def MyClass(self):
        time.sleep(2)
        # Open the personal center
        self.driver.find_element(By.XPATH, '//*[@id="j-indexNav-bar"]/div/div/div/div/div[7]/div[3]/div').click()
        self.all_spider()

    def all_spider(self):
        # Scrape the current page, then turn pages until "next" is disabled
        while True:
            time.sleep(1)
            self.spider()
            time.sleep(1)
            if self.driver.find_elements(By.XPATH, NEXT_DISABLED):
                break  # last page reached
            next_btn = self.driver.find_elements(By.XPATH, NEXT_ENABLED)
            if not next_btn:
                break  # no pager: all courses fit on one page
            next_btn[0].click()

    def spider(self):
        time.sleep(1)
        cards = self.driver.find_elements(By.XPATH, '//div[@class="course-card-wrapper"]')
        for card in cards:
            time.sleep(1)
            card.click()
            # Window handles: the course list is 0, the newly opened page is 1
            window = self.driver.window_handles
            # Switch to the new page
            self.driver.switch_to.window(window[1])
            time.sleep(1)
            self.driver.find_element(By.XPATH, '//*[@id="g-body"]/div[3]/div/div[1]/div/a').click()
            # Re-read the handles and switch to the course introduction page
            window = self.driver.window_handles
            self.driver.switch_to.window(window[2])
            time.sleep(1)
            # Keep numbering across pages instead of restarting at 1
            self.course_id += 1
            course = self.driver.find_element(
                By.XPATH, '//*[@id="g-body"]/div[1]/div/div[3]/div/div[1]/div[1]/span[1]').text
            teacher = self.driver.find_element(By.XPATH, '//*[@id="j-teacher"]//h3[@class="f-fc3"]').text
            collage = self.driver.find_element(By.XPATH, '//*[@id="j-teacher"]/div/a/img').get_attribute('alt')
            process = self.driver.find_element(
                By.XPATH, '//*[@id="course-enroll-info"]/div/div[1]/div[2]/div[1]').text
            count = self.driver.find_element(By.XPATH, '//*[@id="course-enroll-info"]/div/div[2]/div[1]/span').text
            brief = self.driver.find_element(By.XPATH, '//*[@id="j-rectxt2"]').text
            self.cursor.execute("insert into mooc(id, course, teacher, collage, process, count, brief) "
                                "values(%s, %s, %s, %s, %s, %s, %s)",
                                (self.course_id, course, teacher, collage, process, count, brief))
            time.sleep(1)
            # Close this window and go back to the previous one
            self.driver.close()
            self.driver.switch_to.window(window[1])
            time.sleep(1)
            # Two new windows were opened, so close() is needed twice
            self.driver.close()
            self.driver.switch_to.window(window[0])

    def start(self):
        # Fill in your own password
        self.con = pymysql.connect(host='127.0.0.1', port=3306, user='root', password='your_password',
                                   database='test', charset='utf8')
        self.cursor = self.con.cursor(pymysql.cursors.DictCursor)
        # Create the table on the first run, then clear rows from earlier runs
        self.cursor.execute("create table if not exists mooc(id int, course varchar(32), teacher varchar(16), "
                            "collage varchar(32), process varchar(64), count varchar(64), brief text)")
        self.cursor.execute("delete from mooc")
        self.course_id = 0

    def stop(self):
        try:
            self.con.commit()
            self.con.close()
        except Exception as err:
            print(err)

    def executespider(self, url):
        chrome_options = Options()
        # Selenium 4 takes options=; the old chrome_options= keyword was removed
        self.driver = webdriver.Chrome(options=chrome_options)
        self.driver.get(url)
        self.start()
        self.Load_in()
        self.MyClass()
        self.stop()
        self.driver.quit()


def main():
    url = 'https://www.icourse163.org/'
    spider = MOOC()
    spider.executespider(url)


if __name__ == '__main__':
    main()
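The crawler picks windows by index (`window[1]`, `window[2]`), which assumes `window_handles` lists them in the order they were opened. A more defensive sketch records the handles before a click and takes whichever one is new afterwards; `new_handle` is a hypothetical helper, and the handle strings in the test are made up:

```python
from typing import Iterable


def new_handle(before: Iterable[str], after: Iterable[str]) -> str:
    """Return the single window handle present in `after` but not in `before`."""
    added = set(after) - set(before)
    if len(added) != 1:
        raise ValueError(f"expected exactly one new window, found {len(added)}")
    return added.pop()
```

With Selenium this could be used as `before = driver.window_handles; card.click(); driver.switch_to.window(new_handle(before, driver.window_handles))`. Because a new window may take a moment to appear, pairing this with an explicit wait is advisable.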

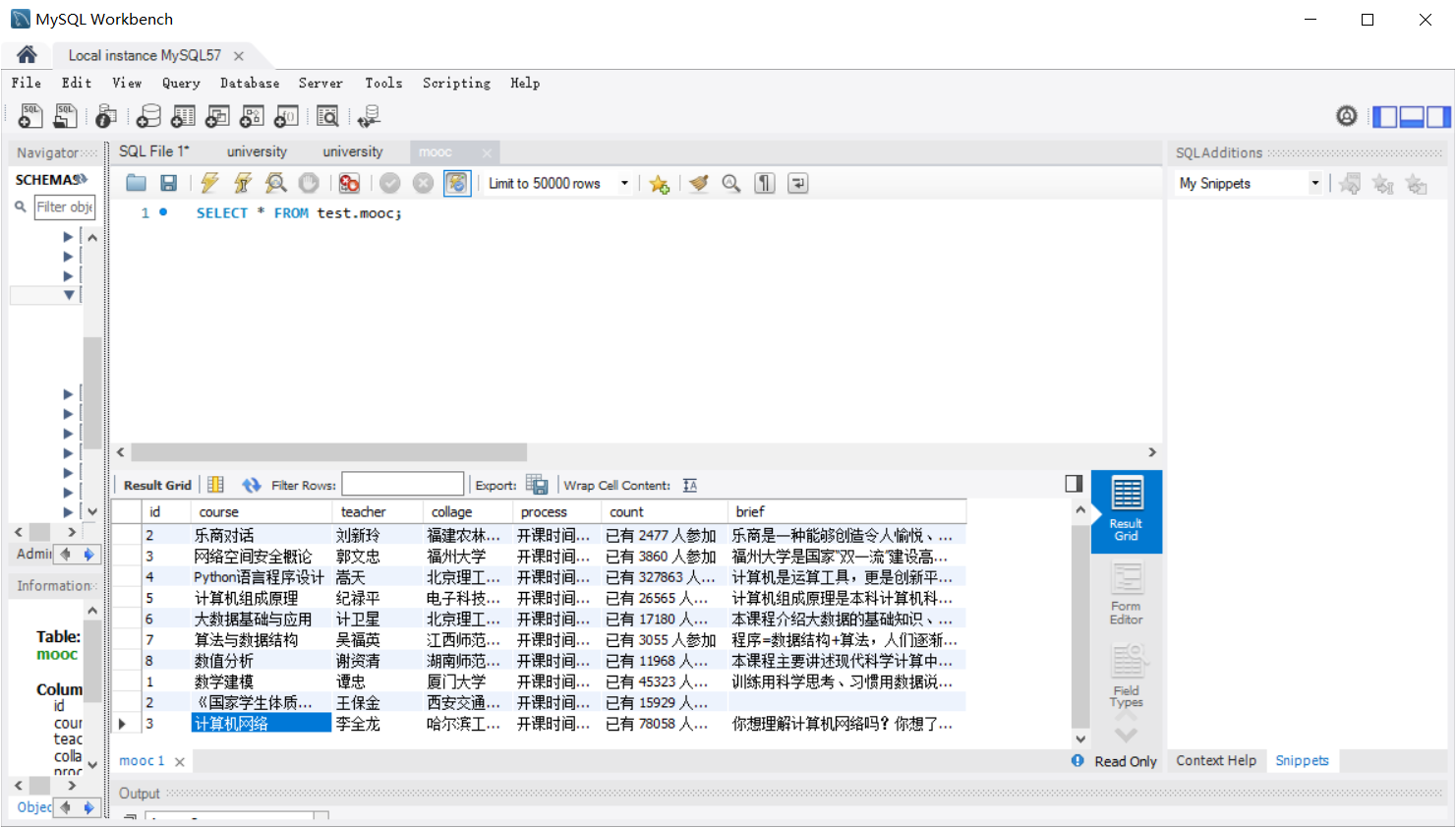

Results: