Introduction to Computer Vision

The article has several parts: getting started with OpenCV and TensorFlow, NumPy and matplotlib basics along the way, and finally a small stock-price example.

1. Installing TensorFlow 1.10 and OpenCV 3.3.1:

Install TensorFlow and OpenCV (the OpenCV package on PyPI is named opencv-python, not python-opencv):

pip install --upgrade --ignore-installed tensorflow==1.10 -i https://pypi.douban.com/simple/
pip install opencv-python -i https://pypi.douban.com/simple/

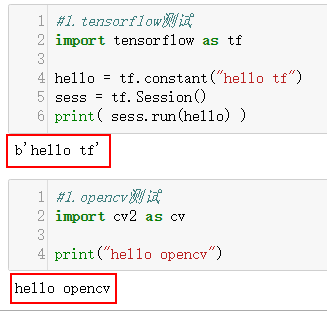

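Check that the installation worked:

#1. TensorFlow test
import tensorflow as tf
hello = tf.constant("hello tf")
sess = tf.Session()
print(sess.run(hello))  # b'hello tf' under Python 3
sess.close()

#2. OpenCV test
import cv2 as cv
print("hello opencv", cv.__version__)  # a successful import is the real test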

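2. Getting started with OpenCV:

#(1) Reading and displaying an image
import cv2
#What does imread do?
#1. reads the file data 2. parses the container format 3. decodes the data 4. loads it into memory
img = cv2.imread("image0.jpg", 1)  # 1. read the image; flag 1 = color, 0 = grayscale
cv2.imshow("image", img)  # 2. display it: window name, image data
cv2.waitKey(0)  # 3. keep the window open until a key is pressed (0 = wait indefinitely)
cv2.destroyAllWindows()  # close the window afterwards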

#(2) Writing an image
import cv2
img = cv2.imread("image0.jpg", 1)
cv2.imwrite("image_demo.jpg", img)  # arguments: 1. file name 2. image data

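#(3) Image quality: JPEG, lossy compression
import cv2
img = cv2.imread('image0.jpg', 1)
#JPEG quality is passed to imwrite as [cv2.IMWRITE_JPEG_QUALITY, value], with value from 0 to 100
#JPEG is lossy: a higher quality value gives a better image and a larger file (OpenCV's default is 95)
cv2.imwrite('image_demo1.jpg', img, [cv2.IMWRITE_JPEG_QUALITY, 50])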

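#(4) Image quality: PNG, lossless compression
import cv2
img = cv2.imread('image0.jpg', 1)
#For PNG, imwrite takes a compression level: [cv2.IMWRITE_PNG_COMPRESSION, level]
#The level ranges from 0 to 9. PNG is lossless, so the level never changes image quality:
#0 means no compression (largest file), 9 means maximum compression (smallest file, slowest to write)
cv2.imwrite('image_demo1.png', img, [cv2.IMWRITE_PNG_COMPRESSION, 0])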

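#(5) Pixel basics
#1. What is a pixel: an image is a grid of individual points called pixels
#2. Each pixel is made of three color channels: R, G, B
#3. Color depth: 8 bits per channel, so each channel ranges from 0 to 255
#4. Image width and height are measured in pixels
#5. Uncompressed size: width x height x 3 x 8 bits; divide by 8 for bytes (B), then by 1024 x 1024 for MB
#6. PNG images may also carry an alpha (transparency) channel: RGBA
#7. Besides RGB, the channels may be stored in BGR order (blue, green, red)
#OpenCV loads images in BGR order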
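The size formula and the BGR channel order can both be checked with NumPy, since cv2.imread returns a NumPy array of shape (height, width, 3). This sketch assumes a hypothetical 1920x1080 image for the arithmetic and uses a tiny zero-filled array in place of a real image; the helper name raw_size_mb is made up for illustration.

```python
import numpy as np


def raw_size_mb(width, height, channels=3, bits_per_channel=8):
    """Hypothetical helper: width x height x channels x bits, /8 -> bytes, /1024**2 -> MB."""
    total_bits = width * height * channels * bits_per_channel
    return total_bits / 8 / (1024 * 1024)


# Uncompressed size of a 1920x1080 image with 3 channels of 8 bits each
size_mb = raw_size_mb(1920, 1080)
print(round(size_mb, 2))  # 5.93

# Tiny stand-in for a cv2.imread result: shape (height, width, 3), dtype uint8, BGR order
img_bgr = np.zeros((2, 3, 3), dtype=np.uint8)
img_bgr[0, 0] = (255, 0, 0)  # in BGR order, (255, 0, 0) is pure blue
print(img_bgr.nbytes)  # 2 * 3 * 3 = 18 bytes, one byte per channel value

# Reversing the last (channel) axis turns BGR into RGB
img_rgb = img_bgr[:, :, ::-1]
print(img_rgb[0, 0].tolist())  # [0, 0, 255]: the blue value is now last
```

In OpenCV code, cv2.cvtColor(img, cv2.COLOR_BGR2RGB) performs the same conversion, which matters when passing an image to matplotlib, since matplotlib expects RGB.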

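#(6) Reading and writing pixels
#Index pixels as img[y, x], i.e. img[row, column]
import cv2
#1. read the image 2. read a pixel 3. write pixels
img = cv2.imread("image0.jpg", 1)
(b, g, r) = img[100, 100]  # the pixel at row 100, column 100, in BGR order
print(b, g, r)
#Draw a horizontal line on row 326, from column 325 to column 425
for i in range(325, 426):
    img[326, i] = (255, 0, 0)  # (255, 0, 0) is blue in BGR order
cv2.imshow('image', img)
cv2.waitKey(0)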
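The per-pixel loop works, but NumPy slicing writes the whole line in one assignment. The sketch below uses a zero-filled 500x500 array as a stand-in for image0.jpg (an assumption, so it runs without the file) and checks that the loop and the slice produce identical arrays. OpenCV's cv2.line(img, (325, 326), (425, 326), (255, 0, 0), 1) draws an equivalent segment, taking its points as (x, y).

```python
import numpy as np

# Stand-in for cv2.imread("image0.jpg", 1): 500x500 pixels, 3 channels (BGR), uint8
img_loop = np.zeros((500, 500, 3), dtype=np.uint8)
img_slice = img_loop.copy()

# Per-pixel loop from the tutorial: row 326, columns 325..425
for i in range(325, 426):
    img_loop[326, i] = (255, 0, 0)

# One slice assignment; the BGR tuple is broadcast to every pixel in the slice
img_slice[326, 325:426] = (255, 0, 0)

print(np.array_equal(img_loop, img_slice))     # True
print(int((img_slice[:, :, 0] == 255).sum()))  # 101 pixels were painted
```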

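3. Getting started with TensorFlow:

#(1) Defining constants and variables
import tensorflow as tf
data1 = tf.constant(2.5, dtype=tf.float32)  # constant(value, dtype)
data2 = tf.Variable(10, name="var")  # Variable(initial value, name)
print(data1)  # prints a description of the tensor, not its value
print(data2)
'''
sess = tf.Session()
init = tf.global_variables_initializer()  # variables must be initialized...
sess.run(init)  # ...by running the initializer in a session before they are used
print(sess.run(data1))
print(sess.run(data2))
sess.close()
#TensorFlow in essence = tensors + ops + a computation graph
#1. tensor = data, e.g. data1, data2
#2. op = operation, e.g. + - × ÷
#3. computation graph = the operations applied to the data
#session = the core of the interaction: it executes the graph
'''
#Tidier version: `with` closes the session automatically
sess = tf.Session()
init = tf.global_variables_initializer()  # variable initialization
with sess:
    sess.run(init)
    print(sess.run(data1))
    print(sess.run(data2))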
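The "build a graph first, run it in a session later" idea can be mimicked in a few lines of plain Python. This is a toy analogy, not how TensorFlow is implemented: the names Node, constant, add and run are hypothetical, with run playing the role of sess.run.

```python
class Node:
    """Hypothetical graph node: an op name, its input nodes and, for constants, a value."""

    def __init__(self, op, inputs=(), value=None):
        self.op = op
        self.inputs = inputs
        self.value = value


def constant(value):
    return Node("const", value=value)


def add(a, b):
    return Node("add", inputs=(a, b))


def run(node):
    """Stands in for sess.run: walk the graph recursively and compute the node's value."""
    if node.op == "const":
        return node.value
    if node.op == "add":
        return run(node.inputs[0]) + run(node.inputs[1])
    raise ValueError("unknown op: " + node.op)


data1 = constant(2.5)
data2 = constant(10)
total = add(data1, data2)     # only builds a node; nothing is computed yet
print(type(total).__name__)  # Node, not a number (compare print(data1) in TensorFlow)
print(run(total))            # 12.5
```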

#(2) Arithmetic on constants
import tensorflow as tf
sess = tf.Session()
data1 = tf.constant(2)
data2 = tf.constant(6)
dataAdd = tf.add(data1, data2)  # addition
dataSub = tf.subtract(data1, data2)  # subtraction
dataMul = tf.multiply(data1, data2)  # multiplication
dataDiv = tf.divide(data1, data2)  # division
with sess:
    print(sess.run(dataAdd))
    print(sess.run(dataSub))
    print(sess.run(dataMul))
    print(sess.run(dataDiv))
print('end')

#(3) Arithmetic with variables
import tensorflow as tf
sess = tf.Session()
data1 = tf.constant(2)
data2 = tf.Variable(6)  # a variable
dataAdd = tf.add(data1, data2)  # addition
#tf.assign stores the result of dataAdd back into data2
datacopy = tf.assign(data2, dataAdd)  # assign: dataAdd -> data2
dataSub = tf.subtract(data1, data2)  # subtraction
dataMul = tf.multiply(data1, data2)  # multiplication
dataDiv = tf.divide(data1, data2)  # division
#variable initialization
init = tf.global_variables_initializer()
with sess:
    sess.run(init)  # initialize the variables before using them
    print(sess.run(dataAdd))
    print(sess.run(dataSub))
    print(sess.run(dataMul))
    print(sess.run(dataDiv))
    print('sess.run(datacopy)', sess.run(datacopy))  # 8 (= 2 + 6) is now assigned to data2
    print('datacopy.eval()', datacopy.eval())  # datacopy.eval() runs datacopy in the default session, like sess.run(datacopy)
    #10, because datacopy = dataAdd = data1 + data2 and data2 is now 8, so 2 + 8 = 10
    #10 is also assigned to data2 at this point
    print('tf.get_default_session().run(datacopy)', tf.get_default_session().run(datacopy))
    #tf.get_default_session() returns the default session (here, the one opened by `with sess:`)
    #12 = 2 + 10
    #12 is also assigned to data2 at this point
    print('data2 is now', sess.run(data2))
print('end')
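The values 8, 10 and 12 follow from re-evaluating data1 + data2 on every run and writing the result back. This plain-Python trace mimics only the arithmetic of datacopy = tf.assign(data2, dataAdd); it does not use TensorFlow.

```python
data1 = 2
data2 = 6  # initial value of the variable

results = []
# sess.run(datacopy), datacopy.eval(), tf.get_default_session().run(datacopy)
for _ in range(3):
    data2 = data1 + data2  # dataAdd is recomputed from the current data2, then assigned to data2
    results.append(data2)

print(results)  # [8, 10, 12]
print(data2)    # 12, matching the final sess.run(data2)
```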

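#(4) Matrix operations in TensorFlow
#A matrix is a 2-D array with M rows and N columns
#[[row 1], [row 2], ...]: each inner list holds the column values of one row
#[[6, 6]] is one row with two columns
import tensorflow as tf
data1 = tf.constant([[6, 6]])
data2 = tf.constant([[2],
                     [2]])
data3 = tf.constant([[3, 3]])
data4 = tf.constant([[1, 2],
                     [3, 4],
                     [5, 6]])
print(data4.shape)  # matrix dimensions: (3, 2)
with tf.Session() as sess:
    #rows and columns are both indexed from 0
    print(sess.run(data4))  # the whole matrix
    print(sess.run(data4[0]))  # one row
    print(sess.run(data4[:, 0]))  # one column
    print(sess.run(data4[0, 0]))  # a single element (row 0, column 0)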

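#(5) Matrix addition and multiplication
import tensorflow as tf
data1 = tf.constant([[6, 6]])
data2 = tf.constant([[2],
                     [2]])
data3 = tf.constant([[3, 3]])
data4 = tf.constant([[1, 2],
                     [3, 4],
                     [5, 6]])
#Distinguish element-wise multiplication from matrix multiplication
matMul = tf.matmul(data1, data2)  # matrix product: (1x2)(2x1) -> 1x1
matMul2 = tf.multiply(data1, data2)  # element-wise product
matAdd = tf.add(data1, data3)  # matrix addition (element by element)
with tf.Session() as sess:
    print(sess.run(matMul))
    print(sess.run(matAdd))
    print(sess.run(matMul2))  # element-wise: the 1x2 and 2x1 operands are broadcast to 2x2
    print(sess.run([matMul, matMul2]))  # fetch several values in one run
print('end!')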
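NumPy follows the same rules, which makes the shapes easy to check without a session: `@` (np.matmul) is the matrix product, while `*` multiplies element by element and broadcasts a 1x2 and a 2x1 array up to 2x2. The arrays below mirror data1, data2 and data3 above.

```python
import numpy as np

data1 = np.array([[6, 6]])    # 1x2
data2 = np.array([[2], [2]])  # 2x1
data3 = np.array([[3, 3]])    # 1x2

mat_mul = data1 @ data2   # matrix product: (1x2)(2x1) -> 1x1, 6*2 + 6*2 = 24
elem_mul = data1 * data2  # element-wise with broadcasting: (1x2)*(2x1) -> 2x2
mat_add = data1 + data3   # element-wise addition: 1x2

print(mat_mul.tolist())   # [[24]]
print(elem_mul.tolist())  # [[12, 12], [12, 12]]
print(mat_add.tolist())   # [[9, 9]]
```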

#(6) Defining zero, all-ones, filled, evenly spaced and random matrices
import tensorflow as tf
#Method 1
mat0 = tf.constant([[0, 0], [0, 0]])  # 2x2 zero matrix
#Method 2
mat00 = tf.zeros([2, 2])  # 2x2 zero matrix
mat1 = tf.ones([2, 2])  # 2x2 matrix of all ones (not an identity matrix; tf.eye(2) builds that)
matt = tf.fill([2, 2], 15)  # 2x2 matrix filled with 15
matt2 = tf.zeros_like(matt)  # zero matrix with the same shape as matt
mat_line = tf.linspace(0.0, 2.0, 11)  # 11 evenly spaced values from 0 to 2, i.e. 10 equal intervals
mat_random = tf.random_uniform([2, 2], -1, 2)  # 2x2 random matrix, uniform in [-1, 2)
with tf.Session() as sess:
    print(sess.run(mat0))
    print(sess.run(mat00))
    print(sess.run(mat1))
    print(sess.run(matt))
    print(sess.run(matt2))
    print(sess.run(mat_line))
    print(sess.run(mat_random))
print('end!')
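For comparison, NumPy has direct counterparts of these constructors, and np.eye shows the difference between an all-ones matrix and a true identity matrix (ones on the diagonal only). The TensorFlow call each line corresponds to is noted in its comment.

```python
import numpy as np

zeros = np.zeros((2, 2))            # like tf.zeros([2, 2])
ones = np.ones((2, 2))              # like tf.ones([2, 2]): every entry is 1
identity = np.eye(2)                # identity matrix: 1 on the diagonal, 0 elsewhere (tf.eye(2))
filled = np.full((2, 2), 15)        # like tf.fill([2, 2], 15)
zeros_like = np.zeros_like(filled)  # like tf.zeros_like(matt)
line = np.linspace(0.0, 2.0, 11)    # like tf.linspace(0.0, 2.0, 11): 11 points, 10 gaps
rand = np.random.uniform(-1, 2, size=(2, 2))  # like tf.random_uniform([2, 2], -1, 2)

print(ones.tolist())      # [[1.0, 1.0], [1.0, 1.0]]
print(identity.tolist())  # [[1.0, 0.0], [0.0, 1.0]]
print(np.diff(line))      # ten gaps of 0.2, up to floating-point rounding
```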

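4. Getting started with NumPy:

#Using the numpy module
import numpy as np
data1 = np.array([1, 2, 3, 4, 5])  # 1-D array with 5 elements, shape (5,)
print(data1)
data2 = np.array([[1, 2],
                  [3, 4]])
print(data2)
print(data2.shape)  # (2, 2)
data3 = np.zeros([2, 2])  # all-zeros matrix
data4 = np.ones([2, 2])  # all-ones matrix
print(data3)
print(data4)
#Modifying and looking up elements
#rows and columns are indexed from 0
data2[1, 1] = 5  # change the bottom-right element
print(data2)
print(data2[1, 1])
#Basic matrix arithmetic
data5 = np.ones([2, 2])
print(data5 * 2)  # multiplies every element by 2
print(data5 / 2)  # divides every element by 2
#Operations between two matrices
data6 = np.array([[1, 2],
                  [3, 4]])
print(data5 + data6)  # element-wise addition
print(data5 * data6)  # element-wise multiplication (np.dot or @ gives the matrix product)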

5. Getting started with matplotlib:

#The matplotlib module: line charts (plot), pie charts, bar charts (bar)
import matplotlib.pyplot as plt
import numpy as np
#Line chart
x = np.array([1, 2, 3, 4, 5, 6, 7, 8])
y = np.array([2, 8, 7, 16, 5, 5, 7, 66])
plt.plot(x, y, 'b', lw=10)  # 1. x values 2. y values 3. color 4. line width
plt.show()
#Bar chart
x = np.array([1, 2, 3, 4, 5, 6, 7, 8])
y = np.array([2, 8, 7, 16, 5, 5, 7, 66])
plt.bar(x, y, 0.9, alpha=1, color='g')  # 1. x positions 2. bar heights 3. bar width 4. alpha (1 = fully opaque) 5. color
plt.show()
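The pie chart mentioned in the comment is not demonstrated. A minimal sketch with plt.pie, reusing the y values from the line and bar charts, might look like this. It assumes the non-interactive Agg backend and writes to an in-memory PNG so it runs without a display; in an interactive session, plt.show() would replace the savefig call.

```python
import io

import matplotlib
matplotlib.use("Agg")  # non-interactive backend: no window needed
import matplotlib.pyplot as plt
import numpy as np

x = np.array([1, 2, 3, 4, 5, 6, 7, 8])
y = np.array([2, 8, 7, 16, 5, 5, 7, 66])

fig, ax = plt.subplots()
# Each wedge's angle is proportional to y[i] / y.sum(); autopct writes the percentage on it
wedges = ax.pie(y, labels=[str(v) for v in x], autopct="%1.1f%%")[0]
ax.set_aspect("equal")  # keep the pie circular

buf = io.BytesIO()
fig.savefig(buf, format="png")
plt.close(fig)
print(len(wedges))  # 8 wedges, one per value
```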

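6. A small AI stock-price example:

First, a quick look at what a neural network is: it is organized as input layer -> hidden layer -> output layer.

Structure of the neural network in the stock example:

One point that puzzled me: why is b1 shaped [1,10] rather than [15,10], when A*w1 is already a 15x10 matrix? The answer is broadcasting. When a [1,10] bias is added to a [15,10] matrix, TensorFlow (like NumPy) repeats the bias row for all 15 rows, so every sample shares the same 10 hidden-unit biases. A bias belongs to a neuron, not to a sample, so one value per hidden unit is enough, and the network can then accept any number of input rows.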
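The broadcasting behaviour can be verified in NumPy, which follows the same rules as TensorFlow here. The sketch builds arrays with the shapes from the stock example (15x1 inputs, 1x10 weights and bias; the values themselves are made up) and checks that adding the 1x10 bias gives exactly the same result as adding 15 stacked copies of it.

```python
import numpy as np

A = np.linspace(0.0, 1.0, 15).reshape(15, 1)  # 15 samples, 1 feature (like date_t)
w1 = np.random.uniform(0, 1, size=(1, 10))    # 1x10 weights
b1 = np.arange(10).reshape(1, 10) * 0.1       # 1x10 bias, one value per hidden unit

B = A @ w1 + b1  # (15x1)(1x10) -> 15x10, then b1 is broadcast to every row
print(B.shape)   # (15, 10)

# Explicitly stacking 15 copies of b1 gives exactly the same result
b1_tiled = np.tile(b1, (15, 1))  # 15x10, all rows identical
print(np.array_equal(A @ w1 + b1_tiled, B))  # True
```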

Overall, training repeatedly applies gradient descent to find the best values of w1, w2, b1 and b2.
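To make "repeated gradient descent" concrete, here is a minimal plain-Python sketch on a much simpler model: a straight line y = a*x + b fitted by mean squared error. It is a simplified stand-in for what GradientDescentOptimizer does to w1, w2, b1 and b2, not the stock network itself; the toy data is generated from a = 2, b = 1, and the learning rate 0.1 matches the one used in the stock example.

```python
xs = [0.0, 0.25, 0.5, 0.75, 1.0]
ys = [2 * x + 1 for x in xs]  # toy data generated from a = 2, b = 1

a, b = 0.0, 0.0  # start from arbitrary parameters
lr = 0.1         # learning rate

for step in range(5000):
    n = len(xs)
    # residuals: prediction minus target
    errs = [(a * x + b) - y for x, y in zip(xs, ys)]
    # gradients of loss = mean(err^2) with respect to a and b
    grad_a = 2 / n * sum(e * x for e, x in zip(errs, xs))
    grad_b = 2 / n * sum(errs)
    # step against the gradient
    a -= lr * grad_a
    b -= lr * grad_b

loss = sum(((a * x + b) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)
print(round(a, 3), round(b, 3))  # 2.0 1.0
```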

Code:

#0. Import modules
import tensorflow as tf
import matplotlib.pyplot as plt
import numpy as np
#1. Prepare the data and plot it
date = np.linspace(1, 15, 15)  # day numbers 1..15
#closing prices
endPrice = np.array([2511.90, 2538.26, 2510.68, 2591.66, 2732.98,
                     2701.69, 2701.29, 2678.67, 2726.50, 2681.50, 2739.17, 2715.07, 2823.58, 2864.90, 2919.08])
#opening prices
beginPrice = np.array([2438.71, 2500.88, 2534.95, 2512.52, 2594.04, 2743.26, 2697.47, 2695.24,
                       2678.23, 2722.13, 2674.93, 2744.13, 2717.46, 2832.73, 2877.40])
print(date)

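#Plot
plt.figure()
for i in range(0, 15):
    #Draw each day as a thick vertical segment from the opening to the closing price
    #e.g. for day 1: x = [1, 1], y = [2438.71, 2511.90]
    dateOne = np.zeros([2])
    dateOne[0] = date[i]  # use the day number so the bars line up with the prediction curve
    dateOne[1] = date[i]
    priceOne = np.zeros([2])
    priceOne[0] = beginPrice[i]
    priceOne[1] = endPrice[i]
    if endPrice[i] > beginPrice[i]:
        plt.plot(dateOne, priceOne, 'r', lw=8)  # closed higher than it opened: red
    else:
        plt.plot(dateOne, priceOne, 'g', lw=8)  # closed lower or unchanged: green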

#2. Artificial neural network:
#A(15x1)*w1(1x10)+b1(1x10) = B(15x10); b1 is broadcast across all 15 rows
#B(15x10)*w2(10x1)+b2(1x1) = C(15x1); b2 is broadcast the same way
#2.1 Input layer
date_t = np.zeros([15, 1])
price_t = np.zeros([15, 1])
for i in range(0, 15):
    date_t[i, 0] = i / 14.0  # normalize the day index to [0, 1]
    price_t[i, 0] = endPrice[i] / 3000.0  # scale prices to values below 1
x = tf.placeholder(tf.float32, [None, 1])
y = tf.placeholder(tf.float32, [None, 1])
#2.2 Hidden layer
w1 = tf.Variable(tf.random_uniform([1, 10], 0, 1))
b1 = tf.Variable(tf.zeros([1, 10]))  # one bias per hidden unit, shared by every sample
wb1 = tf.matmul(x, w1) + b1
lay1 = tf.nn.relu(wb1)  # activation function
w2 = tf.Variable(tf.random_uniform([10, 1], 0, 1))
b2 = tf.Variable(tf.zeros([1, 1]))  # a single output bias; a [15, 1] bias would give each day its own offset and only accept exactly 15 inputs
wb2 = tf.matmul(lay1, w2) + b2
lay2 = tf.nn.relu(wb2)  # activation function
#Output layer
loss = tf.reduce_mean(tf.square(y - lay2))  # loss: mean squared difference between true and predicted values
train_step = tf.train.GradientDescentOptimizer(0.1).minimize(loss)

#3. Train and test
with tf.Session() as sess:
    sess.run(tf.global_variables_initializer())
    for i in range(10000):
        sess.run(train_step, feed_dict={x: date_t, y: price_t})
    #predict with the trained network, then undo the /3000 scaling
    nowp = sess.run(lay2, feed_dict={x: date_t})
    nowPrice = nowp * 3000
    print(nowPrice)
    plt.plot(date, nowPrice, 'b', lw=2)
    plt.show()

Result: