Python 动态网页Fetch/XHR爬虫——以获取NBA球员信息为例

Python 动态网页Fetch/XHR爬虫——以获取NBA球员信息为例

动态网页抓取信息,一般利用F12开发者工具-网络-Fetch/XHR获取信息,实现难点有:

-

-

动态网页的加载方式

-

获取请求Url

-

编排处理Headers

-

分析返回的数据Json

-

-

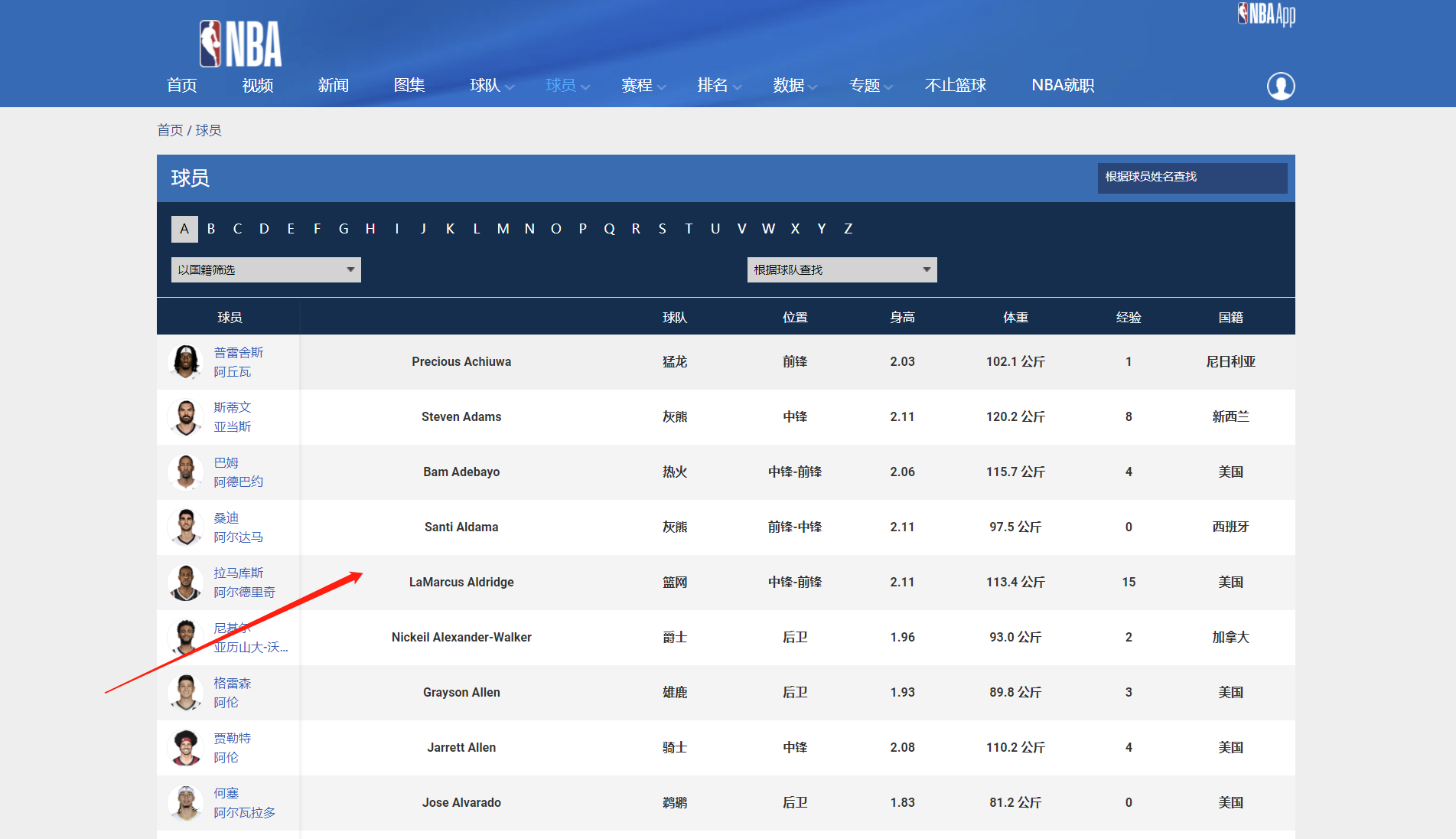

我们本次想获取的信息如下:

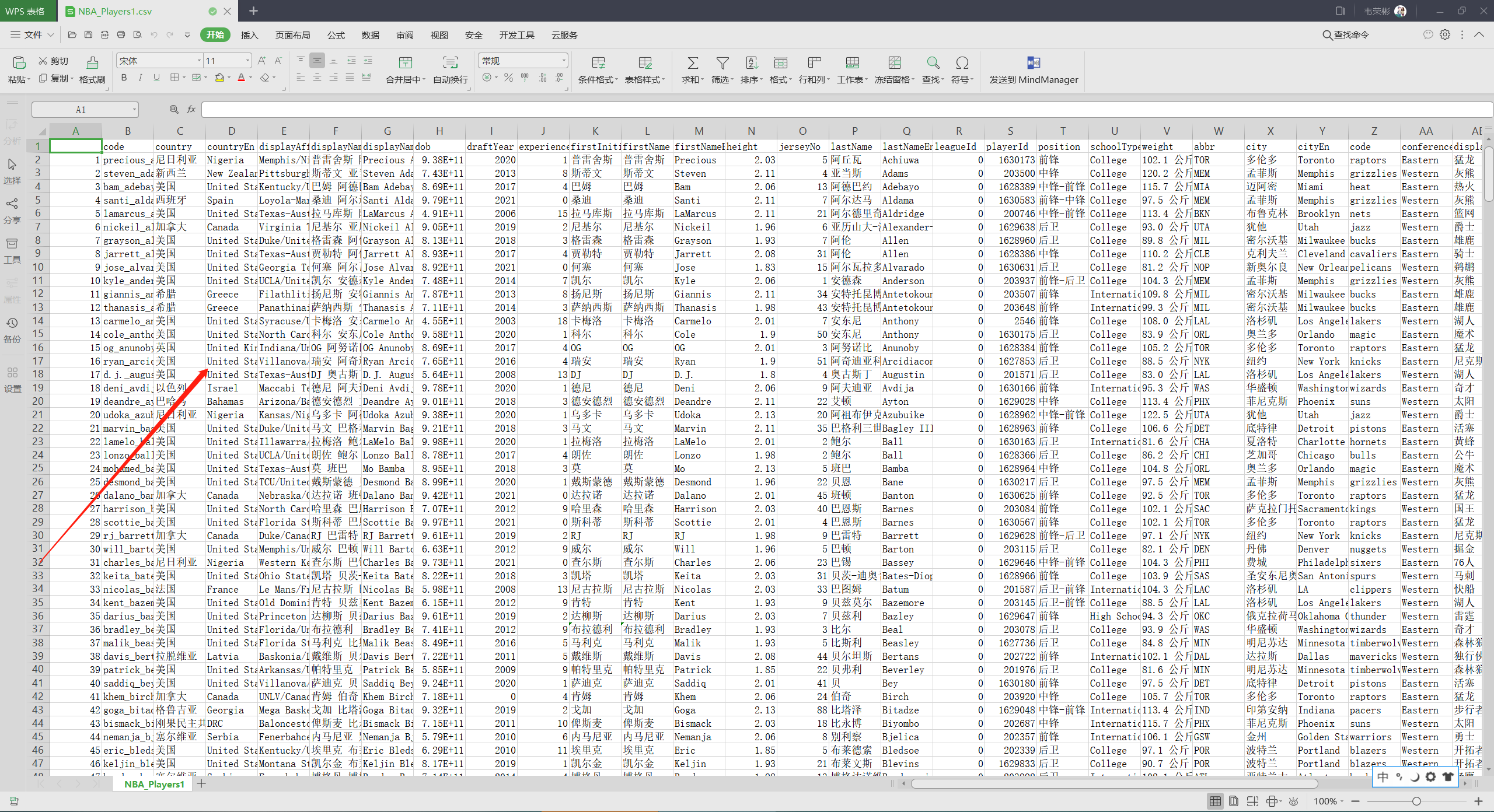

成功获取到的csv一共506位球员,具体如下:

实现代码:

import requests

import pandas as pd

def get_headers(header_raw):

return dict(line.split(": ", 1) for line in header_raw.split("\n") if line != '')

# 设置headers

headers_str = '''

accept: application/json, text/plain, */*

accept-encoding: gzip, deflate, br

accept-language: zh-CN,zh;q=0.9

referer: https://china.nba.cn/playerindex/

sec-ch-ua: " Not A;Brand";v="99", "Chromium";v="96", "Google Chrome";v="96"

sec-ch-ua-mobile: ?0

sec-ch-ua-platform: "Windows"

sec-fetch-dest: empty

sec-fetch-mode: cors

sec-fetch-site: same-origin

cookie: sensorsdata2015jssdkcross=%7B%22distinct_id%22%3A%22182d0029f842fc-0d281a685dd4e08-4303066-2400692-182d0029f85406%22%2C%22first_id%22%3A%22%22%2C%22props%22%3A%7B%22%24latest_traffic_source_type%22%3A%22%E7%9B%B4%E6%8E%A5%E6%B5%81%E9%87%8F%22%2C%22%24latest_search_keyword%22%3A%22%E6%9C%AA%E5%8F%96%E5%88%B0%E5%80%BC_%E7%9B%B4%E6%8E%A5%E6%89%93%E5%BC%80%22%2C%22%24latest_referrer%22%3A%22%22%7D%2C%22identities%22%3A%22eyIkaWRlbnRpdHlfY29va2llX2lkIjoiMTgyZDAwMjlmODQyZmMtMGQyODFhNjg1ZGQ0ZTA4LTQzMDMwNjYtMjQwMDY5Mi0xODJkMDAyOWY4NTQwNiJ9%22%2C%22history_login_id%22%3A%7B%22name%22%3A%22%22%2C%22value%22%3A%22%22%7D%2C%22%24device_id%22%3A%22182d0029f842fc-0d281a685dd4e08-4303066-2400692-182d0029f85406%22%7D; privacyV2=true; i18next=zh_CN; locale=zh_CN

user-agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/96.0.4664.110 Safari/537.36

'''

headers = get_headers(headers_str)

# print(headers)

# requests请求

param = {'locale': 'zh_CN'}

url = 'https://china.nba.cn/stats2/league/playerlist.json'

response = requests.get(url=url, headers=headers, params=param)

print('返回状态码:', response.status_code)

print('编码:', response.encoding)

# json解码成字典

myjson = response.json()

# 保存为pandas DataFrame

# print(players_dicts['playerProfile'])

# print(players_dicts['teamProfile'])

# 遍历选手信息

players_info = []

for players_dicts in myjson['payload']['players']:

players_info.append(pd.DataFrame([players_dicts['playerProfile']]))

# 遍历队伍简介信息

teams_info = []

for players_dicts in myjson['payload']['players']:

teams_info.append(pd.DataFrame([players_dicts['teamProfile']]))

# 得到两个DataFrame

players_pandas = pd.concat(players_info)

teams_pandas = pd.concat(teams_info)

# 合并得到最终DataFrame

result = pd.concat([players_pandas, teams_pandas], axis=1)

result.to_csv(r'C:\Users\WeiRonbbin\Desktop\NBA_Players1.csv')WeiRonbbin 😉

浙公网安备 33010602011771号

浙公网安备 33010602011771号