朴素贝叶斯实现

1 朴素贝叶斯自编程实现

import numpy as np import pandas as pd %config ZMQInteractiveShell.ast_node_interactivity='all'

class NaiveBayes(): def __init__(self, lambda_): self.lambda_ = lambda_ # 贝叶斯系数,取0时,即为极大似然估计 self.y_types_count = None # y的(类型:数量) self.y_types_proba = None # y的(类型:概率) self.x_types_proba = dict() # (xi 的编号,xi 的取值,y 的类型):概率 def fit(self, X_train, y_train): self.y_types = np.unique(y_train) # np.unique()去除数组中重复数字并排序输出,得到 y 的所有取值类型 X = pd.DataFrame(X_train) # 转换成pandas DataFrame格式,下同 y = pd.DataFrame(y_train) # y的(类型:数量)统计 1 : 9 -1 : 6 self.y_types_count = y[0].value_counts() # y的(类型:概率)计算 self.y_types_proba = (self.y_types_count + self.lambda_) / (y.shape[0] + len(self.y_types) * self.lambda_) # (xi 的编号, xi的取值,y的类型):概率的计算 for idx in X.columns: # 遍历xi for j in self.y_types: # 选取每一个y的类型 # 选择所有y==j为真的数据点的第idx个特征的值,并对这些值进行(类型:数量)统计 p_x_y = X[(y == j).values][idx].value_counts() # 计算(xi 的编号,xi的取值,y的类型):概率 for i in p_x_y.index: self.x_types_proba[(idx, i, j)] = (p_x_y[i] + self.lambda_) / (self.y_types_count[j] + p_x_y.shape[0] * self.lambda_) def predict(self, X_new): res = [] for y in self.y_types: # 遍历y的可能取值 p_y = self.y_types_proba[y] # 计算y的先验概率 P(Y=ck) p_xy = 1 for idx, x in enumerate(X_new): p_xy *= self.x_types_proba[(idx, x, y)] # 计算P(x = (x1,x2,...xd) / Y = ck) res.append(p_y * p_xy) for i in range(len(self.y_types)): print("[{}]对应的概率:{:.2%}".format(self.y_types[i], res[i])) #返回最大后验概率对应的y值 return self.y_types[np.argmax(res)]

def main(): X_train = np.array([ [1,"S"], [1,"M"], [1,"M"], [1,"S"], [1,"S"], [2,"S"], [2,"M"], [2,"M"], [2,"L"], [2,"L"], [3,"L"], [3,"M"], [3,"M"], [3,"L"], [3,"L"] ]) y_train = np.array([-1,-1,1,1,-1,-1,-1,1,1,1,1,1,1,1,-1]) clf = NaiveBayes(lambda_ = 0.2) clf.fit(X_train, y_train) X_new = np.array([2, 'S']) y_predict = clf.predict(X_new) print("{}被分类为:{}".format(X_new, y_predict)) if __name__ == '__main__': main()

2 朴素贝叶斯的sklearn实现

import numpy as np from sklearn.naive_bayes import GaussianNB, BernoulliNB, MultinomialNB from sklearn import preprocessing # 预处理

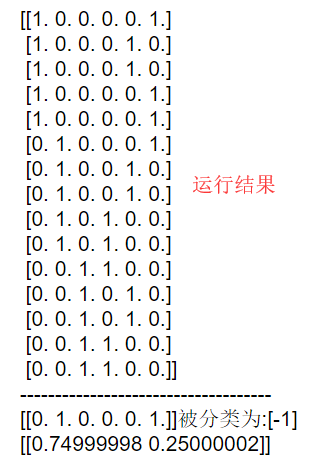

def main(): X_train=np.array([ [1,"S"], [1,"M"], [1,"M"], [1,"S"], [1,"S"], [2,"S"], [2,"M"], [2,"M"], [2,"L"], [2,"L"], [3,"L"], [3,"M"], [3,"M"], [3,"L"], [3,"L"] ]) y_train=np.array([-1,-1,1,1,-1,-1,-1,1,1,1,1,1,1,1,-1]) enc = preprocessing.OneHotEncoder(categories='auto') enc.fit(X_train) X_train = enc.transform(X_train).toarray() print(X_train) clf = MultinomialNB(alpha=0.0000001) clf.fit(X_train, y_train) X_new = np.array([[2, 'S']]) X_new = enc.transform(X_new).toarray() y_predict = clf.predict(X_new) print("------------------------------------") print("{}被分类为:{}".format(X_new,y_predict)) print(clf.predict_proba(X_new)) if __name__ == '__main__': main()

参考:

[1] 深度之眼统计学习方法集训营课后练习

[2] 《统计学习方法》李航