Installing and reinstalling k8s 1.15.0

CentOS 7.6 system configuration

The script below is written for node1. On node2, change the hostname; everything else is identical.

Run the following script on all nodes.

# Set the hostname

echo "node1" > /etc/hostname

hostnamectl set-hostname node1

# Install nslookup and ifconfig

# nslookup

yum install -y bind-utils

# ifconfig

yum install -y net-tools.x86_64

# Disable the firewall

systemctl stop firewalld

systemctl disable firewalld

# Disable SELinux

setenforce 0

# Rewrite the existing SELINUX= line rather than appending a second one

sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

# Create /etc/sysctl.d/k8s.conf and add the settings

rm -f /etc/sysctl.d/k8s.conf

touch /etc/sysctl.d/k8s.conf

echo net.bridge.bridge-nf-call-ip6tables=1 >> /etc/sysctl.d/k8s.conf

echo net.bridge.bridge-nf-call-iptables=1 >> /etc/sysctl.d/k8s.conf

echo net.ipv4.ip_forward=1 >> /etc/sysctl.d/k8s.conf

# Apply the changes

modprobe br_netfilter

sysctl -p /etc/sysctl.d/k8s.conf

# Prerequisites for running kube-proxy in ipvs mode

cat > /etc/sysconfig/modules/ipvs.modules <<EOF
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack_ipv4
EOF

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules

yum install -y ipset

yum install -y ipvsadm
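To confirm that the modules above were actually loaded, a check along the following lines can help. This is a minimal sketch: the function name missing_ipvs_modules is hypothetical, and it assumes loaded modules are listed one per line with the module name in the first field, as in /proc/modules.

```shell
# Print each required module that is NOT listed in a /proc/modules-style file.
# missing_ipvs_modules is a hypothetical helper name, not part of any tool.
missing_ipvs_modules() {
    modules_file="${1:-/proc/modules}"
    for mod in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4; do
        # The module name is the first whitespace-separated field of each line
        if ! awk -v m="$mod" '$1 == m { found = 1 } END { exit !found }' "$modules_file"; then
            echo "$mod"
        fi
    done
}

# Usage: no output means every required module is loaded
# missing_ipvs_modules
```

Matching on the first field only avoids false positives from the dependency column, where ip_vs_rr and similar names also appear.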

# Install Docker and switch its cgroup driver to systemd

yum install -y yum-utils \
    device-mapper-persistent-data \
    lvm2

yum install -y wget

cd /etc/yum.repos.d/

wget https://download.docker.com/linux/centos/docker-ce.repo

yum install -y --setopt=obsoletes=0 docker-ce-18.09.7-3.el7

systemctl start docker

systemctl enable docker

# Change Docker's cgroup driver to systemd on every node; the default is cgroupfs.

# Create or overwrite /etc/docker/daemon.json

rm -f /etc/docker/daemon.json

touch /etc/docker/daemon.json

echo -e '{\n"exec-opts": ["native.cgroupdriver=systemd"]\n}' > /etc/docker/daemon.json

# Restart Docker

systemctl restart docker

# Configure the yum repository for kubeadm

rm -f /etc/yum.repos.d/kubernetes.repo

cat > /etc/yum.repos.d/kubernetes.repo <<EOF
[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

# Install kubelet, kubeadm and kubectl 1.15.0

yum makecache fast

# From kubernetes-cni 1.0.0 onward, the /opt/cni/bin directory it creates no longer contains the flannel plugin, so pin 0.8.6

yum install -y kubernetes-cni-0.8.6 kubelet-1.15.0 kubeadm-1.15.0 kubectl-1.15.0

# Enable the kubelet service at boot on every node

systemctl enable kubelet.service

# Turn off swap

swapoff -a

# Comment out the swap entries so swap is not mounted at boot

sed -i 's/.*swap.*/#&/g' /etc/fstab

echo vm.swappiness=0 >> /etc/sysctl.d/k8s.conf

sysctl -p /etc/sysctl.d/k8s.conf
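Run a second time, the sed command above puts another '#' in front of lines that are already commented, and the echo appends a duplicate vm.swappiness line. The helpers below are a minimal sketch of rerun-safe variants. The names comment_swap_entries and ensure_line are hypothetical, and the sketch assumes GNU sed (as shipped with CentOS) and an fstab whose swap entries contain "swap" as a whitespace-delimited field.

```shell
# Hypothetical helpers; the names are illustrative, not from any tool.

# Comment out active swap entries only. Lines that already start with '#'
# are skipped, so a second run does not add another '#'.
comment_swap_entries() {
    fstab="${1:-/etc/fstab}"
    sed -i '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}

# Append a line to a file only if that exact line is not already present.
ensure_line() {
    line="$1"
    file="$2"
    grep -qxF -- "$line" "$file" 2>/dev/null || echo "$line" >> "$file"
}

# Usage:
# comment_swap_entries /etc/fstab
# ensure_line "vm.swappiness=0" /etc/sysctl.d/k8s.conf
```

Both functions take the file path as an argument, so they can be tried on a copy before touching the real files.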

# Pull the pause image

docker pull registry.aliyuncs.com/google_containers/pause:3.1

docker tag registry.aliyuncs.com/google_containers/pause:3.1 k8s.gcr.io/pause:3.1

docker rmi registry.aliyuncs.com/google_containers/pause:3.1

# Pull the kube-proxy image

docker pull registry.aliyuncs.com/google_containers/kube-proxy:v1.15.0

docker tag registry.aliyuncs.com/google_containers/kube-proxy:v1.15.0 k8s.gcr.io/kube-proxy:v1.15.0

docker rmi registry.aliyuncs.com/google_containers/kube-proxy:v1.15.0

Deploying k8s with kubeadm and joining nodes

Initializing the cluster with kubeadm init

Run the following script on the control-plane node.

rm -f kubeadm.yaml

cat > kubeadm.yaml <<EOF
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  # IP address of the master node
  advertiseAddress: 192.168.3.58
  bindPort: 6443
nodeRegistration:
  taints:
  - effect: PreferNoSchedule
    key: node-role.kubernetes.io/master
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.15.0
networking:
  podSubnet: 10.244.0.0/16
EOF

# Pull the images

# k8s.gcr.io/kube-apiserver:v1.15.0

# k8s.gcr.io/kube-controller-manager:v1.15.0

# k8s.gcr.io/kube-scheduler:v1.15.0

# k8s.gcr.io/etcd:3.3.10

# k8s.gcr.io/coredns:1.3.1

images=(kube-apiserver:v1.15.0 kube-controller-manager:v1.15.0 kube-scheduler:v1.15.0
        etcd:3.3.10 coredns:1.3.1)

# Pull each image from the Aliyun mirror, retag it as k8s.gcr.io, then drop the mirror tag
for imageName in "${images[@]}"; do
    docker pull "registry.aliyuncs.com/google_containers/$imageName"
    docker tag "registry.aliyuncs.com/google_containers/$imageName" "k8s.gcr.io/$imageName"
    docker rmi "registry.aliyuncs.com/google_containers/$imageName"
done
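The same pull, tag and rmi sequence is used for the pause and kube-proxy images earlier. A small helper can generate those commands for review before anything is executed. This is a minimal sketch: mirror_commands is a hypothetical name, and the mirror and target registries are the ones used in this article.

```shell
# Print the pull/tag/rmi commands for each image instead of running them.
# mirror_commands is a hypothetical helper name.
mirror_commands() {
    mirror="registry.aliyuncs.com/google_containers"
    for image in "$@"; do
        echo "docker pull $mirror/$image"
        echo "docker tag $mirror/$image k8s.gcr.io/$image"
        echo "docker rmi $mirror/$image"
    done
}

# Review the output first, then pipe it to a shell to execute it:
# mirror_commands etcd:3.3.10 coredns:1.3.1
# mirror_commands etcd:3.3.10 coredns:1.3.1 | sh -e
```

Printing the commands rather than running them keeps the helper free of side effects; piping to sh -e stops at the first failed pull.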

kubeadm init --config kubeadm.yaml

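# Configure kubectl access for a regular user on the master

# The k8s API requires authenticated, encrypted access. Copy the cluster's admin kubeconfig into the current user's .kube directory; kubectl reads its credentials from there by default.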

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

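sudo chown $(id -u):$(id -g) $HOME/.kube/config

Joining nodes

# Print the join command, including a token, on the control-plane node

kubeadm token create --print-join-command

# Run the printed command on each node to be joined; the address is the control-plane node's IP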

kubeadm join 192.168.3.58:6443 --token ...

Installing the flannel container network

echo "199.232.68.133 raw.githubusercontent.com" >> /etc/hosts

kubectl -n kube-system apply -f https://raw.githubusercontent.com/coreos/flannel/bc79dd1505b0c8681ece4de4c0d86c5cd2643275/Documentation/kube-flannel.yml

Enabling ipvs in kube-proxy

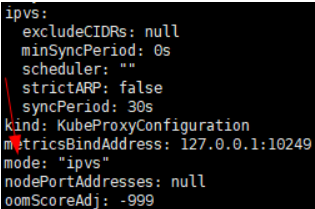

Edit config.conf in the kube-proxy ConfigMap:

kubectl edit cm kube-proxy -n kube-system

Change mode to ipvs.

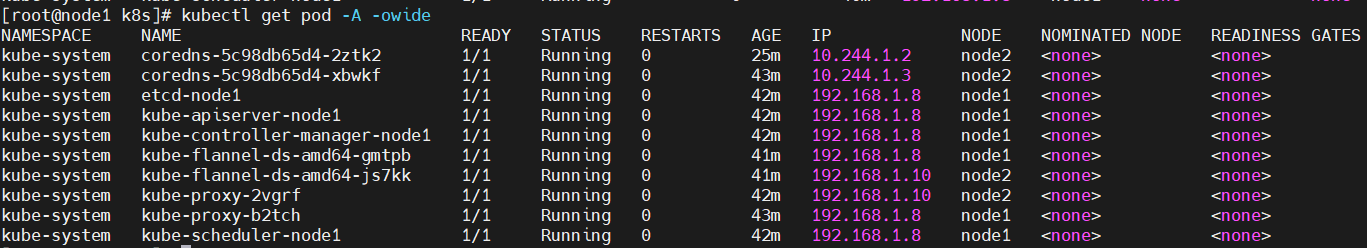
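The interactive edit can also be expressed as a text filter. The sketch below assumes the setting appears in config.conf as a line of the form mode: "<value>" (an empty string by default in kubeadm-generated configuration, as far as I know); set_ipvs_mode is a hypothetical helper name.

```shell
# Rewrite the kube-proxy "mode" setting to "ipvs" in YAML read from stdin.
# set_ipvs_mode is a hypothetical helper; it assumes the setting is written
# as a line of the form:   mode: "<value>"
set_ipvs_mode() {
    # Keep the original indentation (\1) and replace only the quoted value
    sed 's/^\([[:space:]]*\)mode: ".*"/\1mode: "ipvs"/'
}

# Possible non-interactive use (not run here; check the output before applying):
# kubectl get cm kube-proxy -n kube-system -o yaml | set_ipvs_mode | kubectl apply -f -
```

Running the filter on its own and inspecting the result before piping it to kubectl apply is the safer way to use it.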

Restart the kube-proxy pod on every node:

kubectl get pod -n kube-system | grep kube-proxy | awk '{system("kubectl delete pod "$1" -n kube-system")}'


Reinstalling k8s and rejoining nodes after the VM IP address changes

Uninstalling k8s

Run the following script on all nodes.

kubeadm reset -f

rm -rf ~/.kube/

rm -rf /etc/kubernetes/

rm -rf /var/lib/etcd

rm -rf /var/etcd

rm -rf /var/lib/cni/

rm -rf /var/lib/kubelet/*

rm -rf /etc/cni/

Reinstalling k8s

# Update the IP address in kubeadm.yaml and /etc/hosts

hostIp=$(ifconfig ens33 | grep "inet " | awk '{print $2}')

sed -i "s/advertiseAddress:.*/advertiseAddress: ${hostIp}/" kubeadm.yaml
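If the interface is not named ens33, hostIp ends up empty and the sed command above writes a blank advertiseAddress. A guard such as the following sketch avoids that. The function name set_advertise_address is hypothetical, the address check is only a loose format test (it does not reject octets above 255), and GNU sed is assumed.

```shell
# Update advertiseAddress in a kubeadm config file, refusing bad input.
# set_advertise_address is a hypothetical helper name.
set_advertise_address() {
    file="$1"
    ip="$2"
    # Loose IPv4 format check: four dot-separated groups of 1 to 3 digits
    if ! printf '%s\n' "$ip" | grep -Eq '^([0-9]{1,3}\.){3}[0-9]{1,3}$'; then
        echo "invalid IPv4 address: '$ip'" >&2
        return 1
    fi
    # Only the text from "advertiseAddress:" onward is replaced,
    # so the YAML indentation in front of it is preserved
    sed -i "s/advertiseAddress:.*/advertiseAddress: ${ip}/" "$file"
}

# Usage:
# set_advertise_address kubeadm.yaml "$hostIp" || exit 1
```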

# Initialize the cluster

kubeadm init --config kubeadm.yaml

# Join the nodes

kubeadm join master_ip:6443 --token ...

# Configure kubectl access

mkdir -p $HOME/.kube

cp /etc/kubernetes/admin.conf $HOME/.kube/config

chown $(id -u):$(id -g) $HOME/.kube/config

# Install the container network

kubectl create -f kube-flannel.yml

References

Flannel installation error: network plugin is not ready: cni config uninitialized

failed to find plugin "flannel" in path [/opt/cni/bin]: fixing the k8s NotReady state