基于形状的三维医学图像插值(一)

目的

项目中需要改进脑部CT三维旋转的插值,目前使用的方法是线性插值,这样使得对于Z轴spacing大的数据来说,插值会造成颅骨区域比真实值过宽或者过窄(过宽造成的影响更大),如下图,导致mask经过旋转之后映射到颅骨上了,这里mask也是使用的线性插值,也存在一定的问题,根据手工检查的部分数据来看,造成下图现象的主要由于原图的插值

实验(目前只测试了在一个序列的相邻两层中间插值一层)

-

先求出待插值层的二值轮廓图

![image-20210612231223256]()

![image-20210612231445151]()

-

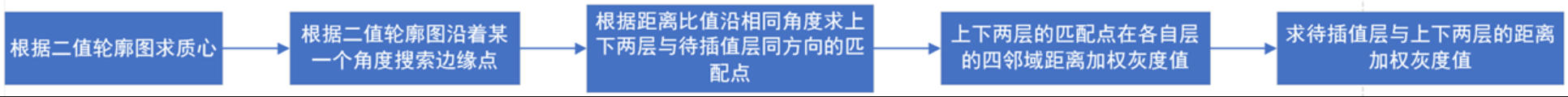

根据二值轮廓图求待插值点对应在上下两层的加权平均灰度值

![]()

可以看出插值的大致方向计算正确

以下是根据待插值层质心沿当前方向计算当前方向待插值点公式和示意图

其中n代表当前方向距离质心几个像素距离,这里需要注意的是在计算的时候坐标系的选取原点在左上角

代码

import math

import os

import time

import numpy as np

import SimpleITK as sitk

import scipy

import cv2

import matplotlib.pyplot as plt

from sklearn import linear_model

import skimage.transform as transform

from skimage.measure import label

def readMhd(mhdPath):

'''

根据文件路径读取mhd文件,并返回其内容Array、origin、spacing

'''

itkImage = sitk.ReadImage(mhdPath)

imageArray = sitk.GetArrayFromImage(itkImage) # [Z, H, W]

origin = itkImage.GetOrigin()

spacing = np.flipud(itkImage.GetSpacing()) # [X,Y,Z] -> [Z,Y,X]

# orientation = itkImage.GetMetaData()

# itkImage.SetMetaData()

return imageArray, origin, spacing

def saveMhd(imageArray, origin, spacing, savePath):

'''

根据图像Array、origin、spacing构造itk image对象,并将其保存到savePath

'''

itkImage = sitk.GetImageFromArray(imageArray, isVector=False)

itkImage.SetSpacing(spacing)

itkImage.SetOrigin(origin)

sitk.WriteImage(itkImage, savePath)

def OTSU_enhance(img_gray, th_begin, th_end, th_step=1):

'''

根据类间方差最大求最适合的阈值

在th_begin、th_end中寻找合适的阈值

'''

assert img_gray.ndim == 2, "must input a gary_img"

max_g = 0

suitable_th = 0

for threshold in range(th_begin, th_end, th_step): # 前闭后开区间

bin_img = img_gray > threshold

bin_img_inv = img_gray <= threshold

fore_pix = np.sum(bin_img)

back_pix = np.sum(bin_img_inv)

if 0 == fore_pix:

break

if 0 == back_pix:

continue

w0 = float(fore_pix) / img_gray.size

u0 = float(np.sum(img_gray * bin_img)) / fore_pix

w1 = float(back_pix) / img_gray.size

u1 = float(np.sum(img_gray * bin_img_inv)) / back_pix

# 类间方差公式

g = w0 * w1 * (u0 - u1) * (u0 - u1)

# print('threshold, g:', threshold, g)

if g > max_g:

max_g = g

suitable_th = threshold

return suitable_th

def largestConnectComponent(bw_img):

'''

求bw_img中的最大连通区域

:param bw_img: 二值图

:return: 最大连通区域

'''

labeled_img, num = label(bw_img, connectivity=2, background=0, return_num=True) # 取连通区域,connectivity=2表示8领域

max_label = 0

max_num = 0

for i in range(1, num + 1): # lable的编号从1开始,防止将背景设置为最大连通域

if np.sum(labeled_img == i) > max_num:

max_num = np.sum(labeled_img == i)

max_label = i

lcc = (labeled_img == max_label) # 只留下最大连通区域,其他区域置0

return lcc

def getSkeletonMap(CTImageArray):

'''

:param CTImageArray: itk读取的原图像

:return:返回原图像的所有层的二值轮廓图

'''

imageSkeletonMap = [] # 存放所有层轮廓图

for z in range(CTImageArray.shape[0]):

# 阈值分割得到二值图

binary_threshold = OTSU_enhance(CTImageArray[z], -600, 300, 1) # 使用类间方差来计算合适的阈值

_, binary = cv2.threshold(CTImageArray[z], binary_threshold, 255, cv2.THRESH_BINARY) # 二值化为0-255

# 取最大连通区域边界

imgMaxRegion = largestConnectComponent(binary)

imgMaxRegion = imgMaxRegion.astype(np.uint8) # 将bool值转为int8类型

# 填充孔洞,取二值图,openCV3和openCV2用法,3中有三个返回值

# _, contours, _ = cv2.findContours(imgMaxRegion, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_NONE)

contours, _ = cv2.findContours(imgMaxRegion, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_NONE)

newImage = np.zeros(CTImageArray[z].shape, np.uint8) # 创建黑色幕布

cv2.drawContours(newImage, contours, -1, (255, 255, 255), 3) # contours是轮廓,-1表示全画,然后轮廓线使用白色,厚度3

cv2.fillPoly(newImage, contours, (255, 255, 255)) # 填充外轮廓孔洞

imageSkeletonMap.append(newImage)

return imageSkeletonMap

def getDistanceMatrix(boundMap):

'''

:param boundMap: 所有层二值轮廓图

:return: 原图所有距离矩阵

这里没有根据距离转换公式,直接根据二值轮廓图判断在轮廓上、轮廓外、轮廓内

'''

distanceMaps = []

for z in range(len(boundMap)):

# 逐行扫描

curDistanceMap = np.zeros(boundMap[z].shape)

detectedEdge = cv2.Canny(boundMap[z], 255, 255) # 利用canny直接求二值轮廓图的边界

curDistanceMap[boundMap[z] == 0] = -1 # 原图像背景置为-1

curDistanceMap[boundMap[z] == 255] = 1 # 轮廓内部置为1

curDistanceMap[detectedEdge == 255] = 0 # 再把边界上置为0

distanceMaps.append(curDistanceMap)

return distanceMaps

def getInterSkeletonMap(distanceMaps, lambdaValue=0.5):

'''

:param distanceMap: 原序列所有层的距离矩阵

:param lambdaValue: 加权因子

:return: 插值后所有层的二值轮廓图(0-1)和边缘图

'''

newInterSkeletonMaps = []

newInterplotateEdges = []

for z in range(len(distanceMaps)):

preMap = distanceMaps[z].copy() # 前一层距离矩阵,类型为float64

preMap[preMap == 0] = 1 # 先把边界置为1

preMap[preMap == -1] = 0 # 再把背景置为0

preMap = preMap.astype(np.uint8)

preEdgeMap = cv2.Canny(preMap, 1, 1) # 低于0的不是边缘,大于1的会被认为是边缘,边缘值为255

newInterSkeletonMaps.append(preMap) # 每次将当前层先存到结果中

newInterplotateEdges.append(preEdgeMap)

if z == len(distanceMaps) - 1: # 最后一层以后不能插值了,现在测试的是在每两层中间插值一层

continue

# nextMap = distanceMaps[z + 1] # 下一层距离矩阵

curInterMap = lambdaValue * distanceMaps[z] + (1 - lambdaValue) * lambdaValue * distanceMaps[z + 1]

curInterMap[curInterMap >= 0] = 1

curInterMap[curInterMap < 0] = 0

curInterMap = curInterMap.astype(np.uint8)

newInterSkeletonMaps.append(curInterMap)

curInterEdge = cv2.Canny(curInterMap, 1, 1)

newInterplotateEdges.append(curInterEdge) # 插值的轮廓图,每一轮循环只需要将当前层和下一层插值的层的结果存入结果中

return newInterSkeletonMaps, newInterplotateEdges

def getCentriod(curSliceBoundMap):

'''

:param curSliceBoundMap:当前层的二值轮廓图

:return: 当前层的质心

'''

curSliceBoundMap = curSliceBoundMap.astype(np.uint8) # 将float64转为uint8才能调用findcontous

# _, contours, _ = cv2.findContours(curSliceBoundMap, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_NONE)

# print('contous_length: ', len(contours))

contours, _ = cv2.findContours(curSliceBoundMap, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_NONE)

if len(contours) == 1:

mu = cv2.moments(contours[0], False) # 取轮廓1

mc = [mu['m10'] / mu['m00'], mu['m01'] / mu['m00']]

else:

assert 1 == 2, '轮廓不只有一个,获取质心失败'

print('质心: ', mc) # 测试质心准确性

mc = np.array(mc,dtype = float) # 将mc转为nump数组,类型为float

return mc

def getLength(a, b, c, d):

'''

计算两点间的距离

'''

return np.sqrt(pow((a - c), 2) + pow((b - d), 2))

# return p.sqrt(np.sum(np.square(arr1-arr2))) # 计算两个np.array距离

def getEdgePoint(curBoundMap, angle, curCentriod):

'''

从待插值层的质心沿旋转角搜索边界点

'''

nextPointRow = curCentriod[0] + np.sin(angle)

nextPointCol = curCentriod[1] + np.cos(angle)

n = 1 # 每次间隔一个像素点

while curBoundMap[np.round(nextPointRow).astype(int)][np.round(nextPointCol).astype(int)] != 0:

nextPointRow = curCentriod[0] + n * np.sin(angle)

nextPointCol = curCentriod[1] + n * np.cos(angle)

n = n + 1

return [nextPointRow, nextPointCol] # 求边缘点是为了求当前半径的长度,边缘点坐标为浮点类型

def getDirectedPoints(curBoundMap, angle, curCentriod):

'''

求当前层从质心出发沿任一方向需要插值的点

'''

nextPointRow = curCentriod[0] + np.sin(angle)

nextPointCol = curCentriod[1] + np.cos(angle)

stepLength = [] # 每隔

directedPoints = []

n = 1

while curBoundMap[np.round(nextPointRow).astype(int)][np.round(nextPointCol).astype(int)] != 0: # 先强转为int

curLength = getLength(curCentriod[0], curCentriod[1], nextPointRow, nextPointCol)

# print('待插值层每取一个点和质心的距离: ', curLength) # 是以1.....n 待插值的点与质心的距离

stepLength.append(curLength)

directedPoints.append([nextPointRow,nextPointCol])

nextPointRow = curCentriod[0] + n * np.sin(angle)

nextPointCol = curCentriod[1] + n * np.cos(angle)

n = n + 1

# 循环结束时找到边界点

curEdgePoint = [nextPointRow, nextPointCol] # 当前边界点

curRadiusLength = getLength(curCentriod[0], curCentriod[1], nextPointRow, nextPointCol) #当前半径长度

stepLength = np.array(stepLength) # 先转nump数组

ratioArray = stepLength / curRadiusLength # 当前代插值层每一个点与半径的

return directedPoints, ratioArray, curEdgePoint

def getMatch(curBoundMap, angle, stepRatioArray, curCentriod):

'''

根据二值轮廓图 旋转角度 代插值层的比值数组从质心沿同方向求得相邻层的匹配点数组 当前层的边界点

'''

curEdgePoint = getEdgePoint(curBoundMap, angle, curCentriod)

curLineLength = getLength(curCentriod[0], curCentriod[1], curEdgePoint[0],curEdgePoint[1])

matchPointArray = []

for i in range(len(stepRatioArray)):

x = stepRatioArray[i] * curLineLength

curPointRow = curCentriod[0] + x * np.sin(angle)

curPointCol = curCentriod[1] + x * np.cos(angle)

matchPointArray.append([curPointRow,curPointCol])

return matchPointArray, curEdgePoint

def getMeanGreyValue(preCentriod, nextCentriod, preSliceImageArray, nextSliceImageArray, lambdaValue = 0.5):

'''

质心和边缘点不用找匹配点只需要根据上下两层的距离加权平均计算平均加权灰度值

'''

preGreyValue = preSliceImageArray[np.round(preCentriod[0]).astype(int)][np.round(preCentriod[1]).astype(int)]

nextGreyValue = nextSliceImageArray[np.round(nextCentriod[0]).astype(int)][np.round(nextCentriod[1]).astype(int)]

meanValue = lambdaValue * preGreyValue + (1 - lambdaValue) * nextGreyValue # 加权灰度值也会取整有误差?

return meanValue

def getNeighGreyValue(curMatchPoint, imageArray):

'''

求同方向上在上下两层对应点在当前层8邻域的距离加权平均灰度

'''

sumGreyValue = 0

sumLength = 0

distance = [[-1, 1], [-1, 0], [-1, 1], [0, 1], [1, 1], [1, 0], [-1, -1], [0, -1], [-1, 1]] # 8邻域

for i in range(0, 8): # 遍历当前点的8邻域

newX = curMatchPoint[0] + distance[i][0]

newY = curMatchPoint[1] + distance[i][1]

curLength = getLength(newX, newY, curMatchPoint[0], curMatchPoint[1])

sumLength += curLength

sumGreyValue += curLength * imageArray[np.round(newX).astype(int)][np.round(newY).astype(int)]

return sumGreyValue / sumLength # 四邻域距离加权平均

def getInterpolationMap(boundMap, CTImageArray, lambdaValue = 0.5):

'''

:param boundMap: 插值后的层二值轮廓图

:return: 在二值轮廓上插值

'''

newinterImageMaps = []

imageIndex = 0 # 原图的索引

for z in range(1, len(boundMap), 2):

preimageMap = CTImageArray[imageIndex]

nextimageMap = CTImageArray[imageIndex + 1]

imageIndex = imageIndex + 1

newInterImage = boundMap[z].copy() # 在待插值层的二值轮廓上插值

newInterImage[newInterImage == 0] = -1024 # CT中的背景灰度值是-1024

preBoundMap = boundMap[z - 1].copy()

if z == len(boundMap):

newinterImageMaps.append(CTImageArray[imageIndex])

continue

nextBoundMap = boundMap[z + 1].copy()

curCentriod = getCentriod(boundMap[z])

preCentriod = getCentriod(preBoundMap)

nextCentriod = getCentriod(nextBoundMap)

for a in np.arange(0,361,0.5): # 每次o.5°间隔搜索

a = math.radians(a) # 角度转为弧度

curDirectedPoins, curStepRatioArray, curEdgePoint = getDirectedPoints(boundMap[z], a,curCentriod)

preMatchPoints, preEdgePoint = getMatch(preBoundMap, a, curStepRatioArray,preCentriod)

nextMatchPoints, nextEdgePoint = getMatch(nextBoundMap, a, curStepRatioArray,nextCentriod)

newInterImage[np.round(curCentriod[0]).astype(int)][

np.round(curCentriod[1]).astype(int)] = getMeanGreyValue(preCentriod, nextCentriod, preimageMap,

nextimageMap)

newInterImage[np.round(curEdgePoint[0]).astype(int)][

np.round(curEdgePoint[1]).astype(int)] = getMeanGreyValue(preEdgePoint, nextEdgePoint,

preimageMap, nextimageMap)

for index in range(len(curDirectedPoins)): # 遍历当前方向待插值的每个点,计算对应在上下两层的灰度值

preGreyValue = getNeighGreyValue(preMatchPoints[index], preimageMap)

nextGreyValue = getNeighGreyValue(nextMatchPoints[index], nextimageMap)

# 计算上下两层的加权灰度值

meanValue = lambdaValue * preGreyValue + (1 - lambdaValue) * nextGreyValue

newInterImage[np.round(curDirectedPoins[index][0]).astype(int)][

np.round(curDirectedPoins[index][1]).astype(int)] = meanValue

# 当前代插值层灰度值赋值结束

# plt.figure()

# plt.subplot(131)

# plt.title('preImge')

# plt.imshow(preimageMap,'gray')

# plt.subplot(132)

# plt.title('curImage')

# plt.imshow(newinterImageMaps, 'gray')

# plt.subplot(133)

# plt.title('nextImage')

# plt.imshow(nextimageMap, 'gray')

# plt.show()

newinterImageMaps.append(preimageMap)

newinterImageMaps.append(newInterImage)

return newinterImageMaps

存在的问题

将待插值层局部放大,如下图可以看出许多点都没有找到相对应的点,一是由于待插值层是根据方向选取的点(度数的间隔比较大),二是根据方向搜索计算出来的点坐标是浮点数,经过取整造成的误差

解决方案

- 遍历待插值层轮廓内每一个坐标点,计算与质心点连接的方向,并求出当前坐标与质心距离与弦长长度比

- 根据上一步所求结果,求出当前坐标点对应在上一层和下一层映射的点坐标

- 根据映射点求出距离加权平均灰度值

具体的改进代码实现完成后再更新,这里先记录一下第一步的思路过程

参考文献

[1]卫旭芳, 田沄, 王毅,等. 一种基于形状的三维医学图像插值算法[J]. 计算机测量与控制, 2007, 15(009):1212-1213.

浙公网安备 33010602011771号

浙公网安备 33010602011771号