TensorFlow Name Scopes and the Variable-Management Parameter reuse

I. Using the reuse parameter for variable management in TensorFlow

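1. TensorFlow provides two main functions for variable management:

(1) tf.get_variable(): creates a variable or retrieves an existing one

(2) tf.variable_scope(): returns a context manager that creates a variable scope (namespace); scopes can be nested

2. tf.get_variable() can either create a variable or retrieve one. Which of the two it does is controlled by the reuse parameter of the enclosing tf.variable_scope(), that is, whether reuse is True or False. The cases are described below: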

(1) With reuse=False, get_variable() creates a variable

import tensorflow as tf

with tf.variable_scope("foo", reuse=False):

    v = tf.get_variable("v", [1], initializer=tf.constant_initializer(1.0))

# With reuse=False set on tf.variable_scope(), calling get_variable() inside the scope "foo" creates the variable "v"

(2) If the variable "v" already exists in that scope, creating it again raises an error, as in the following example

import tensorflow as tf

with tf.variable_scope("foo"):

    v = tf.get_variable("v", [1], initializer=tf.constant_initializer(1.0))

    v1 = tf.get_variable("v", [1])

---------------------------------------------------------------------------

ValueError Traceback (most recent call last)

<ipython-input-1-eaed46cad84f> in <module>()

3 with tf.variable_scope("foo"):

4 v=tf.get_variable("v",[1],initializer=tf.constant_initializer(1.0))

----> 5 v1=tf.get_variable("v",[1])

6

ValueError: Variable foo/v already exists, disallowed.

Did you mean to set reuse=True or reuse=tf.AUTO_REUSE in VarScope?

(3) With reuse=True, get_variable() retrieves an existing variable

import tensorflow as tf

with tf.variable_scope("foo"):

    v = tf.get_variable("v", [1], initializer=tf.constant_initializer(1.0))

with tf.variable_scope("foo", reuse=True):

    v1 = tf.get_variable("v", [1])

print(v1 == v)

Output:

True

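(4) With reuse=True set on tf.variable_scope(), calling get_variable() inside the scope "foo" retrieves the variable "v". If the variable does not yet exist in that scope, retrieving it raises an error, as in the following example

import tensorflow as tf

with tf.variable_scope("foo", reuse=True):

    v1 = tf.get_variable("v", [1])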

---------------------------------------------------------------------------

ValueError Traceback (most recent call last)

<ipython-input-1-019a05c4b9a4> in <module>()

2

3 with tf.variable_scope("foo",reuse=True):

----> 4 v1=tf.get_variable("v",[1])

5

ValueError: Variable foo/v does not exist, or was not created with tf.get_variable().

Did you mean to set reuse=tf.AUTO_REUSE in VarScope?

II. Name scopes and variable naming in TensorFlow

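1. tf.Variable: creates a variable; name conflicts are detected and resolved automatically

import tensorflow as tf

a1 = tf.Variable(tf.constant(1.0, shape=[1]), name="a")

a2 = tf.Variable(tf.constant(1.0, shape=[1]), name="a")

print(a1)  # the variable is created and named a

print(a2)  # the name conflict is detected and resolved automatically; the variable is named a_1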

print(a1==a2)

Output:

<tf.Variable 'a:0' shape=(1,) dtype=float32_ref>

<tf.Variable 'a_1:0' shape=(1,) dtype=float32_ref>

False

2. tf.get_variable: creates or retrieves a variable; when no reuse is set on the enclosing scope, a name conflict raises an error

import tensorflow as tf

a3 = tf.get_variable("a", shape=[1], initializer=tf.constant_initializer(1.0))

a4 = tf.get_variable("a", shape=[1], initializer=tf.constant_initializer(1.0))

Output:

ValueError: Variable a already exists, disallowed.

Did you mean to set reuse=True or reuse=tf.AUTO_REUSE in VarScope?

3. tf.name_scope has no reuse mechanism. Names given to tf.get_variable are not affected by it, and a name conflict still raises an error; names given to tf.Variable are affected by it

import tensorflow as tf

a = tf.Variable(tf.constant(1.0, shape=[1]), name="a")

with tf.name_scope('layer2'):

    a1 = tf.Variable(tf.constant(1.0, shape=[1]), name="a")

    a2 = tf.Variable(tf.constant(1.0, shape=[1]), name="a")

    a3 = tf.get_variable("b", shape=[1], initializer=tf.constant_initializer(1.0))

    # a4 = tf.get_variable("b", shape=[1], initializer=tf.constant_initializer(1.0))  # this line would raise an error

print(a)

print(a1)

print(a2)

print(a3)

print(a1==a2)

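Output (the a_2 and layer2_1 names show that the default graph already contained a variable named a and a scope named layer2 from earlier runs; in a fresh graph the names would be a:0, layer2/a:0 and layer2/a_1:0):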

<tf.Variable 'a_2:0' shape=(1,) dtype=float32_ref>

<tf.Variable 'layer2_1/a:0' shape=(1,) dtype=float32_ref>

<tf.Variable 'layer2_1/a_1:0' shape=(1,) dtype=float32_ref>

<tf.Variable 'b:0' shape=(1,) dtype=float32_ref>

False
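The naming behaviour in points 1 to 3 can be mimicked with a few lines of plain Python. This is only a rough sketch of the rules as described above, not TensorFlow's actual implementation: ToyGraph, variable_name and get_variable_name are hypothetical names made up for this illustration.

```python
# Simplified model of variable naming; this is NOT TensorFlow code.
class ToyGraph:
    """Hypothetical graph that hands out unique names: 'a', 'a_1', 'a_2', ..."""

    def __init__(self):
        self._used = set()

    def unique_name(self, name):
        candidate, suffix = name, 0
        while candidate in self._used:
            suffix += 1
            candidate = f"{name}_{suffix}"
        self._used.add(candidate)
        return candidate


def variable_name(graph, name_scope, name):
    """Model of tf.Variable naming: name-scope prefix plus automatic de-duplication."""
    prefix = f"{name_scope}/" if name_scope else ""
    return graph.unique_name(prefix + name)


def get_variable_name(store, name):
    """Model of tf.get_variable naming: name scope ignored, duplicates rejected."""
    if name in store:
        raise ValueError(f"Variable {name} already exists, disallowed.")
    store.add(name)
    return name


graph, store = ToyGraph(), set()
names = [
    variable_name(graph, "", "a"),        # like a  = tf.Variable(..., name="a")
    variable_name(graph, "layer2", "a"),  # like a1 inside tf.name_scope('layer2')
    variable_name(graph, "layer2", "a"),  # like a2 inside tf.name_scope('layer2')
    get_variable_name(store, "b"),        # like a3 = tf.get_variable("b", ...)
]
print(names)
```

Starting from an empty toy graph this prints ['a', 'layer2/a', 'layer2/a_1', 'b'], and a second get_variable_name(store, "b") raises ValueError, which is the same pattern as the commented-out a4 line.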

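4. tf.variable_scope can be combined with tf.get_variable to share variables. reuse defaults to None (which inherits the setting of the parent scope); the other options are False, True and tf.AUTO_REUSE:

- With reuse = None/False, tf.get_variable creates a new variable and raises an error if the variable already exists

- With reuse = True, tf.get_variable only retrieves an existing variable and raises an error if the variable does not exist

- With reuse = tf.AUTO_REUSE, tf.get_variable reuses the variable if it already exists and creates it otherwise (!!! this did not seem to work in my TensorFlow installation, which raised an error saying the module could not be found; possibly the installed version predates tf.AUTO_REUSE)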
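To make the three modes easier to compare, here is a small dictionary-based model of the rule in plain Python. It is a sketch under simplifying assumptions, not how TensorFlow is implemented: ToyVariableStore and the AUTO_REUSE constant are hypothetical stand-ins invented for this example, and the scope is passed as a plain string instead of a context manager.

```python
# Simplified model of the reuse rule; this is NOT TensorFlow code.
AUTO_REUSE = "auto_reuse"  # hypothetical stand-in for tf.AUTO_REUSE


class ToyVariableStore:
    """Hypothetical dictionary-backed store keyed by 'scope/name'."""

    def __init__(self):
        self._vars = {}

    def get_variable(self, scope, name, value=0.0, reuse=None):
        full_name = f"{scope}/{name}" if scope else name
        exists = full_name in self._vars
        if reuse is True:
            # Retrieve only: a missing variable is an error.
            if not exists:
                raise ValueError(f"Variable {full_name} does not exist")
            return self._vars[full_name]
        if reuse == AUTO_REUSE:
            # Retrieve when present, otherwise create.
            if not exists:
                self._vars[full_name] = [value]
            return self._vars[full_name]
        # reuse is None or False: create only; an existing variable is an error.
        if exists:
            raise ValueError(f"Variable {full_name} already exists, disallowed.")
        self._vars[full_name] = [value]
        return self._vars[full_name]


store = ToyVariableStore()
b_first = store.get_variable("layer1", "b", value=1.0)        # creates layer1/b
b_again = store.get_variable("layer1", "b", reuse=True)       # retrieves layer1/b
c_auto = store.get_variable("layer1", "c", reuse=AUTO_REUSE)  # creates layer1/c
c_same = store.get_variable("layer1", "c", reuse=AUTO_REUSE)  # retrieves layer1/c
print(b_first is b_again, c_auto is c_same)
```

This prints True True. Calling store.get_variable("layer1", "b") again with the default reuse raises ValueError, and so does reuse=True on a name that was never created, which matches the two error cases shown in section I.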

(1) Example with reuse=True:

import tensorflow as tf

with tf.variable_scope('layer1'):

    a3 = tf.get_variable("b", shape=[1], initializer=tf.constant_initializer(1.0))

with tf.variable_scope('layer1', reuse=True):

    a1 = tf.Variable(tf.constant(1.0, shape=[1]), name="a")

    a2 = tf.Variable(tf.constant(1.0, shape=[1]), name="a")

    a4 = tf.get_variable("b", shape=[1], initializer=tf.constant_initializer(1.0))

print(a1)

print(a2)

print(a1==a2)

print()

print(a3)

print(a4)

print(a3==a4)

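Output (re-entering tf.variable_scope('layer1') opens a new name scope, layer1_1, which prefixes the tf.Variable names, while tf.get_variable still resolves "b" in the variable scope layer1):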

<tf.Variable 'layer1_1/a:0' shape=(1,) dtype=float32_ref>

<tf.Variable 'layer1_1/a_1:0' shape=(1,) dtype=float32_ref>

False

<tf.Variable 'layer1/b:0' shape=(1,) dtype=float32_ref>

<tf.Variable 'layer1/b:0' shape=(1,) dtype=float32_ref>

True

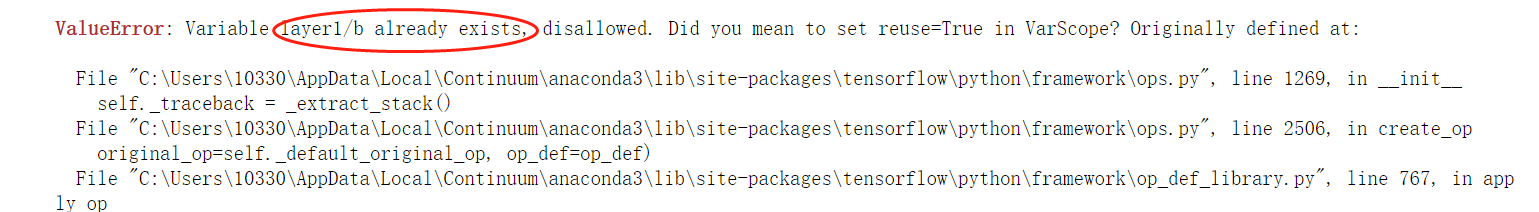

(2) Example with reuse=None/False:

import tensorflow as tf

with tf.variable_scope('layer1'):

    a3 = tf.get_variable("b", shape=[1], initializer=tf.constant_initializer(1.0))

with tf.variable_scope('layer1'):  # reuse defaults to None

    a1 = tf.Variable(tf.constant(1.0, shape=[1]), name="a")

    a2 = tf.Variable(tf.constant(1.0, shape=[1]), name="a")

    a4 = tf.get_variable("b", shape=[1], initializer=tf.constant_initializer(1.0))  # a4 tries to create a new variable b, but b already exists (created as a3), so this raises an error

print(a1)

print(a2)

print(a1==a2)

print()

print(a3)

print(a4)

print(a3==a4)

Reference blogs:

https://blog.csdn.net/johnboat/article/details/84846628

https://www.cnblogs.com/jfl-xx/p/9885662.html