from mxnet import gluon, init
from mxnet.gluon import nn, loss as gloss
from mxnet.gluon import data as gdata
from mxnet import autograd, nd

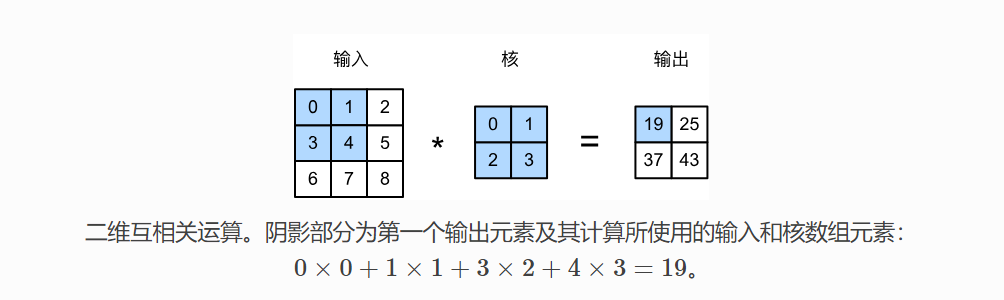

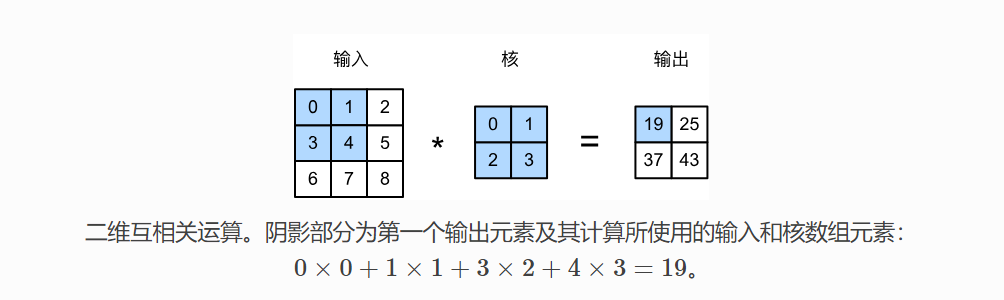

# 2D cross-correlation
def corr2d(X, K):
    h, w = K.shape
    # Output shape: (rows of X - h + 1, cols of X - w + 1)
    Y = nd.zeros((X.shape[0] - h + 1, X.shape[1] - w + 1))
    for i in range(Y.shape[0]):
        for j in range(Y.shape[1]):
            # Multiply the h x w window at (i, j) elementwise by K and sum
            Y[i, j] = (X[i: i + h, j: j + w] * K).sum()
    return Y

X = nd.array([[0, 1, 2], [3, 4, 5], [6, 7, 8]])
K = nd.array([[0, 1], [2, 3]])
print(corr2d(X, K))
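The result can be checked by hand. For an input of shape n_h x n_w and a kernel of shape k_h x k_w, the output has shape (n_h - k_h + 1) x (n_w - k_w + 1), here 2 x 2, and the top-left element is 0*0 + 1*1 + 3*2 + 4*3 = 19. The sketch below is a plain-Python reimplementation on nested lists rather than `nd` arrays, so it runs without MXNet; the name `corr2d_list` is our own, not part of any library.

```python
def corr2d_list(X, K):
    """2D cross-correlation on nested lists (no padding, stride 1)."""
    h, w = len(K), len(K[0])
    out_h, out_w = len(X) - h + 1, len(X[0]) - w + 1
    Y = [[0] * out_w for _ in range(out_h)]
    for i in range(out_h):
        for j in range(out_w):
            # Elementwise product of the window X[i:i+h][j:j+w] with K, then sum
            Y[i][j] = sum(X[i + a][j + b] * K[a][b]
                          for a in range(h) for b in range(w))
    return Y


X = [[0, 1, 2], [3, 4, 5], [6, 7, 8]]
K = [[0, 1], [2, 3]]
print(corr2d_list(X, K))  # [[19, 25], [37, 43]]
```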

# 2D convolutional layer: cross-correlate the input with a learnable kernel,
# then add a learnable scalar bias
class Conv2D(nn.Block):
    def __init__(self, kernel_size, **kwargs):
        super(Conv2D, self).__init__(**kwargs)
        self.weight = self.params.get('weight', shape=kernel_size)
        self.bias = self.params.get('bias', shape=(1,))

    def forward(self, x):
        return corr2d(x, self.weight.data()) + self.bias.data()
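The custom `Conv2D` block wraps only two parameters around `corr2d`: a kernel-shaped `weight` and a single scalar `bias` broadcast to every output position. A dependency-free sketch of the same forward pass follows; `TinyConv2D` is a hypothetical name, and its weight and bias are set by hand instead of being created and initialized by Gluon.

```python
class TinyConv2D:
    """Forward pass of a 2D convolutional layer: corr2d(x, weight) + bias."""

    def __init__(self, weight, bias=0.0):
        self.weight = weight  # kernel array as a list of rows
        self.bias = bias      # one scalar shared by all output positions

    def __call__(self, x):
        h, w = len(self.weight), len(self.weight[0])
        out_h, out_w = len(x) - h + 1, len(x[0]) - w + 1
        # Cross-correlate with the kernel, then add the same bias everywhere
        return [[sum(x[i + a][j + b] * self.weight[a][b]
                     for a in range(h) for b in range(w)) + self.bias
                 for j in range(out_w)]
                for i in range(out_h)]


layer = TinyConv2D(weight=[[0, 1], [2, 3]], bias=1.0)
print(layer([[0, 1, 2], [3, 4, 5], [6, 7, 8]]))  # [[20.0, 26.0], [38.0, 44.0]]
```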

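# Object edge detection in images
# A 6 x 8 image: columns 2-5 are black (0), the remaining columns are white (1)
X = nd.ones((6, 8))
X[:, 2:6] = 0
print(X)

# A 1 x 2 kernel: the output is nonzero only where horizontally adjacent
# elements differ, i.e. at the vertical edges of the black stripe
K = nd.array([[1, -1]])
Y = corr2d(X, K)
print(Y)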
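Why `[[1, -1]]` finds edges: each output element is `X[i][j] - X[i][j+1]`, so it is 0 inside uniform regions, 1 where white (1) turns to black (0), and -1 where black turns back to white. The plain-Python sketch below rebuilds the same 6 x 8 image and reports which output columns are nonzero; it reimplements `corr2d` on nested lists rather than calling MXNet.

```python
def corr2d_list(X, K):
    """2D cross-correlation on nested lists (no padding, stride 1)."""
    h, w = len(K), len(K[0])
    return [[sum(X[i + a][j + b] * K[a][b] for a in range(h) for b in range(w))
             for j in range(len(X[0]) - w + 1)]
            for i in range(len(X) - h + 1)]


# 6 x 8 image: columns 2..5 are 0 (black), the rest are 1 (white)
X = [[0 if 2 <= j < 6 else 1 for j in range(8)] for _ in range(6)]
K = [[1, -1]]
Y = corr2d_list(X, K)

print(Y[0])  # [0, 1, 0, 0, 0, -1, 0]
edge_cols = [j for j, v in enumerate(Y[0]) if v != 0]
print(edge_cols)  # [1, 5]
```

Every row of `Y` is identical, because the stripe runs the full height of the image.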

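# Learning the kernel array from data
# A 2D convolutional layer with 1 output channel and a 1 x 2 kernel
conv2d = nn.Conv2D(1, kernel_size=(1, 2))
conv2d.initialize()

# A 2D convolutional layer uses 4D input and output in the format
# (samples, channels, height, width); here both the number of samples
# and the number of channels are 1
X = X.reshape((1, 1, 6, 8))
Y = Y.reshape((1, 1, 6, 7))
print(X)
print(Y)

for i in range(20):
    with autograd.record():
        Y_hat = conv2d(X)
        l = (Y_hat - Y) ** 2
    l.backward()
    # Update the parameters: plain gradient descent on the weight with
    # learning rate 3e-2 (the bias is not updated here)
    conv2d.weight.data()[:] -= 3e-2 * conv2d.weight.grad()
    print('batch %d, loss %.3f' % (i + 1, l.sum().asscalar()))

print(conv2d.weight.data().reshape((1, 2)))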
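The loop above is ordinary gradient descent on the squared error summed over all 6 x 7 outputs. Each output is `k0 * X[i][j] + k1 * X[i][j+1]` plus the bias, so the gradient can be written out by hand. The sketch below does this in plain Python under two assumptions: the bias is left out (the loop above never updates it, and we assume it stays at zero), and the kernel starts from the arbitrary fixed value `[0.2, 0.3]` instead of Gluon's random initialization. It uses the same learning rate 3e-2 and 20 iterations, and the learned kernel should approach the `[1, -1]` that produced `Y`. `train_kernel` is a hypothetical helper name.

```python
def train_kernel(X, Y, k, lr=3e-2, num_iters=20):
    """Learn a 1x2 kernel [k0, k1] by gradient descent on the summed squared error."""
    k0, k1 = k
    losses = []
    for _ in range(num_iters):
        g0 = g1 = loss = 0.0
        for i in range(len(Y)):
            for j in range(len(Y[0])):
                err = k0 * X[i][j] + k1 * X[i][j + 1] - Y[i][j]
                loss += err ** 2
                # d(err^2)/dk0 = 2*err*X[i][j], d(err^2)/dk1 = 2*err*X[i][j+1]
                g0 += 2 * err * X[i][j]
                g1 += 2 * err * X[i][j + 1]
        losses.append(loss)  # loss before this iteration's update
        k0 -= lr * g0
        k1 -= lr * g1
    return [k0, k1], losses


X = [[0 if 2 <= j < 6 else 1 for j in range(8)] for _ in range(6)]
# Targets produced by the kernel [1, -1]: X[i][j] - X[i][j+1]
Y = [[X[i][j] - X[i][j + 1] for j in range(7)] for i in range(6)]

kernel, losses = train_kernel(X, Y, k=[0.2, 0.3])
print([round(v, 3) for v in kernel])
print(losses[0], losses[-1])
```

By our calculation for this particular input, the Hessian of the summed squared error with respect to the kernel is [[36, 24], [24, 36]], whose largest eigenvalue is 60 (direction (1, 1)). Gradient descent is therefore stable only for learning rates below 2/60 = 1/30, and 3e-2 sits just under that bound: along (1, 1) the error is multiplied by 1 - 60 * 0.03 = -0.8 each step, so it shrinks while flipping sign.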

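# Cross-correlation vs. convolution
# To compute a true convolution, flip the kernel array left-right and
# up-down, then cross-correlate it with the input array

# In deep learning the kernel arrays are learned from data, so whether a
# convolutional layer uses cross-correlation or convolution does not affect
# the model's output at prediction time (a layer using convolution would
# simply learn the flipped kernel)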
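The flip relationship can be checked directly. The plain-Python sketch below (all helper names are hypothetical) computes a "valid" 2D convolution from its definition, in which the kernel index runs opposite to the input index, and compares it with cross-correlating against the kernel flipped both up-down and left-right. The last line also illustrates why learning makes the choice irrelevant: convolving with the flipped kernel reproduces the cross-correlation output exactly.

```python
def corr2d_list(X, K):
    """Cross-correlation: Y[i][j] = sum over a, b of X[i+a][j+b] * K[a][b]."""
    h, w = len(K), len(K[0])
    return [[sum(X[i + a][j + b] * K[a][b] for a in range(h) for b in range(w))
             for j in range(len(X[0]) - w + 1)]
            for i in range(len(X) - h + 1)]


def conv2d_by_definition(X, K):
    """Valid convolution: kernel entry K[a][b] meets input offset (h-1-a, w-1-b)."""
    h, w = len(K), len(K[0])
    return [[sum(X[i + h - 1 - a][j + w - 1 - b] * K[a][b]
                 for a in range(h) for b in range(w))
             for j in range(len(X[0]) - w + 1)]
            for i in range(len(X) - h + 1)]


def flip2d(K):
    """Flip a kernel up-down (reverse the rows) and left-right (reverse each row)."""
    return [row[::-1] for row in K[::-1]]


X = [[0, 1, 2], [3, 4, 5], [6, 7, 8]]
K = [[0, 1], [2, 3]]

print(conv2d_by_definition(X, K))   # [[5, 11], [23, 29]]
print(corr2d_list(X, flip2d(K)))    # [[5, 11], [23, 29]]
print(conv2d_by_definition(X, flip2d(K)) == corr2d_list(X, K))  # True
```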

Zhejiang Public Security Filing No. 33010602011771
