一、 以编程方式执行Spark SQL查询

1. 编写Spark SQL程序实现RDD转换成DataFrame

前面我们学习了如何在Spark Shell中使用SQL完成查询,现在我们通过IDEA编写Spark SQL查询程序。

Spark官网提供了两种方法来实现从RDD转换得到DataFrame,第一种方法是利用反射机制,推导包含某种类型的RDD,通过反射将其转换为指定类型的DataFrame,适用于提前知道RDD的schema。第二种方法通过编程接口与RDD进行交互获取schema,并动态创建DataFrame,在运行时决定列及其类型。

首先在maven项目的pom.xml中添加Spark SQL的依赖。

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-sql_2.11</artifactId>

<version>2.1.3</version>

</dependency>

|

1.1. 通过反射推断Schema

Scala支持使用case class类型导入RDD转换为DataFrame,通过case class创建schema,case class的参数名称会被利用反射机制作为列名。这种RDD可以高效的转换为DataFrame并注册为表。

代码如下:

Java版本

package com.hzk.sparksql;

import org.apache.spark.api.java.JavaRDD;

import org.apache.spark.api.java.function.ForeachFunction;

import org.apache.spark.sql.Dataset;

import org.apache.spark.sql.Row;

import org.apache.spark.sql.SaveMode;

import org.apache.spark.sql.SparkSession;

import java.io.Serializable;

public class ReflectTransform {

public static void main(String[] args) {

SparkSession spark = SparkSession.builder().master("local[*]").appName("Spark").getOrCreate();

JavaRDD<String> lines=spark.read().textFile("D:\\Bigdata\\20.sparksql\\2、以编程方式执行sparksql\\person.txt").javaRDD();

JavaRDD<Person> rowRDD = lines.map(line -> {

String parts[] = line.split(" ");

return new Person(Integer.valueOf(parts[0]),parts[1],Integer.valueOf(parts[2]));

});

Dataset<Row> df = spark.createDataFrame(rowRDD, Person.class);

// df.select("id", "name", "age").

// coalesce(1).write().mode(SaveMode.Append).parquet("parquet.res");

df.foreach(new ForeachFunction<Row>() {

@Override

public void call(Row row) throws Exception {

System.out.println("id:"+row.get(0)+",name:"+row.get(1)+",age:"+row.get(2));

}

});

}

static class Person implements Serializable {

private int id;

private String name;

private int age;

public int getId() {

return id;

}

public void setId(int id) {

this.id = id;

}

public String getName() {

return name;

}

public void setName(String name) {

this.name = name;

}

public int getAge() {

return age;

}

public void setAge(int age) {

this.age = age;

}

public Person(int id, String name, int age) {

this.id = id;

this.name = name;

this.age = age;

}

}

}

|

Scala版本

import org.apache.spark.SparkContext

import org.apache.spark.rdd.RDD

import org.apache.spark.sql.{DataFrame, SparkSession}

/**

* RDD转化成DataFrame:利用反射机制

*/

//todo:定义一个样例类Person

case class Person(id:Int,name:String,age:Int)

object CaseClassSchema {

def main(args: Array[String]): Unit = {

//todo:1、构建sparkSession 指定appName和master的地址

val spark: SparkSession = SparkSession.builder()

.appName("CaseClassSchema")

.master("local[2]").getOrCreate()

//todo:2、从sparkSession获取sparkContext对象

val sc: SparkContext = spark.sparkContext

sc.setLogLevel("WARN")//设置日志输出级别

//todo:3、加载数据

val dataRDD: RDD[String] = sc.textFile("D:\\person.txt")

//todo:4、切分每一行记录

val lineArrayRDD: RDD[Array[String]] = dataRDD.map(_.split(" "))

//todo:5、将RDD与Person类关联

val personRDD: RDD[Person] = lineArrayRDD.map(x=>Person(x(0).toInt,x(1),x(2).toInt))

//todo:6、创建dataFrame,需要导入隐式转换

import spark.implicits._

val personDF: DataFrame = personRDD.toDF()

//todo-------------------DSL语法操作 start--------------

//1、显示DataFrame的数据,默认显示20行

personDF.show()

//2、显示DataFrame的schema信息

personDF.printSchema()

//3、显示DataFrame记录数

println(personDF.count())

//4、显示DataFrame的所有字段

personDF.columns.foreach(println)

//5、取出DataFrame的第一行记录

println(personDF.head())

//6、显示DataFrame中name字段的所有值

personDF.select("name").show()

//7、过滤出DataFrame中年龄大于30的记录

personDF.filter($"age" > 30).show()

//8、统计DataFrame中年龄大于30的人数

println(personDF.filter($"age">30).count())

//9、统计DataFrame中按照年龄进行分组,求每个组的人数

personDF.groupBy("age").count().show()

//todo-------------------DSL语法操作 end-------------

//todo--------------------SQL操作风格 start-----------

//todo:将DataFrame注册成表

personDF.createOrReplaceTempView("t_person")

//todo:传入sql语句,进行操作

spark.sql("select * from t_person").show()

spark.sql("select * from t_person where name='zhangsan'").show()

spark.sql("select * from t_person order by age desc").show()

//todo--------------------SQL操作风格 end-------------

sc.stop()

spark.stop()

}

}

|

1.2. 通过StructType直接指定Schema

当case class不能提前定义好时,可以通过以下三步创建DataFrame

(1)将RDD转为包含Row对象的RDD

(2)基于StructType类型创建schema,与第一步创建的RDD相匹配

(3)通过sparkSession的createDataFrame方法对第一步的RDD应用schema创建DataFrame

Java版本

package com.hzk.sparksql;

import org.apache.spark.SparkConf;

import org.apache.spark.api.java.JavaRDD;

import org.apache.spark.api.java.JavaSparkContext;

import org.apache.spark.api.java.function.FilterFunction;

import org.apache.spark.api.java.function.ForeachFunction;

import org.apache.spark.api.java.function.Function;

import org.apache.spark.sql.*;

import org.apache.spark.sql.types.DataTypes;

import org.apache.spark.sql.types.StructField;

import org.apache.spark.sql.types.StructType;

import java.io.Serializable;

import java.util.ArrayList;

public class DynamicTransform {

public static void main(String[] args) {

SparkSession spark = SparkSession.builder().master("local[*]").appName("Spark").getOrCreate();

JavaRDD<String> lines=spark.read().textFile("D:\\Bigdata\\20.sparksql\\2、以编程方式执行sparksql\\person.txt").javaRDD();

JavaRDD<Row> personMaps=lines.map(new Function<String, Row>() {

@Override

public Row call(String s) throws Exception {

String[] personString=s.split(" ");

return RowFactory.create(Integer.valueOf(personString[0]),personString[1],Integer.valueOf(personString[2]));

}

});

ArrayList<StructField> fields = new ArrayList<StructField>();

StructField field = null;

field = DataTypes.createStructField("id", DataTypes.IntegerType, true);

fields.add(field);

field = DataTypes.createStructField("name", DataTypes.StringType, true);

fields.add(field);

field = DataTypes.createStructField("age", DataTypes.IntegerType, true);

fields.add(field);

StructType schema = DataTypes.createStructType(fields);

Dataset<Row> df = spark.createDataFrame(personMaps, schema);

df.coalesce(1).write().mode(SaveMode.Append).parquet("parquet.res1");

df.foreach(new ForeachFunction<Row>() {

@Override

public void call(Row row) throws Exception {

System.out.println("id:"+row.get(0)+",name:"+row.get(1)+",age:"+row.get(2));

}

});

}

}

|

Scala版本

import org.apache.spark.SparkContext

import org.apache.spark.rdd.RDD

import org.apache.spark.sql.types.{IntegerType, StringType, StructField, StructType}

import org.apache.spark.sql.{DataFrame, Row, SparkSession}

/**

* RDD转换成DataFrame:通过指定schema构建DataFrame

*/

object SparkSqlSchema {

def main(args: Array[String]): Unit = {

//todo:1、创建SparkSession,指定appName和master

val spark: SparkSession = SparkSession.builder()

.appName("SparkSqlSchema")

.master("local[2]")

.getOrCreate()

//todo:2、获取sparkContext对象

val sc: SparkContext = spark.sparkContext

//todo:3、加载数据

val dataRDD: RDD[String] = sc.textFile("d:\\person.txt")

//todo:4、切分每一行

val dataArrayRDD: RDD[Array[String]] = dataRDD.map(_.split(" "))

//todo:5、加载数据到Row对象中

val personRDD: RDD[Row] = dataArrayRDD.map(x=>Row(x(0).toInt,x(1),x(2).toInt))

//todo:6、创建schema

val schema:StructType= StructType(Seq(

StructField("id", IntegerType, false),

StructField("name", StringType, false),

StructField("age", IntegerType, false)

))

//todo:7、利用personRDD与schema创建DataFrame

val personDF: DataFrame = spark.createDataFrame(personRDD,schema)

//todo:8、DSL操作显示DataFrame的数据结果

personDF.show()

//todo:9、将DataFrame注册成表

personDF.createOrReplaceTempView("t_person")

//todo:10、sql语句操作

spark.sql("select * from t_person").show()

spark.sql("select count(*) from t_person").show()

sc.stop()

spark.stop()

}

}

|

2. 编写Spark SQL程序操作HiveContext

HiveContext是对应spark-hive这个项目,与hive有部分耦合, 支持hql,是SqlContext的子类,在Spark2.0之后,HiveContext和SqlContext在SparkSession进行了统一,可以通过操作SparkSession来操作HiveContext和SqlContext。

2.1. 添加pom依赖

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-hive_2.11</artifactId>

<version>2.1.3</version>

</dependency>

|

2.2. 代码实现

package gec.sql

import org.apache.spark.sql.SparkSession

/**

* todo:Sparksql操作hive的sql

*/

object HiveSupport {

def main(args: Array[String]): Unit = {

//todo:1、创建sparkSession

val spark: SparkSession = SparkSession.builder()

.appName("HiveSupport")

.master("local[2]")

.config("spark.sql.warehouse.dir", "d:\\spark-warehouse")

.enableHiveSupport() //开启支持hive

.getOrCreate()

spark.sparkContext.setLogLevel("WARN") //设置日志输出级别

//todo:2、操作sql语句

spark.sql("CREATE TABLE IF NOT EXISTS person (id int, name string, age int) row format delimited fields terminated by ' '")

spark.sql("LOAD DATA LOCAL INPATH './data/student.txt' INTO TABLE person")

spark.sql("select * from person ").show()

spark.stop()

}

}

需要在当前项目下创建一个data目录,然后在data目录下创建一个student.txt数据文件。

|

3.编写Spark SQL程序操作Mysql

1. JDBC

Spark SQL可以通过JDBC从关系型数据库中读取数据的方式创建DataFrame,通过对DataFrame一系列的计算后,还可以将数据再写回关系型数据库中。

1.1. SparkSql从MySQL中加载数据

1.1.1 通过IDEA编写SparkSql代码

Java版本

public static void dataFromMysql() {

SparkSession spark = SparkSession.builder().master("local[*]").appName("Spark").getOrCreate();

Properties properties = new Properties();

properties.setProperty("user", "root");

properties.setProperty("password", "123456");

//todo:3、读取mysql中的数据

Dataset<Row> df = spark.read().jdbc("jdbc:mysql://localhost:3306/baidu", "student", properties);

df.show();

}

|

Scala版本

package gec.sql

import java.util.Properties

import org.apache.spark.sql.{DataFrame, SparkSession}

/**

* todo:Sparksql从mysql中加载数据

*/

object DataFromMysql {

def main(args: Array[String]): Unit = {

//todo:1、创建sparkSession对象

val spark: SparkSession = SparkSession.builder()

.appName("DataFromMysql")

.master("local[2]")

.getOrCreate()

//todo:2、创建Properties对象,设置连接mysql的用户名和密码

val properties: Properties =new Properties()

properties.setProperty("user","root")

properties.setProperty("password","123456")

//todo:3、读取mysql中的数据

val mysqlDF: DataFrame = spark.read.jdbc("jdbc:mysql://192.168.200.100:3306/spark","iplocation",properties)

//todo:4、显示mysql中表的数据

mysqlDF.show()

spark.stop()

}

}

|

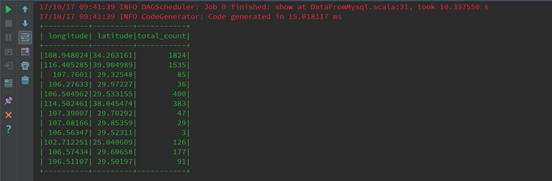

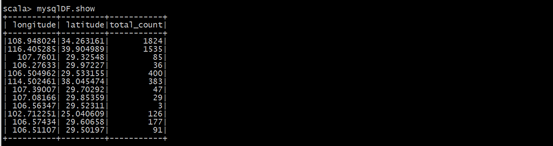

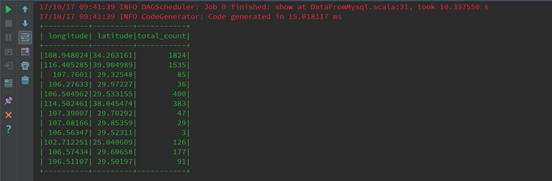

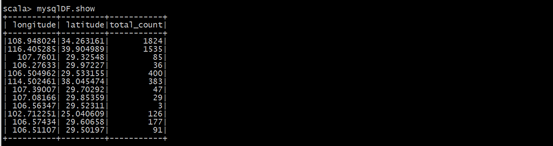

执行查看效果:

1.1.2 通过spark-shell运行

(1)、启动spark-shell(必须指定mysql的连接驱动包)

|

spark-shell \

--master spark://node1:7077 \

--executor-memory 1g \

--total-executor-cores 2 \

--jars /export/servers/hive/lib/mysql-connector-java-5.1.35.jar \

--driver-class-path /export/servers/hive/lib/mysql-connector-java-5.1.35.jar

|

(2)、从mysql中加载数据

|

val mysqlDF = spark.read.format("jdbc").options(Map("url" -> "jdbc:mysql://192.168.200.100:3306/spark", "driver" -> "com.mysql.jdbc.Driver", "dbtable" -> "iplocation", "user" -> "root", "password" -> "123456")).load()

|

(3)、执行查询

1.2. SparkSql将数据写入到MySQL中

1.2.1 通过IDEA编写SparkSql代码

(1)编写代码

Java版本

public static void sparkSqlToMysql() {

SparkSession spark = SparkSession.builder().master("local[*]").appName("Spark").getOrCreate();

Properties properties = new Properties();

properties.setProperty("user", "root");

properties.setProperty("password", "123456");

JavaRDD<String> lines = spark.read().textFile("D:\\Bigdata\\20.sparksql\\2、以编程方式执行sparksql\\person.txt").javaRDD();

JavaRDD<Person> personRDD = lines.map(new Function<String, Person>() {

@Override

public Person call(String s) throws Exception {

String[] strings = s.split(" ");

return new Person(Integer.valueOf(strings[0]), strings[1], Integer.valueOf(strings[2]));

}

});

Dataset<Row> df = spark.createDataFrame(personRDD, Person.class);

df.createOrReplaceTempView("person");

Dataset<Row> resultDF = spark.sql("select * from person order by age desc");

Properties properties1 = new Properties();

properties.setProperty("user", "root");

properties.setProperty("password", "123456");

resultDF.write().jdbc("jdbc:mysql://localhost:3306/baidu", "person", properties);

spark.stop();

}

public static class Person implements Serializable {

private int id;

private String name;

private int age;

public int getId() {

return id;

}

public void setId(int id) {

this.id = id;

}

public String getName() {

return name;

}

public void setName(String name) {

this.name = name;

}

public int getAge() {

return age;

}

public void setAge(int age) {

this.age = age;

}

public Person(int id, String name, int age) {

this.id = id;

this.name = name;

this.age = age;

}

}

|

Scala版本

package gec.sql

import java.util.Properties

import org.apache.spark.rdd.RDD

import org.apache.spark.sql.{DataFrame, Dataset, SaveMode, SparkSession}

/**

* todo:sparksql写入数据到mysql中

*/

object SparkSqlToMysql {

def main(args: Array[String]): Unit = {

//todo:1、创建sparkSession对象

val spark: SparkSession = SparkSession.builder()

.appName("SparkSqlToMysql")

.getOrCreate()

//todo:2、读取数据

val data: RDD[String] = spark.sparkContext.textFile(args(0))

//todo:3、切分每一行,

val arrRDD: RDD[Array[String]] = data.map(_.split(" "))

//todo:4、RDD关联Student

val studentRDD: RDD[Student] = arrRDD.map(x=>Student(x(0).toInt,x(1),x(2).toInt))

//todo:导入隐式转换

import spark.implicits._

//todo:5、将RDD转换成DataFrame

val studentDF: DataFrame = studentRDD.toDF()

//todo:6、将DataFrame注册成表

studentDF.createOrReplaceTempView("student")

//todo:7、操作student表 ,按照年龄进行降序排列

val resultDF: DataFrame = spark.sql("select * from student order by age desc")

//todo:8、把结果保存在mysql表中

//todo:创建Properties对象,配置连接mysql的用户名和密码

val prop =new Properties()

prop.setProperty("user","root")

prop.setProperty("password","123456")

resultDF.write.jdbc("jdbc:mysql://192.168.200.150:3306/spark","student",prop)

//todo:写入mysql时,可以配置插入mode,overwrite覆盖,append追加,ignore忽略,error默认表存在报错

//resultDF.write.mode(SaveMode.Overwrite).jdbc("jdbc:mysql://192.168.200.150:3306/spark","student",prop)

spark.stop()

}

}

//todo:创建样例类Student

case class Student(id:Int,name:String,age:Int)

|

(2)用maven将程序打包

通过IDEA工具打包即可

(3)将Jar包提交到spark集群

|

spark-submit \

--class gec.sql.SparkSqlToMysql \

--master spark://node1:7077 \

--executor-memory 1g \

--total-executor-cores 2 \

--jars /export/servers/hive/lib/mysql-connector-java-5.1.35.jar \

--driver-class-path /export/servers/hive/lib/mysql-connector-java-5.1.35.jar \

/root/original-spark-2.0.2.jar /person.txt

|

(4)查看mysql中表的数据

浙公网安备 33010602011771号

浙公网安备 33010602011771号