[PyTorch] Study Notes

1. Checking the PyTorch version

import torch

print(torch.__version__)

2. Counting model parameters

model = FPN()

num_params = sum(p.numel() for p in model.parameters())

print("num of params: {:.2f}k".format(num_params/1000.0))

# torch.numel() returns the total number of elements in the input tensor
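A self-contained variant of the count above, as a minimal sketch. FPN is not defined in these notes, so a small hypothetical nn.Sequential model stands in for it; the trainable-only count is an extra that often comes in handy.

```python
import torch.nn as nn

# Hypothetical stand-in model; the notes use FPN(), which is not defined here.
model = nn.Sequential(
    nn.Linear(10, 5),  # 10*5 weights + 5 biases = 55 parameters
    nn.ReLU(),         # no parameters
    nn.Linear(5, 2),   # 5*2 weights + 2 biases = 12 parameters
)

# Total number of parameters.
num_params = sum(p.numel() for p in model.parameters())

# Only the parameters that will receive gradients.
num_trainable = sum(p.numel() for p in model.parameters() if p.requires_grad)

print("num of params: {:.2f}k".format(num_params / 1000.0))
print("trainable params: {}".format(num_trainable))
```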

3. Printing the model

model = FPN()

num_params = sum(p.numel() for p in model.parameters())

print("num of params: {:.2f}k".format(num_params/1000.0))

print("===========================")

# for name, p in model.named_parameters():

#     print(name, p.shape)

print(model)

4. with torch.no_grad()

with torch.no_grad():

    # code placed here runs without gradient tracking
    pass
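A minimal sketch of what the context manager changes: the same operation is tracked by autograd outside the block and not tracked inside it. The tensor names are only for illustration.

```python
import torch

x = torch.ones(3, requires_grad=True)

y = x * 2  # computed normally, so autograd records the operation

with torch.no_grad():
    z = x * 2  # computed inside no_grad, so nothing is recorded

print(y.requires_grad)  # True
print(z.requires_grad)  # False
```

This is typically used for evaluation or inference, where no backward pass is needed and skipping the graph saves memory.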

5. ReLU activation function

torch.nn.ReLU(inplace=False)

- Official docs: https://pytorch.org/docs/stable/nn.html#relu

- What inplace does: https://www.cnblogs.com/wanghui-garcia/p/10642665.html

- Memory-saving tip: "What are some tricks for saving GPU memory in PyTorch?", answer by 郑哲东 (Zheng Zhedong) on Zhihu, https://www.zhihu.com/question/274635237/answer/573633662 👉 set inplace to True; note that the default is False

- Full name: Rectified Linear Unit; it adds non-linearity to the network

- AlexNet (2012) used ReLU as its activation function: https://zhuanlan.zhihu.com/p/39068853
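To make the inplace flag concrete, here is a small sketch comparing the two settings on the same input. With inplace=False the input is left untouched; with inplace=True the input tensor itself is overwritten, which is where the memory saving comes from.

```python
import torch
import torch.nn as nn

x = torch.tensor([-1.0, 0.0, 2.0])

# Default: a new tensor is returned and x keeps its negative value.
out = nn.ReLU(inplace=False)(x)
x_before = x.clone()

# In-place: x itself is modified, and the result shares its storage.
out_inplace = nn.ReLU(inplace=True)(x)

print(x_before)  # still contains -1.0
print(x)         # negative entry replaced by 0
```

Because the original values are gone after the in-place call, it should only be used when nothing else still needs the pre-activation tensor.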

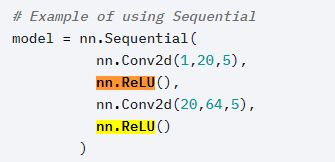

6. torch.nn.Sequential
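The notes leave this section empty. As a minimal sketch, nn.Sequential chains modules so that the output of each one feeds the next; the layer sizes below are arbitrary and chosen only for illustration.

```python
import torch
import torch.nn as nn

net = nn.Sequential(
    nn.Conv2d(3, 8, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.Conv2d(8, 16, kernel_size=3, padding=1),
)

x = torch.randn(1, 3, 32, 32)
y = net(x)  # runs the three modules in order

print(y.shape)  # torch.Size([1, 16, 32, 32])
print(net[0])   # sub-modules can be indexed like a list
```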

7. Saving and loading models

https://zhuanlan.zhihu.com/p/76604532

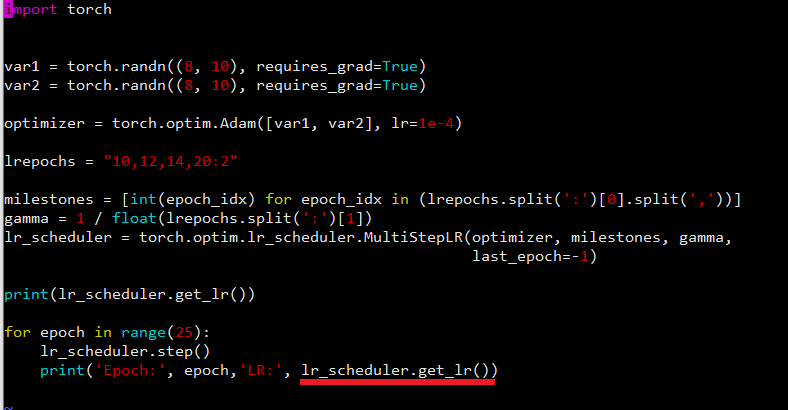

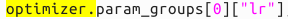
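The linked article and screenshots are not reproduced here. As a sketch of the commonly used state_dict approach (not necessarily the exact method shown in the article), the weights are saved to a file and loaded into a freshly constructed model of the same architecture. The model and the file name are hypothetical.

```python
import os
import tempfile

import torch
import torch.nn as nn

model = nn.Linear(4, 2)  # hypothetical model
path = os.path.join(tempfile.mkdtemp(), "model.pth")  # hypothetical file name

# Save only the parameters (the state_dict), not the whole Python object.
torch.save(model.state_dict(), path)

# Load: build the same architecture first, then restore the weights.
restored = nn.Linear(4, 2)
restored.load_state_dict(torch.load(path))
restored.eval()  # switch to evaluation mode before inference
```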

8. How to adjust the learning rate

The current learning rate can also be printed from the optimizer itself.
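A minimal sketch of both ideas: reading the learning rate from the optimizer's param_groups, and adjusting it with a scheduler or by hand. The model, the dummy loss, and the StepLR settings are assumptions made for illustration.

```python
import torch
import torch.nn as nn

model = nn.Linear(4, 2)
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

# The current learning rate lives in the optimizer's param_groups.
initial_lr = optimizer.param_groups[0]["lr"]
print(initial_lr)

# Halve the learning rate every 2 epochs (values chosen only for illustration).
scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=2, gamma=0.5)

lrs = []
for epoch in range(4):
    optimizer.zero_grad()
    loss = model(torch.randn(8, 4)).sum()  # dummy loss
    loss.backward()
    optimizer.step()
    scheduler.step()
    lrs.append(optimizer.param_groups[0]["lr"])

print(lrs)

# The learning rate can also be overwritten by hand.
for group in optimizer.param_groups:
    group["lr"] = 0.001
```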

9. nn.ModuleList
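The notes leave this section empty. As a sketch, nn.ModuleList holds sub-modules like a Python list but also registers them, so their parameters appear in model.parameters(). The MLP class and its sizes are hypothetical.

```python
import torch
import torch.nn as nn


class MLP(nn.Module):  # hypothetical example module
    def __init__(self, num_layers=3, dim=8):
        super().__init__()
        # Layers stored in a ModuleList are registered with the parent module.
        self.layers = nn.ModuleList(
            [nn.Linear(dim, dim) for _ in range(num_layers)]
        )

    def forward(self, x):
        # Unlike nn.Sequential, the forward logic is written by hand.
        for layer in self.layers:
            x = torch.relu(layer(x))
        return x


model = MLP()
y = model(torch.randn(2, 8))
num_tensors = len(list(model.parameters()))  # 3 weights + 3 biases
print(y.shape, num_tensors)
```

With a plain Python list instead of nn.ModuleList, the layers would not be registered and the optimizer would not see their parameters.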

10. Tensor.detach()

Returns a new Tensor, detached from the current graph.

The result will never require gradient.
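A short sketch of the behaviour described above; the variable names are only for illustration. The detached tensor shares storage with the original but is cut off from the autograd graph.

```python
import torch

x = torch.ones(3, requires_grad=True)
y = x * 2         # part of the graph
d = y.detach()    # same data, no graph

print(y.requires_grad)  # True
print(d.requires_grad)  # False

# No copy is made: both tensors point at the same memory.
same_storage = d.data_ptr() == y.data_ptr()
print(same_storage)
```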

11. 2D convolution: torch.nn.Conv2d

torch.nn.Conv2d(in_channels, out_channels, kernel_size, stride=1, padding=0,

dilation=1, groups=1, bias=True, padding_mode='zeros')
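As a small sketch of how these arguments determine the output size, the example below applies one convolution to a random input. The channel counts and sizes are arbitrary.

```python
import torch
import torch.nn as nn

conv = nn.Conv2d(in_channels=3, out_channels=16, kernel_size=3,
                 stride=2, padding=1)

x = torch.randn(1, 3, 32, 32)  # (N, C_in, H, W)
y = conv(x)

# H_out = floor((H + 2*padding - dilation*(kernel_size - 1) - 1) / stride + 1)
#       = floor((32 + 2 - 2 - 1) / 2 + 1) = 16
print(y.shape)            # torch.Size([1, 16, 16, 16])
print(conv.weight.shape)  # (out_channels, in_channels, kH, kW)
```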

12. torch.nn.Unfold()
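The notes leave this section empty. As a sketch, nn.Unfold extracts sliding local blocks from a batched input and flattens each block into a column; the 4x4 input below is chosen so the patches are easy to read.

```python
import torch
import torch.nn as nn

unfold = nn.Unfold(kernel_size=2, stride=2)

# One image, one channel, values 0..15 laid out row by row.
x = torch.arange(16.0).reshape(1, 1, 4, 4)

# Output shape is (N, C * kH * kW, L), where L is the number of patches.
patches = unfold(x)
print(patches.shape)     # torch.Size([1, 4, 4])
print(patches[0, :, 0])  # top-left 2x2 patch, flattened
```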

13. Printing the network architecture

14. Freezing part of a model's parameters
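The notes give no code for this section. One common way, sketched here under the assumption that the first layer should be frozen, is to set requires_grad to False on the chosen parameters and hand only the remaining ones to the optimizer. The model is hypothetical.

```python
import torch
import torch.nn as nn

model = nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Linear(8, 2))

# Freeze the first layer: no gradients will be computed for it.
for p in model[0].parameters():
    p.requires_grad = False

# Pass only the trainable parameters to the optimizer.
trainable = [p for p in model.parameters() if p.requires_grad]
optimizer = torch.optim.SGD(trainable, lr=0.1)

loss = model(torch.randn(3, 4)).sum()  # dummy loss
loss.backward()
optimizer.step()

print(model[0].weight.grad)              # None, the layer is frozen
print(model[2].weight.grad is not None)  # True
```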

15. torch.unbind

Removes a tensor dimension

Returns a tuple
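A small sketch of the two lines above: unbinding a 2x3 tensor along each dimension gives a tuple of slices with that dimension removed.

```python
import torch

x = torch.tensor([[1, 2, 3],
                  [4, 5, 6]])

rows = torch.unbind(x, dim=0)  # tuple of 2 tensors, each of shape (3,)
cols = torch.unbind(x, dim=1)  # tuple of 3 tensors, each of shape (2,)

print(type(rows), len(rows))
print(rows[1])  # second row
print(cols[0])  # first column
```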

16. torch.clamp

- torch.clamp(input, min, max)

- torch.clamp(input, min=MIN)

- torch.clamp(input, max=MAX)

- clamp_(): in-place version

- https://blog.finxter.com/understanding-the-pytorch-clamp-method-a-guided-exploration/
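The call forms listed above can be sketched on one small tensor; the bounds are arbitrary.

```python
import torch

x = torch.tensor([-2.0, -0.5, 0.5, 2.0])

both = torch.clamp(x, min=-1.0, max=1.0)  # limit from both sides
lower = torch.clamp(x, min=0.0)           # only a lower bound
upper = torch.clamp(x, max=0.0)           # only an upper bound

print(both, lower, upper)

# The trailing underscore modifies x itself instead of returning a copy.
x.clamp_(-1.0, 1.0)
print(x)
```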

A note on choosing batch_size and num_epochs

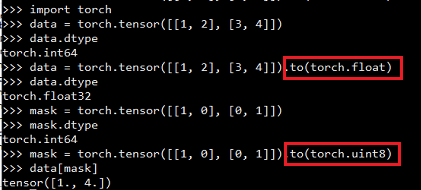

17. Data type conversion in PyTorch

For details on PyTorch data types, see 👉 torch-tensor

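Older PyTorch versions had no Boolean dtype, so masks were torch.uint8 tensors; recent versions provide torch.bool, and comparison operators return it. This matters when masking.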
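A minimal sketch of common conversions and of a mask, assuming a recent PyTorch release in which comparisons return torch.bool. The values are arbitrary.

```python
import torch

x = torch.tensor([1.7, -0.2, 3.0])  # float32 by default

xi = x.to(torch.int64)  # float -> int truncates toward zero
xd = x.double()         # shorthand for x.to(torch.float64)

# Comparison gives a mask that can index the tensor directly.
mask = x > 0
selected = x[mask]

# Explicit conversion of the mask to uint8 if other code expects it.
mask_u8 = mask.to(torch.uint8)

print(xi, xd.dtype, mask.dtype, selected, mask_u8)
```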

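18. Preventing NaN loss

- Robust square root: use sqrt(s^2 + 0.001^2) instead of sqrt(s^2), so that backpropagation still works as s --> 0

- Division: use 1/(x + 1e-8), i.e. add a very small value to the denominator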
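Both tricks can be sketched with scalar tensors. At s = 0 the gradient of sqrt(s^2) is computed as infinity times zero, which is NaN, while the robust form stays finite. The constants are the ones from the notes.

```python
import torch

# Plain form: the gradient at s = 0 is NaN.
s = torch.tensor(0.0, requires_grad=True)
torch.sqrt(s ** 2).backward()
print(s.grad)

# Robust form: sqrt(s^2 + 0.001^2) has a finite gradient at s = 0.
s_robust = torch.tensor(0.0, requires_grad=True)
torch.sqrt(s_robust ** 2 + 0.001 ** 2).backward()
print(s_robust.grad)

# Division: a small constant keeps the result finite when x is 0.
x = torch.tensor(0.0)
safe = 1.0 / (x + 1e-8)
print(safe)
```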

19. torch.from_numpy

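Remember to call copy() on the NumPy array first; otherwise torch.from_numpy may raise an error (for example, when the array has negative strides, as after a[::-1]).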
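One situation where the copy is needed, sketched below, is an array with negative strides such as a reversed view. Whether this is the exact error the note refers to is an assumption.

```python
import numpy as np
import torch

a = np.arange(5)
rev = a[::-1]  # a view with a negative stride, no data is copied

failed = False
try:
    torch.from_numpy(rev)
except ValueError as err:
    # Tensors with negative strides are not supported.
    failed = True
    print("from_numpy failed:", err)

# copy() produces a contiguous array that converts without problems.
t = torch.from_numpy(rev.copy())
print(t)
```

Note that torch.from_numpy shares memory with the array it is given, so after the copy the tensor is independent of the original array a.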

20. Saving GPU memory

- Replace BN + ReLU with Inplace-ABN

- Open question: how are BN and GN related, and how do they differ?
