MapReduce Example: WordCount

Required pom dependencies:

    <dependencies>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-client</artifactId>
            <version>2.7.3</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-common</artifactId>
            <version>2.7.3</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-hdfs</artifactId>
            <version>2.7.3</version>
        </dependency>
    </dependencies>


Mapper implementation:

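    import java.io.IOException;

    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Mapper;

    // Input: (byte offset of the line, line text); output: (word, 1)
    public class WordCountMapper extends Mapper<LongWritable, Text, Text, LongWritable> {

        // Reused across calls to avoid allocating new Writable objects per record
        private Text k = new Text();
        private LongWritable v = new LongWritable();

        @Override
        protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
            String line = value.toString();
            // Tokenize on runs of whitespace so repeated spaces or tabs do not produce empty words
            String[] words = line.trim().split("\\s+");
            // Emit (word, 1) for each token
            for (String word : words) {
                if (word.isEmpty()) {
                    continue; // a blank line yields a single empty token
                }
                k.set(word);
                v.set(1L);
                context.write(k, v);
            }
        }
    }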
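The tokenization step is sensitive to the delimiter. As a minimal sketch in plain Java (no Hadoop required), the following compares splitting on a single space with splitting on runs of whitespace. The class name `TokenizeDemo`, its helper methods, and the sample line are made up for illustration and are not part of the original job.

```java
import java.util.ArrayList;
import java.util.List;

public class TokenizeDemo {

    // Split on a single space: consecutive spaces produce empty tokens.
    static List<String> splitOnSpace(String line) {
        List<String> out = new ArrayList<>();
        for (String w : line.split(" ")) {
            out.add(w);
        }
        return out;
    }

    // Split on runs of whitespace and drop any empty token.
    static List<String> splitOnWhitespace(String line) {
        List<String> out = new ArrayList<>();
        for (String w : line.trim().split("\\s+")) {
            if (!w.isEmpty()) {
                out.add(w);
            }
        }
        return out;
    }

    public static void main(String[] args) {
        String line = "hello  world hadoop"; // two spaces after "hello"
        System.out.println(splitOnSpace(line));      // [hello, , world, hadoop]
        System.out.println(splitOnWhitespace(line)); // [hello, world, hadoop]
    }
}
```

With the single-space split, the empty token would be emitted as a word of its own and show up as a blank key in the final counts.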


Reducer implementation:

    import java.io.IOException;

    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Reducer;

    // Input: (word, list of 1s); output: (word, total count)
    public class WordCountReducer extends Reducer<Text, LongWritable, Text, LongWritable> {

        // Reused output value
        private LongWritable value = new LongWritable();

        @Override
        protected void reduce(Text key, Iterable<LongWritable> values, Context context) throws IOException, InterruptedException {
            long sum = 0;
            // Count: add up all values received for this word
            for (LongWritable v : values) {
                sum += v.get();
            }
            // Emit (word, count)
            value.set(sum);
            context.write(key, value);
        }
    }


Driver implementation:

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    public class Driver {

        public static void main(String[] args) throws Exception {
            // For local testing: fall back to hard-coded paths when none are passed on the command line
            if (args.length < 2) {
                args = new String[]{"D:/EclipseWorkspace/mapreducetop10/hello.txt",
                        "D:/EclipseWorkspace/mapreducetop10/output"};
            }

            Configuration conf = new Configuration();
            Job job = Job.getInstance(conf);
            // Set the program's entry point (used to locate the job jar)
            job.setJarByClass(Driver.class);

            // Set the mapper and its output key/value types
            job.setMapperClass(WordCountMapper.class);
            job.setMapOutputKeyClass(Text.class);
            job.setMapOutputValueClass(LongWritable.class);

            // Set the reducer and the final output key/value types
            job.setReducerClass(WordCountReducer.class);
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(LongWritable.class);

            // Set the job's input and output paths (the output directory must not already exist)
            FileInputFormat.setInputPaths(job, new Path(args[0]));
            FileOutputFormat.setOutputPath(job, new Path(args[1]));

            // Submit the job, wait for it to finish, and report success or failure via the exit code
            System.exit(job.waitForCompletion(true) ? 0 : 1);
        }
    }


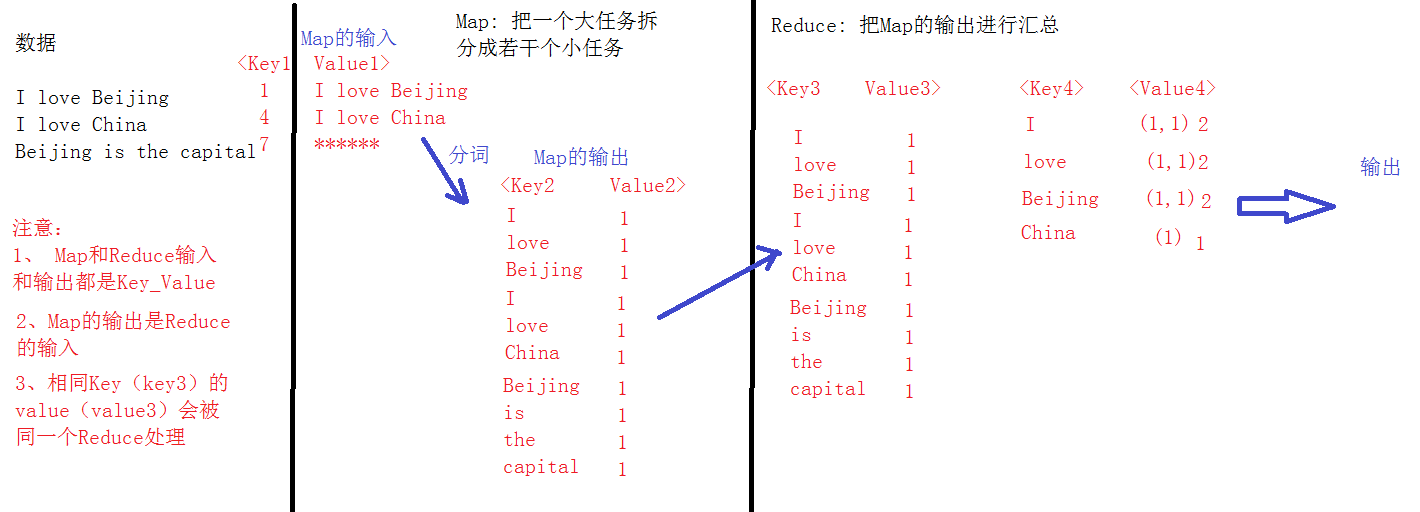

WordCount执行过程:(图示)
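The same execution process can be approximated in plain Java, without a cluster, as three steps: map each line to (word, 1) pairs, group the pairs by word (the shuffle), and sum each group (the reduce). This is only a rough single-process sketch of the data flow, not how Hadoop schedules or distributes the work. The class `WordCountFlowDemo`, its method names, and the two sample lines are assumptions made for illustration.

```java
import java.util.AbstractMap.SimpleEntry;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class WordCountFlowDemo {

    // Map phase: emit a (word, 1) pair for every token in every line.
    static List<Map.Entry<String, Long>> mapPhase(List<String> lines) {
        List<Map.Entry<String, Long>> pairs = new ArrayList<>();
        for (String line : lines) {
            for (String word : line.trim().split("\\s+")) {
                if (!word.isEmpty()) {
                    pairs.add(new SimpleEntry<>(word, 1L));
                }
            }
        }
        return pairs;
    }

    // Shuffle phase: group values by key. A TreeMap keeps the keys sorted,
    // loosely imitating the sorted order in which keys reach a reducer.
    static Map<String, List<Long>> shufflePhase(List<Map.Entry<String, Long>> pairs) {
        Map<String, List<Long>> grouped = new TreeMap<>();
        for (Map.Entry<String, Long> p : pairs) {
            grouped.computeIfAbsent(p.getKey(), k -> new ArrayList<>()).add(p.getValue());
        }
        return grouped;
    }

    // Reduce phase: sum the grouped values for each key.
    static Map<String, Long> reducePhase(Map<String, List<Long>> grouped) {
        Map<String, Long> counts = new TreeMap<>();
        for (Map.Entry<String, List<Long>> e : grouped.entrySet()) {
            long sum = 0;
            for (long v : e.getValue()) {
                sum += v;
            }
            counts.put(e.getKey(), sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        List<String> lines = new ArrayList<>();
        lines.add("hello world");
        lines.add("hello hadoop");
        Map<String, Long> counts = reducePhase(shufflePhase(mapPhase(lines)));
        System.out.println(counts); // {hadoop=1, hello=2, world=1}
    }
}
```

In the real job, the map and reduce steps correspond to `WordCountMapper` and `WordCountReducer`, while the grouping in between is performed by the framework rather than by user code.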

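Copyright of this article is shared by the author and cnblogs (博客园). Reposting is welcome, provided that a link to the original article is given and this notice is retained; otherwise the right to pursue legal liability is reserved.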